Advanced ML: Learn How to Improve Accuracy by Optimizing Hyperparameters

By Tanay Agrawal and Anubhav Kesari

Twitter: @agrawal_tanay

LinkedIn: @kesarianubhav

Let's Get Started

Hyperparameters are settings that can be tuned to control the behavior of a machine/deep learning algorithm. Unlike model weights, they are chosen before training rather than learned from the data.

In a clustering algorithm,

the radius of a cluster is one hyperparameter.

In neural networks,

the learning rate is one.
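To make this concrete, here is a minimal sketch assuming scikit-learn is installed. It uses DBSCAN, whose `eps` argument is the neighbourhood radius used to grow clusters, and `MLPClassifier`, whose `learning_rate_init` is the initial learning rate. These two estimators are our choice of illustration, not something the slides prescribe. In both cases the hyperparameter is passed to the constructor, before any data is seen.

```python
from sklearn.cluster import DBSCAN
from sklearn.neural_network import MLPClassifier

# Clustering: eps is the neighbourhood radius DBSCAN uses when forming clusters.
clusterer = DBSCAN(eps=0.5, min_samples=5)

# Neural network: learning_rate_init is the initial step size of the optimizer.
net = MLPClassifier(hidden_layer_sizes=(32,), learning_rate_init=0.001)

# Both are fixed up front; fit() will learn weights/clusters, not these values.
print(clusterer.get_params()["eps"])           # 0.5
print(net.get_params()["learning_rate_init"])  # 0.001
```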

The Optimization Part

How Do We Tune Them?

Exhaustive Approach!!

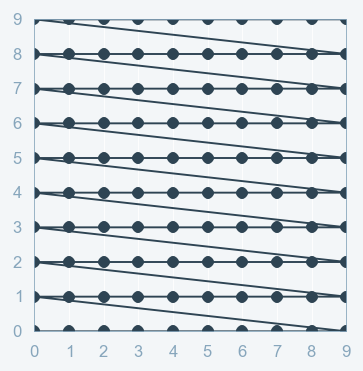

Grid Search

Prepare a grid of all possible combinations of values of your hyperparameters!!

Calculate the accuracy for every one of those combinations.

Choose the combination with the best accuracy.

Yes! You optimized it!!
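The three grid-search steps above can be sketched in plain Python. This is a minimal illustration, not a library API: `validation_accuracy` is a hypothetical toy stand-in for "train the model and measure its accuracy on validation data", and the hyperparameter names and values are made up for the example.

```python
import itertools

# Hypothetical stand-in for "train a model, return validation accuracy".
# This toy function simply peaks at learning_rate=0.01, depth=4.
def validation_accuracy(learning_rate, depth):
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(depth - 4) * 0.05

# Step 1: prepare a grid of candidate values for each hyperparameter.
grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "depth": [2, 4, 6, 8],
}

# Step 2: evaluate every combination (3 x 4 = 12 "trainings").
names = list(grid)
results = []
for values in itertools.product(*(grid[n] for n in names)):
    params = dict(zip(names, values))
    results.append((validation_accuracy(**params), params))

# Step 3: keep the combination with the best accuracy.
best_acc, best_params = max(results, key=lambda r: r[0])
print(len(results), best_params)  # 12 {'learning_rate': 0.01, 'depth': 4}
```

The number of trainings is the product of the grid sizes, so every extra hyperparameter multiplies the cost.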

Random Search

Prepare a grid of the possible combinations of values of your hyperparameters!!

But don't check every combination; evaluate only a random sample of them!!

Good!! Obviously faster than grid search on the same grid..

But whether you hit the best combination is up to luck!!!
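A minimal random-search sketch in the same spirit, assuming a fixed evaluation budget. As before, `validation_accuracy` is a hypothetical toy stand-in for a real training run, and the grid values are illustrative only.

```python
import itertools
import random

# Hypothetical stand-in for "train a model, return validation accuracy".
def validation_accuracy(learning_rate, depth):
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(depth - 4) * 0.05

grid = {
    "learning_rate": [0.001, 0.003, 0.01, 0.03, 0.1],
    "depth": [2, 3, 4, 5, 6, 7, 8],
}
all_combos = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]

# Only evaluate a random subset of the 35 combinations.
rng = random.Random(42)  # fixed seed so the run is reproducible
budget = 10
sampled = rng.sample(all_combos, budget)

best_params = max(sampled, key=lambda p: validation_accuracy(**p))
print(f"evaluated {budget} of {len(all_combos)} combinations; best: {best_params}")
```

Cost drops from 35 trainings to 10, but nothing guarantees the true optimum is among the sampled points: that is the "luck" part.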

Just Think !!

Say you want to do a regression analysis.

The scikit-learn library offers around 20 regression algorithms.

On average, each has 5 hyperparameters.

If you try 1000 hyperparameter sets for each algorithm, and each training takes half a minute,

it would take around 7 days (20 × 1000 × 0.5 min = 10,000 min) to find the best hyperparameters for your data.

Damn, it's slow!

Do the Math!
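The estimate from the previous slide, written out so the numbers can be changed. The figures (20 algorithms, 1000 sets each, half a minute per training) are the slide's own assumptions.

```python
n_algorithms = 20             # approximate number of regression estimators
configs_per_algorithm = 1000  # hyperparameter sets tried per algorithm
minutes_per_training = 0.5    # time to train and score one configuration

total_minutes = n_algorithms * configs_per_algorithm * minutes_per_training
total_days = total_minutes / (60 * 24)
print(f"{total_minutes:.0f} minutes = {total_days:.2f} days")  # 10000 minutes = 6.94 days
```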

And that was not even a neural network!

It's not always true that slower is better! If you know what we mean!

Our Saviour

Tree-Structured Parzen Estimator

Do you remember decision trees??

Good!!

We have something similar for you!!

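TPE works on a tree-structured search space

(some hyperparameters only exist when others take certain values)

and learns from its history of past trials:

it splits that history into good and bad trials and fits a Parzen (kernel density) estimator to each,

picks the candidate hyperparameter set

with the maximum Expected Improvement (the highest ratio of "good" density to "bad" density),

evaluates it and adds the result to its history,

then selects the next set of hyperparameters accordingly.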
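The loop above can be sketched in a few dozen lines of plain Python. This is a deliberately simplified, one-dimensional TPE written for illustration: it has no tree-structured (conditional) space, uses an ad hoc bandwidth rule, and ignores boundary truncation of the Gaussians, so it is not Hyperopt's implementation. `tpe_minimize` and `parzen_density` are hypothetical names.

```python
import math
import random

def parzen_density(x, centers, bandwidth):
    """Parzen estimator: an equal-weight mixture of Gaussians on past points."""
    norm = 1.0 / (bandwidth * math.sqrt(2 * math.pi))
    total = sum(norm * math.exp(-0.5 * ((x - c) / bandwidth) ** 2) for c in centers)
    return total / len(centers)

def tpe_minimize(objective, low, high, n_trials=60, n_startup=10,
                 gamma=0.25, n_candidates=24, seed=0):
    """Simplified 1-D TPE (hypothetical helper). Returns (best_x, best_loss)."""
    rng = random.Random(seed)
    history = []  # (x, loss) pairs
    # Warm-up: a few plain random trials to seed the history.
    for _ in range(n_startup):
        x = rng.uniform(low, high)
        history.append((x, objective(x)))
    for _ in range(n_trials - n_startup):
        # Split history: the best gamma fraction is "good", the rest "bad".
        ranked = sorted(history, key=lambda h: h[1])
        n_good = max(1, math.ceil(gamma * len(ranked)))
        good = [x for x, _ in ranked[:n_good]]
        bad = [x for x, _ in ranked[n_good:]]
        # Ad hoc bandwidths that shrink slowly as more points arrive (assumption).
        bw_good = (high - low) / (5 * len(good) ** 0.2)
        bw_bad = (high - low) / (5 * len(bad) ** 0.2)
        # Draw candidates from l(x), the density of the good trials.
        candidates = []
        for _ in range(n_candidates):
            c = rng.choice(good) + rng.gauss(0.0, bw_good)
            candidates.append(min(high, max(low, c)))
        # Maximizing l(x) / g(x) is how TPE maximizes Expected Improvement.
        best_c = max(candidates,
                     key=lambda c: parzen_density(c, good, bw_good)
                     / (parzen_density(c, bad, bw_bad) + 1e-12))
        # Evaluate the chosen point and add it to the history.
        history.append((best_c, objective(best_c)))
    return min(history, key=lambda h: h[1])

best_x, best_loss = tpe_minimize(lambda x: (x - 2.0) ** 2, low=-5.0, high=5.0)
print(f"best x = {best_x:.3f}, loss = {best_loss:.5f}")
```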

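So where does Hyperopt fit into the picture?

Hyperopt is a Python library that implements TPE (as well as random search): you describe the search space and the objective, and it does the searching!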

Let's take a toy example:

Minimize: f(x,y) = x^2 - y^2

such that x belongs to a range R1 and y belongs to a range R2.

Set the ranges:

let x be in [-3, 1]

and y be in [-2, 2].

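Set the objective function:

f(x, y) = x^2 - y^2

Then let Hyperopt minimize it with TPE, passing the function itself (not its value), the search space, the TPE algorithm, and a budget of evaluations:

best = fmin(fn=f, space=space, algo=tpe.suggest, max_evals=100)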

Boom!! You'll get the

best values of x and y found within those ranges.

AND NOW IT'S TIME TO GET OUR HANDS DIRTY!!!

If you know what we mean.

You can reach out to us

tanay_agrawal@hotmail.com

or

kesarianubhav@gmail.com

Efficient Hyperparameter Optimization

By Anubhav Kesari
