Appium and its TensorFlow Brain

Problem Statement

`elementByClassName("android.support.v4.view.ViewPager")`

`elementByXPath(".//*[@text='cart']")`

`elementById("cart")`

`elementByClassChain("**/XCUIElementTypeWindow/**/XCUIElementTypeButton")`

Locator Strategies

`elementByCustom("ai:cart")`

AI Locator
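Each locator call above ultimately becomes a WebDriver "find element" request whose body names a strategy (`using`) and a selector (`value`). The sketch below is a minimal illustration under our reading of Appium 1.x conventions, not the plugin's code: it maps the strategies shown here to their wire names and builds the request body for the AI locator. The `customFindModules` and `shouldUseCompactResponses` capabilities reflect how the test.ai classifier plugin was registered in Appium 1.x and should be checked against the plugin's README for your Appium version; `findBody` and `parseCustomSelector` are hypothetical helpers.

```javascript
// Wire-level "using" values for the locator strategies shown above.
const STRATEGIES = {
  className: "class name",
  xpath: "xpath",
  id: "id",
  iosClassChain: "-ios class chain",
  custom: "-custom", // Appium's strategy for plugin-provided locators such as the AI classifier
};

// Capabilities that (in Appium 1.x) register the test.ai classifier under the "ai" key,
// which then becomes the prefix in selectors like "ai:cart".
const capabilities = {
  platformName: "Android",
  customFindModules: { ai: "test-ai-classifier" },
  shouldUseCompactResponses: false,
};

// Hypothetical helper: build the JSON body of a "find element" request.
function findBody(strategy, selector) {
  const using = STRATEGIES[strategy];
  if (using === undefined) throw new Error(`unknown strategy: ${strategy}`);
  return { using, value: selector };
}

// Hypothetical helper: split a custom selector such as "ai:cart" into module key and label.
function parseCustomSelector(selector) {
  const idx = selector.indexOf(":");
  if (idx === -1) return { module: null, label: selector };
  return { module: selector.slice(0, idx), label: selector.slice(idx + 1) };
}

console.log(JSON.stringify(findBody("custom", "ai:cart")));
// {"using":"-custom","value":"ai:cart"}
```

Seen this way, the AI locator is just one more strategy: the test code stays the same shape, and only the thing that resolves the selector changes.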

Demo

Appium AI Plugin

How does it work?

- Get all leaf DOM elements from the application under test, including each element's coordinates and size.

- Take a screenshot of the application.

- Crop the screenshot into one small image per element, using each element's coordinates and size.

- Run each element's cropped image through the neural network.
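The first three steps can be sketched in plain JavaScript. This is a minimal illustration, not the plugin's implementation, and it assumes the element tree has already been parsed into hypothetical `{ tag, rect, children }` objects and that the screenshot has been decoded into a flat, row-major RGB `Uint8Array` (3 bytes per pixel). `collectLeaves` mirrors the XPath `//*[not(child::*)]`, and `cropRGB` cuts out each element's rectangle, clamped to the screenshot bounds.

```javascript
// Collect every node that has no children (the "leaf" elements).
function collectLeaves(node, out = []) {
  const children = node.children || [];
  if (children.length === 0) {
    out.push(node);
  } else {
    for (const child of children) collectLeaves(child, out);
  }
  return out;
}

// Crop one element's rectangle out of an RGB screenshot.
function cropRGB(pixels, imgWidth, imgHeight, rect) {
  // Clamp the element's rectangle to the screenshot bounds.
  const x0 = Math.max(0, Math.floor(rect.x));
  const y0 = Math.max(0, Math.floor(rect.y));
  const x1 = Math.min(imgWidth, Math.floor(rect.x + rect.width));
  const y1 = Math.min(imgHeight, Math.floor(rect.y + rect.height));
  const w = x1 - x0;
  const h = y1 - y0;
  if (w <= 0 || h <= 0) return null; // element is off-screen or has zero size
  const out = new Uint8Array(w * h * 3);
  for (let row = 0; row < h; row++) {
    // Copy one row of w pixels (w * 3 bytes) at a time.
    const srcStart = ((y0 + row) * imgWidth + x0) * 3;
    out.set(pixels.subarray(srcStart, srcStart + w * 3), row * w * 3);
  }
  return { width: w, height: h, pixels: out };
}

// Pair each visible leaf element with its cropped image, ready for classification.
function cropAllLeaves(root, pixels, imgWidth, imgHeight) {
  return collectLeaves(root)
    .map((el) => ({ element: el, crop: cropRGB(pixels, imgWidth, imgHeight, el.rect) }))
    .filter((entry) => entry.crop !== null);
}
```

Skipping zero-size and off-screen elements before classification avoids wasting model calls on crops that cannot contain a visible icon.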

How does the model work?

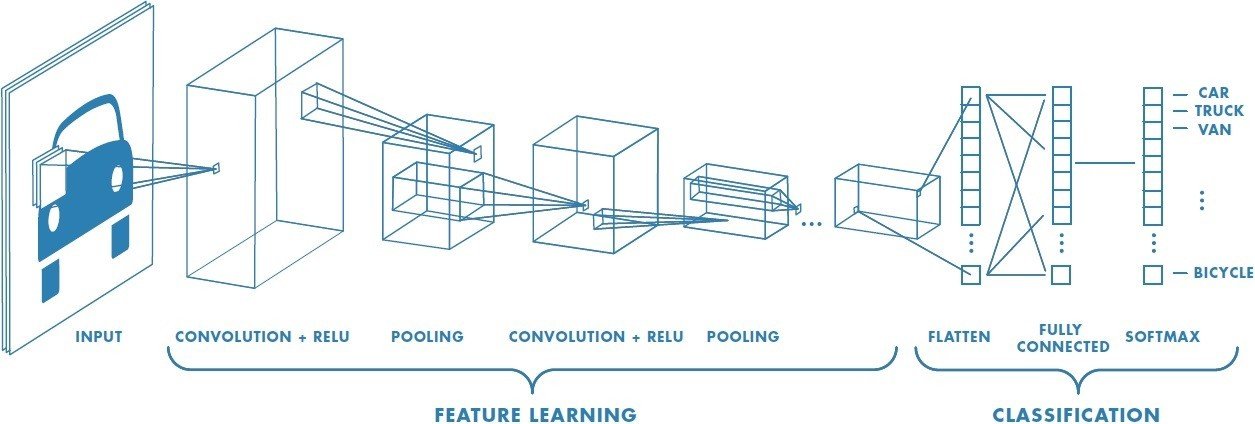

The model is a neural network that takes a 224×224-pixel, 3-channel color image as input and outputs 105 numbers, each representing the confidence (probability) that the input image matches one of the 105 labels the model knows about (cart, edit, twitter, etc.).
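Turning those 105 numbers into a locator decision can be sketched as follows. Assumptions: the network's last layer produces raw scores (logits), so `softmax` converts them to probabilities (skip it if the model already emits probabilities); and an element counts as a match when its top label equals the requested one and clears a confidence threshold. The matching rule, the helper names, and the 0.2 default threshold are illustrative, not the plugin's documented behavior.

```javascript
// Convert raw scores (logits) into probabilities that sum to 1.
function softmax(logits) {
  const max = Math.max(...logits); // subtracting the max avoids overflow in Math.exp
  const exps = logits.map((v) => Math.exp(v - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Return the most probable label and its probability.
function topLabel(probs, labels) {
  if (probs.length !== labels.length) throw new Error("one probability per label expected");
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return { label: labels[best], confidence: probs[best] };
}

// Hypothetical matching rule: an element matches "ai:<wanted>" when its top label is
// <wanted> and the confidence clears the (illustrative) threshold.
function isMatch(logits, labels, wanted, threshold = 0.2) {
  const { label, confidence } = topLabel(softmax(logits), labels);
  return label === wanted && confidence >= threshold;
}
```

With the real model, `labels` would hold all 105 label names in the model's output order; a threshold trades missed matches against false positives.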

The model is MobileNet v1, a pre-trained model released by Google AI. MobileNet is a “deep”, “convolutional” neural network.
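A worked count helps explain why MobileNet suits a plugin that must classify many crops quickly. MobileNet replaces most standard convolutions with depthwise separable ones: a $k \times k$ depthwise filter per input channel followed by a $1 \times 1$ pointwise convolution. For a layer with $C_{in}$ input channels, $C_{out}$ output channels, and a $k \times k$ kernel, the weight counts (ignoring biases) compare as below; the 512-channel numbers are illustrative, not taken from the model used here.

$$
\begin{aligned}
W_{\text{standard}} &= k^2 C_{in} C_{out}, \qquad W_{\text{separable}} = k^2 C_{in} + C_{in} C_{out},\\
\frac{W_{\text{separable}}}{W_{\text{standard}}} &= \frac{1}{C_{out}} + \frac{1}{k^2}.
\end{aligned}
$$

For $k = 3$ and $C_{in} = C_{out} = 512$: $W_{\text{standard}} = 9 \cdot 512 \cdot 512 = 2{,}359{,}296$ and $W_{\text{separable}} = 9 \cdot 512 + 512 \cdot 512 = 266{,}752$, a ratio of about $0.113$, or roughly $8.8\times$ fewer weights.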

Neural Network

Training the model to recognize app icons

Demo

| Selector Strategy | Breaks when | When it breaks |
|---|---|---|
| CSS/XPath | Your app's DOM changes | Easy to understand why; easy to fix |
| Test.ai's image classifier | Your app's icons change visibly; the model changes (that is, when you upgrade the Appium plugin) | Hard to understand why; hard to fix |

PROS

CONS

OBJECT DETECTION TECHNIQUE

Demo

Future Improvements

- Use greyscale images for training

- Use the XPath `//*[not(child::*)]` to identify all leaf elements on the screen

- Pass all cropped images to TensorFlow at once, in a single batch

- Use SSD (Single Shot MultiBox Detector) for object detection

- Use another MobileNet model, e.g. one with a 128×128-pixel input

- Build a more accurate test dataset for benchmarking the model

- Reduce false positives and misclassifications
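Two of these ideas, greyscale input and scoring every element in one TensorFlow call, can be sketched as a preprocessing step. This is a minimal sketch under stated assumptions: each crop is a hypothetical `{ width, height, pixels }` object with flat, row-major RGB pixels; greyscale uses the ITU-R BT.601 luma weights (a common choice, not necessarily what test.ai would use); crops are brought to a common size with nearest-neighbour resizing; and the helper names are hypothetical.

```javascript
// Convert an RGB crop (flat Uint8Array, 3 bytes per pixel) to greyscale in [0, 1]
// using the ITU-R BT.601 luma weights.
function toGreyscale(rgb) {
  const n = rgb.length / 3;
  const out = new Float32Array(n);
  for (let i = 0; i < n; i++) {
    const r = rgb[3 * i], g = rgb[3 * i + 1], b = rgb[3 * i + 2];
    out[i] = (0.299 * r + 0.587 * g + 0.114 * b) / 255;
  }
  return out;
}

// Nearest-neighbour resize of a single-channel image so every crop has the same size.
function resizeNearest(src, srcW, srcH, dstW, dstH) {
  const out = new Float32Array(dstW * dstH);
  for (let y = 0; y < dstH; y++) {
    const sy = Math.min(srcH - 1, Math.floor((y * srcH) / dstH));
    for (let x = 0; x < dstW; x++) {
      const sx = Math.min(srcW - 1, Math.floor((x * srcW) / dstW));
      out[y * dstW + x] = src[sy * srcW + sx];
    }
  }
  return out;
}

// Pack all crops into one contiguous buffer laid out as [N, size, size, 1],
// so a single model call can score every element.
function packGreyBatch(crops, size) {
  const plane = size * size;
  const data = new Float32Array(crops.length * plane);
  crops.forEach((crop, i) => {
    const grey = toGreyscale(crop.pixels);
    data.set(resizeNearest(grey, crop.width, crop.height, size, size), i * plane);
  });
  return { shape: [crops.length, size, size, 1], data };
}
```

For a 128×128 greyscale model one would call `packGreyBatch(crops, 128)`; with TensorFlow.js, the result could be wrapped as a tensor via `tf.tensor4d(batch.data, batch.shape)`. Batching replaces one inference call per element with a single call per screen.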

Appium and AI

By Sai Krishna
