Kafka

Intro

Kafka is a distributed streaming platform.

- Fast
- High accuracy
- Resilient
- Fault tolerant
- Decouples system dependencies
- High throughput

Before Kafka

[Diagram series: a user interacts with an application backed by a database. As the application grows from one service to many, the services have to communicate with one another.]

Kafka

[Diagram: the user interacts with the same application, but its services now act as producers and consumers and communicate via Kafka.]

Kafka

[Diagram: producers publish (pub) events to Kafka, and consumers subscribe (sub) to them.]

An event carries metadata: a key, a value, and a timestamp. Events (messages) are stored on disk.
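The event fields listed above can be pictured as a small record appended to a log. The following is a minimal sketch, not Kafka's API: `KafkaEvent` and `EventLog` are hypothetical names, and the log lives in memory here, whereas Kafka persists it to disk.

```typescript
// Hypothetical names throughout; this only illustrates the shape of an event.
interface KafkaEvent {
  key: string;
  value: string;
  timestamp: number; // milliseconds since the Unix epoch
}

// An append-only log: events are added at the end and never modified.
class EventLog {
  private readonly events: KafkaEvent[] = [];

  // Appends an event and returns its offset (its position in the log).
  append(event: KafkaEvent): number {
    this.events.push(event);
    return this.events.length - 1;
  }

  // Returns every event from the given offset onward without removing anything.
  readFrom(offset: number): KafkaEvent[] {
    return this.events.slice(offset);
  }
}

const log = new EventLog();
const first = log.append({ key: 'id', value: 'article-1', timestamp: 1000 });
const second = log.append({ key: 'id', value: 'article-2', timestamp: 2000 });
```

Because reading does not delete anything, the same events can be read again later from any offset.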

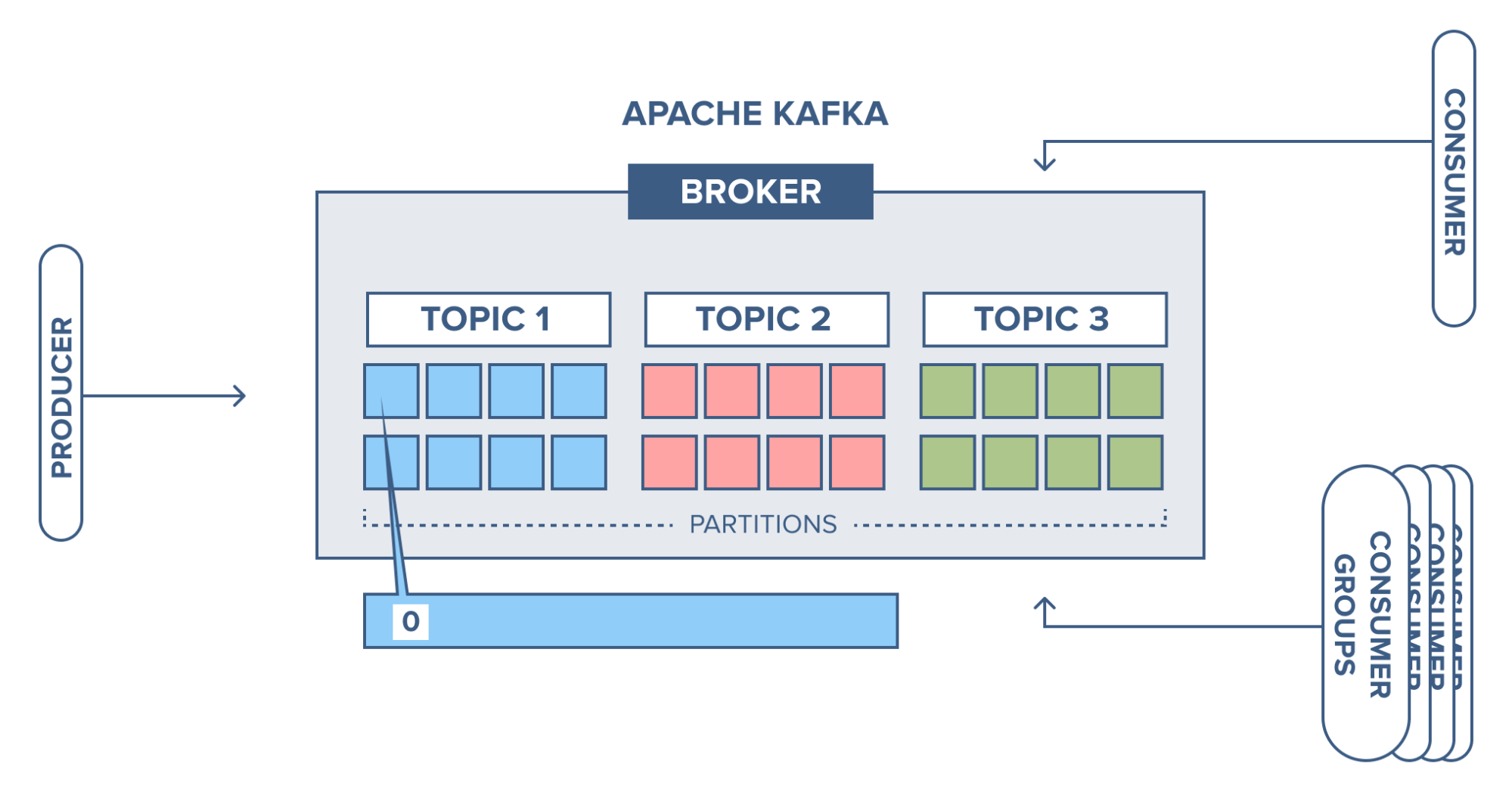

Kafka

[Diagram: a broker holds topic 1, topic 2, and topic 3. A producer writes events to a topic, and a consumer reads events from it.]
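To make the broker, topic, producer, and consumer roles concrete, here is a toy in-memory sketch. `MiniBroker` and its methods are hypothetical and far simpler than a real broker; the one idea it tries to show is that each consumer group keeps its own read position per topic, so several groups can read the same messages independently.

```typescript
// A toy, in-memory stand-in for a broker. All names here are hypothetical.
class MiniBroker {
  // topic name -> ordered list of messages
  private readonly topics = new Map<string, string[]>();

  // "group:topic" -> next offset that group will read
  private readonly offsets = new Map<string, number>();

  // Producer side: append a message to the end of a topic.
  publish(topic: string, message: string): void {
    const messages = this.topics.get(topic) ?? [];
    messages.push(message);
    this.topics.set(topic, messages);
  }

  // Consumer side: return the messages this group has not seen yet
  // and advance the group's offset past them.
  poll(group: string, topic: string): string[] {
    const messages = this.topics.get(topic) ?? [];
    const offsetKey = `${group}:${topic}`;
    const start = this.offsets.get(offsetKey) ?? 0;
    this.offsets.set(offsetKey, messages.length);
    return messages.slice(start);
  }
}

const broker = new MiniBroker();
broker.publish('topic 1', 'a');
broker.publish('topic 1', 'b');
broker.publish('topic 2', 'x');

// Two independent groups both receive everything published to topic 1.
const loggerBatch = broker.poll('logger', 'topic 1');
const counterBatch = broker.poll('counter', 'topic 1');

// A later poll returns only what arrived since that group's previous poll.
broker.publish('topic 1', 'c');
const loggerNext = broker.poll('logger', 'topic 1');
```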

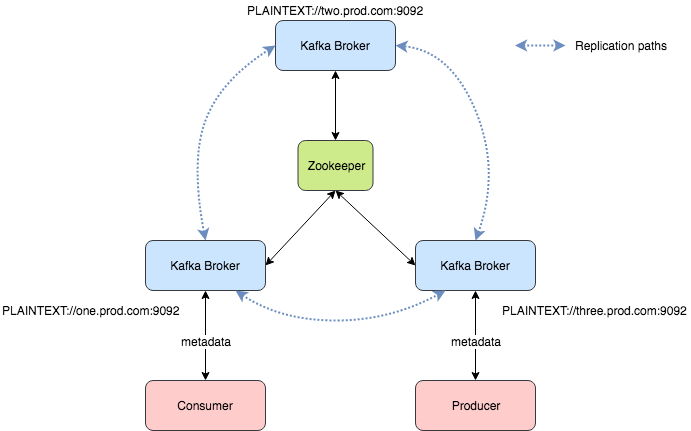

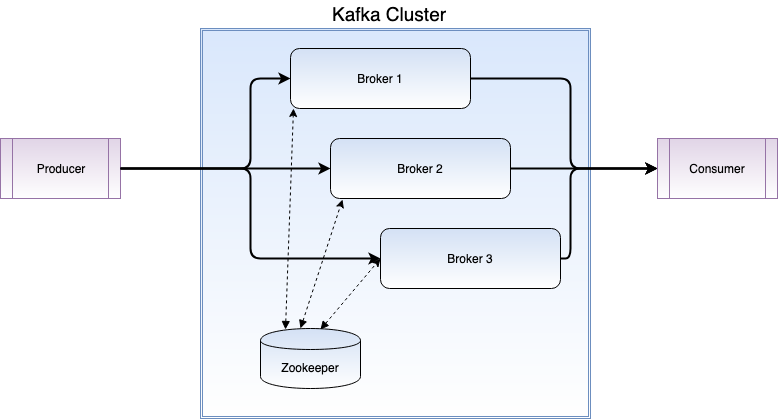

Kafka

[Diagram: a cluster made up of broker 1, broker 2, and broker 3, alongside ZooKeeper.]

ZooKeeper manages the brokers.

Case

Article Views

Scenario

We need to record an article's view count on every request to the server, but in a scalable system this becomes a bottleneck.

Every update to the view counter in the database locks the data row (or the whole table if the indexes are badly configured), which hurts query performance.

Easy Solution

- Cache the view counter value in memory

- Write it back to the database at long intervals
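The two steps above can be sketched as a small write-back counter. This is an illustration under assumptions, not the implementation used later in the deck: `WriteBackCounter` is a hypothetical class, and a `Map` stands in for the database.

```typescript
// Hypothetical helper: counts views in memory and persists them in batches.
class WriteBackCounter {
  // articleId -> views counted since the last flush
  private readonly pending = new Map<string, number>();

  private readonly persist: (articleId: string, delta: number) => void;

  constructor(persist: (articleId: string, delta: number) => void) {
    this.persist = persist;
  }

  // Called on every view; touches memory only.
  record(articleId: string): void {
    this.pending.set(articleId, (this.pending.get(articleId) ?? 0) + 1);
  }

  // Called at long intervals; one database write per article, not per view.
  flush(): void {
    this.pending.forEach((delta, articleId) => this.persist(articleId, delta));
    this.pending.clear();
  }
}

// A Map stands in for the database table.
const fakeDatabase = new Map<string, number>();
let databaseWrites = 0;

const counter = new WriteBackCounter((articleId, delta) => {
  databaseWrites += 1;
  fakeDatabase.set(articleId, (fakeDatabase.get(articleId) ?? 0) + delta);
});

for (let i = 0; i < 1000; i += 1) {
  counter.record('article-1');
}
counter.record('article-2');

// In a real service this would run on a timer, for example via setInterval.
counter.flush();
```

In this run, 1001 views result in two database writes, one per article, which is the point of the approach.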


Risk

- Accidental shutdown (counts not yet written back are lost)

- View counts drift out of sync over time across multiple application nodes
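Both risks can be reproduced in a few lines. The sketch below assumes each node caches the absolute counter value and overwrites the database row on write-back, which is one plausible reading of the easy solution. `AppNode` is a hypothetical class and a `Map` stands in for the database.

```typescript
const ARTICLE = 'article-1';

// A Map stands in for the database; the article starts with 100 views.
const database = new Map<string, number>([[ARTICLE, 100]]);

// Hypothetical application node that keeps its own in-memory counter.
class AppNode {
  private cachedViews: number;

  constructor() {
    // Each node loads the current value once, then counts in its own memory.
    this.cachedViews = database.get(ARTICLE) ?? 0;
  }

  recordView(): void {
    this.cachedViews += 1;
  }

  // Periodic write-back: overwrites the row with this node's cached value.
  writeBack(): void {
    database.set(ARTICLE, this.cachedViews);
  }
}

// Sync issue: two nodes start from the same value and overwrite each other.
const nodeA = new AppNode();
const nodeB = new AppNode();
for (let i = 0; i < 3; i += 1) nodeA.recordView();
for (let i = 0; i < 5; i += 1) nodeB.recordView();
nodeA.writeBack(); // the row now holds 103
nodeB.writeBack(); // the row now holds 105; node A's 3 views are gone

// Accidental shutdown: node C counts a view but never gets to write it back.
const nodeC = new AppNode();
nodeC.recordView();

const actualViews = 100 + 3 + 5 + 1;
const storedViews = database.get(ARTICLE);
```

Here the database ends up with 105 views although 109 actually happened.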

Fantastic Solution

- Stream processing

- In-memory database

In my case

[Diagram: on every article view, the application produces a Kafka message and updates the cached view count in Redis; reads get the cached view count from Redis. Consumers subscribe to the topic and consume the messages. The full logs are written to a dedicated table (ArticleViewLogs), and the aggregated counts in ArticleViews are updated periodically.]
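Before the real code, the flow in the diagram can be traced end to end with in-memory stand-ins. Everything in this sketch is hypothetical: an array plays the Kafka topic, a `Map` plays Redis, and two more collections play the ArticleViewLogs and ArticleViews tables.

```typescript
interface ViewEvent {
  articleId: string;
  memberId?: string;
}

const viewTopic: ViewEvent[] = [];               // stands in for the Kafka topic
const redisCache = new Map<string, number>();    // stands in for Redis
const articleViewLogs: ViewEvent[] = [];         // full log table
const articleViews = new Map<string, number>();  // aggregated table
let consumerOffset = 0;

// Application side: update the cached count and produce a message on every view.
function recordView(event: ViewEvent): void {
  redisCache.set(event.articleId, (redisCache.get(event.articleId) ?? 0) + 1);
  viewTopic.push(event);
}

// Consumer side: copy unread messages into the full log table.
function consume(): void {
  viewTopic.slice(consumerOffset).forEach((event) => articleViewLogs.push(event));
  consumerOffset = viewTopic.length;
}

// Periodic job: recount the logs into the aggregated table.
function aggregate(articleId: string): void {
  const count = articleViewLogs.filter((entry) => entry.articleId === articleId).length;
  articleViews.set(articleId, count);
}

// Read path: cache first, aggregated table on a miss.
function getViews(articleId: string): number {
  const cached = redisCache.get(articleId);
  if (cached !== undefined) {
    return cached;
  }
  const stored = articleViews.get(articleId) ?? 0;
  redisCache.set(articleId, stored);
  return stored;
}

recordView({ articleId: 'a1', memberId: 'm1' });
recordView({ articleId: 'a1' });
recordView({ articleId: 'a2' });
const viewsFromCache = getViews('a1');

consume();
aggregate('a1');
aggregate('a2');

redisCache.clear(); // simulate the cache entries expiring
const viewsAfterExpiry = getViews('a1');
```

The count survives the simulated cache expiry because the aggregated table was rebuilt from the logged events rather than from the cache.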

```typescript
import redis from 'redis';
import { getManager } from 'typeorm';
// The ArticleView entity is defined elsewhere in the project (not shown on the slide).

const REDIS_URI = process.env.REDIS_URI || 'redis://127.0.0.1:6379';
const redisClient = redis.createClient({ url: REDIS_URI });
const CACHE_EXPIRE_SECONDS = 4 * 60 * 60; // 4 hours

export async function getArticleViews(articleId: string): Promise<number> {
  return new Promise((resolve, reject) => {
    redisClient.get(articleId, async (err, views) => {
      if (err) {
        reject(err);
        return;
      }
      // Cache hit: the counter is stored as a string.
      if (views) {
        resolve(Number(views));
        return;
      }
      try {
        // Cache miss: read the aggregated table, then repopulate the cache.
        const manager = getManager();
        const articleViewRecord = await manager.findOne(ArticleView, { ArticleId: articleId });
        const storedViews = articleViewRecord ? articleViewRecord.views : 0;
        redisClient.set(articleId, storedViews.toString());
        redisClient.expire(articleId, CACHE_EXPIRE_SECONDS);
        resolve(storedViews);
      } catch (dbError) {
        // Without this, a failed query would leave the promise pending forever.
        reject(dbError);
      }
    });
  });
}
```

Get from cache

On a cache miss, read from the aggregated table.

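```typescript
import debug from 'debug';
import { Kafka, logLevel } from 'kafkajs';

const KAFKA_CLIENT_ID = process.env.KAFKA_CLIENT_ID || 'wealth-usage-listener';
const KAFKA_BROKER_URI = process.env.KAFKA_BROKER_URI || 'localhost:7092';
const KAFKA_ARTICLE_VIEW_TOPIC = process.env.KAFKA_ARTICLE_VIEW_TOPIC || 'wealth-article-views';

const kafka = new Kafka({
  logLevel: logLevel.INFO,
  brokers: [KAFKA_BROKER_URI],
  clientId: KAFKA_CLIENT_ID,
});

// The declarations below are not shown on the slide; they are inferred from usage.
// The debug namespace in particular is an assumption.
const debugKafkaProducer = debug('Wealth:KafkaProducer');
interface QueueRecord { articleId: string; memberId?: string }
const producer = kafka.producer();
let isInitialing = false;
let isConnected = false;

// Views recorded before the producer is connected wait here.
const initQueue: QueueRecord[] = [];

export default async function init() {
  if (isInitialing || isConnected) {
    return;
  }
  debugKafkaProducer('Connecting Producer...');
  isInitialing = true;
  try {
    await producer.connect();
    isConnected = true;
  } finally {
    // Reset the flag even if connecting fails, so a later call can retry.
    isInitialing = false;
  }

  // Send the queued views in order, emptying the queue so none is sent twice.
  for (const record of initQueue.splice(0)) {
    await producer.send({
      topic: KAFKA_ARTICLE_VIEW_TOPIC,
      messages: [
        {
          key: 'id',
          value: record.articleId,
          ...(record.memberId ? { headers: { memberId: record.memberId } } : {}),
        },
      ],
    });
  }
}
```

Create Producer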

```typescript
// Uses redisClient and CACHE_EXPIRE_SECONDS from the cache snippet, and
// producer, initQueue, init, and the connection flags from the producer snippet.
export async function recordArticleView(
  articleId: string,
  memberId?: string,
): Promise<void> {
  // INCR is atomic, so concurrent views cannot overwrite each other.
  // A missing key is treated as 0 and becomes 1.
  redisClient.incr(articleId, () => {
    redisClient.expire(articleId, CACHE_EXPIRE_SECONDS);
  });

  if (!isConnected) {
    debugKafkaProducer('Kafka Producer is not connected.');
    // Queue the view; init() sends the queue once the producer connects.
    initQueue.push({ articleId, memberId });
    if (!isInitialing) {
      init().catch((err) => debugKafkaProducer('Failed to connect producer: %O', err));
    }
    return;
  }

  await producer.send({
    topic: KAFKA_ARTICLE_VIEW_TOPIC,
    messages: [
      {
        key: 'id',
        value: articleId,
        ...(memberId ? { headers: { memberId } } : {}),
      },
    ],
  });
}
```

Producer

```typescript
import { getManager } from 'typeorm';
// `kafka`, `debugKafkaConsumer`, and the ArticleView / ArticleViewLog entities
// are defined elsewhere in the project and are not shown on the slide.

const KAFKA_CONSUMER_GROUP_ID = process.env.KAFKA_CONSUMER_GROUP_ID || 'wealth-consumer';
const KAFKA_ARTICLE_VIEW_TOPIC = process.env.KAFKA_ARTICLE_VIEW_TOPIC || 'wealth-article-views';
const ARTICLE_VIEW_UPDATE_FEQ_IN_MS = Number(process.env.ARTICLE_VIEW_UPDATE_FEQ_IN_MS || '30000'); // 30 sec

const consumer = kafka.consumer({ groupId: KAFKA_CONSUMER_GROUP_ID });

export default async function run() {
  // A kafkajs consumer has to connect before it subscribes.
  await consumer.connect();
  await consumer.subscribe({ topic: KAFKA_ARTICLE_VIEW_TOPIC });

  // Last aggregation time per article, so one busy article cannot starve the rest.
  const lastSync = new Map<string, number>();

  await consumer.run({
    eachMessage: async ({ topic, message }) => {
      // Ignore anything that is not a well-formed article-view message.
      if (topic !== KAFKA_ARTICLE_VIEW_TOPIC || message.key?.toString() !== 'id' || !message.value) {
        return;
      }
      const articleId = message.value.toString();
      const manager = getManager();

      // 1. Append the view to the full log table.
      const log = manager.create(ArticleViewLog, {
        ArticleId: articleId,
        MemberId: message.headers?.memberId?.toString(),
      });
      await manager.save(log);

      // 2. Periodically refresh the aggregated count for this article.
      const syncedAt = lastSync.get(articleId) ?? 0;
      if (ARTICLE_VIEW_UPDATE_FEQ_IN_MS && (Date.now() - syncedAt) > ARTICLE_VIEW_UPDATE_FEQ_IN_MS) {
        const count = await manager.count(ArticleViewLog, { ArticleId: articleId });
        const viewRecord = await manager.findOne(ArticleView, { ArticleId: articleId });
        if (viewRecord) {
          viewRecord.views = count;
          viewRecord.updatedAt = new Date();
          await manager.save(viewRecord);
        } else {
          const newRecord = manager.create(ArticleView, {
            ArticleId: articleId,
            views: count,
          });
          await manager.save(newRecord);
        }
        lastSync.set(articleId, Date.now());
        debugKafkaConsumer(`Article ${articleId} updated views to ${count}`);
      }
    },
  });
}
```

Create Consumer

```typescript
import debug from 'debug';
import Koa from 'koa';
import { createConnection } from 'typeorm';
import { getArticleViews } from './worker';

const debugServer = debug('Wealth:UsageListenerServer');
const USAGE_LISTENER_PORT = Number(process.env.USAGE_LISTENER_PORT || '6068');

const app = new Koa();

// The request path is treated as the article ID: GET /<articleId> returns its view count.
app.use(async (ctx) => {
  const articleId = ctx.url.replace(/^\//, '');
  ctx.body = await getArticleViews(articleId);
});

// Start listening only after the TypeORM connection is ready.
createConnection().then(() => {
  app.listen(USAGE_LISTENER_PORT, () => {
    debugServer(`Usage Listener Server listen on ${USAGE_LISTENER_PORT}`);
  });
});
```

Export Service

[Diagram: a user interacts with the application, which updates data in the database. Each update is also recorded as an event (metadata: key, value, timestamp); logging the events preserves the order in which they happened.]

Technically speaking, event streaming is the practice of capturing data in real time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events.

Kafka

By Jay Chou
