Building an Anagram Solver with Rust, Yew and Netlify

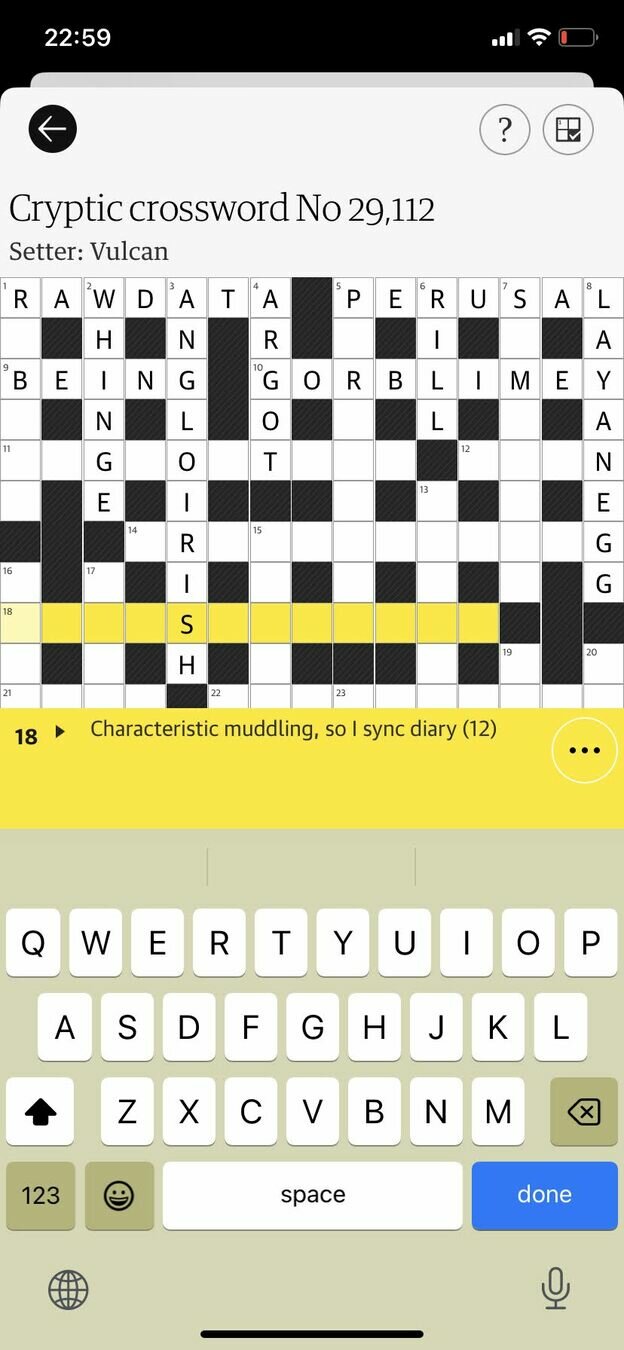

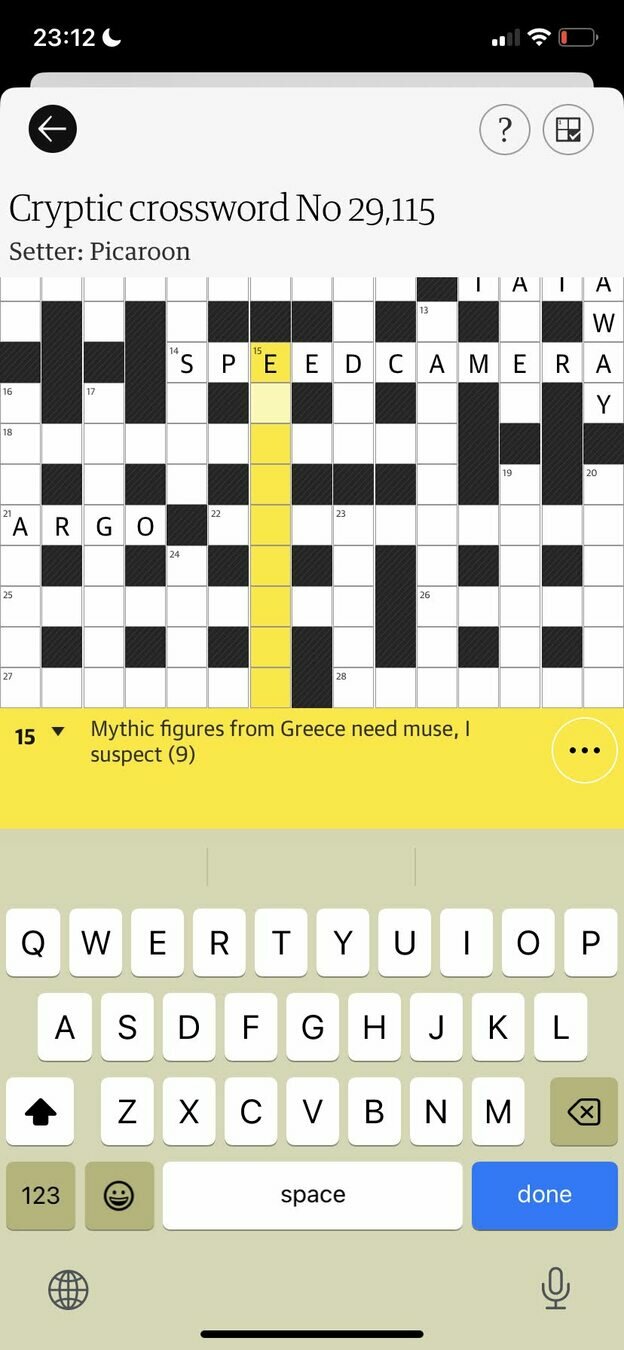

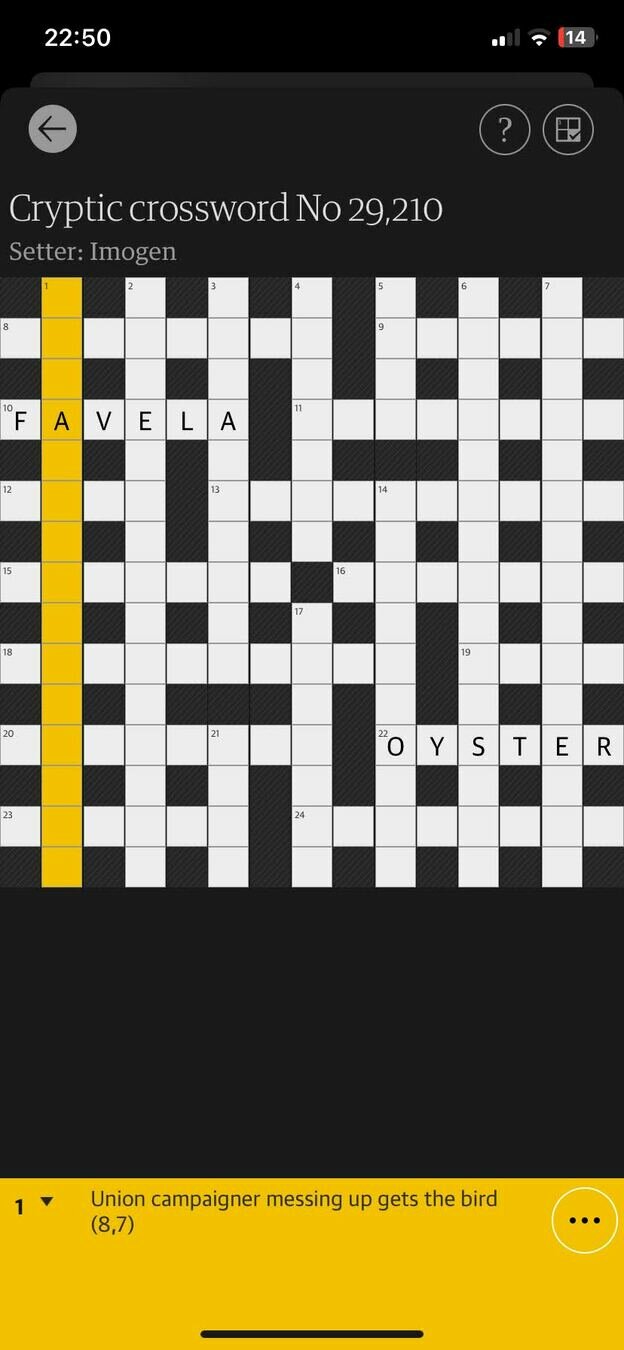

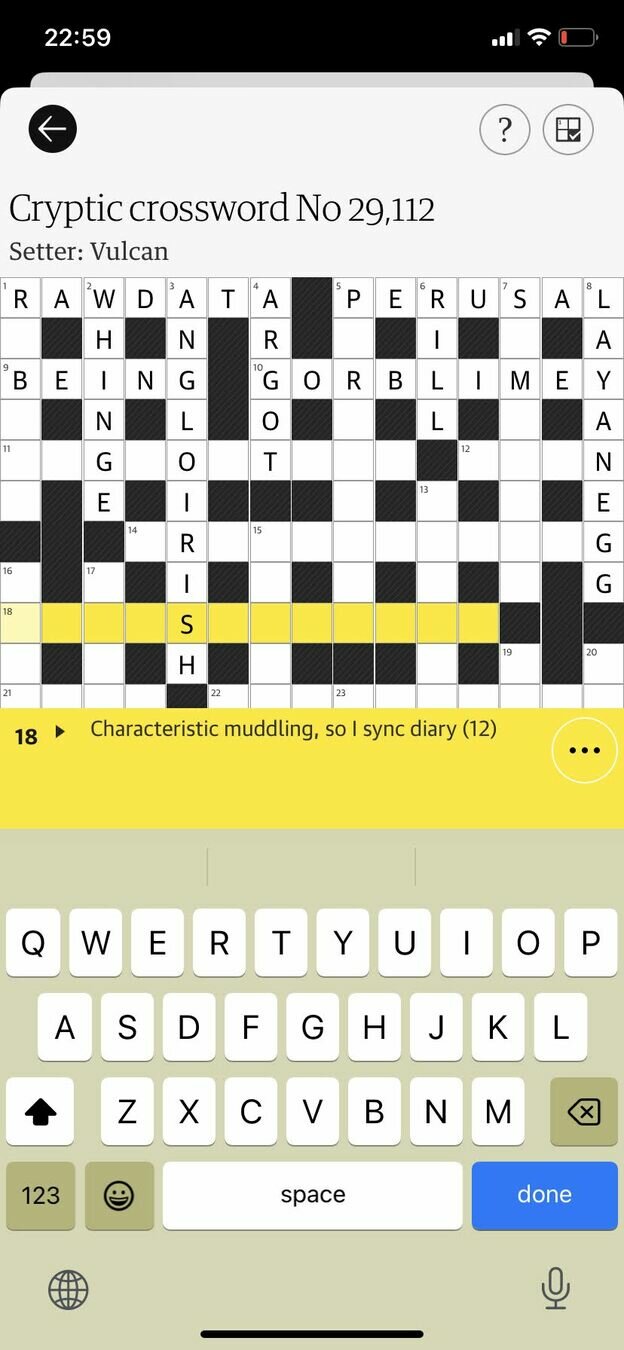

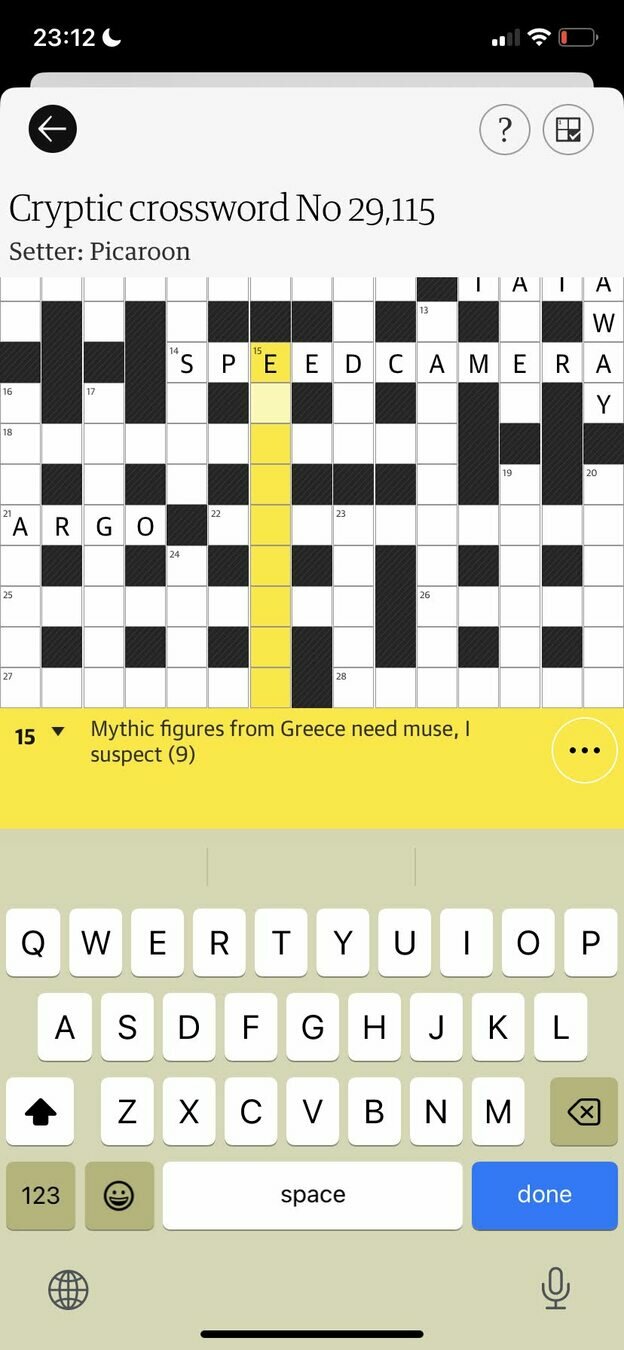

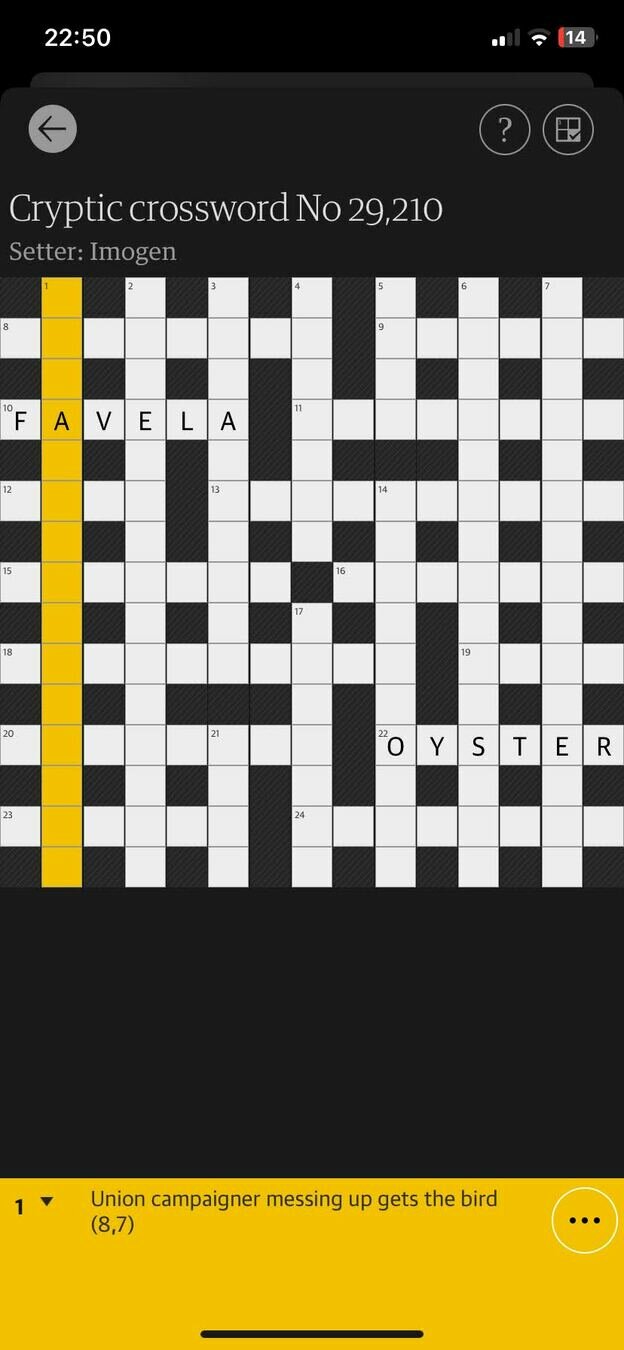

Characteristic muddling, so I sync diary (12)

Clue anatomy: "Characteristic" (straight) · "muddling" (anagram hint) · "so I sync diary" (anagram) · "(12)" (length)

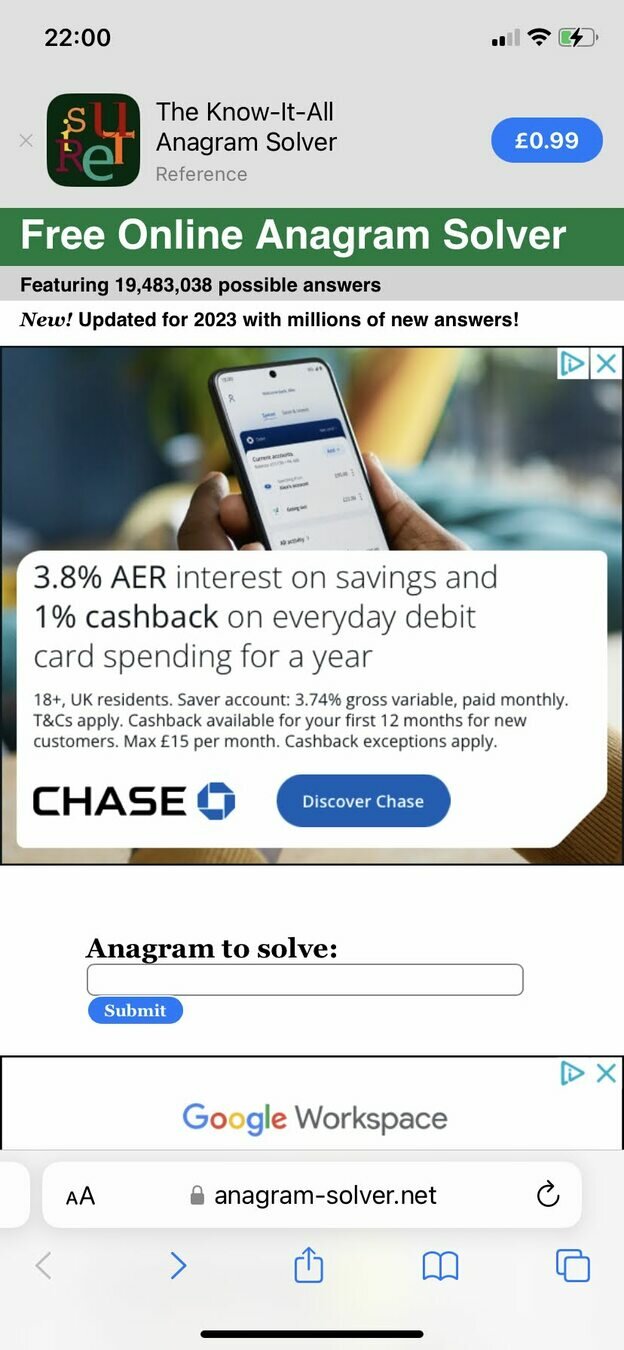

Screenshot annotations: banner ad, giant ad, another giant ad, button, text box

Mission

Build a better anagram solver

Project goals

- Build something useful (for me)

- Low (user-perceived) latency

- Become more familiar with Rust

- Learn about WebAssembly

- Learn about Netlify

- Have fun!

- Data source
- Database
- Processing
- Backend
- Frontend

Data source

We need a big list of valid English words and phrases, also known as... a dictionary.

Comprehensive, but unwieldy 🫤

kaikki.org to the rescue: raw XML dump → kaikki.org → machine-readable JSON

Anagram database

- What shape should our data be?
  - What kind of queries do we need to support?
- How should it be persisted?

Given a word as input, I want all words that are anagrams of that word.

Query: DESIGNERS

Result: DESIGNERS, INGRESSED, REDESIGNS

What's an anagram?

The same letters in a different order

Definition: the "anagram key" of a given word is the letters of the word sorted in alphabetical order (with spaces removed)

DESIGNERS, INGRESSED, REDESIGNS → DEEGINRSS

If two words have the same anagram key, they are anagrams of each other
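A minimal Rust sketch of the anagram key definition above. The name `to_anagram_key` is a hypothetical counterpart to the `toAnagramKey` pseudocode; uppercasing the input is an assumption (the word list appears to be stored in uppercase), and only whitespace is removed, so punctuation such as hyphens would stay in the key.

```rust
/// Anagram key: the word's letters, uppercased (an assumption), with whitespace
/// removed, sorted alphabetically.
fn to_anagram_key(word: &str) -> String {
    let mut letters: Vec<char> = word
        .chars()
        .filter(|c| !c.is_whitespace())
        .flat_map(char::to_uppercase)
        .collect();
    letters.sort_unstable();
    letters.into_iter().collect()
}

fn main() {
    for word in ["DESIGNERS", "INGRESSED", "REDESIGNS", "more Thatcherian"] {
        println!("{word} -> {}", to_anagram_key(word));
    }
}
```

Two words are anagrams exactly when this function returns the same string for both, which is what makes the key usable as a map key.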

Let's make our database a Map(anagram key → list of words)

- trivial (and fast) to query

db = {
    ...,
    "DEEGINRSS": [
        "DESIGNERS",
        "INGRESSED",
        "REDESIGNS"
    ],
    ...
}

query = "DESIGNERS"
key = toAnagramKey(query)
result = db[key]
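The same build-and-query flow as a self-contained Rust sketch. `build_db` and `lookup` are hypothetical names; the map is built in memory from a small hard-coded word list rather than from the kaikki.org data, and the key helper follows the definition above (uppercased as an assumption, whitespace removed, letters sorted).

```rust
use std::collections::HashMap;

/// Anagram key: uppercased letters, whitespace removed, sorted.
fn to_anagram_key(word: &str) -> String {
    let mut letters: Vec<char> = word
        .chars()
        .filter(|c| !c.is_whitespace())
        .flat_map(char::to_uppercase)
        .collect();
    letters.sort_unstable();
    letters.into_iter().collect()
}

/// Group a word list into Map(anagram key -> list of words).
fn build_db(words: &[&str]) -> HashMap<String, Vec<String>> {
    let mut db: HashMap<String, Vec<String>> = HashMap::new();
    for &word in words {
        db.entry(to_anagram_key(word))
            .or_default()
            .push(word.to_string());
    }
    db
}

/// One key computation plus one hash lookup; unknown keys give an empty slice.
fn lookup<'a>(db: &'a HashMap<String, Vec<String>>, query: &str) -> &'a [String] {
    db.get(&to_anagram_key(query))
        .map(Vec::as_slice)
        .unwrap_or_default()
}

fn main() {
    let db = build_db(&["DESIGNERS", "MENTHONE", "INGRESSED", "REDESIGNS"]);
    println!("{:?}", lookup(&db, "DESIGNERS"));
}
```

Building the map is the expensive part (one pass over every word); each query afterwards costs only a sort of the query's letters and a single hash lookup.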

- What shape should our data be? ✅
  - Map(anagram key → list of words)
- How should it be persisted?
  - about 1 million keys
  - about 30 MB

Lots of possibilities!

- Relational DB - seems like overkill
- Redis - likewise
- DynamoDB - I don't want an AWS bill
- JSON file(s) on S3
  - A 30 MB file is not ideal, but we could partition it into smaller files
  - The frontend could download from S3, so maybe we don't need a backend?
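One way the partitioning idea could work, sketched in Rust under assumptions that are not from the talk: shard the map by anagram-key length, so a client only needs the one shard matching its query's letter count. `partition_by_key_length`, `shard_file_name` and the `anagrams-N.json` naming scheme are all hypothetical.

```rust
use std::collections::{BTreeMap, HashMap};

/// Hypothetical sharding scheme: one shard per anagram-key length.
fn partition_by_key_length(
    db: &HashMap<String, Vec<String>>,
) -> BTreeMap<usize, HashMap<String, Vec<String>>> {
    let mut shards: BTreeMap<usize, HashMap<String, Vec<String>>> = BTreeMap::new();
    for (key, words) in db {
        // chars().count() rather than len(), so a non-ASCII letter counts once
        shards
            .entry(key.chars().count())
            .or_default()
            .insert(key.clone(), words.clone());
    }
    shards
}

/// Hypothetical file name for one shard, e.g. "anagrams-9.json".
fn shard_file_name(key_len: usize) -> String {
    format!("anagrams-{key_len}.json")
}

fn main() {
    let db: HashMap<String, Vec<String>> = HashMap::from([
        (
            "DEEGINRSS".to_string(),
            vec!["DESIGNERS".to_string(), "INGRESSED".to_string(), "REDESIGNS".to_string()],
        ),
        ("EEHMNNOT".to_string(), vec!["MENTHONE".to_string()]),
        ("DEENSUV".to_string(), vec!["VENDUES".to_string()]),
    ]);
    for (len, shard) in partition_by_key_length(&db) {
        println!("{} -> {} key(s)", shard_file_name(len), shard.len());
    }
}
```

Key length is a convenient shard key because the client can compute it from the query without any extra lookup; the trade-off is uneven shard sizes, since mid-length words are far more common than very short or very long ones.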

Maybe we don't need a database??

Could our "database" simply be a Rust HashMap inside the backend app?

- Everything is in memory - very fast lookup
- No need to worry about deserialization or error handling
- It's only 1 million keys - not crazy

Data processing pipeline - first attempt

kaikki.org (JSON) → bash + jq → word list → codegen using Rust → backend

use std::collections::HashMap;

use codegen::Scope; // from the `codegen` crate

fn generate_code(hashmap: HashMap<String, Vec<String>>) -> Scope {
    let mut scope = Scope::new();
    scope.import("std::collections", "HashMap");

    // Declare `fn build_map() -> HashMap<...>` in the generated file
    let function = scope
        .new_fn("build_map")
        .allow("dead_code")
        .ret("HashMap<&'static str, Vec<&'static str>>");

    // Body: one big HashMap::from([...]) literal, one (key, vec![...]) tuple per entry
    function.line("HashMap::from([");
    for (k, vs) in hashmap {
        let values = vs
            .into_iter()
            .map(|x| format!("\"{}\"", x))
            .collect::<Vec<String>>()
            .join(", ");
        function.line(format!("(\"{}\", vec![{}])", k, values));
    }
    function.line("])");

    scope
}

Using Rust to generate Rust

$ head src/database/data/generated.rs
use std::collections::HashMap;

#[allow(dead_code)]
fn build_map() -> HashMap<&'static str, Vec<&'static str>> {
    HashMap::from([
    ("AACEEHHIMNORRTT", vec!["MORE THATCHERIAN"])
    ("ACCDEGIMNOPT", vec!["DECOMPACTING"])
    ("AACEEGHILMOPRST", vec!["ALPHAGEOMETRICS"])
    ("EEHMNNOT", vec!["MENTHONE"])
    ("DEENSUV", vec!["VENDUES"])

$ wc -l src/database/data/generated.rs
924713 src/database/data/generated.rs

$ cargo build --release
Compiling hello v0.1.0 (/Users/chris/code/dusty-study/netlify/functions/hello)
thread 'rustc' has overflowed its stack
fatal runtime error: stack overflow
error: could not compile `hello` (bin "hello")

🫤

$ head src/database/data/generated.rs
use std::collections::HashMap;

#[allow(dead_code)]
fn build_map() -> HashMap<&'static str, Vec<&'static str>> {
    HashMap::from([
    ("AACEEHHIMNORRTT", vec!["MORE THATCHERIAN"]),
    ("ACCDEGIMNOPT", vec!["DECOMPACTING"]),
    ("AACEEGHILMOPRST", vec!["ALPHAGEOMETRICS"]),
    ("EEHMNNOT", vec!["MENTHONE"]),
    ("DEENSUV", vec!["VENDUES"]),

Oh, there's a typo in the generated code: each tuple is missing its trailing comma. Let's fix that...

Good news: this fixes the stack overflow.

Bad news: it now takes 10+ minutes (maybe forever?) to compile.

🫤🫤🫤
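The fix amounts to appending a comma to each emitted tuple. A std-only sketch of that one line, using a hypothetical helper `entry_line` instead of the `codegen` calls; it also swaps the hand-written `"\"{}\""` quoting for `{:?}` (Debug formatting), which escapes any quotes or backslashes in a word. That swap is an extra suggestion, not something the talk does.

```rust
/// Hypothetical helper: render one map entry as a Rust tuple literal for the
/// generated `HashMap::from([...])` call, including the trailing comma.
fn entry_line(key: &str, words: &[String]) -> String {
    let values = words
        .iter()
        .map(|w| format!("{w:?}")) // Debug formatting adds quotes and escapes
        .collect::<Vec<String>>()
        .join(", ");
    format!("({key:?}, vec![{values}]),")
}

fn main() {
    let words = vec![
        "DESIGNERS".to_string(),
        "INGRESSED".to_string(),
        "REDESIGNS".to_string(),
    ];
    println!("{}", entry_line("DEEGINRSS", &words));
}
```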

Data processing pipeline - second attempt

kaikki.org (JSON) → bash + jq → word list → JSON file → injected into the backend

Compile-time injection using include_str!

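use std::collections::HashMap;

// The whole JSON file is embedded in the binary at compile time
static JSON: &str = include_str!("anagrams.json");

pub fn build_map() -> HashMap<&'static str, Vec<&'static str>> {
    // Keys and values borrow directly from the embedded 'static string.
    // Borrowed &str deserialization fails if a string contains escape sequences.
    serde_json::from_str(JSON).unwrap()
}

- Have to parse the JSON
- But no need to download or read a file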

$ ls -lh src/database/data/anagrams.json
-rw-r--r-- 1 chris staff 29M Jan 6 15:00 src/database/data/anagrams.json

$ ls -lh target/release/solve
-rwxr-xr-x 1 chris staff 33M Jan 6 15:02 target/release/solve

Only parsing the JSON once

Java

public class Database {
    private static final Map<String, List<String>> db = loadDBFromJSON();
}

Scala

object Database:
  val db: Map[String, List[String]] = loadDBFromJSON()

Rust

?????

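use std::collections::HashMap;
use once_cell::sync::Lazy;

// build_map() runs once, on first access to DB; every later access reuses the same map
static DB: Lazy<HashMap<&'static str, Vec<&'static str>>> = Lazy::new(build_map);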
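Since Rust 1.80, the standard library's `std::sync::LazyLock` covers the same use case as `once_cell::sync::Lazy`, so the extra dependency is optional on a recent toolchain. A self-contained sketch, where a tiny hard-coded `build_map` stands in for the JSON-parsing version and `lookup` is a hypothetical helper:

```rust
use std::collections::HashMap;
use std::sync::LazyLock;

// Stand-in for the JSON-parsing build_map(): a tiny hard-coded map.
fn build_map() -> HashMap<&'static str, Vec<&'static str>> {
    HashMap::from([("DEEGINRSS", vec!["DESIGNERS", "INGRESSED", "REDESIGNS"])])
}

// Initialized on first access (thread-safe), then shared for the life of the process.
static DB: LazyLock<HashMap<&'static str, Vec<&'static str>>> = LazyLock::new(build_map);

// Returns the words for an anagram key, or an empty slice if the key is unknown.
fn lookup(key: &str) -> &'static [&'static str] {
    DB.get(key).map(Vec::as_slice).unwrap_or_default()
}

fn main() {
    // The first call triggers build_map(); the second reuses the same map.
    println!("{:?}", lookup("DEEGINRSS"));
    println!("{:?}", lookup("ZZZ"));
}
```

In a long-lived backend process this means the parsing cost is paid on the first request only.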

Architecture - recap

kaikki.org (JSON) → bash + jq → word list → JSON file → injected into the backend

Frontend: Yew

- Heavily inspired by React

- Components, state, reducers, hooks, ...

- html! macro, equivalent to JSX

- Compiles to WebAssembly (wasm)

DEMO TIME

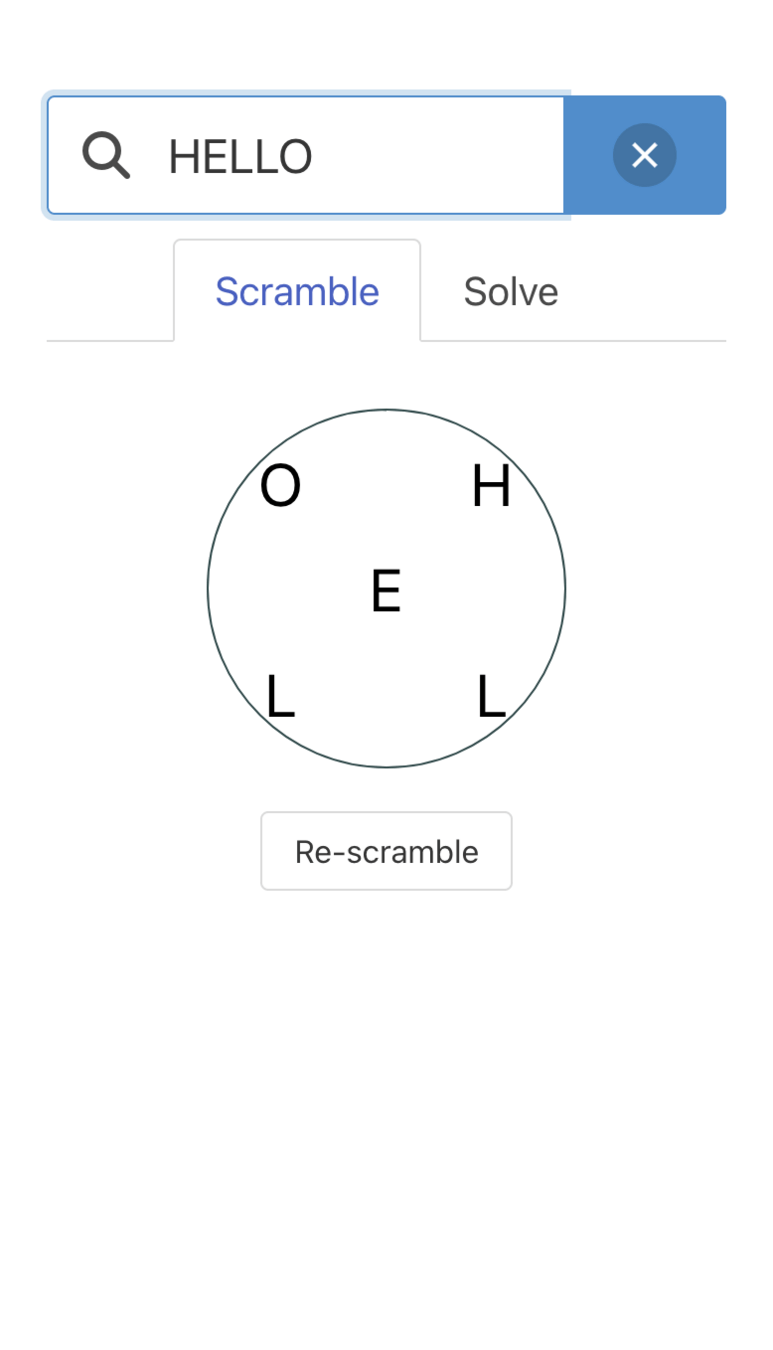

Components: App, Content, Scrambler, Solver

let transform = format!("rotate({} 0 0) translate(0 -75) rotate(-{} 0 0)", rotation, rotation);

rotate → translate → rotate
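The three transforms place a letter on a circle of radius 75 around the origin while keeping it upright. SVG applies a transform list right to left, so `rotate(-θ)` turns the letter first, `translate(0 -75)` moves it up 75 units, and `rotate(θ)` swings it around the origin, cancelling the first rotation's effect on orientation. A sketch of the resulting position, assuming `rotation` is in degrees (SVG's unit for `rotate`) and that letters are spaced evenly by index; `letter_position` and `transform_for` are hypothetical helpers.

```rust
/// Final (x, y) of a letter drawn at the origin after
/// `rotate(θ) translate(0 -radius) rotate(-θ)`, in SVG coordinates (y points down).
/// The rotations cancel for orientation; the net translation is R(θ)·(0, -radius).
fn letter_position(rotation_deg: f64, radius: f64) -> (f64, f64) {
    let theta = rotation_deg.to_radians();
    (radius * theta.sin(), -radius * theta.cos())
}

/// Hypothetical: evenly space `count` letters around the circle.
fn transform_for(index: usize, count: usize) -> String {
    let rotation = 360.0 * index as f64 / count as f64;
    format!("rotate({} 0 0) translate(0 -75) rotate(-{} 0 0)", rotation, rotation)
}

fn main() {
    let count = 4;
    for i in 0..count {
        let rotation = 360.0 * i as f64 / count as f64;
        let (x, y) = letter_position(rotation, 75.0);
        println!("{}  => ({:.1}, {:.1})", transform_for(i, count), x, y);
    }
}
```

At θ = 0 the letter sits at the top of the circle, (0, -75), and increasing θ moves it clockwise on screen.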

Deployment

- Data pipeline (kaikki.org → bash + jq → word list → JSON file): run on laptop, commit result to git
- Backend (with the JSON file injected) and frontend: Netlify

Netlify

Deploying the frontend

- Write a build script (3 lines of bash)

- Tell Netlify where to find the build script, and where the output files will be

- git push to GitHub

- Netlify builds and publishes the site on every push

Deploying the backend

- git push to GitHub

- Netlify notices ./netlify/functions/foo is a Rust project, builds it using Cargo

- Netlify deploys it as an AWS Lambda + API Gateway

- Backend is exposed on https://my-app.netlify.app/foo

Summary

- Build something useful (for me) ✅

- Low (user-perceived) latency ✅

- Become more familiar with Rust ✅

- Learn about WebAssembly 🟡

- Learn about Yew.rs ✅

- Learn about Netlify ✅

- Have fun! ✅

Building an Anagram Solver

By Chris Birchall
