DB Schema Design

For huge data consumption

Condition

Huge Reads

Huge Writes

In basic

Client App

Table Index

Data Rows

PK, Foreign Key

20 MegaBytes

500 MegaBytes

Decision Tree

Table Index

Data Rows

Hardware Limit

Storage: SSD

Up to 500MB/s

Full Table Search

Q: 5TB data generated per date, (1.44 trillion rows)

10s to scan

Optimized Index

Index to 5 million rows

1.4 M rows per disunion

36 Bytes per row

0.3s in 150MB

0.1s in 50MB

Reduce Index Size

Timestamp

Integer

Double

Varchar

Text

Integer

Data Reduce

Timestamp

Integer

Double

Varchar

Text

Integer

Enum

Rebased Timestamp

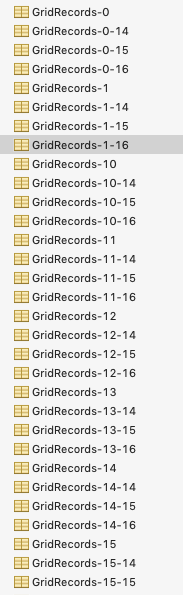

Data Partition

Map App

Zoom

Grid

Time

Client Aggregate

DB Warehouse

Dynamic Aggregate

15 mins

2 hours

8 hours

1 day

30 mins

45 mins

1 hour

1 hour 15 mins

1 hour 30 mins

1 hour 45 mins

2 hours 15 mins

…

Huge Write

Batch insert

File Insert

Predefined PK

Prealloc Rows

Split Partition

Batch Insert

INSERT INTO `Records` (`id`, `amount`, `time`) VALUES (1, 24, 1), (2, 25, 2)...

Reduce handshake

Optimize SQL parse

File Insert

LOAD DATA IN 'path/to/csv/file' INTO TABLE `Records`

Split Data Aggregate

Optimize SQL parse

Reduce handshake

Predefined PK

Auto increment will LOCK table to find unique id

Snowflake

But UUID is not effective type on query (Not integer)

Prealloc Rows

Alloc rows disk space on huge insert is not effective

Alloc with predefined PK

Update on data prepared

Split Partition

Decision Tree

Prepare for query

Storage Cluster

Thanks

DB Schema Design

By Chia Yu Pai

DB Schema Design

- 511