Semi-Supervised Recursive Autoencoders for Predicting Sentiment Distributions

Socher et al., 2011

Context

- Different from Socher 2011a

- Growing field

- Based on previous model

Summary

- Domain: NLP

- Introduces a novel framework

- Achieves state-of-the-art results

Background

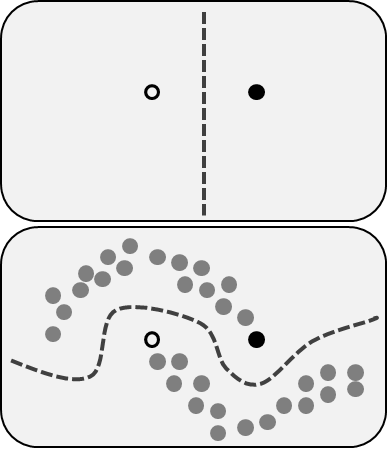

Semi-Supervised Learning

Semi-supervised learning uses both labeled data (S) and unlabeled data (U) as inputs. Its goal can either be to learn the labels of U or to learn the correct mapping from X to Y.

- For classification problems:

  - learn categories from S

  - learn features/boundaries from U

- Can improve accuracy over learning from labeled data alone

- Well suited to NLP, where unlabeled text is plentiful and labeling is expensive

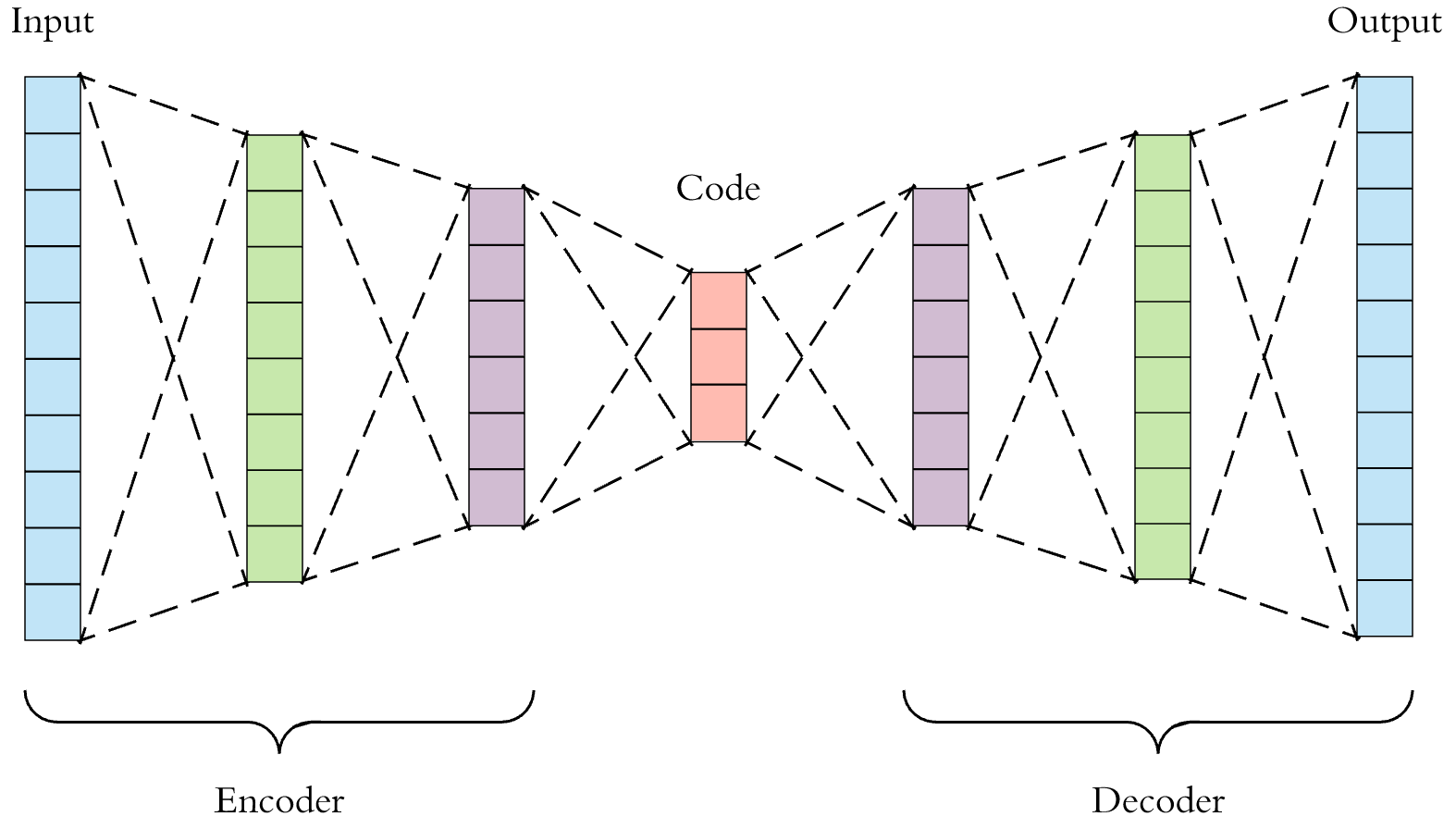

Autoencoders

Autoencoders are neural networks trained without labels. They map an input X back to itself, learning an approximation of the identity function through a hidden layer that forces a compact representation of the input.
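
To make the idea concrete, here is a minimal sketch of the forward pass of a single-hidden-layer autoencoder in plain Python. The layer sizes, the tanh encoder, the linear decoder and all function names are illustrative assumptions, and the weights are random rather than trained.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def random_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; the scale is an arbitrary choice."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def encode(x, W_enc, b_enc):
    """Map the input to a (smaller) hidden representation."""
    return [math.tanh(z + b) for z, b in zip(matvec(W_enc, x), b_enc)]


def decode(h, W_dec, b_dec):
    """Map the hidden representation back to input space."""
    return [z + b for z, b in zip(matvec(W_dec, h), b_dec)]


def reconstruction_error(x, x_hat):
    """Squared Euclidean distance between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


rng = random.Random(0)
n_in, n_hidden = 4, 2  # hidden layer is smaller than the input
W_enc = random_matrix(n_hidden, n_in, rng)
W_dec = random_matrix(n_in, n_hidden, rng)
b_enc = [0.0] * n_hidden
b_dec = [0.0] * n_in

x = [0.5, -0.2, 0.1, 0.9]
h = encode(x, W_enc, b_enc)
x_hat = decode(h, W_dec, b_dec)
err = reconstruction_error(x, x_hat)
```

Training would adjust the weights to push `err` toward zero over a whole dataset; that step is omitted here.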

Recursive Autoencoders

A recursive autoencoder (RAE) takes a sequence of input vectors and recursively merges pairs until a single vector remains, which encodes information from all of the inputs.

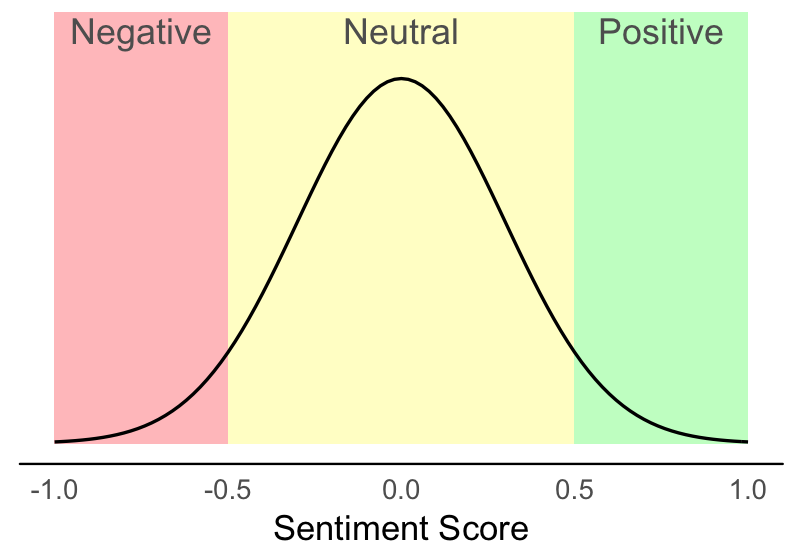

Sentiment Distributions

Sentiment analysis is the process of identifying and classifying the opinion or emotion expressed in a piece of text. A sentiment distribution is the distribution of sentiment labels over a body of texts.
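
As a small illustration of the definition above, the sketch below turns a list of sentiment labels into a distribution by counting and normalizing. The label names and the example data are made up for illustration.

```python
from collections import Counter


def sentiment_distribution(labels):
    """Return the relative frequency of each sentiment label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one label")
    return {label: n / total for label, n in counts.items()}


# Hypothetical labels assigned to four texts.
labels = ["positive", "negative", "positive", "neutral"]
dist = sentiment_distribution(labels)
```

The values of `dist` are non-negative and sum to one, which is what makes it a distribution rather than a single predicted label.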

- One-dimensional

- Multi-dimensional

Building the Model

Problem

Learn how to identify the sentiment of a piece of text.

Hypothesis

A semi-supervised RAE can learn this mapping without the help of traditional resources such as sentiment lexica or polarity-shifting rules.

Continuous Word Vectors

A continuous word vector is a mapping of a word to a vector in a feature space where each dimension captures some syntactic or semantic meaning.

- random initialization

  - sample from N(0, sigma^2)

- learned initialization

  - pre-train with an unsupervised neural language model
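
The random-initialization option can be sketched as follows. The vocabulary, the vector dimension and the value of sigma are illustrative assumptions, not values taken from these slides.

```python
import random


def init_word_vectors(vocab, dim, sigma, seed=0):
    """Draw every component of every word vector from N(0, sigma^2)."""
    rng = random.Random(seed)
    return {
        word: [rng.gauss(0.0, sigma) for _ in range(dim)]
        for word in vocab
    }


# Hypothetical vocabulary and hyperparameters.
vocab = ["the", "movie", "was", "great"]
vectors = init_word_vectors(vocab, dim=5, sigma=0.05)
```

With the learned-initialization option, the dictionary would instead be filled with vectors taken from a pre-trained language model; in both cases the vectors can be updated further during training.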

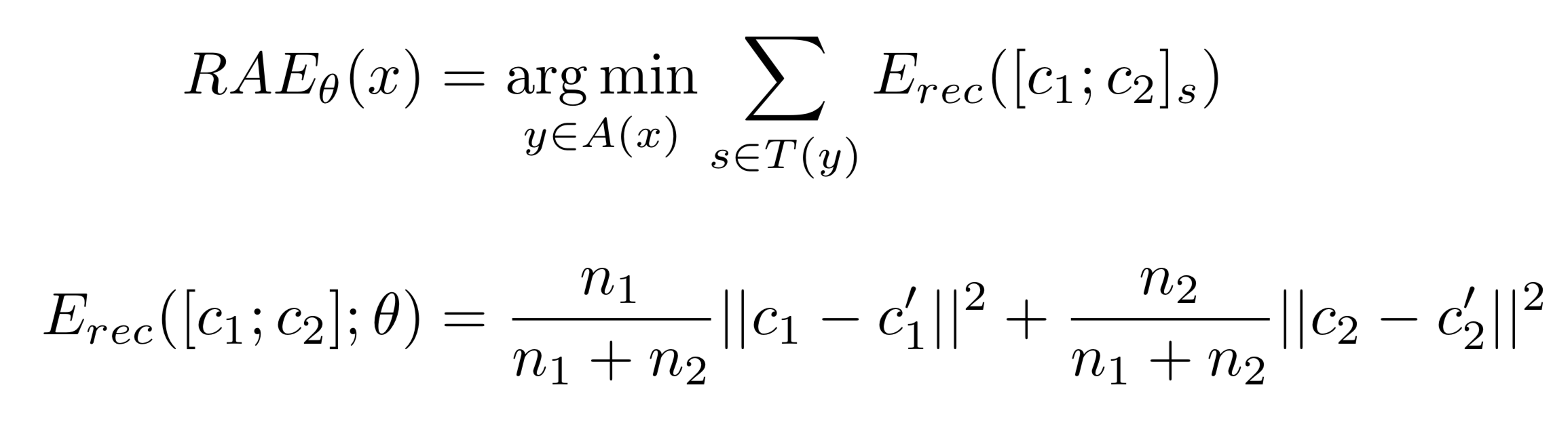

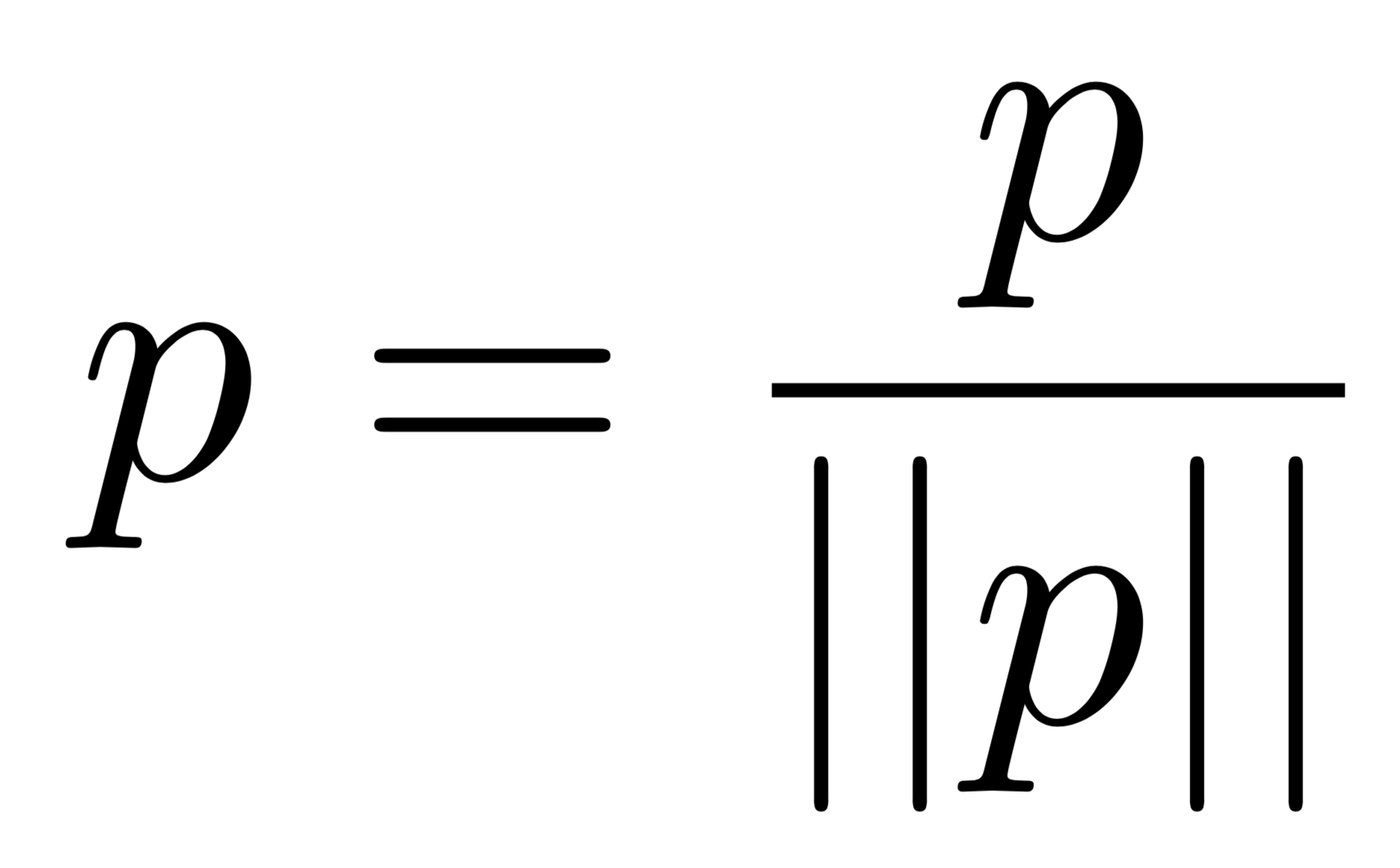

Structure Prediction

Structure prediction uses an RAE to find the tree structure for a sentence, and with it the sentence's vector representation, that minimizes the total reconstruction error over all nodes of the recursion tree.

Greedy Algorithm

- For each neighboring pair of vectors (c1, c2):

  - Give them as input to the autoencoder

  - Record the parent node p and reconstruction error

- Choose the pair with the lowest error and replace them with p

- Repeat the process with the new set of vectors until only a single vector remains
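
The greedy steps above can be sketched in plain Python. This is a simplified illustration, not the authors' implementation: it assumes a tanh encoder, a linear decoder and an unweighted squared reconstruction error, and the names `merge` and `greedy_tree` are hypothetical.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def merge(c1, c2, params):
    """Encode two children into a parent; return (parent, error)."""
    W_enc, b_enc, W_dec, b_dec = params
    children = c1 + c2  # concatenate the two child vectors
    parent = [math.tanh(z + b)
              for z, b in zip(matvec(W_enc, children), b_enc)]
    recon = [z + b for z, b in zip(matvec(W_dec, parent), b_dec)]
    error = sum((c - r) ** 2 for c, r in zip(children, recon))
    return parent, error


def greedy_tree(vectors, params):
    """Merge the lowest-error neighboring pair until one vector is left."""
    if not vectors:
        raise ValueError("need at least one vector")
    vecs = [list(v) for v in vectors]
    trees = list(range(len(vecs)))  # a leaf is its word index
    total_error = 0.0
    while len(vecs) > 1:
        # Try every neighboring pair and record parent and error.
        candidates = [merge(vecs[i], vecs[i + 1], params)
                      for i in range(len(vecs) - 1)]
        best = min(range(len(candidates)),
                   key=lambda i: candidates[i][1])
        parent, error = candidates[best]
        # Replace the chosen pair with its parent.
        vecs[best:best + 2] = [parent]
        trees[best:best + 2] = [(trees[best], trees[best + 1])]
        total_error += error
    return vecs[0], trees[0], total_error


rng = random.Random(0)
dim = 3  # illustrative vector size


def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


params = (rand_matrix(dim, 2 * dim), [0.0] * dim,
          rand_matrix(2 * dim, dim), [0.0] * (2 * dim))
words = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(4)]
root, tree, total_error = greedy_tree(words, params)
```

`tree` is a nested tuple of word indices recording which pairs were merged, and `root` is the vector for the whole sentence. Because each step commits to the locally best pair, the resulting tree is not guaranteed to minimize the total error globally.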

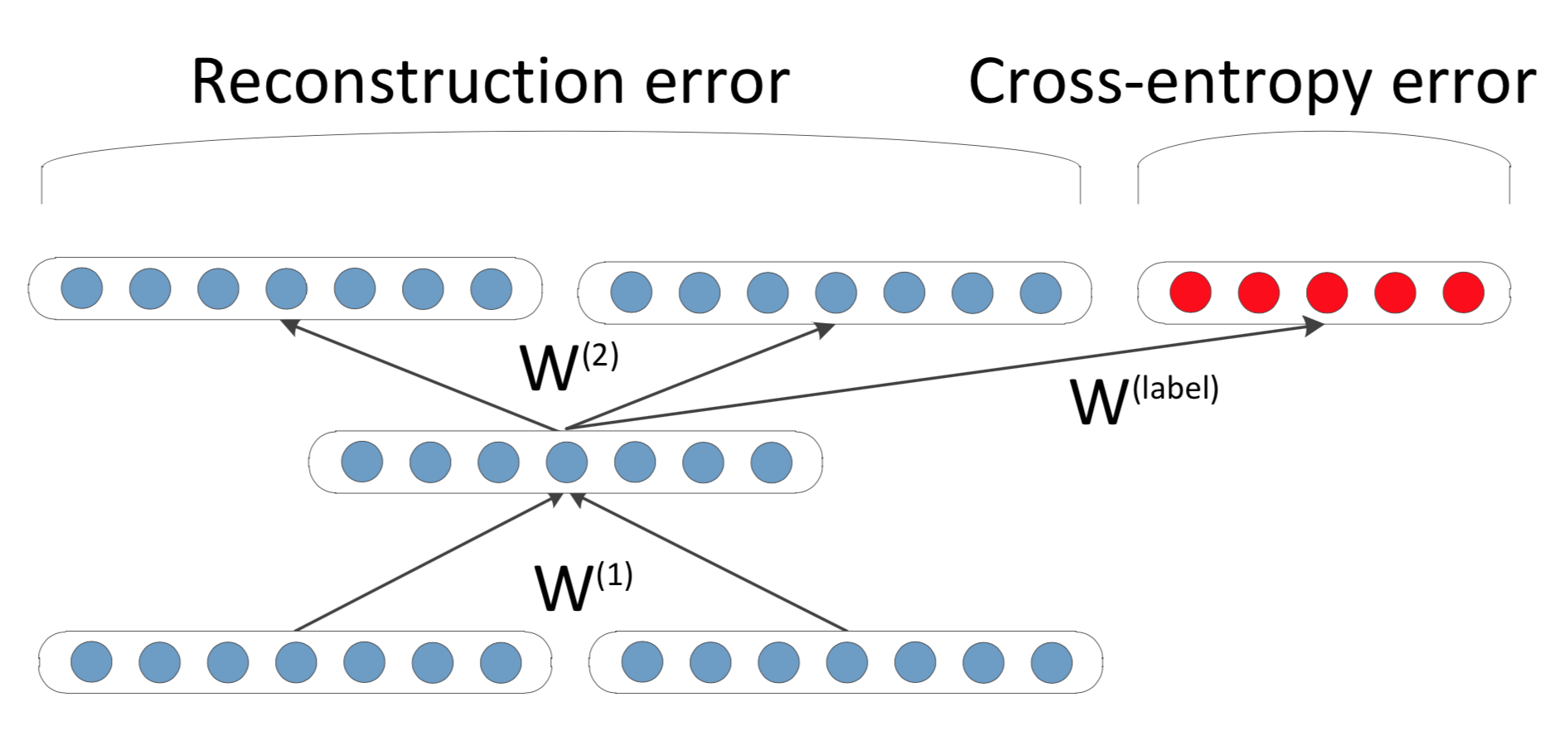

Semi-Supervised RAEs

A semi-supervised RAE applies an additional softmax layer at each level of the recursion tree to predict the sentiment distribution of the parent feature vector, p.
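
A minimal sketch of the softmax layer applied to one parent vector p is shown below. The names `W_label` and `b_label`, the two-label setup and the cross-entropy helper are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_distribution(p, W_label, b_label):
    """Predict a sentiment distribution from a parent vector p."""
    scores = [sum(w * x for w, x in zip(row, p)) + b
              for row, b in zip(W_label, b_label)]
    return softmax(scores)


def cross_entropy(target, predicted):
    """Cross-entropy between a target and a predicted distribution."""
    return -sum(t * math.log(q) for t, q in zip(target, predicted) if t > 0)


p = [0.2, -0.4, 0.7]               # hypothetical parent vector
W_label = [[0.5, -0.1, 0.3],       # one row per sentiment label
           [-0.2, 0.4, 0.1]]
b_label = [0.0, 0.0]
predicted = predict_distribution(p, W_label, b_label)
loss = cross_entropy([1.0, 0.0], predicted)
```

In the semi-supervised setting this supervised loss would be combined with the reconstruction error at the same node, so the parent vector is shaped by both signals.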

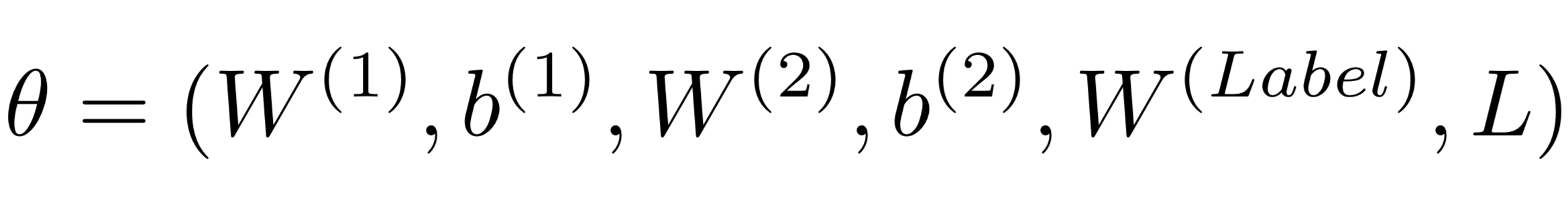

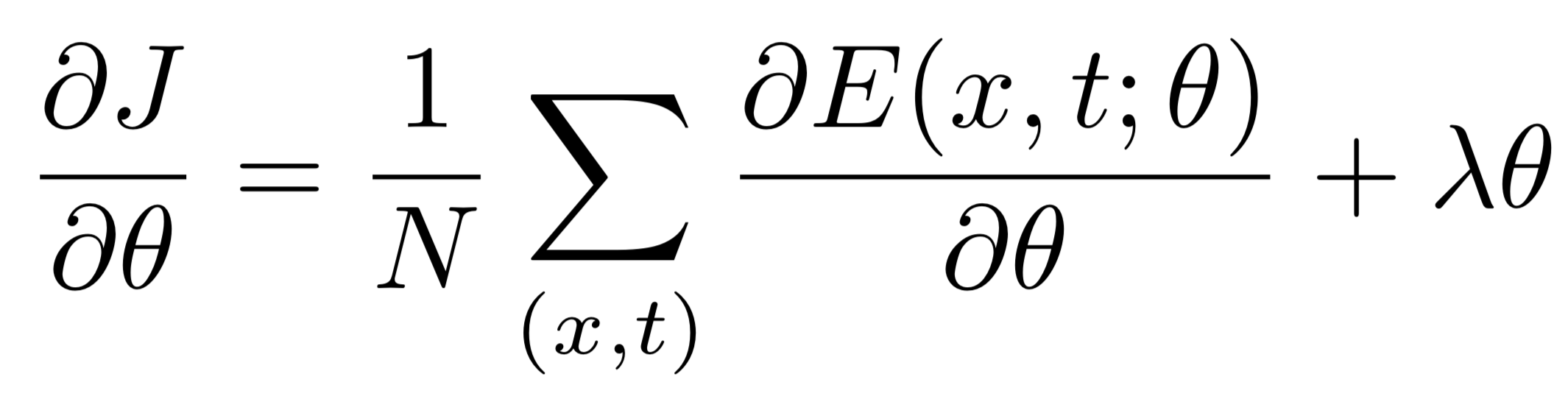

Learning (Step 1)

Given the current set of parameters, greedily construct the optimal tree for each sentence.

Learning (Step 2)

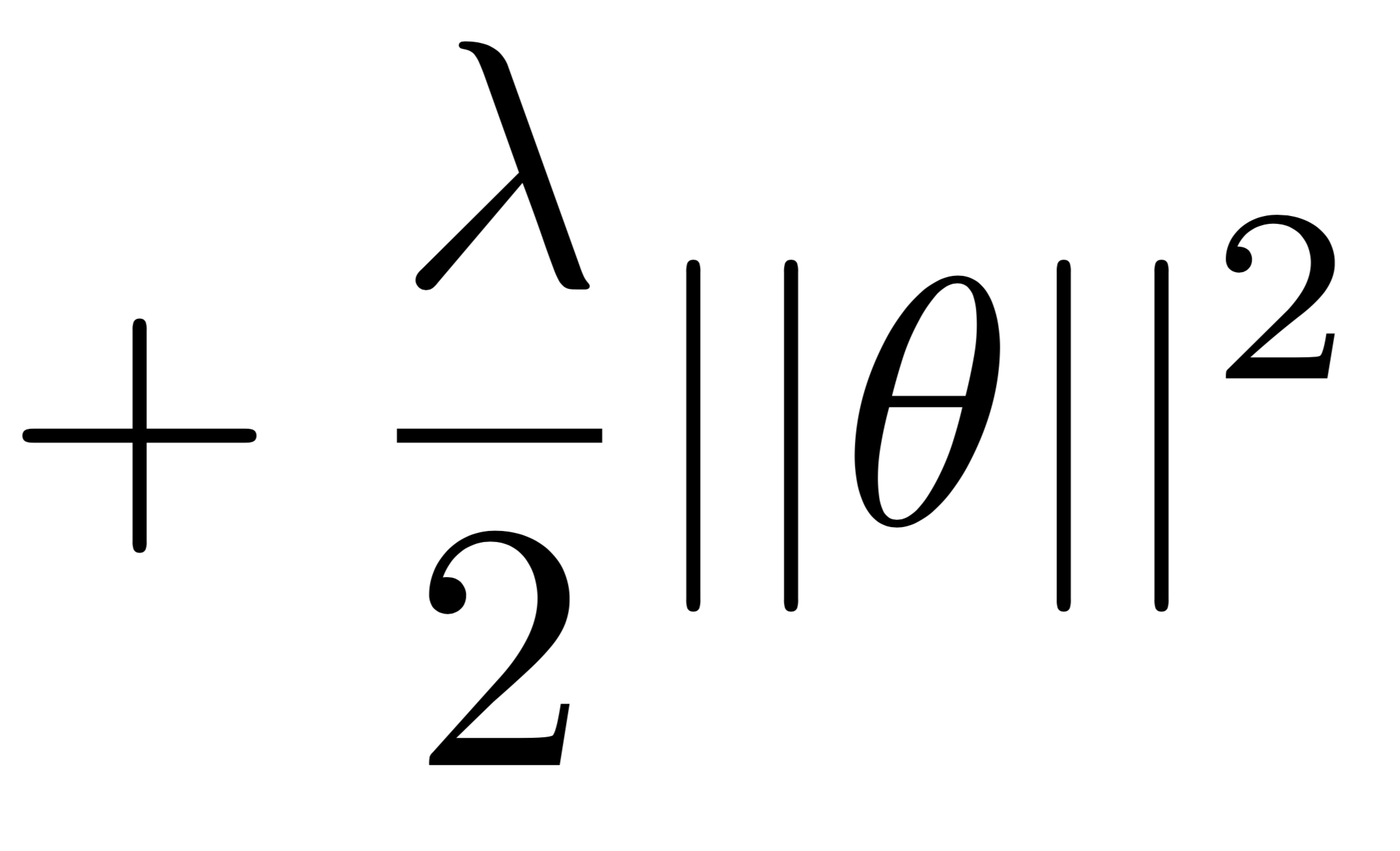

Compute the gradient of the objective function and update the parameter values using L-BFGS.
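
One way to write the kind of objective being minimized is sketched below. The symbols are assumptions rather than notation from these slides: N labeled sentences (x, t), a per-node reconstruction error E_rec, a per-node cross-entropy error E_cE, a weighting hyperparameter alpha, and a weight-decay strength lambda.

$$
J(\theta) = \frac{1}{N} \sum_{(x,t)} E(x, t; \theta) + \frac{\lambda}{2} \lVert \theta \rVert^2,
\qquad
E(x, t; \theta) = \sum_{s \,\in\, \text{nodes}(x)} \Big[ \alpha \, E_{\text{rec}}(s; \theta) + (1 - \alpha) \, E_{\text{cE}}(s, t; \theta) \Big]
$$

Setting alpha closer to 1 emphasizes reconstruction (the unsupervised signal), while values closer to 0 emphasize label prediction (the supervised signal).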

Regularization

Weight decay

Length Normalization
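
A minimal sketch of length normalization, assuming it means rescaling each parent vector to unit Euclidean length so the network cannot lower reconstruction error simply by shrinking its hidden vectors. The function name and the `eps` guard are illustrative.

```python
import math


def normalize_length(p, eps=1e-12):
    """Rescale p to unit Euclidean norm; leave near-zero vectors as-is."""
    norm = math.sqrt(sum(x * x for x in p))
    if norm < eps:
        return list(p)
    return [x / norm for x in p]


unit = normalize_length([3.0, 4.0])
```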

Components of Learning

- Input: sentence

- Output: sentiment distribution

- Hypothesis Set: semi-supervised RAEs

- Learning Algorithm: L-BFGS, with gradients computed by backpropagation through structure (BPTS)

- f: ideal mapping of sentences to sentiment labels

Experiments

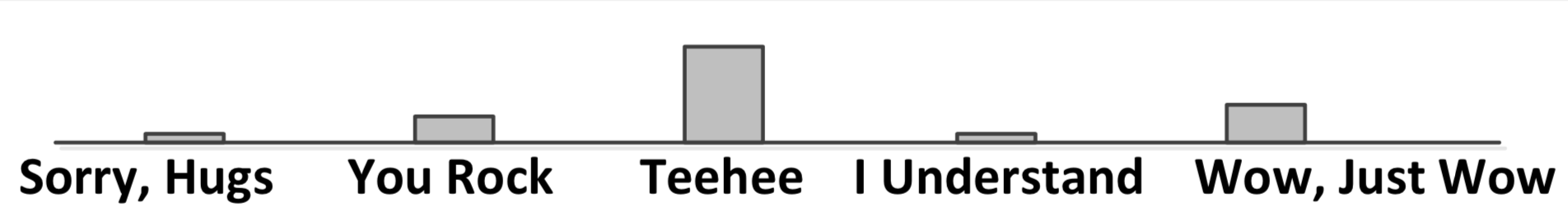

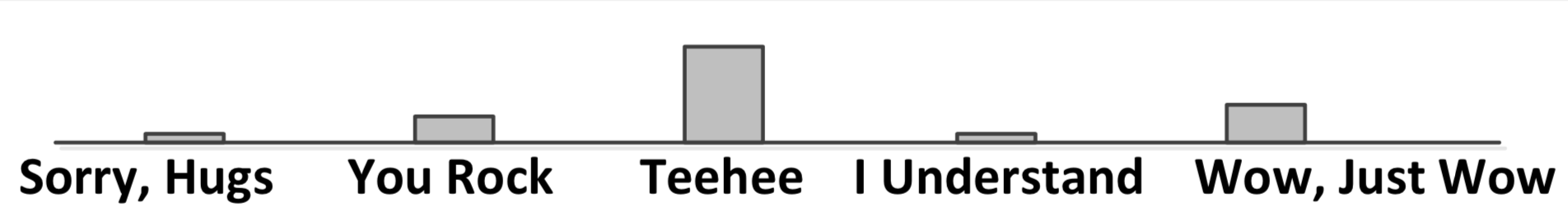

Remember this?

Example of multinomial sentiment distribution.

Experience Project

A website for sharing personal stories anonymously; other users respond by "voting" for one of five categories:

- Sorry, Hugs (condolences)

- You Rock (approval/congratulations)

- Teehee (amusement)

- I Understand (empathy)

- Wow, Just Wow (surprise)

EP: Dataset

- 6,129 observations

- label distribution: [.22, .20, .11, .37, .10]

- 129 words per entry on average

- 49% training, 21% validation, 30% testing
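
As a quick sanity check on the figures above, the sketch below confirms that the label distribution sums to one and derives approximate split sizes. The counts are rounded estimates computed from the stated percentages, not numbers reported in the source.

```python
n_total = 6129
label_distribution = [0.22, 0.20, 0.11, 0.37, 0.10]
split = {"training": 0.49, "validation": 0.21, "testing": 0.30}

# The label proportions should form a valid distribution.
label_sum = sum(label_distribution)

# Approximate number of entries in each split (rounded).
approx_counts = {name: round(n_total * frac) for name, frac in split.items()}
```

Note that 49% and 21% are what a 70/30 split of the non-test 70% would give, since 0.7 × 0.7 = 0.49.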

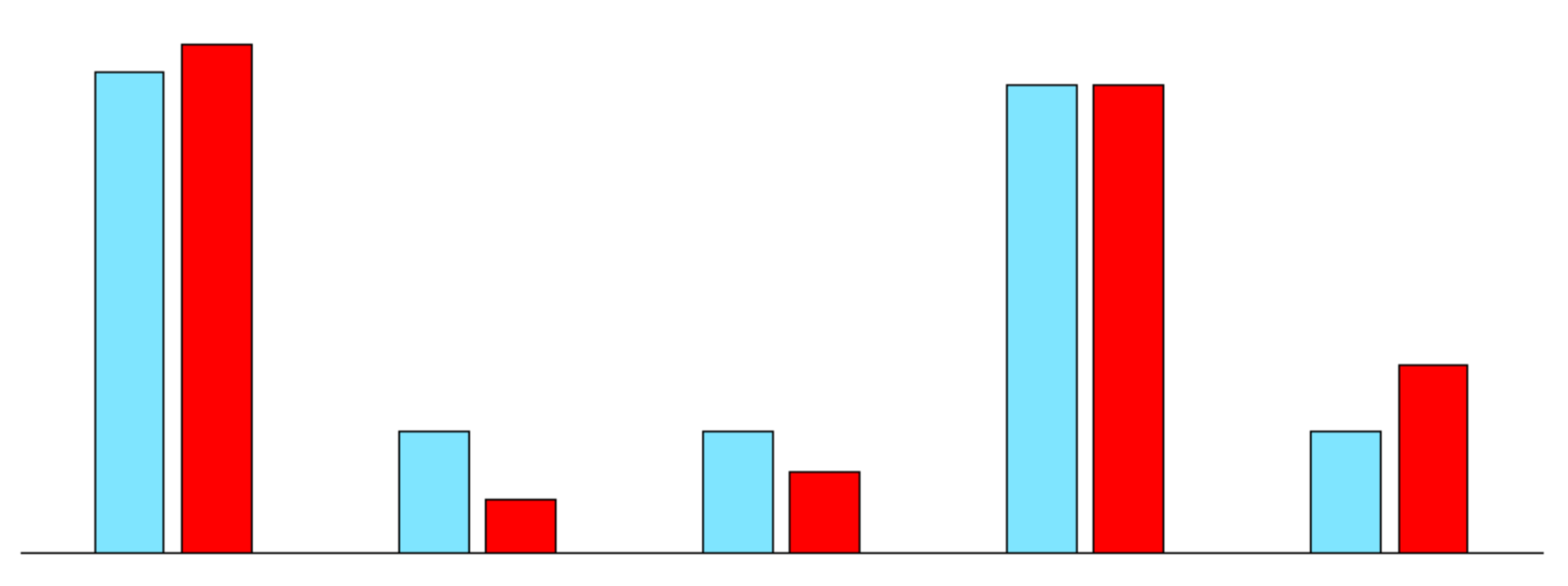

EP: Predicting the Label

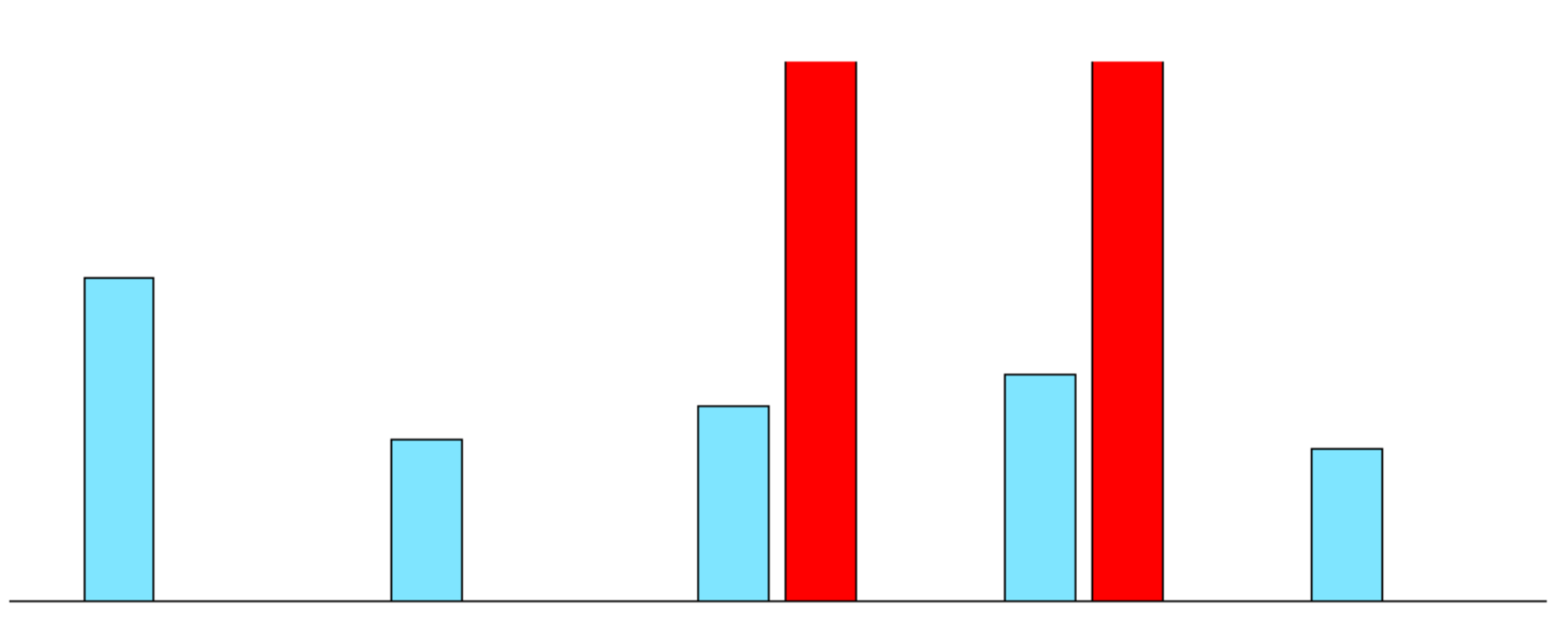

EP: Predicting the Distribution

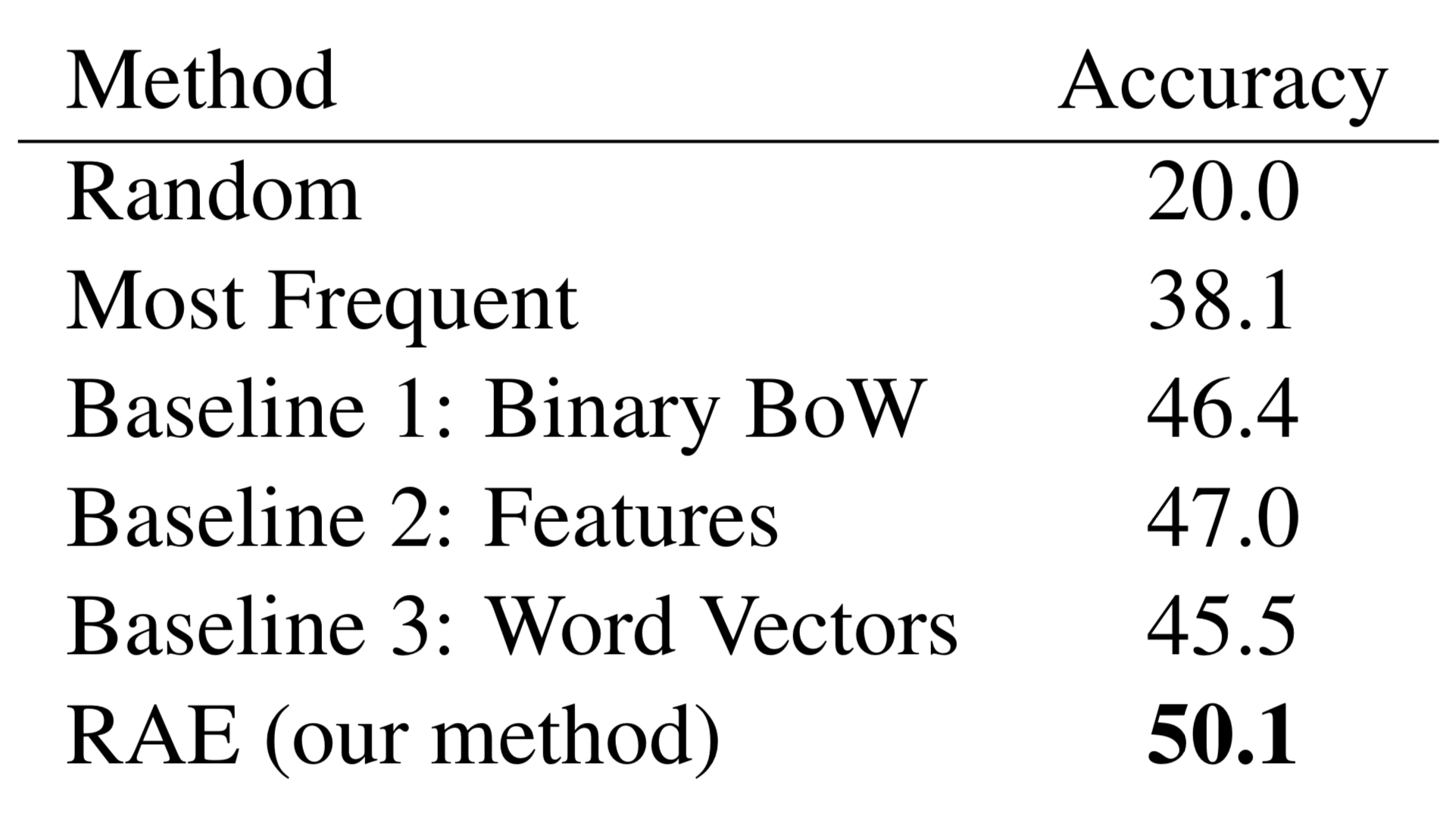

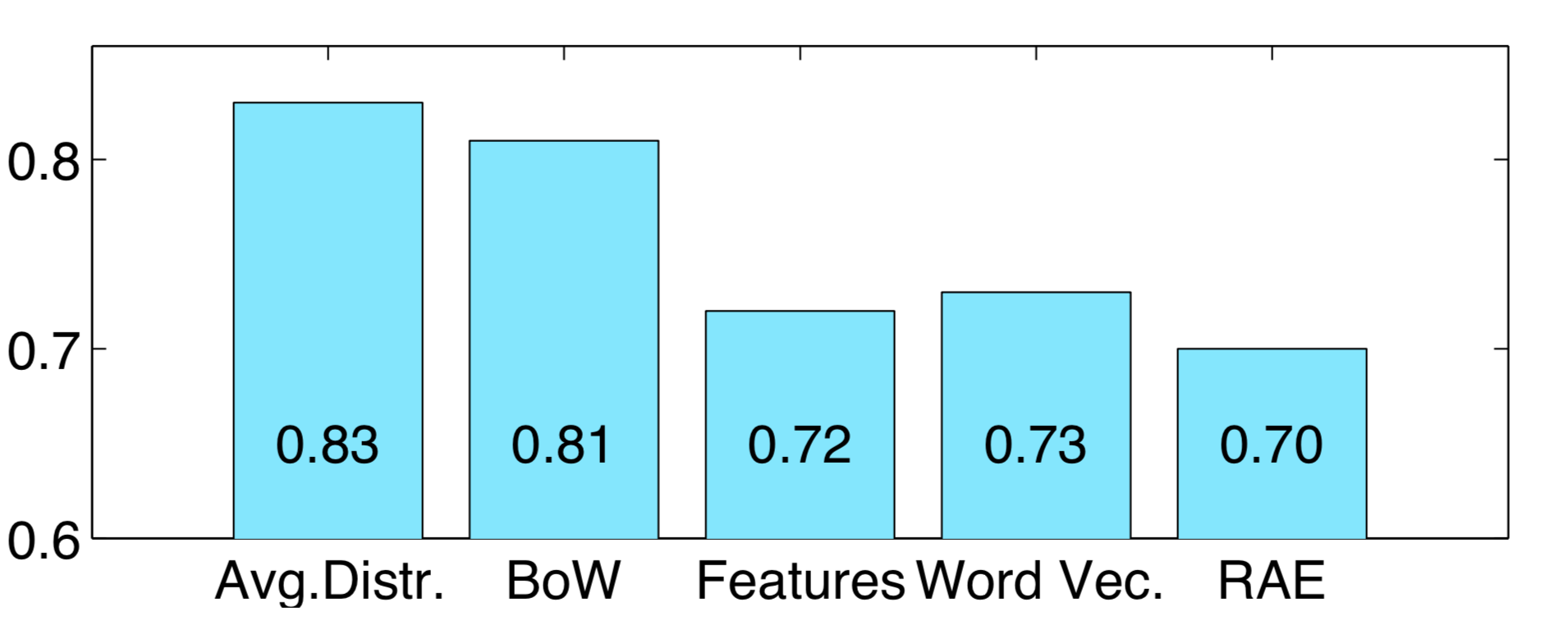

Comparing Approaches

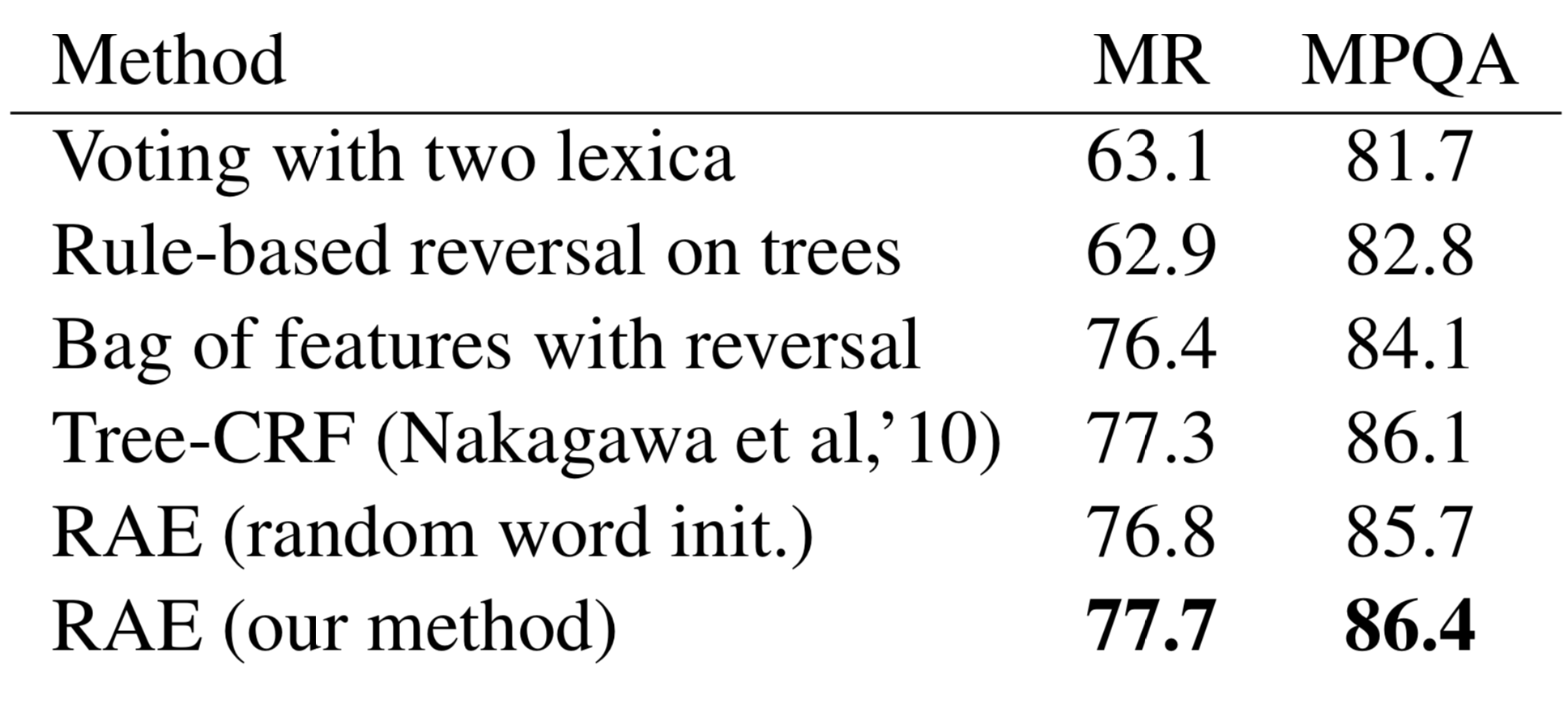

Most traditional sentiment analysis methods perform only binary polarity classification. To compare semi-supervised RAEs with these methods, the model was also evaluated on two common datasets:

- movie reviews (MR)

- opinions (MPQA)

Conclusion

By chriselsner
