Applied Measure Theory for Probabilistic Modeling

Chad Scherrer

July 2023

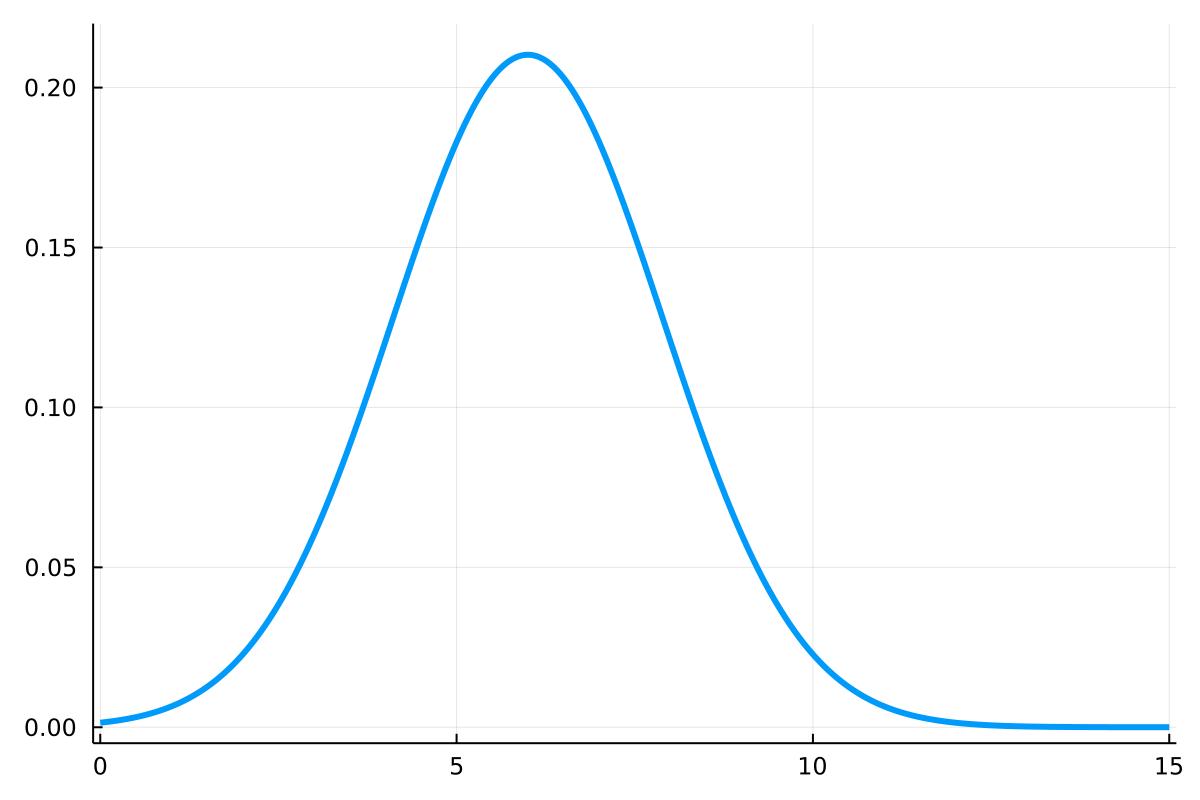

Motivation: Discrete vs Continuous Distributions

Discrete Distributions

- Expressed as a probability mass function

- Evaluation gives a probability

- Values sum to one

Continuous Distributions

- Expressed as a probability density function

- Evaluation gives a probability density

- Values integrate to one
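The contrast above can be checked numerically. The sketch below is plain Julia with no packages, and its function names are illustrative. It evaluates a Binomial(15, 0.4) pmf and a normal pdf whose mean and standard deviation match that binomial, which are the same parameters as `continuous_part` in the mixture example. It confirms that masses sum to one and densities integrate to one, where the integral is approximated by a Riemann sum. It also shows that a density value can exceed 1, so a density is not a probability.

```julia
# Discrete: Binomial(15, 0.4) probability mass function; each value is a probability
binom_pmf(k; n = 15, p = 0.4) = binomial(n, k) * p^k * (1 - p)^(n - k)

# Continuous: Normal(μ, σ) probability density function; each value is a density
normal_pdf(x; μ = 6.0, σ = sqrt(3.6)) = exp(-((x - μ) / σ)^2 / 2) / (σ * sqrt(2π))

# Masses sum to one
total_mass = sum(binom_pmf, 0:15)

# Densities integrate to one (Riemann sum over a range covering essentially all the mass)
h = 1e-3
total_density = h * sum(normal_pdf, -20.0:h:32.0)

# A density is not a probability: a narrow normal has a peak density above 1
peak = normal_pdf(0.0; μ = 0.0, σ = 0.1)
```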

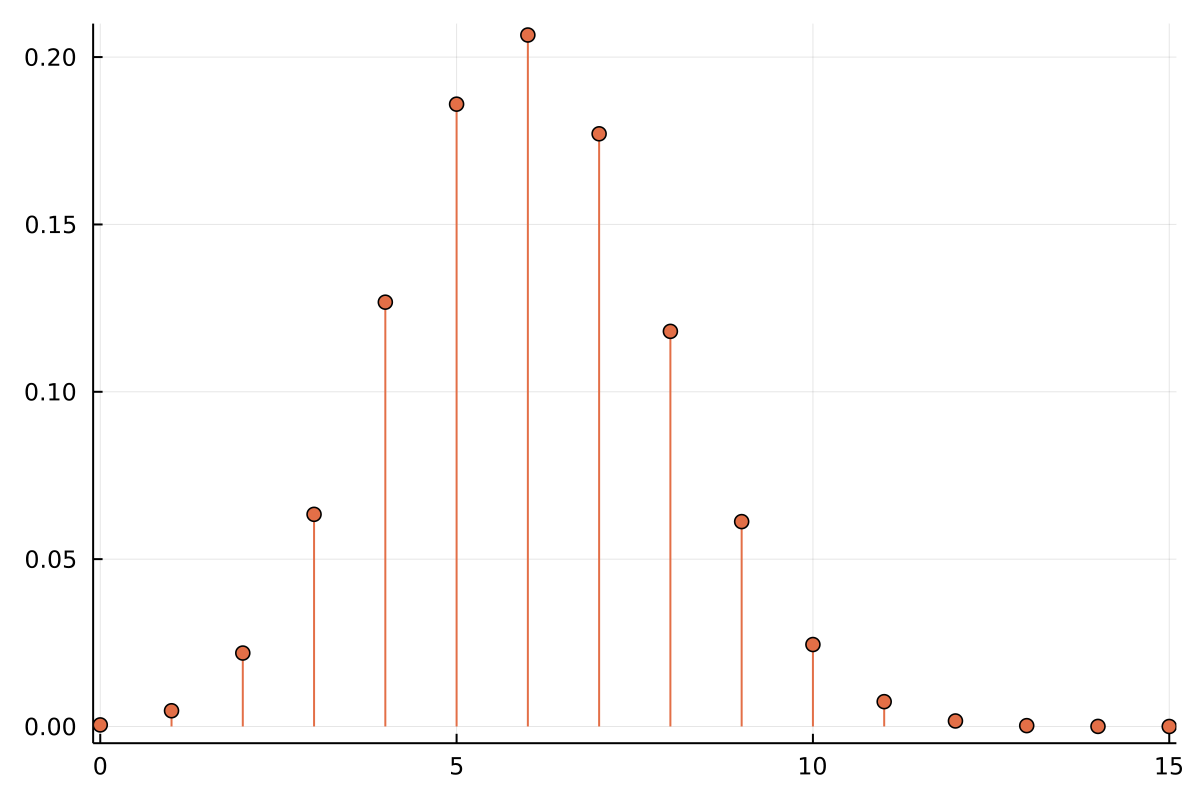

Motivation: Discrete vs Continuous Distributions

Discrete/Continuous Mixtures

- What is ?

- How is it interpreted?

- What is "integration"?

- How do we compute this?

In MeasureTheory.jl:

using MeasureTheory

discrete_part = Dirac(1)

continuous_part = Normal(15 * 0.4, sqrt(15 * 0.4 * 0.6))  # mean and std of Binomial(15, 0.4)

m = 0.15 * discrete_part + 0.85 * continuous_part
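One way to answer the questions above is to pick a base measure that has both parts. Take λ + δ₁, which is Lebesgue measure plus a point mass at 1. The mixture then has an ordinary density with respect to that base measure, and "integration" means a Lebesgue integral plus evaluation at the atom.

The sketch below illustrates this in plain Julia. It is an assumption-labeled illustration, not MeasureTheory.jl's implementation, and `mixture_density` and `integrate_base` are hypothetical names.

```julia
# Hypothetical sketch: m = 0.15 * Dirac(1) + 0.85 * Normal(6, √3.6) has a density
# with respect to the base measure λ + δ₁ (Lebesgue plus a point mass at 1).
const μc = 15 * 0.4
const σc = sqrt(15 * 0.4 * 0.6)

normal_pdf(x) = exp(-((x - μc) / σc)^2 / 2) / (σc * sqrt(2π))

# dm/d(λ + δ₁): at the atom only the Dirac part has mass (weight 0.15);
# everywhere else only the continuous part contributes.
mixture_density(x) = x == 1 ? 0.15 : 0.85 * normal_pdf(x)

# Integrating against λ + δ₁ = a Lebesgue integral plus evaluation at the atom.
# The point x = 1 is Lebesgue-null, so it is skipped in the Riemann sum.
function integrate_base(f; lo = -30.0, hi = 40.0, h = 1e-3)
    lebesgue_part = h * sum(x -> x == 1 ? 0.0 : f(x), lo:h:hi)
    return lebesgue_part + f(1)
end

total = integrate_base(mixture_density)                            # total mass, ≈ 1
prob_le_6 = integrate_base(x -> x <= 6 ? mixture_density(x) : 0.0)  # ≈ 0.15 + 0.85 * 0.5
```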

- Importance Sampling

- Rejection Sampling

- Metropolis-Hastings Sampling

- KL Divergence

What's really going on?

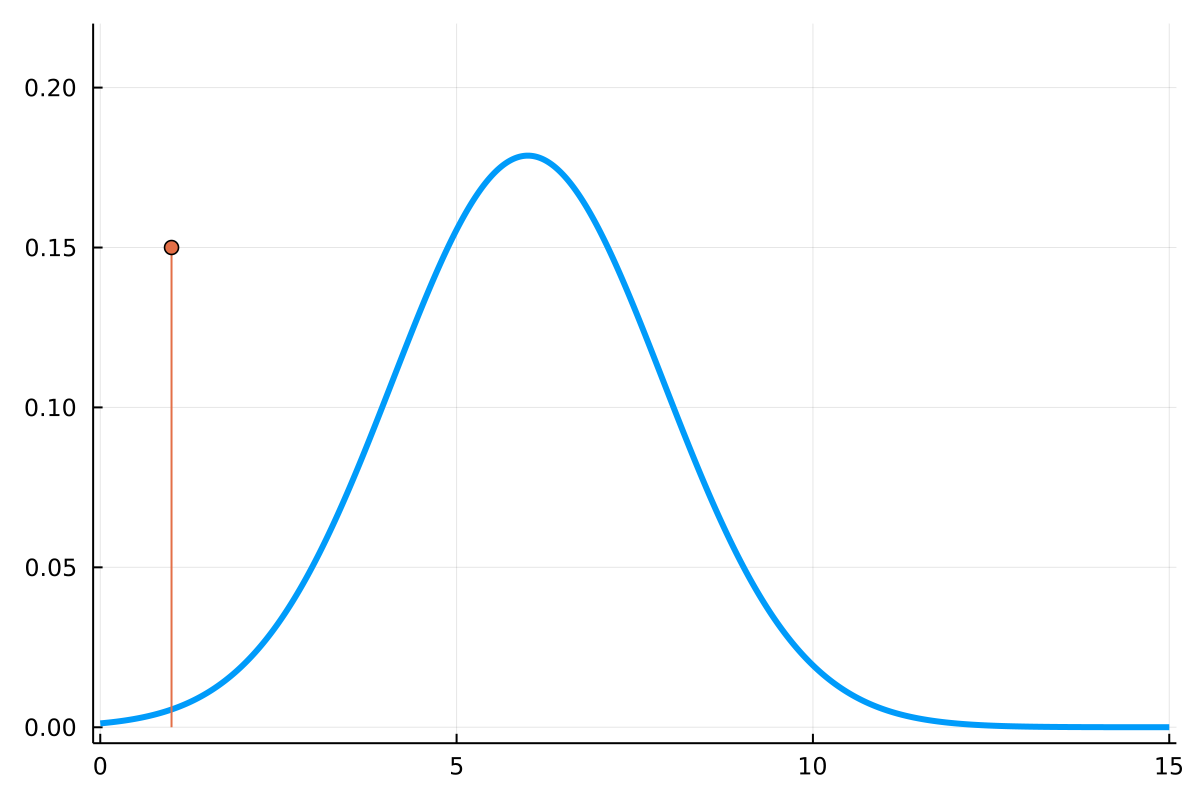

Densities are always relative!
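Importance sampling shows why only relative densities matter. The weights depend only on log p - log q, and self-normalization cancels any constant added to log p, such as one coming from a different choice of base-measure weight.

The plain-Julia sketch below estimates E_p[X²] = 1 for p = Normal(0, 1) using draws from q = Normal(0, 2). The target, proposal, seed, and the name `snis` are illustrative choices.

```julia
using Random

# Unnormalized log-densities with respect to Lebesgue measure (illustrative choices):
# target p = Normal(0, 1), proposal q = Normal(0, 2); the shared -0.5*log(2π) is dropped.
logp(x) = -x^2 / 2
logq(x) = -x^2 / 8 - log(2)

# Self-normalized importance sampling estimate of E_p[f(X)] from draws xs ~ q.
# Only the log-density ratio log p - log q enters the weights.
function snis(f, logp, logq, xs)
    logw = logp.(xs) .- logq.(xs)
    w = exp.(logw .- maximum(logw))   # subtract the max for numerical stability
    return sum(w .* f.(xs)) / sum(w)
end

rng = MersenneTwister(42)
xs = 2 .* randn(rng, 100_000)          # draws from q = Normal(0, 2)

est = snis(x -> x^2, logp, logq, xs)   # estimates E_p[X²] = 1
# Shifting log p by a constant leaves the estimate unchanged
est_shifted = snis(x -> x^2, x -> logp(x) + 10.0, logq, xs)
```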

The MeasureTheory.jl Approach

Densities are always relative

- Old and very standard idea (Lebesgue, Radon, Nikodym)

- But no other software we know of makes this explicit!

function basemeasure(::Normal{()})
    WeightedMeasure(static(-0.5 * log2π), LebesgueMeasure())
end

function logdensity_def(d::Normal{()}, x)
    -x^2 / 2
end

Computational advantages

- Easily represent more complex measures than usually possible

- Common structure can share computation

- Static computation makes this fast


Each measure has a base measure...

...and a log-density relative to that base measure
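Write λ for LebesgueMeasure() and c = exp(-0.5 · log 2π) = 1/√(2π) for the weight stored by WeightedMeasure. The two definitions then combine through the chain rule for Radon-Nikodym derivatives, giving the familiar standard normal density with respect to Lebesgue measure:

```latex
\frac{d\,\mathrm{Normal}()}{d\lambda}(x)
  = \underbrace{\frac{d\,\mathrm{Normal}()}{d(c\lambda)}(x)}_{\exp(-x^2/2)}
    \cdot
    \underbrace{\frac{d(c\lambda)}{d\lambda}(x)}_{c}
  = \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2},
  \qquad \log c = -\tfrac{1}{2}\log 2\pi
```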

Computing Log-Densities

What actually happens when you call logdensityof(Normal(5,2), 1)?

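Normal(5,2) is first rewritten via proxy as Affine((μ=5, σ=2), Normal()), and ℓ starts at 0.0.

- insupport(Affine(...), 1) ✓

- Affine((μ=5, σ=2), Normal()) relative to its basemeasure, 0.3989 * Affine((μ=5, σ=2), LebesgueMeasure()): ℓ += -2.0, which is -z^2/2 at the standardized point z = (1 - 5)/2 = -2

- 0.3989 * Affine((μ=5, σ=2), LebesgueMeasure()) relative to the next basemeasure, 0.5 * LebesgueMeasure(): ℓ += -0.9189, which is log(0.3989) = -0.5 * log(2π)

- 0.5 * LebesgueMeasure() relative to the next basemeasure, LebesgueMeasure(): ℓ += -0.6931, which is log(0.5) = -log(σ)

- Result: ℓ == -3.6121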
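The walk above can be mimicked with a few toy types. The plain-Julia sketch below is a hypothetical re-implementation for illustration only; it is not MeasureTheory.jl's API. Each toy measure has a `basemeasure` and a `logdensity_def`, and `logdensityof` follows the chain down to Lebesgue measure, adding one term per step.

For simplicity, the toy skips the `insupport` check. It also folds Affine((μ=5, σ=2), LebesgueMeasure()) directly into a weight of 1/σ on Lebesgue measure.

```julia
# Toy types (hypothetical, not MeasureTheory.jl's API)
abstract type ToyMeasure end

struct Lebesgue <: ToyMeasure end

# A measure carrying a log-weight relative to its base measure
struct Weighted{M<:ToyMeasure} <: ToyMeasure
    logweight::Float64
    base::M
end

struct StdNormal <: ToyMeasure end

# Law of μ + σZ with Z ~ StdNormal
struct AffineStdNormal <: ToyMeasure
    μ::Float64
    σ::Float64
end

# Each measure has a base measure...
basemeasure(::StdNormal) = Weighted(-0.5 * log(2π), Lebesgue())
basemeasure(d::AffineStdNormal) = Weighted(-0.5 * log(2π), Weighted(-log(d.σ), Lebesgue()))
basemeasure(w::Weighted) = w.base

# ...and a log-density relative to that base measure
logdensity_def(::StdNormal, x) = -x^2 / 2
logdensity_def(d::AffineStdNormal, x) = logdensity_def(StdNormal(), (x - d.μ) / d.σ)
logdensity_def(w::Weighted, x) = w.logweight

# Follow basemeasure until Lebesgue measure, accumulating each relative log-density
function logdensityof(m::ToyMeasure, x)
    ℓ = 0.0
    while !(m isa Lebesgue)
        ℓ += logdensity_def(m, x)
        m = basemeasure(m)
    end
    return ℓ
end

ℓ = logdensityof(AffineStdNormal(5.0, 2.0), 1.0)   # -2.0 - 0.9189 - 0.6931
```

Each step stays cheap because it only needs the current measure and the point x.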

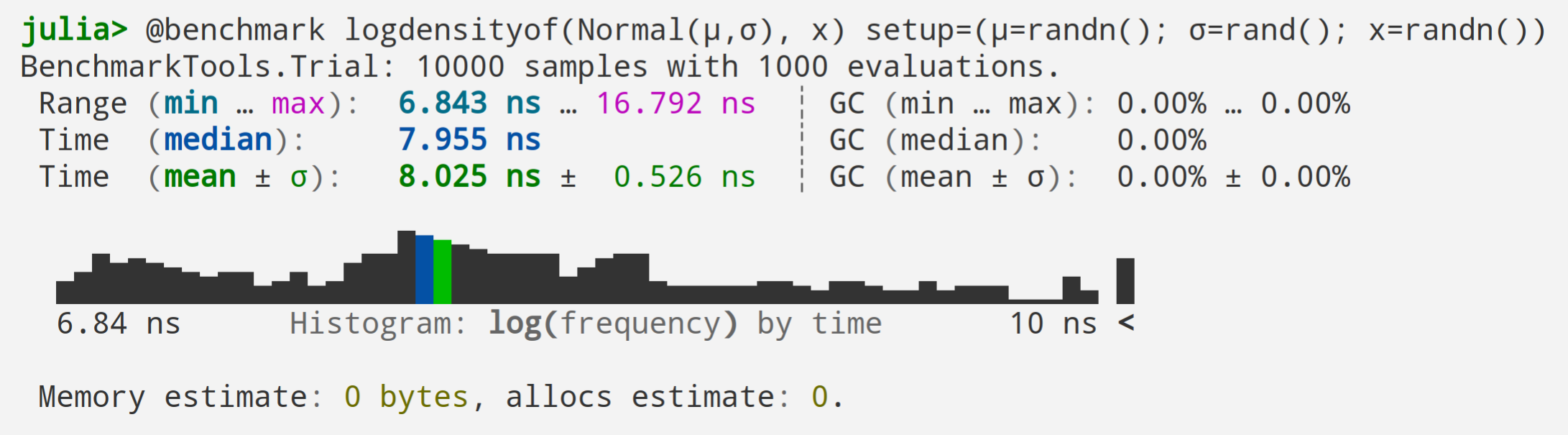

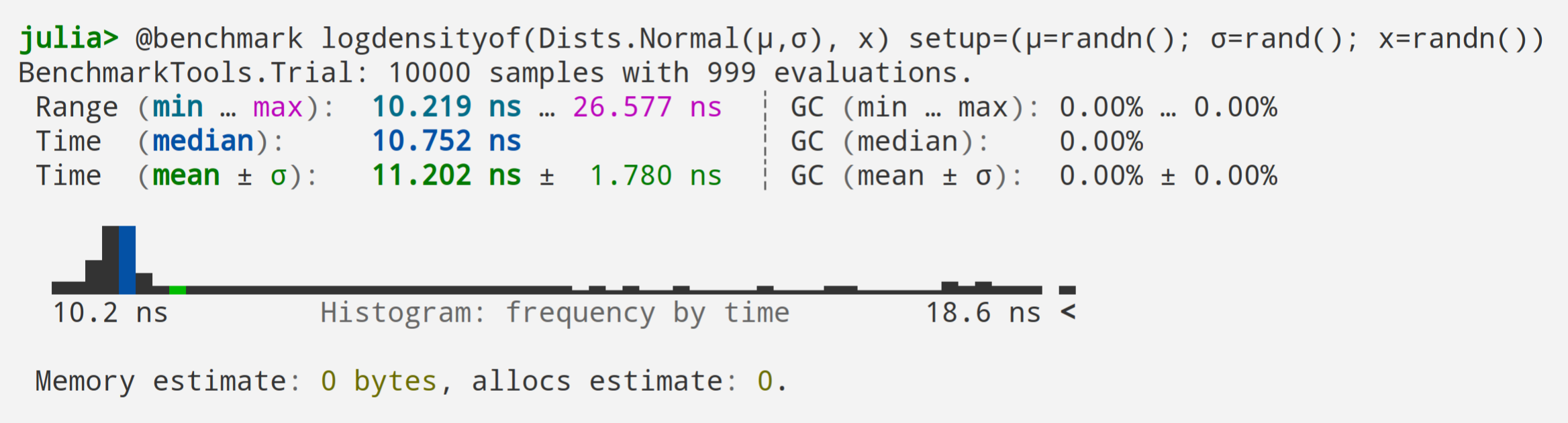

Can This Be Fast?

Distributions.jl

MeasureTheory.jl

Computing Relative Log-Density
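When two measures share part of their base-measure chain, their relative log-density can skip the shared terms. For two normals, the common 1/√(2π) weight cancels, so it never has to be computed.

The plain-Julia sketch below compares this shortcut with subtracting full log-densities taken with respect to Lebesgue measure. The function names are hypothetical.

```julia
# Log-density of Normal(μ1, σ1) relative to Normal(μ2, σ2) at x.
# The shared base-measure weight 1/√(2π) cancels, so it is omitted entirely.
function normal_logdensity_rel(μ1, σ1, μ2, σ2, x)
    z1 = (x - μ1) / σ1
    z2 = (x - μ2) / σ2
    return (-z1^2 / 2 - log(σ1)) - (-z2^2 / 2 - log(σ2))
end

# Reference: full log-densities w.r.t. Lebesgue measure, then subtract
full_normal_logpdf(μ, σ, x) = -((x - μ) / σ)^2 / 2 - log(σ) - 0.5 * log(2π)

rel = normal_logdensity_rel(5.0, 2.0, 0.0, 1.0, 1.0)
ref = full_normal_logpdf(5.0, 2.0, 1.0) - full_normal_logpdf(0.0, 1.0, 1.0)
```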

Parameterized Measures

Ways of writing Normal(0,2)

Normal(0,2)

Normal(μ=0, σ=2)

Normal(σ=2)

Normal(mean=0, std=2)

Normal(mu=0, sigma=2)

Normal(μ=0, τ=0.25)

Normal(μ=0, σ²=4)

Normal(mean=0, var=4)

Normal(μ=0, logσ=0.69)

Defining New Measures

A measure can be primitive or defined in terms of existing measures

Primitive Measures

- TrivialMeasure

- LebesgueMeasure

- CountingMeasure

Product-like

- ProductMeasure (⊗)

- PowerMeasure (^)

- For

Sum-like

- SuperpositionMeasure (+)

- SpikeMixture

- IfElseMeasure (IfElse.ifelse)

Transformed Measures

- PushforwardMeasure (pushfwd)

- Affine (affine)

Density-related

- WeightedMeasure (*)

- PowerWeightedMeasure (↑)

- PointwiseProductMeasure (⊙)

- ParameterizedMeasure

- DensityMeasure (∫)

Support-related

- RestrictedMeasure (restrict)

- HalfMeasure (Half)

- Dirac

IID Products

d = Beta(2,4) ^ (40,64)

A PowerMeasure replicates a given measure over some shape, here (40,64).

IID: Independent and Identically Distributed
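For an IID product, the log-density at a point of the product space is the sum of the component log-densities. The plain-Julia sketch below uses the closed form of the Beta(2, 4) density, x(1 - x)^3 / B(2, 4) with B(2, 4) = 1/20. It evaluates that density on a 40×64 array of made-up values and checks that the single-component density integrates to one. The helper names are hypothetical.

```julia
# Log-density of Beta(2, 4) w.r.t. Lebesgue measure on (0, 1):
# pdf(x) = x (1 - x)^3 / B(2, 4), with B(2, 4) = 1! * 3! / 5! = 1/20
beta24_logpdf(x) = log(20) + log(x) + 3 * log1p(-x)

# IID product over an array shape: same log-density elementwise, then add
iid_logdensity(logpdf, xs::AbstractArray) = sum(logpdf, xs)

xs = fill(0.5, 40, 64)                 # a point in the 40×64 product space (made-up values)
ℓ = iid_logdensity(beta24_logpdf, xs)

# Sanity check: the single-component density integrates to one (midpoint rule)
h = 1e-4
mass = h * sum(x -> exp(beta24_logpdf(x)), (h / 2):h:(1 - h / 2))
```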

Products with Index Dependence

d = For(40,64) do i,j
    Beta(i,j)
end

For(indices) do j
    # maybe more computations
    # ...
    some_measure(j)
end

For produces a product of independent measures whose parameters vary with the index.
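With index dependence, the components are still independent, so the joint log-density is still a sum. The difference is that component (i, j) now has its own parameters.

The plain-Julia sketch below computes this for the Beta(i, j) example. It uses the integer-parameter identity B(a, b) = (a-1)!(b-1)!/(a+b-1)! so that no special-function package is needed. `for_logdensity` and the other helpers are hypothetical names.

```julia
# Log-density of Beta(a, b) for positive integers a, b, using
# B(a, b) = (a-1)! (b-1)! / (a+b-1)!  (log-factorials avoid overflow)
logfact(n) = sum(log, 1:n; init = 0.0)
logbeta_int(a, b) = logfact(a - 1) + logfact(b - 1) - logfact(a + b - 1)
beta_logpdf(a, b, x) = (a - 1) * log(x) + (b - 1) * log1p(-x) - logbeta_int(a, b)

# Index-dependent product: component (i, j) is Beta(i, j); the joint log-density is the sum
function for_logdensity(component_logpdf, xs::AbstractMatrix)
    return sum(component_logpdf(i, j, xs[i, j]) for i in axes(xs, 1), j in axes(xs, 2))
end

ℓ = for_logdensity(beta_logpdf, fill(0.5, 40, 64))
# 2×2 case: Beta(1,1), Beta(1,2), Beta(2,1), Beta(2,2) at 0.5 have densities 1, 1, 1, 1.5
small = for_logdensity(beta_logpdf, fill(0.5, 2, 2))
```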

Markov Chains

Define a new chain, take a sample:

mc = Chain(Normal(μ=0.0)) do x
    Normal(μ=x)
end

r = rand(mc)

This returns a deterministic iterator:

julia> take(r,100) == take(r,100)
true

Evaluate on any finite subsequence:

julia> logdensityof(mc, take(r, 1000))
-517.0515965372
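The sketch below mimics, in plain Julia, two properties described on this slide for a Gaussian random walk like `mc`:

- a sampled path that replays identically on every traversal
- a log-density that can be evaluated on any finite prefix

The names `RandomWalkSample` and `chain_logdensity` are hypothetical. Storing a seed and re-seeding on each traversal is one assumed way to get a deterministic iterator, not necessarily how MeasureTheory.jl does it. The log-densities here are taken with respect to Lebesgue measure, including the -0.5·log(2π) term at every step.

```julia
using Random
using Base.Iterators: take

# Hypothetical sampled path: stores only a seed, so every traversal regenerates
# the same path x₁ ~ Normal(0, 1), xₜ ~ Normal(xₜ₋₁, 1).
struct RandomWalkSample
    seed::Int
end

Base.IteratorSize(::Type{RandomWalkSample}) = Base.IsInfinite()
Base.eltype(::Type{RandomWalkSample}) = Float64

function Base.iterate(r::RandomWalkSample)
    rng = MersenneTwister(r.seed)   # fresh RNG per traversal => deterministic replay
    x = randn(rng)
    return x, (rng, x)
end

function Base.iterate(::RandomWalkSample, (rng, prev))
    x = prev + randn(rng)
    return x, (rng, x)
end

normal_logpdf(x, μ) = -(x - μ)^2 / 2 - 0.5 * log(2π)

# Log-density of a finite prefix: the initial Normal(0, 1) term, then one transition per step
function chain_logdensity(xs)
    prev = 0.0
    ℓ = 0.0
    for x in xs
        ℓ += normal_logpdf(x, prev)
        prev = x
    end
    return ℓ
end

r = RandomWalkSample(2023)
```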


Working with Likelihoods

prior = HalfNormal()

d = Normal(σ=2.0) ^ 10

lik = likelihood(d, x)

post = prior ⊙ lik
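A pointwise product of a prior with a likelihood adds their log-densities, giving an unnormalized posterior. The plain-Julia sketch below illustrates this under stated assumptions, and the data are made up:

- the unknown is a location μ ≥ 0
- μ has a half-normal prior with scale 1
- ten observations are Normal(μ, 2)

It finds the posterior mode on a grid and compares it with the closed form Σx/(4 + n), which holds for this particular model.

```julia
# Assumed model (hypothetical): μ ≥ 0 with HalfNormal(scale 1) prior; x[i] ~ Normal(μ, 2).
logprior(μ) = μ < 0 ? -Inf : log(2) - 0.5 * log(2π) - μ^2 / 2    # w.r.t. Lebesgue
loglik(μ, x) = sum(xi -> -((xi - μ) / 2)^2 / 2 - log(2) - 0.5 * log(2π), x)   # σ = 2 fixed

# Pointwise product of prior and likelihood: log-densities add (result is unnormalized)
logpost(μ, x) = logprior(μ) + loglik(μ, x)

x = fill(2.0, 10)              # made-up data for illustration
grid = 0.0:0.001:5.0
μ_map = grid[argmax([logpost(μ, x) for μ in grid])]   # close to sum(x) / (4 + 10)
```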

Symbolic Evaluations

julia> using MeasureTheory, Symbolics

julia> @variables μ τ

2-element Vector{Num}:

μ

τ

julia> d = Normal(μ=μ, τ=τ) ^ 1000;

julia> x = randn(1000);

julia> ℓ = logdensityof(d, x) |> expand

500.0log(τ) + 3.81μ*τ - (503.81τ) - (500.0τ*(μ^2))

Types and functions are generic, so symbolic manipulations work out of the box.
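The coefficients in that expanded expression come straight from the data. Summing τ-parameterized normal log-densities over n observations gives

(n/2)·log τ + (Σx)·μτ - (Σx²/2)·τ - (n/2)·τμ²

This assumes the -0.5·log(2π) constant is handled by the base measure, as the output's lack of such a term suggests. With n = 1000, the coefficients 500.0, 3.81, and 503.81 correspond to n/2, Σx, and Σx²/2.

The plain-Julia sketch below checks the identity numerically with generic code that also runs on `BigFloat`. The function names are hypothetical.

```julia
using Random

# Normal log-density with precision τ, dropping -0.5*log(2π) (assumed absorbed in the base measure)
normal_logdensity_prec(μ, τ, x) = 0.5 * log(τ) - τ * (x - μ)^2 / 2

# IID product: sum over observations; generic in the number types of μ and τ
iid_logdensity(μ, τ, xs) = sum(x -> normal_logdensity_prec(μ, τ, x), xs)

# Expanded form a*log(τ) + b*μ*τ - c*τ - d*τ*μ^2 with data-derived coefficients
function expanded_logdensity(μ, τ, xs)
    n = length(xs)
    a = n / 2
    b = sum(xs)
    c = sum(abs2, xs) / 2
    d = n / 2
    return a * log(τ) + b * μ * τ - c * τ - d * τ * μ^2
end

xs = randn(MersenneTwister(0), 1000)
```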

Compare MeasureTheory.jl (above) with Distributions.jl:

julia> logdensityof(Distributions.Normal(μ, 1 / √τ), 2.0)
ERROR: MethodError: no method matching logdensityof(::Num, ::Float64)

Packages Using MeasureTheory.jl

- bat/BAT.jl: A Bayesian Analysis Toolkit in Julia (v3 will use MeasureBase.jl)

- cscherrer/DistributionMeasures.jl: Conversions between Distributions.jl distributions and MeasureTheory.jl measures.

- cscherrer/Soss.jl: Probabilistic programming via source rewriting

- cscherrer/Tilde.jl: WIP successor to Soss.jl

- jeremiahpslewis/IteratedProcessSimulations.jl: Simulate Machine Learning-based Business Processes in Julia

- JuliaGaussianProcesses/AugmentedGPLikelihoods.jl: Provide all functions needed to work with augmented likelihoods (conditionally conjugate with Gaussians)

- mschauer/Mitosis.jl: Automatic probabilistic programming for scientific machine learning and dynamical models

- mschauer/MitosisStochasticDiffEq.jl: Backward-filtering forward-guiding with StochasticDiffEq.jl

- oschulz/ValueShapes.jl: Duality of view between named variables and flat vectors in Julia

- ptiede/Comrade.jl: Composable Modeling of Radio Emission

- ptiede/HyperCubeTransform.jl: We love to use nested sampling with the EHT, but it is rather annoying to constantly write the prior transformation. This package will do that for you.

Thank You!

2023-06-MeasureTheory-Munich

By Chad Scherrer
