PFHub Updates and Ideas

Daniel Wheeler

Phase Field Workshop, 2023-08-16

Long-Term Vision for PFHub

- A central registry of curated phase-field results, with a CLI/web/API tool to view and query the results in many ways

- The website is the registry, plus examples of using the tool with views and phase-field guide materials

Please add ideas and open discussions on usnistgov/pfhub

Updates

- Environment updates

  - Nix environments updated

  - Native Python (pip, Conda, Mamba) environment implemented and tested

- Implementing a CLI tool for PFHub

  - Zenodo submission process

- Papermill / Jupyter website build

  - BM1, BM2, BM3, BM4, BM7, BM8

- Generated a new schema using LinkML (Trevor)

FAIR Improvements

- New human-readable schema using LinkML

- Seamless conversion between schema.org, JSON Schema, JSON-LD, and YAML

- MaRDA working group for a more general phase-field schema (tomorrow)

- Require the implementation to be in a publicly accessible archive

- Encourage use of the FAIR4RS principles (metadata.json in the repository)

- Require curation of result data on Zenodo (or similar)

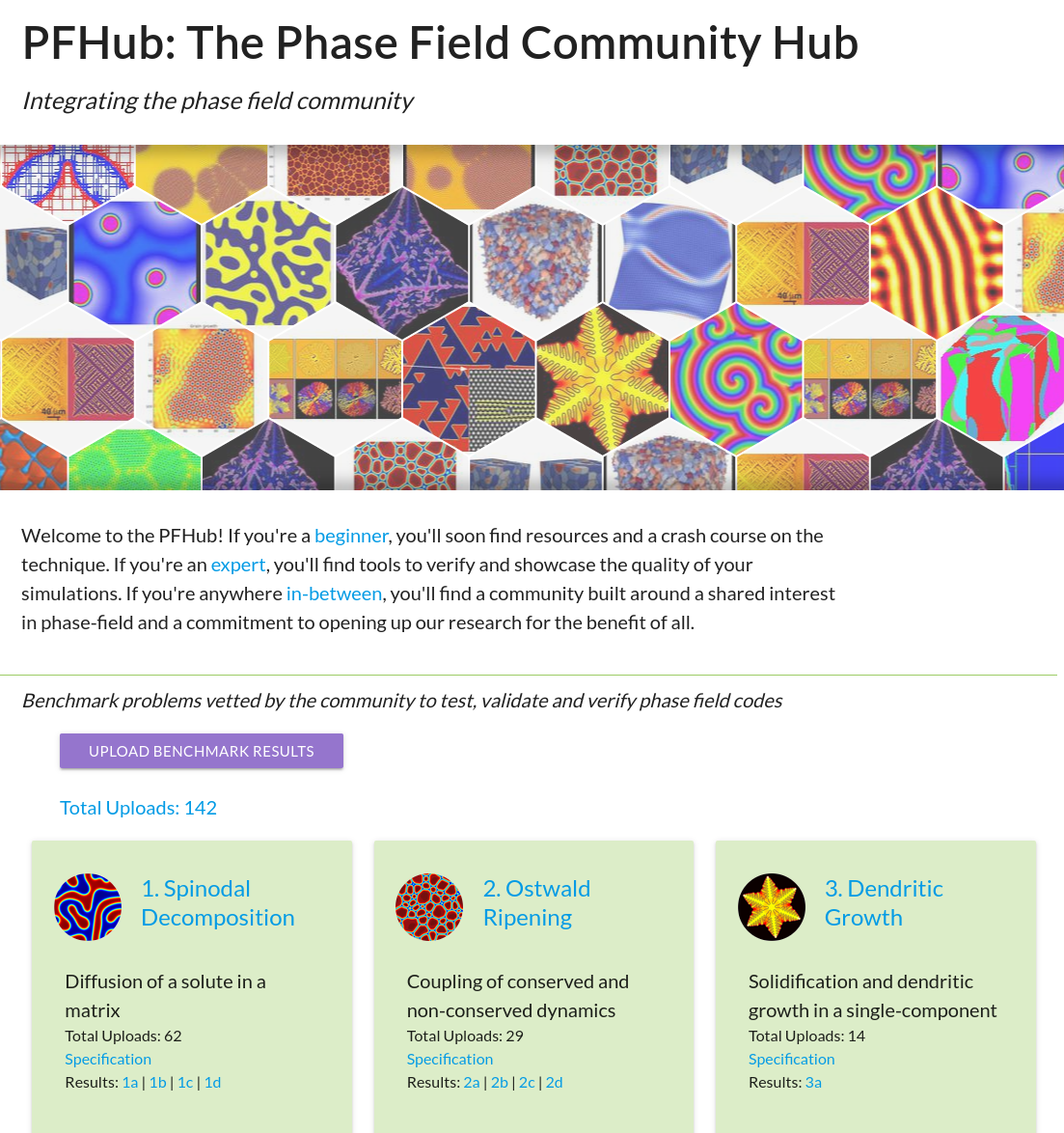

- Improve data accessibility using Jupyter notebooks and a Python utility (in place of the JS stack and custom apps)

PFHub CLI Tool

- Makes the submission process more coherent

- CLI tool can be used by a user on the local filesystem for submissions

- View and compare results on the local filesystem as they will appear on the website

- Use the same CLI tool for automated submissions and continuous integration

- Make Zenodo and PFHub submission a seamless process


- Not quite finished for this meeting

- First version on PyPI soon

- Eventually the CLI will be subsumed by an upload notebook hosted locally or via a cloud service

What Next?

- What next?

  - Split the repository into python-pfhub and web

  - Finish the new upload process, with an upload notebook built using the CLI

  - Use Jupyter Book (or equivalent) to build the website

  - Update BM5 and BM6, and include BM9

- Small things

  - DOIs for benchmark notebooks with appropriate authors #1515

- Aspirational goals

  - Cloud-hosted submission notebook

  - Increase data capabilities, metrics, and display

  - Field data

  - Expand beyond Zenodo

[Diagram: submission process. Local FS: user, pfhub CLI, notebooks, pfhub.yaml, CSV, VTK, ... | GitHub review: reviewer, pfhub CLI, Surge, Actions, website | Hosted submission notebook]

PFHub CLI

```
$ pfhub --help
Usage: pfhub [OPTIONS] COMMAND [ARGS]...

  Submit results to PFHub and manipulate PFHub data

Options:
  --help  Show this message and exit.

Commands:
  convert             Convert between formats (old PFHub schema to new...
  convert-to-old      Convert between formats (new PFHub schema to old...
  download            Download a PFHub record
  download-zenodo     Download a Zenodo record
  generate-notebook   Generate the comparison notebook for the...
  generate-yaml       Infer a PFHub YAML file from GitHub ID, ORCID,...
  submit              Submit to Zenodo and open PFHub PR
  submit-from-zenodo  Submit an existing Zenodo record to PFHub
  test                Run the PFHub tests
  upload              Upload PFHub data to Zenodo
  validate            Validate a YAML file with the new PFHub schema
  validate-old        Validate a YAML file with the old PFHub schema
```

See the documentation at https://github.com/usnistgov/pfhub/blob/master/CLI.md (under construction).
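The `validate` command checks a YAML file against the new PFHub schema, which is defined with LinkML. As a rough illustration of the idea only (not the CLI's implementation), the sketch below checks a record, already loaded into a Python dict, for a few top-level keys taken from the new-schema example later in this deck. `REQUIRED_KEYS`, `missing_keys`, and `is_valid` are hypothetical names.

```python
"""Hypothetical sketch: check a PFHub-style record for required top-level keys.

NOT the pfhub CLI's `validate` implementation, which is driven by the
LinkML schema. REQUIRED_KEYS is an assumption for illustration.
"""
from __future__ import annotations

# Assumed required keys, taken from the new-schema example in this deck.
REQUIRED_KEYS = {"id", "benchmark_problem", "contributors", "implementation", "results"}


def missing_keys(record: dict) -> list[str]:
    """Return the required top-level keys that `record` lacks, sorted."""
    return sorted(REQUIRED_KEYS - set(record))


def is_valid(record: dict) -> bool:
    """True if no required key is missing (a very partial check)."""
    return not missing_keys(record)


if __name__ == "__main__":
    record = {
        "id": "fipy_1a_tkphd_pysparse",
        "benchmark_problem": "1a.0",
        "implementation": {
            "url": "https://github.com/usnistgov/FiPy-spinodal-decomposition-benchmark/tree/master/periodic"
        },
    }
    print(missing_keys(record))  # ['contributors', 'results']
```

Loading the YAML file into a dict (for example with PyYAML's `yaml.safe_load`) is left out to keep the sketch dependency-free; a real validator would also check types and nested fields.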

- What data do we currently collect?

- Provenance

- Benchmark ID

- Implementation repository

- Post-processed outputs

- Limited metadata

- run time

- memory usage

- simulation time

- Limited hardware data

- Limited software data

- Dataframe-style data / time series

- time vs free energy
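Time-series data such as free energy versus time are stored as CSV files (the new schema lists them under `dataset_temporal` with columns `time` and `free_energy`). A minimal standard-library sketch of loading such a file, assuming a header row with those column names; the numbers are toy values, not a PFHub result. The `is_non_increasing` check is one hypothetical sanity test a comparison notebook might run, since free energy should not grow in a gradient-flow benchmark.

```python
"""Sketch: read a PFHub-style time series CSV (time, free_energy) with the stdlib.

Assumes a header row naming the columns; the toy data below are made up.
"""
from __future__ import annotations

import csv
import io


def read_time_series(text: str, x: str = "time", y: str = "free_energy") -> list[tuple[float, float]]:
    """Parse CSV text into (x, y) float pairs using the named columns."""
    reader = csv.DictReader(io.StringIO(text))
    return [(float(row[x]), float(row[y])) for row in reader]


def is_non_increasing(series: list[tuple[float, float]], tol: float = 0.0) -> bool:
    """True if each y value is <= the previous one (within `tol`)."""
    return all(b[1] <= a[1] + tol for a, b in zip(series, series[1:]))


if __name__ == "__main__":
    toy_csv = "time,free_energy\n0.0,320.5\n10.0,310.2\n100.0,290.7\n"
    series = read_time_series(toy_csv)
    print(series[-1])                 # (100.0, 290.7)
    print(is_non_increasing(series))  # True
```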

Data collection

Old schema

```yaml
---
_id: 93113e00-0c5e-11e8-b653-4f1ed6519c85
benchmark:
  id: 3a
  version: '1'
data:
- name: run_time
  values:
  - sim_time: '1500'
    wall_time: '266576'
- name: memory_usage
  values:
  - unit: KB
    value: '2000000'
- name: efficiency
  transform:
  - as: x
    expr: "1. / datum.time_ratio"
    type: formula
  - as: y
    expr: datum.memory
    type: formula
  values:
  - memory: 2000000.0
    time_ratio: 0.005626
- description: Free energy versus time
  format:
    parse:
      free_energy: number
      time: number
    type: csv
  name: free_energy
  transform:
  - as: x
    expr: datum.time
    type: formula
  - as: y
    expr: datum.free_energy
    type: formula
  type: line
  url: https://gist.githubusercontent.com/wd15/7e06a3141a6fbf317b1daf39ef1b0fbb/raw/2b802a25593501b30cb0d8648a3b588dc54b36f7/time.csv
- description: Solid fraction versus time
  format:
    parse:
      solid_fraction: number
      time: number
    type: csv
  name: solid_fraction
  transform:
  - as: x
    expr: datum.time
    type: formula
  - as: y
    expr: datum.solid_fraction
    type: formula
  type: line
  url: https://gist.githubusercontent.com/wd15/7e06a3141a6fbf317b1daf39ef1b0fbb/raw/2b802a25593501b30cb0d8648a3b588dc54b36f7/time.csv
- description: Tip position versus time
  format:
    parse:
      time: number
      tip_position: number
    type: csv
  name: tip_position
  transform:
  - as: x
    expr: datum.time
    type: formula
  - as: y
    expr: datum.tip_position
    type: formula
  type: line
  url: https://gist.githubusercontent.com/wd15/7e06a3141a6fbf317b1daf39ef1b0fbb/raw/2b802a25593501b30cb0d8648a3b588dc54b36f7/time.csv
- description: Zero contour at t=1500s
  format:
    parse:
      x: number
      y: number
    type: csv
  name: phase_field_1500
  type: line
  url: https://gist.githubusercontent.com/wd15/7e06a3141a6fbf317b1daf39ef1b0fbb/raw/d0dcd61541604127a16c017891dcda1577c92997/contour.csv
date: 1518046097
layout: post
message: ' '
metadata:
  author:
    email: daniel.wheeler2@gmail.com
    first: Daniel
    github_id: wd15
    last: Wheeler
  hardware:
    acc_architecture: none
    clock_rate: '3.2'
    cores: '1'
    cpu_architecture: x86_64
    nodes: '1'
    parallel_model: serial
  implementation:
    container_url: ''
    name: fipy
    repo:
      url: https://gist.github.com/wd15/7e06a3141a6fbf317b1daf39ef1b0fbb
      version: fc9134b08a9c
  summary: FiPy implementation of benchmark 3a on a 960x960 grid. The shape of the
    dendrite doesn't look exactly like the version in the notebook.
  timestamp: 2 February, 2018
```
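In the old schema, plots are described with Vega-style `formula` transforms: each one adds a field (`as`) to every datum, computed from an expression over `datum`. For the `efficiency` entry above, that gives x = 1 / time_ratio and y = memory. The sketch below applies those two formulas by hand; it hard-codes them rather than evaluating Vega expressions in general. The stored `time_ratio` of 0.005626 is close to sim_time / wall_time = 1500 / 266576 ≈ 0.00563, but that relationship is an inference; the record does not state it.

```python
"""Sketch: apply the old-schema `efficiency` formulas by hand.

Only the two expressions used in the record are handled; this is not a
general Vega expression evaluator.
"""

# `values` from the `efficiency` entry of the old-schema record.
values = [{"memory": 2000000.0, "time_ratio": 0.005626}]

# Hand translation of the Vega formula transforms:
#   {as: x, expr: "1. / datum.time_ratio"} and {as: y, expr: datum.memory}
FORMULAS = {
    "x": lambda datum: 1.0 / datum["time_ratio"],
    "y": lambda datum: datum["memory"],
}


def apply_formulas(data, formulas=FORMULAS):
    """Return a copy of each datum extended with the computed fields."""
    return [{**datum, **{name: f(datum) for name, f in formulas.items()}} for datum in data]


if __name__ == "__main__":
    point = apply_formulas(values)[0]
    print(round(point["x"], 1), point["y"])  # 177.7 2000000.0
```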

New schema

```yaml
id: fipy_1a_tkphd_pysparse
benchmark_problem: 1a.0
contributors:
  - id: https://orcid.org/0000-0002-2920-8302
    name: Trevor Keller
    affiliation:
      - NIST
    email: trevor.keller@nist.gov
  - id: https://orcid.org/0000-0002-2653-7418
    name: Daniel Wheeler
    affiliation:
      - NIST
    email: daniel.wheeler@nist.gov
date_created: '2017-01-10'
implementation:
  url: https://github.com/usnistgov/FiPy-spinodal-decomposition-benchmark/tree/master/periodic
results:
  fictive_time: 53333.3
  hardware:
    architecture: cpu
    cores: 1
    nodes: 1
  memory_in_kb: 28600
  time_in_s: 157187
  dataset_temporal:
    - name: free_energy.csv
      columns:
        - time
        - free_energy
schema:
  url: https://github.com/usnistgov/pfhub-schema/tree/e0010d9/project
summary: Serial Travis CI benchmark with FiPy, periodic domain
framework:
  - url: https://www.ctcms.nist.gov/fipy/
    name: FiPy
    download: https://github.com/usnistgov/fipy
    version: 3.1.2
  - url: https://github.com/usnistgov/steppyngstounes
    name: steppyngstounes
    download: https://github.com/usnistgov/steppyngstounes
    version: '0.0'
```

Data queries

How can we currently query the data?

- Plot the dendrite tip position for all results for a particular code

- Show results only from a particular author

- Show results that use >N nodes

- Show results that use a GPU
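Queries like these amount to filtering a list of records. A sketch over records loaded as dicts, assuming the field layout of the new-schema example above (`contributors[].name`, `framework[].name`, `results.hardware.nodes`, `results.hardware.architecture`). Treating `architecture: gpu` as the marker for GPU runs is an assumption, and the second record in the usage example is made up.

```python
"""Sketch: query PFHub-style records (new-schema layout) with plain Python."""
from __future__ import annotations


def hardware(record: dict) -> dict:
    """The record's results.hardware block, or {} if absent."""
    return record.get("results", {}).get("hardware", {})


def by_author(records: list[dict], name: str) -> list[dict]:
    """Records with a contributor whose name matches exactly."""
    return [r for r in records
            if any(c.get("name") == name for c in r.get("contributors", []))]


def by_framework(records: list[dict], name: str) -> list[dict]:
    """Records listing `name` (e.g. "FiPy") among their frameworks."""
    return [r for r in records
            if any(f.get("name") == name for f in r.get("framework", []))]


def more_than_nodes(records: list[dict], n: int) -> list[dict]:
    """Records run on more than `n` nodes."""
    return [r for r in records if hardware(r).get("nodes", 0) > n]


def uses_gpu(records: list[dict]) -> list[dict]:
    """Records whose hardware architecture is "gpu" (assumed value)."""
    return [r for r in records if hardware(r).get("architecture") == "gpu"]


if __name__ == "__main__":
    records = [
        {"id": "fipy_1a_tkphd_pysparse",
         "contributors": [{"name": "Trevor Keller"}, {"name": "Daniel Wheeler"}],
         "framework": [{"name": "FiPy"}, {"name": "steppyngstounes"}],
         "results": {"hardware": {"architecture": "cpu", "cores": 1, "nodes": 1}}},
        # Made-up record, for illustration only.
        {"id": "example_gpu_run",
         "contributors": [{"name": "A. User"}],
         "framework": [{"name": "ExampleCode"}],
         "results": {"hardware": {"architecture": "gpu", "cores": 8, "nodes": 4}}},
    ]
    print([r["id"] for r in uses_gpu(records)])                    # ['example_gpu_run']
    print([r["id"] for r in by_author(records, "Daniel Wheeler")])  # ['fipy_1a_tkphd_pysparse']
```

Plotting, say, tip position for all results of one code would then mean selecting with `by_framework` and loading each record's time-series file.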

Better ways to query the data

- Show dendrite curves for all finite difference methods

- Show the transient free energy curve for all results with nominal O(h⁴) accuracy

- Show the resource usage per nominal DOF

- Characterize Ostwald ripening simulations by a length scale associated with the microstructure

- Color data points in an efficiency plot based on numerical method or meshing strategy
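These queries are not possible today because the needed fields do not exist in the schema. Assuming hypothetical fields `discretization`, `nominal_order`, and `nominal_dof` were added under `results` (names invented here for illustration), most of them reduce to simple filters, ratios, and groupings, as in this sketch. The usage records are made up.

```python
"""Sketch of "better" queries, assuming HYPOTHETICAL schema fields.

`discretization`, `nominal_order` and `nominal_dof` are not part of the
current PFHub schema; they are illustrative names only.
"""
from __future__ import annotations

from collections import defaultdict


def with_method(records: list[dict], method: str) -> list[dict]:
    """Records whose hypothetical `discretization` equals `method`, e.g. "FD"."""
    return [r for r in records if r["results"].get("discretization") == method]


def with_min_order(records: list[dict], order: int) -> list[dict]:
    """Records with nominal order of accuracy >= `order` (4 for O(h^4))."""
    return [r for r in records if r["results"].get("nominal_order", 0) >= order]


def usage_per_dof(record: dict) -> tuple[float, float]:
    """(memory in KB, run time in s), each divided by the nominal DOF."""
    res = record["results"]
    dof = res["nominal_dof"]
    return res["memory_in_kb"] / dof, res["time_in_s"] / dof


def group_by_method(records: list[dict]) -> dict[str, list[dict]]:
    """Group records by discretization, e.g. to color an efficiency plot."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for r in records:
        groups[r["results"].get("discretization", "unknown")].append(r)
    return dict(groups)


if __name__ == "__main__":
    # Made-up records for illustration; not PFHub results.
    records = [
        {"id": "a", "results": {"discretization": "FD", "nominal_order": 2,
                                "nominal_dof": 1000, "memory_in_kb": 2000, "time_in_s": 50}},
        {"id": "b", "results": {"discretization": "spectral", "nominal_order": 4,
                                "nominal_dof": 500, "memory_in_kb": 1000, "time_in_s": 10}},
    ]
    print([r["id"] for r in with_min_order(records, 4)])  # ['b']
    print(usage_per_dof(records[0]))                      # (2.0, 0.05)
```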

Improve schema

What else should we collect?

- Descriptions of discretization methods (FD, FV, FE, Spectral, ...)

- Nominal order of accuracy, nominal DOF, meshing strategy

- Description of linear solvers, preconditioners, non-linear strategy

- Time-stepping strategy (implicit vs. explicit)

- Field variables at various times for statistical post-processing

- Links to input files (rather than just the implementation repository)

- Container builds (Docker, Singularity, Nix)

- What about the actual problem being solved?
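The items in this list could become structured fields. As a hedged illustration only, the dataclass below sketches what such an extension might hold; every field name and allowed value is hypothetical (a real extension would be written in the LinkML schema). The `__post_init__` check shows how a controlled vocabulary catches typos at submission time.

```python
"""Hypothetical sketch of extra numerical-method metadata for a PFHub record.

Field names and allowed values are illustrative only; a real extension
would be defined in the LinkML schema.
"""
from __future__ import annotations

from dataclasses import asdict, dataclass, field

DISCRETIZATIONS = {"FD", "FV", "FE", "spectral"}
TIME_STEPPING = {"implicit", "explicit", "semi-implicit"}


@dataclass
class NumericalMethod:
    discretization: str           # one of DISCRETIZATIONS
    nominal_order: int            # nominal spatial order of accuracy
    nominal_dof: int              # nominal degrees of freedom
    meshing: str                  # e.g. "uniform" or "adaptive"
    time_stepping: str            # one of TIME_STEPPING
    linear_solver: str | None = None
    preconditioner: str | None = None
    input_files: list[str] = field(default_factory=list)  # links to input files
    container: str | None = None  # e.g. a Docker, Singularity, or Nix build

    def __post_init__(self) -> None:
        # Reject values outside the (hypothetical) controlled vocabularies.
        if self.discretization not in DISCRETIZATIONS:
            raise ValueError(f"unknown discretization: {self.discretization!r}")
        if self.time_stepping not in TIME_STEPPING:
            raise ValueError(f"unknown time stepping: {self.time_stepping!r}")
        if self.nominal_order < 1 or self.nominal_dof < 1:
            raise ValueError("nominal_order and nominal_dof must be positive")


if __name__ == "__main__":
    # Made-up example values.
    method = NumericalMethod("FV", 2, 960 * 960, "uniform", "implicit")
    print(asdict(method)["nominal_dof"])  # 921600
```

`asdict` turns the record into a plain dict that could be dumped to YAML alongside the existing fields.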

Schema Discussion

Could we spend some time right now collecting ideas?

Think about the following questions.

- How can we improve the PFHub phase field schema?

- What data and metadata should PFHub require?

- How would you imagine querying the data? What questions would you ask?

- What publication could you generate given better data / metadata?

Collect some ideas here: https://github.com/usnistgov/pfhub/discussions/1514

Guyer rant: let's ask how the data will be used rather than waste time redesigning schemas
