On the Use of an Encoder-Decoder Inference Model for Reverse Engineering Machine Code

by

David N. & Richard B.

[William & Mary]

Background

Artificial Neural Networks & Decompilation

Neural Machine Translation: translating from English to Spanish

English: I am a computer scientist → Spanish: Soy un científico de la computación

Neural Machine Translation: an encoder-decoder architecture converts a sentence into a "thought" vector, which a decoder then uses to generate the translation.

English: I am a computer scientist → Encoder → Decoder → Spanish: Soy un científico de la computación

Tensors: geometric objects that describe linear relations and generalize the concepts of scalar, vector, and matrix

Scalar → Vector → Matrix → Tensor
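To make the scalar → vector → matrix → tensor progression concrete, the sketch below represents each object as nested Python lists and reads off its rank and shape. It is illustrative only: the helper `shape_of` is hypothetical and assumes regular (non-ragged) nesting, whereas TensorFlow tensors carry their shape directly.

```python
def shape_of(x):
    """Return the shape of a regular nested-list tensor (() for a scalar)."""
    shape = []
    while isinstance(x, list):
        shape.append(len(x))
        # Assumes non-ragged nesting, so the first element is representative.
        x = x[0] if x else None
    return tuple(shape)

scalar = 3.0
vector = [1.0, 2.0, 3.0]
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
tensor = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]

for name, obj in [("scalar", scalar), ("vector", vector),
                  ("matrix", matrix), ("tensor", tensor)]:
    s = shape_of(obj)
    print(f"{name}: rank={len(s)}, shape={s}")
```

A rank-3 tensor such as the (2, 3, 4) one above has the same layout as the model inputs described in the methodology: (batch, sequence length, vocabulary size).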


LLVM: a compiler infrastructure written in C++; we used it to translate snippets into machine code.

Snippet → LLVM → Machine Code

Apply the following optimization/configuration:

$ llc-3.0 -O3 sample.ll -march=x86-64 -o sample-x86-64.s

Snippet → LLVM → Machine Code

Snippet:

unsigned square_int(unsigned a) {
    return a*a;
}

Intermediate Representation (IR):

define i32 @square_unsigned(i32 %a) {
    %1 = mul i32 %a, %a
    ret i32 %1
}

Machine code used:

square_unsigned:
    imull %edi, %edi
    movl %edi, %eax
    ret
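The slides show only the `llc` step. A minimal sketch of the whole snippet → IR → machine code pipeline could assemble the two command lines as below; the `clang -S -emit-llvm` step that produces `sample.ll`, the `sample.c` file name, and the helper names are assumptions, and actually running the commands requires a local LLVM installation.

```python
import shlex
import subprocess

def llvm_pipeline_commands(c_file, ll_file, asm_file, llc="llc-3.0"):
    """Build the clang and llc command lines for C snippet -> IR -> x86-64 assembly."""
    # Assumed step: emit textual LLVM IR (.ll) from the C snippet.
    to_ir = ["clang", "-S", "-emit-llvm", c_file, "-o", ll_file]
    # Step shown on the slide: lower the IR to x86-64 assembly at -O3.
    to_asm = [llc, "-O3", ll_file, "-march=x86-64", "-o", asm_file]
    return [to_ir, to_asm]

def run_pipeline(commands):
    """Execute each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)

if __name__ == "__main__":
    for cmd in llvm_pipeline_commands("sample.c", "sample.ll", "sample-x86-64.s"):
        print(shlex.join(cmd))
```

In practice `clang` and `llc` should come from the same LLVM release, because the textual IR format changes between versions.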

Approach

Neural Machine Translation: from machine code to snippet

Machine Code → Encoder → Decoder → Snippet

Machine code:

square_unsigned:
    imull %edi, %edi
    movl %edi, %eax
    ret

Snippet:

unsigned square_int(unsigned a) {
    return a*a;
}

Reverse Engineering: machine code → snippet

- Often only the machine code is available
- Malware or vulnerabilities (Powlowski et al., 2017)
- State of the art: heuristics (SANER2018)
- Intuitive idea: language translators (SANER2018)

Goal

To implement a DNN (seq2seq) that reverses LLVM-generated machine code into source snippets using an encoder-decoder inference model

Research Question

To what extent is the Neural Machine Translation model accurate at reversing machine code into C/C++ snippets?

Methodology

| Dataset | Pre-processing | Evaluation Metrics | Architecture | Type of Validation | Training |
|---|---|---|---|---|---|
| OpenSSL (sampling) | One-hot embedding | Accuracy, Loss | LSTM for encoder-decoder; inference LSTM | Automatic verification dataset (split: 0.2) | Batch: 64; Epochs: 50 |
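The table lists a 0.2 validation split with batches of 64 over 50 epochs. Assuming the split follows Keras's `validation_split` behavior (the last 20% of the samples are held out, before any shuffling), the sketch below reproduces that partition in plain Python and counts the optimizer steps it implies. The helper names and the 1000-pair corpus size are hypothetical.

```python
import math

def keras_style_split(samples, validation_split=0.2):
    """Hold out the trailing fraction of samples, as Keras' validation_split does."""
    split_at = int(len(samples) * (1.0 - validation_split))
    return samples[:split_at], samples[split_at:]

def steps_per_epoch(n_train, batch_size=64):
    """Number of batches per epoch; the last batch may be smaller."""
    return math.ceil(n_train / batch_size)

pairs = [f"pair_{i}" for i in range(1000)]  # hypothetical corpus of 1000 pairs
train, val = keras_style_split(pairs, 0.2)
steps = steps_per_epoch(len(train), 64)
print(len(train), len(val), steps, steps * 50)  # total steps over 50 epochs
```

Because the held-out samples are simply the tail of the data, the corpus order matters: if pairs are grouped by source file, the validation set will come from different files than the training set.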

- TensorFlow + Keras frameworks
- Dataset generated using LLVM
- Controlling measure (loss): categorical cross-entropy
- Tensor shape: (batch, sequence length, vocabulary size)


Architecture for Neural Machine Translation

Pairs of <source code, machine code> are the inputs.

[Figure: a pair read character by character (u, n, s, \t, s, q, %, e); labels: snippet (char by char), machine code]
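A minimal sketch of turning the tab-separated pairs into per-side character vocabularies, in the style of the Keras character-level seq2seq example. It assumes one pair per line, with the first field used as the encoder input and the second as the decoder target, and with "\t" and "\n" added to every target as start and end markers; these conventions and the function name are assumptions, not the authors' code.

```python
def build_vocabularies(lines):
    """Split 'input\\ttarget' lines and index every character on each side."""
    input_texts, target_texts = [], []
    input_chars, target_chars = set(), set()
    for line in lines:
        if "\t" not in line:
            continue  # skip malformed lines
        # Split only on the first tab: assembly text may itself contain tabs.
        input_text, target_text = line.split("\t", 1)
        # Assumed convention: "\t" starts and "\n" ends every target sequence.
        target_text = "\t" + target_text + "\n"
        input_texts.append(input_text)
        target_texts.append(target_text)
        input_chars.update(input_text)
        target_chars.update(target_text)
    input_index = {ch: i for i, ch in enumerate(sorted(input_chars))}
    target_index = {ch: i for i, ch in enumerate(sorted(target_chars))}
    return input_texts, target_texts, input_index, target_index

corpus = ["unsigned sq(unsigned a)\tsq: imull %edi, %edi"]
inputs, targets, in_idx, tgt_idx = build_vocabularies(corpus)
print(len(in_idx), len(tgt_idx))
```

Swapping the two fields gives the machine code → snippet direction; the code itself does not care which side is which.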

The embedding is a one-hot encoding of each character.

[Figure: characters u, n, s, \t, s, q, %, e; labels: embedding for snippet, machine code]
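The one-hot embedding and the (batch, sequence length, vocabulary size) tensor shape from the methodology can be sketched in plain Python as below. The helper name, the zero-vector padding of shorter sequences, and the tiny vocabulary are assumptions for illustration; a real pipeline would build NumPy or TensorFlow arrays instead of nested lists.

```python
def one_hot_batch(texts, char_index, max_len=None):
    """Encode texts as a (batch, sequence length, vocabulary size) nested list."""
    if max_len is None:
        max_len = max(len(t) for t in texts)
    vocab_size = len(char_index)
    batch = []
    for text in texts:
        # Every timestep starts as an all-zero vector, so unused steps act as padding.
        seq = [[0.0] * vocab_size for _ in range(max_len)]
        for t, ch in enumerate(text[:max_len]):
            seq[t][char_index[ch]] = 1.0
        batch.append(seq)
    return batch

char_index = {ch: i for i, ch in enumerate(sorted(set("sq%e")))}
x = one_hot_batch(["sq", "%e", "e"], char_index)
print(len(x), len(x[0]), len(x[0][0]))  # 3 2 4
```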

The character embeddings are fed into the encoder one character at a time.

[Figure: characters u, n, s, \t, s, q, %, e; labels: embedding for snippet, machine code, encoder]

Generate the final encoder states (h & c) and feed them into the decoder.

[Figure: labels: embedding for snippet, machine code, encoder, decoder]
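To show what "final encoder states (h & c)" means, the sketch below runs a single-layer LSTM over a sequence of one-hot vectors and returns the last hidden state h and cell state c. It uses the standard LSTM gate equations in plain Python with a tiny hidden size and hand-set constant weights; the weight layout and all function names are illustrative assumptions, whereas the actual model would use a Keras `LSTM` layer with `return_state=True`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lstm_step(x, h, c, params):
    """One LSTM timestep; params maps each gate name to (W, U, b)."""
    def gate(name, act):
        W, U, b = params[name]
        return [act(wx + uh + bi)
                for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]
    i = gate("i", sigmoid)       # input gate
    f = gate("f", sigmoid)       # forget gate
    o = gate("o", sigmoid)       # output gate
    g = gate("g", math.tanh)     # candidate cell values
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new

def encode(sequence, params, hidden_size):
    """Feed the embedded characters one by one; return the final (h, c)."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h, c

def make_params(input_size, hidden_size, value):
    """Hypothetical constant weights, only to make the example deterministic."""
    W = [[value] * input_size for _ in range(hidden_size)]
    U = [[value] * hidden_size for _ in range(hidden_size)]
    b = [0.0] * hidden_size
    return {k: (W, U, b) for k in "ifog"}

params = make_params(input_size=3, hidden_size=2, value=0.5)
seq = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two one-hot characters
h, c = encode(seq, params, hidden_size=2)
print(h, c)  # these two vectors initialise the decoder's state
```

Only the final (h, c) pair leaves the encoder, which is why the whole input sequence has to be summarized in this fixed-size "thought" vector.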

The decoder receives three inputs: the two encoder states (h and c) and the machine code embeddings.

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine]
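During training, the decoder's third input is typically the target sequence itself shifted by one step (teacher forcing): at timestep t it is fed character t and must predict character t + 1. A small sketch of building those two sequences from one target string, assuming the "\t"/"\n" start and end markers of the Keras character-level seq2seq example (an assumption, not stated on the slides); the function name is hypothetical.

```python
def teacher_forcing_pair(target_text, start="\t", end="\n"):
    """Return (decoder input, decoder target) character lists for one example."""
    full = start + target_text + end
    decoder_input = list(full[:-1])   # what the decoder is fed at each step
    decoder_target = list(full[1:])   # what it must predict at each step
    return decoder_input, decoder_target

dec_in, dec_out = teacher_forcing_pair("sq%e")
for t, (fed, expected) in enumerate(zip(dec_in, dec_out)):
    print(t, repr(fed), "->", repr(expected))
```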

Predict character probabilities in the softmax layer.

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax]
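The softmax layer turns the decoder's raw scores (logits), one per character in the vocabulary, into a probability distribution. A numerically stable version in plain Python, which subtracts the largest logit before exponentiating, looks like this; the vocabulary and the scores are made up for illustration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["%", "e", "q", "s"]           # hypothetical target vocabulary
probs = softmax([0.1, 0.3, 1.2, 2.5])  # hypothetical decoder scores
for ch, p in zip(vocab, probs):
    print(repr(ch), round(p, 3))
```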

We use categorical cross-entropy as the loss function (learning to classify the correct machine code characters).

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, embedding for target, labelled translation (s, q, e)]
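Categorical cross-entropy compares each predicted distribution with the one-hot label of the correct character: per timestep the loss is -log(p_correct), and the sequence loss here is the mean over timesteps. A plain-Python sketch follows; clipping probabilities by a small epsilon is similar in spirit to what Keras does to avoid log(0), and the example probabilities are invented.

```python
import math

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over timesteps of -sum_k y_k * log(p_k) for one-hot labels y."""
    losses = []
    for y, p in zip(y_true, y_pred):
        # Clip probabilities away from 0 and 1 so log() stays finite.
        losses.append(-sum(yk * math.log(min(max(pk, eps), 1.0 - eps))
                           for yk, pk in zip(y, p)))
    return sum(losses) / len(losses)

# Two timesteps over a 3-character vocabulary; the correct characters are 0 and 2.
y_true = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
loss = categorical_crossentropy(y_true, y_pred)
print(round(loss, 4))
```

Only the probability assigned to the correct character contributes, so the loss falls as the softmax puts more mass on the labelled character at each step.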

Training pipeline, step by step:

1. machine code
2. embedding for machine
3. encoder
4. snippet
5. embedding for snippet
6. decoder
7. softmax
8. embedding for target
9. labelled translation (s, q, e)

Corpus Generation

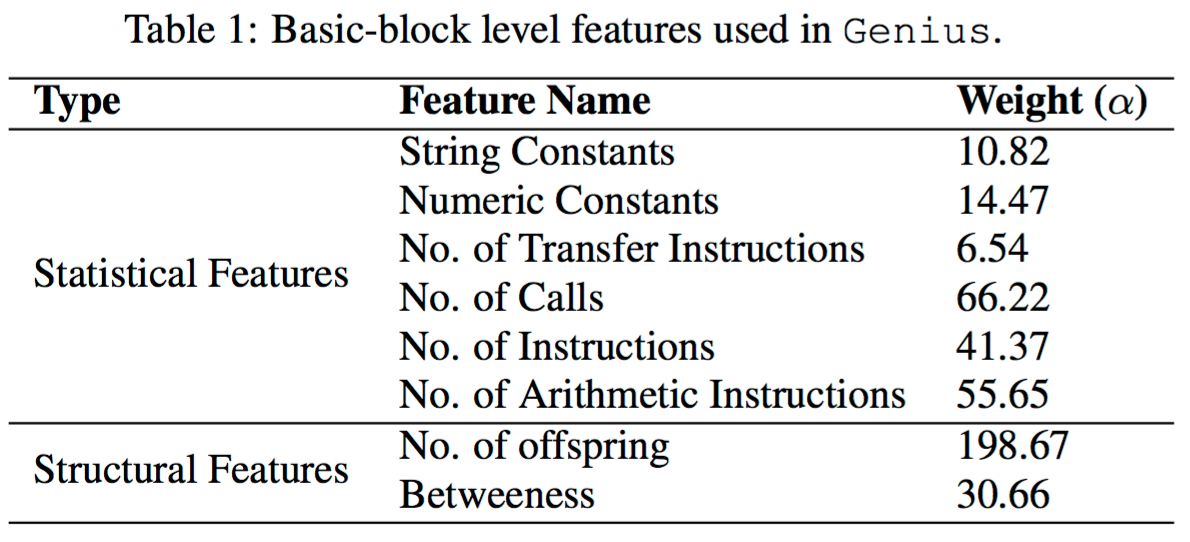

Attributed Control Flow Graphs

- Introduced by the authors of Genius (Qian Feng et al.)

- Adds basic block features to the vertices of a CFG
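As a rough illustration of an attributed CFG, the sketch below attaches a small feature vector to each basic-block vertex. The features (instruction count, call count, arithmetic-instruction count, and number of offspring, taken here as direct successors) are a subset chosen in the spirit of Genius; the exact Genius feature set, the mnemonic categories, and the class name are assumptions.

```python
from dataclasses import dataclass, field

CALL_OPS = {"call"}                                               # assumed call mnemonics
ARITH_OPS = {"add", "sub", "imul", "imull", "mul", "inc", "dec"}  # assumed subset

@dataclass
class AttributedCFG:
    blocks: dict = field(default_factory=dict)  # block id -> list of instructions
    edges: dict = field(default_factory=dict)   # block id -> list of successor ids

    def add_block(self, block_id, instructions, successors=()):
        self.blocks[block_id] = list(instructions)
        self.edges[block_id] = list(successors)

    def attributes(self, block_id):
        """Feature vector attached to one vertex of the CFG."""
        ops = [ins.split()[0] for ins in self.blocks[block_id] if ins.strip()]
        return {
            "n_instructions": len(ops),
            "n_calls": sum(op in CALL_OPS for op in ops),
            "n_arithmetic": sum(op in ARITH_OPS for op in ops),
            "n_offspring": len(self.edges[block_id]),
        }

cfg = AttributedCFG()
cfg.add_block("entry", ["imull %edi, %edi", "movl %edi, %eax", "call foo"], ["exit"])
cfg.add_block("exit", ["ret"])
for bid in cfg.blocks:
    print(bid, cfg.attributes(bid))
```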

Tool options for building Attributed Control Flow Graphs:

- IDA Pro + Genius: significant cost
- IDA Free + Genius: no plugin integration
- Radare2 + reimplementation: substantial engineering

Collecting "Source \t Assembly" Snippets

Starting from OpenSSL v1.0.1f:

- Parse the source files with Clang and extract the functions from the AST → set of functions in C
- Compile (Clang v6.0.0), disassemble (Radare2), and extract the functions → set of functions in assembly
- Both sets → corpus

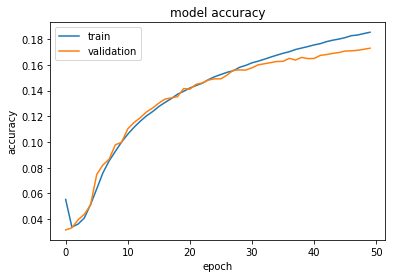

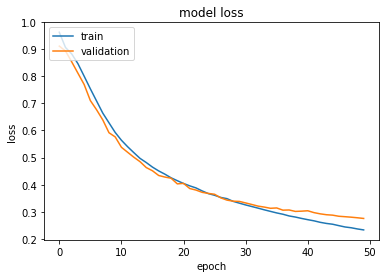
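The last step pairs each C function with its disassembled counterpart and writes one "source \t assembly" line per pair. The sketch below assumes the two sets are keyed by function name and that newlines and tabs inside each function are escaped so every pair fits on one line; both are assumptions about the corpus format, and `build_corpus` is a hypothetical name.

```python
def build_corpus(c_functions, asm_functions):
    """Join C and assembly functions by name into 'source\\tassembly' lines."""
    def one_line(text):
        # Escape backslashes first, then newlines and tabs, so each pair stays on one line.
        return text.replace("\\", "\\\\").replace("\n", "\\n").replace("\t", "\\t")
    lines = []
    for name in sorted(c_functions.keys() & asm_functions.keys()):
        lines.append(one_line(c_functions[name]) + "\t" + one_line(asm_functions[name]))
    return lines

c_funcs = {"square_int": "unsigned square_int(unsigned a) {\n  return a*a;\n}"}
asm_funcs = {"square_int": "square_int:\n\timull %edi, %edi\n\tmovl %edi, %eax\n\tret",
             "only_in_asm": "only_in_asm:\n\tret"}
for line in build_corpus(c_funcs, asm_funcs):
    print(line)
```

Functions that appear on only one side (for example, ones inlined away by the compiler) are dropped rather than paired with an empty string.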

Initial Results

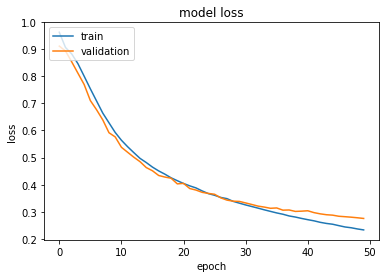

Model accuracy: training set vs validation set

Model loss: training set vs validation set

Encoder-Decoder Inference Model

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, embedding for target, labelled translation (s, q, e)]

Softmax layer: argmax of the character probabilities

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, embedding for target, argmax]

Reverse dictionary lookup in the target (machine code) vocabulary

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, Reverse Dictionary, argmax]
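At inference time the softmax output is reduced to a single character: argmax picks the index with the highest probability, and a reverse dictionary (index → character, the inverse of the character index used for the one-hot encoding) turns that index back into text. A minimal sketch with a made-up vocabulary and distribution:

```python
def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)

char_index = {"%": 0, "e": 1, "q": 2, "s": 3}  # hypothetical target vocabulary
reverse_char_index = {i: ch for ch, i in char_index.items()}

probs = [0.05, 0.10, 0.15, 0.70]               # hypothetical softmax output
predicted = reverse_char_index[argmax(probs)]
print(predicted)
```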

The decoder predicts the first character.

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, Reverse Dictionary, argmax, predicted machine code (s)]

The predicted character is fed back as the next decoder input.

[Figure: labels: embedding for snippet, machine code, encoder, decoder, embedding for machine, softmax, Reverse Dictionary, argmax, predicted machine code (s)]

Infer the next character:

1. machine code
2. embedding for snippet
3. encoder
4. snippet
5. embedding for machine
6. decoder (followed by softmax)
7. argmax
8. Reverse Dictionary
9. predicted machine code (s)
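Putting the inference steps together, decoding is a greedy loop: start from the start-of-sequence character and the encoder states, predict a distribution, take the argmax, map it back through the reverse dictionary, and feed the predicted character back in until an end marker or a length limit is reached. The sketch keeps the model abstract: `decoder_step` is a hypothetical stand-in for the trained decoder (in Keras this would typically be a separate inference `Model` returning probabilities plus updated h and c), and the toy decoder, the "\t"/"\n" markers, and `max_len` are assumptions that exist only so the loop runs.

```python
def greedy_decode(decoder_step, states, char_index, start="\t", end="\n", max_len=100):
    """Generate characters one at a time, feeding each prediction back in."""
    reverse_char_index = {i: ch for ch, i in char_index.items()}
    current, output = start, []
    for _ in range(max_len):
        probs, states = decoder_step(current, states)         # softmax + new states
        best = max(range(len(probs)), key=probs.__getitem__)  # argmax
        current = reverse_char_index[best]                    # reverse dictionary lookup
        if current == end:
            break
        output.append(current)
    return "".join(output)

# Toy stand-in decoder: its "state" is just a step counter, and it ignores its
# input character. It only demonstrates the control flow of the loop.
VOCAB = {"\t": 0, "\n": 1, "s": 2, "q": 3, "%": 4, "e": 5}
SCRIPT = "sq%e\n"

def toy_decoder_step(prev_char, step):
    probs = [0.0] * len(VOCAB)
    probs[VOCAB[SCRIPT[step]]] = 1.0
    return probs, step + 1

print(repr(greedy_decode(toy_decoder_step, 0, VOCAB)))
```

Greedy argmax decoding commits to one character per step; beam search is a common alternative when early mistakes derail the rest of the output, though nothing in the slides indicates it was used here.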


From the following machine code, infer the following snippet.

Input Machine Sentence:

test_vprintf_stderr:
    mov rax, qword [rip]
    mov rdx, rsi
    mov rsi, rdi
    mov rdi, qword [rax]
    jmp 0x15

Decoded Snippet Sentence:

int EVP_MD_meth_get_cb(SSL *s, unsigned int flags) {
    if (ctx->seckey_ercory_teck = (const_unse_ned_canctx, corst unsigned char *),
        unsigned char *));
}

Conclusion

| Dataset | Pre-processing | Evaluation Metrics | Architecture | Type of Validation | Training |
|---|---|---|---|---|---|
| OpenSSL (sampling) | One-hot embedding | Accuracy, Loss | LSTM for encoder-decoder; inference LSTM | Automatic verification dataset (split: 0.2) | Batch: 64; Epochs: 50 |

References

- [1] Struct2Vec: https://arxiv.org/abs/1708.06525
- [2] Feature Engineering: http://web.cs.ucdavis.edu/~su/publications/icse08-clone.pdf
- [3] Using Recurrent Neural Networks for Decompilation (SANER2018)

Reverse Engineering from Machine to C code

By David Nader Palacio


Proposal & Milestone

- 433