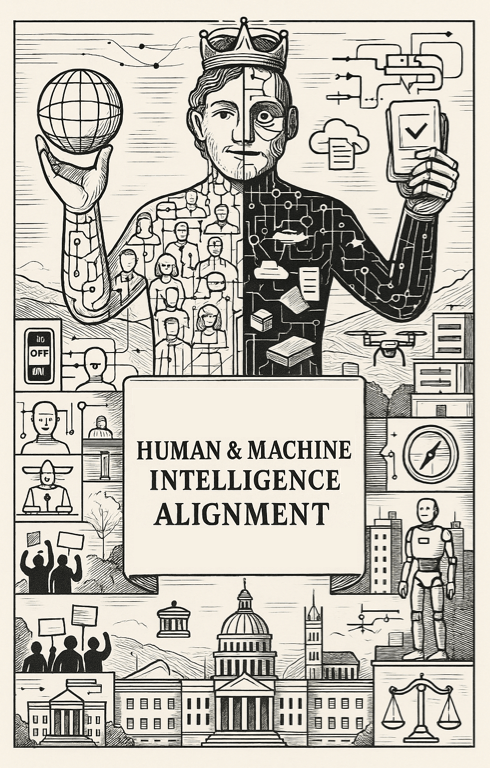

Hierarchy

HMIA 2025


TRIVIAL COOPERATION: shared goals and information - pick a goal and execute

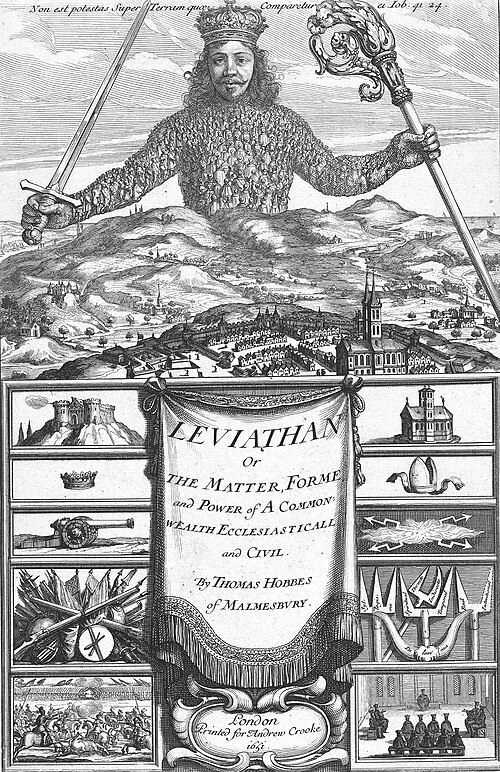

Hobbes' observation: scarcity + similar agents → competition → life is nasty, brutish, & short

Hobbes' fix: cede sovereignty to boss with credible enforcement. Command→order

Command Failure Mode (preferences)

Agents retain autonomy→effort substitution & selective obedience

Command Failure Mode (information)

Orders incomplete & ambiguous, environments shift.

Principals and Agents

From commands to contracts. Alignment by design: selection, monitoring, incentives to align autonomy with the principal's goals.

Agent as RL learner.

Naked RL is a clean micro-model: the agent updates a policy to maximize rewards.

Goodhart risk: when m(·) omits what drives V(·), maximizing T(m(a)) reduces Us. The results: gaming, reward hacking, short-termism.

Requires governance and guardrails: lagged, hard-to-game proxies; HITL overrides; team rewards; culture; the "alignment stack."

Incentives are transfers on signals.

As soon as behavior is driven by T(m(a)), the problem is no longer obedience; it’s measurement.

But T(m(a)) is always a lossy compression of what matters.


TRIVIAL COOPERATION: shared goals and information - pick a goal and execute

Hobbes' observation

scarcity + similar agents → competition → life is nasty, brutish, & short


Hobbes' Fix

cede sovereignty to boss with credible enforcement.

Command→order


Command Failure Mode (preferences)

Agents retain autonomy→effort substitution & selective obedience

Command Failure Mode (information)

Orders incomplete & ambiguous, environments shift.

Obedience Failure Modes

"THE" Principals and Agents Problem

Suppose P needs some help realizing their goals and objectives

A could provide help, but P and A care about different things

Read: what A gets out of assisting P is not the same as what P gets out of having the help.

From commands to contracts. Alignment by design: selection, monitoring, incentives to align autonomy with principal's goals.

Principal's Challenge: design an intervention that aligns their utilities

You want "do the work I need" to be the agent's "best option."
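One standard textbook way to write the principal's challenge, offered as a sketch: the labels U_A (the agent's own utility), V (the principal's value from the work), a_P (the action the principal wants), and \bar{u}_A (the agent's outside option) are our assumptions and may not match the equations used in class.

```latex
\begin{aligned}
a^{*} &= \arg\max_{a}\ \big[\,U_A(a) + T(m(a))\,\big] && \text{(the agent picks its best option)}\\
a^{*} &= a_P && \text{(incentive compatibility: the work } P \text{ needs is } A\text{'s best option)}\\
U_A(a_P) + T(m(a_P)) &\ge \bar{u}_A && \text{(participation: } A \text{ prefers the job to its outside option)}\\
U_P &= V(a^{*}) - T(m(a^{*})) && \text{(what } P \text{ nets after paying the transfer)}
\end{aligned}
```

The design lever is the transfer schedule T(·); the principal can only condition it on the signal m(a), not on a itself.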


To get this agent to work for us, we need to sweeten things

An ordinary RL agent as our model

The agent takes action a in state s and garners a reward; that is, it has some utility.

Add a transfer based on the behavior
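A minimal sketch of "an ordinary RL agent plus a transfer," assuming a one-step (bandit) setting with two actions. The utilities, the transfer amount, and the names `AGENT_UTILITY`, `pay_for_work`, and `learn_policy` are hypothetical. To keep it simple, the transfer here conditions on the action itself; real transfers usually depend on a noisier signal m(a).

```python
# Hypothetical bandit-style RL agent: values, transfer, and learning rate
# are illustrative assumptions, not numbers from the lecture.

AGENT_UTILITY = {"work": 1.0, "shirk": 3.0}  # A's own utility: shirking feels better


def no_transfer(action: str) -> float:
    return 0.0


def pay_for_work(action: str) -> float:
    # Transfer based on behavior; assumes the action is perfectly observed.
    return 4.0 if action == "work" else 0.0


def learn_policy(transfer, episodes: int = 200, lr: float = 0.1) -> str:
    """Learn action values from reward = own utility + transfer; return the greedy action."""
    q = {a: 0.0 for a in AGENT_UTILITY}
    for _ in range(episodes):
        for action in q:  # round-robin exploration: try every action each episode
            reward = AGENT_UTILITY[action] + transfer(action)
            q[action] += lr * (reward - q[action])  # incremental value update
    return max(q, key=q.get)


print(learn_policy(no_transfer))   # shirk
print(learn_policy(pay_for_work))  # work
```

The agent never "obeys"; it simply learns that working now pays more (1 + 4) than shirking (3).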


STOP+THINK: What are some common ways humans design T(a_A)?

- Pay for time

- Piece rates: pay per widget

- Performance bonus above a threshold

- Promotion (but delayed and fuzzy)

In AI/RL we call this "reward shaping": tweaking the reward signal to get the desired behavior.
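The pay schemes listed above can be sketched as transfer functions, each keyed to a different observable signal. The wage, rate, threshold, and bonus values are made-up illustrations, and the function names are hypothetical.

```python
# Hypothetical transfer schedules T(.) for common pay schemes.
# All rates and thresholds are illustrative assumptions.


def pay_for_time(hours: float, wage: float = 20.0) -> float:
    return wage * hours  # signal: hours present; effort itself is unmeasured


def piece_rate(widgets: int, rate: float = 2.5) -> float:
    return rate * widgets  # signal: countable output


def bonus_above_threshold(widgets: int, threshold: int = 100, bonus: float = 150.0) -> float:
    return bonus if widgets >= threshold else 0.0  # all-or-nothing at the threshold


for w in (99, 100, 140):
    print(w, piece_rate(w), bonus_above_threshold(w))
# 99 247.5 0.0
# 100 250.0 150.0
# 140 350.0 150.0
```

Each shape rewards something different: the threshold bonus pays nothing extra between 100 and 140 widgets but jumps sharply at 100, which is exactly the kind of shaped reward an agent may learn to game.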

Kerr's Argument


Incentives are transfers on signals.

T, the incentive, is based on an observable signal m(a) of the agent's action a.

STOP+THINK: How do we read these equations?

STOP+THINK: What is an everyday example of credit assignment problem?
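A toy sketch of why credit assignment matters once T depends on a shared signal, under assumed numbers: the principal sees only team output (the sum of efforts) and splits a team reward equally. The function names and effort values are hypothetical.

```python
# Hypothetical credit assignment toy: only the team total is observable,
# so every agent receives the same share. Numbers are illustrative.


def equal_split_transfers(efforts: list[float], pool_rate: float = 1.0) -> list[float]:
    team_output = sum(efforts)  # the only observable signal m(a)
    share = pool_rate * team_output / len(efforts)
    return [share] * len(efforts)  # everyone gets the same credit


def marginal_return(efforts: list[float], i: int, delta: float = 1.0) -> float:
    """Extra transfer agent i earns from `delta` more units of its own effort."""
    bumped = list(efforts)
    bumped[i] += delta
    return equal_split_transfers(bumped)[i] - equal_split_transfers(efforts)[i]


team = [5.0, 5.0, 0.0, 5.0]  # agent 2 contributes nothing
print(equal_split_transfers(team))  # [3.75, 3.75, 3.75, 3.75]
print(marginal_return(team, 0))     # 0.25
```

The idle agent is credited the same as everyone else, and each extra unit of effort earns its contributor only 1/n of its value: the free-rider pressure that team rewards have to manage.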


As soon as behavior is driven by T(m(a)), the problem is no longer obedience; it’s measurement.

T(m(a)) is always a lossy compression of what matters.
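To make "lossy compression" concrete, here is a hypothetical retail sketch: an action has two features the principal cares about, but the signal m(·) keeps only one. The `Action` fields and the form of V are illustrative assumptions.

```python
# Hypothetical example: m(.) compresses a two-dimensional action into one number.
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    units_sold: int      # easy to observe and count
    advice_quality: int  # 0..10; matters to the principal but is hard to observe


def m(a: Action) -> int:
    """The observable signal: keeps units sold, drops advice quality."""
    return a.units_sold


def V(a: Action) -> int:
    """Assumed principal value: sales are worth more when the advice was good."""
    return a.units_sold * (5 + a.advice_quality)


careful = Action(units_sold=10, advice_quality=9)
pushy = Action(units_sold=10, advice_quality=1)
print(m(careful) == m(pushy))  # True: same signal, so any T(m(a)) pays them the same
print(V(careful), V(pushy))    # 140 60
```

Whatever T is, it cannot tell these two actions apart, even though the principal values one more than twice as much as the other.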

STOP+THINK: What does that mean?

Goodhart's Law: "when a measure becomes a target, it ceases to be a good measure"
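A toy sketch of Goodhart's law under stated assumptions: an agent splits a fixed effort budget between speed (measured) and care (unmeasured). The transfer pays only on m(a) = speed, while the assumed true value V needs both. Budget, pay rate, and V = speed × care are all illustrative choices.

```python
# Hypothetical Goodhart sketch: optimizing the measure vs. optimizing the value.
BUDGET = 10


def m(speed: int, care: int) -> int:
    return speed  # the measure sees only speed; care is ignored


def T(signal: int, rate: int = 3) -> int:
    return rate * signal  # transfer on the signal


def V(speed: int, care: int) -> int:
    return speed * care  # assumed: rushed work with no care is worth nothing


splits = [(s, BUDGET - s) for s in range(BUDGET + 1)]
agent_choice = max(splits, key=lambda sc: T(m(*sc)))  # maximizes T(m(a))
principal_best = max(splits, key=lambda sc: V(*sc))   # maximizes V(a)
print(agent_choice, V(*agent_choice))      # (10, 0) 0
print(principal_best, V(*principal_best))  # (5, 5) 25
```

Once the measure becomes the target, the agent pours everything into speed and the principal's value collapses to zero.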

STOP+THINK: Examples of measure becoming a target?

STOP+THINK: What does that mean?


Takeaway

Hierarchy (alignment by command and incentive) requires:

- governance and guardrails;

- lagged, hard-to-game proxies;

- human-in-the-loop (HITL) overrides;

- team rewards;

- culture;

- the whole "alignment stack."

Example: Working Retail

tl;sc

managing humans in retail might be easier in some ways than managing robots in retail

STOP+THINK: If I am a retail company and I hire you for my store, what is my utility?

STOP+THINK: What signals about your work actions does your manager have?

STOP+THINK: What rewards do you respond to in the course of your job?

- Internalization (norms of politeness, honesty): Values like honesty and helpfulness have been learned long before the job starts. (hiring)

- Feedback Loops (enjoyment of helping): Smiles and gratitude from customers reinforce the behavior emotionally. (organizational culture)

- Peer Signaling: Co-workers' approval or disapproval regulates over-performance and conformity. (organizational culture, hiring)

- Managerial Oversight: Rules, checklists, and performance targets structure effort and attention. (organizational routines)

- Cultural Framing: The meaning of "good service" or "professionalism" is stabilized through shared language, training videos, and informal stories. (organizational culture)

STOP+THINK: Besides pay and reviews based on stats, what might contribute to variance in the a_A you deliver?

STOP+THINK: Which of these levers and influences are or are not available if we want to use robots in retail?

- Internalization (norms of politeness, honesty)

- Feedback Loops (enjoyment of helping)

- Peer Signaling

- Managerial Oversight

- Cultural Framing

| Mechanism | ML Analogy | Availability | Approximation | Alignment Challenge | Guardrails |
|---|---|---|---|---|---|
| Internalization | Value embedding | Partially | Hard constraints (no deceitful claims); constitutional rules (“prefer safe/transparent acts”); escalation policies | How to represent and update social norms that are situational and culturally coded | Periodic red-team tests; human review of edge cases; log-and-explain decisions |
| Feedback loops | RL signals | Yes (risky) | Pair short-term CSAT with lagged outcomes (30-day returns, complaint rate, repeat purchases) | Avoid “reward hacking” (e.g., maximizing smiles/positive tone without real help) | Penalize “star-begging” patterns; randomize survey prompts; weight durable outcomes more than immediate smiles |
| Peer signaling | Multi-agent coordination | Partially | Shared queue health; handoff quality; “assist” events credited to both giver and receiver | Maintain cooperation without competition for metrics | Team-level rewards for cooperation; cap individual metrics that encourage hoarding easy cases |
| Managerial oversight | Monitoring & human-in-the-loop | Yes | Real-time dashboards; safe-interrupt (“stop/ask human”); audit trails | Ensure corrigibility: robot accepts override and interprets feedback appropriately | Reward acceptance of human override (no penalty for deferring); require explanations for high-impact actions |
| Cultural framing | Brand policy | Yes (with care) | Style guides → operational checks (offer alternatives, confirm understanding, follow-up reminders) | Translate vague brand values (“friendly,” “helpful,” “authentic”) into operational behavior | Calibrate on customer narratives, not just tone scores; fairness audits across customer segments |
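A minimal sketch of the "Feedback loops" row: blend the immediate CSAT score with lagged outcomes (30-day returns, complaints, repeat purchases), weight the durable part more heavily, and penalize star-begging. The `Interaction` fields, the weights, and the penalty size are hypothetical choices for illustration, not a tested design.

```python
# Hypothetical shaped reward for a retail service robot.
# Field names, weights, and penalty are illustrative assumptions.
from dataclasses import dataclass


@dataclass
class Interaction:
    csat: float            # immediate post-interaction satisfaction, 0..1
    returned_30d: bool     # lagged: item returned within 30 days
    complaint: bool        # lagged: complaint filed later
    repeat_purchase: bool  # lagged: customer came back
    asked_for_stars: bool  # "star-begging" detected in the transcript


def shaped_reward(x: Interaction, w_now: float = 0.25, w_later: float = 0.75,
                  begging_penalty: float = 0.5) -> float:
    # Durable outcome score in [0, 1]: each lagged signal contributes a third.
    durable = ((not x.returned_30d) + (not x.complaint) + x.repeat_purchase) / 3
    r = w_now * x.csat + w_later * durable  # durable outcomes weigh more than smiles
    if x.asked_for_stars:
        r -= begging_penalty  # gaming the survey should not pay
    return r


charming = Interaction(csat=1.0, returned_30d=True, complaint=True,
                       repeat_purchase=False, asked_for_stars=True)
helpful = Interaction(csat=0.6, returned_30d=False, complaint=False,
                      repeat_purchase=True, asked_for_stars=False)
print(shaped_reward(charming) < shaped_reward(helpful))  # True
```

The "charming" interaction maxes out the smile score but still loses, because the lagged signals are harder to game than tone.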

Example: Experts

tl;sc

experts require regulation beyond incentives

STOP+THINK: What does society hope for from expert intelligence?

Society (the principal) wants competent disinterested expertise that advances the public welfare.

STOP+THINK: What does society reward?

Above-average pay; autonomy, exclusivity, status.

STOP+THINK: What can go wrong?

Guild interest, protection of low performers, exploitation based on knowledge asymmetry, conflicts of interest.

STOP+THINK: What do we do?

Meta-governance: accreditation, standardized exams, continuing education, peer review.

Liability and accountability: malpractice, negligence standards, fiduciary duties, whistleblower protection.

Incentive hygiene: conflict-of-interest rules, disclosure requirements, separation of roles, rotation, cooling-off periods.

Measurement and learning: registries, outcome tracking, incident reporting, public dashboards (outcomes, not outputs).


CLASS



NEXT Markets

HMIA 2025 Hierarchy

By Dan Ryan
