Kubernetes Workshop

github.com/eon01/kubernetes-workshop

Requirements

- Python 3.6.7

- python3-pip

- virtualenvwrapper

- Flask

- Minikube

- Github personal access tokens

- Text editor

# app.py

from flask import Flask, jsonify, abort, request
import urllib.request, json, os
from github import Github

app = Flask(__name__)

CLIENT_ID = os.environ['CLIENT_ID']
CLIENT_SECRET = os.environ['CLIENT_SECRET']
# Environment variables are always strings, so convert them explicitly:
# the string "False" would otherwise be truthy, and the port must be an integer.
DEBUG = os.environ['DEBUG'] == 'True'
HOST = os.environ['HOST']
PORT = int(os.environ['PORT'])

g = Github(CLIENT_ID, CLIENT_SECRET)

@app.route('/')
def get_repos():
    r = []
    try:
        args = request.args
        n = int(args['n'])
        l = args['l']
    except (ValueError, LookupError) as e:
        abort(jsonify(error="Please provide 'n' and 'l' parameters"))

    # Search GitHub for the first n repositories written in language l
    repositories = g.search_repositories(query='language:' + l)[:n]

    try:
        for repo in repositories:
            with urllib.request.urlopen(repo.url) as url:
                data = json.loads(url.read().decode())
            r.append(data)
        return jsonify({
            'repos': r,
            'status': 'ok'
        })
    except IndexError as e:
        return jsonify({
            'repos': r,
            'status': 'ko'
        })

if __name__ == '__main__':
    app.run(debug=DEBUG, host=HOST, port=PORT)

# .env

CLIENT_ID="xxxx"

CLIENT_SECRET="xxxx"

ENV="dev"

DEBUG="True"

HOST="0.0.0.0"

PORT=5000

# Dockerfile

FROM python:3

ENV PYTHONUNBUFFERED 1

RUN adduser pyuser

RUN mkdir /app

WORKDIR /app

COPY requirements.txt /app

RUN pip install --upgrade pip

RUN pip install -r requirements.txt

COPY . .

RUN chmod +x app.py

RUN chown -R pyuser:pyuser /app

USER pyuser

EXPOSE 5000

CMD ["gunicorn", "app:app", "--config=config.py"]

# config.py

import multiprocessing

workers = multiprocessing.cpu_count() * 2 + 1

threads = 2 * multiprocessing.cpu_count()

from os import environ as env

PORT = int(env.get("PORT", 5000))

# Build

docker build -t tgr .

# Run

docker run -it --env-file .env -p 5000:5000 tgr

# Login to Docker hub

docker login

# Build and push

docker build -t eon01/tgr:1 . && docker push eon01/tgr:1

# Security / .dockerignore:

**.git

**.gitignore

**README.md

**env.*

**Dockerfile*

**docker-compose*

**.env

# Reset your tokens, rebuild and push

docker build -t eon01/tgr:1 . --no-cache && docker push eon01/tgr:1

Deploying a K8s (Minikube) Cluster

# Start a cluster

minikube start -p workshop --extra-config=apiserver.enable-swagger-ui=true --alsologtostderr

# Check it

kubectl cluster-info

Deploying to Kubernetes

ReplicationController

apiVersion: v1
kind: ReplicationController
metadata:
  name: app
spec:
  replicas: 3
  selector:
    app: app
  template:
    metadata:
      name: app
      labels:
        app: app
    spec:
      containers:
      - name: tgr
        image: reg/app:v1
        ports:
        - containerPort: 80

ReplicaSet

apiVersion: extensions/v1beta1
kind: ReplicaSet
metadata:
  name: app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: app
  template:
    metadata:
      labels:
        app: app
        environment: dev
    spec:
      containers:
      - name: app
        image: reg/app:v1
        ports:
        - containerPort: 80

RS vs RC

- Replica Set and Replication Controller do almost the same thing. They ensure that a specified number of pod replicas is running at any given time in your cluster.

- There are, however, some differences:

  - We are using `matchLabels` instead of listing the labels directly under `selector`.

  - Replica Sets support set-based selectors, while Replication Controllers only support equality-based selectors.

Selectors match Kubernetes objects (like pods) using the constraints of the specified labels.

Label selectors with equality-based requirements use three operators: `=`, `==` and `!=`

environment = production

tier != frontend

app == my_app (similar to app = my_app)

In the last example, we used this notation:

...
spec:
  replicas: 3
  selector:
    matchLabels:
      app: app
  template:
    metadata:
...

We could have used set-based requirements:

...
spec:
  replicas: 3
  selector:
    matchExpressions:
    - {key: app, operator: In, values: [app]}
  template:
    metadata:
...

If we have more than one value for the app key, we can use:

...
spec:
  replicas: 3
  selector:
    matchExpressions:
    - {key: app, operator: In, values: [app, my_app, myapp, application]}
  template:
    metadata:
...

And if we have other keys, we can use them like in the following example:

...
spec:
  replicas: 3
  selector:
    matchExpressions:
    - {key: app, operator: In, values: [app]}
    - {key: tier, operator: NotIn, values: [frontend]}
    - {key: environment, operator: NotIn, values: [production]}
  template:
    metadata:
...

Newer Kubernetes resources such as Jobs, Deployments, ReplicaSets, and DaemonSets all support set-based requirements as well.
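To make the selector semantics above concrete, here is a minimal Python sketch of how a label set could be checked against `matchLabels` and `matchExpressions`. The function name `matches` and the dictionary layout are assumptions made for illustration; this is not the Kubernetes implementation, only a model of the rules (all requirements are ANDed, and `NotIn` is also satisfied when the key is absent).

```python
from typing import Dict, List, Optional


def matches(labels: Dict[str, str],
            match_labels: Optional[Dict[str, str]] = None,
            match_expressions: Optional[List[dict]] = None) -> bool:
    """Return True if `labels` satisfy every requirement (hypothetical helper)."""
    # Equality-based part: each key must be present with exactly this value.
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    # Set-based part: each expression must hold as well.
    for expr in match_expressions or []:
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            # A missing key also satisfies NotIn.
            ok = labels.get(key) not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


pod = {"app": "app", "tier": "backend", "environment": "dev"}
selector = [
    {"key": "app", "operator": "In", "values": ["app"]},
    {"key": "tier", "operator": "NotIn", "values": ["frontend"]},
    {"key": "environment", "operator": "NotIn", "values": ["production"]},
]
print(matches(pod, match_expressions=selector))  # True
print(matches({"app": "app", "tier": "frontend"}, match_expressions=selector))  # False
```

The second call fails only because of the `tier NotIn [frontend]` requirement, which shows that one unmet expression is enough to exclude a pod.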

This is an example of how we use Kubectl with selectors

kubectl delete pods -l 'env in (production, staging, testing)'

Until now, we have seen that the Replication Controller and Replica Set are two ways to deploy our container and manage it in a Kubernetes cluster.

The recommended way is using a Deployment that configures a ReplicaSet.

It is rather unlikely that we will ever need to create Pods directly for a production use case, since Deployments manage the creation of Pods for us by means of ReplicaSets.

apiVersion: v1
kind: Pod
metadata:
  name: infinite
  labels:
    env: production
    owner: eon01
spec:
  containers:
  - name: infinite
    image: eon01/infinite

In practice, we need:

- A Deployment object: Containers are specified here.

- A Service object: An abstract way to expose an application running on a set of Pods as a network service.

This is a Deployment object that creates 3 replicas of the container app running the image "reg/app:v1". These containers can be reached using port 80:

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: app
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: app
    spec:
      containers:
      - name: app
        image: reg/app:v1
        ports:
        - containerPort: 80

This is the Deployment file we will use (save it to kubernetes/api-deployment.yaml):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tgr
  labels:
    name: tgr
spec:
  replicas: 1
  selector:
    matchLabels:
      name: tgr
  template:
    metadata:
      name: tgr
      labels:
        name: tgr
    spec:
      containers:
      - name: tgr
        image: eon01/tgr:1
        ports:
        - containerPort: 5000
        resources:
          requests:
            memory: 128Mi
          limits:
            memory: 256Mi
        env:
        - name: CLIENT_ID
          value: "xxxx"
        - name: CLIENT_SECRET
          value: "xxxxxxxxxxxxxxxxxxxxx"
        - name: ENV
          value: "prod"
        - name: DEBUG
          value: "False"
        - name: HOST
          value: "0.0.0.0"
        - name: PORT
          value: "5000"

Kubernetes API Version

Let's first talk about the API version. In the first example, we used extensions/v1beta1, and in the second one, we used apps/v1.

Kubernetes project development is very active and it may be confusing sometimes to follow all the software updates.

In Kubernetes version 1.9, apps/v1 was introduced, and extensions/v1beta1, apps/v1beta1 and apps/v1beta2 were deprecated.

To make things simpler, in order to know which version of the API you need to use, use the command:

kubectl api-versions

admissionregistration.k8s.io/v1beta1

apiextensions.k8s.io/v1beta1

apiregistration.k8s.io/v1

apiregistration.k8s.io/v1beta1

apps/v1

apps/v1beta1

apps/v1beta2

authentication.k8s.io/v1

authentication.k8s.io/v1beta1

authorization.k8s.io/v1

authorization.k8s.io/v1beta1

autoscaling/v1

autoscaling/v2beta1

autoscaling/v2beta2

batch/v1

batch/v1beta1

certificates.k8s.io/v1beta1

coordination.k8s.io/v1

coordination.k8s.io/v1beta1

events.k8s.io/v1beta1

extensions/v1beta1

getambassador.io/v1

networking.k8s.io/v1

networking.k8s.io/v1beta1

node.k8s.io/v1beta1

policy/v1beta1

rbac.authorization.k8s.io/v1

rbac.authorization.k8s.io/v1beta1

scheduling.k8s.io/v1

scheduling.k8s.io/v1beta1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

- v1 was the first stable release of the Kubernetes API. It contains many core objects.

- apps/v1 is the most common API group in Kubernetes and it includes functionality related to running applications on Kubernetes, like Deployments, RollingUpdates, and ReplicaSets.

- autoscaling/v1 allows pods to be autoscaled based on different resource usage metrics.

- batch/v1 is related to batch processing and jobs.

- batch/v1beta1 is the beta release of batch/v1.

- certificates.k8s.io/v1beta1 validates network certificates for secure communication in your cluster.

- extensions/v1beta1 includes many new, commonly used features. In Kubernetes 1.6, some of these features were relocated from extensions to specific API groups like apps.

- policy/v1beta1 enables setting a pod disruption budget and new pod security rules.

- rbac.authorization.k8s.io/v1 includes extra functionality for Kubernetes RBAC (role-based access control).

- etc.

Imperative vs Declarative

kubectl create -f kubernetes/api-deployment.yaml

kubectl apply -f kubernetes/api-deployment.yaml

kubectl create is what we call Imperative Management of Kubernetes Objects Using Configuration Files. kubectl create operates on the whole object as defined in the file, and if a resource with the same name already exists, the command fails with an error.

Using this approach, you tell the Kubernetes API what you want to create, replace or delete, not what you want your K8s cluster to look like.

kubectl apply is what we call Declarative Management of Kubernetes Objects Using Configuration Files. kubectl apply makes incremental changes. If an object already exists and you want to apply a new value for replicas without deleting and recreating the object, then kubectl apply is what you need. kubectl apply can also be used even if the object (e.g. a Deployment) does not exist yet.
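The difference between the two approaches can be illustrated with a toy model in Python. This is only a sketch under strong assumptions: the `cluster` dictionary, the `create` and `apply` functions and the `AlreadyExists` exception are hypothetical names, and the real kubectl apply performs a more elaborate merge than the simple dictionary update shown here.

```python
class AlreadyExists(Exception):
    """Raised by create() when the object is already stored (hypothetical)."""


cluster = {}  # toy object store: object name -> spec


def create(name, spec):
    # Imperative: create the object as given, fail if it already exists.
    if name in cluster:
        raise AlreadyExists(name)
    cluster[name] = dict(spec)


def apply(name, spec):
    # Declarative: create the object if needed, otherwise update only
    # the fields present in the new spec and keep the others.
    cluster.setdefault(name, {}).update(spec)


create("tgr", {"replicas": 1, "image": "eon01/tgr:1"})
try:
    create("tgr", {"replicas": 2, "image": "eon01/tgr:1"})
except AlreadyExists:
    print("create failed: tgr already exists")

apply("tgr", {"replicas": 2})
print(cluster["tgr"])  # {'replicas': 2, 'image': 'eon01/tgr:1'}
```

In this model, the second create call fails while apply changes the replica count in place and leaves the image untouched.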

Using a Private Container Registry

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tgr
  labels:
    name: tgr
spec:
  replicas: 1
  selector:
    matchLabels:
      name: tgr
  template:
    metadata:
      name: tgr
      labels:
        name: tgr
    spec:
      # imagePullSecrets belongs to the Pod spec, not to a container
      imagePullSecrets:
      - name: registry-credentials
      containers:
      - name: tgr
        image: private/tgr:1
        ports:
        - containerPort: 5000
        resources:
          requests:
            memory: 128Mi
          limits:
            memory: 256Mi
        env:
        - name: CLIENT_ID
          value: "xxxx"
        - name: CLIENT_SECRET
          value: "xxxxxxxxxxxxxxxxxxxxx"
        - name: ENV
          value: "prod"
        - name: DEBUG
          value: "False"
        - name: HOST
          value: "0.0.0.0"
        - name: PORT
          value: "5000"

This is how the registry-credentials secret is created:

kubectl create secret docker-registry registry-credentials \

--docker-server=<your-registry-server> \

--docker-username=<your-name> \

--docker-password=<your-pword> \

--docker-email=<your-email>

You can also apply/create the registry-credentials using a YAML file.

apiVersion: v1
kind: Secret
metadata:
  ...
  name: registry-credentials
  ...
data:
  .dockerconfigjson: adjAalkazArrA ... JHJH1QUIIAAX0=
type: kubernetes.io/dockerconfigjson

If you decode the .dockerconfigjson value using the base64 --decode command, you will see that it's a simple file storing the configuration to access a registry:

kubectl get secret registry-credentials --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

Output:

{"auths":{"your.private.registry.domain.com":{"username":"eon01","password":"xxxxxxxxxxx","email":"aymen@eralabs.io","auth":"dE3xxxxxxxxx"}}}

Again, let's decode the "auth" value:

echo "dE3xxxxxxxxx" | base64 --decode

# output:

eon01:xxxxxxxx

Scaling Deployment

This command will show all the pods within the cluster's default namespace.

kubectl get pods

We can scale our deployment using a command similar to the following one:

kubectl scale --replicas=<expected_replica_num> deployment <deployment_name>

Our deployment is called tgr since it's the name we gave to it in the Deployment configuration. You can also verify this by typing kubectl get deployment. Let's scale it:

kubectl scale --replicas=2 deployment tgr

Each of these containers will be accessible on port 5000 from outside the container but not from outside the cluster.

The number of pods/containers running for our API can be variable and may change dynamically.
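The way the number of running pods follows the requested replica count can be pictured as a reconciliation step. The following Python sketch is an assumption-laden illustration, not the controller's actual code: the function `reconcile` and the pod names `tgr-a`, `tgr-b`, `tgr-c` are hypothetical.

```python
def reconcile(desired_replicas, running_pods):
    """Return (number of pods to start, list of pods to stop)."""
    missing = desired_replicas - len(running_pods)
    if missing >= 0:
        # Not enough pods (or exactly enough): start the missing ones.
        return missing, []
    # Too many pods: stop the surplus (here simply the last ones in the list).
    return 0, running_pods[desired_replicas:]


# Scaling from 1 to 2 replicas: one more pod has to be started.
print(reconcile(2, ["tgr-8d78d599f-pt5xx"]))  # (1, [])
# Scaling down from 3 to 1 replica: two pods have to be stopped.
print(reconcile(1, ["tgr-a", "tgr-b", "tgr-c"]))  # (0, ['tgr-b', 'tgr-c'])
```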

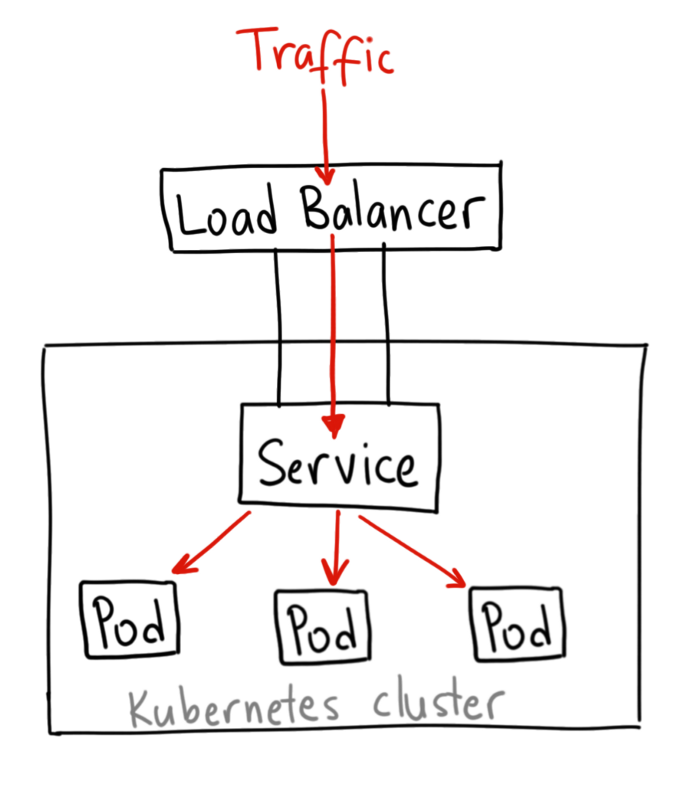

We can set up a load balancer that will balance traffic between the two pods we created, but since each pod can disappear to be recreated, its hostname and address will change.

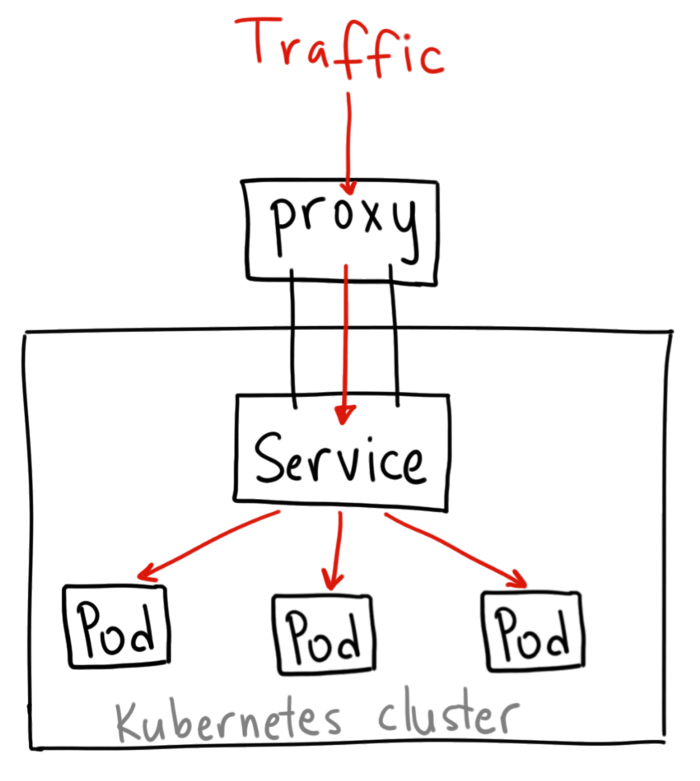

In all cases, pods are not meant to receive traffic directly but they need to be exposed to traffic using a Service. In other words, the set of Pods running in one moment in time could be different from the set of Pods running that application a moment later.

At the moment, the only service running is the cluster IP (which is related to Minikube and gives us access to the cluster we created):

kubectl get services

Services

In Kubernetes, since Pods are mortal, we need an abstraction that defines a logical set of Pods and how to access them. This is the role of Services.
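As a rough mental model, a Service can be seen as a stable definition (a selector plus ports) whose list of backends is recomputed from whatever Pods currently carry the matching labels. The sketch below assumes a hypothetical `Service` class and made-up Pod IP addresses; it only illustrates the idea and does not reflect how kube-proxy or the endpoints controller are implemented.

```python
class Service:
    """Toy model of a Service: a label selector plus a port mapping."""

    def __init__(self, name, selector, port, target_port):
        self.name = name
        self.selector = selector
        self.port = port
        self.target_port = target_port

    def endpoints(self, pods):
        # Keep only the pods whose labels contain every selector entry.
        return [
            f"{pod['ip']}:{self.target_port}"
            for pod in pods
            if all(pod["labels"].get(k) == v for k, v in self.selector.items())
        ]


lb = Service("lb", {"name": "tgr"}, port=80, target_port=5000)

pods = [
    {"ip": "172.17.0.7", "labels": {"name": "tgr"}},
    {"ip": "172.17.0.8", "labels": {"name": "tgr"}},
    {"ip": "172.17.0.4", "labels": {"service": "ambassador"}},
]
print(lb.endpoints(pods))  # ['172.17.0.7:5000', '172.17.0.8:5000']

# A pod is recreated with a new address: the Service definition is unchanged,
# only the computed list of endpoints differs.
pods[0] = {"ip": "172.17.0.9", "labels": {"name": "tgr"}}
print(lb.endpoints(pods))  # ['172.17.0.9:5000', '172.17.0.8:5000']
```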

In our case, creating a load balancer is a suitable solution. This is the configuration file of a Service object that will listen on port 80 and load-balance traffic to the Pods with the label name equal to tgr.

The latter are accessible internally using port 5000, as defined in the Deployment configuration:

...
ports:
- containerPort: 5000
...

This is what the Service looks like:

apiVersion: v1
kind: Service
metadata:
  name: lb
  labels:
    name: lb
spec:
  ports:
  - port: 80
    targetPort: 5000
  selector:
    name: tgr
  type: LoadBalancer

Save this file to kubernetes/api-service.yaml and deploy it using kubectl apply -f kubernetes/api-service.yaml. If you type kubectl get service, you will get the list of Services running in our local cluster:

NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1       <none>        443/TCP        51m
lb           LoadBalancer   10.99.147.117   <pending>     80:30546/TCP   21s

Note that the kubernetes ClusterIP Service does not have an external IP, while the external IP of the lb Service is pending. There is no need to wait for it: Minikube does not really deploy a load balancer, and this feature only works if you configure a load balancer provider.

If you are using a cloud provider, say AWS, an AWS load balancer will be set up for you; GKE will provide a Cloud Load Balancer, etc. You may also configure other types of load balancers.

There are different types of Services that we can use to expose the API:

ClusterIP: This is the default Kubernetes Service type. It exposes the Service on a cluster-internal IP, and you can access it using the Kubernetes proxy.

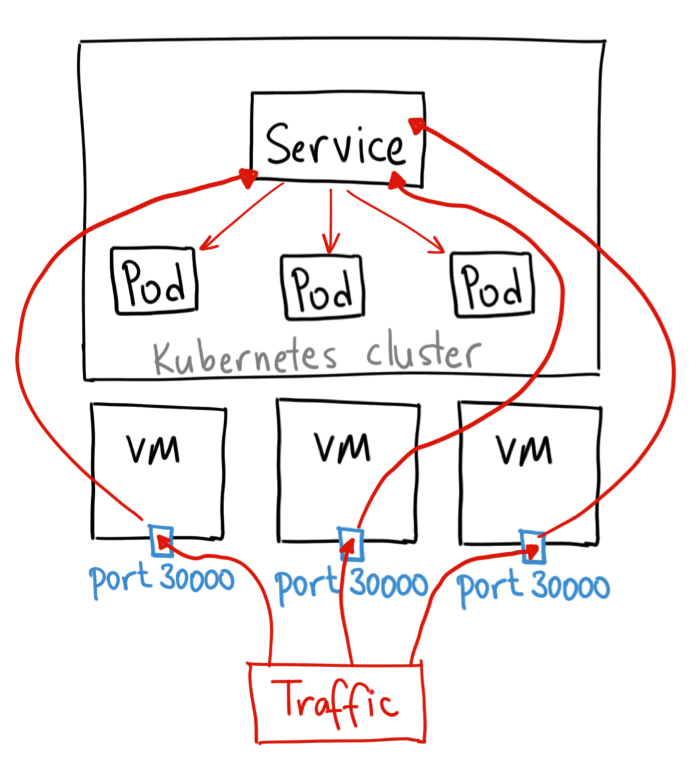

NodePort: Exposes the Service on each Node’s (VM's) IP at a static port called the NodePort. (In our example, we have a single node). This is a primitive way to make an application accessible from outside the cluster and is not suitable for many use cases since your nodes (VMs) IP addresses may change at any time. The service is accessible using <NodeIP>:<NodePort>.

LoadBalancer: This is more advanced than a NodePort Service. Usually, a Load Balancer exposes a Service externally using a cloud provider’s load balancer. NodePort and ClusterIP Services, to which the external load balancer routes, are automatically created.

We created a Load Balancer using a Service on our Minikube cluster, but since we don't have a Load Balancer to run, we can access the API service using the Minikube node IP followed by the NodePort assigned to the Service:

minikube -p workshop ip

Output:

192.168.99.100

Now execute kubectl get services:

NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1       <none>        443/TCP        51m
lb           LoadBalancer   10.99.147.117   <pending>     80:30546/TCP   21s

Use the IP 192.168.99.100 followed by the port 30546 to access the API.

You can test this using a curl command:

curl "http://192.168.99.100:30546/?l=python&n=1"
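The same request can be prepared from Python. The sketch below only builds the URL from the node IP, the NodePort and the two query parameters expected by the API; the helper names `build_url` and `fetch_repos` are assumptions for illustration, and `fetch_repos` is defined but not called because it needs a running cluster.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_url(node_ip, node_port, language, count):
    """Build the API URL for <NodeIP>:<NodePort> with the l and n parameters."""
    query = urlencode({"l": language, "n": count})
    return f"http://{node_ip}:{node_port}/?{query}"


def fetch_repos(node_ip, node_port, language, count):
    """Call the API and return the decoded JSON body (requires a reachable cluster)."""
    with urllib.request.urlopen(build_url(node_ip, node_port, language, count)) as resp:
        return json.loads(resp.read().decode())


print(build_url("192.168.99.100", 30546, "python", 1))
# http://192.168.99.100:30546/?l=python&n=1
```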

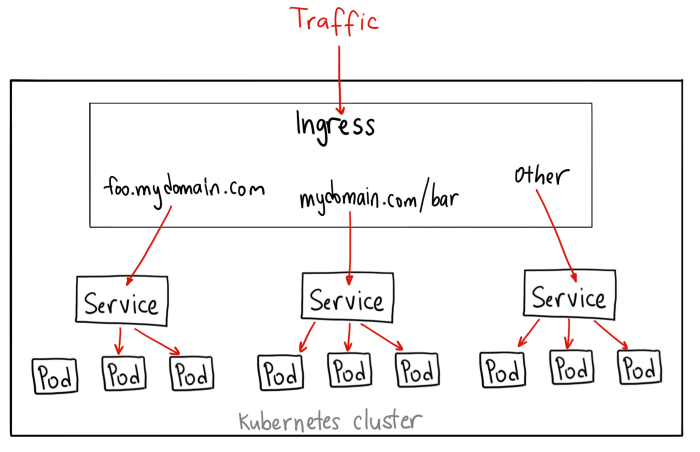

Drawbacks of the Load Balancer Service

Typically, load balancers are provisioned by the Cloud provider you're using.

A load balancer handles one Service. If you have 10 Services, each one needs its own load balancer, and this is when it becomes costly.

The best solution, in this case, is setting up an Ingress controller that acts as a smart router and can be deployed at the edge of the cluster, therefore in front of all the services you deploy.

An API Gateway

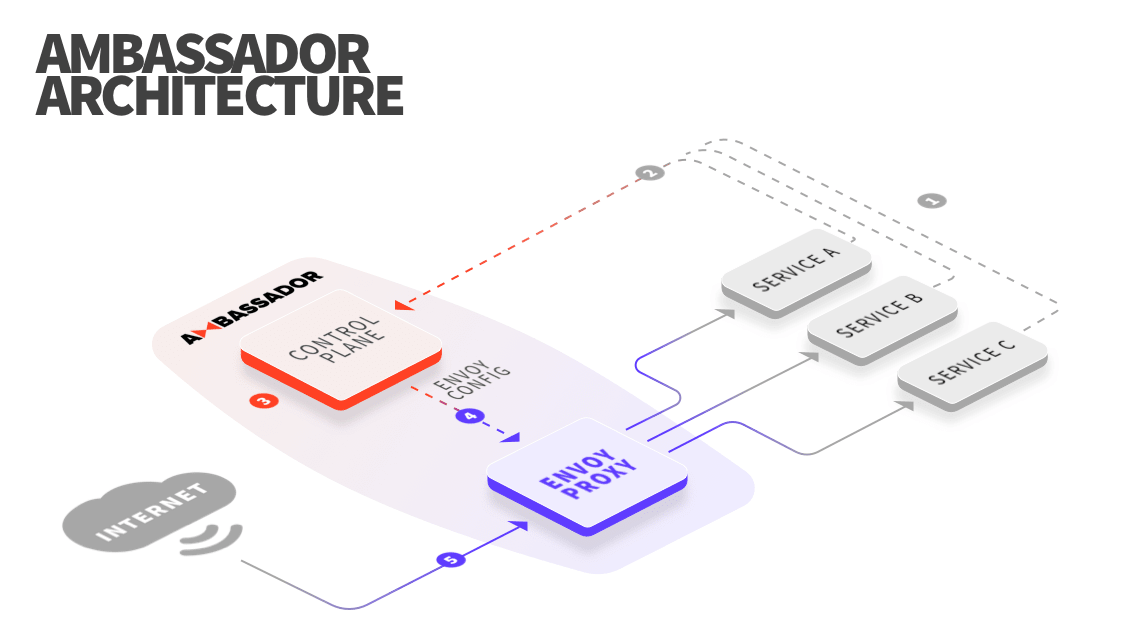

Ambassador is an Open Source Kubernetes-Native API Gateway built on the Envoy Proxy.

It provides a solution for traffic management and application security.

It's described as a specialized control plane that translates Kubernetes annotations to Envoy configuration.

All traffic is directly handled by the high-performance Envoy Proxy.

Originally built at Lyft, Envoy is a high performance C++ distributed proxy designed for single services and applications, as well as a communication bus and “universal data plane” designed for large microservice “service mesh” architectures. Built on the learnings of solutions such as NGINX, HAProxy, hardware load balancers, and cloud load balancers, Envoy runs alongside every application and abstracts the network by providing common features in a platform-agnostic manner. When all service traffic in an infrastructure flows via an Envoy mesh, it becomes easy to visualize problem areas via consistent observability, tune overall performance, and add substrate features in a single place.

We are going to use Ambassador as an API Gateway, so we no longer need the load balancer service we created in the first part. Let's remove it:

kubectl delete -f kubernetes/api-service.yaml

To deploy Ambassador in your default namespace, first you need to check if Kubernetes has RBAC enabled:

kubectl cluster-info dump --namespace kube-system | grep authorization-mode

If RBAC is enabled:

kubectl apply -f https://getambassador.io/yaml/ambassador/ambassador-rbac.yaml

Without RBAC, you can use:

kubectl apply -f https://getambassador.io/yaml/ambassador/ambassador-no-rbac.yaml

Ambassador is deployed as a Kubernetes Service that references the ambassador Deployment you deployed previously. Create the following YAML and put it in a file called kubernetes/ambassador-service.yaml.

---
apiVersion: v1
kind: Service
metadata:
  name: ambassador
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local
  ports:
  - port: 80
    targetPort: 8080
  selector:
    service: ambassador

Deploy the service:

kubectl apply -f kubernetes/ambassador-service.yaml

Now let's use this file containing the Deployment configuration for our API as well as the Ambassador Service configuration relative to the same Deployment. Call this file kubernetes/api-deployment-with-ambassador.yaml:

---
apiVersion: v1
kind: Service
metadata:
  name: tgr
  annotations:
    getambassador.io/config: |
      ---
      apiVersion: ambassador/v1
      kind: Mapping
      name: tgr_mapping
      prefix: /
      service: tgr:5000
spec:
  ports:
  - name: tgr
    port: 5000
    targetPort: 5000
  selector:
    app: tgr
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tgr
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tgr
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: tgr
    spec:
      containers:
      - name: tgr
        image: eon01/tgr:1
        ports:
        - containerPort: 5000
        env:
        - name: CLIENT_ID
          value: "xxxx"
        - name: CLIENT_SECRET
          value: "xxxxxxxxxxxxxxxxxxxxx"
        - name: ENV
          value: "prod"
        - name: DEBUG
          value: "False"
        - name: HOST
          value: "0.0.0.0"
        - name: PORT
          value: "5000"

Deploy the previously created configuration:

kubectl apply -f kubernetes/api-deployment-with-ambassador.yaml

Let's test things out. We need the external IP for Ambassador:

kubectl get svc -o wide ambassador

You should see something like:

NAME         TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE    SELECTOR
ambassador   LoadBalancer   10.103.201.130   <pending>     80:30283/TCP   9m2s   service=ambassador

If you are using Minikube, it is normal to see the external IP in the pending state.

We can use minikube -p workshop service list to get the Ambassador IP. You will get an output similar to the following:

minikube -p workshop service list

--

|-------------|------------------|-----------------------------|

| NAMESPACE | NAME | URL |

|-------------|------------------|-----------------------------|

| default | ambassador | http://192.168.99.100:30283 |

| default | ambassador-admin | http://192.168.99.100:30084 |

| default | kubernetes | No node port |

| default | tgr | No node port |

| kube-system | kube-dns | No node port |

|-------------|------------------|-----------------------------|

Now you can use the API using the IP http://192.168.99.100:30283:

curl "http://192.168.99.100:30283/?l=python&n=1"

Edge Proxy vs Service Mesh

You may have heard of tools like Istio and Linkerd and it may be confusing to compare Ambassador or Envoy to these tools. We are going to understand the differences here.

Istio is described as a tool to connect, secure, control, and observe services. The same features are implemented by its alternatives like Linkerd or Consul. These tools are called Service Meshes.

Ambassador is an API gateway for services (or microservices) and it's deployed at the edge of your network. It routes incoming traffic to the cluster's internal services, and this is what we call "north-south" traffic.

Istio, on the other hand, is a service mesh for Kubernetes services (or microservices). It's designed to add application-level Layer (L7) observability, routing, and resilience to service-to-service traffic, and this is what we call "east-west" traffic.

The fact that both Istio and Ambassador are built using Envoy, does not mean they have the same features or usability. Therefore, they can be deployed together in the same cluster.

Accessing the Kubernetes API

We can access the Kubernetes API using one of its clients (Go, Python, etc.).

This is a quick example using Python Kubernetes:

pip install kubernetes

---

# import json
# import requests
#
# @app.route('/pods')
# def monitor():
#     api_url = "http://kubernetes.default.svc/api/v1/pods/"
#     response = requests.get(api_url)
#     if response.status_code == 200:
#         return json.loads(response.content.decode('utf-8'))
#     else:
#         return None

Accessing the API from inside a Pod

By default, a Pod is associated with a service account, and a credential (token) for that service account is placed into the filesystem tree of each container in that Pod, at /var/run/secrets/kubernetes.io/serviceaccount/token.

Let's try to go inside a Pod and access the API.

Use kubectl get pods to get a list of pods

NAME READY STATUS RESTARTS AGE

ambassador-64d8b877f9-4bzvn 1/1 Running 0 103m

ambassador-64d8b877f9-b68w6 1/1 Running 0 103m

ambassador-64d8b877f9-vw9mm 1/1 Running 0 103m

tgr-8d78d599f-pt5xx 1/1 Running 0 4m17s

---

kubectl exec -it tgr-8d78d599f-pt5xx bash

---

# Assign the token to a variable:

KUBE_TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

# Notice that the token file is added automatically by Kubernetes. We also have other variables already set like:

echo $KUBERNETES_SERVICE_HOST

#10.96.0.1

echo $KUBERNETES_PORT_443_TCP_PORT

#443

echo $HOSTNAME

#tgr-8d78d599f-pt5xx

We are going to use these variables to access the list of Pods using this Curl command:

curl -sSk -H "Authorization: Bearer $KUBE_TOKEN" \

https://$KUBERNETES_SERVICE_HOST:$KUBERNETES_PORT_443_TCP_PORT/api/v1/namespaces/default/pods/$HOSTNAME
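The same call can be prepared from Python using only the standard library. This is a minimal sketch: the function name `build_pod_request` and its parameters are assumptions for illustration, and the request is only built, not sent. Note that curl's -k flag skips TLS verification; when actually sending the request from Python you would typically pass an SSL context that trusts the cluster CA certificate mounted next to the token.

```python
import os
import urllib.request

# Path where Kubernetes mounts the service account token inside the container.
TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_pod_request(token_path=TOKEN_PATH, environ=os.environ):
    """Build (but do not send) the request describing the current Pod."""
    with open(token_path) as f:
        token = f.read().strip()
    host = environ["KUBERNETES_SERVICE_HOST"]
    port = environ["KUBERNETES_PORT_443_TCP_PORT"]
    pod = environ["HOSTNAME"]
    url = f"https://{host}:{port}/api/v1/namespaces/default/pods/{pod}"
    # Same header as the curl command: a bearer token for the service account.
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
```

Inside a Pod, `build_pod_request()` can be called without arguments; outside a cluster, a token file and an environment dictionary can be passed explicitly to try it out.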

---

#At this stage, you should have an error output saying that you don't have the rights to access this API endpoint, which is normal:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "pods \"tgr-8d78d599f-pt5xx\" is forbidden: User \"system:serviceaccount:default:default\" cannot get resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"name": "tgr-8d78d599f-pt5xx",

"kind": "pods"

},

"code": 403

}

The Pod is using the default Service Account, which does not have the right to list the Pods. The following ClusterRole and ClusterRoleBinding grant it that right:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: pods-list
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: pods-list
subjects:
- kind: ServiceAccount
  name: default
  namespace: default
roleRef:
  kind: ClusterRole
  name: pods-list
  apiGroup: rbac.authorization.k8s.io

# Then apply the new configuration using `kubectl apply -f kubernetes/service-account.yaml`.

Using the above file, we created a new ClusterRole called pods-list and added some rules to it. Our ClusterRole has the right to execute the verb list on the resource pods. The empty string in apiGroups refers to the core API group.

In the second part of the file, we assigned the new ClusterRole to a ServiceAccount (default) using a ClusterRoleBinding.
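The effect of such a rule can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the function `is_allowed` is hypothetical, it ignores wildcards and resource names, and it only checks whether some rule lists the requested API group, resource and verb.

```python
def is_allowed(rules, api_group, resource, verb):
    """Return True if any rule grants `verb` on `resource` in `api_group`."""
    for rule in rules:
        if (api_group in rule["apiGroups"]
                and resource in rule["resources"]
                and verb in rule["verbs"]):
            return True
    return False


# The rule set of the pods-list ClusterRole: "" is the core API group.
pods_list_rules = [{"apiGroups": [""], "resources": ["pods"], "verbs": ["list"]}]

print(is_allowed(pods_list_rules, "", "pods", "list"))    # True
print(is_allowed(pods_list_rules, "", "pods", "get"))     # False
print(is_allowed(pods_list_rules, "", "roles", "list"))   # False
```

With only the list verb granted, listing Pods is permitted while fetching a single Pod by name (the get verb) is not.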

Note that we have the possibility to add other verbs and resources to the rule set.

resources:
- rolebindings
- roles
- pods
verbs:
- create
- delete
- deletecollection
- get
- list
- patch
- update
- watch

Now that we deployed the new role, let's test it.

kubectl exec -it tgr-8d78d599f-pt5xx bash

Use the same Curl command, this time against the Pod list endpoint:

KUBE_TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

curl -sSk -H "Authorization: Bearer $KUBE_TOKEN" https://$KUBERNETES_SERVICE_HOST:$KUBERNETES_PORT_443_TCP_PORT/api/v1/namespaces/default/pods/

This will send us back the list of Pods.

You can even implement your own observability solution using the API.

{

"kind": "PodList",

"apiVersion": "v1",

"metadata": {

"selfLink": "/api/v1/namespaces/default/pods/",

"resourceVersion": "19589"

},

"items": [

{

"metadata": {

"name": "ambassador-64d8b877f9-4bzvn",

"generateName": "ambassador-64d8b877f9-",

"namespace": "default",

"selfLink": "/api/v1/namespaces/default/pods/ambassador-64d8b877f9-4bzvn",

"uid": "63f62ede-de77-441d-85f7-daf9cbc7040f",

"resourceVersion": "1047",

"creationTimestamp": "2019-08-19T08:12:47Z",

"labels": {

"pod-template-hash": "64d8b877f9",

"service": "ambassador"

},

"annotations": {

"consul.hashicorp.com/connect-inject": "false",

"sidecar.istio.io/inject": "false"

},

"ownerReferences": [

{

"apiVersion": "apps/v1",

"kind": "ReplicaSet",

"name": "ambassador-64d8b877f9",

"uid": "383c2e4b-7179-4806-b7bf-3682c7873a10",

"controller": true,

"blockOwnerDeletion": true

}

]

},

"spec": {

"volumes": [

{

"name": "ambassador-token-rdqq6",

"secret": {

"secretName": "ambassador-token-rdqq6",

"defaultMode": 420

}

}

],

"containers": [

{

"name": "ambassador",

"image": "quay.io/datawire/ambassador:0.75.0",

"ports": [

{

"name": "http",

"containerPort": 8080,

"protocol": "TCP"

},

{

"name": "https",

"containerPort": 8443,

"protocol": "TCP"

},

{

"name": "admin",

"containerPort": 8877,

"protocol": "TCP"

}

],

"env": [

{

"name": "AMBASSADOR_NAMESPACE",

"valueFrom": {

"fieldRef": {

"apiVersion": "v1",

"fieldPath": "metadata.namespace"

}

}

}

],

"resources": {

"limits": {

"cpu": "1",

"memory": "400Mi"

},

"requests": {

"cpu": "200m",

"memory": "100Mi"

}

},

"volumeMounts": [

{

"name": "ambassador-token-rdqq6",

"readOnly": true,

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount"

}

],

"livenessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_alive",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"readinessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_ready",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "IfNotPresent"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"serviceAccountName": "ambassador",

"serviceAccount": "ambassador",

"nodeName": "minikube",

"securityContext": {

"runAsUser": 8888

},

"affinity": {

"podAntiAffinity": {

"preferredDuringSchedulingIgnoredDuringExecution": [

{

"weight": 100,

"podAffinityTerm": {

"labelSelector": {

"matchLabels": {

"service": "ambassador"

}

},

"topologyKey": "kubernetes.io/hostname"

}

}

]

}

},

"schedulerName": "default-scheduler",

"tolerations": [

{

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

},

{

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

}

],

"priority": 0,

"enableServiceLinks": true

},

"status": {

"phase": "Running",

"conditions": [

{

"type": "Initialized",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

},

{

"type": "Ready",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:42Z"

},

{

"type": "ContainersReady",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:42Z"

},

{

"type": "PodScheduled",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

}

],

"hostIP": "10.0.2.15",

"podIP": "172.17.0.4",

"startTime": "2019-08-19T08:12:47Z",

"containerStatuses": [

{

"name": "ambassador",

"state": {

"running": {

"startedAt": "2019-08-19T08:13:12Z"

}

},

"lastState": {

},

"ready": true,

"restartCount": 0,

"image": "quay.io/datawire/ambassador:0.75.0",

"imageID": "docker-pullable://quay.io/datawire/ambassador@sha256:decb2328426eb6fe77394f5b363943c282debb30bd1102d8e32ea5c6ee24f51b",

"containerID": "docker://ceec02e01104d3945b71c1162f7265695e20b4cb95e6d0b26a2e68937e498585"

}

],

"qosClass": "Burstable"

}

},

{

"metadata": {

"name": "ambassador-64d8b877f9-b68w6",

"generateName": "ambassador-64d8b877f9-",

"namespace": "default",

"selfLink": "/api/v1/namespaces/default/pods/ambassador-64d8b877f9-b68w6",

"uid": "7a302c85-43a1-45d8-86f7-4423424c0df5",

"resourceVersion": "1066",

"creationTimestamp": "2019-08-19T08:12:47Z",

"labels": {

"pod-template-hash": "64d8b877f9",

"service": "ambassador"

},

"annotations": {

"consul.hashicorp.com/connect-inject": "false",

"sidecar.istio.io/inject": "false"

},

"ownerReferences": [

{

"apiVersion": "apps/v1",

"kind": "ReplicaSet",

"name": "ambassador-64d8b877f9",

"uid": "383c2e4b-7179-4806-b7bf-3682c7873a10",

"controller": true,

"blockOwnerDeletion": true

}

]

},

"spec": {

"volumes": [

{

"name": "ambassador-token-rdqq6",

"secret": {

"secretName": "ambassador-token-rdqq6",

"defaultMode": 420

}

}

],

"containers": [

{

"name": "ambassador",

"image": "quay.io/datawire/ambassador:0.75.0",

"ports": [

{

"name": "http",

"containerPort": 8080,

"protocol": "TCP"

},

{

"name": "https",

"containerPort": 8443,

"protocol": "TCP"

},

{

"name": "admin",

"containerPort": 8877,

"protocol": "TCP"

}

],

"env": [

{

"name": "AMBASSADOR_NAMESPACE",

"valueFrom": {

"fieldRef": {

"apiVersion": "v1",

"fieldPath": "metadata.namespace"

}

}

}

],

"resources": {

"limits": {

"cpu": "1",

"memory": "400Mi"

},

"requests": {

"cpu": "200m",

"memory": "100Mi"

}

},

"volumeMounts": [

{

"name": "ambassador-token-rdqq6",

"readOnly": true,

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount"

}

],

"livenessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_alive",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"readinessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_ready",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "IfNotPresent"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"serviceAccountName": "ambassador",

"serviceAccount": "ambassador",

"nodeName": "minikube",

"securityContext": {

"runAsUser": 8888

},

"affinity": {

"podAntiAffinity": {

"preferredDuringSchedulingIgnoredDuringExecution": [

{

"weight": 100,

"podAffinityTerm": {

"labelSelector": {

"matchLabels": {

"service": "ambassador"

}

},

"topologyKey": "kubernetes.io/hostname"

}

}

]

}

},

"schedulerName": "default-scheduler",

"tolerations": [

{

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

},

{

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

}

],

"priority": 0,

"enableServiceLinks": true

},

"status": {

"phase": "Running",

"conditions": [

{

"type": "Initialized",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

},

{

"type": "Ready",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:50Z"

},

{

"type": "ContainersReady",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:50Z"

},

{

"type": "PodScheduled",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

}

],

"hostIP": "10.0.2.15",

"podIP": "172.17.0.6",

"startTime": "2019-08-19T08:12:47Z",

"containerStatuses": [

{

"name": "ambassador",

"state": {

"running": {

"startedAt": "2019-08-19T08:13:18Z"

}

},

"lastState": {

},

"ready": true,

"restartCount": 0,

"image": "quay.io/datawire/ambassador:0.75.0",

"imageID": "docker-pullable://quay.io/datawire/ambassador@sha256:decb2328426eb6fe77394f5b363943c282debb30bd1102d8e32ea5c6ee24f51b",

"containerID": "docker://e0a0153c191b364c95b71e62cc7e1451892397f0f1c713cb8b34ba75d0364962"

}

],

"qosClass": "Burstable"

}

},

{

"metadata": {

"name": "ambassador-64d8b877f9-vw9mm",

"generateName": "ambassador-64d8b877f9-",

"namespace": "default",

"selfLink": "/api/v1/namespaces/default/pods/ambassador-64d8b877f9-vw9mm",

"uid": "6a633b1f-0ed4-4ced-8e55-8977078ab821",

"resourceVersion": "1054",

"creationTimestamp": "2019-08-19T08:12:47Z",

"labels": {

"pod-template-hash": "64d8b877f9",

"service": "ambassador"

},

"annotations": {

"consul.hashicorp.com/connect-inject": "false",

"sidecar.istio.io/inject": "false"

},

"ownerReferences": [

{

"apiVersion": "apps/v1",

"kind": "ReplicaSet",

"name": "ambassador-64d8b877f9",

"uid": "383c2e4b-7179-4806-b7bf-3682c7873a10",

"controller": true,

"blockOwnerDeletion": true

}

]

},

"spec": {

"volumes": [

{

"name": "ambassador-token-rdqq6",

"secret": {

"secretName": "ambassador-token-rdqq6",

"defaultMode": 420

}

}

],

"containers": [

{

"name": "ambassador",

"image": "quay.io/datawire/ambassador:0.75.0",

"ports": [

{

"name": "http",

"containerPort": 8080,

"protocol": "TCP"

},

{

"name": "https",

"containerPort": 8443,

"protocol": "TCP"

},

{

"name": "admin",

"containerPort": 8877,

"protocol": "TCP"

}

],

"env": [

{

"name": "AMBASSADOR_NAMESPACE",

"valueFrom": {

"fieldRef": {

"apiVersion": "v1",

"fieldPath": "metadata.namespace"

}

}

}

],

"resources": {

"limits": {

"cpu": "1",

"memory": "400Mi"

},

"requests": {

"cpu": "200m",

"memory": "100Mi"

}

},

"volumeMounts": [

{

"name": "ambassador-token-rdqq6",

"readOnly": true,

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount"

}

],

"livenessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_alive",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"readinessProbe": {

"httpGet": {

"path": "/ambassador/v0/check_ready",

"port": 8877,

"scheme": "HTTP"

},

"initialDelaySeconds": 30,

"timeoutSeconds": 1,

"periodSeconds": 3,

"successThreshold": 1,

"failureThreshold": 3

},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "IfNotPresent"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"serviceAccountName": "ambassador",

"serviceAccount": "ambassador",

"nodeName": "minikube",

"securityContext": {

"runAsUser": 8888

},

"affinity": {

"podAntiAffinity": {

"preferredDuringSchedulingIgnoredDuringExecution": [

{

"weight": 100,

"podAffinityTerm": {

"labelSelector": {

"matchLabels": {

"service": "ambassador"

}

},

"topologyKey": "kubernetes.io/hostname"

}

}

]

}

},

"schedulerName": "default-scheduler",

"tolerations": [

{

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

},

{

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

}

],

"priority": 0,

"enableServiceLinks": true

},

"status": {

"phase": "Running",

"conditions": [

{

"type": "Initialized",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

},

{

"type": "Ready",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:45Z"

},

{

"type": "ContainersReady",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:13:45Z"

},

{

"type": "PodScheduled",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T08:12:47Z"

}

],

"hostIP": "10.0.2.15",

"podIP": "172.17.0.5",

"startTime": "2019-08-19T08:12:47Z",

"containerStatuses": [

{

"name": "ambassador",

"state": {

"running": {

"startedAt": "2019-08-19T08:13:15Z"

}

},

"lastState": {

},

"ready": true,

"restartCount": 0,

"image": "quay.io/datawire/ambassador:0.75.0",

"imageID": "docker-pullable://quay.io/datawire/ambassador@sha256:decb2328426eb6fe77394f5b363943c282debb30bd1102d8e32ea5c6ee24f51b",

"containerID": "docker://b872fd025bea0e7be6e62c0e8edc5b64b3a370e6e931e73d2baf7bea4e42761d"

}

],

"qosClass": "Burstable"

}

},

{

"metadata": {

"name": "tgr-8d78d599f-pt5xx",

"generateName": "tgr-8d78d599f-",

"namespace": "default",

"selfLink": "/api/v1/namespaces/default/pods/tgr-8d78d599f-pt5xx",

"uid": "92ad5299-bf47-4715-b905-527d6449dc17",

"resourceVersion": "8429",

"creationTimestamp": "2019-08-19T09:51:48Z",

"labels": {

"app": "tgr",

"pod-template-hash": "8d78d599f"

},

"ownerReferences": [

{

"apiVersion": "apps/v1",

"kind": "ReplicaSet",

"name": "tgr-8d78d599f",

"uid": "7ca96835-bad1-4411-bad2-40be7776f152",

"controller": true,

"blockOwnerDeletion": true

}

]

},

"spec": {

"volumes": [

{

"name": "default-token-s49st",

"secret": {

"secretName": "default-token-s49st",

"defaultMode": 420

}

}

],

"containers": [

{

"name": "tgr",

"image": "eon01/tgr:2",

"ports": [

{

"containerPort": 5000,

"protocol": "TCP"

}

],

"env": [

{

"name": "CLIENT_ID",

"value": "453486b9225e0e26c525"

},

{

"name": "CLIENT_SECRET",

"value": "a63e841d5c18f41b9264a1a2ac0675a1f903ee8c"

},

{

"name": "ENV",

"value": "prod"

},

{

"name": "DEBUG",

"value": "True"

},

{

"name": "HOST",

"value": "0.0.0.0"

},

{

"name": "PORT",

"value": "5000"

}

],

"resources": {

},

"volumeMounts": [

{

"name": "default-token-s49st",

"readOnly": true,

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount"

}

],

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "Always"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"serviceAccountName": "default",

"serviceAccount": "default",

"nodeName": "minikube",

"securityContext": {

},

"schedulerName": "default-scheduler",

"tolerations": [

{

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

},

{

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

}

],

"priority": 0,

"enableServiceLinks": true

},

"status": {

"phase": "Running",

"conditions": [

{

"type": "Initialized",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T09:51:48Z"

},

{

"type": "Ready",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T09:51:53Z"

},

{

"type": "ContainersReady",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T09:51:53Z"

},

{

"type": "PodScheduled",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2019-08-19T09:51:48Z"

}

],

"hostIP": "10.0.2.15",

"podIP": "172.17.0.8",

"startTime": "2019-08-19T09:51:48Z",

"containerStatuses": [

{

"name": "tgr",

"state": {

"running": {

"startedAt": "2019-08-19T09:51:52Z"

}

},

"lastState": {

},

"ready": true,

"restartCount": 0,

"image": "eon01/tgr:2",

"imageID": "docker-pullable://eon01/tgr@sha256:af6f3ab4fb54ebeafed479df91c048d1d15514240812e9d6cd87591c1bcbe427",

"containerID": "docker://0dd1aea50f2b08e468f7dffcbb73899d76837e2db74a302b43387932a7347918"

}

],

"qosClass": "BestEffort"

}

}

]

}

Thanks

Join Faun Community: www.faun.dev/join

Credits & References:

- Service type images: credit to https://medium.com/@ahmetb

- "Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what?": https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

- Official K8s documentation: https://kubernetes.io/docs

- Ambassador documentation: https://www.getambassador.io/docs/

Kubernetes Workshop

By eon01
