First-order methods for structured optimization

Huang Fang, Department of Computer Science

Supervisor: Michael P. Friedlander

October 13th, 2021

- Optimization is everywhere: machine learning, operations research, data mining, theoretical computer science, etc.

- First-order methods: algorithms that access the objective only through function values and (sub)gradients.

- Why first-order methods?

Background and motivation

The literature

- Gradient descent can be traced back to Cauchy's work in 1847.

- It has gained increasing interest over the past three decades due to its empirical success.

- Foundational work was done by pioneering researchers (Bertsekas, Nesterov, etc.).

- There are still gaps between theory and practice.

- Coordinate optimization

- Mirror descent

- Stochastic subgradient method

Coordinate Optimization

Coordinate Descent

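For $k = 0, 1, 2, \dots$

- Select coordinate $i_k \in \{1, \dots, n\}$

- Update $x^{k+1} = x^k - \alpha_k \nabla_{i_k} f(x^k)\, e_{i_k}$, where $e_{i_k}$ is the $i_k$-th standard basis vector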
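The loop above can be sketched in Python for a convex quadratic. This is a minimal illustration under our own assumptions: $f(x) = \tfrac12 x^\top A x - b^\top x$ with $A$ symmetric positive definite, uniformly random coordinate selection, and the step $\alpha = 1/A_{ii}$, which exactly minimizes $f$ along coordinate $i$. The function name `coordinate_descent` is hypothetical.

```python
import numpy as np


def coordinate_descent(A, b, num_iters=2000, seed=0):
    """Randomized coordinate descent on f(x) = 0.5 x^T A x - b^T x.

    Assumes A is symmetric positive definite, so the coordinate-wise
    curvature A[i, i] is positive and the step below is an exact
    minimization along coordinate i.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    x = np.zeros(n)
    for _ in range(num_iters):
        i = rng.integers(n)        # select coordinate i_k uniformly at random
        grad_i = A[i] @ x - b[i]   # i-th partial derivative of f at x
        x[i] -= grad_i / A[i, i]   # step size 1 / L_i with L_i = A[i, i]
    return x
```

For a well-conditioned 2-by-2 system, a couple of thousand coordinate steps recover `np.linalg.solve(A, b)` to high accuracy.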

Different coordinate selection rules:

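- Random selection: $i_k$ drawn uniformly at random from $\{1, \dots, n\}$

- Cyclic selection: $i_k = (k \bmod n) + 1$

- Random permuted cyclic: cycle through a fresh random permutation of $\{1, \dots, n\}$ in every epoch (the matrix AM-GM inequality conjecture is false [LL20, S20])

- Greedy selection (Gauss-Southwell): $i_k = \arg\max_i |\nabla_i f(x^k)|$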
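The four selection rules can be sketched as small Python helpers. The function names are hypothetical, and indices are 0-based rather than the slide's 1-based convention. The greedy rule needs the full gradient, so its per-iteration cost can be higher than that of the other rules.

```python
import numpy as np


def random_rule(n, rng):
    """Random selection: i_k uniform on {0, ..., n-1}."""
    return int(rng.integers(n))


def cyclic_rule(n, k):
    """Cyclic selection: i_k = k mod n."""
    return k % n


def permuted_cyclic_rule(n, num_epochs, rng):
    """Random permuted cyclic: a fresh random order in every epoch."""
    for _ in range(num_epochs):
        for i in rng.permutation(n):
            yield int(i)


def gauss_southwell_rule(grad):
    """Greedy (Gauss-Southwell): coordinate with the largest |partial derivative|."""
    return int(np.argmax(np.abs(grad)))
```

For example, `cyclic_rule(3, k)` for `k = 0, ..., 6` yields `0, 1, 2, 0, 1, 2, 0`, while each epoch of `permuted_cyclic_rule` visits every coordinate exactly once in a random order.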

GCD for Sparse Optimization

$\min_{x} \; f(x) + g(x)$, where $f$ is the data-fitting term and $g$ is the regularizer

- One-norm regularization or nonnegative constraint can promote a sparse solution.
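To see why these choices produce exact zeros, here is a sketch of the two corresponding proximal operators (our illustration; the function names are hypothetical): the prox of $t\|x\|_1$, i.e. soft thresholding, and the Euclidean projection onto the nonnegative orthant. Both set small or negative entries exactly to zero instead of merely shrinking them.

```python
import numpy as np


def soft_threshold(z, t):
    """Prox of t * ||x||_1: shrink every entry toward 0 by t; entries with |z_i| <= t become 0."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def project_nonnegative(z):
    """Projection onto {x : x >= 0}: every negative entry becomes 0."""
    return np.maximum(z, 0.0)
```

For example, `soft_threshold(np.array([0.3, -1.2, 0.05, 2.0]), 0.5)` keeps only the second and fourth entries (as `-0.7` and `1.5`).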

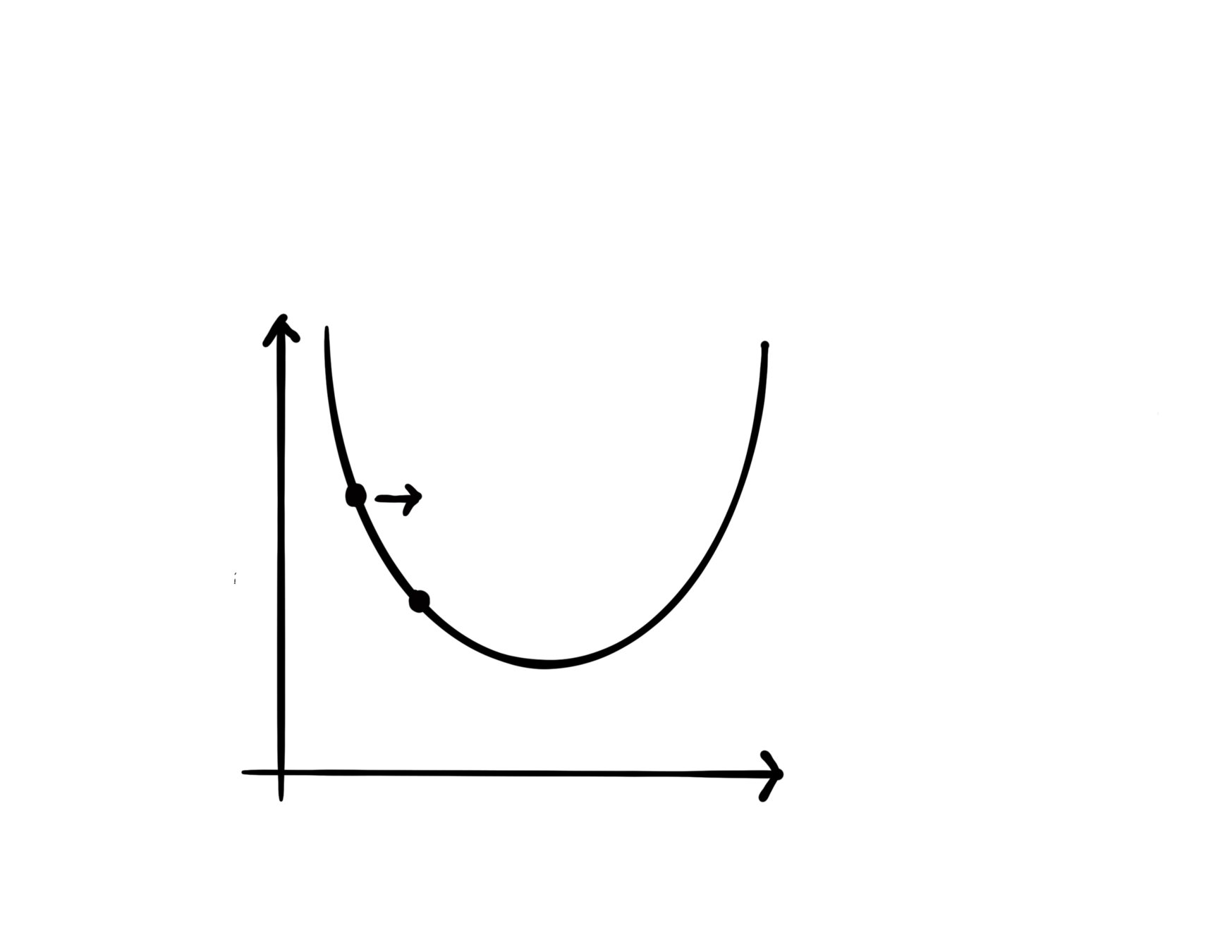

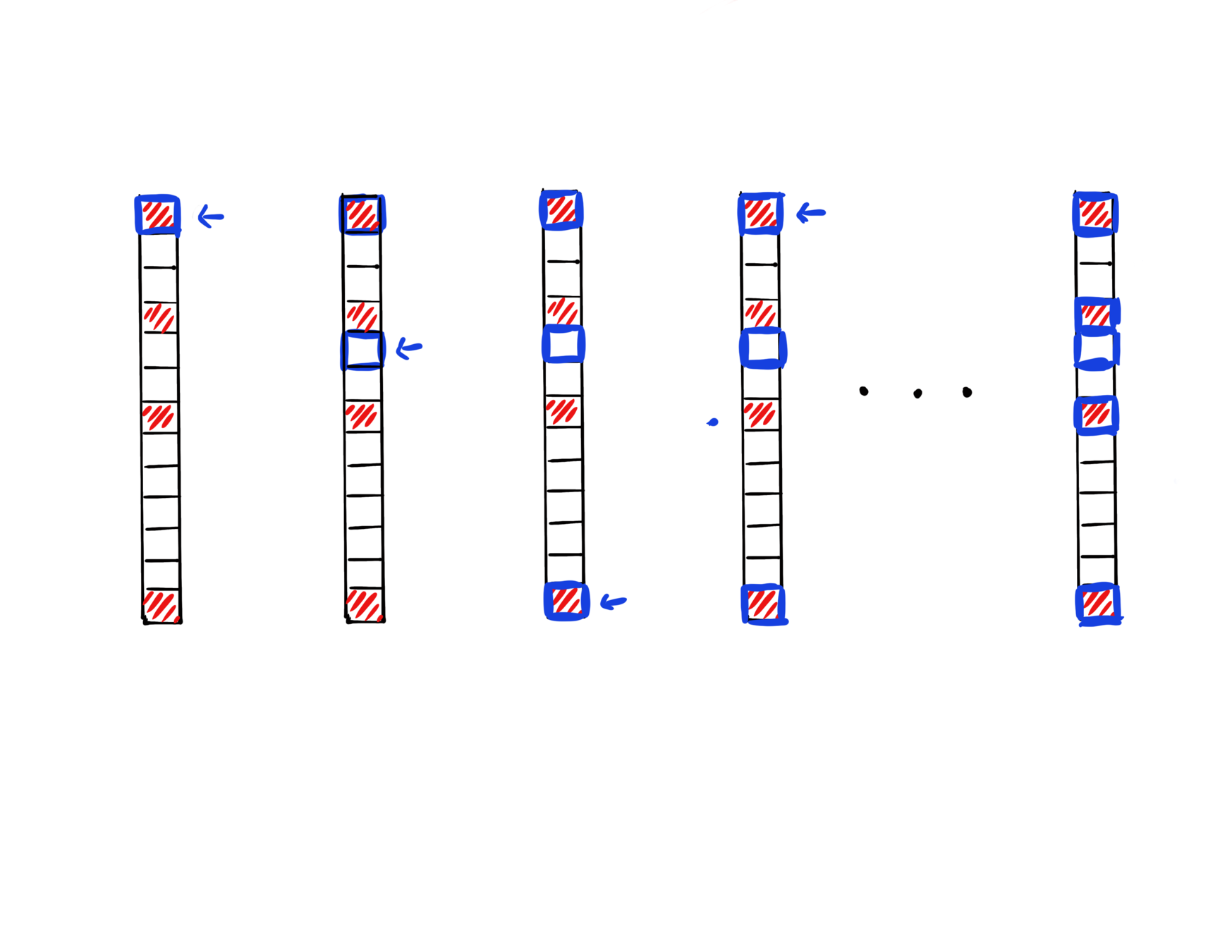

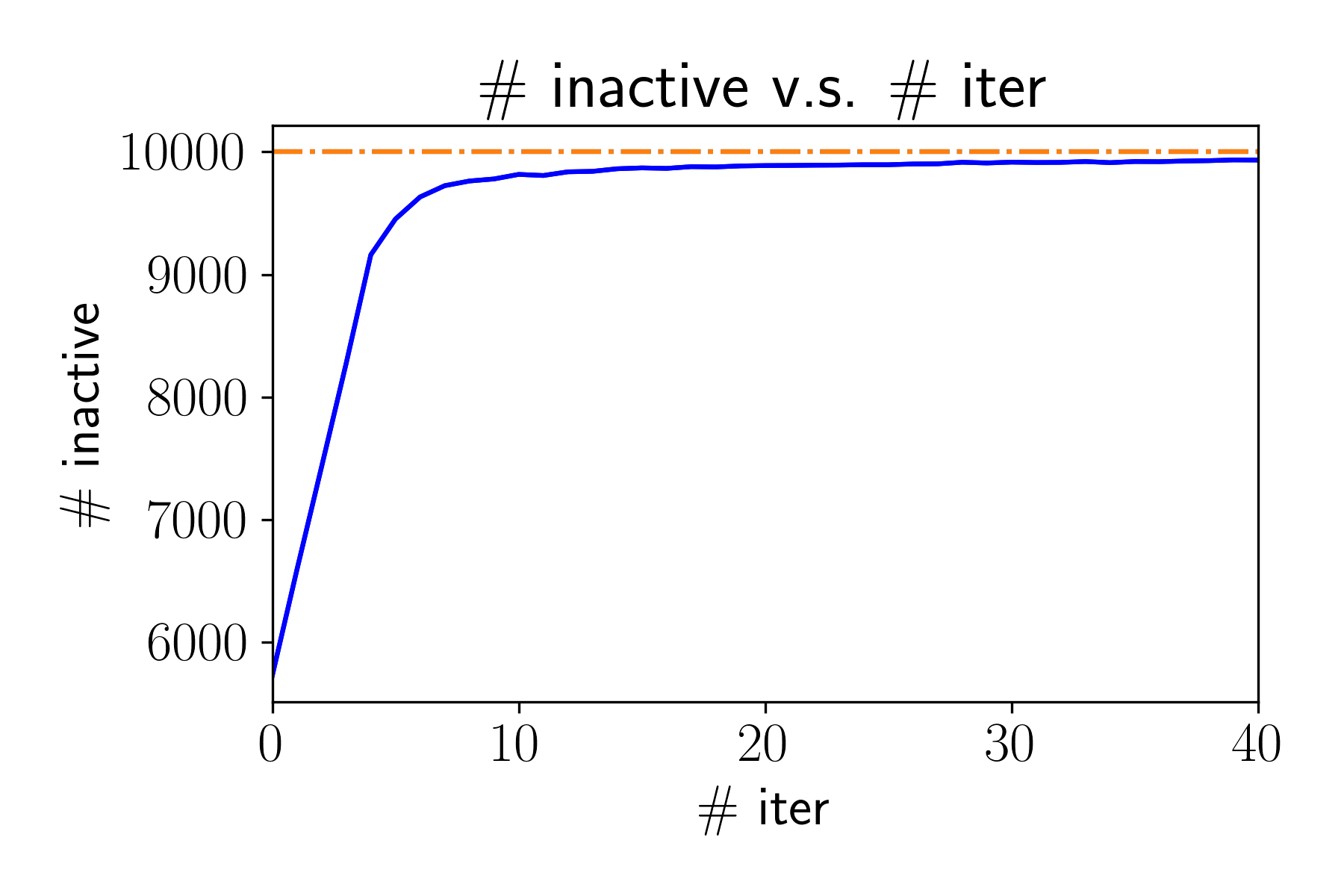

- When initialized at zero, greedy CD is observed to have an implicit screening ability: it tends to select only variables that are nonzero at the solution.

Figure: GCD iterates at Iter 1 to Iter 4 and a later iteration (objective = data fitting + regularizer).
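The screening behaviour can be explored on a lasso instance. The sketch below is a hedged illustration, not the implementation from [FFSF, AISTATS'20]: it assumes $f(x) = \tfrac12\|Ax - b\|^2$ and $g(x) = \lambda\|x\|_1$, starts at $x = 0$, and picks coordinates with the GS-r rule (the largest proximal coordinate step). The name `greedy_cd_lasso` is hypothetical. Since each iteration changes one coordinate, the support can grow by at most one per iteration; the returned `support_sizes` show how far it actually grows.

```python
import numpy as np


def soft_threshold(z, t):
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def greedy_cd_lasso(A, b, lam, num_iters):
    """Greedy (GS-r) coordinate descent for 0.5*||Ax - b||^2 + lam*||x||_1 from x = 0."""
    n = A.shape[1]
    L = np.sum(A**2, axis=0)   # coordinate-wise Lipschitz constants ||A[:, i]||^2
    x = np.zeros(n)
    r = -b.copy()              # residual A x - b at x = 0
    support_sizes = []
    for _ in range(num_iters):
        grad = A.T @ r
        # Candidate proximal coordinate step for every coordinate at once.
        x_new = soft_threshold(x - grad / L, lam / L)
        i = int(np.argmax(np.abs(x_new - x)))  # GS-r: largest coordinate move
        delta = x_new[i] - x[i]
        if delta == 0.0:
            break                              # no coordinate moves: x is optimal
        x[i] += delta
        r += delta * A[:, i]                   # keep the residual in sync
        support_sizes.append(int(np.count_nonzero(x)))
    return x, support_sizes
```

Each coordinate step uses step size $1/\|A_{:,i}\|^2$, which exactly minimizes the objective along that coordinate, so the objective never increases.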

GCD for Sparse Optimization

[FFSF, AISTATS'20]

We provide a theoretical characterization of GCD's screening ability:

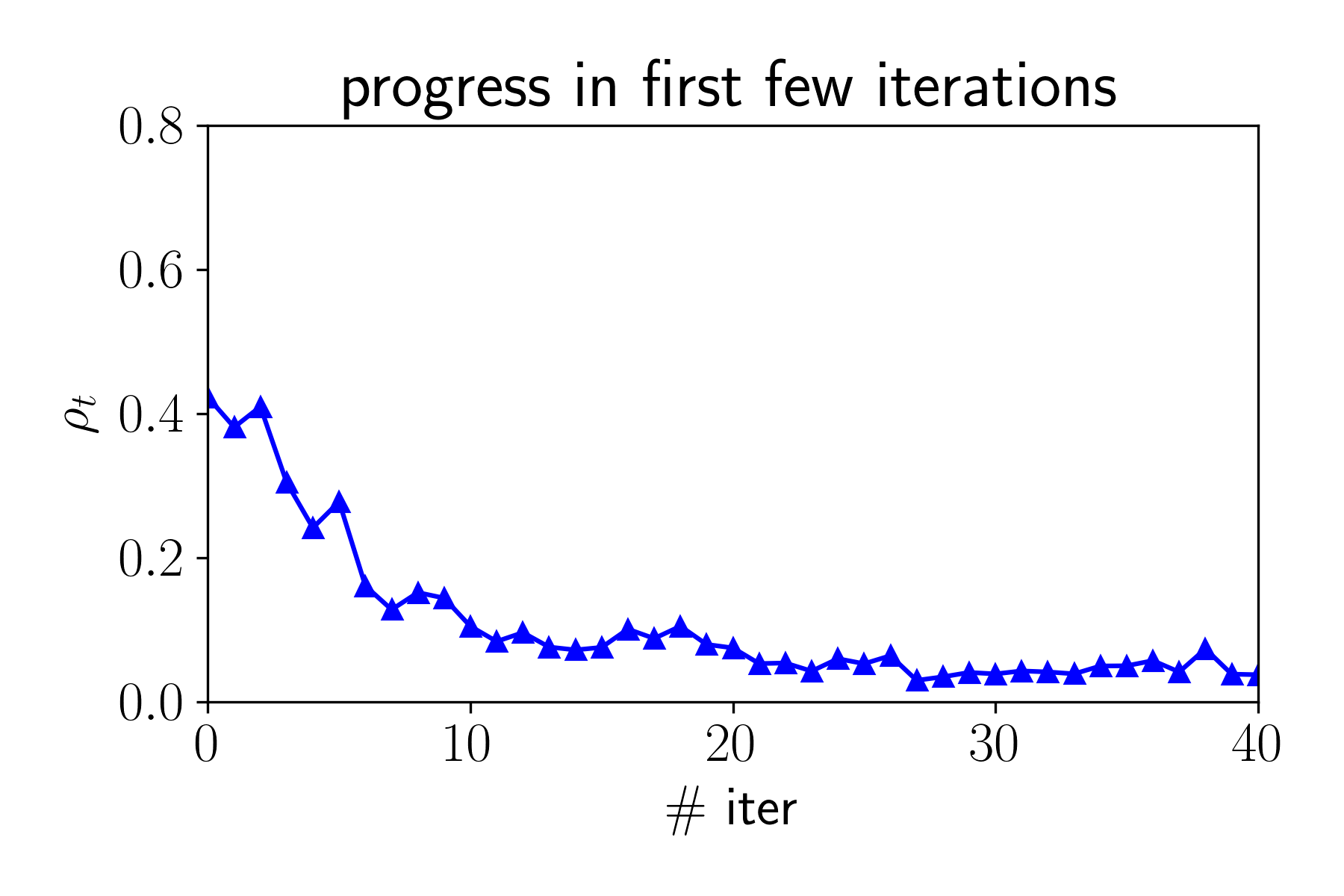

- GCD converges fast in the first few iterations.

- The iterate is "close" to the solution while it is still sparse, and the sparsity pattern does not expand further.


From coordinate to atom

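Learning a sparse representation with respect to an atomic set $\mathcal{A}$:

$x = \sum_{a \in \mathcal{A}} c_a\, a, \quad c_a \ge 0,$

such that only a few coefficients $c_a$ are nonzero.

- Sparse vector: $\mathcal{A} = \{\pm e_1, \dots, \pm e_n\}$

- Low-rank matrix: $\mathcal{A} = \{u v^\top : \|u\|_2 = \|v\|_2 = 1\}$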

Our contribution: how to identify the atoms with nonzero coefficients at the solution during the optimization process.

[FFF21] Submitted

Online Mirror Descent

Online Convex Optimization

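Play a game for $T$ rounds; for $t = 1, \dots, T$:

- Propose a point $x_t \in \mathcal{X}$

- Suffer loss $f_t(x_t)$, where the convex loss $f_t$ is revealed after $x_t$ is chosen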

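The goal of an online learning algorithm is to obtain sublinear regret:

$\mathrm{Regret}_T = \underbrace{\sum_{t=1}^{T} f_t(x_t)}_{\text{player's loss}} - \underbrace{\min_{x \in \mathcal{X}} \sum_{t=1}^{T} f_t(x)}_{\text{competitor's loss}} = o(T)$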
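As a concrete instance of these definitions (our choice, not taken from the slides), the sketch below runs projected online gradient descent on $\mathcal{X} = [-1, 1]$ against linear losses $f_t(x) = g_t x$ and computes the regret. With the fixed step $\eta = D/(G\sqrt{T})$, which assumes $T$ is known in advance, the standard analysis bounds the regret by $DG\sqrt{T}$, which is sublinear. Function names are hypothetical.

```python
import numpy as np


def online_gradient_descent(grads, radius=1.0):
    """Projected OGD on X = [-radius, radius] for linear losses f_t(x) = g_t * x."""
    T = len(grads)
    G = np.max(np.abs(grads))       # Lipschitz constant of the losses
    D = 2.0 * radius                # diameter of X
    eta = D / (G * np.sqrt(T))      # fixed step size: assumes T is known in advance
    x = 0.0
    points = []
    for g in grads:
        points.append(x)                              # propose x_t before f_t is revealed
        x = np.clip(x - eta * g, -radius, radius)     # gradient step, then project onto X
    return np.array(points)


def regret(grads, points, radius=1.0):
    """Player's loss minus the loss of the best fixed point in X."""
    player_loss = np.dot(grads, points)
    competitor_loss = -radius * np.abs(np.sum(grads))  # best fixed x is an endpoint of X
    return player_loss - competitor_loss
```

For linear losses the best fixed point is always an endpoint of the interval, which is why the competitor's loss has a closed form here.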

Mirror Descent (MD) and Dual Averaging (DA)

- MD and DA are parameterized by a mirror map, which gives them advantages over the vanilla projected subgradient method.

- When $T$ is known in advance or the Bregman divergence is bounded, both MD and DA guarantee $O(\sqrt{T})$ regret.

- When $T$ is unknown in advance and the Bregman divergence is unbounded, MD can suffer $\Omega(T)$ regret, while DA still guarantees $O(\sqrt{T})$ regret.

Our contribution: fix the divergence issue of MD and obtain $O(\sqrt{T})$ regret.

OMD Algorithm

[FHPF, ICML'20]

Figure: one OMD step, mapping between the primal and dual spaces, followed by a Bregman projection.

Figure credit: Victor Portella
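The primal-dual picture can be made concrete with the negative-entropy mirror map $\Phi(x) = \sum_i x_i \log x_i$ on the probability simplex, a standard example we chose for illustration. Mapping to the dual ($\nabla\Phi(x) = 1 + \log x$), taking a gradient step, mapping back ($\nabla\Phi^*(\theta) = e^{\theta - 1}$), and taking the KL Bregman projection onto the simplex reduce to a multiplicative update followed by renormalization. The sketch uses a fixed step size, i.e. the setting where $T$ is known in advance; function names are hypothetical.

```python
import numpy as np


def omd_entropic_step(x, g, eta):
    """One OMD step on the simplex with the negative-entropy mirror map."""
    theta = (1.0 + np.log(x)) - eta * g  # primal -> dual (grad Phi), then a gradient step
    y = np.exp(theta - 1.0)              # dual -> primal (grad Phi^*): equals x * exp(-eta * g)
    return y / np.sum(y)                 # KL Bregman projection onto the simplex = renormalize


def run_omd(loss_vectors, eta):
    """Play x_t, then observe the linear loss <g_t, x>; returns all played points."""
    n = loss_vectors.shape[1]
    x = np.full(n, 1.0 / n)              # x_1: uniform distribution, the minimizer of Phi on the simplex
    points = []
    for g in loss_vectors:
        points.append(x)
        x = omd_entropic_step(x, g, eta)
    return np.array(points)
```

With loss vectors in $[0, 1]^n$ and $\eta = \sqrt{2 \ln n / T}$, the usual analysis gives regret at most $\ln n / \eta + \eta T / 2 = \sqrt{2 T \ln n}$.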

Stabilized OMD

[FHPF, ICML'20]

Figure: one stabilized OMD step, mapping between the primal and dual spaces, followed by a Bregman projection.

With stabilization, OMD can obtain $O(\sqrt{T})$ regret.

(Stochastic) Subgradient Method

Smooth vs. Nonsmooth Minimization

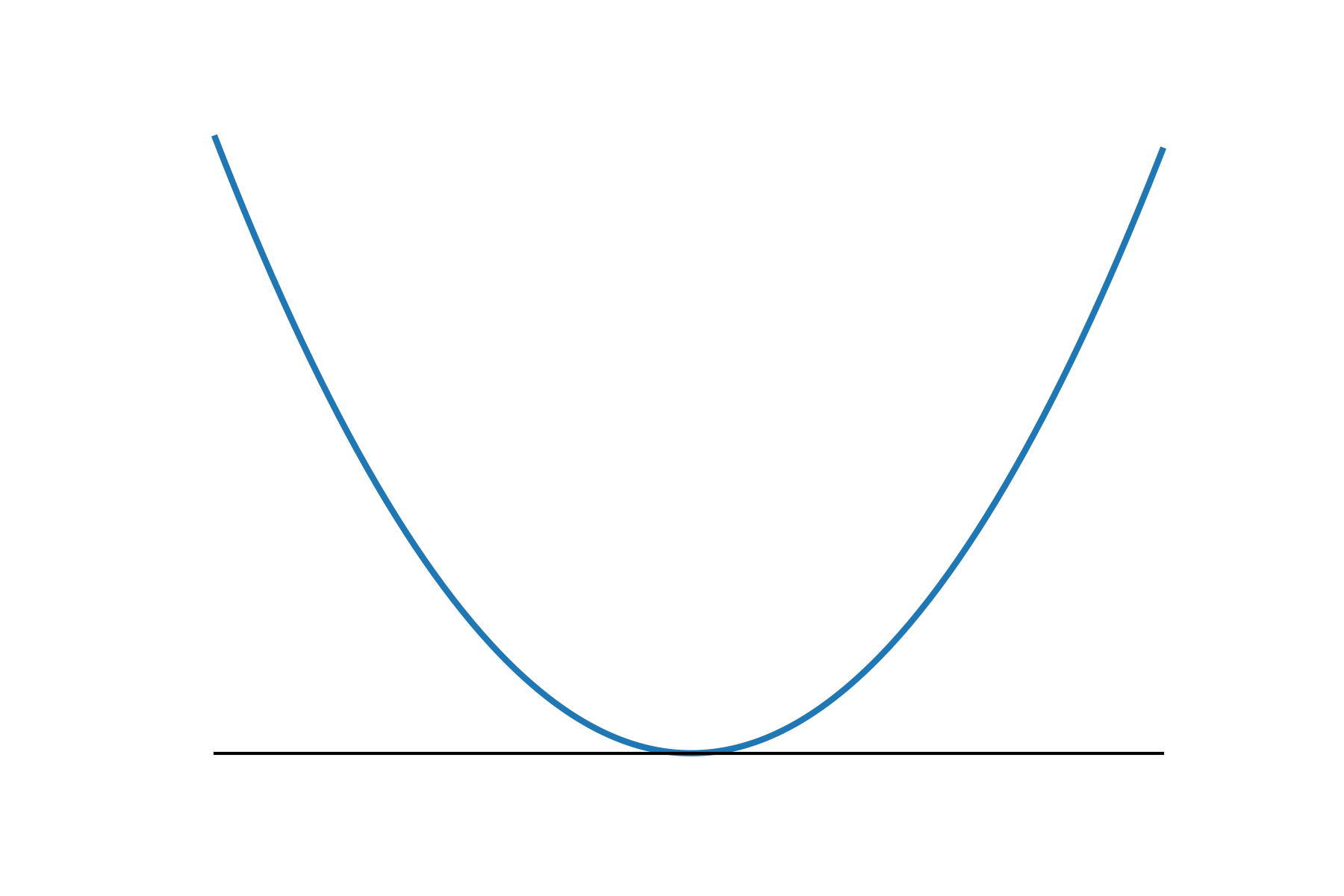

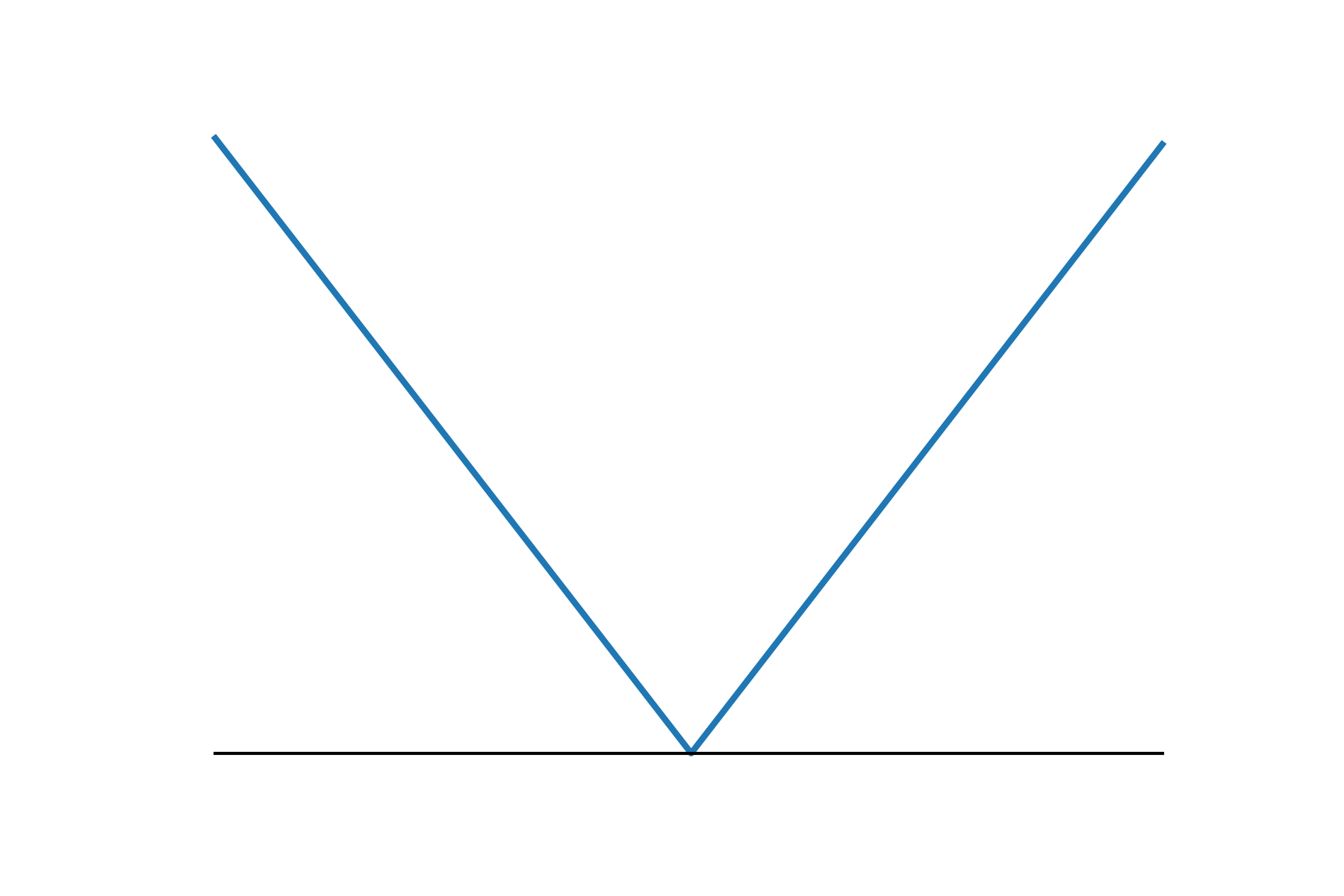

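- Consider minimizing a convex function $f$.

- The iteration complexity (iterations needed to reach $f(x_k) - f^\star \le \epsilon$) of gradient descent (GD) and subgradient descent (subGD) for smooth and nonsmooth objectives:

- when $f$ is smooth: GD needs $O(1/\epsilon)$ iterations

- when $f$ is nonsmooth (and Lipschitz): subGD needs $O(1/\epsilon^2)$ iterations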

Figure panels: smooth, nonsmooth.

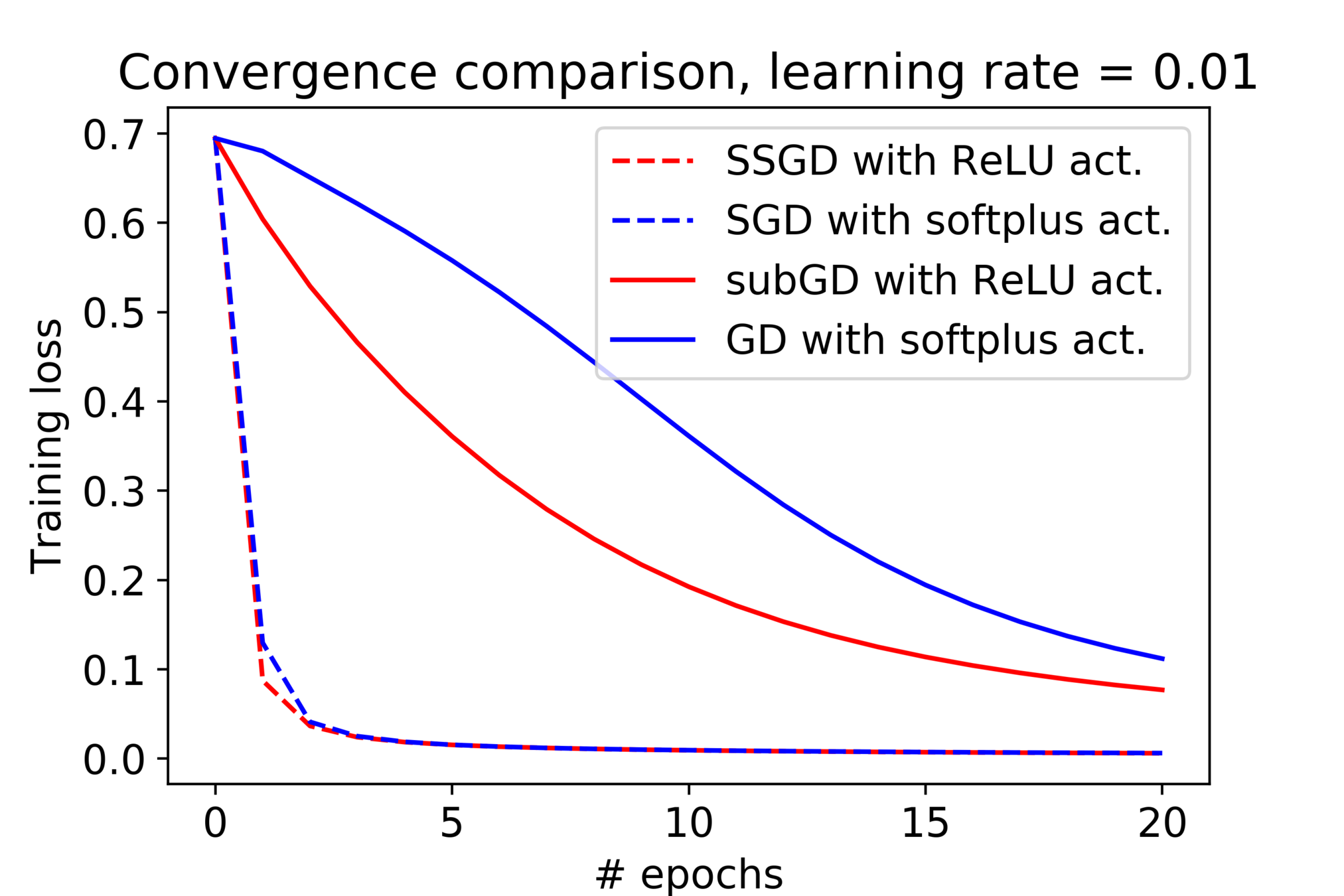
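The two complexity bounds correspond to the classical step-size choices sketched below (our illustration, hypothetical function names): GD with step $1/L$ on an $L$-smooth function, and the subgradient method with diminishing steps $\alpha_k = 1/\sqrt{k+1}$ on a nonsmooth function. Because a subgradient step need not decrease $f$, the sketch tracks the best value seen.

```python
import numpy as np


def gradient_descent(grad, x0, L, num_iters):
    """GD with the constant step 1/L for an L-smooth objective."""
    x = x0
    for _ in range(num_iters):
        x = x - grad(x) / L
    return x


def subgradient_method(f, subgrad, x0, num_iters):
    """Subgradient method with alpha_k = 1/sqrt(k+1); returns the best objective value seen."""
    x, best = x0, f(x0)
    for k in range(num_iters):
        x = x - subgrad(x) / np.sqrt(k + 1)
        best = min(best, f(x))   # f(x_k) is not monotone, so keep the best iterate's value
    return best
```

On $f(x) = |x - 1|$ from $x_0 = 0$, the best value after $K$ steps is at most $(1 + \sum_k \alpha_k^2) / (2\sum_k \alpha_k)$, which decays only like $\log K / \sqrt{K}$, whereas GD on the smooth quadratic in the test satisfies $f(x_K) - f^\star \le L\|x_0 - x^\star\|^2 / (2K)$.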

The empirical observation

Some discrepancies between theory and practice:

- Nonsmoothness in the model does not slow down training in practice.

- The learning-rate schedule that yields the optimal iteration complexity in theory is seldom used in practice.

Filling the gap

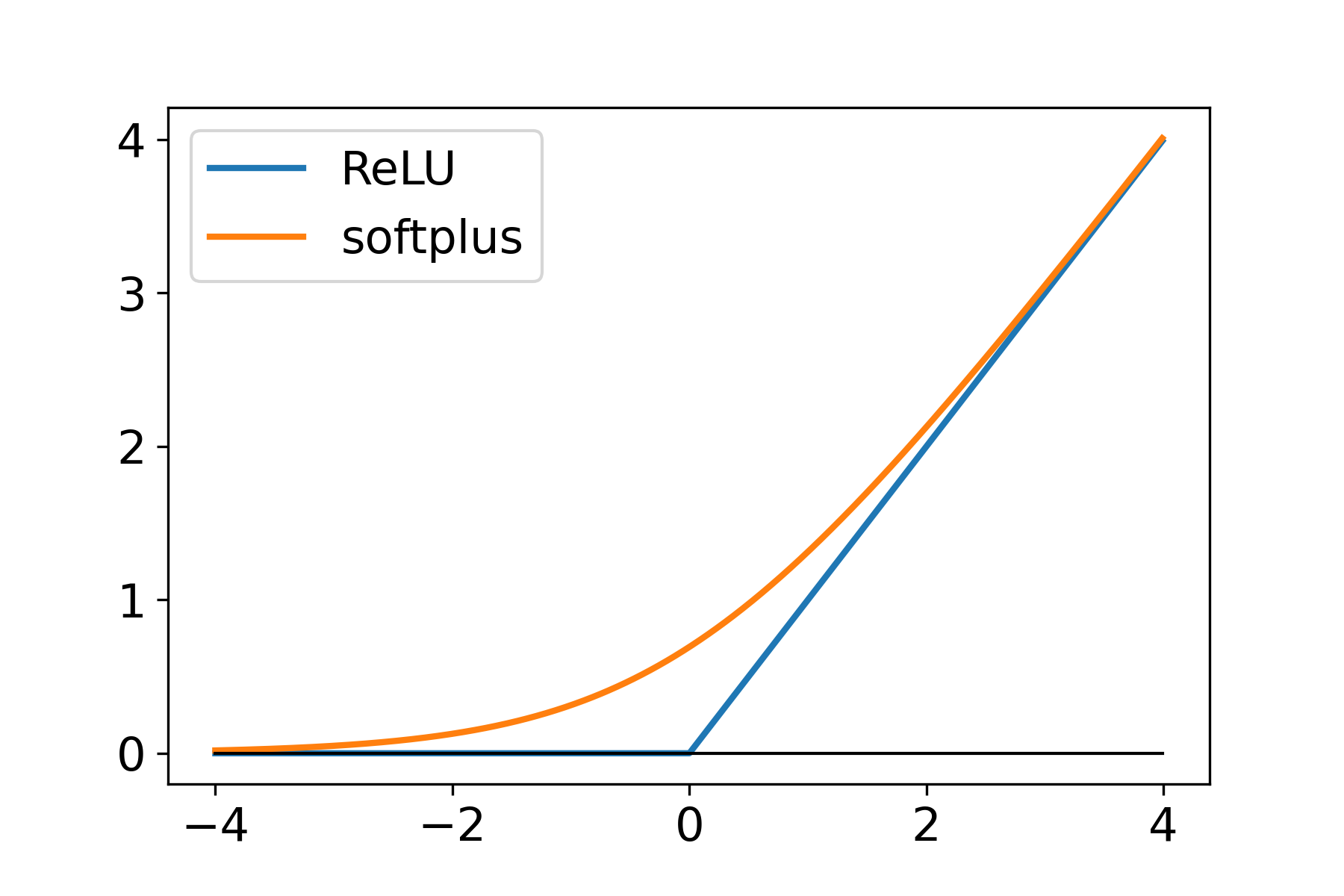

Our assumption:

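- The objective has the structure $f(x) = \frac{1}{n} \sum_{i=1}^{n} \ell(h_i(x))$,

where $\ell$ is a nonnegative, convex, 1-smooth loss function and the $h_i$'s are Lipschitz continuous.

- The interpolation condition: there exists $x^\star$ such that $\ell(h_i(x^\star)) = \min_z \ell(z)$ for all $i$, i.e., $x^\star$ minimizes every component simultaneously.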

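We prove the following iteration complexities for the stochastic subgradient method:

[FFF, ICLR'21]

- Convex objective: $O(1/\epsilon)$

- Strongly convex objective: $O(\log(1/\epsilon))$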

Optimal

- The above rates match the rates of SGD for smooth objectives that satisfy the interpolation condition.
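A toy instance of this setting, constructed by us for illustration: $\ell(z) = z^2/2$ (nonnegative, convex, 1-smooth, minimum at 0) and $h_i(x) = \|M_i x - m_i\|_1$ (Lipschitz, nonsmooth), so each component $\ell(h_i(x))$ is convex and nonsmooth. Choosing $m_i = M_i x^\star$ makes the interpolation condition hold. The sketch runs the stochastic subgradient method with a constant step size $\alpha = 1/(2G^2)$, where $G$ bounds the Lipschitz constants of the $h_i$; this step size and the function names are our assumptions, not the choices analyzed in [FFF, ICLR'21].

```python
import numpy as np


def make_problem(n=20, k=3, d=5, seed=0):
    """f(x) = (1/n) sum_i 0.5 * ||M_i x - m_i||_1^2 with m_i = M_i x_star (interpolation holds)."""
    rng = np.random.default_rng(seed)
    M = rng.standard_normal((n, k, d))
    x_star = rng.standard_normal(d)
    m = M @ x_star                      # shape (n, k): every component vanishes at x_star
    return M, m, x_star


def stochastic_subgradient(M, m, x0, num_iters, seed=1):
    rng = np.random.default_rng(seed)
    # ||M_i^T s||_2 <= sum of the row norms of M_i for any s in [-1, 1]^k.
    G = np.max(np.sum(np.linalg.norm(M, axis=2), axis=1))
    alpha = 1.0 / (2.0 * G**2)          # constant step size, no decay schedule
    x = x0.copy()
    xs, sampled_losses = [x.copy()], []
    for _ in range(num_iters):
        i = rng.integers(len(M))
        r = M[i] @ x - m[i]
        h = np.sum(np.abs(r))           # h_i(x)
        sampled_losses.append(0.5 * h**2)
        g = h * (M[i].T @ np.sign(r))   # l'(h_i(x)) times a subgradient of h_i at x
        x = x - alpha * g
        xs.append(x.copy())
    return np.array(xs), np.array(sampled_losses), alpha
```

Because $\ell'(0) = 0$, the subgradients shrink as the iterates approach $x^\star$, and one can check that every step satisfies $\|x_{k+1} - x^\star\|^2 \le \|x_k - x^\star\|^2 - \alpha\,\ell(h_{i_k}(x_k))$. The distances to $x^\star$ are therefore nonincreasing without any step-size decay.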

Acknowledgment

Advisor

Committee Members

Collaborators

University Examiners

External Reviewer

Thank you! Questions?

Backup slides

Backup slides for CD

GCD for Sparse Optimization

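For $F(x) = f(x) + \sum_i g_i(x_i)$, where $\nabla f$ is coordinate-wise $L$-Lipschitz:

The GS-s rule: $i_k = \arg\max_i \min_{s \in \partial g_i(x_i^k)} |\nabla_i f(x^k) + s|$

The GS-r rule: $i_k = \arg\max_i \left| x_i^k - \operatorname{prox}_{g_i / L}\left(x_i^k - \tfrac{1}{L} \nabla_i f(x^k)\right) \right|$

The GS-q rule: $i_k = \arg\min_i \min_{d \in \mathbb{R}} \left\{ \nabla_i f(x^k)\, d + \tfrac{L}{2} d^2 + g_i(x_i^k + d) - g_i(x_i^k) \right\}$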
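For the lasso regularizer $g_i(x_i) = \lambda |x_i|$ the three rules have closed forms. Below is a sketch under that assumption, with a single constant $L$ for all coordinates and a hypothetical function name. At $x = 0$ all three scores are increasing functions of $\max(|\nabla_i f(x)| - \lambda, 0)$, so the rules pick the same coordinate there; they can differ once the iterate has nonzero entries.

```python
import numpy as np


def soft_threshold(z, t):
    """Prox of t * |.| applied entrywise."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def gs_rules_lasso(x, grad, lam, L):
    """Coordinates chosen by GS-s, GS-r and GS-q for g_i(x_i) = lam * |x_i|.

    x: current iterate; grad: gradient of the smooth part f at x;
    L: a common coordinate-wise Lipschitz constant of grad f (assumed).
    """
    # GS-s: smallest |grad_i f + s| over s in lam * (subdifferential of |x_i|)
    s_score = np.where(x != 0,
                       np.abs(grad + lam * np.sign(x)),
                       np.maximum(np.abs(grad) - lam, 0.0))
    # GS-r: length of the coordinate-wise proximal-gradient step
    d = soft_threshold(x - grad / L, lam / L) - x
    r_score = np.abs(d)
    # GS-q: model value at its minimizer d; it is <= 0 (d = 0 gives 0),
    # so argmin of the value equals argmax of its magnitude
    q_val = grad * d + 0.5 * L * d**2 + lam * (np.abs(x + d) - np.abs(x))
    q_score = np.abs(q_val)
    return (int(np.argmax(s_score)), int(np.argmax(r_score)),
            int(np.argmax(q_score)))
```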

Sparsity measure

where .

A key property:

The definition of

Norm inequality:

Sparsity measure

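Matrix AM-GM inequality

Conjecture: for all PSD matrices $A_1, \dots, A_n$ and all $k \le n$,

$\left\| \frac{(n-k)!}{n!} \sum_{j_1, \dots, j_k \text{ distinct}} A_{j_1} \cdots A_{j_k} \right\| \le \left\| \frac{1}{n^k} \sum_{j_1, \dots, j_k = 1}^{n} A_{j_1} \cdots A_{j_k} \right\|$

The matrix AM-GM inequality conjecture is false [LL20, S20].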

Atom identification

where $\sigma_{\mathcal{A}}(z) = \sup_{a \in \mathcal{A}} \langle a, z \rangle$ is the support function.

The primal-dual relationship:

This allows us to screen atoms based on the dual variable:
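For the sparse-vector and low-rank-matrix examples, the support function has a closed form: $\sigma_{\mathcal{A}}(z) = \|z\|_\infty$ for $\mathcal{A} = \{\pm e_i\}$, and the spectral norm for unit rank-one atoms $uv^\top$. In the standard gauge-regularized setting (our assumption here), an atom can have a nonzero coefficient at the solution only if it attains the maximum in $\sigma_{\mathcal{A}}(z)$ at the optimal dual variable $z$, which is what dual-based screening exploits. The sketch computes these quantities; the names and the tolerance `tol` are hypothetical.

```python
import numpy as np


def support_l1_atoms(z):
    """Support function of A = {+-e_i}: max_i |z_i| = ||z||_inf."""
    return np.max(np.abs(z))


def exposed_l1_atoms(z, tol=1e-8):
    """Indices i whose atom (sign(z_i) * e_i) attains sigma_A(z), up to tol."""
    return np.flatnonzero(np.abs(z) >= support_l1_atoms(z) - tol)


def support_rank_one_atoms(Z):
    """Support function of A = {u v^T : ||u|| = ||v|| = 1}: the largest singular value of Z."""
    return np.linalg.svd(Z, compute_uv=False)[0]
```

In the low-rank case the exposed atoms are built from the top singular vector pairs of $Z$, so the number of singular values tied with the largest one bounds the rank that survives screening.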

Backup slides for OMD

Basics

Bregman divergence: $D_\Phi(x, y) = \Phi(x) - \Phi(y) - \langle \nabla \Phi(y), x - y \rangle$

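Properties of a mirror map $\Phi : \mathcal{D} \to \mathbb{R}$ for a feasible set $\mathcal{X}$

- $\mathcal{X} \cap \mathcal{D}$ is nonempty,

- $\Phi$ is differentiable on $\mathcal{D}$, and

- $\lim_{x \to \partial\mathcal{D}} \|\nabla \Phi(x)\| = +\infty$, where $\partial\mathcal{D}$ is the boundary of $\mathcal{D}$.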

Examples:
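Two standard mirror maps (our choice of examples, sketched with hypothetical function names) and their Bregman divergences: the squared Euclidean norm, whose divergence is $\tfrac12\|x - y\|^2$, and the negative entropy on the positive orthant, whose divergence reduces to the KL divergence on the simplex and whose gradient $1 + \log x$ blows up at the boundary, as the last property requires.

```python
import numpy as np


def bregman(phi, grad_phi, x, y):
    """D_phi(x, y) = phi(x) - phi(y) - <grad phi(y), x - y>."""
    return phi(x) - phi(y) - np.dot(grad_phi(y), x - y)


def sq_norm(x):
    """Squared Euclidean mirror map on R^n."""
    return 0.5 * np.dot(x, x)


def sq_norm_grad(x):
    return x


def neg_entropy(x):
    """Negative entropy on the positive orthant."""
    return np.sum(x * np.log(x))


def neg_entropy_grad(x):
    return 1.0 + np.log(x)   # |.| -> infinity as any x_i -> 0
```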

Backup slides for SGD

Basics

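| | smooth | nonsmooth | smooth + IC | nonsmooth + IC |
|---|---|---|---|---|
| convex | $O(1/\epsilon^2)$ | $O(1/\epsilon^2)$ | $O(1/\epsilon)$ | $O(1/\epsilon)$ |
| strongly convex | $O(1/\epsilon)$ | $O(1/\epsilon)$ | $O(\log(1/\epsilon))$ | $O(\log(1/\epsilon))$ |

IC: interpolation condition.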

Semi-smooth properties

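Assume $f(x) = \ell(h(x))$, where $h$ is $G$-Lipschitz continuous and $\ell$ is a nonnegative, convex, 1-smooth, one-dimensional function with minimum at 0. Then every $g = \ell'(h(x))\, v$ with $v \in \partial h(x)$ satisfies

$\|g\|^2 \le 2G^2 f(x)$ (the generalized growth condition)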
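The one-dimensional fact behind this kind of bound is $\ell'(z)^2 \le 2\ell(z)$ for a nonnegative, convex, 1-smooth $\ell$ with minimum at 0; it follows from $\ell'(z)^2 \le 2(\ell(z) - \min \ell)$ for convex 1-smooth functions. Below is a small numerical check on a grid, using the Huber loss with threshold 1 and the squared loss $z^2/2$ as our examples (function names are hypothetical). The 2-smooth loss $z^2$ fails the check, which shows why the smoothness constant matters.

```python
import numpy as np


def huber(z, delta=1.0):
    """Huber loss: nonnegative, convex, (1/delta)-smooth, minimum 0 at z = 0."""
    return np.where(np.abs(z) <= delta, 0.5 * z**2, delta * (np.abs(z) - 0.5 * delta))


def huber_deriv(z, delta=1.0):
    return np.clip(z, -delta, delta)


def check_bound(loss, deriv, z):
    """True if loss'(z)^2 <= 2 * loss(z) at every grid point (small float tolerance)."""
    return bool(np.all(deriv(z) ** 2 <= 2.0 * loss(z) + 1e-12))
```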

Extension

High probability error bound

Conjecture: variance-reduced SGD (SAG, SVRG, SAGA) + generalized Freedman inequality

Simple proof of exponential tail bound

thesis

By Fang Huang


Slides for my oral defense
