KURSUS BDA

Abdullah Fathi

(MINDEF)

History of Big Data Analytics

History of Big Data Analytic

- The advent of Big Data Analytic was in response to the rise of Big Data, which began in 1990s.

- Before the term "Big Data" was coined, the concept was applied at the beginning of computer age when business use large spreadsheet to analyze numbers and look for trend

- Late 1990s and early 2000s was fueled by new sources of data (search engine and mobile devices)

- The faster the data created, the more that it need to be handled

- Whoever could tame the massive amounts of raw, unstructured information would open a treasure chest of insights about consumer behavior, business operations, natural phenomena and population changes never seen before.

History of Big Data Analytic

- Traditional data warehouses and relational databases could not handle the task

- In 2006, Hadoop was created by engineers at Yahoo and launched as an Apache open source project

- The distributed processing framework made it possible to run big data applications on a clustered platform

- At first, only large companies like Google and Facebook took advantage of big data analysis

- By the 2010s, retailers, banks, manufacturers and healthcare companies began to see the value of also being big data analytics companies

What is Big Data Analytic?

Set of techniques/processes used to discover relationships, recognize patterns, predict trends, and find associations in your data

Benefit & Advantages of BDA

- Risk Management: Banco de Oro, a Phillippine banking company, uses Big Data analytics to identify fraudulent activities and discrepancies.

- Product Development and Innovations: Rolls-Royce, uses Big Data analytics to analyze how efficient the engine designs are and if there is any need for improvements

- Quicker and Better Decision Making Within Organizations: Starbucks uses Big Data analytics to make strategic decisions.

- Improve Customer Experience: Delta Air Lines uses Big Data analysis to improve customer experiences. They monitor tweets to find out their customers’ experience regarding their journeys, delays, and so on

Example

Data Analytic

VS

Data Science

What's the difference?

Both data analytics and data science work depend on data

is an umbrella term for a more comprehensive set of fields that are focused on mining big data sets and

discovering innovative new insights, trends, methods, and processes.

Data Science

is a discipline based on gaining actionable insights to assist in a business's professional growth in an immediate sense.

It is part of a wider mission and could be considered a branch of data science.

Data Analytic

Data Science: Sources broader insights centered on the questions that need asking and subsequently answering

Data Analytics: Process dedicated to providing solutions to problems, issues, or roadblocks that are already present

Summary

But...

Data Science & Data Analytics are incredibly connected which both are delivering the same goals:

growth and improvement

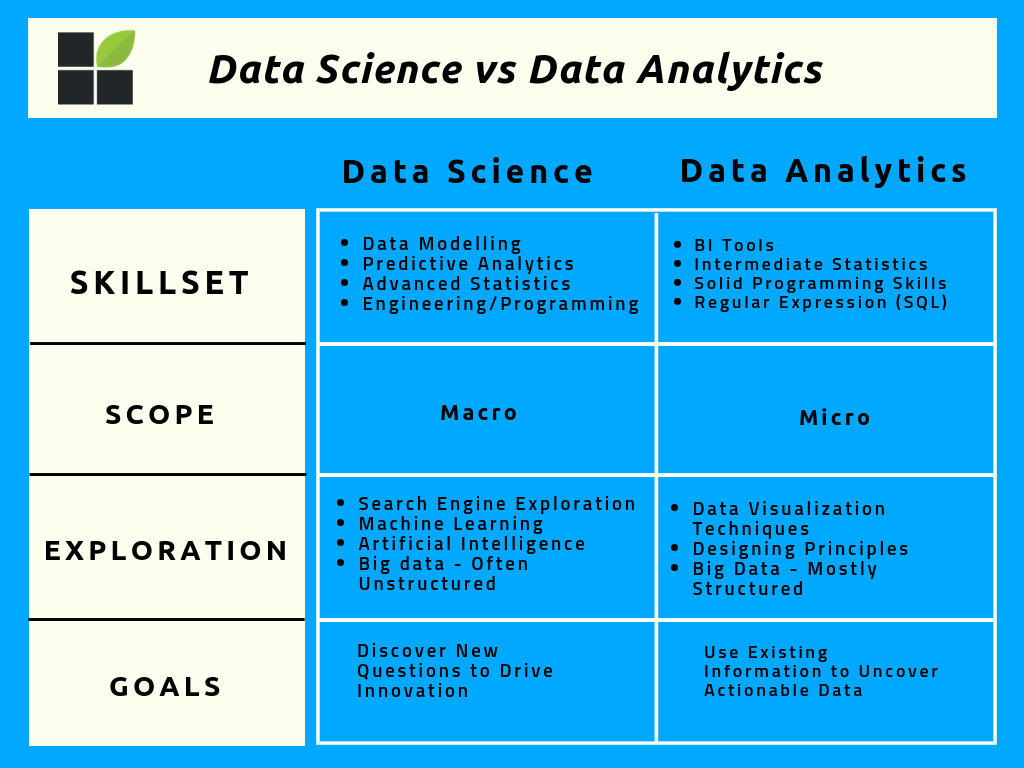

The Core Differences

Skillset

Data Science

- a comprehensive understanding of SQL database and coding.

- Working with large sets of unstructured metrics, and insights.

- The data scientist needs more "complex" skills in data modelling, predictive analytics, programming, data acquisition, and advanced statistics

- Machine learning and engineering or programming skills which enable them to manipulate data to their own will

Data Analytics

- Solid understanding of mathematics and statistical skills as well as programming skills.

- Knowledge of data visualisation tools (BI tools)

Scope (Big or Small)

Data Science

- Macro field that is multidisciplinary, covering a wider field of data exploration, working with enormous sets of structured and unstructured data

Data Analytics

- Micro field, drilling down into specific elements of business operations with a view to documenting departmental trends and streamlining processes either over specific time periods or in real time

- Concentrating mostly on structure data

Exploration

Data Science

- Data Science is used in major fields of corporate analytics, search engine engineering, and autonomous fields such as artificial intelligence (AI) and machine learning (ML)

Data Analytics

- Data analytics is a concept that continues to expand and evolve, but this particular field of digital information expertise or technology is often used within the healthcare, retail, gaming, and travel industries for immediate responses to challenges and business goals

Goals

Data Science

- Use the wealth of available digital metrics and insights to discover the questions that we need to ask to drive innovation, growth, progress, and evolution

Data Analytics

- Use existing information to uncover patterns and visualize insights in specific areas

Both Data Science and Data Analytics can be used to enhance your business’s efficiency, vision, and intelligence

Use Data Science to uncover new insight and Data Analytics for current insight to ensure the sustainable progress of your business

ROLES, SKILL-SETS & RESPONSIBILITY

Who is Data Analyst, Data Engineer and Data Scientist?

| Data Analyst | Data Engineer | Data Scientist |

|---|---|---|

| Data Analyst analyes numeric data and use it to help agencies/organisation/company make better decision | Data Engineer involves in preparing data. They develop, constructs, tests & maintain complete architecture | Data Scientist analyses and interpret complex data. They are data wranglers who organize big data |

Data Analyst

- Most entry-level professionals interested in getting into a data-related

- Good statistical knowledge

- Strong technical skills would be a plus

- Understand data handling, modeling and reporting techniques

- Understanding of the business

Data Engineer

- Master’s degree in a data-related field

- Good amount of experience as a Data Analyst

- Strong technical background with the ability to create and integrate APIs

- Understand data pipelining and performance optimization

Data Scientist

- Analyses and interpret complex digital data

- Acquiring enough experience and learning the various data scientist skills

- These skills include advanced statistical analyses, a complete understanding of machine learning, data conditioning etc

Skill-Sets

| Data Analyst | Data Engineer | Data Scientist |

|---|---|---|

| Data Warehousing | Data Warehousing & ETL | Statistical & Analytical Skills |

| Adobe & Google Analytic | Advanced Programming Knowledge | Data Mining |

| Programming Knowledge | Hadoop-based Analytics | ML & Deep Learning Principles |

| Scripting & Statistical Skills | In-dept knowledge of SQL/database | In-depth programming knowledge (SAS/R/Python coding) |

| Reporting & Data visualization | Data architecture & pipelining | Hadoop-based analytics |

| SQL/ database knowledge | ML concept knowledge | Data Optimization |

| Spread-Sheet knowledge | Scripting, reporting & data visualization | Decision making & soft skills |

Data Analyst's primary skill set revolves around data acquisition, handling, and processing

Data Engineer requires an intermediate level understanding of programming to build thorough algorithms along with master statistics and math

Data Scientist needs to master Data Analyst & Data Engineering. Data, stats and math along with in-depth programming knowledge for ML and Deep earning

ROLES AND RESPONSIBILITIES

Roles and responsibilities for data analyst, data engineer and data scientist are quite similar.

| Data Analyst | Data Engineer | Data Scientist |

|---|---|---|

| Pro-processing and data gathering | Develop, test & maintain architectures | Responsible for developing Operational Models |

| Emphasis on representing data via reporting and visualization | Understand programming and its complexity | Carry out data analytics and optimization using ML & Deep Learning |

| Responsible for statitical analysis & data interpretation | Deploy ML & statistical models | Involved in strategic planning for data analytics |

| Ensures data acquisition & aintenance | Building pipelines for various ETL operations | Integrate data & perform ad-hoc analysis |

| Optimize Statistical Efficiency & Quality | Ensures data accuracy and flexibility | Fill in the gap between the stakeholders and customer |

Data scientist and data engineers roles are quite similar, but a data scientist is the one who has the upper hand on all the data related activities

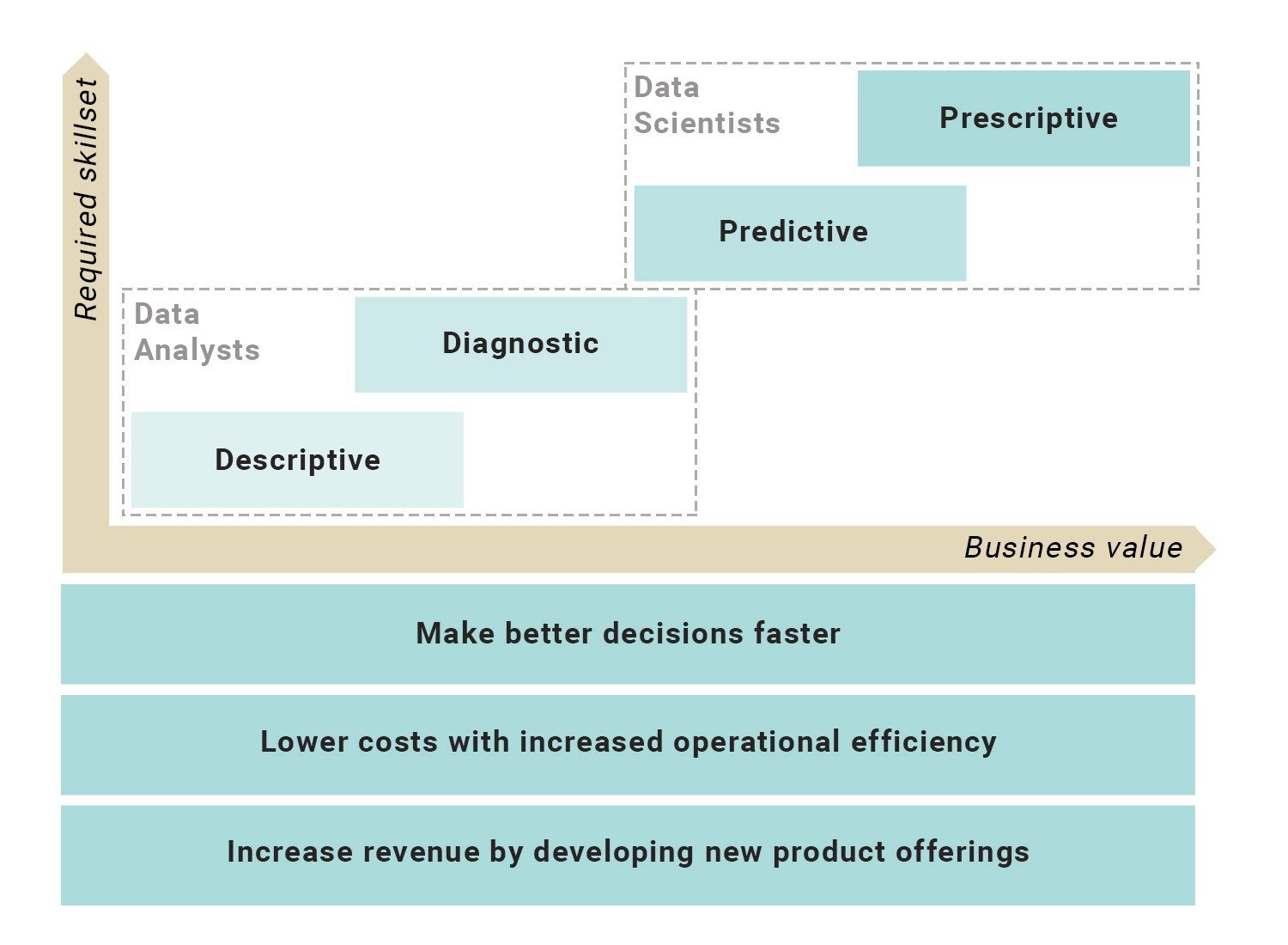

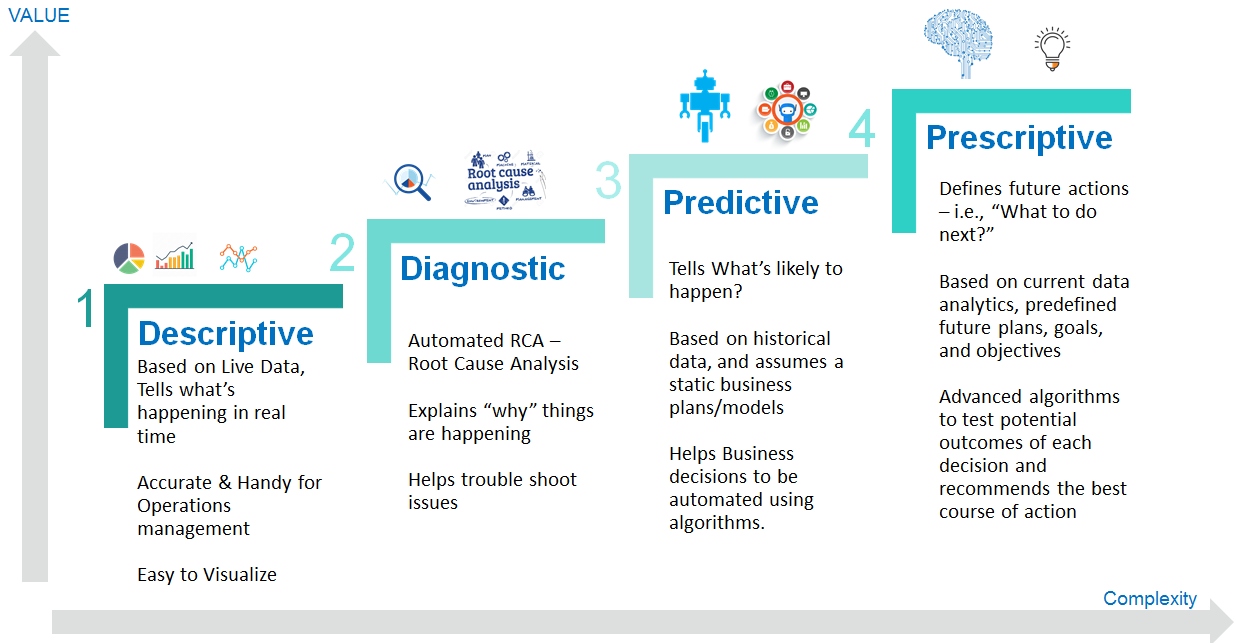

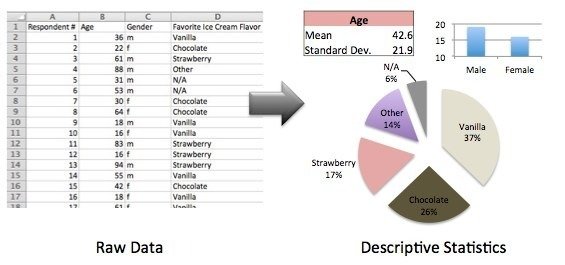

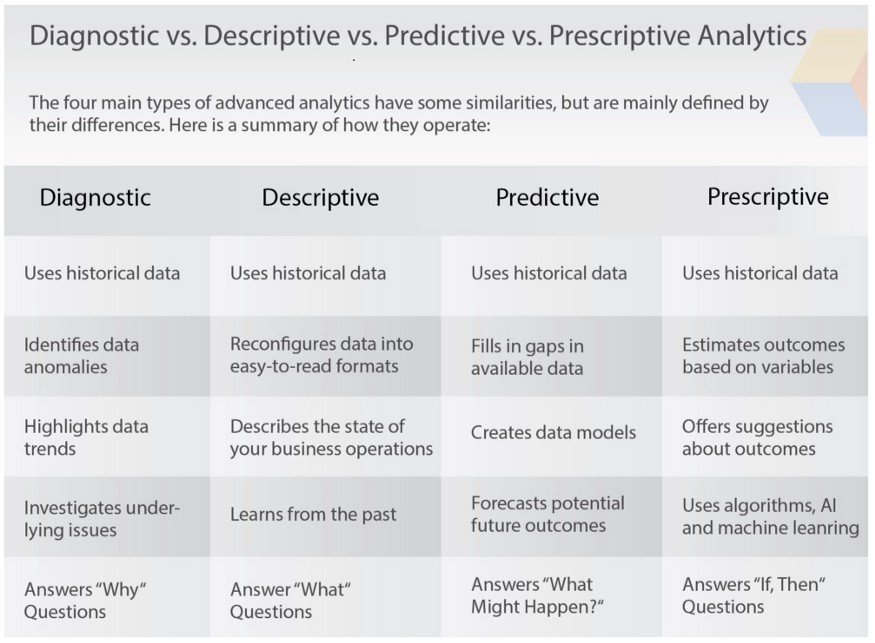

4 Major Types of Analytic

Descriptive

Analytic

Explains What Happened

-

Descriptive analytics juggles raw data from multiple data sources to give valuable insights into the past

-

Describing, summarizing, and identifying patterns through calculations of existing data, like mean, median, mode, percentage, frequency, and range

-

The baseline from which other data analysis begins.

-

It is only concerned with statistical analysis and absolute numbers, it can’t provide the reason or motivation for why and how those numbers developed

- Statistics

- Distribution

Some example of analyses used at this stage:

Statistic

- Provide valuable information

- The most important statistical parameters are the minimum, the maximum, the mean, and the standard deviation

| Minumum | Maximum | Mean | Standard Deviation | |

|---|---|---|---|---|

| Total Amount (RM) | 12 | 500 | 51 | 56 |

As we can see, each customer has spent an average of RM51, but some people have spent up to RM500

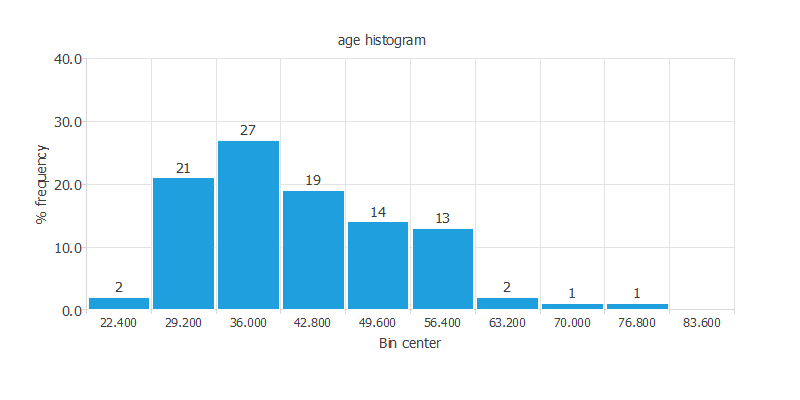

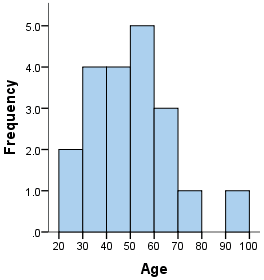

Distributions

- show how the data is arranged over its entire range

- Histograms show how continuous variables are distributed

- A normal (gaussian) or uniform distribution is, in general, desirable

Distributions

As we can see, most of the customers are between

30 and 40 years old

Diagnostic

Analytic

Explains Why Did Something Happened

-

Like descriptive analytics, diagnostic analytics also focus on the past

-

Look for cause and effect to illustrate why something happened

-

The objective is to compare past occurrences to determine causes

-

Provided in the context of probability, likelihood, or a distributed outcome.

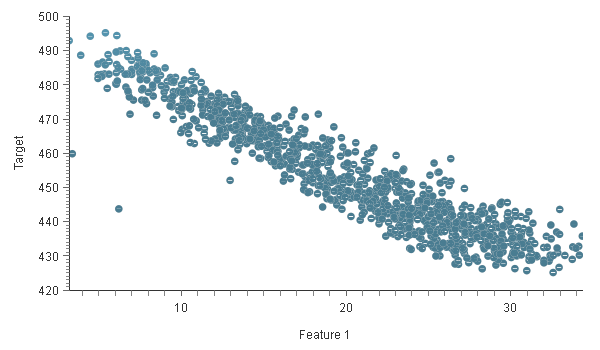

What is Diagnostic Analytics?

- Scatter Charts

- Correlation

In this stage, usually we focus on the following techniques:

Scatter Charts

Scatter charts might help to discover dependencies between the output variables and the input variables

Scatter Charts

The chart above shows that as the value of Feature 1 increases, the value of Target decreases

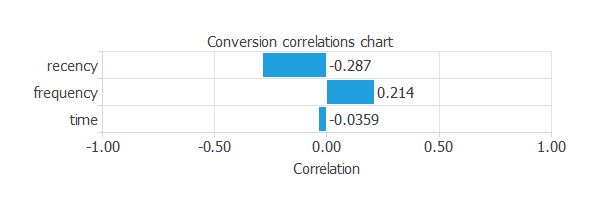

Correlations

- Helpful technique to discover dependencies between input and output variables

- A correlation is a numerical value between 0 and 1 that expresses the strength of the relationship between two variables.

The maximum correlation (-0.287) is a yield between the recency and the conversion. Such a high correlation indicates that we have to study this variable more thoroughly

Correlations

Predictive

Analytic

What is Likely To Happen

-

Uses the findings of descriptive and diagnostic analytics to detect clusters and exceptions and to predict future trends

-

Based on Machine Learning or Deep Learning

-

The more data points you have, the more accurate the prediction is likely to be

What is Predictive Analytics?

No analytics will be able to tell you exactly what WILL happen in the future.

Predictive analytics put in perspective what MIGHT happen, providing respective probabilities of likelihoods

given the variables that are being looked at

- K-Nearest Neighbour (KNN)

- Decision Trees

- Neural Networks

In this stage, It encompasses various machine learning techniques such as:

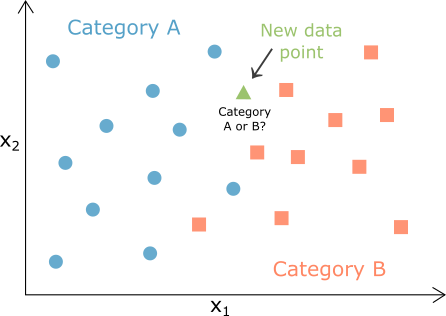

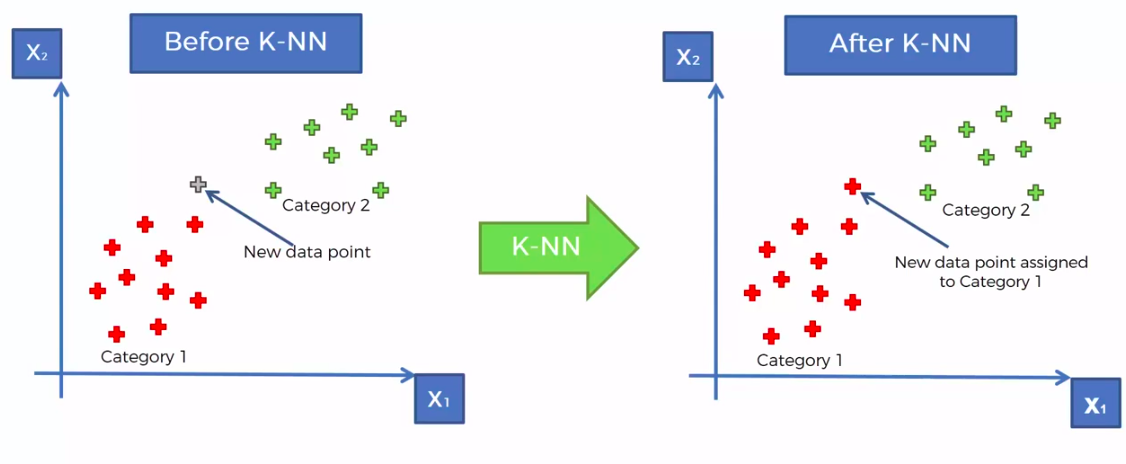

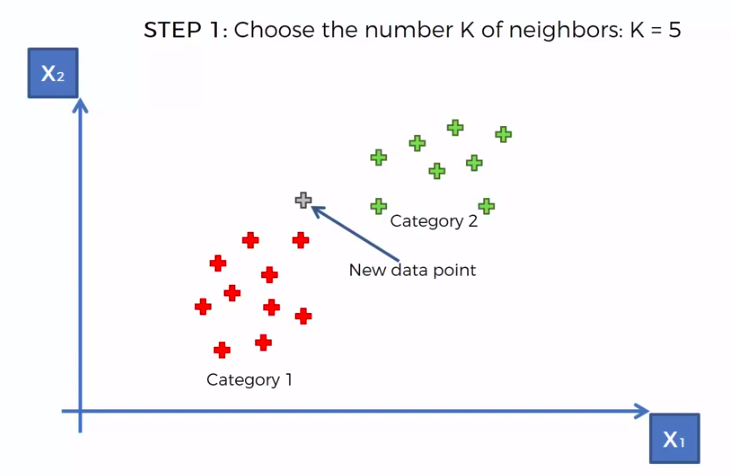

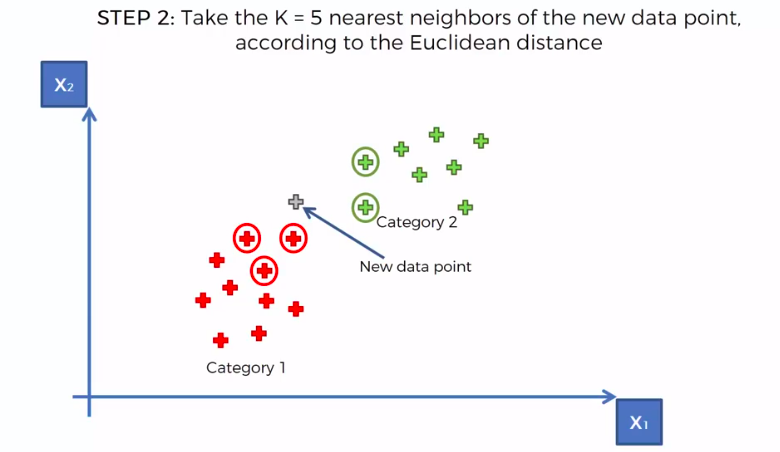

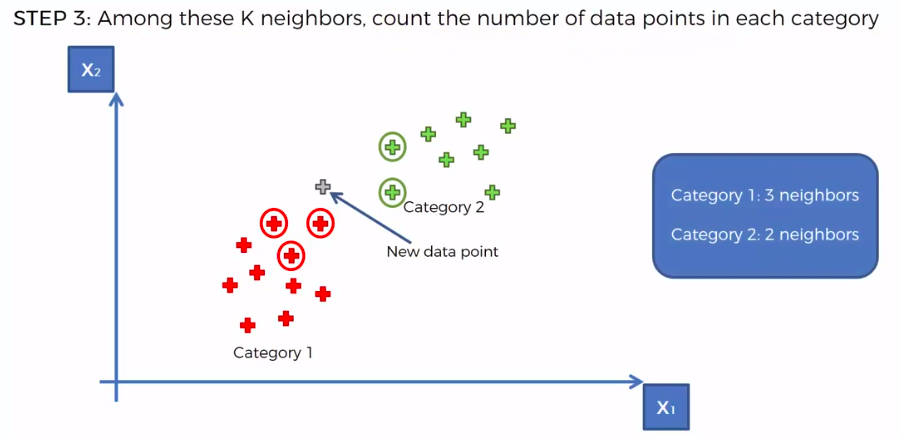

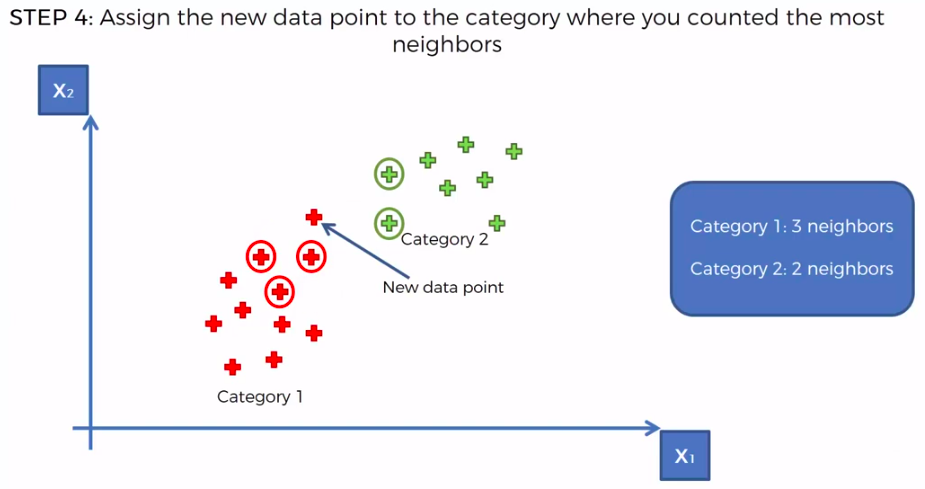

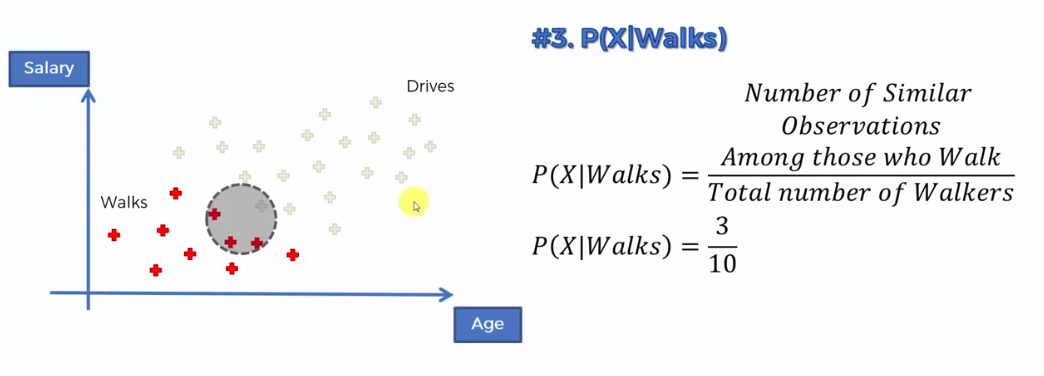

K – Nearest neighbors

K-nearest neighbors is a very simple method used for classification and approximation

K – Nearest neighbors

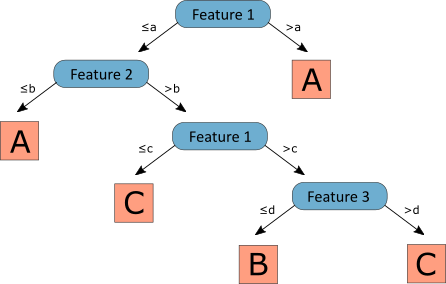

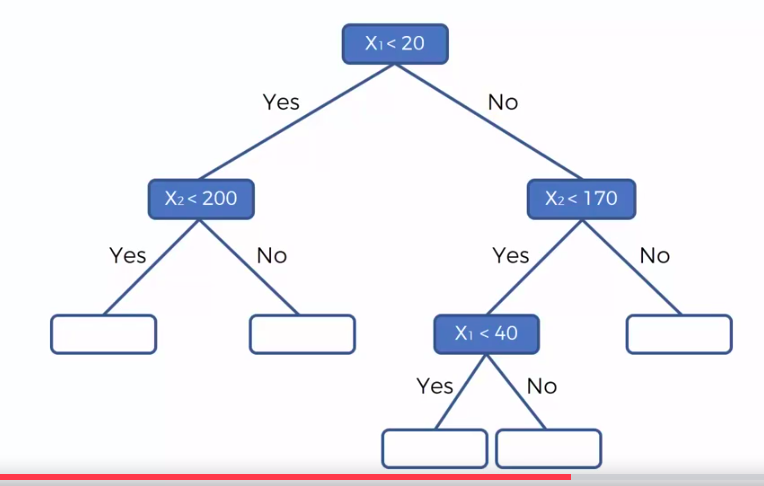

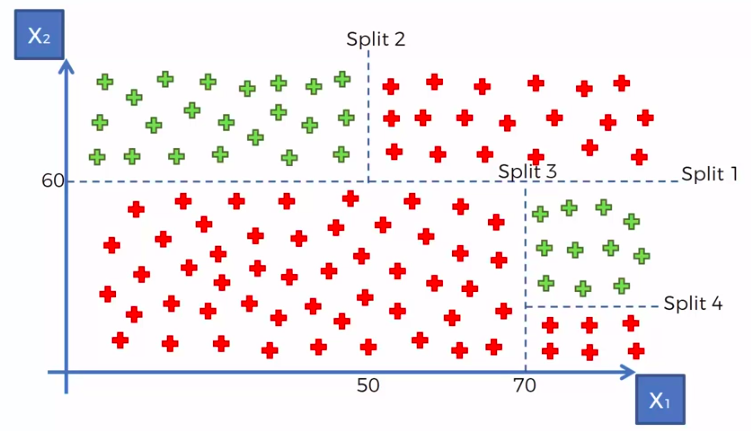

Decision Trees

- Decision trees are also a simple method used for classification and approximation.

- A decision tree is a mathematical model helping you to choose between several courses of action. It estimates probabilities to calculate likely outcomes

Decision Trees

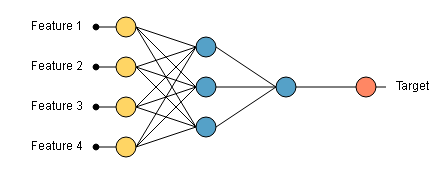

Neural Network

- One of the most powerful machine learning methods

- Used in classification and approximation tasks

- Neural networks are computational models based on the neural structure of the brain

Neural Network

The outputs from the neural network depend on the inputs fed to it and the different parameters within the neural network

The graph above shows a neural network with four inputs (feature 1, 2, 3, and 4). When we introduce the values of the four features in the neural network, we get an output

Prescriptive

Analytic

What Action Should be Taken

-

Combines all of your data and analytics, then outputs a model prescription: What action to take

-

Analyze multiple scenarios, predict the outcome of each, and decide which is the

best course of action based on the findings -

Prescribe what action to take to eliminate a

future problem or take full advantage of a promising trend -

Uses Machine Learning, Algorithms, Artificial Intelligence (AI)

What is Prescriptive Analytics?

In this phase, we not only is predicted what will happen in the future using our predictive model but also is shown to the decision-maker the implications of each option

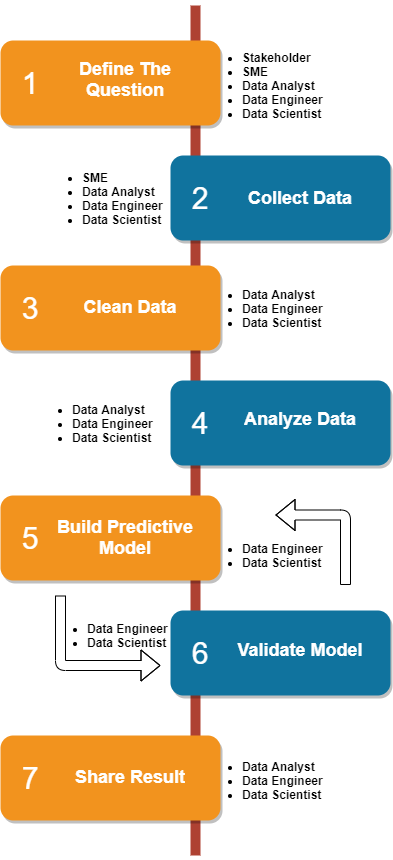

Steps to do BDA

Step 1: Defining the question

- Also known as Problem Statement

- Coming up with hypothesis and figuring how to test it

- Data analyst's job is to understand the business and its goals in enough depth that they can frame the problem the right way

- After you've defined a problem, determine which sources of data will best help you solve it

Step 2: Collecting the Data

- Create a strategy for collecting and aggregating the appropriate data.

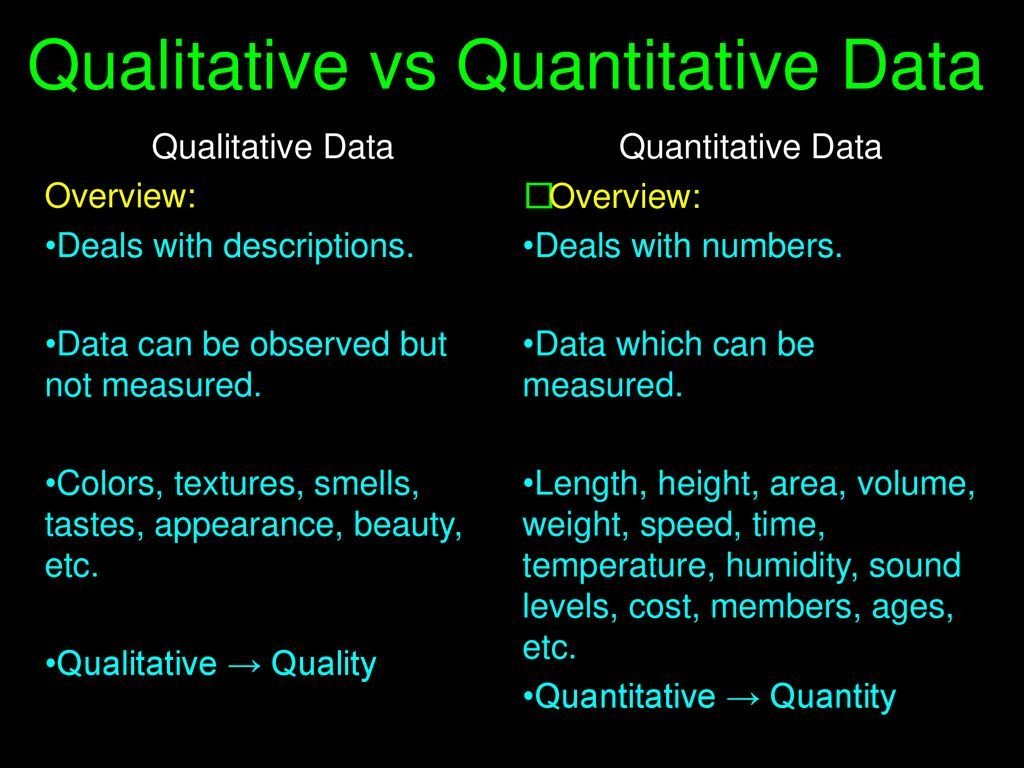

- Determine which data you need:

- Quantitative (numeric) data

- Qualitative (descriptive) data

- These data need to fit in one of these categories:

- 1st party data

- 2nd party data

- 3rd party data

Step 2: Collecting the Data

1st party data

- Internal data from your system in agencies or any sort of data from your agency that you can directly access to it.

- First-party data is usually structured and organized in a clear, defined way.

- Other sources of first-party data might include customer surveys, interviews or direct observation

Step 2: Collecting the Data

2nd party data

- First-party data of other agencies or organizations

- Example: system, app, social media activity

Step 2: Collecting the Data

3rd party data

- Data that has been collected and aggregated from numerous sources by third-party organizations

- Often contains a vast amount of unstructured data points

Step 3: Cleaning the data

- Make the data ready for analysis

- Make sure you're working with high-quality data

- Data cleaning task:

- Get Rid of unwanted observations

- Fix structural errors

- Standardize your data

- Remove unwanted outliers

- Type conversion and syntax errors

- Deal with missing data

- Validate your dataset

A good data analyst will spend around 60-80% of their time cleaning the data. Focusing on the wrong data points will severly impact your analytical result

What happens if an Analysis is Performed on Raw Data?

Wrong data class prevents calculations to be performed

Missing data prevents

functions to work properly

Outliers corrupt the output and produce bias

The size of the data requires too much computation

Step 3.1: Get Rid of unwanted observations

- Remove observations (or data points) you don't want

- Remove irrelevant observations, those that don't fit the problem you're looking to solve

- Let say we are running analysis on vegetarian eating habits, we could remove any meat-related observations from our data set.

- Remove duplicate data

- Duplicate data commonly occurs when you combine multiple datasets, scrape data online, or receive it from third-party sources.

Step 3.2: Fix structural errors

- Typos and inconsistent capitalization, which often occur during manual data entry

- "merah", "Merah" may appear as separate classes (or categories)

- Look out for the use of underscores, dashes, and other punctuation

Step 3.3: Standardize your data

- Decide whether values should be all lowercase or all uppercase, and keep this consistent throughout your dataset

- Numerical data use the same unit of measurement

- kilometre/meter: combining these in one dataset will cause a problem

- date (dd/mm/yyyy or mm/dd/yyyy)

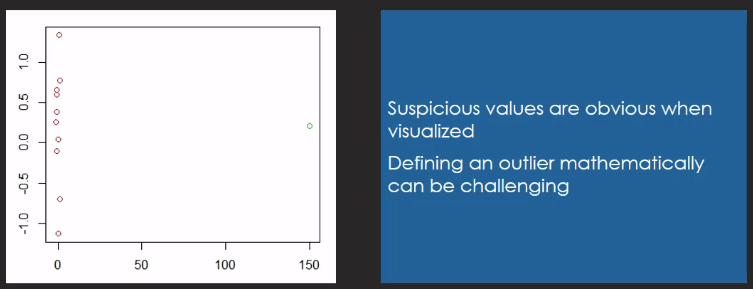

Step 3.4: Remove unwanted outliers

- Outliers are data point that dramatically differ from others in the set

- Can cause problem with certain of data models and analysis such as linear regression models

- Only remove outliers if you can prove that it is due to incorrect data entry

Deleting and Exchanging Outlier

Deleting and exchanging methods have their drawbacks with small samples

Assumption: The data point is faulty

An outlier totally out of scale might be a wrong measurement

- Not likely when measurements are automated

- Human mistakes are more likely to occur

Outliers can be valid measurements and they might reveal hidden potentials

Scatterplot with Outlier

Step 3.5: Type conversion and syntax errors

- Ensure that numbers are numerical data

- Ensure that text as text input, dates as object, and so on

- Remove syntax error/white space

Step 3.6: Deal with missing data

NA

- Missing data is common in raw data

- NA: Not Available or Not Applicable

- There could be various reasons behind NAs

- The amount of missing values matters

- Get information on the missing values from the person providing/creating the dataset

Step 3.6: Deal with missing data

- 3 common approach to handle missing data:

- Remove the entries associated to missing data: Losing other important information

- Impute (or guess) the missing data based on other similar data: Might reinforce existing pattern, which could be wrong

- Flag the data as missing (often the best one): ensure that empty field has the same value such as '0' (if numerical) or 'missing'

Carrying out an exploratory analysis

- Thing that many data analyst do (alongside cleaning data)

- Helps identify initial trends and characteristics, and can even refine your hypothesis.

Step 4: Analyze the data

3 Methods applied:

- Univariate Analysis

- Bivariate Analysis

- Multivariate Analysis

Exploratory Data Analysis (EDA): To discover relationships between measures in data and to gain insight on the trends, pattern and relationship among various entities with the help of statistic and visualisation tools

Univariate Analysis

Uni means one and variate means variable

- There is only one dependable variable

- Objective: derive the data, define and summarise it

- In dataset, it explore each variable separately

- 2 kind of variables:

- Categorical

- Numerical

Univariate Analysis: Frequency distribution Tables

Reflects how often an occurrence has taken place in the data. It gives a brief idea of the data and make it easier to find a pattern

| IQ Range | Number |

|---|---|

| 118-125 | 3 |

| 126-133 | 7 |

| 134-141 | 4 |

| 142-149 | 2 |

| 150-157 | 1 |

Example: The list of IQ scores is: 118, 139, 124, 125, 127, 128, 129, 130, 130, 133, 136, 138, 141, 142, 149, 130, 154

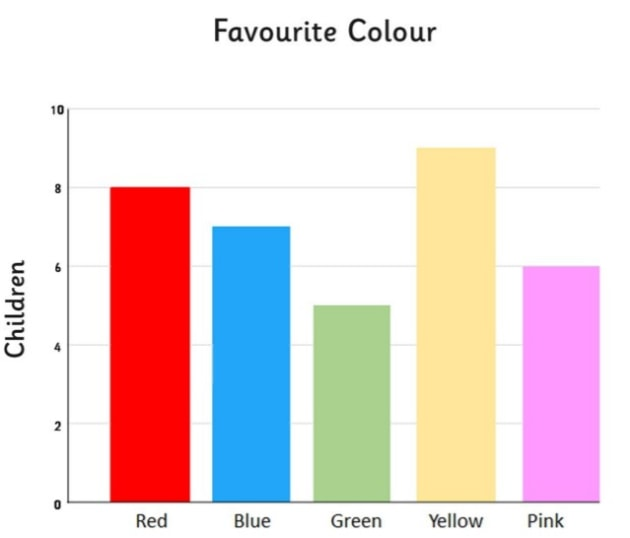

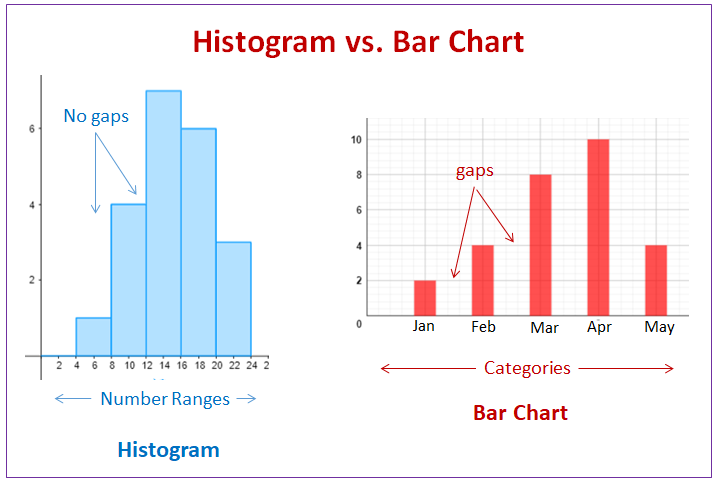

Univariate Analysis: Bar Charts

The bar graph is very convenient while comparing categories of data or different groups of data. It helps to track changes over time. Best to visualizing discrete data (variable that only store certain value)

Bar chart is a great way to display categorical variables in the x-axis. This type of graph denotes two aspects in the y-axis.

- The first one counts the number of occurrence between groups.

- The second one shows a summary statistic (min, max, average, and so on) of a variable in the y-axis

Univariate Analysis: Histogram

Similar to bar charts. Represent the group of variables with values in the y-axis

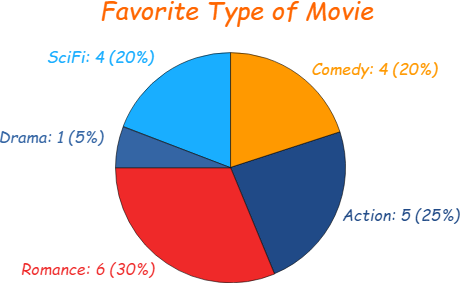

Univariate Analysis: Pie Charts

Mainly used to comprehend how a group is broken down into smaller pieces. The whole pie represents 100%.

Bivariate Analysis

Bi means two and variate means variable. Relationship between two variables

- There are 3 types of bivariate analysis:

- Two Numerical Variables

- Scatter Plot

- Linear Correlation

- Two Categorical Variables

- Chi-square test

- One Numerical and One Categorical

- z-test and t-test

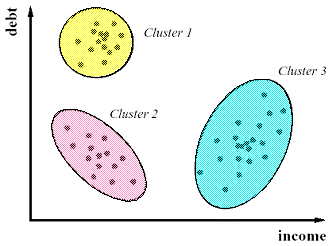

- Two Numerical Variables

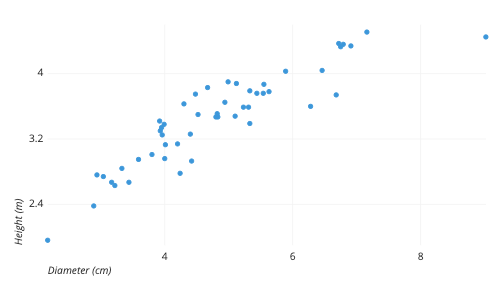

Bivariate Analysis: Scatter Plot

Represents individual pieces of data using dots. These plots make it easier to see if two variables are related to each other. The resulting pattern indicates the type (linear or non-linear) and strength of the relationship between two variables

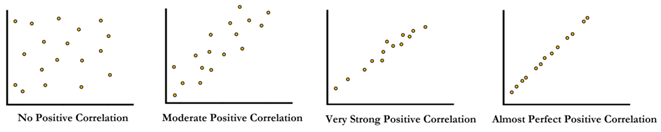

Bivariate Analysis:

Linear Correlation

Represents the strength of linear relationship between two numerical variables

Bivariate Analysis:

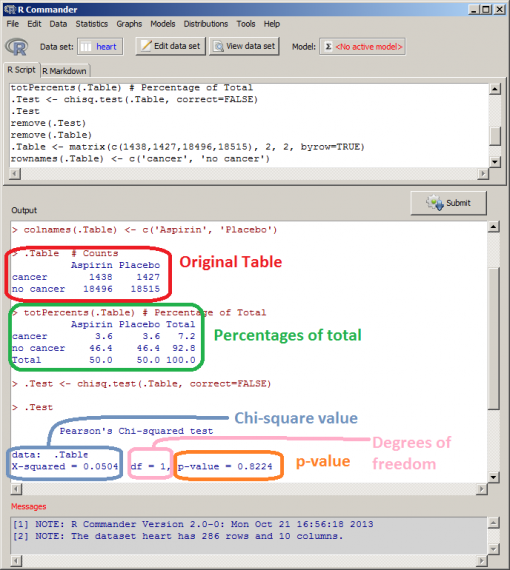

Chi-square Test

Use for determining the association between categorical variables

Bivariate Analysis:

Z-test and t-test

A t-test is a statistical test that is used to compare the means of two groups. It is often used in hypothesis testing to determine whether a process or treatment actually has an effect on the population of interest, or whether two groups are different from one another.

You want to know whether the mean petal length of iris flowers differs according to their species. You find two different species of irises growing in a garden and measure 25 petals of each species. You can test the difference between these two groups using a t-test.

- The null hypothesis (H0) is that the true difference between these group means is zero.

- The alternate hypothesis (Ha) is that the true difference is different from zero.

From the output table, we can see that the difference in means for our sample data is -4.084 (1.456 – 5.540), and the confidence interval shows that the true difference in means is between -3.836 and -4.331. So, 95% of the time, the true difference in means will be different from 0. Our p-value of 2.2e-16 is much smaller than 0.05, so we can reject the null hypothesis of no difference and say with a high degree of confidence that the true difference in means is not equal to zero.

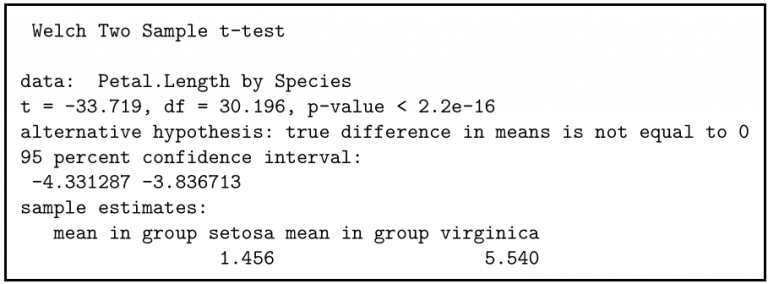

Multivariate Analysis

Required when more than two variables have to be analyzed simultaneously. It is hard to visualize a relationship among 4 variables in graph.

- There are 2 types of multivariate analysis:

- Cluster analysis

- Principal Component Analysis (PCA)

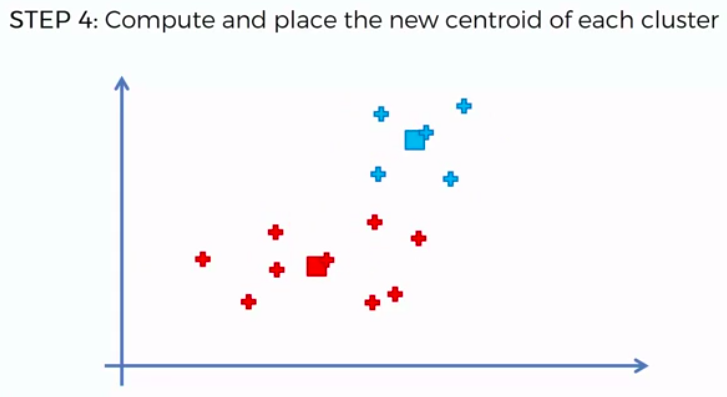

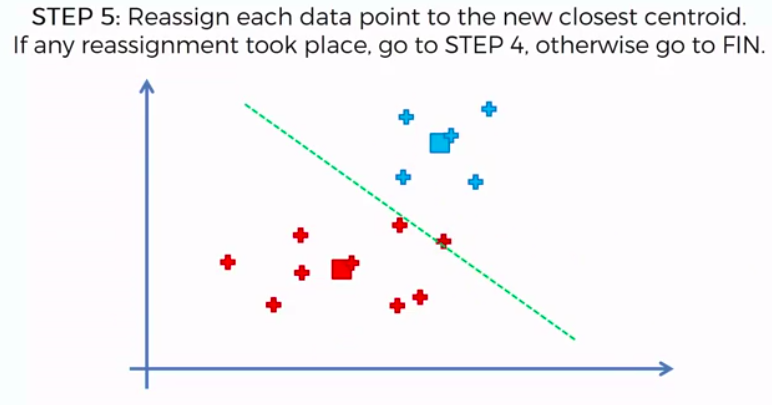

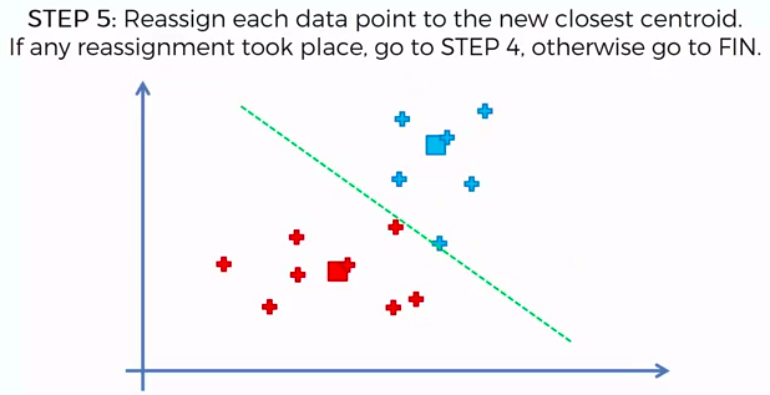

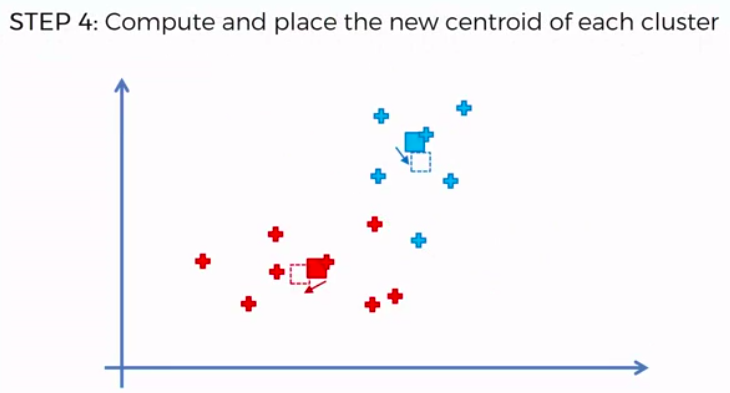

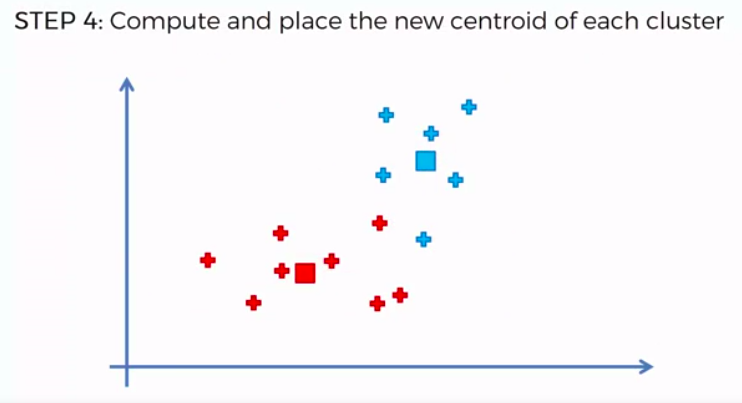

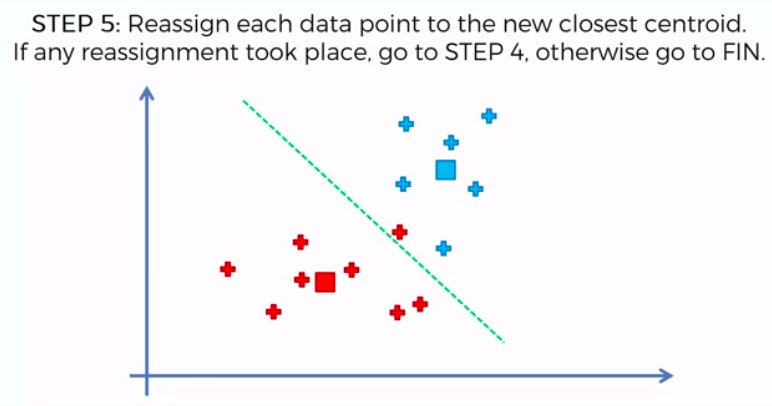

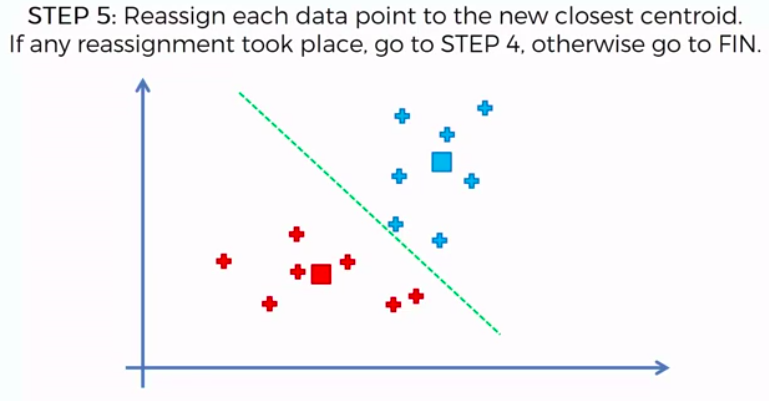

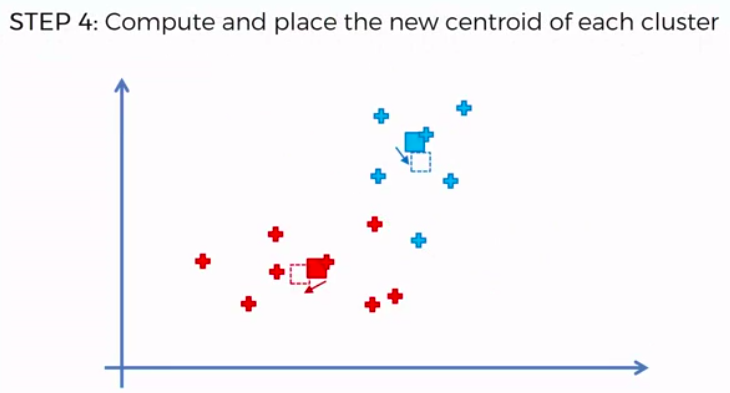

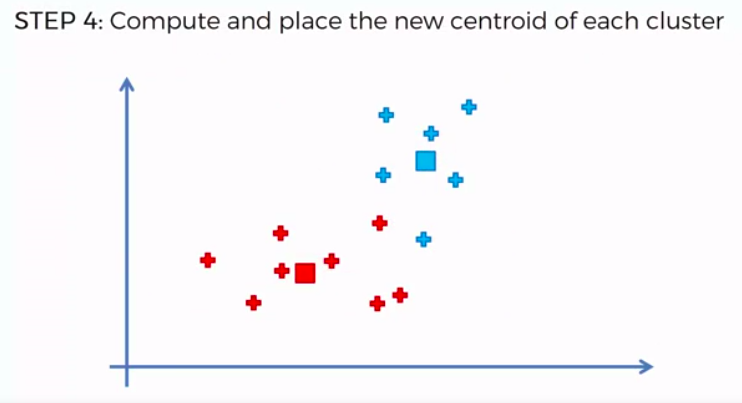

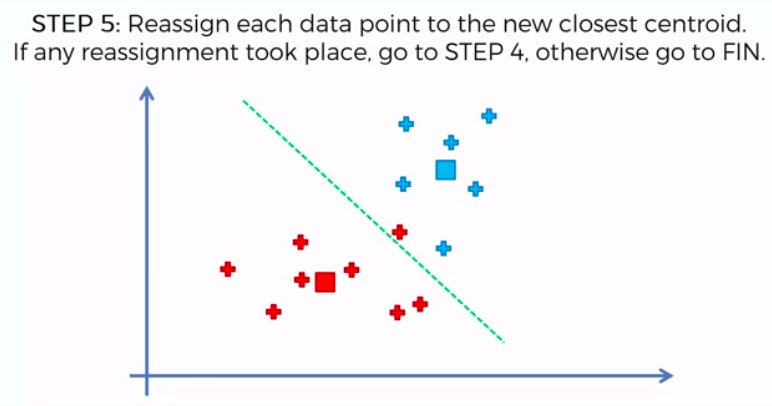

Multivariate Analysis:

Cluster Analysis

Classify different objects into clusters in a way that the similarity between two objects from the same group is maximum and minimal otherwise

Multivariate Analysis:

Principal Component Analysis (PCA)

Reducing dimensionality of a data table with large number of interrelated measures.

Step 5:

Build Predictive Model

Use various algorithms to build predictive models

Step 6:

Validation

Check the efficiency of our model

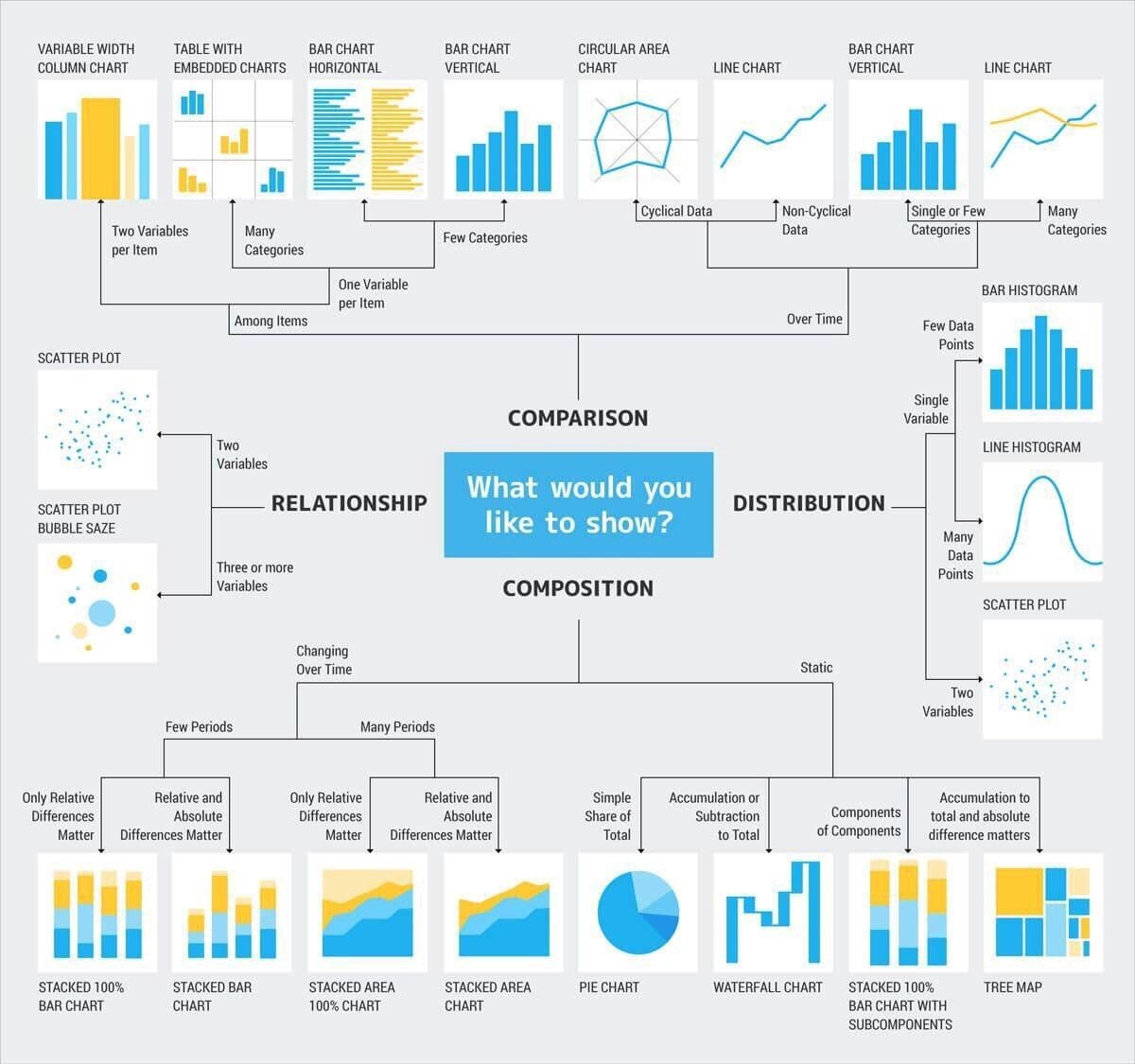

Step 7: Sharing your results

- Share the insight with the wider world

- Interpreting the outcomes, and presenting them in a manner that's digestible for all types of audience

- How you interpret will often influence the direction of business

- It's important to provide all the evidence that you've gathered.

BDA Technique

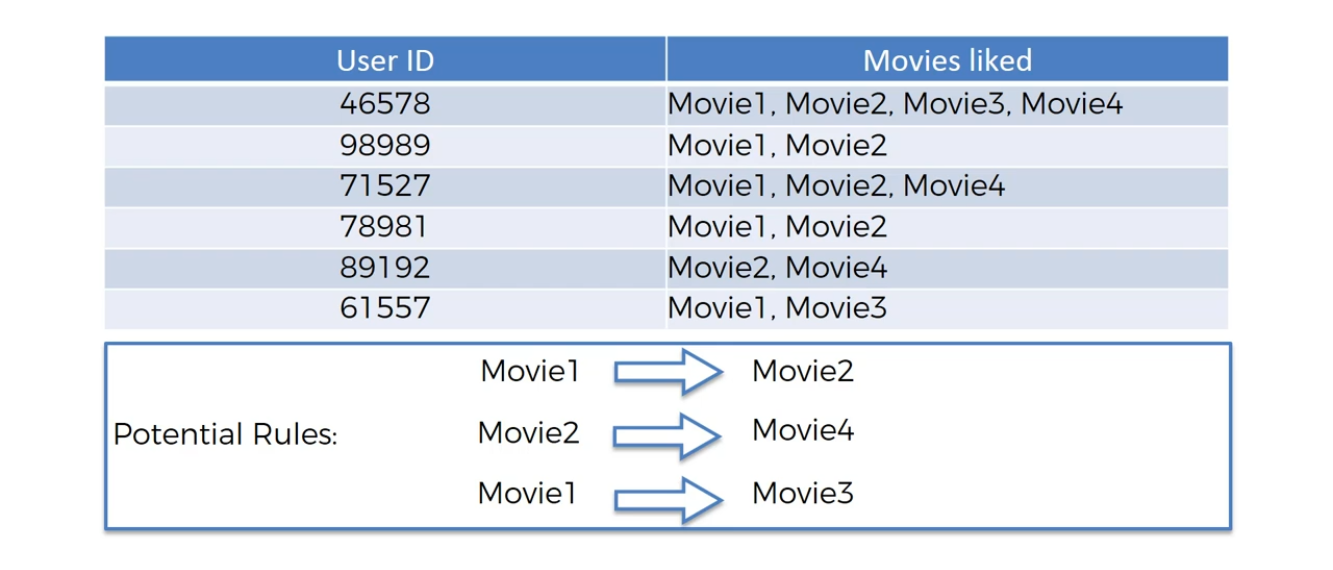

Association Rule Learning

Are people who purchase tea more or less likely to purchase carbonated drinks?

- Discover interesting correlations between variables in large databases

- First used by major supermarket chains to discover interesting relations between products,

People who bought also bought ...

Association Rule Learning is being used to help:

- Place product in better proximity to each other in order to increase sales

- Extract information about visitors to websites from web server logs

- Identify if people who buy milk and butter more likely to buy diapers

ARL:

Movie Recommendation

ARL:

Market Basket Optimisation

Classification Tree Analysis

Which categories does this document belong to?

- Method of identifying categories that a new observation belongs to

- Which it being used to:

- Automatically assign documents to categories

- Categorize organism into grouping

- Develop profile of students who take online course

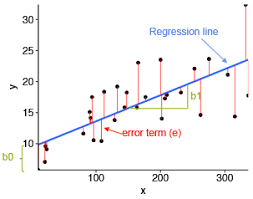

Regression Analysis

Which categories does this document belong to?

- Manipulating some independent variable to see how it influences a dependent variable

- Works best with quantitative data

- Regression analysis is used to determine:

- Levels of customer satisfaction affect customer loyalty

- The number of support calls received may influence by the weather forecast given the previous day

- Neighbourhood and size affect the listing price of houses

Time Series Analysis

Statistical technique used to identify trends and cycles over time

- Time series data is a sequence of data points which measure the same variable at different points in time.

- Main pattern you'll be looking out for in your data are:

- Trends: Stable, linear, increase or decrease over an extended time period

- Seasonality: Predictable fluctuations in the data due to seasonal factors over a short period of time. ex: you might see a peak in raincoat sales in November around the same time every year.

- Cyclic Pattern: Unpredictable cycles where the data fluctuates. As a result of economic or industry-related conditions.

Time series visualization

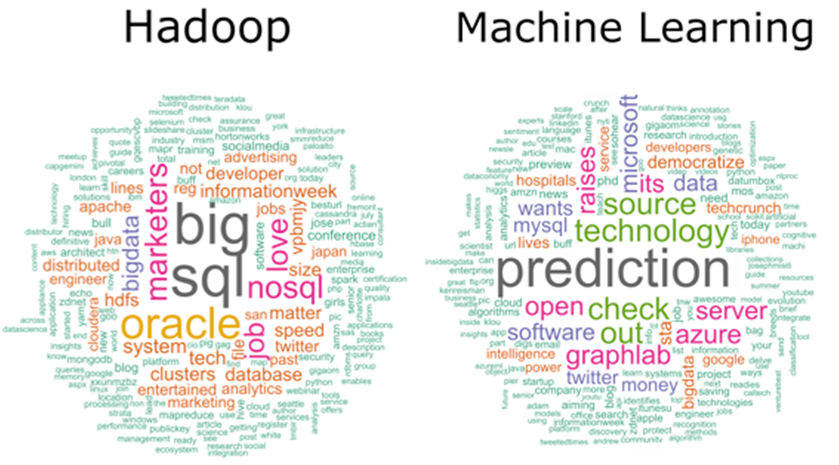

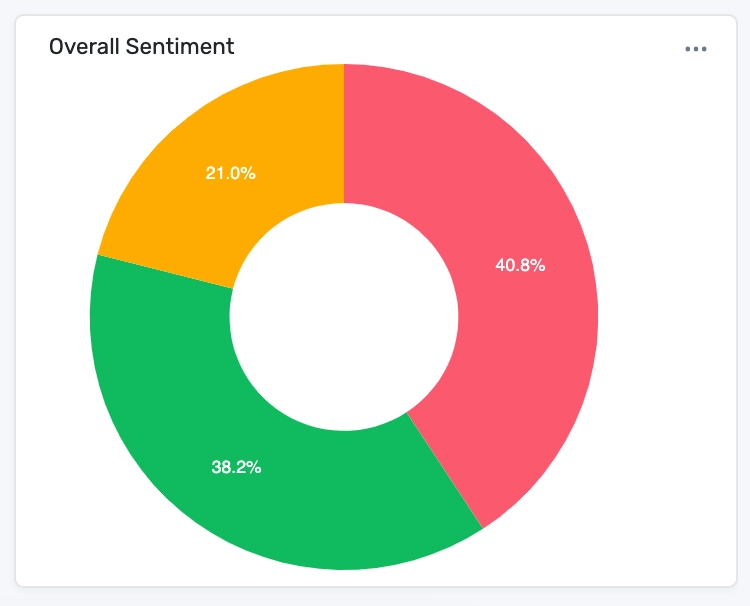

Sentiment Analysis

How well is our new return policy being received?

- Determine the sentiments of speakers or writers with respect to topic

- Use NLP to determine whether data is positive, negative or neutral

- Sentiment analysis is being used to help:

- Improve service at a hotel chain by analyzing guest comments

- Customize incentives and services to address what customers are really asking for

- Determine what consumers really think based on opinions from social media

- Emoji Sentiment

Preprocessing Text Data

- Create corpus: A bag of words model which is huge table known as sparse matrix

- Tokenization: Break every sentence into individual words.

- Clean the lists of words:

- Remove punctuation

- Remove digits and other symbols (except @ )

- Remove website link

- Remove empty spaces

- Remove Stopwords: useless words (ie: 'saya', 'kami', 'dia', dsb..

- Lemma/Stemming: remove grammar tense and transform each word into its original form

Wordcloud Visualization: Most frequent word appear in the data

Visualization for sentiment analysis: Overall Sentiment

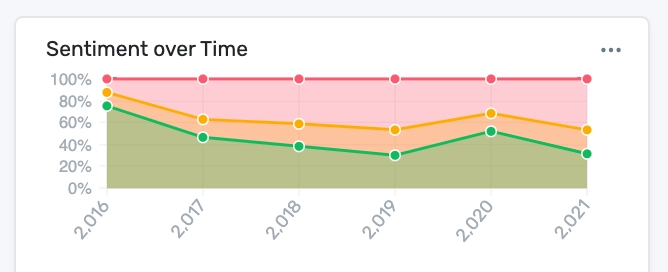

Visualization for sentiment analysis: Sentiment over time

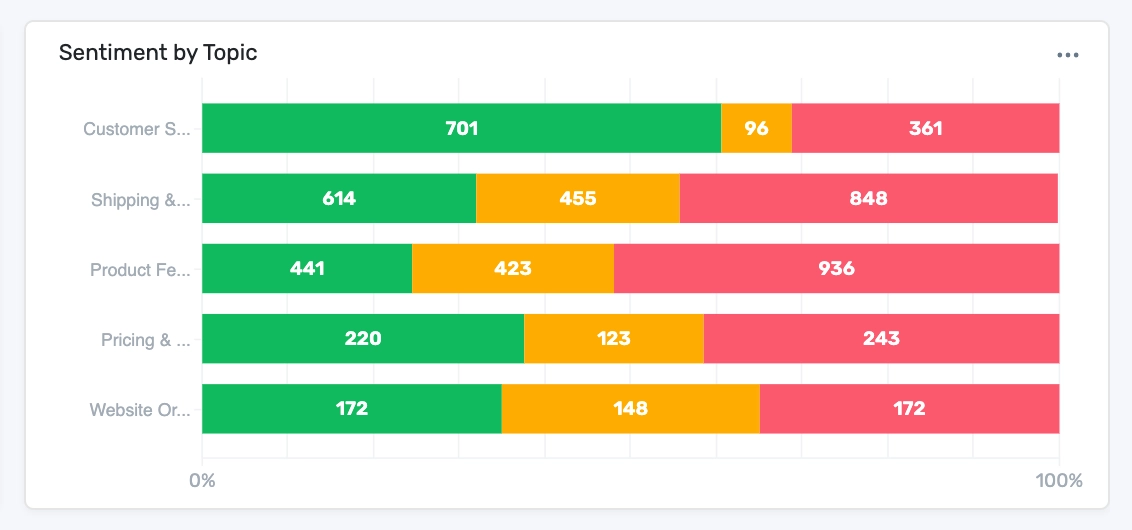

Visualization for sentiment analysis: Sentiment by topic

Challenge in Sentiment Analysis

- Many dialect in Malaysia

- Sarcasm

- No malay lexicon

Machine Learning

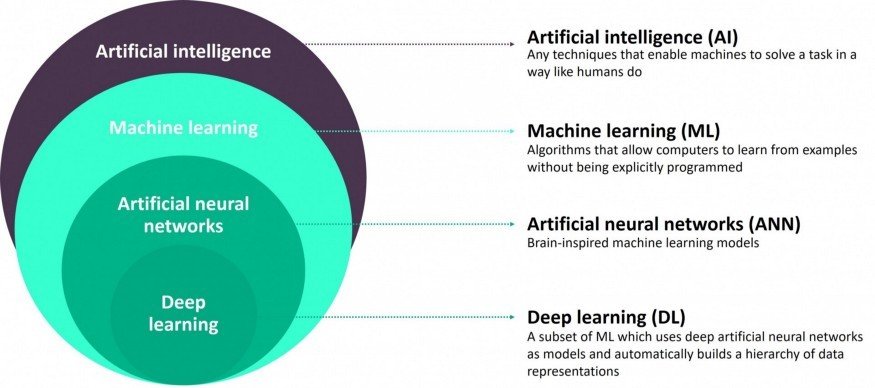

- Machine learning is a subset of artificial intelligence.

- Focuses mainly on designing systems which allow them to learn and make predictions based on some experience which is data.

Machine learning is becoming widespread among data scientist and is deployed in hundreds of products we use daily. One of the first ML application was spam filter

Applications of ML

Applications of ML

Supervised

VS

Unsupervised

Supervised Learning

Training data feed to the algorithm includes a label (answer)

-

Classification

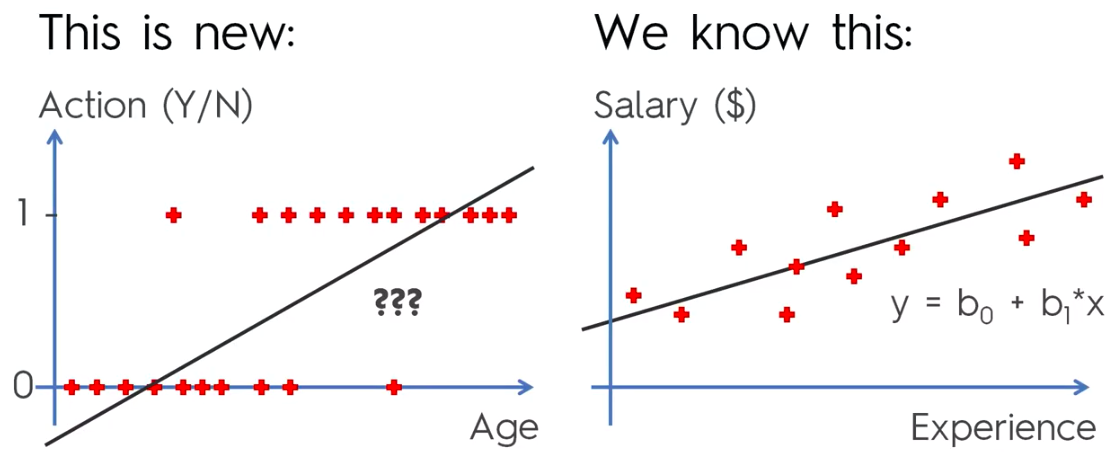

- Most used supervised learning technique

-

Regressions

- Commonly used in ML field to predict continuous value.

- Predict the value of dependant variable based on a set of independant variables (also called predictors or regressors)

Lists of some fundamental supervised learning algorithms

- Linear Regression

- Logistic Regression

- Neares Neighbours

- Support Vector Machine (SVM)

- Decision trees and Random Forest

- Neural Networks

Unsupervised Learning

Training data is unlabeled.

The system tries to learn without a reference

List of unsupervised learning algorithms

- K-mean

- Hierarchical Cluster Analysis

- Expectation Maximization

- Visualization and dimensionality reduction

- Principal Component Analysis

- Kernel PCA

- Locally-Linear Embedding

Regression

Simple Linear Regression

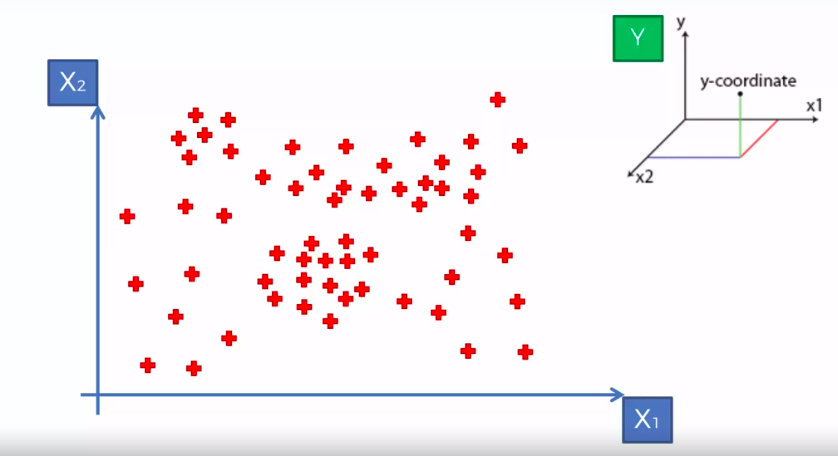

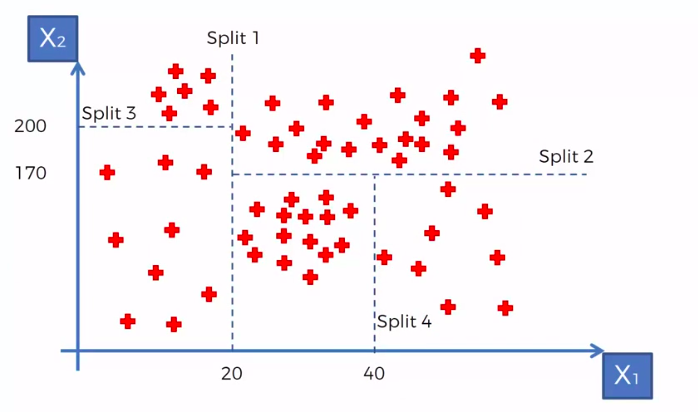

Decision Tree Regression

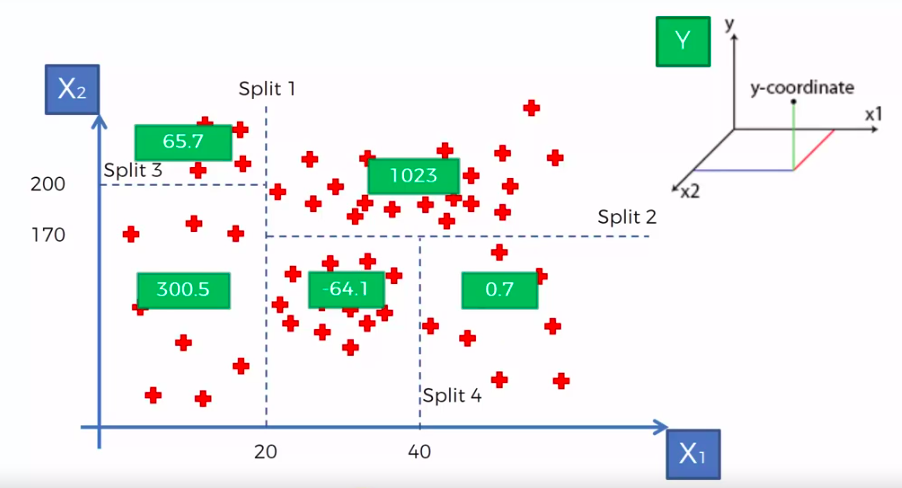

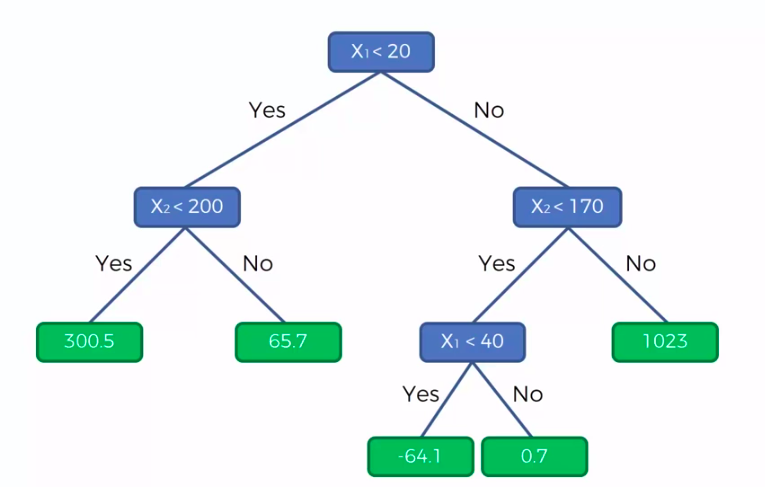

CART

Classification And Regression Trees

Classification Trees

Regression

Trees

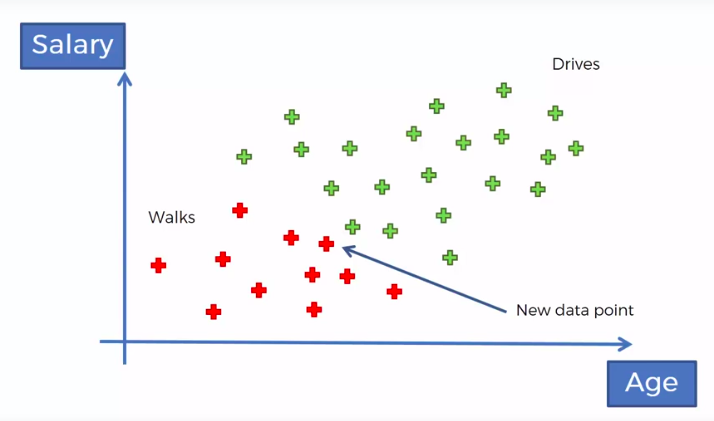

X1 and X2 is Independent Variable

Y is our Dependent Variable which we could not see because it is in another dimension (z-axis)

- Scatter plot will be split up into segment

- Split is determine by the algorithm.

- It is actually involve looking at something called information entropy

Calculate Mean/Average for each leaf

Random Forest Regression

We are not just predicting based on 1 Tree, We are predicting based on forest of trees. It will improve the accuracy of prediction because we take the average of many prediction

Classification

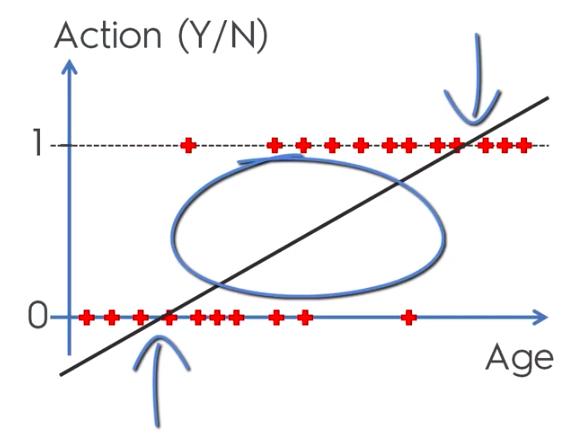

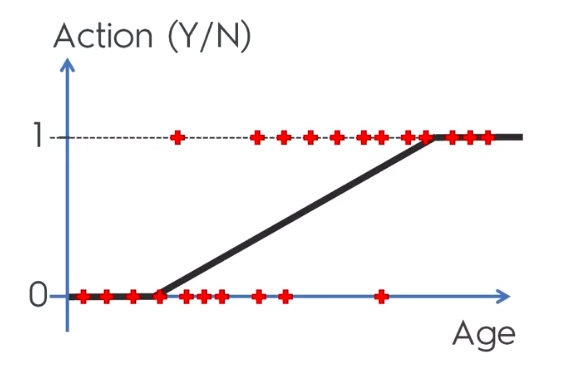

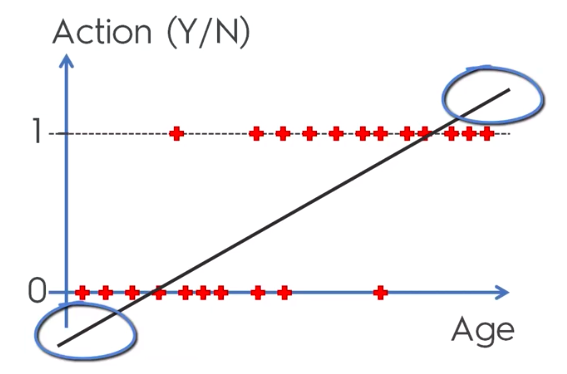

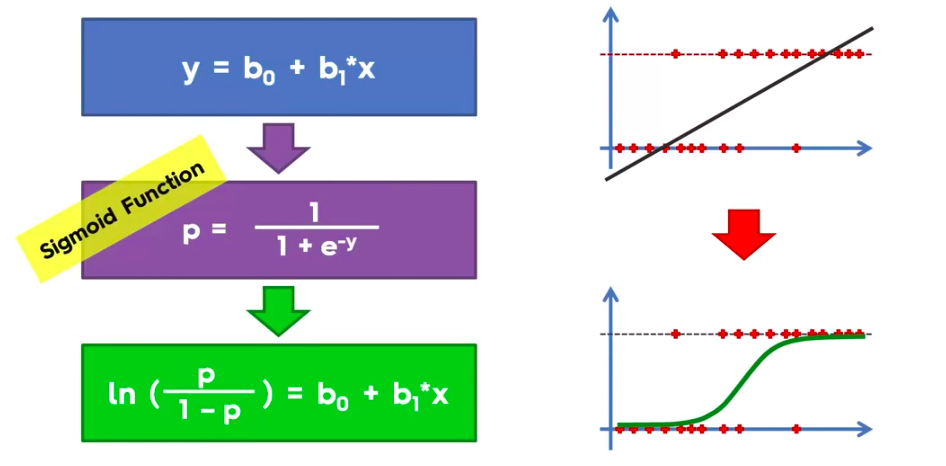

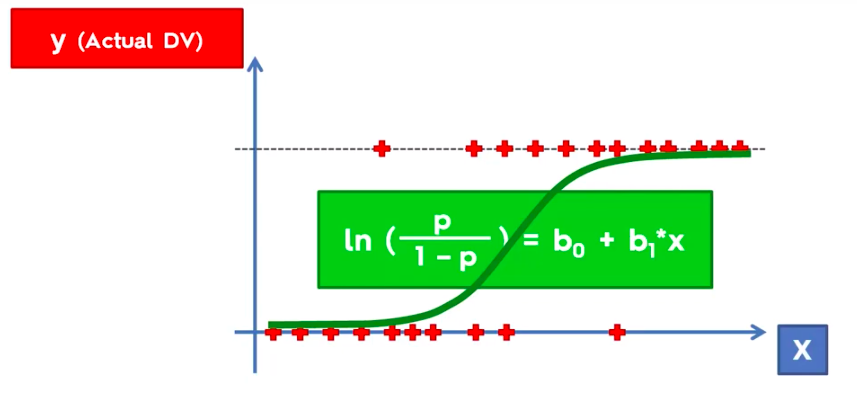

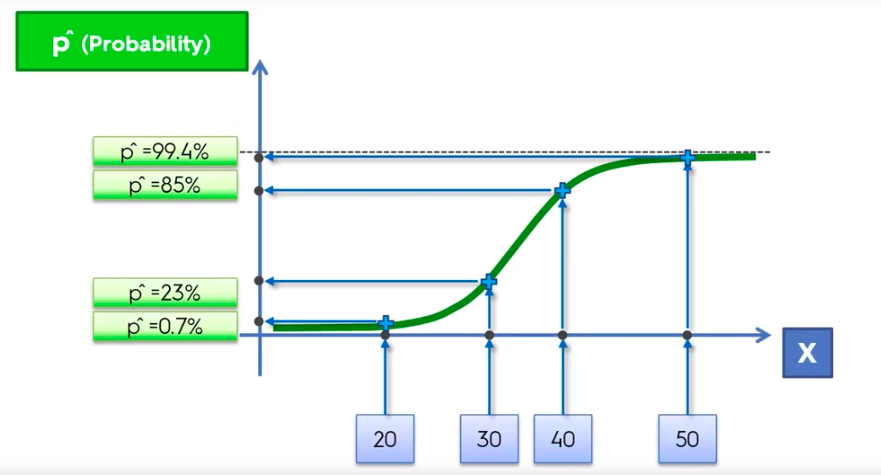

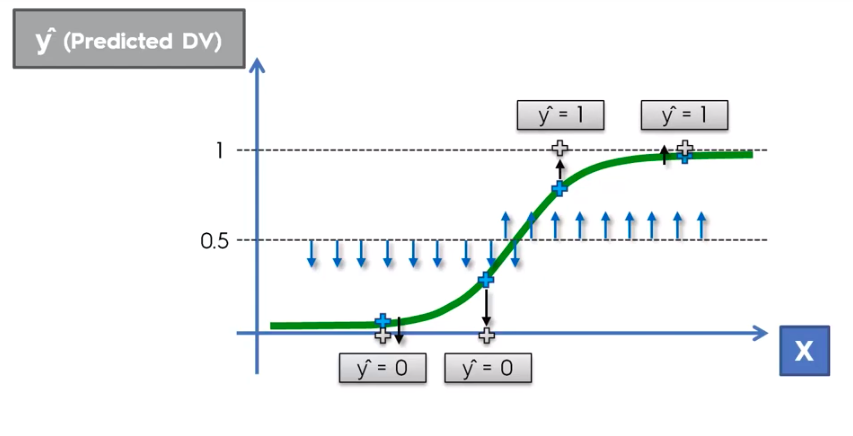

Logistic Regression

Logistic Regression Equation

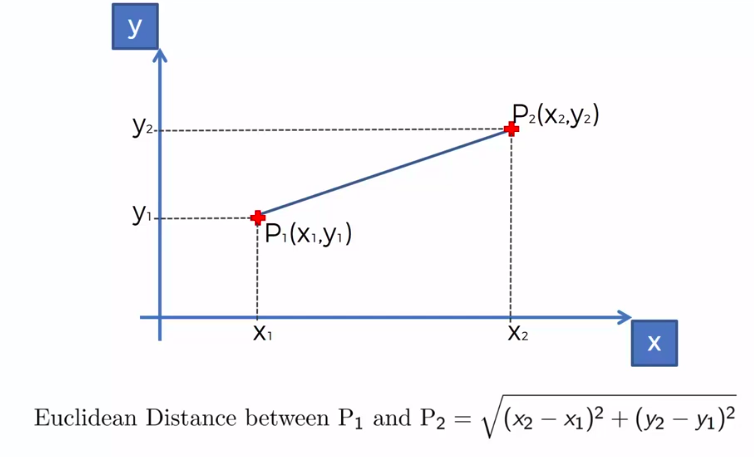

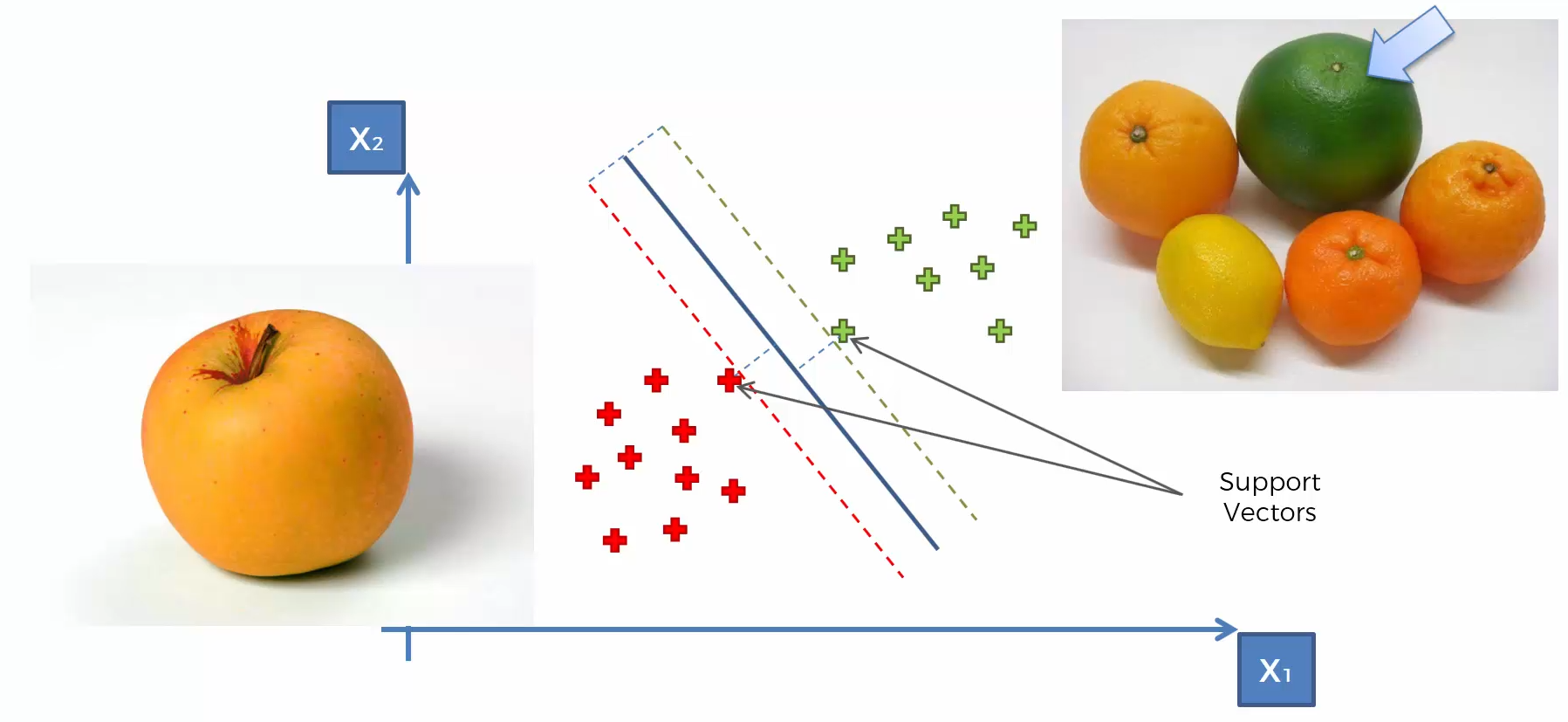

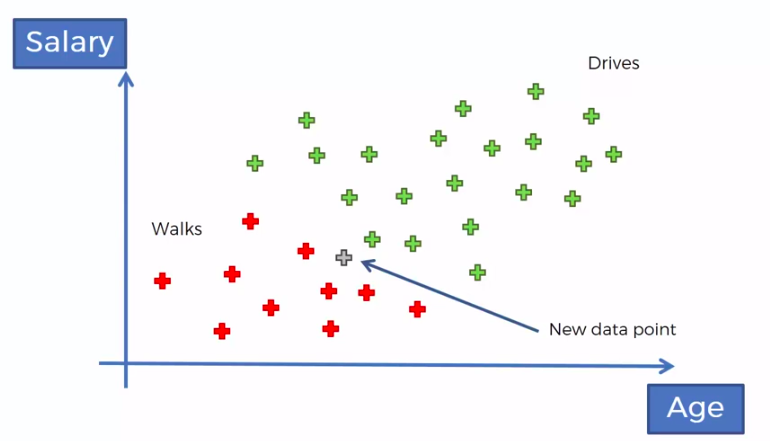

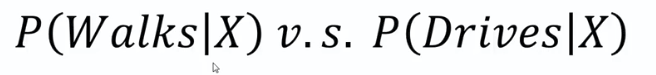

K-Nearest Neighbour

We can use other Distance as well such as Manhattan Distance. But Euclidean is the commonly used for geometry

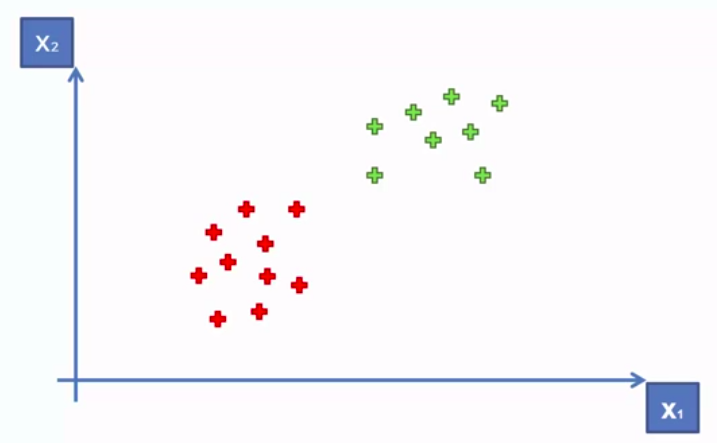

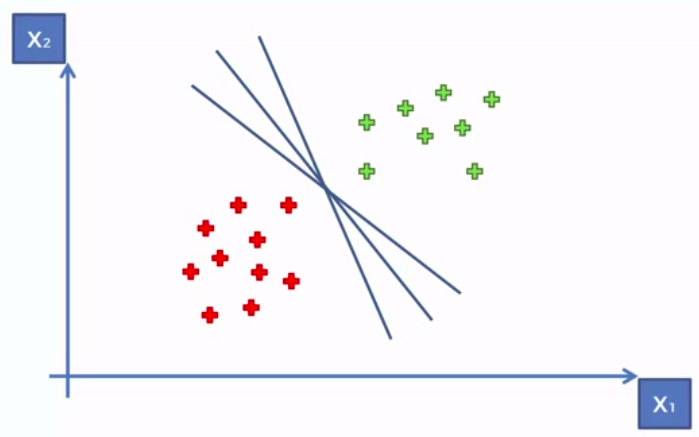

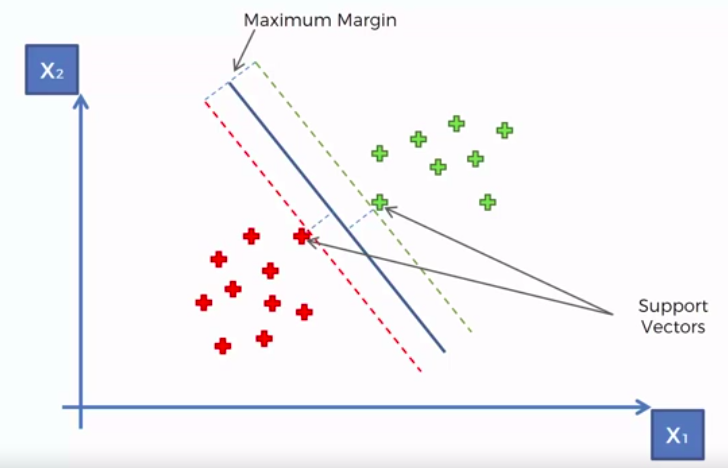

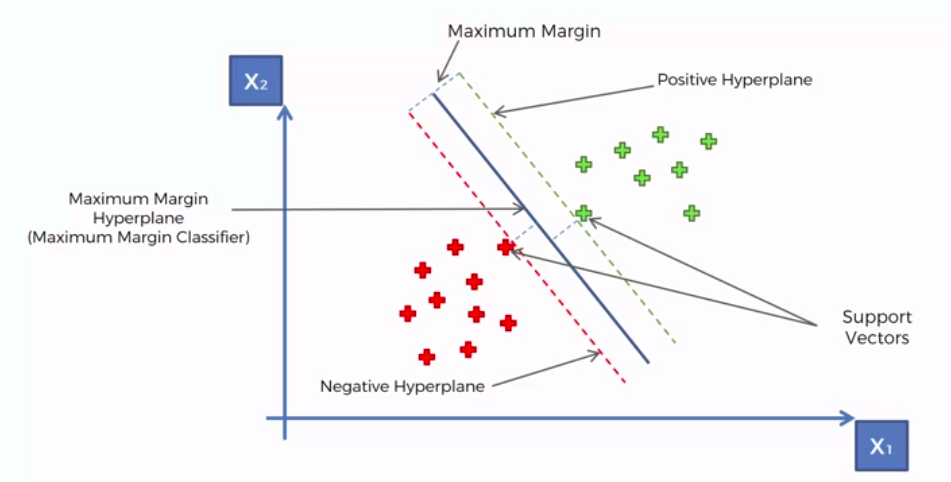

Support Vector Machine (SVM)

Whats so special about SVM?

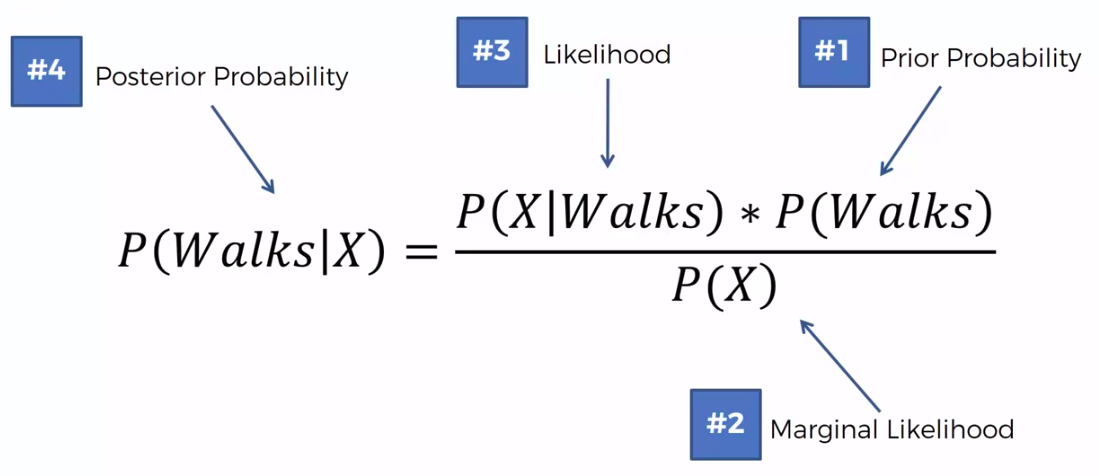

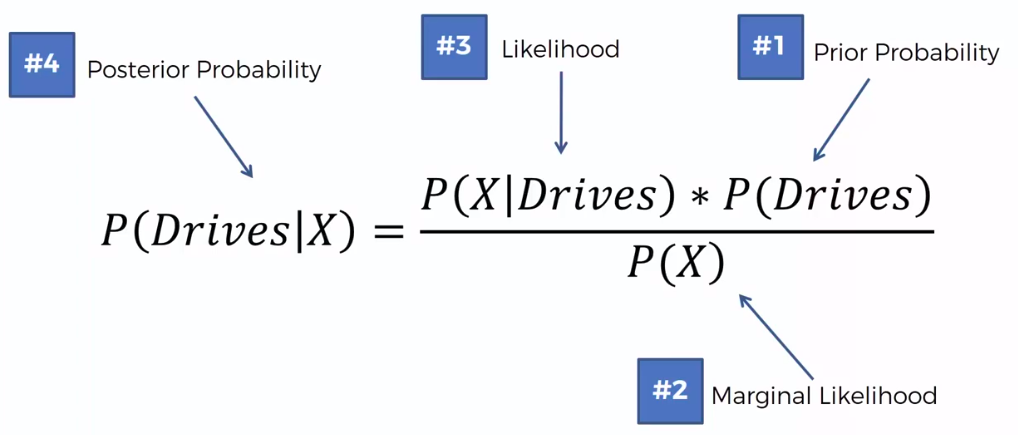

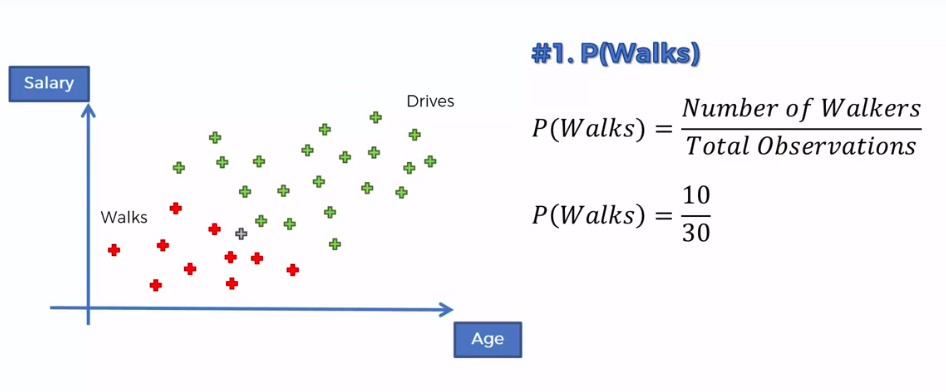

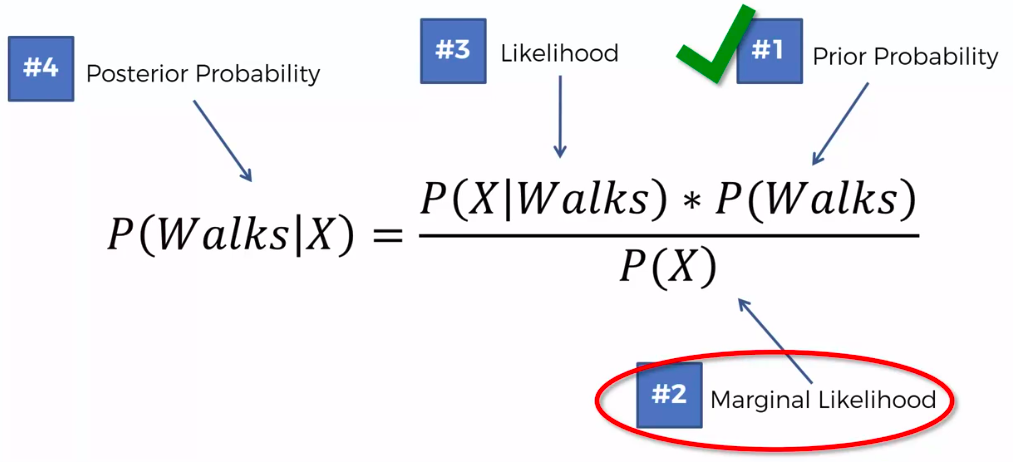

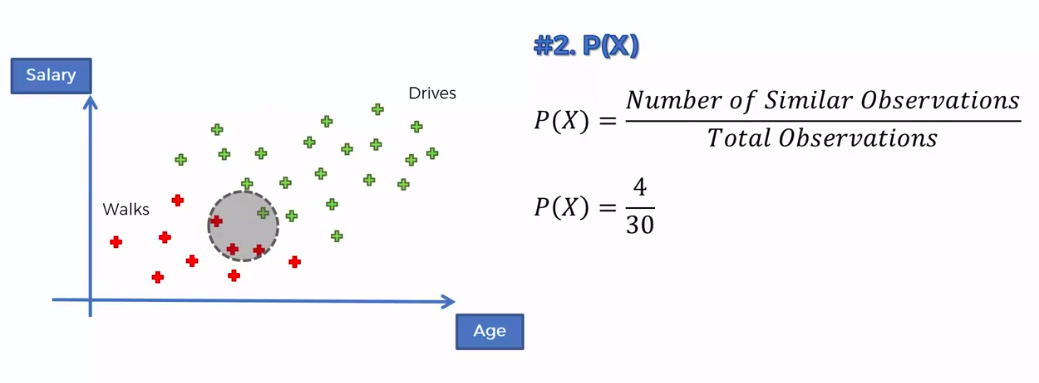

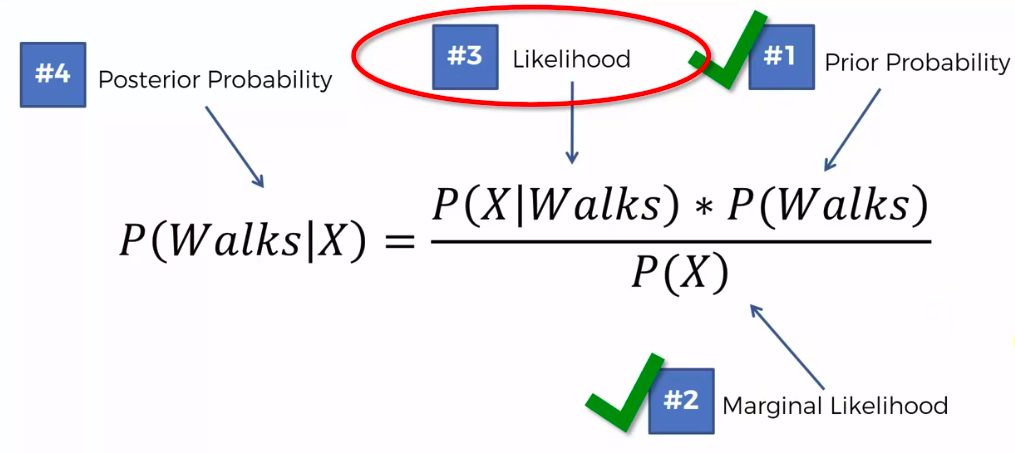

Naive Bayes

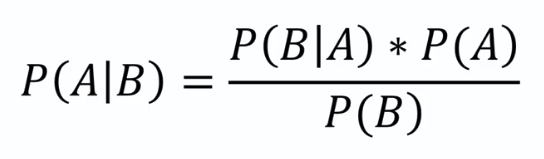

Bayes Theorem

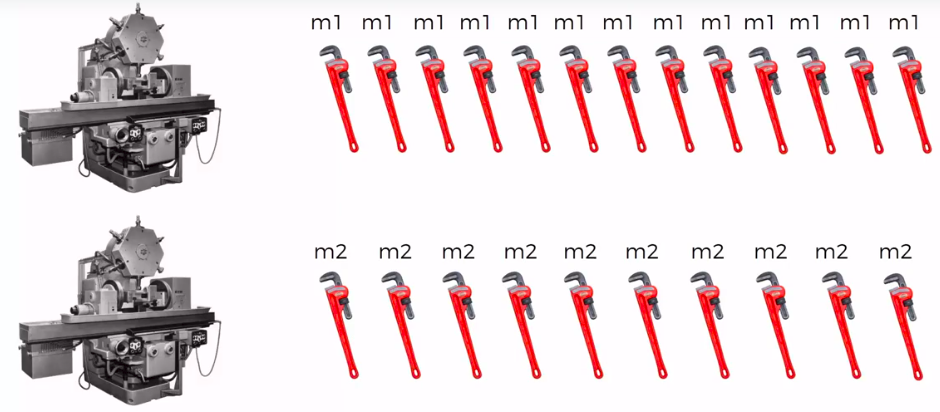

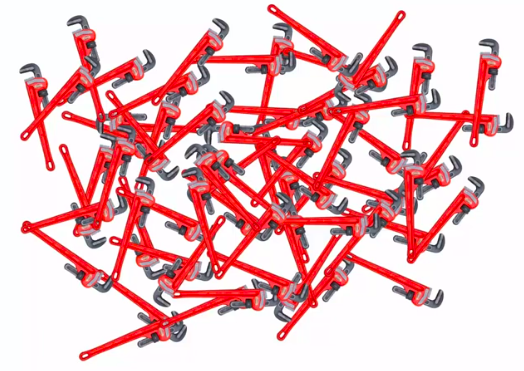

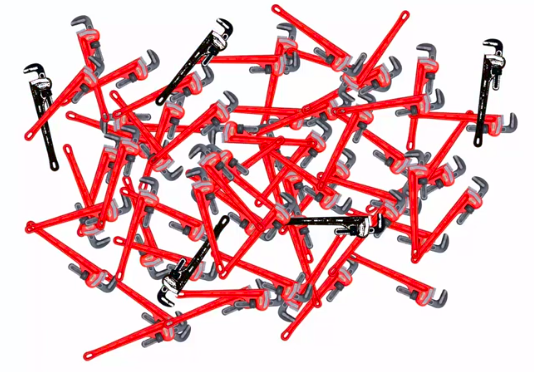

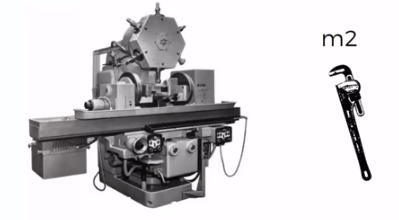

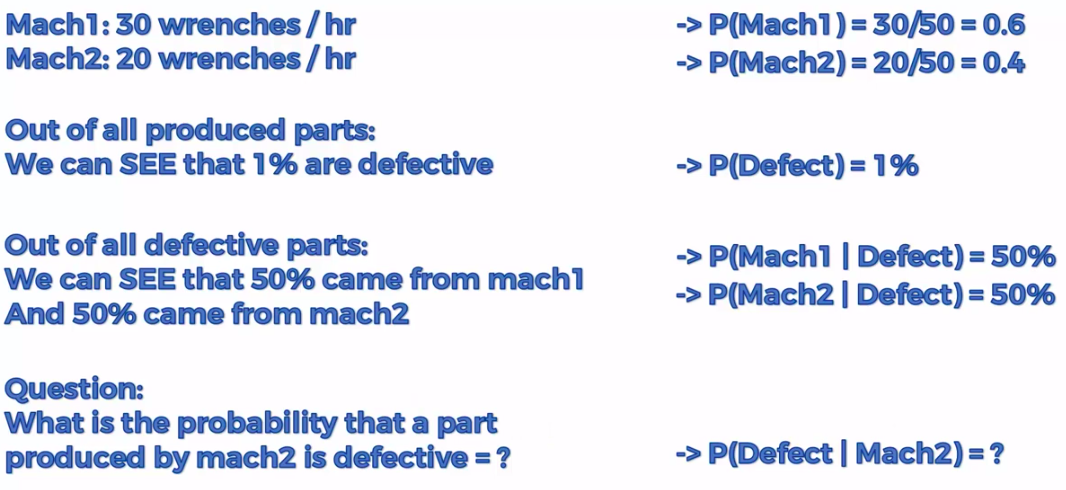

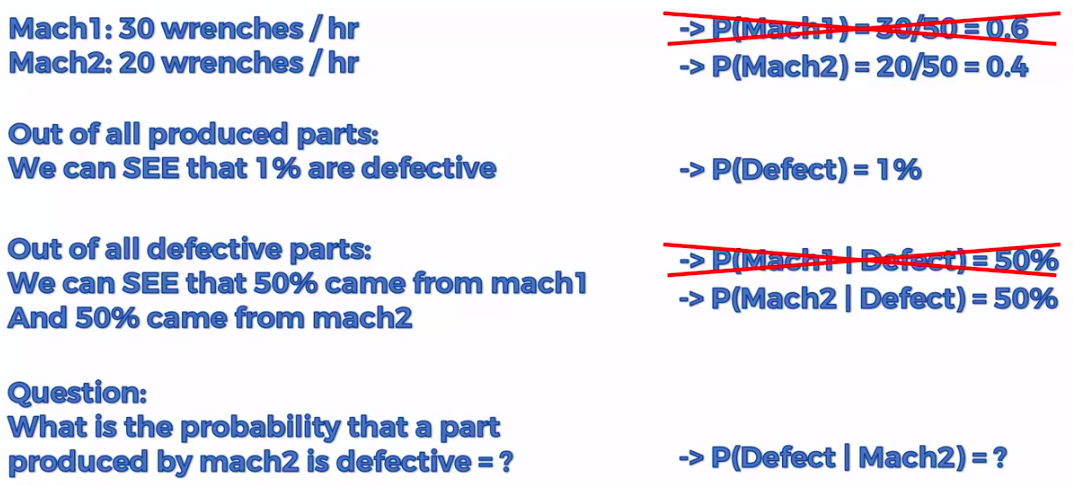

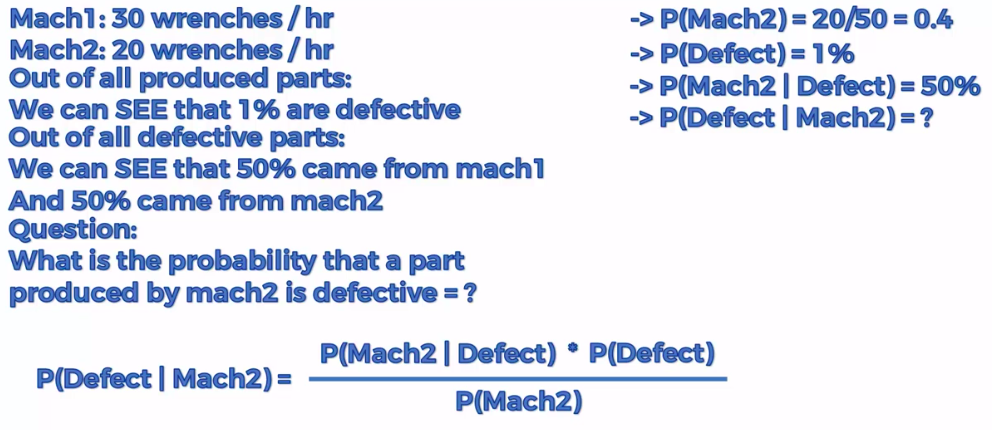

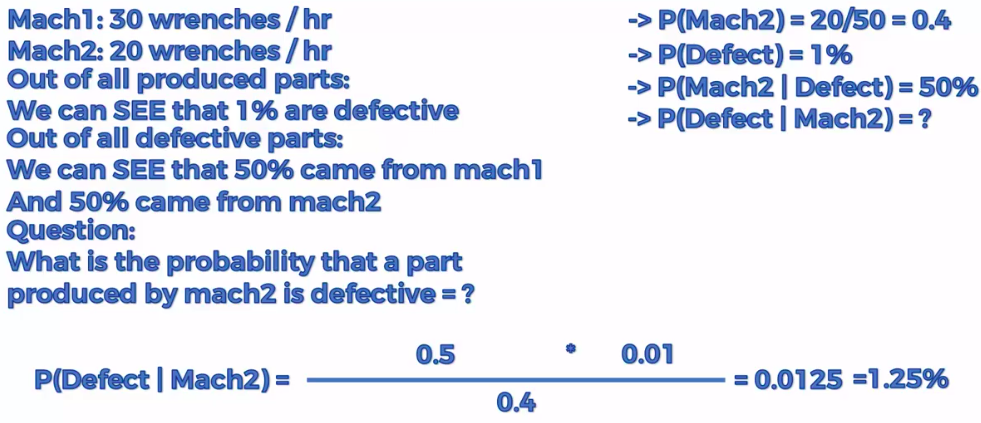

Defective Wrenche

What's the probability?

Mach1: 30 wrenches/hr

Mach2: 20 wrenches/hr

Out of all produced parts:

We can SEE that 1% are defective

Out of all defective parts:

We can SEE that 50% came from mach1 And 50% cam from mach2

Question:

What is the probability that a part produced by mach2 is defective = ?

Step 1

Step 2

Step 3

Assign class based on probability

Text

Ready ?

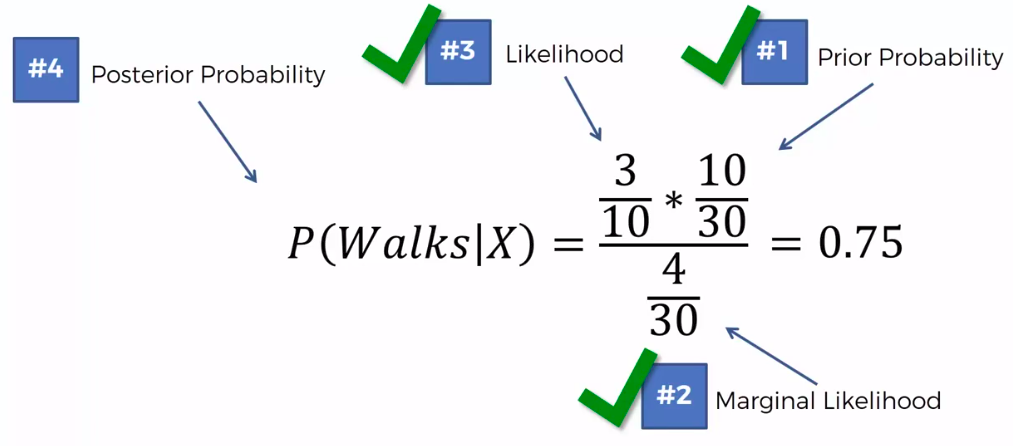

Step 1

Step 1

Step 1

Step 1

Step 1

Step 1

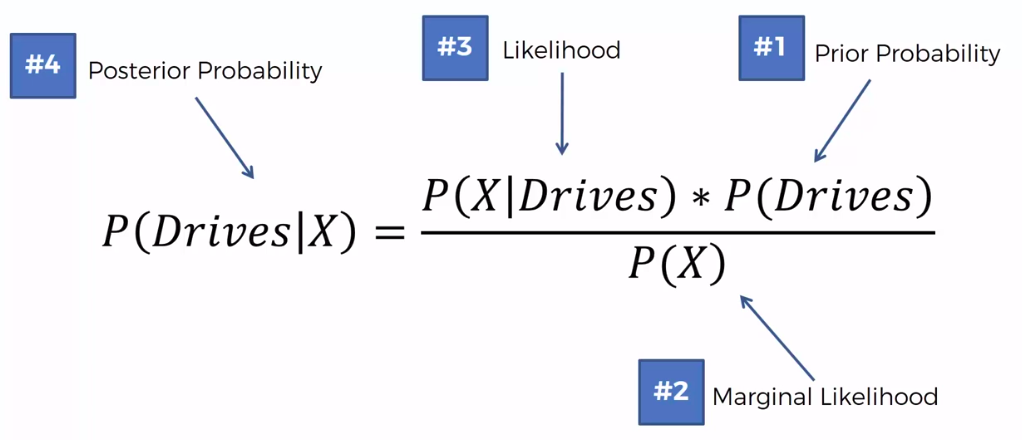

Step 2

Step 2

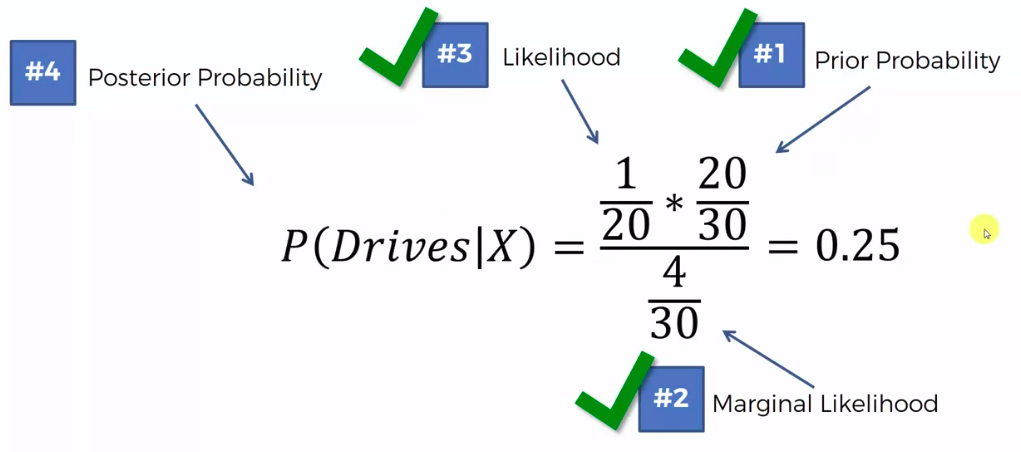

Step 3

0.75 VS 0.25

0.75 > 0.25

Decision Tree

CART

Classification And Regression Trees

Classification Trees

Regression

Trees

Clustering

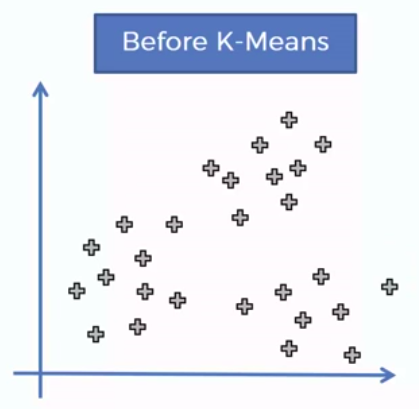

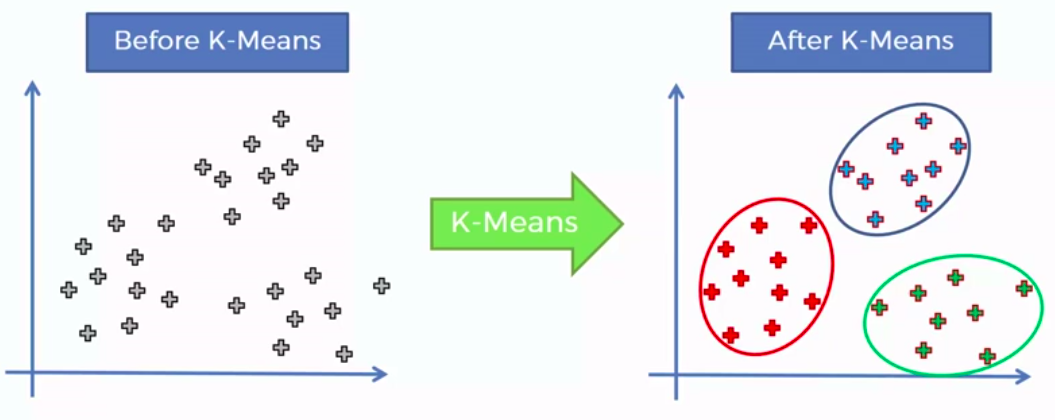

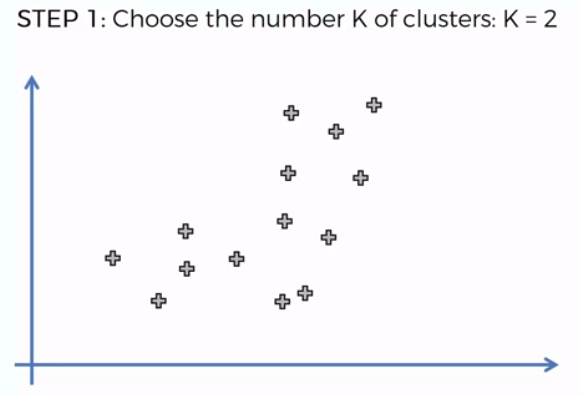

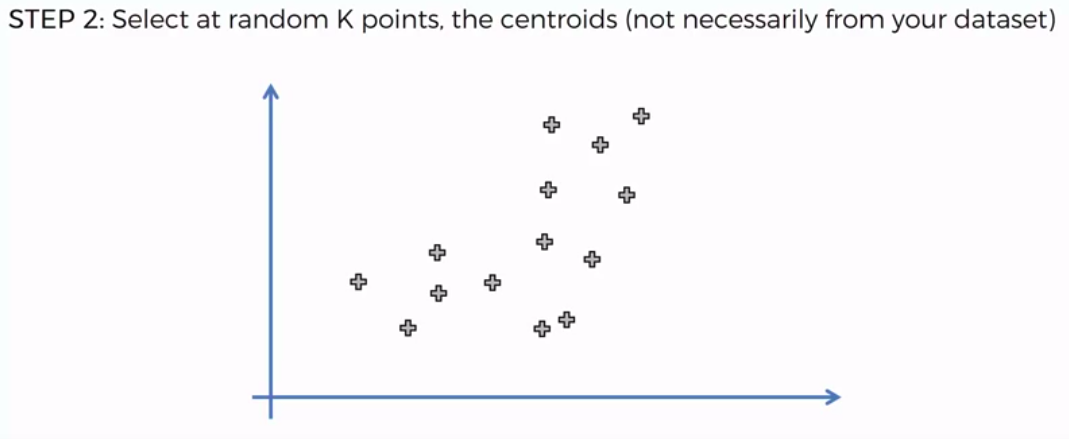

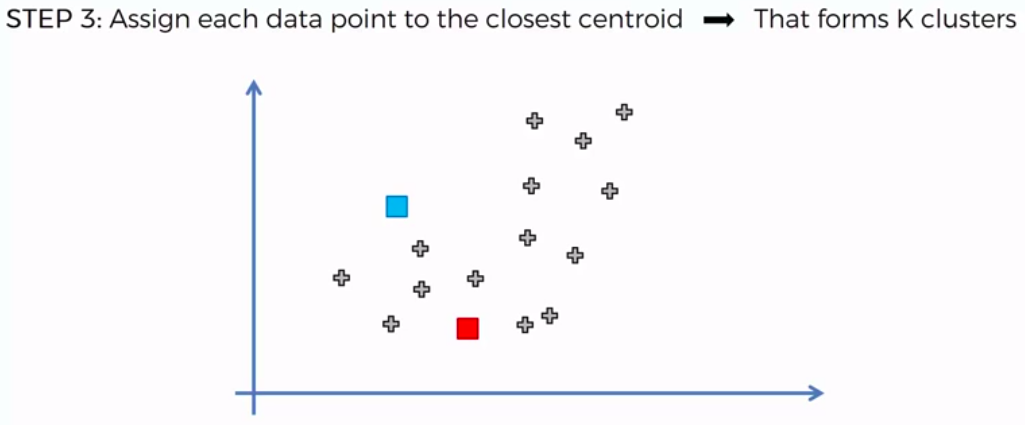

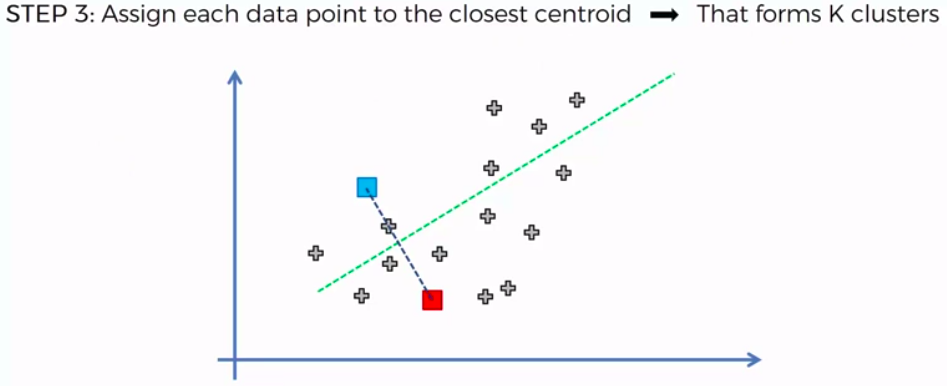

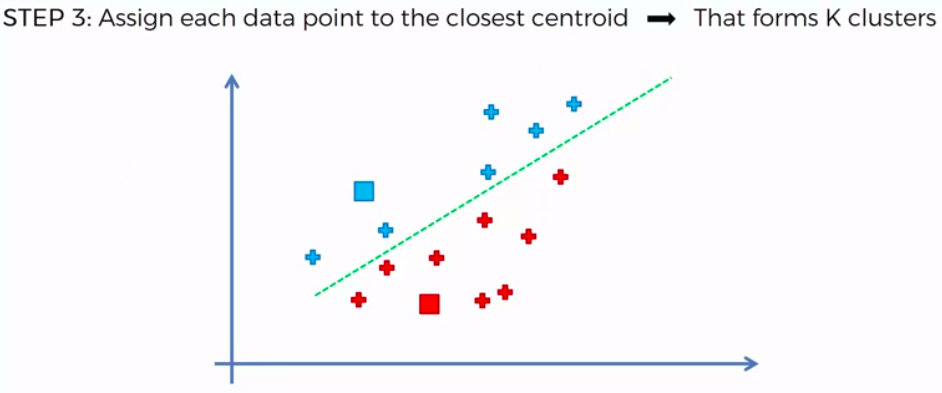

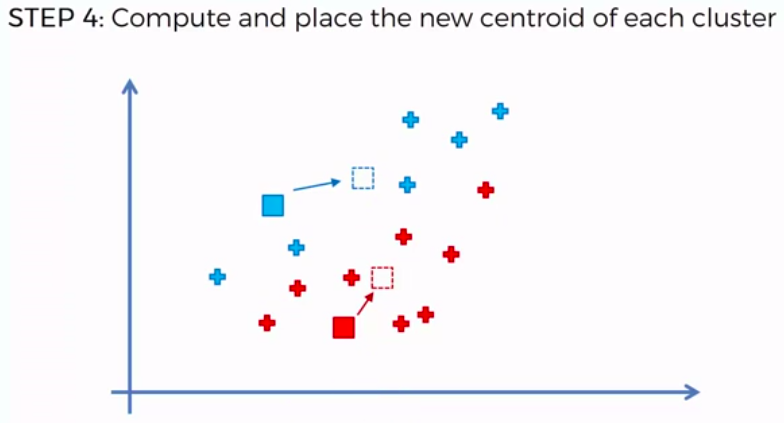

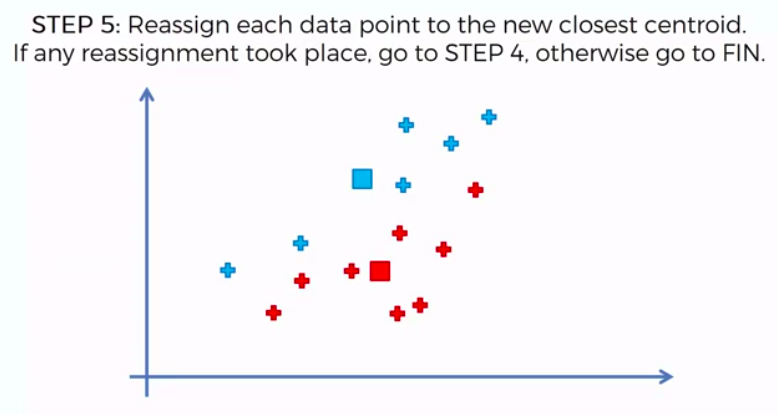

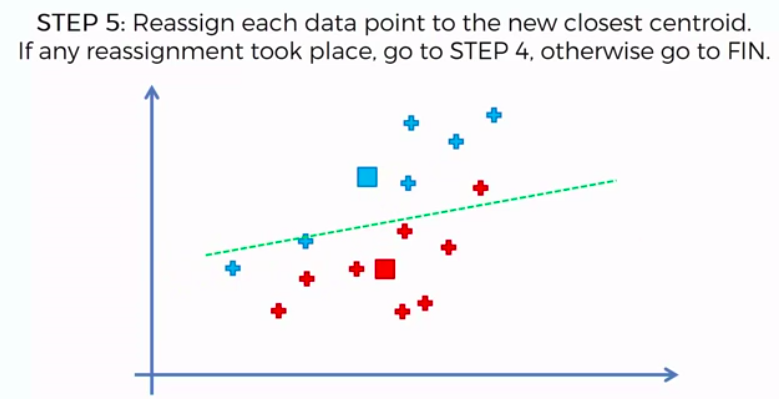

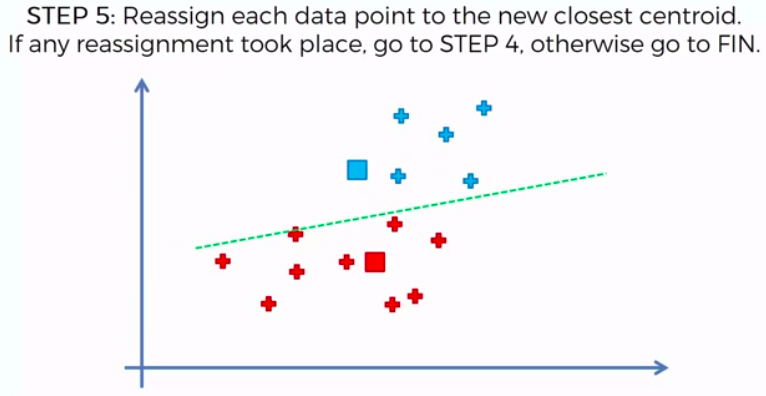

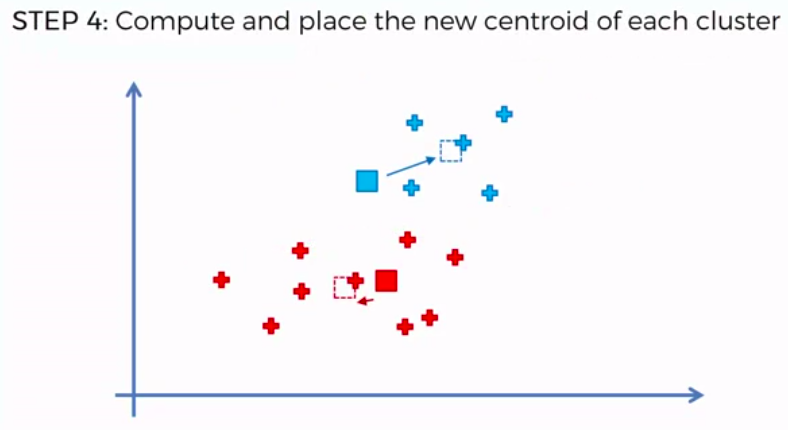

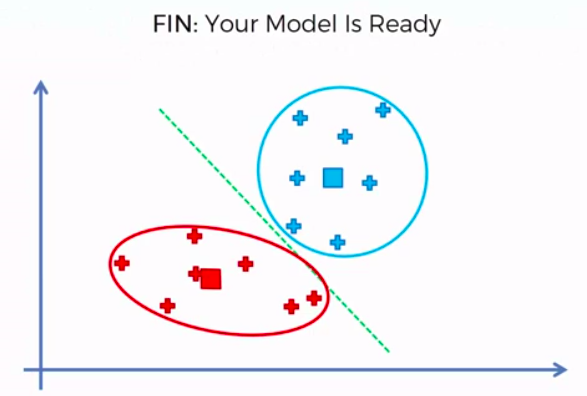

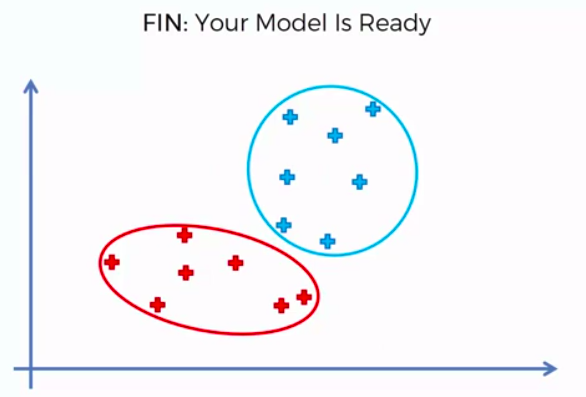

K-Means Clustering

We can apply K-Means for different purposes:

- Market Segmentation,

- Medicine with for example tumor detection,

- Fraud detection

- to simply identify some clusters of your customers in your company or business.

Deep Learning

Deep Learning is part of Machine Learning to find better patterns but when data is unstructured, it is difficult to find the pattern by ML algorithms. Basically it emulates the way human gain certain types of knowledge

How does Deep Learning (Neural Network) works?

- Initially, the network will be meaningless

- It gets trained based on data we passed to neural network

- As data progresses between the nodes from input to output it gets multiplied by weight and bias gets added before it goes through the activation function

- The whole process is called Feed Forward

- The final layer compiles the weighted inputs to produce an output

- The output layer intends to predict based on input data

When the neural network is learning(actually, it is not learning yet), initially it won't do a good job at predicting the correct output. As the whole training is to get the right value of weight and bias of each node so that the NN generalizes well. So actual learning happen when NN has to correct the value of weight and bias of each node

The error (difference between actual and predicted) is passed backward, cascading to the input layer. This cascade changes the weight and bias of each node in each layer. The entire process of cascading backward is called backpropagation, and this is how neural network learns.

Example: Fruit image layers can learn different features of the object like texture, color, shape, size, etc

Artifical Intelligence

Machine's that has cognitive intelligence, in short, act like humans

Assume that AI is a car. Machine Intelligence is the fuel that runs it

- It's not the only singular component that allows the car to run but is one of the more important ones. The concept of ML help systems achieve AI

- Systems that run based on ML, learn themselves and then make decision. This is the aim of AI

Best example to understand modern AI engineering is Self Driving cars

- Machine Learning and Deep Learning are what makes it possible to achieve AI

- ex: Computer Vision (DL) based application that identify objects like other cars, traffic signals, boards, footpath, people crossing the street, etc, and ML based applications that can predict how soon car is going to run out of fuel based on the distance of the destination and traffic

Sample use case

Recruitment and Retention in Military

- US military has a huge recruiting problem

- According to Heritage report, 71% of Americans ages 17-24 does not qualify for military service due to health problem, physical fitness, education, or criminality.

- Military branches struggling to find enough recruits to maintain a fighting force

- Additionally, low unemployment rates are continuing to exacerbate the problem

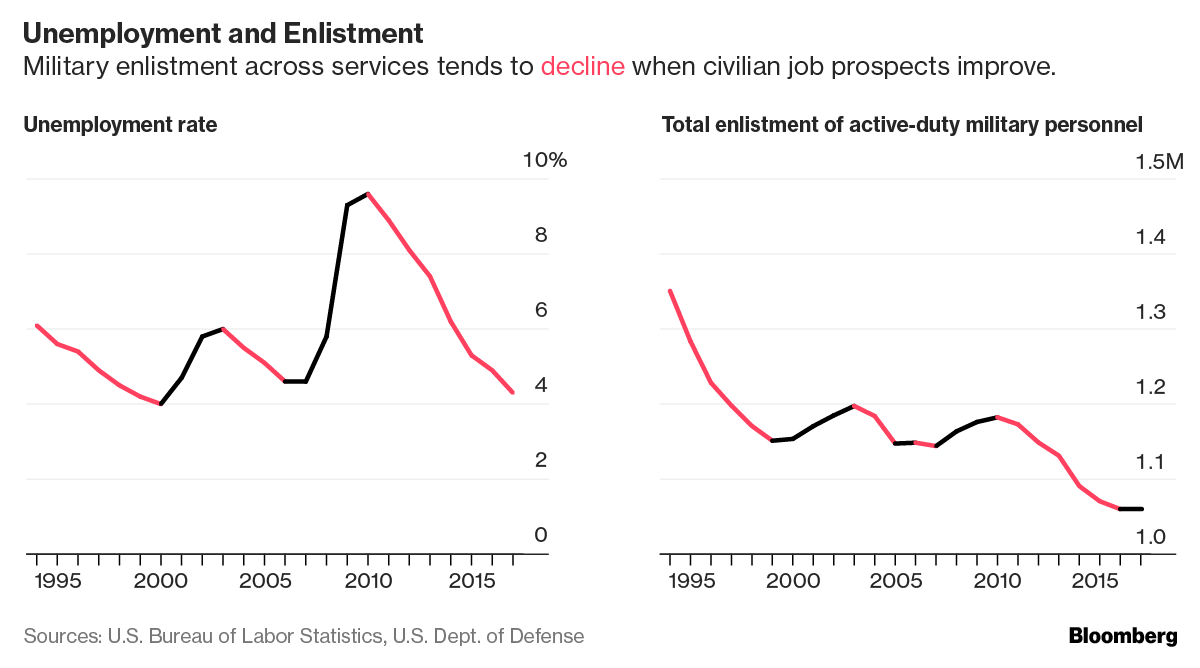

Graph below illustrate how unemployment is shrinking the recruit pool

Why does it matter?

- Long-term impact: security of nation, as a whole is at risk

- Losing the ability to secure the country

- Lose the ability to prevent war without a well-staffed and formidable military

- Degradation of armed service

- To ensure problems end in the most economical and ethical manner feasible

- Develop fact-based solutions to improve the efficiency in the recruitment process using ML

ML for Military Recruiting

Information describing past recruits:

- Height

- Weight

- Physical Fitness Score

- Military aptitude test scores

- Medical History

- Education

- Extra-curricular activities

- Develop a model that predicts whether or not any individual future prospect will enlist in the military

- The historical data indicate whether a recruit enlisted in any branch of service

- Break this problem by state, branch of service and other subset of the population to make better predictions.

- ML provides better insights for those most likely to enlist, giving recruiters the names of potential recruits to focus efforts to reach their objective

Utilizing ML will help every recruiter better prioritize their time and quickly turn the two recruits they need to enlist per month into three. High-quality recruits able to contribute to the overall defense and security of the nation in the process.

We can also look at the recruiting issue as a marketing problem. What would a 1% increase in awareness accomplish?

Can be accomplish by utilizing information the military has already collected:

- The data describing individuals that have expressed interest in the armed services would be plenty to begin training models.

- Social Media data to analyse social trends

- Training ML algorithms with these data will enable military recruiters to understand the best way to interact with prospective, high-quality recruits

A step further, dig deeper into the data to understand what jobs and interests each prospect may be interested in and put those options in front of them, increasing their propensity to engage and ultimately enlist

With BDA, the military can effectively and efficiently overcome the obstacles of finding the best recruits to serve

THANK YOU

MINDEF-BDA Training

By Abdullah Fathi

MINDEF-BDA Training

BDA Training Course Outline

- 668