Open source software at SynSense: motivation, challenges and examples

Gregor Lenz

13. 12. 2022

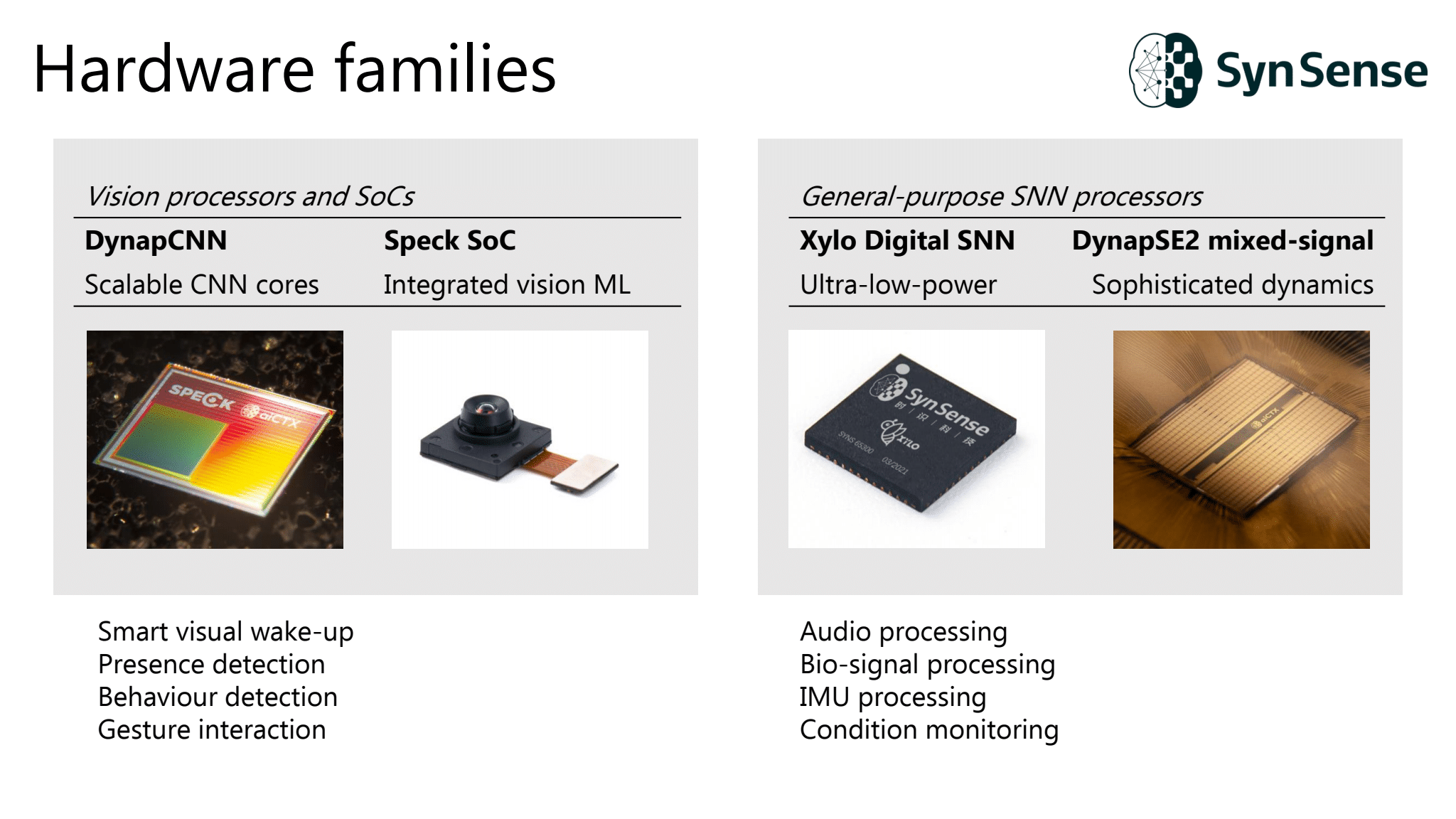

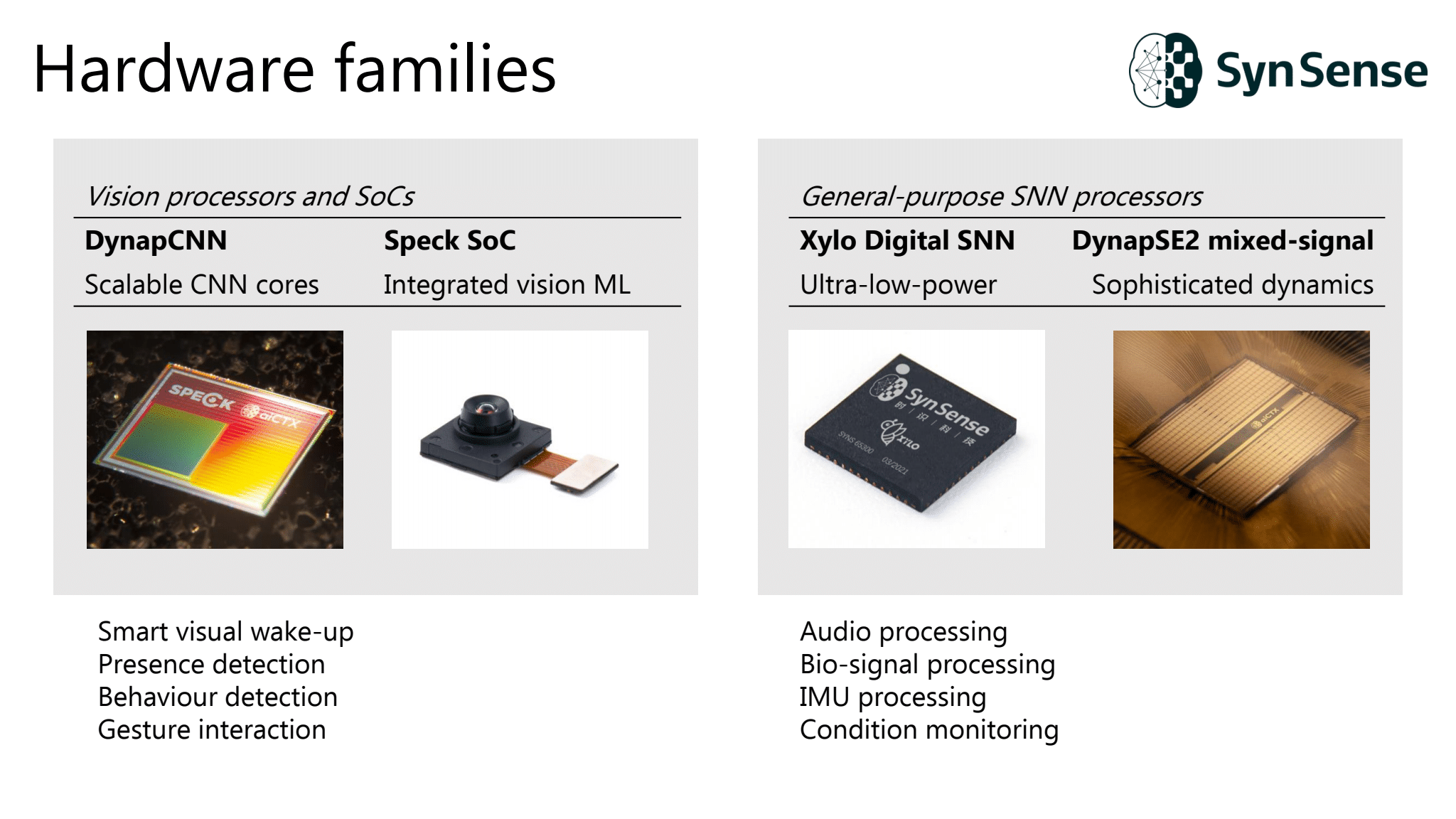

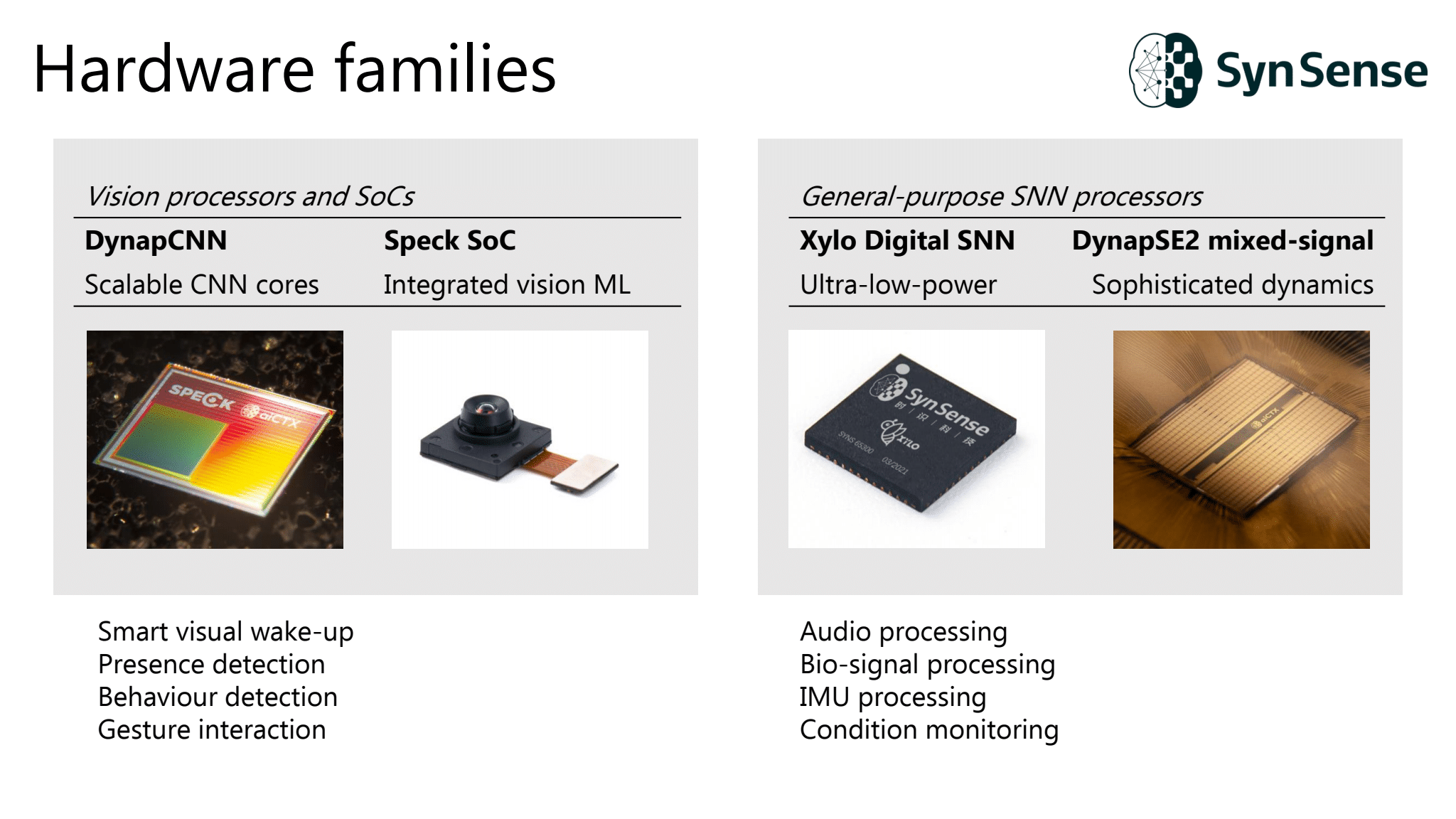

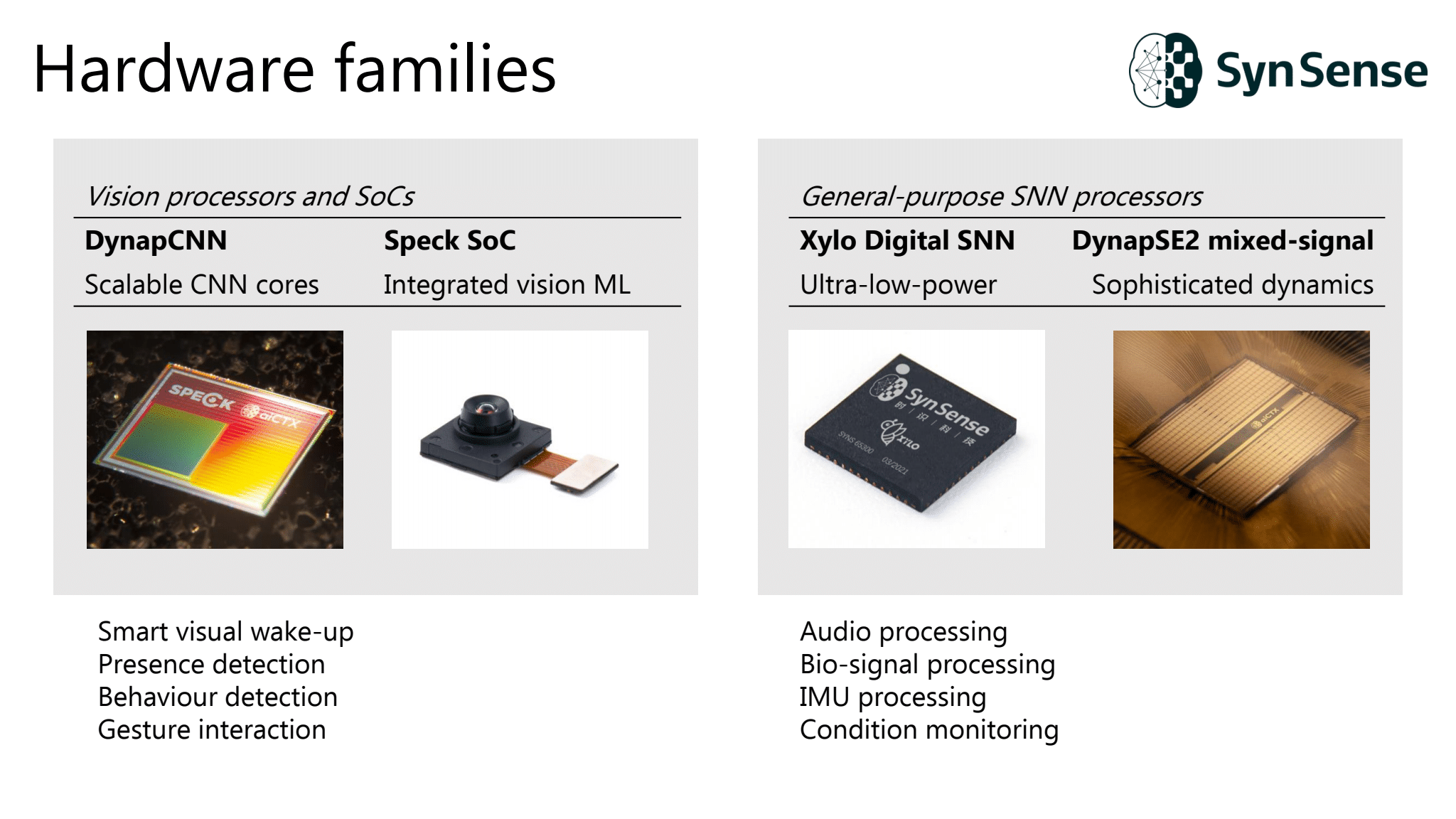

SynSense

- Founded 2017 in Zurich

- Spin-off from UZH/ETH

- Ning Qiao and Prof. Giacomo Indiveri

- Offices in Zurich, Chengdu, Shanghai

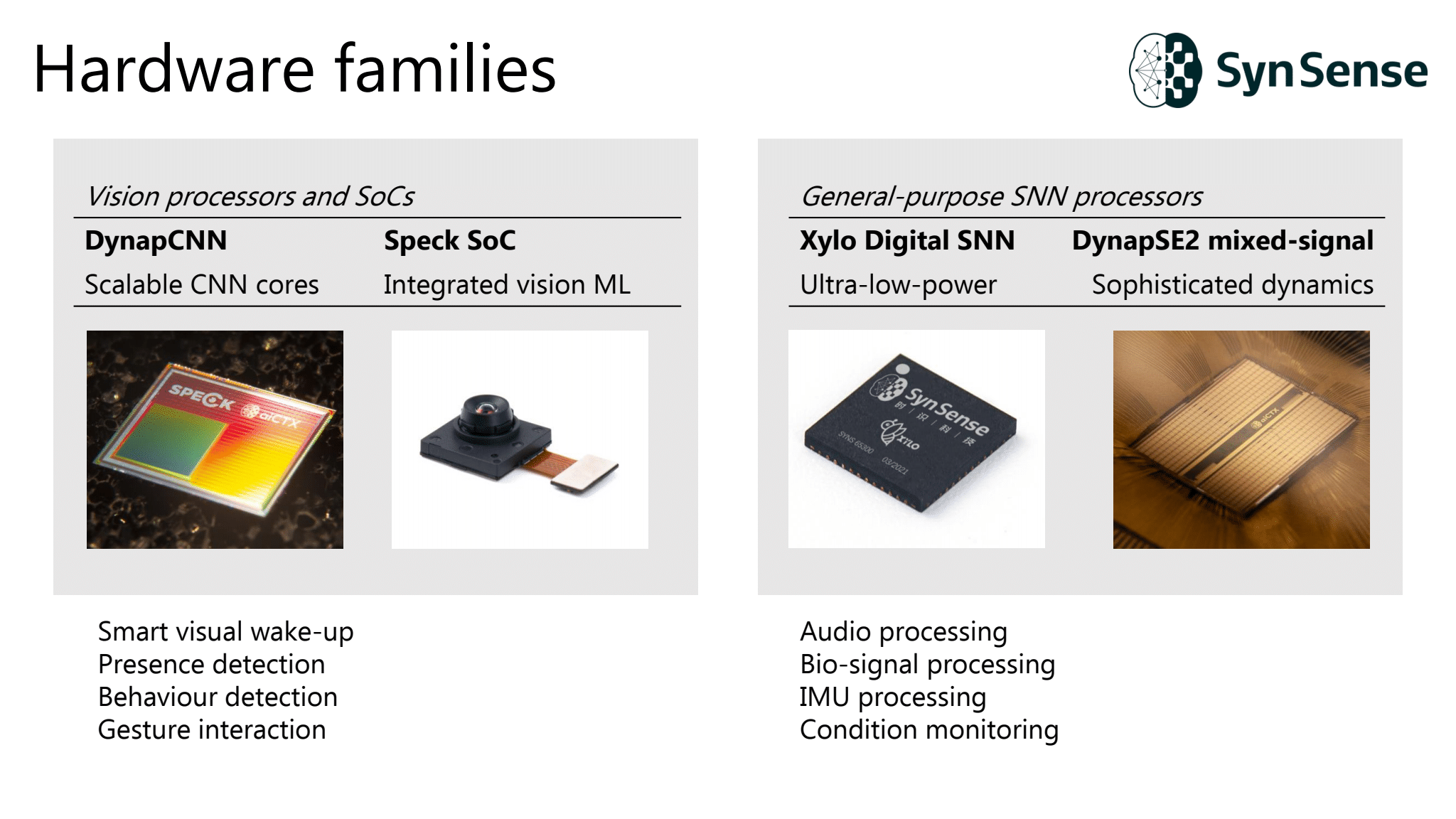

Core tech teams at SynSense

- Hardware team: circuit design for our chips

- System-integration team: interface between the hardware and higher-level software, used to configure the chips

- Algorithms team: Train spiking neural networks for different tasks

Technical challenges

- Dataloading / dataset curation

- Training robust models

- Deployment to custom hardware

- Dataloading and datasets: Tonic (tonic.readthedocs.io/)

- SNN training and deployment for vision models: Sinabs (sinabs.ai)

- SNN training and deployment for audio models: Rockpool (rockpool.ai)

- Accelerated neuron models: see the SynSense GitHub

Why we write our own libraries

- We started in 2017

- The set of features we need on top of PyTorch is small, so the time effort is manageable

- Need high degree of control to move fast

- Features that we care about without the need for compromises

- Tailored to our hardware

Dataloading and datasets

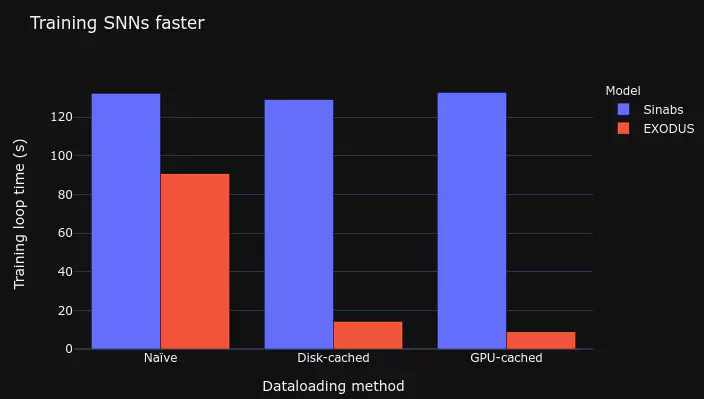

- Dataloading is a serious bottleneck when training models
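
One common way to ease this bottleneck is to cache preprocessed samples on disk so that the expensive decoding step runs only once per sample. The sketch below is a minimal illustration using only the standard library; `CachedDataset` and `SlowDataset` are hypothetical names made up for this example and are not taken from any SynSense library.

```python
import pickle
import tempfile
from pathlib import Path


class CachedDataset:
    """Wrap an indexable dataset and cache each sample on disk after first access."""

    def __init__(self, dataset, cache_dir):
        self.dataset = dataset
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def __len__(self):
        return len(self.dataset)

    def __getitem__(self, index):
        cache_file = self.cache_dir / f"{index}.pkl"
        if cache_file.exists():
            # Cache hit: skip the expensive loading and preprocessing.
            with cache_file.open("rb") as f:
                return pickle.load(f)
        sample = self.dataset[index]  # cache miss: pay the full cost once
        with cache_file.open("wb") as f:
            pickle.dump(sample, f)
        return sample


class SlowDataset:
    """Hypothetical stand-in for a dataset with costly per-sample decoding."""

    def __init__(self):
        self.load_count = 0

    def __len__(self):
        return 4

    def __getitem__(self, index):
        self.load_count += 1  # count how often the expensive path runs
        return [(t, index) for t in range(3)]


slow = SlowDataset()
cached = CachedDataset(slow, tempfile.mkdtemp())
first_epoch = [cached[i] for i in range(len(cached))]
second_epoch = [cached[i] for i in range(len(cached))]
```

In this sketch the second epoch is served entirely from the cache, so the underlying dataset is only read during the first pass.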

Dataloading and datasets

https://lenzgregor.com/posts/train-snns-fast/

- We create our own datasets - how to manage them?

- Event-based filtering / transformations
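
To make "event-based filtering / transformations" concrete, here is a minimal sketch of two typical operations: a refractory filter that drops events arriving too soon after the previous one at the same pixel, and a transform that bins events into frames. It assumes events are `(x, y, t, p)` tuples with integer timestamps and polarity 0 or 1; the function names are illustrative and not the API of any particular library.

```python
def refractory_filter(events, refractory_period):
    """Drop events that arrive within refractory_period of the last kept event at the same pixel."""
    last_kept = {}
    kept = []
    for x, y, t, p in sorted(events, key=lambda e: e[2]):
        if (x, y) not in last_kept or t - last_kept[(x, y)] >= refractory_period:
            kept.append((x, y, t, p))
            last_kept[(x, y)] = t
    return kept


def to_frames(events, width, height, time_window):
    """Bin (x, y, t, p) events into frames of per-pixel event counts.

    Returns a list of frames indexed as frame[polarity][y][x].
    """
    if not events:
        return []
    t_start = min(t for _, _, t, _ in events)
    t_end = max(t for _, _, t, _ in events)
    n_frames = (t_end - t_start) // time_window + 1
    frames = [
        [[[0] * width for _ in range(height)] for _ in range(2)]
        for _ in range(n_frames)
    ]
    for x, y, t, p in events:
        frames[(t - t_start) // time_window][p][y][x] += 1
    return frames


# Toy recording on a 2x2 sensor, timestamps in microseconds.
events = [(0, 0, 0, 1), (0, 0, 50, 1), (1, 1, 120, 0), (0, 0, 1500, 1)]
filtered = refractory_filter(events, refractory_period=100)
frames = to_frames(filtered, width=2, height=2, time_window=1000)
```

Filtering before binning keeps the work proportional to the number of events rather than the number of pixels, which is usually the cheaper order for sparse recordings.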

SNN training

- Anti-features: intricate neuron models, biologically-plausible learning rules

- Focus on training speed and robustness
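
To illustrate how simple a neuron model can be when intricate dynamics are treated as an anti-feature, here is a minimal sketch of a non-leaky integrate-and-fire neuron in plain Python. The subtractive reset and the limit of one spike per time step are assumptions chosen for this example, not a description of how any SynSense library implements its neurons.

```python
def iaf_neuron(input_current, threshold=1.0):
    """Simulate one integrate-and-fire neuron over discrete time steps.

    There is no leak; the membrane potential is reduced by the threshold
    after each spike (assumed subtractive reset).
    """
    v_mem = 0.0
    spikes = []
    for current in input_current:
        v_mem += current  # integrate the input
        if v_mem >= threshold:
            spikes.append(1)
            v_mem -= threshold  # reset by subtraction
        else:
            spikes.append(0)
    return spikes


spikes = iaf_neuron([0.5, 0.5, 0.25, 0.25, 0.75, 1.0])
```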

SNN training

EXODUS: Stable and Efficient Training of Spiking Neural Networks

Bauer, Lenz, Haghighatshoar, Sheik, 2022

SNN training

Supervised training of spiking neural networks for robust deployment on mixed-signal neuromorphic processors

Büchel, Zendrikov, Solinas, Indiveri & Muir, 2021

Model definition and deployment to Speck

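    import torch.nn as nn
    import sinabs.layers as sl

    # Spiking CNN: standard PyTorch layers with integrate-and-fire activations
    model = nn.Sequential(
        nn.Conv2d(2, 8, 3),
        sl.IAF(),
        nn.AvgPool2d(2),
        nn.Conv2d(8, 16, 3),
        sl.IAF(),
        nn.AvgPool2d(2),
        nn.Flatten(),
        nn.Linear(576, 10),  # 16 channels * 6 * 6 for a (2, 30, 30) input
        sl.IAF(),
    )

    # training...

    from sinabs.backend.dynapcnn import DynapcnnNetwork

    # Convert the trained model into a chip-compatible network
    dynapcnn_net = DynapcnnNetwork(
        snn=model,
        input_shape=(2, 30, 30)
    )

    # Upload the network to a connected Speck device
    dynapcnn_net.to("speck2b")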
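
The input size of the final `nn.Linear` layer has to equal the number of features leaving `nn.Flatten()`. A quick way to check this for a `(2, 30, 30)` input is to trace the spatial dimensions through the layers by hand. The sketch below assumes PyTorch's defaults (stride 1 and no padding for `Conv2d`, stride equal to the kernel size for `AvgPool2d`); `conv_out` is a helper written for this example.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size along one spatial dimension of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


channels, size = 2, 30                 # input shape (2, 30, 30), square input

size = conv_out(size, kernel=3)        # Conv2d(2, 8, 3)   -> 28
channels = 8
size = conv_out(size, kernel=2, stride=2)  # AvgPool2d(2)  -> 14
size = conv_out(size, kernel=3)        # Conv2d(8, 16, 3)  -> 12
channels = 16
size = conv_out(size, kernel=2, stride=2)  # AvgPool2d(2)  -> 6

flattened = channels * size * size     # features after nn.Flatten()
```

With these assumptions the flattened feature count is 16 * 6 * 6 = 576.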

Bit-precise simulation of hardware

    # model definition...

    from rockpool.transform import quantize_methods as q
    from rockpool.devices.xylo import config_from_specification, XyloSim
    from rockpool.devices import xylo as x

    # map the graph to Xylo HW architecture
    spec = x.mapper(model.as_graph(), weight_dtype='float')

    # quantize the parameters to Xylo HW constraints
    spec.update(q.global_quantize(**spec))

    # build a hardware configuration from the quantized specification
    xylo_conf, _, _ = config_from_specification(**spec)

    # instantiate the bit-precise simulator from that configuration
    XyloSim_model = XyloSim.from_config(xylo_conf)
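
The quantization step is what makes a bit-precise simulation possible: float parameters are mapped to the integer range the hardware can store. The sketch below shows the general idea of a global scheme, where a single scale factor is shared by all weights. It is not Rockpool's `global_quantize` implementation, and the 8-bit width is an assumption picked for illustration.

```python
def global_quantize_sketch(weights, bits=8):
    """Map float weights to signed integers using one shared scale factor."""
    max_int = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    # Scale so that the largest-magnitude weight lands on max_int.
    scale = max_int / max_abs if max_abs > 0 else 1.0
    quantized = [round(w * scale) for w in weights]
    return quantized, scale


weights = [0.4, -1.0, 0.25, 0.0]
quantized, scale = global_quantize_sketch(weights)

# Dequantize to see how much precision was lost.
recovered = [q_w / scale for q_w in quantized]
```

Because one scale is shared by all weights, a single large outlier shrinks the resolution available to every other weight, which is one reason to simulate the quantized network before deploying it.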

Conclusions

- Our research progress is publicly available

- Neuron models are dictated by hardware capabilities; unfortunately, there are no standardized neuron models

- We offer mature frameworks that are battle-tested and are open to contributions

Algorithms team

lenzgregor.com

Gregor Lenz

Open Neuromorphic 13.12.2022
