22 Mar 2017

P3 Unit Leader Lee Haeseung

Applying to CDNW

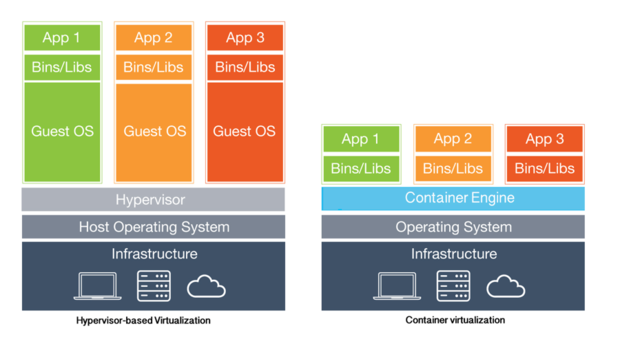

Virtual Machine, Hypervisor, ... in more depth

Docker

Docker Lifecycle

- docker create

- docker rename

- docker run

- docker rm

- docker update
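The commands above can be read as transitions in a small state machine. Below is a simplified Python model, assuming only four states (created, running, exited, removed). The `Container` class, `LifecycleError`, and the `stop` step (from `docker stop`, which is not in the list above) are illustrative additions. Real Docker also has states such as paused and restarting.

```python
from dataclasses import dataclass, field
from typing import Dict


class LifecycleError(Exception):
    """Raised when a command is not valid in the container's current state."""


@dataclass
class Container:
    """Simplified lifecycle model (hypothetical, not Docker's real state machine)."""
    image: str
    name: str
    state: str = "created"  # created -> running -> exited -> removed
    limits: Dict[str, str] = field(default_factory=dict)

    def start(self) -> None:
        if self.state not in ("created", "exited"):
            raise LifecycleError(f"cannot start a {self.state} container")
        self.state = "running"

    def stop(self) -> None:
        if self.state != "running":
            raise LifecycleError(f"cannot stop a {self.state} container")
        self.state = "exited"

    def rename(self, new_name: str) -> None:
        # `docker rename`: only the name changes; the state is untouched.
        self._require_not_removed("rename")
        self.name = new_name

    def update(self, **limits: str) -> None:
        # `docker update`: change resource limits (e.g. memory) in place.
        self._require_not_removed("update")
        self.limits.update(limits)

    def rm(self, force: bool = False) -> None:
        # `docker rm` refuses a running container unless forced (`docker rm -f`).
        if self.state == "running" and not force:
            raise LifecycleError("container is running; stop it first or force")
        self.state = "removed"

    def _require_not_removed(self, command: str) -> None:
        if self.state == "removed":
            raise LifecycleError(f"cannot {command} a removed container")


def create(image: str, name: str) -> Container:
    """`docker create`: prepare a container without starting it."""
    return Container(image=image, name=name)


def run(image: str, name: str) -> Container:
    """`docker run` behaves like `docker create` followed by `docker start`."""
    container = create(image, name)
    container.start()
    return container


web = run("nginx", "web")
web.rename("web-1")
web.update(memory="512m")
web.stop()
web.rm()
```

The key point the model captures is that `run` is not a separate state. It is `create` plus `start`.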

Cheat Sheet


Deployment Process

- Authorization
- Add User
- Env Vars
- Firewall
- Dependencies
- Network
- Proxy Setting
- Run App!!
- Bundle Install
- Configuration
- Install Go or Python
- Git Clone

State Change during Deployment

(diagram: server states A, B, C)

In practice, a server runs numerous applications.

Configuration Management

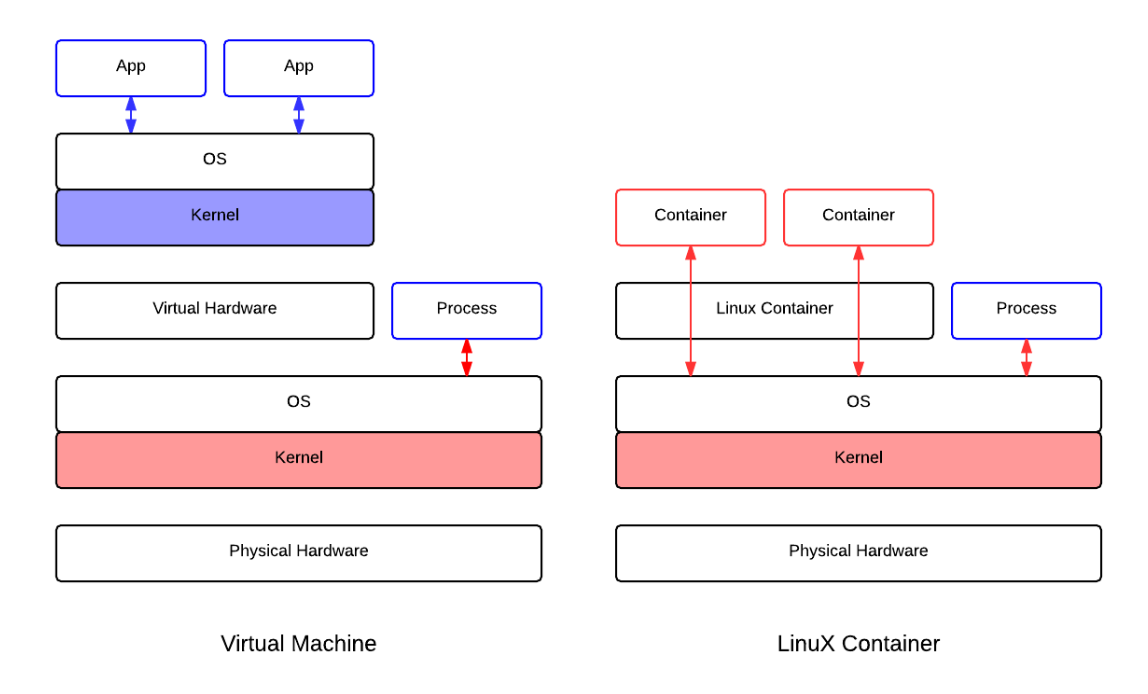
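The A/B/C diagram shows why configuration management matters: the same scripted steps behave differently on servers that start in different states. Below is a minimal sketch of the declarative, idempotent approach that tools such as Puppet or Ansible generally take. It compares desired and current state and changes only what differs. It assumes a server's configuration can be flattened into key/value settings, and all keys and values are hypothetical.

```python
from typing import Dict, List, Tuple

State = Dict[str, str]


def converge(current: State, desired: State) -> Tuple[State, List[str]]:
    """Bring `current` to `desired`; return the new state and the changes made.

    Only settings that differ are touched, so running it again is a no-op.
    Keys absent from `desired` are left alone (a simplification).
    """
    new_state = dict(current)
    changes: List[str] = []
    for key, value in sorted(desired.items()):
        if new_state.get(key) != value:
            changes.append(f"{key}: {new_state.get(key)!r} -> {value!r}")
            new_state[key] = value
    return new_state, changes


# Hypothetical desired configuration for an application server.
desired = {"user": "app", "runtime": "go",
           "proxy": "http://proxy.example:3128", "firewall": "allow 80/tcp"}

# Three servers that start in different states (A, B, C in the diagram).
servers = {"A": {}, "B": {"user": "app", "runtime": "python"}, "C": dict(desired)}

report = {}
for name in sorted(servers):
    converged, changes = converge(servers[name], desired)
    servers[name] = converged
    report[name] = len(changes)  # A: 4 changes, B: 3, C: 0
```

All three servers end up identical, and a second pass reports no changes. This is the property that makes repeated deployments safe.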

Docker architecture

Docker today (latest: 1.13)

- Process isolation: LXC, Libcontainer
- Layered storage: AuFS, DeviceMapper, BTRFS
- Image sharing: Docker Hub, Registry (Private, 3rd Party), Repository
- Boot2Docker
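Layered storage (AuFS, DeviceMapper, BTRFS) is what lets many containers share one read-only image. Below is a toy model that assumes each layer is just a dict from path to content:

- Reads search from the top (writable container) layer down.
- Writes go only to the top layer (copy-on-write).
- Deleting a lower-layer file records a "whiteout" marker, an idea AuFS implements with `.wh.`-prefixed files.

Real storage drivers work on files or blocks on disk, not dicts. The paths below are hypothetical.

```python
from typing import Dict, List, Optional

# Marker standing in for a whiteout entry: hides a file from lower layers.
WHITEOUT = object()

Layer = Dict[str, object]


def lookup(layers: List[Layer], path: str) -> Optional[object]:
    """Return the visible content of `path`; layers are ordered top to bottom."""
    for layer in layers:
        if path in layer:
            content = layer[path]
            return None if content is WHITEOUT else content
    return None


def write(layers: List[Layer], path: str, content: object) -> None:
    """Copy-on-write: every change lands in the top (container) layer only."""
    layers[0][path] = content


def delete(layers: List[Layer], path: str) -> None:
    """Deleting a file records a whiteout in the top layer; lower layers stay intact."""
    layers[0][path] = WHITEOUT


def visible_files(layers: List[Layer]) -> Dict[str, object]:
    """Merged view of all layers, as a process inside the container sees it."""
    merged: Dict[str, object] = {}
    for layer in reversed(layers):  # apply the bottom layer first, the top layer last
        merged.update(layer)
    return {path: c for path, c in merged.items() if c is not WHITEOUT}


# Read-only image layers plus one writable container layer.
base = {"/etc/os-release": "debian", "/app/config": "v1"}
app = {"/app/config": "v2", "/app/server": "binary"}
container_layer: Layer = {}
layers = [container_layer, app, base]  # top first

write(layers, "/app/config", "v3")
delete(layers, "/app/server")
```

The image layers `base` and `app` are never modified. This is why any number of containers can be started from the same image.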

Systems supported by Docker

Ubuntu, Red Hat Enterprise Linux, Oracle Linux, CentOS, Debian, Gentoo, Google Cloud Platform, Rackspace Cloud (Carina), Amazon EC2, IBM SoftLayer, Microsoft Azure, Arch Linux, Fedora, openSUSE, CRUX Linux, Microsoft Windows/Windows Server, Mac OS X

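What makes Docker different

- Image-based application virtualization

(diagram: applications A, B, C, D, E)

Image: a self-contained environment in which a specific application can run

Container: an isolated process running from a specific image

Standardized images

Image creation and sharing

Official registry service

Private registry application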
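Sharing standardized images through a registry relies on content addressing. Docker identifies layers by a `sha256:` digest of their content, so a layer used by several images only needs to be stored once. The `ToyRegistry` class below is a hypothetical in-memory illustration of that idea, not the Docker Registry HTTP API. The image names are made up.

```python
import hashlib
from typing import Dict, List


def digest_of(data: bytes) -> str:
    """Content address of a blob, in the `sha256:<hex>` form Docker uses for digests."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


class ToyRegistry:
    """Hypothetical in-memory registry: layer blobs keyed by their digest."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}          # digest -> layer bytes
        self.manifests: Dict[str, List[str]] = {}  # "name:tag" -> layer digests

    def push(self, ref: str, layers: List[bytes]) -> List[str]:
        digests = []
        for data in layers:
            d = digest_of(data)
            self.blobs.setdefault(d, data)  # a shared layer is stored only once
            digests.append(d)
        self.manifests[ref] = digests
        return digests

    def pull(self, ref: str) -> List[bytes]:
        layers = []
        for d in self.manifests[ref]:
            data = self.blobs[d]
            if digest_of(data) != d:  # the digest doubles as an integrity check
                raise ValueError(f"corrupted blob {d}")
            layers.append(data)
        return layers


registry = ToyRegistry()
base = b"ubuntu base filesystem"
registry.push("cdn/cache:1.0", [base, b"cache binary v1"])
registry.push("cdn/logger:1.0", [base, b"logger binary v1"])
```

Two images with a common base layer result in three stored blobs, not four.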

Container vs. Docker

: Docker focuses on the portability of standardized containers

LXC vs. Docker

: LXC == a tool for process isolation

: Docker == a tool for shipping containers

The essence of Docker

The freedom of IaaS

The simplicity of PaaS

--> Container on CDN?

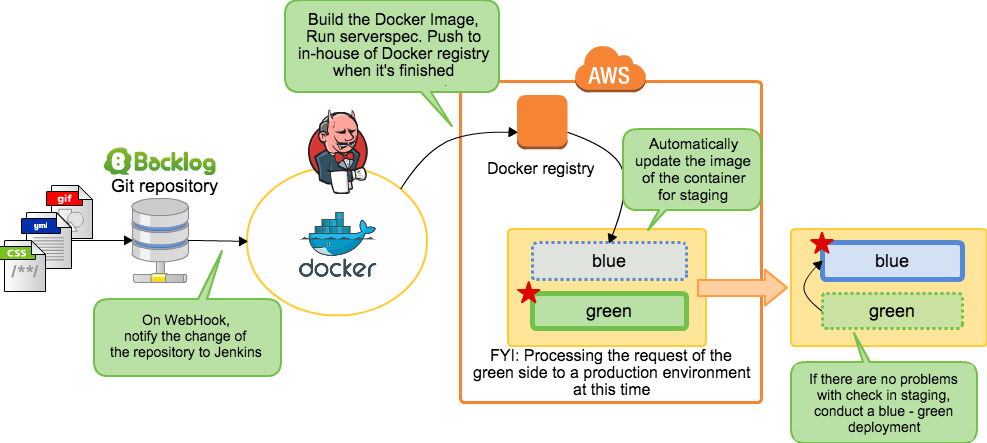

5. Redefining Deployment with Docker

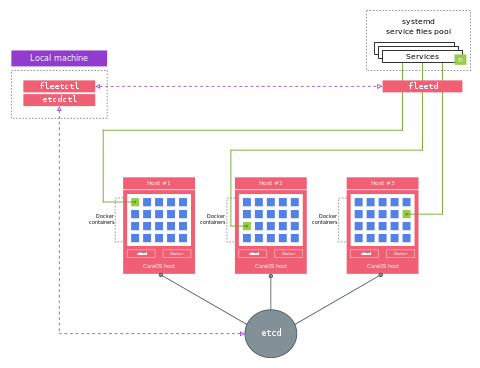

CoreOS

A Docker-dedicated OS that supports clustering

A logistics system?

If Docker turns every application into a container, the server exists only to run Docker --> the CoreOS concept

CDN 3.0?

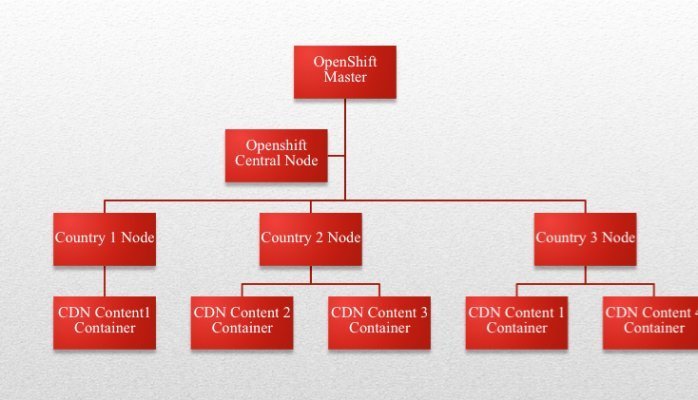

... easier to manage is to use OpenShift 3.0 as the infrastructure of the CDN.

Feature Toggle: LaunchDarkly
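A feature toggle separates deploying code from releasing a feature. LaunchDarkly offers this as a hosted service. The function below is not its SDK. It is a minimal sketch of one common technique, a deterministic percentage rollout, and the flag name is hypothetical.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if `flag` is on for `user_id` under a percentage rollout.

    Hashing flag and user together gives each user a stable bucket in [0, 100),
    so a user always gets the same answer and raising the percentage only adds users.
    """
    key = f"{flag}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 100
    return bucket < rollout_percent


users = [f"user-{i}" for i in range(1000)]
enabled = sum(is_enabled("new-cache-policy", u, 30) for u in users)  # roughly 300
```

Because buckets are derived from the user ID rather than drawn at random per request, a user never flips between the old and new behavior while the rollout percentage stays fixed.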

| Feature | Mesos + Marathon | Kubernetes |
|---|---|---|
| Types of Workloads | Support for diverse kinds of workloads such as big data, cloud native apps, etc. | Cloud native applications |
| Application Definition | "Application Group" models dependencies as a tree of groups. Components are started in dependency order. Colocation of a group's containers on the same Mesos slave is not supported. A Pod abstraction is on the roadmap, but not yet available. | A combination of Pods, Replication Controllers, Replica Sets, Services and Deployments. A pod is a group of co-located containers and the atomic unit of deployment. Pods do not express dependencies among individual containers within them. Containers in a single Pod are guaranteed to run on a single Kubernetes node. |
| Application Scalability Constructs | An individual group can be scaled; its dependents in the tree are also scaled. | Each application tier is defined as a pod and can be scaled when managed by a Deployment or Replication Controller. Scaling can be manual or automated. |
| High Availability | Applications are distributed among slave nodes. | Pods are distributed among worker nodes. Services are also highly available, because unhealthy pods are detected and removed. |
| Load Balancing | Applications can be reached via Mesos-DNS, which can act as a rudimentary load balancer. | Pods are exposed through a Service, which can act as a load balancer. |
| Auto-Scaling for the Application | Load-sensitive autoscaling is available as a proof-of-concept application. Rate-sensitive autoscaling is available for Mesosphere's enterprise customers. Rich metric-based scaling policy. | Auto-scaling to a simple number-of-pods target is defined declaratively with the API exposed by Replication Controllers. A CPU-utilization-per-pod target is available as of v1.1 in the Scale subresource. Other targets are on the roadmap. |
| Rolling Application Upgrades and Rollback | "Rolling restarts" model uses an application-defined minimumHealthCapacity (ratio of nodes serving the new/old application). "Health check" hooks consume a "health" API provided by the application itself. | The "Deployment" model supports strategies, and one similar to Mesos is planned for the future. Health checks test for liveness, i.e. whether the app is responsive. |
| Logging and Monitoring | Logging: can use ELK. Monitoring: can use external tools. | Health checks of two kinds: liveness (is the app responsive?) and readiness (is the app responsive but still busy preparing and not yet able to serve?). Logging: container logs are shipped to an Elasticsearch/Kibana (ELK) service deployed in the cluster. Resource usage monitoring: Heapster/Grafana/Influx service deployed in the cluster. Logging/monitoring add-ons are part of the official project. Sysdig Cloud integration. |
| Storage | A Marathon container can use persistent volumes, but such volumes are local to the node where they are created, so the container must always run on that node. An experimental Flocker integration supports persistent volumes that are not local to one node. | Two storage APIs: the first provides abstractions for individual storage backends (e.g. NFS, AWS EBS, Ceph, Flocker); the second provides an abstraction for a storage resource request (e.g. 8 GB), which can be fulfilled with different storage backends. Modifying the storage resource used by the Docker daemon on a cluster node requires temporarily removing the node from the cluster. |
| Networking | Marathon's Docker integration facilitates mapping container ports to host ports, which are a limited resource. A container does not get its own IP by default, but it can if Mesos is integrated with Calico. Even so, multiple containers cannot share a network namespace (i.e. cannot talk to one another on localhost). | The networking model lets any pod communicate with other pods and with any service. The model requires two networks (one for pods, the other for services). Neither network is assumed to be (or needs to be) reachable from outside the cluster. The most common way of meeting this requirement is to deploy an overlay network on the cluster nodes. |
| Service Discovery | Containers can discover services using DNS or a reverse proxy. | Pods discover services using intra-cluster DNS. |
| Performance and Scalability | Mesos has been simulated to scale to 50,000 nodes, although it is not clear how far scale has been pushed in production environments. Mesos can run LXC or Docker containers directly from the Marathon framework, or it can fire up Kubernetes or Docker Swarm (the Docker-branded container manager) and let them do it. | With the release of 1.2, Kubernetes supports 1,000-node clusters. Kubernetes scalability is benchmarked against the following Service Level Objectives (SLOs). API responsiveness: 99% of all API calls return in less than 1 s. Pod startup time: 99% of pods and their containers (with pre-pulled images) start within 5 s. |
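The Kubernetes auto-scaling row can be made concrete with the rule given in the Kubernetes Horizontal Pod Autoscaler documentation: `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. Scaling is skipped when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies the rule to CPU utilization. The min/max bounds are hypothetical parameters, and the v1.1-era implementation described in the table may differ in detail.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """HPA-style rule: scale by the ratio of observed to target utilization."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: do not scale, to avoid flapping.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 pods at 90% CPU against a 60% target gives ceil(4 * 1.5) = 6 replicas. The same 4 pods at 30% would be scaled down to 2.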

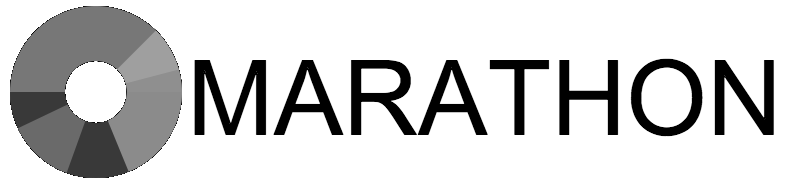

- Mesos + Marathon is slightly ahead of Kubernetes and scales to larger clusters.

- Mesos can run multiple frameworks, and Kubernetes is one of them.

- Kubernetes on Mesos works!

K8s-Mesos architecture

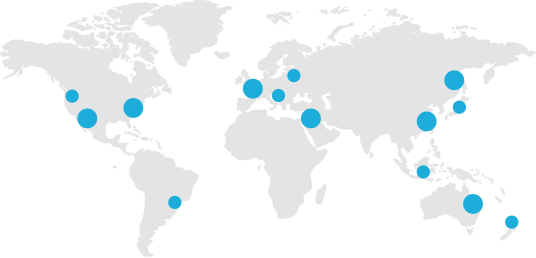

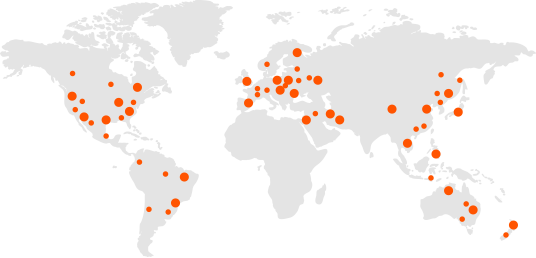

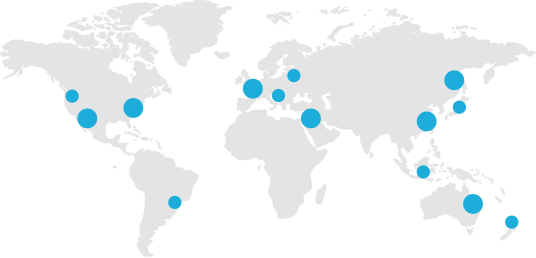

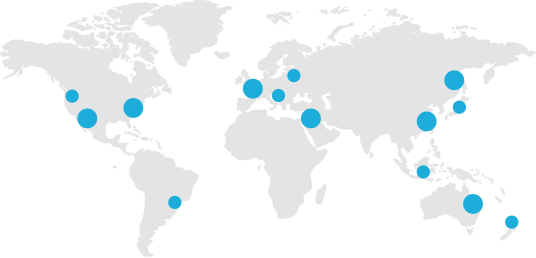

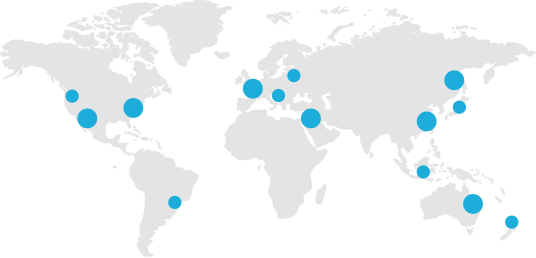

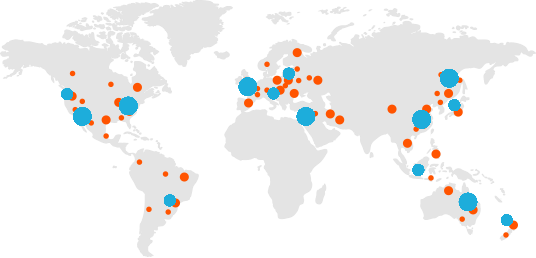

Suddenly, CDN topology

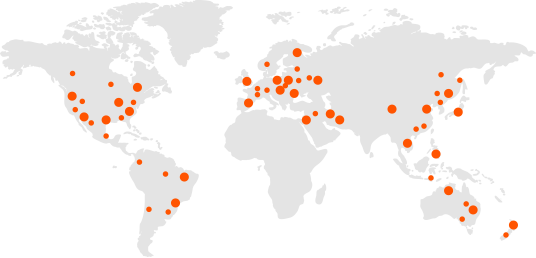

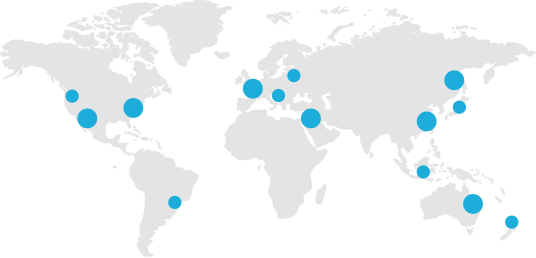

Scattered CDN

- Physical proximity minimizes latency
- Effective in low-connectivity regions
- Smaller POPs are easier to deploy
- Higher maintenance costs
- RTT prolonged by multiple connection points
- Cumbersome to deploy new configurations

Consolidated CDN

- High-capacity servers are better for DDoS mitigation
- Enables agile configuration deployment
- Lower maintenance costs
- Less effective in low-connectivity regions
- High-capacity POPs are harder to deploy
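The latency argument for scattered POPs can be illustrated by routing a client to its nearest POP and computing a lower bound on round-trip time from distance alone. This sketch assumes light travels about 200 km per millisecond in fiber. It ignores routing detours, queuing and extra connection points. The POP coordinates and the two POP sets are hypothetical.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # assumption: light covers ~200 km per ms in optical fiber


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(distance_km: float) -> float:
    """Propagation-only lower bound on round-trip time (no routing or queuing)."""
    return 2 * distance_km / FIBER_KM_PER_MS


def nearest_pop(client: LatLon, pops: Dict[str, LatLon]) -> Tuple[str, float]:
    """Pick the POP with the shortest great-circle distance to the client."""
    name = min(pops, key=lambda n: haversine_km(client, pops[n]))
    return name, haversine_km(client, pops[name])


client = (37.4979, 127.0276)  # a user in Seoul
scattered = {"Seoul": (37.5665, 126.9780), "Busan": (35.1796, 129.0756),
             "Tokyo": (35.6762, 139.6503)}
consolidated = {"Tokyo": (35.6762, 139.6503), "Singapore": (1.3521, 103.8198)}

for label, pops in [("scattered", scattered), ("consolidated", consolidated)]:
    name, km = nearest_pop(client, pops)
    print(f"{label}: {name}, {km:.0f} km, >= {min_rtt_ms(km):.1f} ms RTT")
```

With these assumed POP sets, the scattered layout keeps the bound well under 1 ms. The consolidated layout cannot do better than roughly 11 ms for this client, before any real-world overhead.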

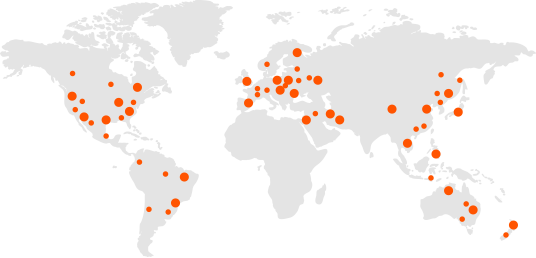

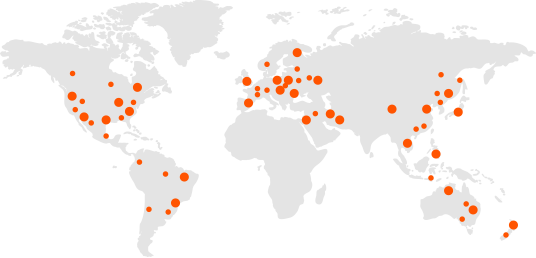


- Scattered CDN: Akamai, CDNW
- Consolidated CDN: CloudFlare, Limelight, and AWS, GCP, Azure, AliYun

CDN strategy?

Scattered CDN vs. Consolidated CDN

CDNW CS vs. CDNW NGP?

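The current structure forces CS and NGP to keep being operated separately.

Integration is hard. Building something new is faster --> PJ WE?

Let's fix at least some parts. Let's move to MSA (microservice architecture) --> but integration is still unsolved?

We need a bigger picture (architecture). But who will draw it?

What should be containerized? Is Docker just a technology? How do we adapt it to our needs?

Should we actively apply SD (Software-Defined) approaches to reduce CAPEX & OPEX?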

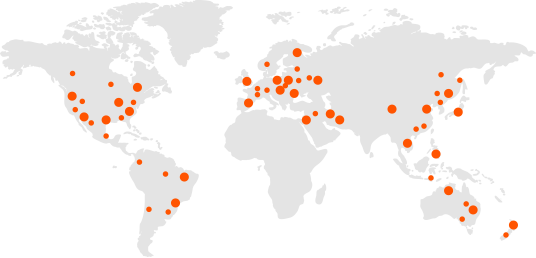

Ganglion model (or nerve knot model)

A scattered cache network built on a few consolidated data-processing hubs

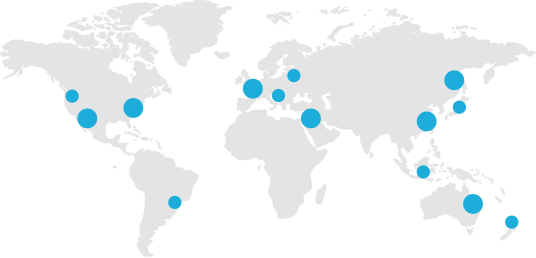

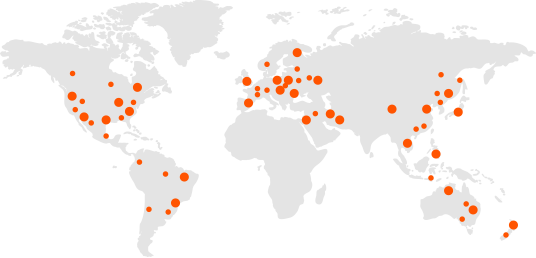

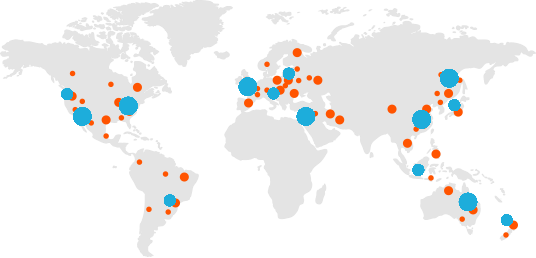

CDN strategy?

Scattered CDN

Consolidated CDN

Ganglion Model


Ganglion (or Nerve Knot): Consolidated. Central. High-Powered. Calculated.

Scattered: Throughput. Cached. Consumed.
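One way to read the Ganglion model is as a two-tier cache. Small scattered edge caches serve requests close to users. Their misses go to a consolidated hub, which fetches each object from the origin only once. The sketch below assumes an LRU edge cache and an unbounded hub. `Hub`, `Edge` and the origin function are hypothetical names, not CDNW components.

```python
from collections import OrderedDict
from typing import Callable, Dict


class Hub:
    """Consolidated tier: one large, central cache in front of the origin."""

    def __init__(self, origin: Callable[[str], bytes]) -> None:
        self.origin = origin
        self.store: Dict[str, bytes] = {}
        self.origin_fetches = 0

    def get(self, key: str) -> bytes:
        if key not in self.store:
            self.origin_fetches += 1
            self.store[key] = self.origin(key)
        return self.store[key]


class Edge:
    """Scattered tier: a small LRU cache near users; misses are sent to the hub."""

    def __init__(self, hub: Hub, capacity: int) -> None:
        self.hub = hub
        self.capacity = capacity
        self.cache: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self.cache:
            self.cache.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        value = self.hub.get(key)
        self.cache[key] = value
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return value


hub = Hub(origin=lambda key: f"content of {key}".encode())
seoul, busan = Edge(hub, capacity=2), Edge(hub, capacity=2)
for key in ["a", "b", "a", "c"]:
    seoul.get(key)
busan.get("a")  # an edge miss, but the hub already holds "a"
```

The edges absorb the request volume ("Throughput. Cached. Consumed."). The hub concentrates origin traffic and any heavier processing, so the origin sees each object once regardless of how many edges request it.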

Applying Docker to CDNW

By Lee, Haeseung
