Managing the Electronic Resources Life-cycle: Creating a comprehensive checklist using Techniques for Electronic Resource Management (TERMS)

Nathan Hosburgh, Montana State University | NASIG 2013

WHY?

Create a reference point

Organize workflow & create efficiencies

Foster effective communication within & across teams

Exercise responsible stewardship of resources

Document iterative processes that can be improved

What does "the checklist" look like?

Simple word doc or PDF

Spreadsheet

Database

Flowchart

Management System

Whatever works for you!

Disclaimer:

I suck at ppt and I do not want to torture you.

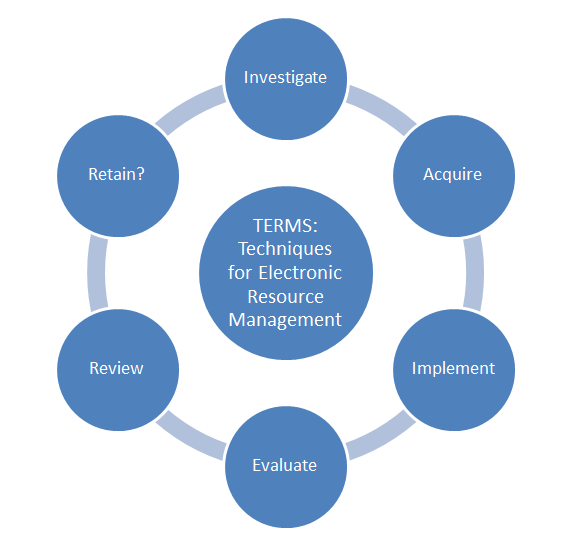

The e-resource life-cycle

Graphic from TERMS Wiki http://library.hud.ac.uk/wikiterms/Main_Page with slight modification

investigating new content

What is the goal?

Specify evaluation criteria

Consult with your team

Desktop review & trial

Communicate with vendors

Make a decision

know what you want to achieve

Identify & gauge demand

Purpose (teaching, research, other)

Response to course, research agenda, PDA

Electronic replacement for print

History of ILL requests

Integration with preferred platform

Budgetary concerns

Evaluation criteria

Audience appropriate?

Intuitive interface?

Compare platforms

Authentication/IP/proxy access?

COUNTER usage stats?

Integration into unified search tool?

MARC Record availability/quality/cost?

Administrative control?

(more) evaluation criteria

Preliminary license review

Unlimited simultaneous use

Remote/walk-in/alumni access

"Site" definition

ILL/course packs/e-reserves/LMS

Perpetual access & where?

Ebook format: PDF, epub, DRM?

get the right team

Single journals/small purchases - no team

Consider project template for large projects

Team Composition:

- E-resource Manager

- Collection Development Librarian

- Budget holder

- Subject Liaison Librarians

- Faculty outside library

review & trial

Satisfy demand with existing resource(s)?

Overlap analysis: coverage & duplication

Product reviews/comparison studies

Timing is important for trial

Publicize on blog, wiki, web, email

Usage stats for trial

Trial feedback

Longer trial is better - at least one month
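
The overlap analysis step above can be sketched with plain set operations. This is a minimal illustration, assuming title lists are available as ISSN strings; the function name `overlap_report` and the sample identifiers are hypothetical placeholders, not real holdings.

```python
def overlap_report(candidate_titles, held_titles):
    """Compare a candidate resource's title list with current holdings."""
    candidate = set(candidate_titles)
    held = set(held_titles)
    duplicated = candidate & held   # titles the library already has
    unique = candidate - held       # titles the candidate would add
    share = len(duplicated) / len(candidate) if candidate else 0.0
    return {
        "duplicated": sorted(duplicated),
        "unique": sorted(unique),
        "duplicate_share": share,
    }


# Placeholder identifiers for illustration only
candidate = ["0000-0001", "0000-0002", "0000-0003", "0000-0004"]
holdings = ["0000-0002", "0000-0009"]
report = overlap_report(candidate, holdings)
print(report["unique"], report["duplicate_share"])
```

A high duplicate share suggests existing resources may already satisfy the demand; a real comparison would also need to account for coverage dates and embargoes.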

talk to providers

Consortia arrangements?

Individual deals may be more flexible/advantageous

Better pricing for multi-year deal?

Inform vendor when looking at similar products

Understand fees and contract

Refer back to specification doc (criteria)

make a decision

Score resource against criteria

Criteria may be weighted based on priorities

Review can take a few hours or months

Document relevant points in ERM/spreadsheet

User feedback can be added to documentation
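
Scoring against weighted criteria can be sketched as a weighted average. This is only an illustration: the criterion names, the 0-5 scoring scale, and the weights below are assumptions, and each library would substitute its own specification document.

```python
def weighted_score(scores, weights):
    """Return the weighted average of criterion scores (same scale as the scores)."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one criterion needs a non-zero weight")
    weighted_sum = sum(scores[name] * weight for name, weight in weights.items())
    return weighted_sum / total_weight


# Hypothetical criteria; a higher weight means a higher local priority
weights = {"audience_fit": 3, "interface": 2, "counter_stats": 1, "license_terms": 4}
resource_a = {"audience_fit": 4, "interface": 3, "counter_stats": 5, "license_terms": 2}
resource_b = {"audience_fit": 3, "interface": 4, "counter_stats": 2, "license_terms": 5}

for name, scores in [("Resource A", resource_a), ("Resource B", resource_b)]:
    print(name, round(weighted_score(scores, weights), 2))
```

Keeping the weights in one place makes it easy to record them alongside the decision in the ERM or spreadsheet.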

acquisition of new content

Compare specs between library & vendor

Negotiate contract terms & pricing

Review the license

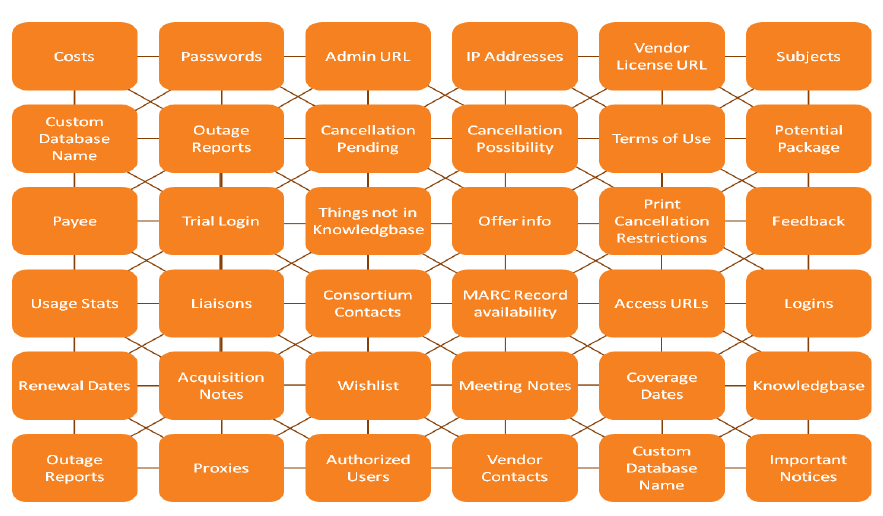

Record administrative metadata

compare specifications

Library & Vendor agreement (selection criteria grid)

Purchase order?

DDA details & configuration

Specific contract for purchasing terms?

Annual renewal process?

Multi-year discounts?

contract negotiation & pricing

SERU Guidelines may be used in lieu of license

Create list of 'deal breakers' & 'must haves'

Model license option

Base price on FTE that will use resource

Adjust price for product crossover

Everything is negotiable!

final license steps

Comprehensive license review

Forward to signing authority

Don't rush; protect yourself

Store countersigned e-copy

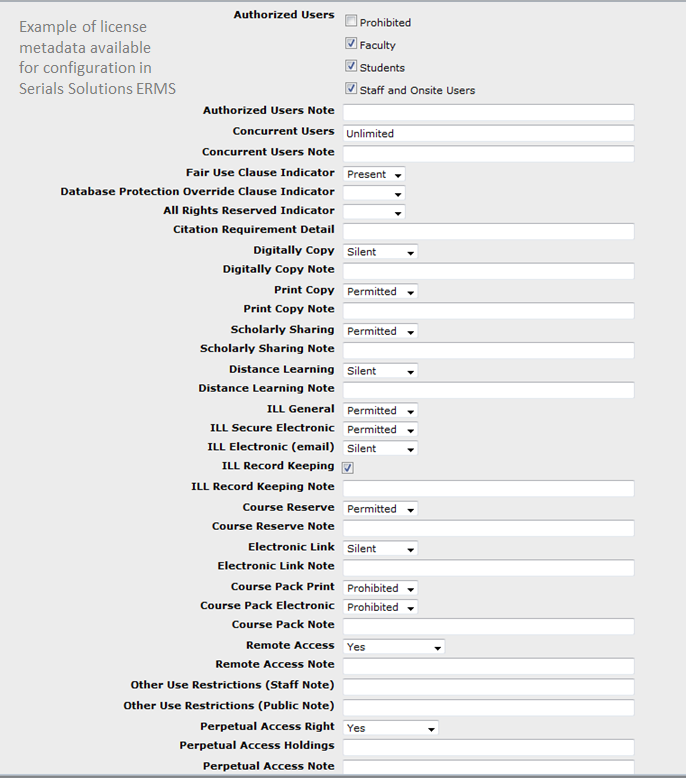

Record administrative data in ERMS:

- Payment terms

- Service terms

- License terms

- Renewal details
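
For libraries tracking this in a spreadsheet or home-grown database rather than a commercial ERMS, the four groups of administrative data above could be modeled roughly as follows. The class name, field names, and sample values are hypothetical and not taken from any particular ERMS.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class LicenseRecord:
    """Administrative metadata for one licensed resource (illustrative fields)."""
    resource: str
    vendor: str
    payment_terms: str = ""
    service_terms: str = ""
    license_terms: dict = field(default_factory=dict)  # e.g. ILL, walk-in access
    renewal_date: Optional[date] = None
    notice_days: int = 0  # required cancellation notice, in days


# Sample values are invented for illustration
record = LicenseRecord(
    resource="Example Journal Package",
    vendor="Example Vendor",
    payment_terms="Invoice annually",
    license_terms={"ill_permitted": True, "walk_in_access": True},
    renewal_date=date(2014, 1, 1),
    notice_days=60,
)
print(record.resource, record.renewal_date)
```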

implementation

Test

Market

Train & Document

Launch & Feedback

testing the product

URL

Proxy/IP access

MARC Records

OpenURL

Admin interface

Usage stats

Access points

marketing the resource

Marketing plan?

Consider needs, wants, interests of users

Target specific user groups

Identify objectives of service/product

Develop marketing matrix

- Actions

- Responsibilities

- Timing

training & documentation

Update guides if replacing resource

May create library documentation

Check vendor documentation

Vendors provide free:

- Webinars

- Podcasts

- Conference Calls

- On-site visit

launch & feedback

Major changes may require 'soft launch'

Gather feedback via survey, focus groups, stats

Compare multiple access points for product

Timing of launch important

ongoing evaluation & access

Types of evaluation

Check the implementation

Ask your users

Track changes & issues

Communicate with the vendor

evaluation methods

Set consistent data points to measure

COUNTER-based stats

ISI Impact Factor

Eigenfactor

Journal Usage Factor project

Aggregated stats:

- Web page

- Discovery tool

- OpenURL

- ILS

check the implementation

Set up review schedule

After purchase: monthly, quarterly, annually

Check links & remote authentication

Check full-text access

General usability issues

ERMS reminder configuration

ask your users

Are e-resource needs being met?

Structured: LibQual+, survey

Unstructured: web comments, resource issues

Record comments in CRM or spreadsheet

Develop consistent approach; coherent reporting

monitor platform changes

Journal title transfers between platforms & publishers

'Transfer Code of Practice'

Team effort for quality control

Typical checks at renewal period

ERMS & subscription service aid verification

vendor communication

Keep records on each provider

- Correspondence

- Scheduled maintenance

- Specific problems

Information factors into renewal

Feedback may improve product

Join pertinent user groups for engagement

annual review process

Cancellation schedule

Consider new costs & terms

Examine usage stats

Communicate with stakeholders

Retain/renegotiate/cancel

schedule

Check required notice period on subscription

Examine price increase from last year

Renewals may occur throughout year

May be efficient to review resources in batch

Review new & established resources

Planning meetings for subject teams
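
The notice-period check can be reduced to simple date arithmetic. A minimal sketch, assuming the license states the notice period in days; the 30-day planning lead time and the sample dates are arbitrary assumptions.

```python
from datetime import date, timedelta


def notice_deadline(renewal_date, notice_days):
    """Last day on which cancellation notice can be given."""
    return renewal_date - timedelta(days=notice_days)


def needs_decision(renewal_date, notice_days, today, lead_days=30):
    """True once today is within lead_days of the notice deadline, or past it."""
    return today >= notice_deadline(renewal_date, notice_days) - timedelta(days=lead_days)


renewal = date(2014, 1, 1)
print(notice_deadline(renewal, 60))                    # 2013-11-02
print(needs_decision(renewal, 60, date(2013, 10, 10)))  # True
```

Running a check like this across all subscriptions is one way to batch resources for review ahead of their deadlines.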

review costs, terms, conditions

Contact vendor in advance of renewal

Analyze price increases against agreement

Consider other pricing options if available:

- Simultaneous users vs. site license

- Subscription vs. one-time purchase

- Annual vs. multi-year
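
Checking a price increase against the agreement is a percentage calculation. The sketch below assumes the agreement specifies an annual cap as a percentage; the function names and sample figures are hypothetical.

```python
def percent_increase(last_year, this_year):
    """Year-over-year price change as a percentage of last year's price."""
    if last_year <= 0:
        raise ValueError("last year's price must be positive")
    return (this_year - last_year) * 100 / last_year


def exceeds_cap(last_year, this_year, cap_percent):
    """True when the increase is larger than the cap agreed in the contract."""
    return percent_increase(last_year, this_year) > cap_percent


# Sample figures for illustration only
print(percent_increase(10000, 10600))   # 6.0
print(exceeds_cap(10000, 10600, 5))     # True
```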

usage statistics

Check if vendor is COUNTER-compliant

Compare hits/searches/downloads

Nuanced data in COUNTER (JR1 & JR1a)

Some stats are better than none at all!

Based on usage stats:

- Resource flagged for cancellation

- Evidence training/outreach needed

- Increase/decrease simultaneous users
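
One common way to turn usage stats into a review flag is cost per use. The slides do not prescribe this metric, so treat it as an assumption; the threshold and figures below are invented for illustration.

```python
def cost_per_use(annual_cost, downloads):
    """Annual cost divided by full-text downloads; None when there was no use."""
    if downloads == 0:
        return None
    return annual_cost / downloads


def review_flag(annual_cost, downloads, max_cost_per_use):
    """Flag a resource whose cost per use is above a locally chosen threshold."""
    cpu = cost_per_use(annual_cost, downloads)
    if cpu is None or cpu > max_cost_per_use:
        return "review: cancellation candidate or outreach needed"
    return "retain"


# Hypothetical figures: same price, very different usage
print(review_flag(5000, 100, 20))
print(review_flag(5000, 1000, 20))
```

A flag like this only starts the conversation; low use may point to a need for training or outreach rather than cancellation.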

importance of ERMS

communicate to stakeholders

Represent data clearly in reports

Overlap analysis via ERMS, Jisc ADAT, CUFTS

Longitudinal data across years is best

Challenge with streaming video & atypical resources

Resource errors & usability issues reported

making a choice

Retain, renegotiate, or cancel

Re-assess market for substitutions

Review new license for game changers

Cancellation talk may open negotiation

Expect flexibility from vendors

Explore availability of funds across campus

sources

CUFTS Open Source Serials Management: http://researcher.sfu.ca/cufts

Emery, J. & Stone, G. (2013). Techniques for Electronic Resource Management. Library Technology Reports, 49(2).

Jacoby, B., York College of Pennsylvania [shared acquisitions checklist via ERIL-L 2012]

Jisc Academic Database Assessment Tool: http://www.jisc-adat.com

Jisc Project Management Guidelines: http://tinyurl.com/jw4nz3t

Project COUNTER: http://www.projectcounter.org/

SERU Guidelines: http://www.niso.org/workrooms/seru

Stone, G., Anderson, R., & Feinstein, J. (2010). E-Resources Management Handbook.

TERMS Wiki: http://library.hud.ac.uk/wikiterms/Main_Page

TERMS Wiki

Original Creators

Jill Emery & Graham Stone

Section Editors

TERMS 1: Investigating New Content - Ann Kucera (Baker College, Michigan)

TERMS 2: Acquiring New Content - Nathan Hosburgh (Montana State University)

TERMS 3: Implementation - Stephen Buck (Dublin City University, Ireland)

TERMS 4: Ongoing Evaluation and Access - Anita Wilcox (University College Cork, Ireland)

TERMS 5: Annual Review - Anna Franca (King's College, London, UK)

TERMS 6: Cancellation and Replacement - Eugenia Beh (Texas A&M University, Texas)
