LDA Topic Model

Outline

- Latent Dirichlet Allocation

- LDA Topic Model

- Implementation -- Gibbs Sampling

- Limitation

Latent (hidden) Dirichlet Allocation

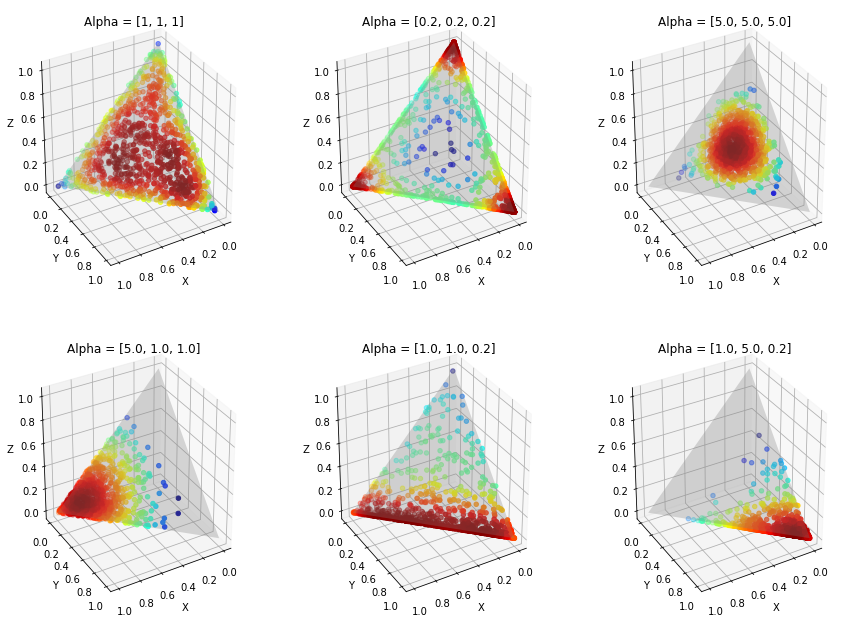

Dirichlet Allocation

A distribution over distributions

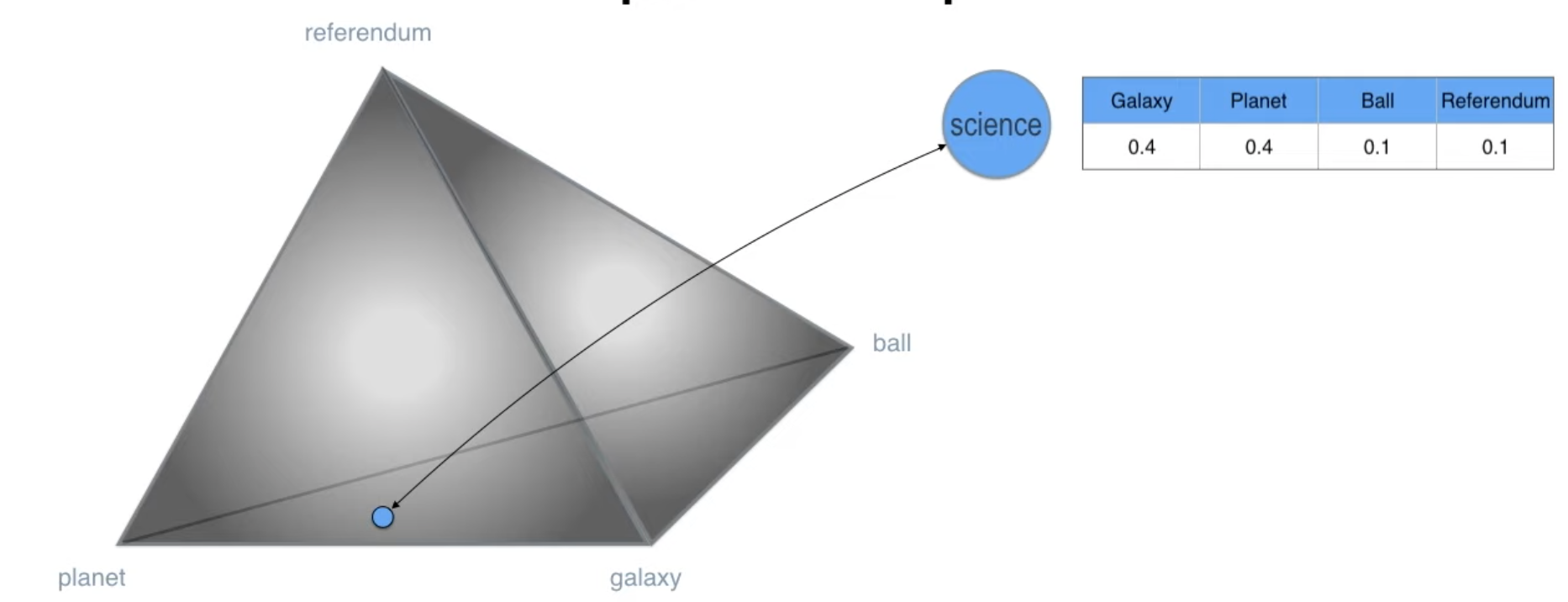

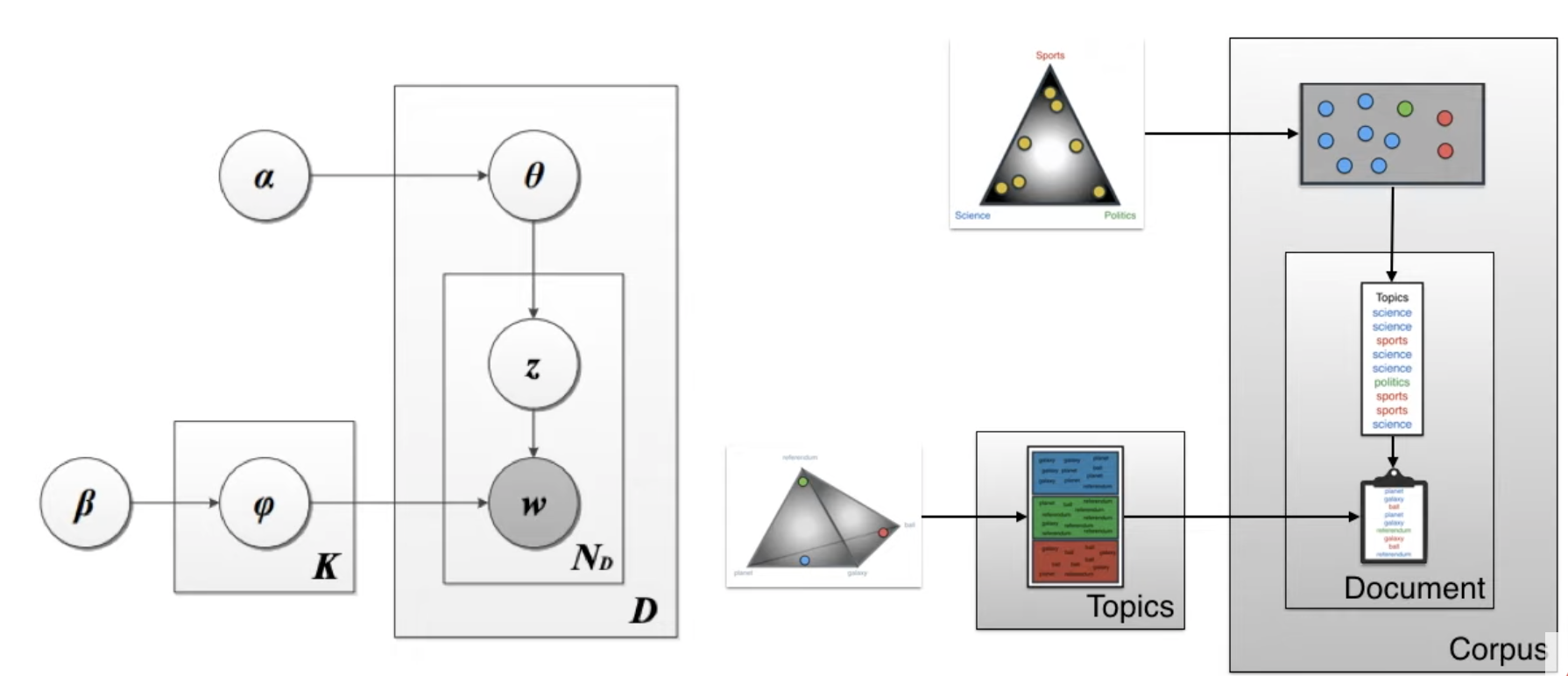

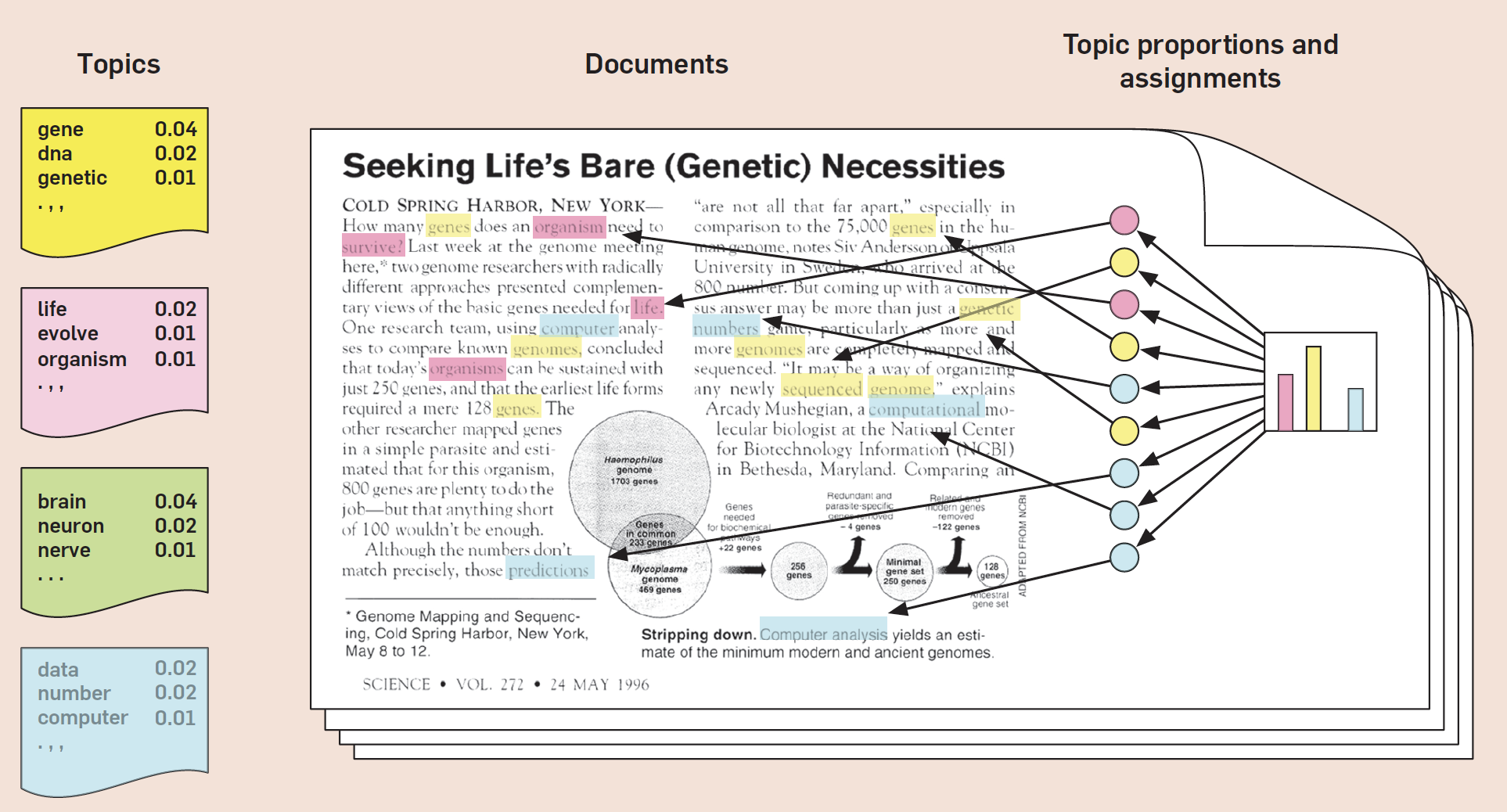

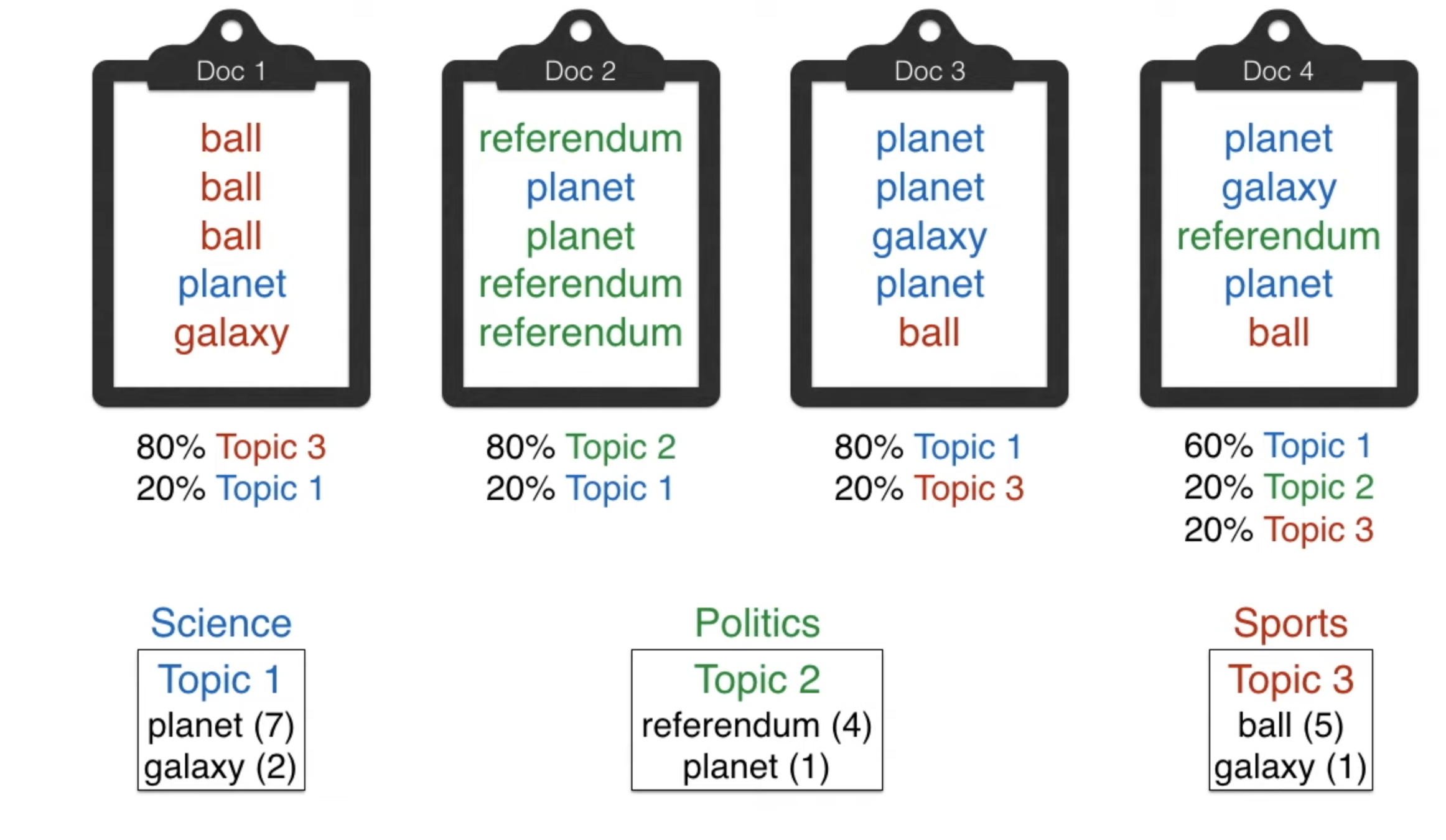
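As a rough illustration of "a distribution over distributions", the sketch below draws a few samples from a Dirichlet with NumPy. The number of topics (3) and the concentration values are assumptions chosen for the example, not values from the slides. Every draw is itself a probability distribution over the three topics.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical concentration parameters for a 3-topic Dirichlet.
alpha_sparse = np.full(3, 0.1)   # small alpha: draws tend to concentrate on few topics
alpha_flat = np.full(3, 10.0)    # large alpha: draws tend to stay close to uniform

sparse_draws = rng.dirichlet(alpha_sparse, size=5)
flat_draws = rng.dirichlet(alpha_flat, size=5)

# Every row is a valid probability distribution (non-negative, sums to 1).
print(sparse_draws.round(2))
print(flat_draws.round(2))
```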

LDA Topic Model

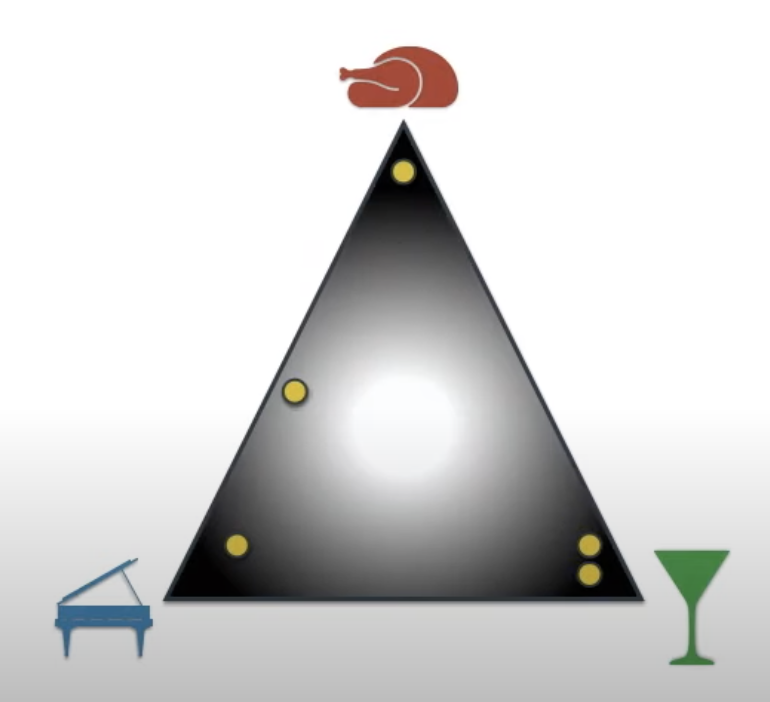

LDA Topic Model

- A document does not belong 100% to a single topic; it is a mixture of topics.

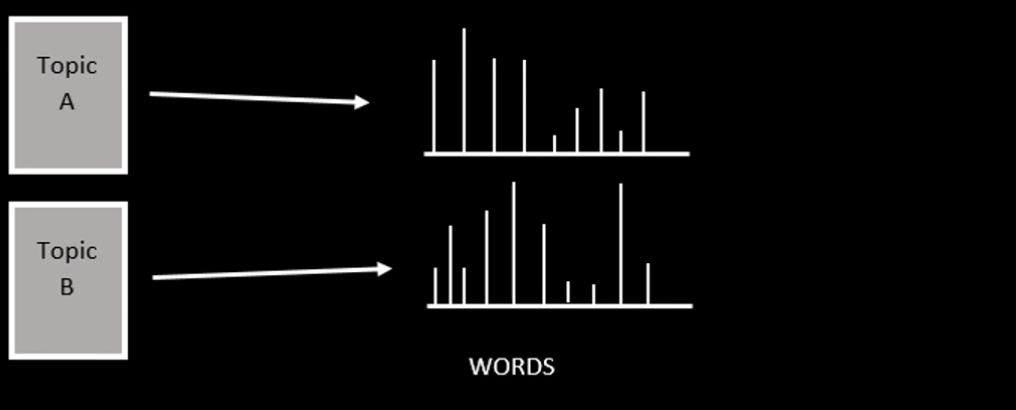

LDA Topic Model

- Every topic has its own bag of words.

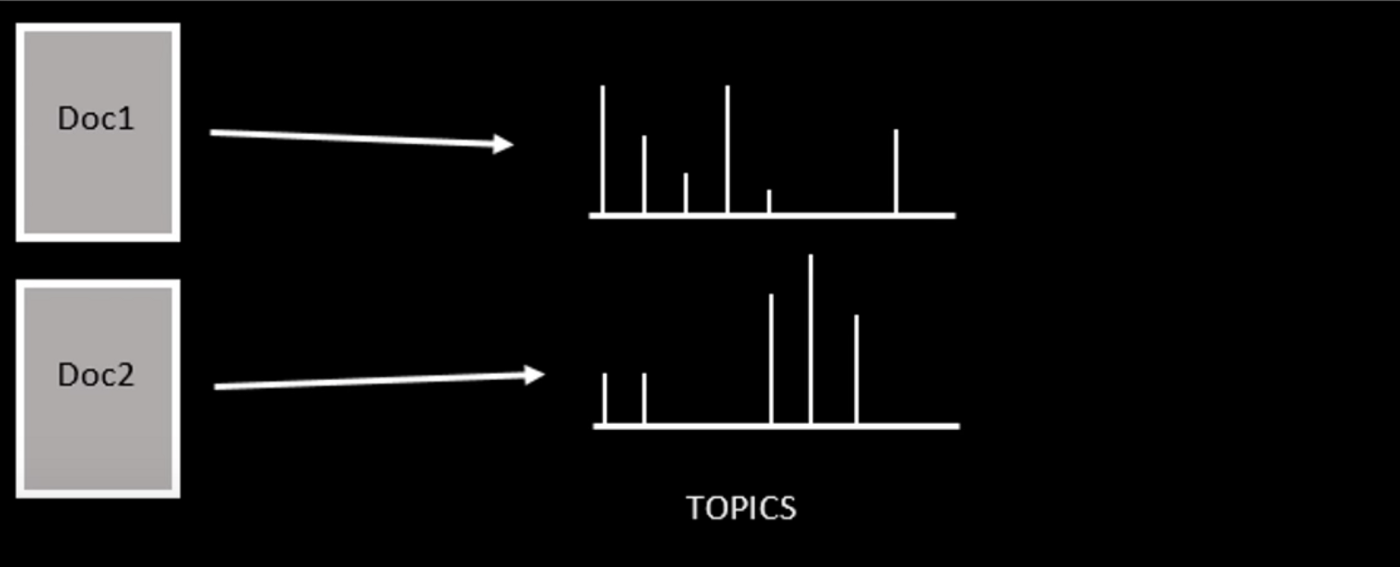

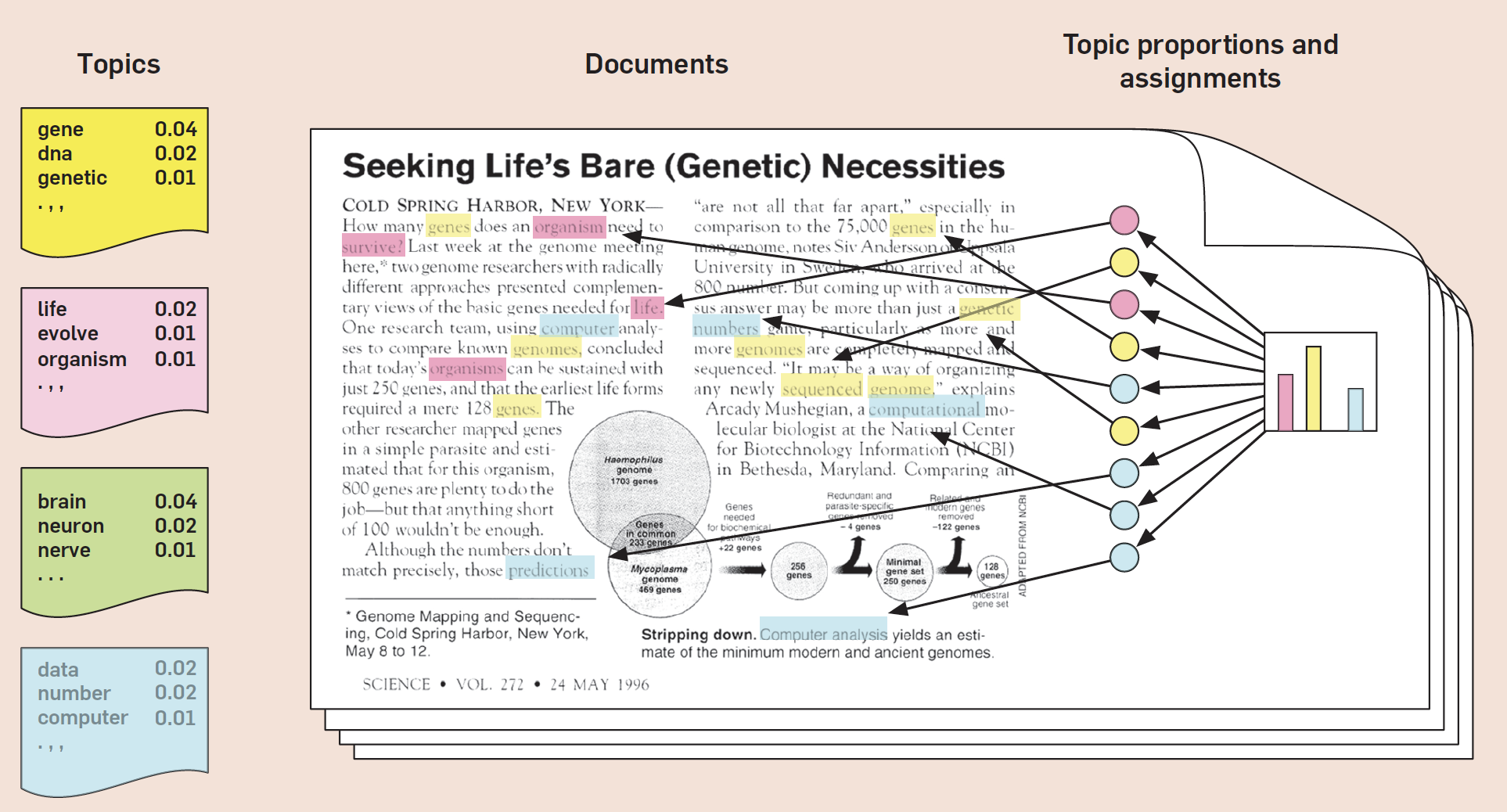

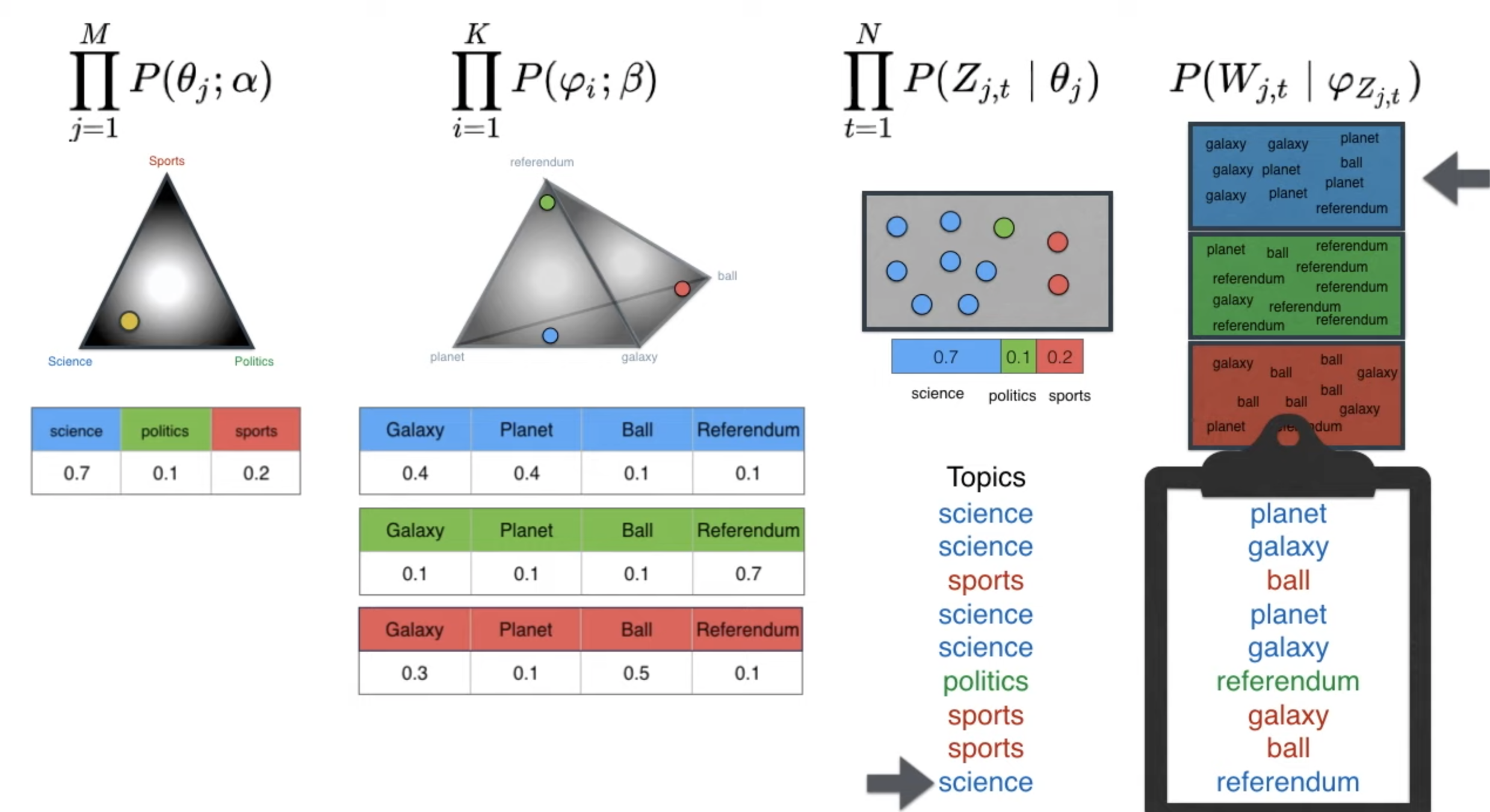

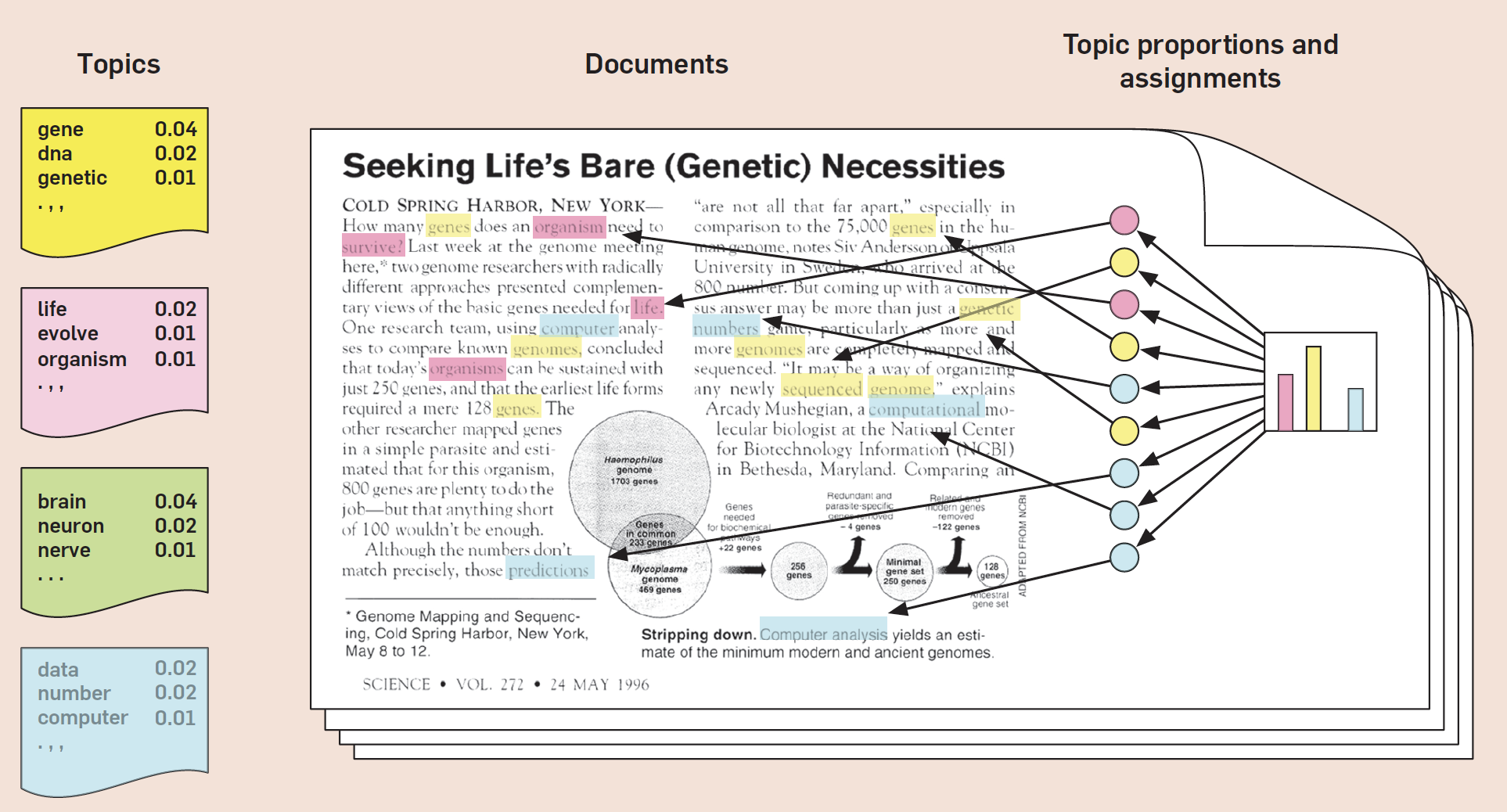
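The two ideas above (each document mixes several topics, and each topic has its own bag of words) can be combined into a small generative sketch. The vocabulary, the number of topics, and the hyperparameters `alpha` and `beta` are all hypothetical values picked for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

vocab = ["ball", "team", "score", "vote", "law", "party"]  # hypothetical vocabulary
n_topics, doc_len = 2, 8
alpha, beta = 0.5, 0.5  # assumed symmetric Dirichlet hyperparameters

# Each topic is a probability distribution over the whole vocabulary.
topic_word = rng.dirichlet(np.full(len(vocab), beta), size=n_topics)

def generate_document():
    # Each document gets its own mixture over topics.
    doc_topic = rng.dirichlet(np.full(n_topics, alpha))
    words, assignments = [], []
    for _ in range(doc_len):
        z = rng.choice(n_topics, p=doc_topic)        # pick a topic for this word slot
        w = rng.choice(len(vocab), p=topic_word[z])  # pick a word from that topic
        assignments.append(int(z))
        words.append(vocab[w])
    return doc_topic, assignments, words

doc_topic, assignments, words = generate_document()
print(doc_topic.round(2), assignments, words)
```

Inference runs this story backwards: only `words` is observed, and `doc_topic`, `topic_word`, and `assignments` have to be recovered.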

What to Solve

- documents → observed

- topic structure → hidden structure

- the topics

- per-document topic distributions

- the per-document per-word topic assignments

What to Solve

- observed documents → hidden topic structure

- maximizing the probability directly → too complex to compute exactly

- focus on the per-document, per-word topic assignments
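The slides do not write the probability out. In the conventional notation (an assumption here: `w` for the observed words, `z` for the per-word topic assignments, `theta` for the per-document topic distributions, `phi` for the topics, `alpha` and `beta` for the Dirichlet hyperparameters), the hidden structure is described by the posterior:

```latex
p(\theta, \varphi, z \mid w, \alpha, \beta)
  = \frac{p(\theta, \varphi, z, w \mid \alpha, \beta)}{p(w \mid \alpha, \beta)}
```

The denominator involves a sum over every possible assignment of topics to words, which grows on the order of K^N for K topics and N words. That is one way to read "too complex", and it is why the approach here samples one word's topic assignment at a time instead.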

Gibbs Sampling

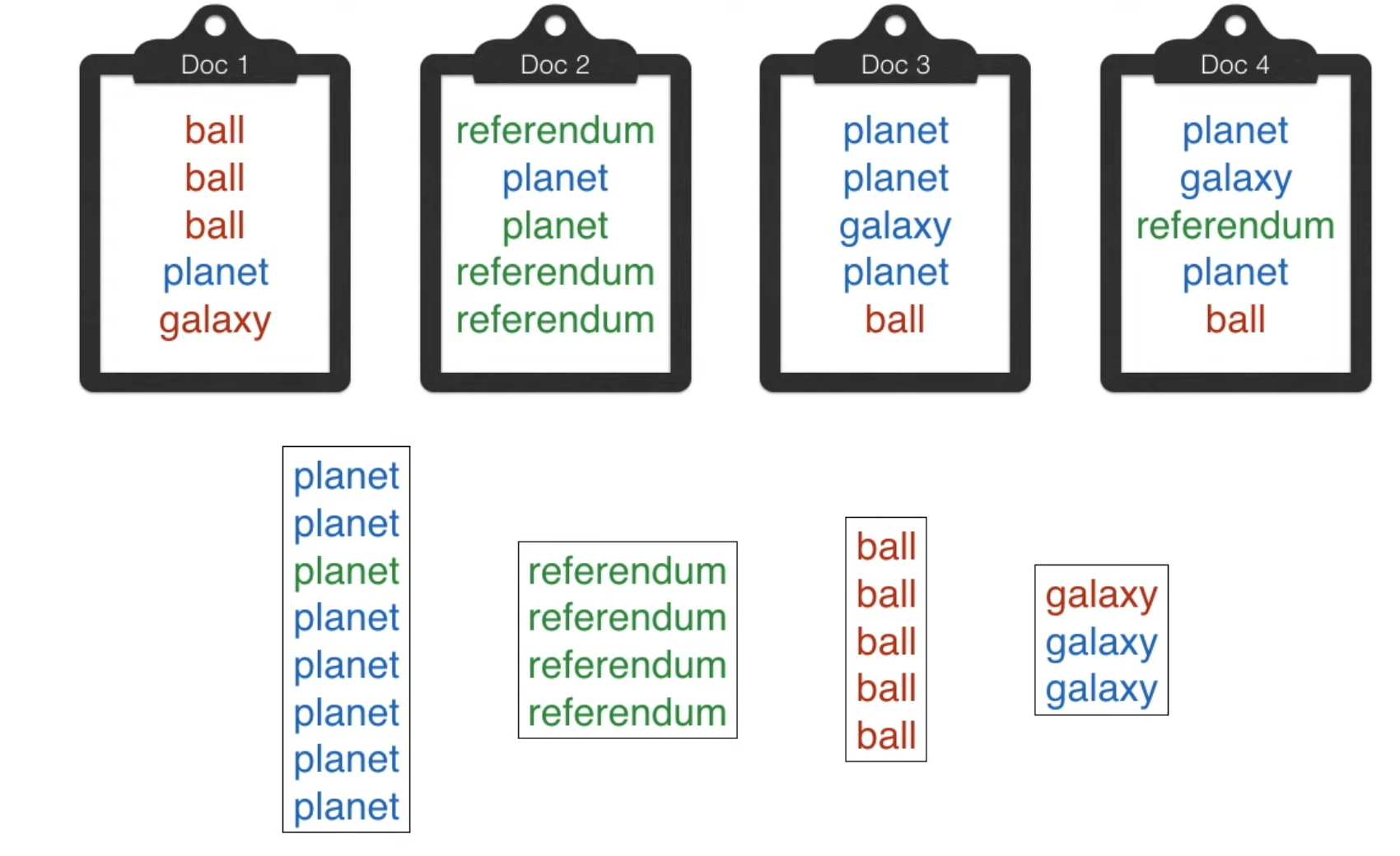

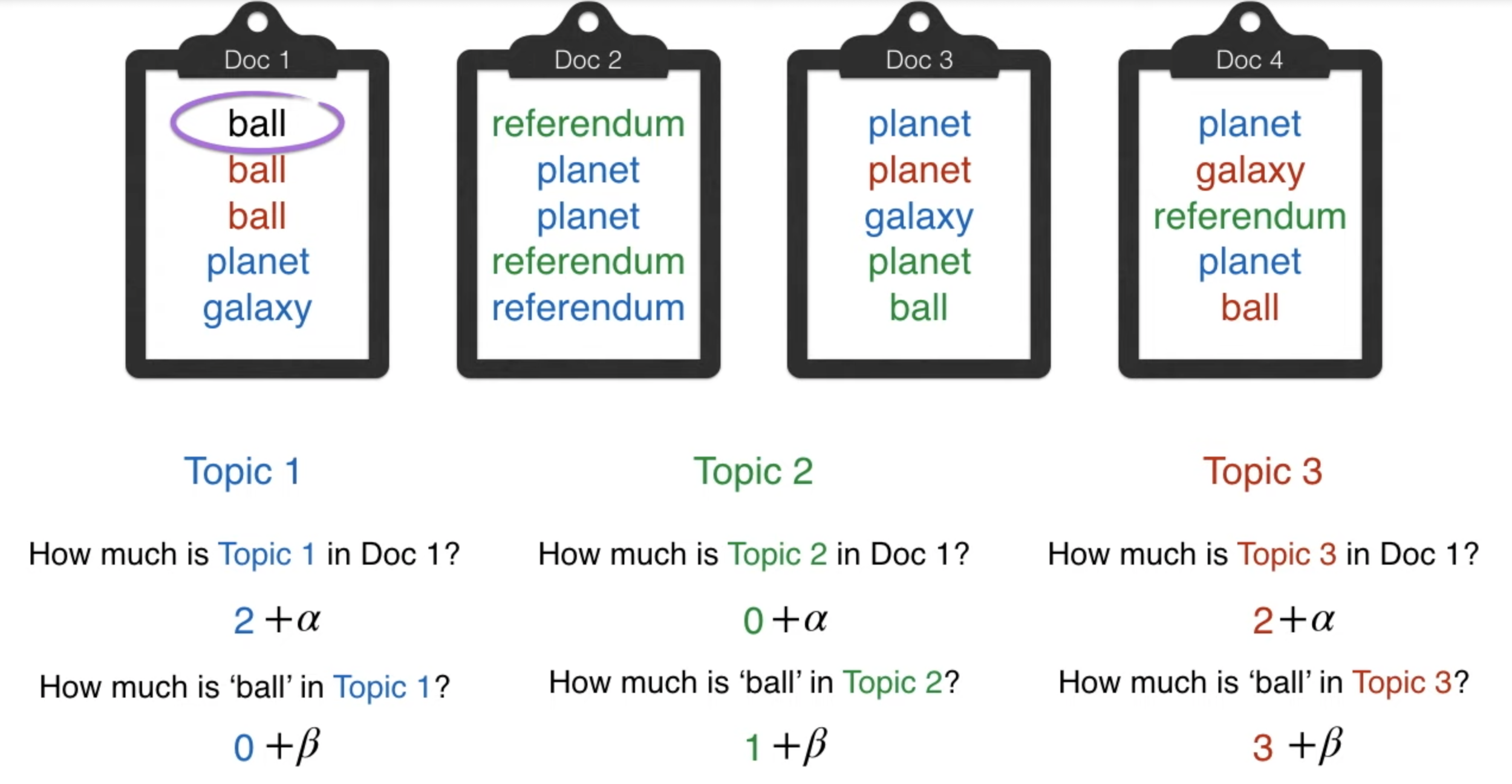

Focus on the per-document, per-word topic assignments

2 Properties

- Articles are as monochromatic as possible.

- Words are as monochromatic as possible.
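The two properties map onto the two factors of the usual collapsed Gibbs update: one factor favors topics that are already common in the current document, the other favors topics that already use the current word. Below is a minimal sketch under that assumption. The function name `gibbs_lda`, the toy corpus, and the hyperparameter defaults are hypothetical and not taken from the slides.

```python
import numpy as np

def gibbs_lda(docs, n_topics, vocab_size, alpha=0.1, beta=0.01, n_iters=200, seed=0):
    """Collapsed Gibbs sampling sketch for LDA; docs is a list of lists of word ids."""
    rng = np.random.default_rng(seed)
    doc_topic = np.zeros((len(docs), n_topics))    # words in doc d colored with topic k
    topic_word = np.zeros((n_topics, vocab_size))  # times word w is colored with topic k
    topic_total = np.zeros(n_topics)               # total words colored with topic k
    # Start from a random coloring of every word.
    z = [rng.integers(n_topics, size=len(doc)) for doc in docs]
    for d, doc in enumerate(docs):
        for i, w in enumerate(doc):
            k = z[d][i]
            doc_topic[d, k] += 1
            topic_word[k, w] += 1
            topic_total[k] += 1

    for _ in range(n_iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove this word's current color from the counts.
                doc_topic[d, k] -= 1
                topic_word[k, w] -= 1
                topic_total[k] -= 1
                # Factor 1: topics already common in this document.
                # Factor 2: topics that already favor this word.
                p = (doc_topic[d] + alpha) * (topic_word[:, w] + beta) \
                    / (topic_total + vocab_size * beta)
                k = rng.choice(n_topics, p=p / p.sum())
                # Record the newly sampled color.
                z[d][i] = k
                doc_topic[d, k] += 1
                topic_word[k, w] += 1
                topic_total[k] += 1
    return z, doc_topic, topic_word

# Toy corpus over a hypothetical 6-word vocabulary (word ids 0-5).
docs = [[0, 1, 2, 0, 1], [3, 4, 5, 3, 4], [0, 2, 1, 2], [5, 4, 3, 5]]
z, doc_topic, topic_word = gibbs_lda(docs, n_topics=2, vocab_size=6)
print(doc_topic)
```

Each row of `doc_topic` shows how a document's words ended up split between the two colors.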

Coloring Problem

Gibbs Sampling

Topic id -> Topic
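The sampler only produces numbered topic ids. One common way to turn an id into a readable topic is to list its highest-count words and let a person name it. The vocabulary and the count matrix below are made-up values for illustration, and `top_words` is a hypothetical helper.

```python
import numpy as np

vocab = ["ball", "team", "score", "vote", "law", "party"]  # hypothetical vocabulary
# Hypothetical topic-word counts, as a sampler might leave them (rows = topic ids).
topic_word = np.array([
    [9, 7, 8, 0, 1, 0],
    [0, 1, 0, 8, 6, 9],
])

def top_words(topic_word, vocab, n=3):
    """Return the n highest-count words for each topic id."""
    result = {}
    for k, counts in enumerate(topic_word):
        order = np.argsort(counts)[::-1][:n]  # indices of the largest counts first
        result[k] = [vocab[i] for i in order]
    return result

print(top_words(topic_word, vocab))
# {0: ['ball', 'score', 'team'], 1: ['party', 'vote', 'law']}
```

A reader might then label topic 0 "sports" and topic 1 "politics"; the model itself never supplies those names.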

Limitation

- the number of topics is fixed in advance

- “bag of words” assumption

- the order of the words in the document does not matter

- requires a human to name each topic based on its words
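The "bag of words" limitation listed above can be seen in a few lines: once a document is reduced to word counts, two sentences with opposite meanings become indistinguishable. The helper name `bag_of_words` and the example sentences are illustrative only.

```python
from collections import Counter

def bag_of_words(text):
    """Count word occurrences, discarding word order."""
    return Counter(text.lower().split())

a = bag_of_words("the dog bit the man")
b = bag_of_words("the man bit the dog")
print(a == b)  # True: both sentences have identical word counts
```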

Recap

Reference

LDA Topic Model

By hsutzu
