RATE meeting

August 24th

Previous meeting

Question: what properties define a good explanation?

Overview

Question: how to visualize explanations directly?

- Implementation

- Paper ideas

Demo

idea 1: Measuring explanations

Problem:

- No consensus on what a good explanation is

- Cannot compare explanation techniques

- Solution is subjective, so automated approaches (Pedreschi) won't work.

Goal/contribution:

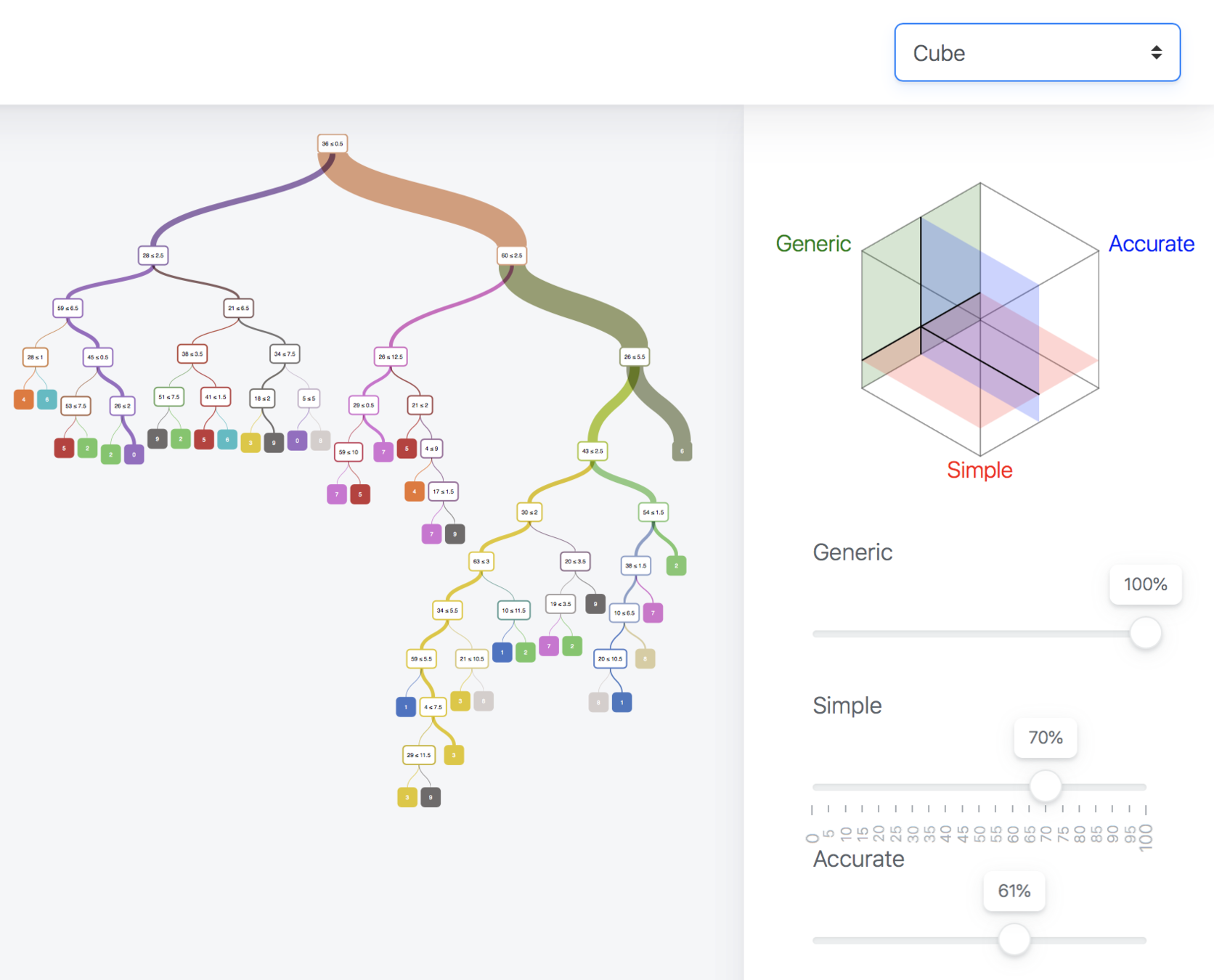

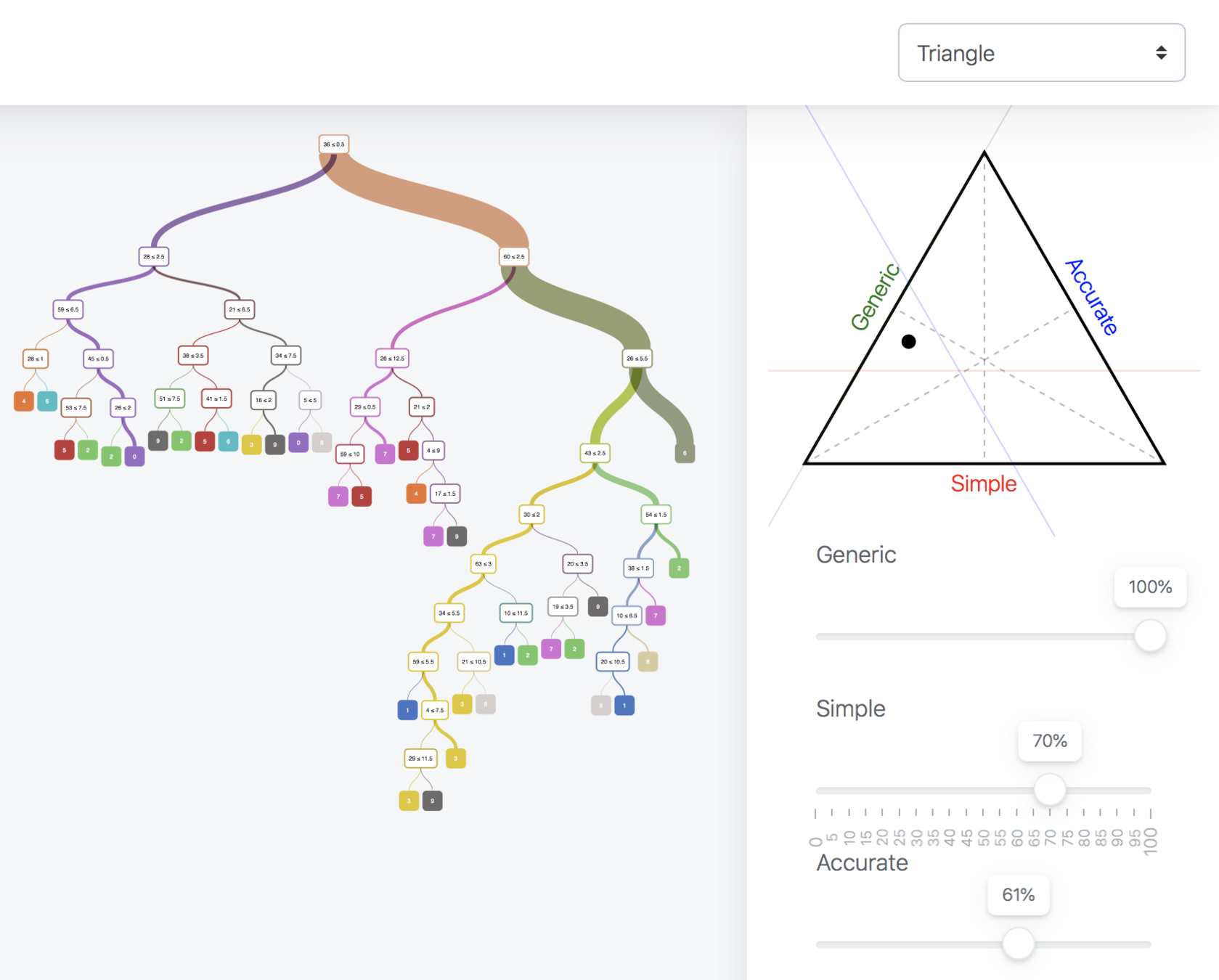

- Formal framework (Simplicity / Generality / Accuracy)

- Visual encoding of this system

- represent model

- represent user preference

- Apply to existing techniques (LIME/SHAP etc) to compare and evaluate them.

Pros:

+ Lots of interest during WHI workshop

Cons:

– Visualization not really required

– Heuristics..

idea 2: Educational playground

Problem:

- It is difficult to verify if an explanation explains the right thing

- Explanations are especially useful for novice ML users or decision makers.

Goal/contribution:

- An interactive visualization that allows users to experiment via direct manipulation to develop an understanding of

- How explanation approaches decision boundary

- How different parameters and surrogates affect the explanation

- Whether the explanation is explaining the right thing

Pros:

+ System very suitable for this (it was made for my own understanding as well)

Cons:

– 'Thin' problem

idea 3: Expert (DM) system

Problem:

- Many explanations exist

- Which is best is subjective

- Which is best is difficult to define

Goal/contribution:

- Visually aid the expert in selecting the best explanation for their needs by:

- Direct manipulation

- Setting preferences (in triangle) and showing best explanation for that.
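One way the preference triangle could drive the selection, as a rough sketch only: treat the chosen point in the triangle as three weights (simplicity, generality, accuracy) that sum to 1, and pick the explanation with the highest weighted score. The `ExplanationScore` class, the `best_explanation` function, the candidate names, and all scores below are hypothetical and made up for illustration; the deck does not define how the three properties are measured or combined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplanationScore:
    """Hypothetical scores in [0, 1] for one candidate explanation."""
    name: str
    simplicity: float
    generality: float
    accuracy: float


def best_explanation(candidates, weights):
    """Return the candidate with the highest preference-weighted score.

    `weights` is a (simplicity, generality, accuracy) triple, read as the
    barycentric coordinates of a point in the preference triangle, so the
    components must be non-negative and sum to 1. The linear weighting is
    an assumption, not something the deck specifies.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    if min(weights) < 0 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    w_s, w_g, w_a = weights

    def weighted(c):
        return w_s * c.simplicity + w_g * c.generality + w_a * c.accuracy

    return max(candidates, key=weighted)


# Made-up scores, for illustration only.
candidates = [
    ExplanationScore("linear surrogate", 0.9, 0.4, 0.6),
    ExplanationScore("decision tree surrogate", 0.6, 0.7, 0.7),
    ExplanationScore("feature attribution", 0.3, 0.5, 0.9),
]

# A user who mostly values simplicity vs. one who mostly values accuracy.
print(best_explanation(candidates, (0.8, 0.1, 0.1)).name)
print(best_explanation(candidates, (0.1, 0.1, 0.8)).name)
```

With these made-up numbers, the simplicity-heavy preference selects the linear surrogate and the accuracy-heavy preference selects the feature attribution, which is the kind of preference-dependent answer the triangle is meant to expose.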

Pros:

+ Very well defined problem

Cons:

– System may not scale enough for expert use (# features, complexity of model).

idea 4: Analyze inconsistencies

Problem:

- Many explanation techniques exist, and they are inconsistent with each other

Goal/contribution:

- Provide a visual interactive system to verify the validity of an explanation

- Show insights from using the system:

- increasing dimensionality decreases faithfulness

- decreasing locality increases accuracy

Pros:

+ Very well defined problem

Cons:

– Current system mainly shows partial dependence, which is just one of the 3 techniques that were inconsistent.

– Unsure if the system helps for this

idea 5: Explain ML

Problem:

- In ML there is a trade-off between simplicity and accuracy

- Complex models are difficult to understand

Goal/contribution:

- Provide a visual interactive system to show explanations for a given instance

- Also allow the expert to verify the explanation using a scatterplot matrix (SPLOM)

Pros:

+ This is exactly the goal of explanations in the first place

Cons:

– ML visualization not something novel

Paper ideas

| # | Topic | Focus | Target user | Main contribution |

|---|---|---|---|---|

| 1 | Measuring explanations | Explanation | Academic | Formal |

| 2 | Educational playground | Explanation | Novice DS | Visual |

| 3 | Expert system | Explanation | Expert DM | Visual |

| 4 | Analyze inconsistencies | Explanation | Academic | Visual |

| 5 | Explain Machine Learning | ML | Expert DS / DM | Visual |

Deck August 24th

By iamdecode
