NWO-RATE meeting

July 5th

Dennis Collaris

PhD Algorithms & Visualization

Predictive model interpretability

MY RESEARCH

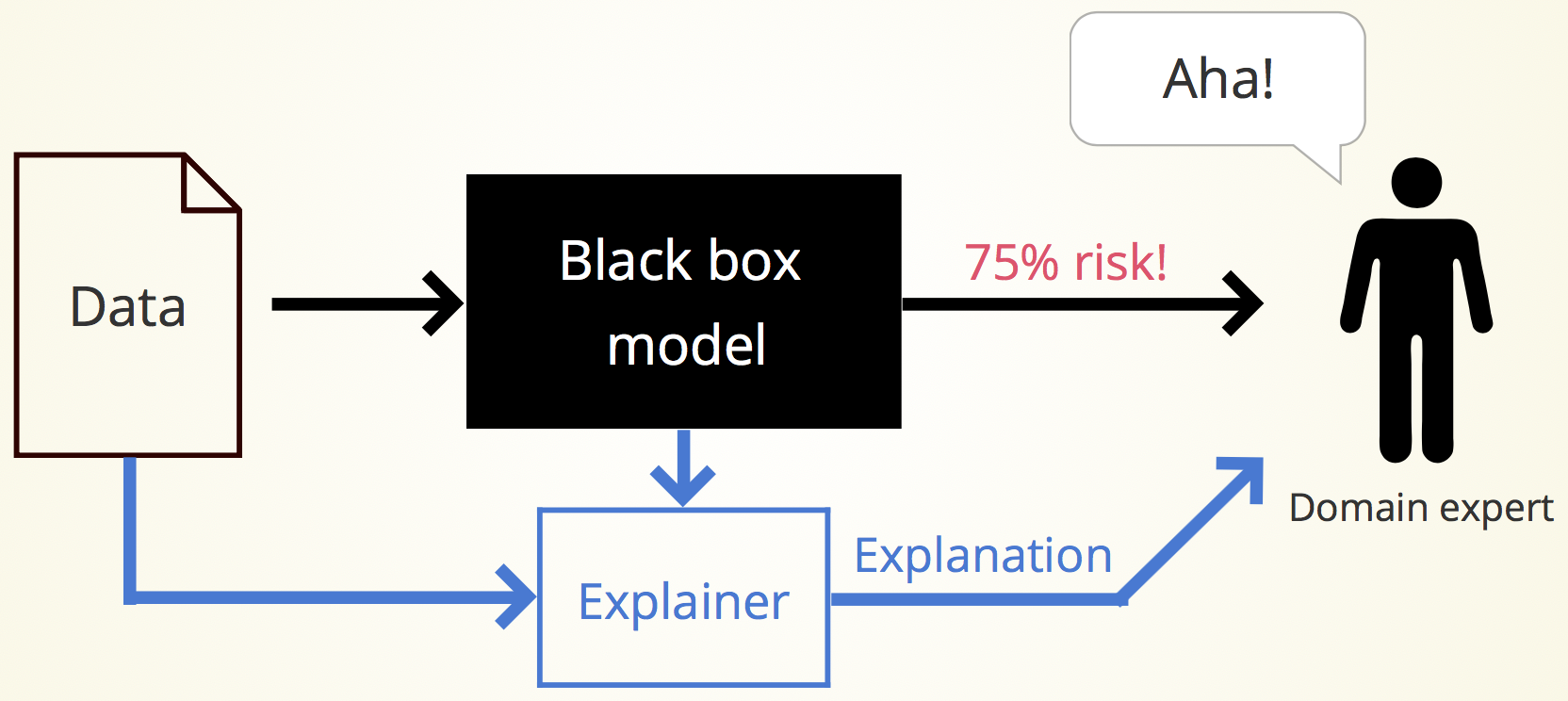

[Diagram: Data, Black box model, "75% risk!", Domain expert, "But why?", Explainer, Explanation, "Aha!"]

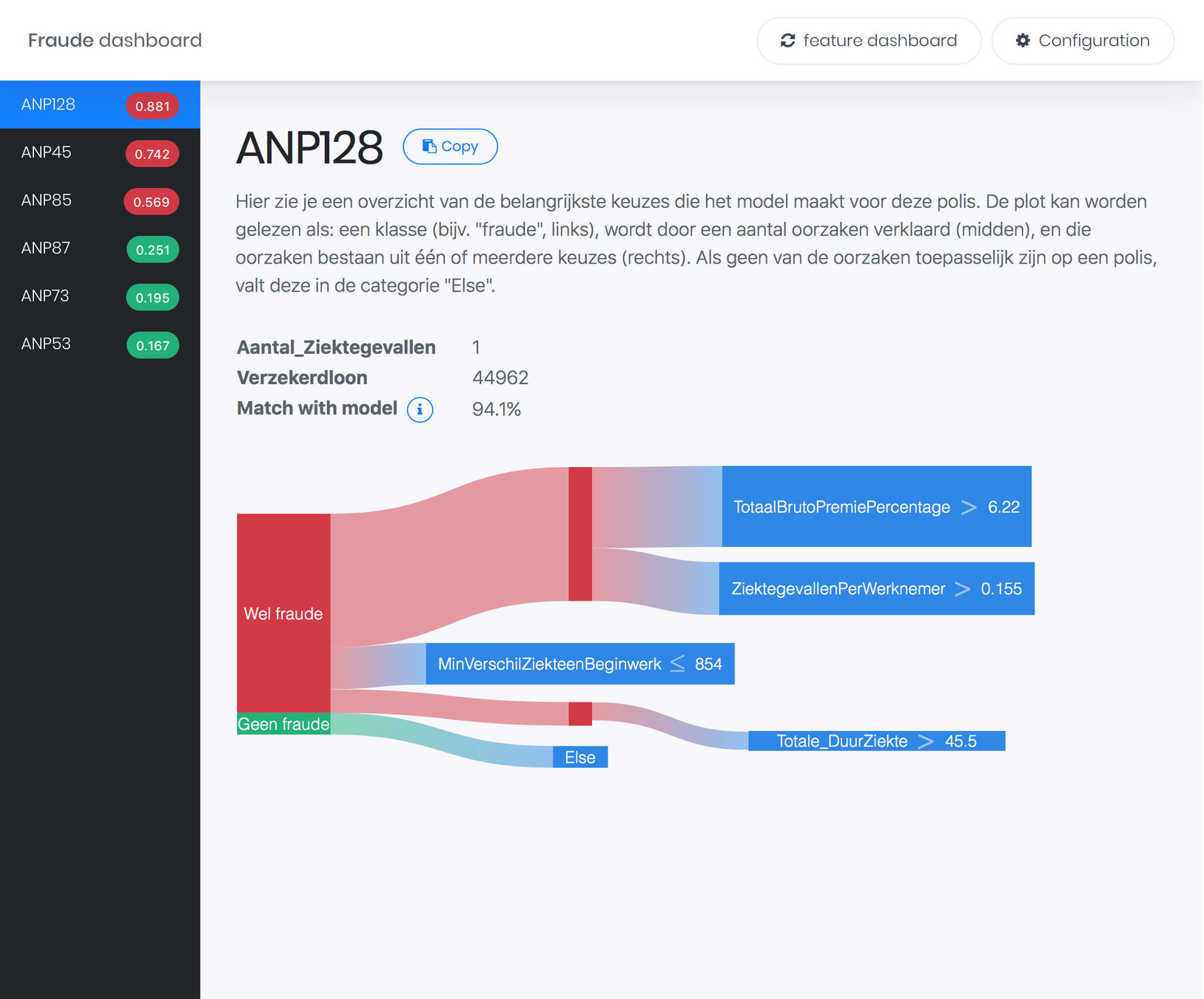

Explanations for

Fraud Detection

GRADUATION

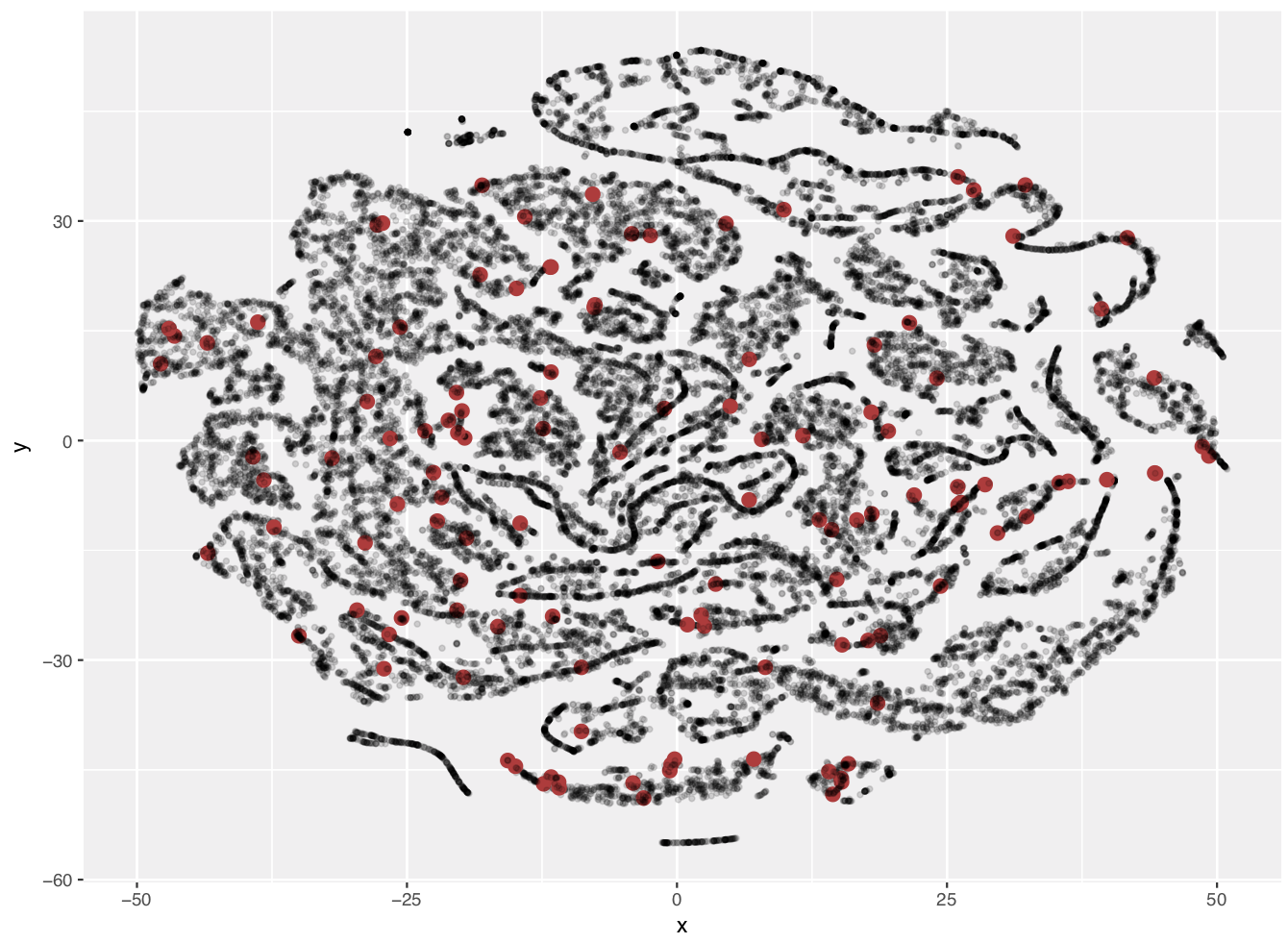

Real world scenario

Data

- Lots of missing values

Model

- 100 Random Forests

- 500 trees each

- ~25 decisions per tree

- 1,312,471 models total!

OOB error: 27.7%

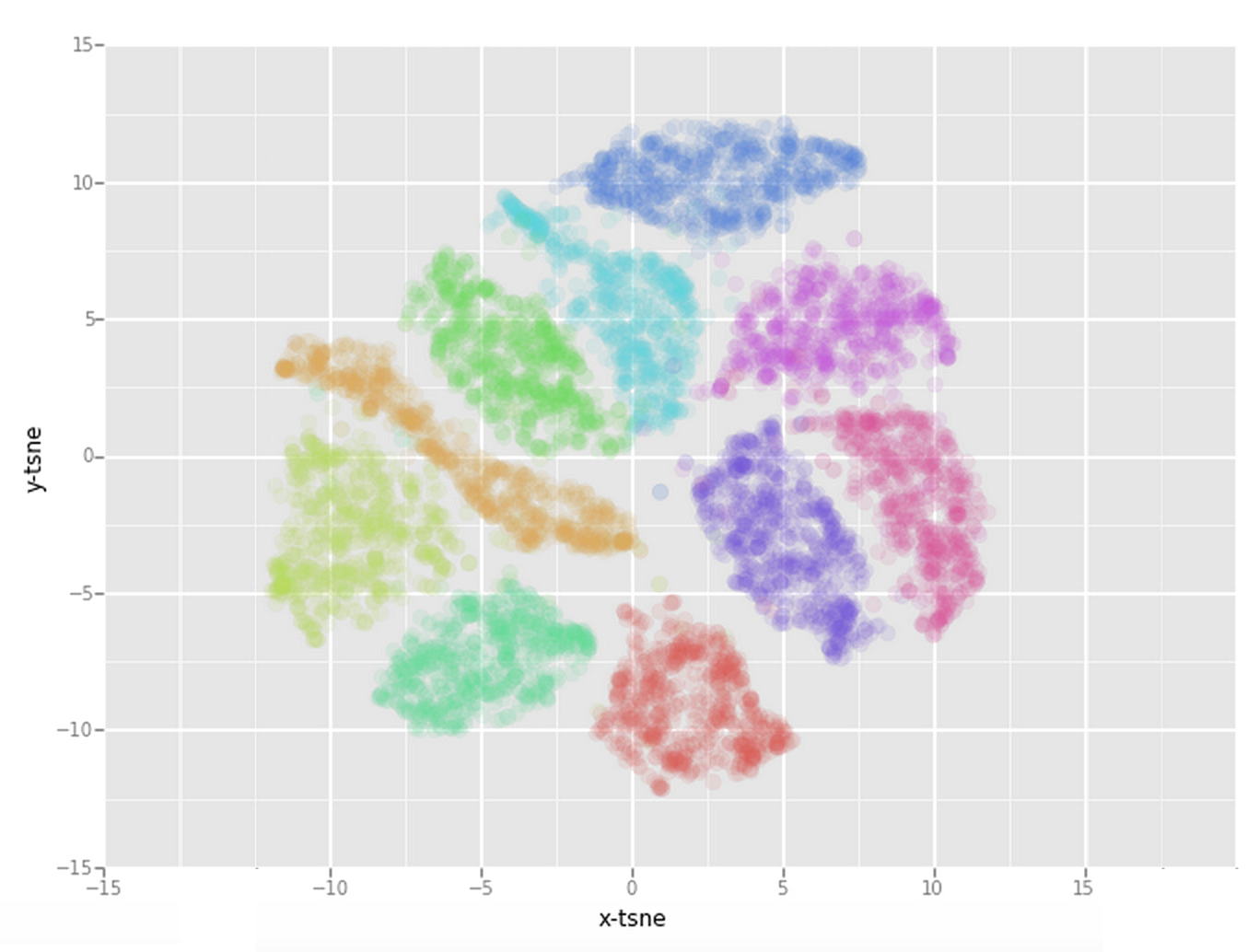

My solution

Feature contribution

[1] Palczewska, Anna et al. Interpreting random forest classification models using a feature contribution method. In Integration of reusable systems, pp. 193–218. Springer, 2014.

[Figure: toy scatter plot with axes x and y; node class counts 7 : 7 and 6 : 2]

\(Y_{mean} = 0.5\)

\(Y_{mean} = 0.75\)

\(LI_{X} = 0.75 - 0.5 = 0.25\)

Contribution per Decision Tree:

\(FC_{i,t}^f = \sum_{N \in R_{i,t}} LI_f^N\)

Contribution per Random Forest:

\(FC_i^f = \frac{1}{T}\sum_{t=1}^T FC_{i,t}^f\)
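The two formulas can be sketched in a few lines of Python. This is a minimal illustration, not the implementation from [1] or from the graduation project: the `Node` class, the function names, and the second child (class counts 1 : 5) are assumptions made for the example, as is the choice that the X < 2.5 branch leads to the 6 : 2 node.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical tree node; y_mean is the fraction of positive samples in it."""
    y_mean: float
    feature: Optional[str] = None   # split feature, None for a leaf
    threshold: float = 0.0
    left: Optional["Node"] = None   # taken when x[feature] < threshold
    right: Optional["Node"] = None  # taken otherwise


def tree_contributions(root: Node, x: Dict[str, float]) -> Dict[str, float]:
    """FC_{i,t}^f: sum the local increments LI_f along the path of instance x."""
    contrib: Dict[str, float] = {}
    node = root
    while node.feature is not None:
        child = node.left if x[node.feature] < node.threshold else node.right
        local_increment = child.y_mean - node.y_mean  # LI of the split feature
        contrib[node.feature] = contrib.get(node.feature, 0.0) + local_increment
        node = child
    return contrib


def forest_contributions(trees: List[Node], x: Dict[str, float]) -> Dict[str, float]:
    """FC_i^f: average the per-tree contributions over all T trees."""
    total: Dict[str, float] = {}
    for tree in trees:
        for feature, value in tree_contributions(tree, x).items():
            total[feature] = total.get(feature, 0.0) + value
    return {feature: value / len(trees) for feature, value in total.items()}


# Toy tree: root with counts 7 : 7 (Y_mean 0.5), split X < 2.5,
# left child 6 : 2 (Y_mean 0.75), assumed right child 1 : 5 (Y_mean 1/6).
root = Node(0.5, "X", 2.5, left=Node(0.75), right=Node(1 / 6))
single_leaf = Node(0.5)  # a second, trivial tree without any split

fc_tree = tree_contributions(root, {"X": 1.0})
fc_forest = forest_contributions([root, single_leaf], {"X": 1.0})
print(fc_tree, fc_forest)
```

Within one tree, the root's \(Y_{mean}\) plus the summed contributions equals the \(Y_{mean}\) of the leaf the instance ends up in, which is what makes the contributions readable as an explanation of the prediction.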

X < 2.5

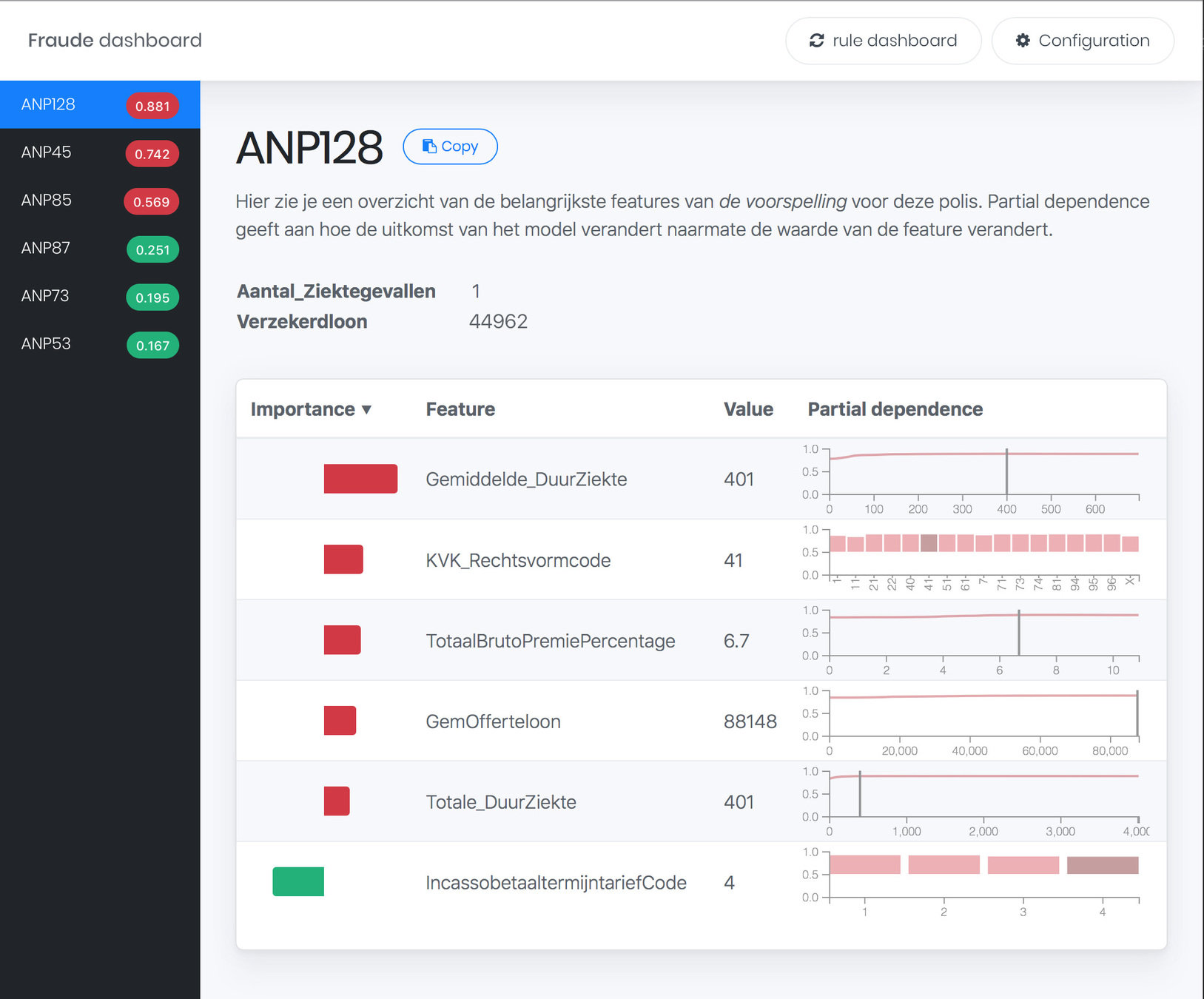

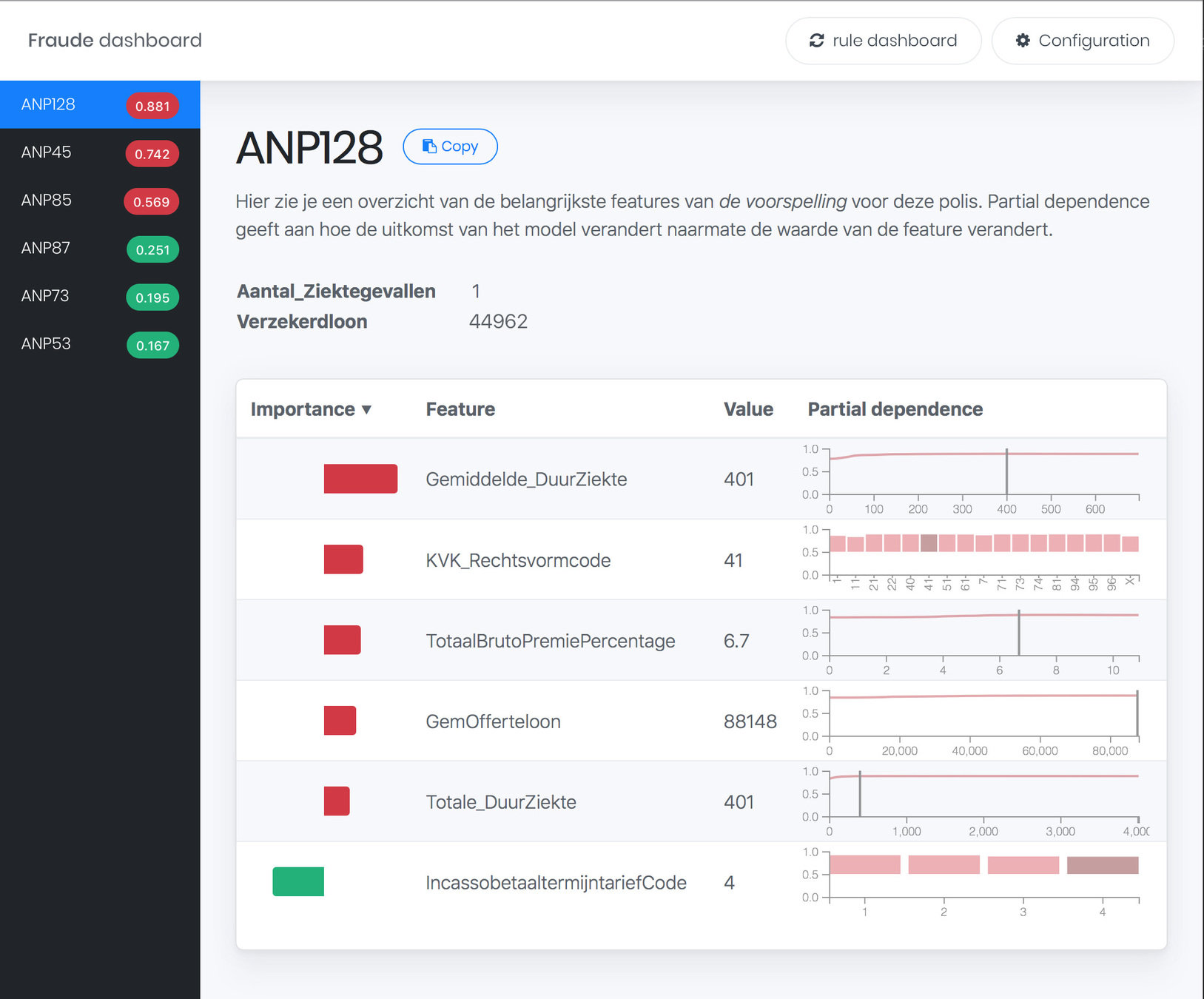

Partial dependence

[2] Friedman, Jerome H. Greedy function approximation: A gradient boosting machine. Annals of Statistics, 29(5): pp. 1189–1232, 2001.
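As a rough sketch of the idea in [2]: fix one feature to a grid value for every row in the data, average the model's predictions, and repeat for each grid value. The `toy_predict` function and the two example rows below are hypothetical stand-ins, not the fraud model or its data.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]


def partial_dependence(
    predict: Callable[[Row], float],
    rows: Sequence[Row],
    feature: str,
    grid: Sequence[float],
) -> List[float]:
    """Average prediction over all rows with `feature` forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = dict(row)       # copy so the original data is untouched
            modified[feature] = value  # overwrite only the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def toy_predict(row: Row) -> float:
    """Hypothetical black box returning a fraud score between 0 and 1."""
    score = 0.2
    if row["duration_illness"] > 100:
        score += 0.5
    if row["employees"] < 10:
        score += 0.2
    return score


rows = [
    {"employees": 5, "duration_illness": 30},
    {"employees": 50, "duration_illness": 200},
]
grid = [1, 50, 100, 150, 200, 250, 300]
curve = partial_dependence(toy_predict, rows, "duration_illness", grid)
print(list(zip(grid, curve)))
```

Because only the model's predict function is called, the same sketch works for any black box, at the cost of one prediction per row per grid value.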

[Figure: partial dependence plot; axes: Duration illness, Fraud? (0% to 100%)]

| Feature | Value |
| --- | --- |
| Company | ABC Inc |
| Employees | 5 |
| Duration illness | days |
| ... | ... |

Predictions shown: Fraud (55%), Non-fraud (35%), Fraud (65%), Fraud (90%), Non-fraud (45%), Non-fraud (40%), Non-fraud (25%)

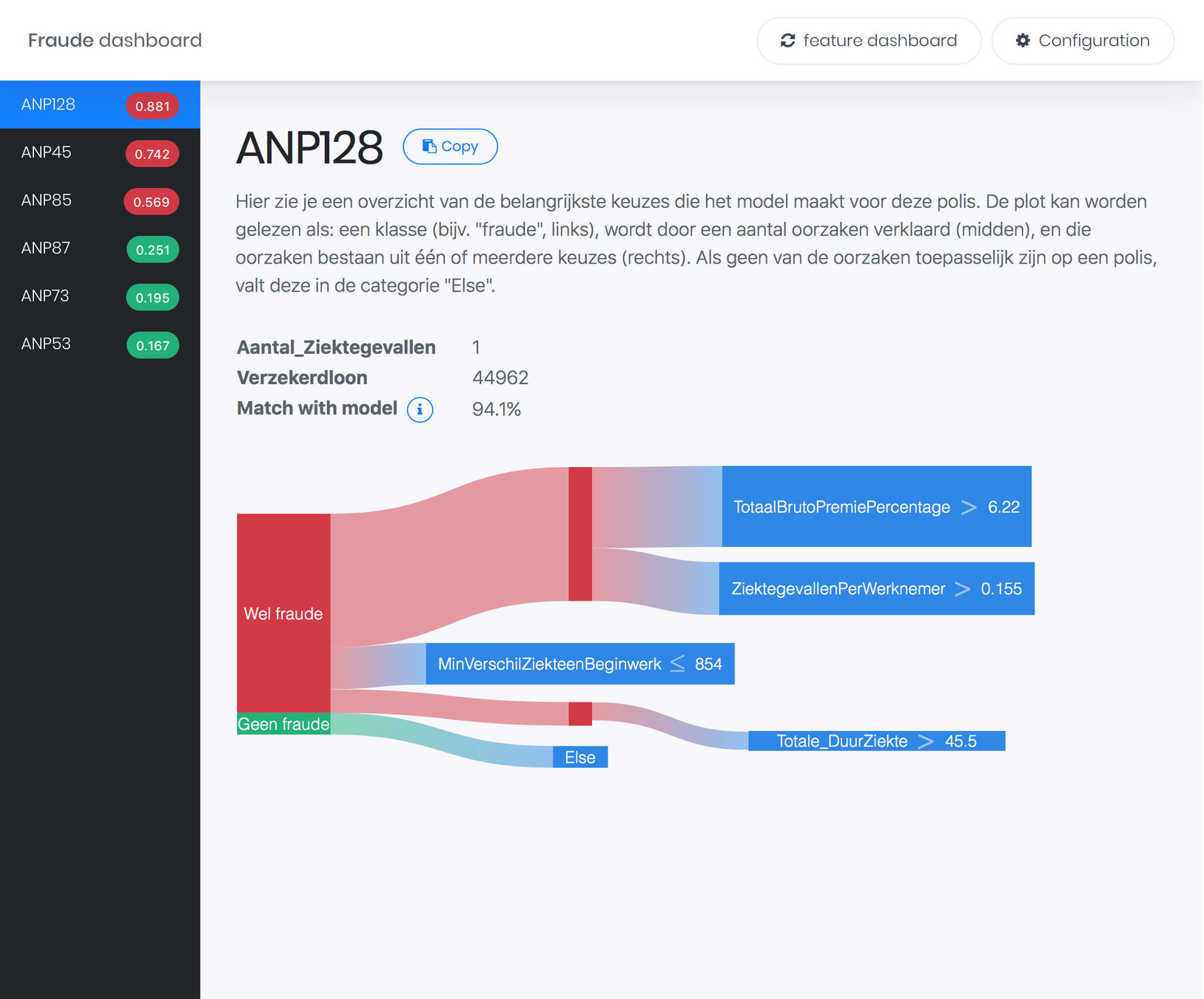

Local rule extraction

[3] Ribeiro, Marco Tulio et al. Why should I trust you?: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD, pp. 1135–1144. ACM, 2016.

[4] Deng, Houtao. Interpreting tree ensembles with inTrees. arXiv preprint arXiv:1408.5456, pp. 1–18, 2014.
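The sketch below illustrates the general idea of a local rule, under strong simplifications: sample points around one instance, label them with the black box, and fit a single-threshold rule to those labels. It is neither LIME [3] nor inTrees [4]; the one-rule surrogate is a swapped-in stand-in, and `black_box`, the sampling radius, and all function names are assumptions for the example.

```python
import random
from typing import Callable, List, Sequence, Tuple


def sample_neighbourhood(
    instance: Sequence[float], radius: float, n: int, rng: random.Random
) -> List[List[float]]:
    """Draw n points uniformly from a box of half-width `radius` around the instance."""
    return [[v + rng.uniform(-radius, radius) for v in instance] for _ in range(n)]


def fit_rule(
    points: List[List[float]], labels: List[int]
) -> Tuple[int, int, float, int]:
    """Exhaustively find the rule 'x[f] > thr -> label_above' with the fewest errors.

    Returns (errors, feature index, threshold, label predicted above the threshold).
    """
    best = None
    for f in range(len(points[0])):
        values = sorted(set(p[f] for p in points))
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2  # candidate threshold between two neighbours
            for label_above in (0, 1):
                errors = sum(
                    (label_above if p[f] > thr else 1 - label_above) != y
                    for p, y in zip(points, labels)
                )
                if best is None or errors < best[0]:
                    best = (errors, f, thr, label_above)
    return best


def black_box(point: Sequence[float]) -> int:
    """Hypothetical opaque model: class 1 whenever the first feature exceeds 2.5."""
    return 1 if point[0] > 2.5 else 0


rng = random.Random(0)  # fixed seed so the sketch is reproducible
instance = [2.0, 1.0]
neighbours = sample_neighbourhood(instance, radius=1.5, n=300, rng=rng)
labels = [black_box(p) for p in neighbours]
errors, feature, threshold, label_above = fit_rule(neighbours, labels)
print(f"if x[{feature}] > {threshold:.2f} then class {label_above} ({errors} errors)")
```

The extracted rule only describes the black box near the chosen instance; a different instance or a larger radius can yield a different rule.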

[Figure: toy scatter plot with axes x and y]

Fraud team happy! 🎉

Paper accepted for:

Workshop on Human Interpretability in Machine Learning

@

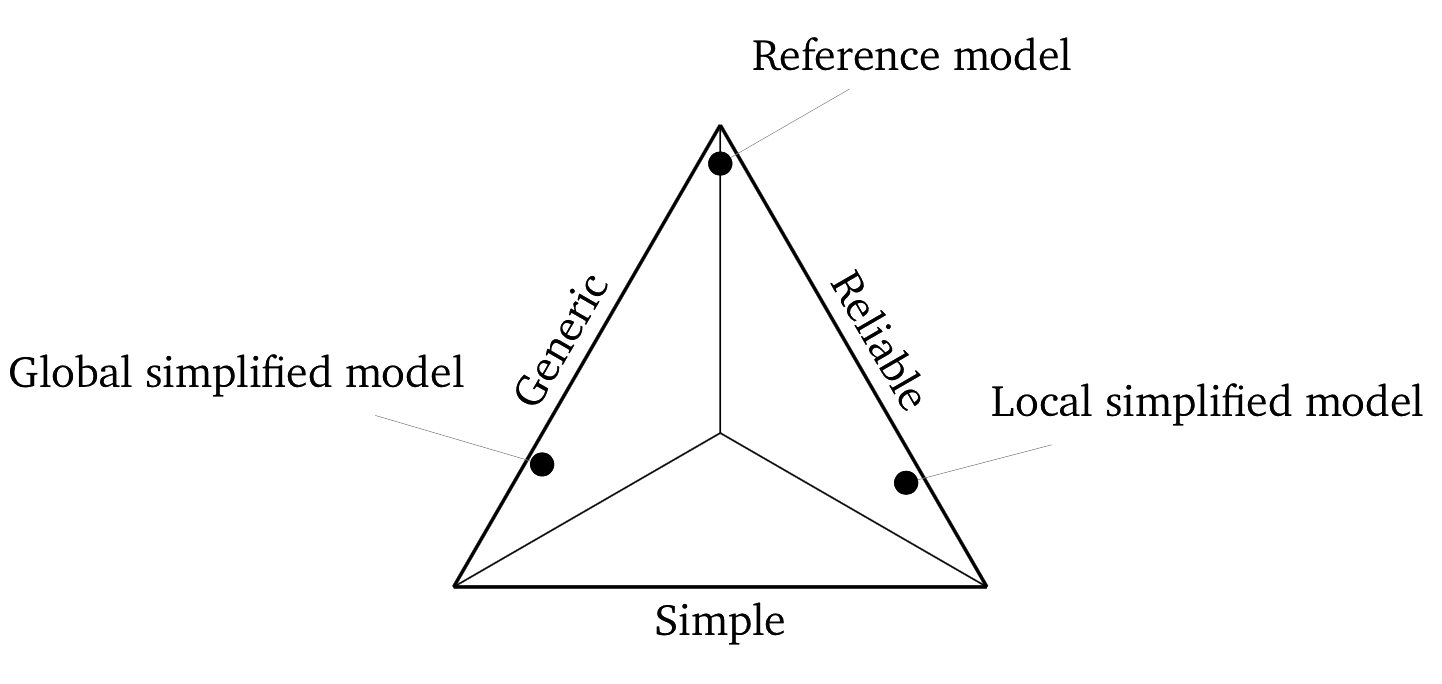

What is a good explanation?

FUTURE PLANS

OVERVIEW

My research

Graduation

Future plans
