Justin James

Applications Engineer

iRODS Consortium

June 2, 2022

Great Plains Network Annual Meeting

Kansas City, MO

Managing Petabytes of Data

Using iRODS


Our Membership

Consortium

Member


Why use iRODS?

People need a solution for:

- Managing large amounts of data across various storage technologies

- Controlling access to data

- Searching their data quickly and efficiently

- Automation

The larger the organization, the more it needs software like iRODS.

Why use iRODS? (Too Much Data)

"90% of the world's data created within the last two years"

- Coming in too fast

- Without good source information

- Getting stored wherever there is room

- Getting lost

- Getting corrupted

- Getting forgotten

Why use iRODS? (Data Management Requirements)

Sample Project Requirements

For 10+ years, data must be:

- Verified

- Migrated

- Kept in Duplicate

- Made Accessible

- Made Searchable

- Monitored

Why use iRODS?

- These long-term management tasks are too much for a curator or librarian, and certainly too much for the data scientists themselves to handle by hand.

- There must be organizational policy in place to handle the varied scenarios of data retention, data access, and data use.

- There must be automation in place to provide consistency and confidence in the process.

Why use iRODS? (Data Management Requirements)

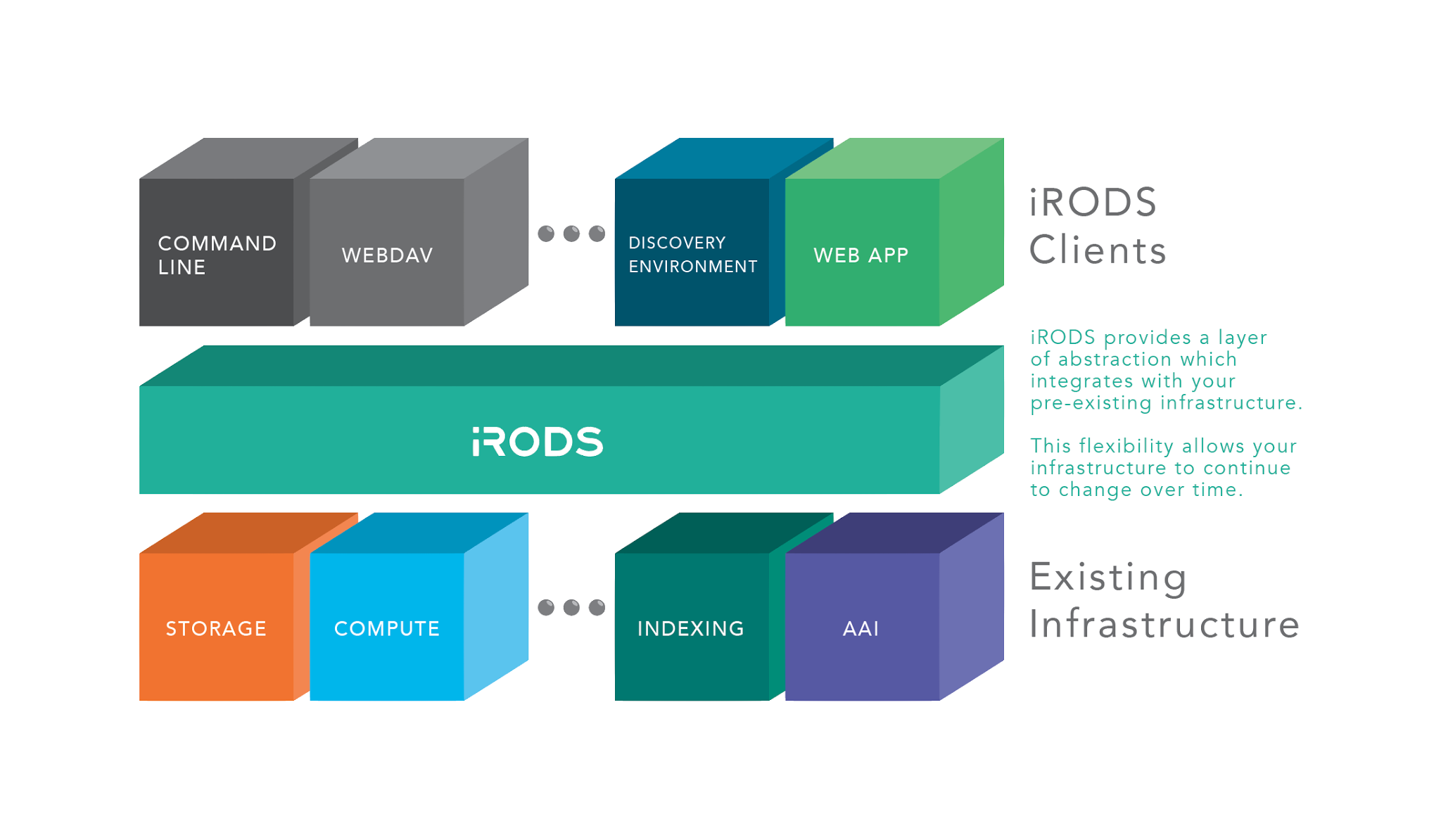

iRODS as the Integration Layer

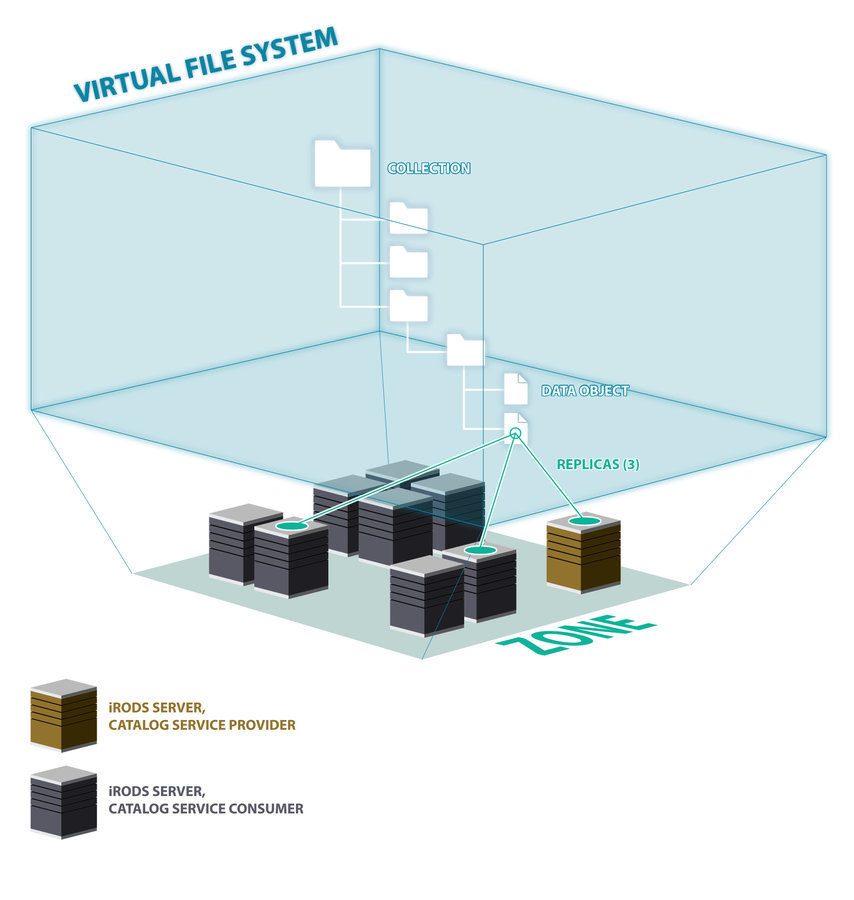

The iRODS Data Management Model

Core Competencies

Policy

Capabilities

Patterns

iRODS Core Competencies

The underlying technology categorized into four areas

Data Virtualization

Combine various distributed storage technologies into a Unified Namespace

- Existing file systems

- Cloud storage

- On-premises object storage

- Archival storage systems

iRODS provides a logical view into the complex physical representation of your data, distributed geographically, and at scale.

Data Virtualization - Projection of the Physical into the Logical

Logical Path

Physical Path(s)
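A minimal sketch of the projection described above, not iRODS code: one logical path resolves to one or more physical replicas. The `Replica` class, the `catalog` dictionary, and the vault and bucket paths are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    resource: str        # storage resource holding this copy
    physical_path: str   # where the bytes live on that resource


# Hypothetical catalog: logical path -> physical replicas.
catalog = {
    "/tempZone/home/rods/f1": [
        Replica("resc1", "/vaults/resc1/home/rods/f1"),
        Replica("s3resc", "/example-bucket/s3resc/home/rods/f1"),
    ],
}


def physical_paths(logical_path):
    """Return every (resource, physical path) behind one logical path."""
    return [(r.resource, r.physical_path) for r in catalog.get(logical_path, [])]


print(physical_paths("/tempZone/home/rods/f1"))
```

Users only ever address the logical path; which replica serves a request is a decision the system makes on their behalf.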

Data Discovery

Attach metadata to any first class entity within the iRODS Zone

- Data Objects

- Collections

- Users

- Storage Resources

- The Namespace

iRODS provides automated and user-provided metadata which makes your data and infrastructure more discoverable, operational and valuable.

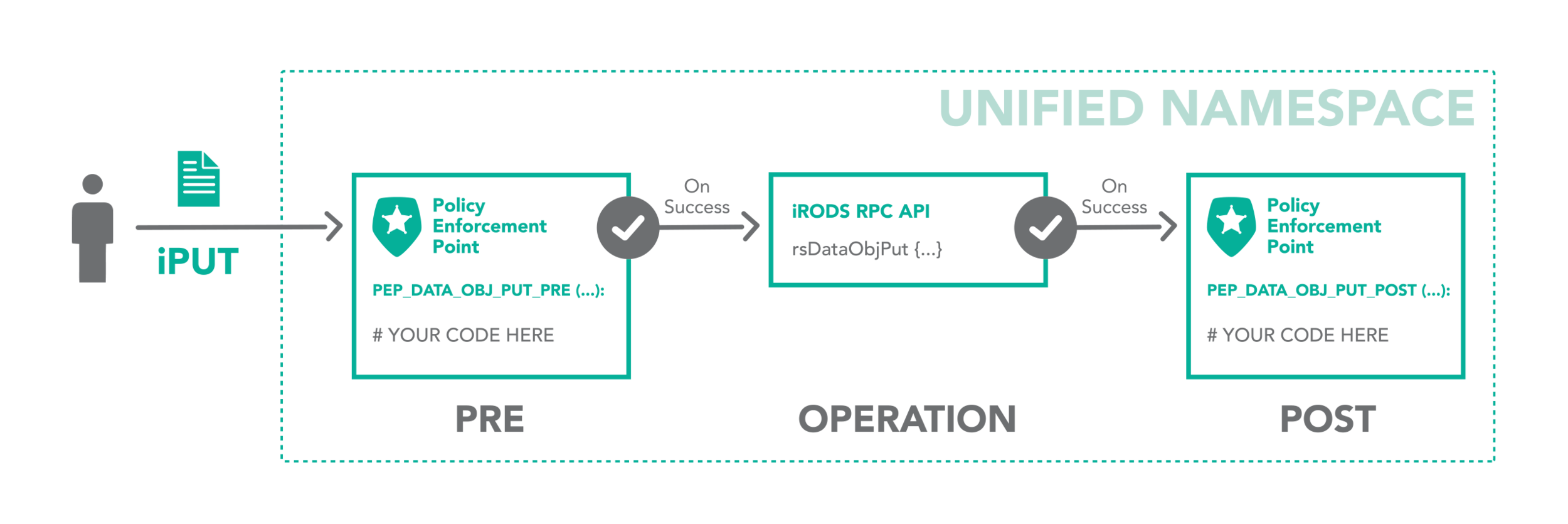

Workflow Automation

Integrated scripting language which is triggered by any operation within the framework

- Authentication

- Storage Access

- Database Interaction

- Network Activity

- Extensible RPC API

The iRODS rule engine provides the ability to capture real-world policy as computer-actionable rules which may allow, deny, or add context to operations within the system.
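The allow, deny, and add-context behaviour can be illustrated with a small simulation. This is a sketch of the general pattern only, not the iRODS rule language or its plugin API; the enforcement point names `pre_put` and `post_put`, the `rule` decorator, and the example policies are all assumptions made for illustration.

```python
class PolicyDenied(Exception):
    """Raised by a rule to deny the operation."""


rules = {}  # enforcement point name -> list of rule functions


def rule(pep_name):
    """Register a function to run at the named enforcement point."""
    def register(fn):
        rules.setdefault(pep_name, []).append(fn)
        return fn
    return register


def fire(pep_name, context):
    """Run every rule registered for this enforcement point."""
    for fn in rules.get(pep_name, []):
        fn(context)


@rule("pre_put")
def deny_tmp_files(ctx):
    # Hypothetical policy: temporary files are not allowed into the system.
    if ctx["logical_path"].endswith(".tmp"):
        raise PolicyDenied("temporary files may not be stored")


@rule("post_put")
def record_uploader(ctx):
    # Hypothetical policy: add context by tagging the object with its uploader.
    ctx.setdefault("metadata", {})["ingested_by"] = ctx["user"]


def put(user, logical_path):
    ctx = {"user": user, "logical_path": logical_path}
    fire("pre_put", ctx)    # rules here may deny the operation
    # ... the data transfer itself would happen here ...
    fire("post_put", ctx)   # rules here may add context
    return ctx
```

Because the rules hang off the operation rather than the client, every tool that performs a put is subject to the same policy.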

Workflow Automation - Dynamic Policy Enforcement Points

Workflow Automation - Resource Hierarchies

- A built-in special case for data storage policies. Easy configuration via a resource tree.

- Coordinating resources are the non-leaf nodes

  - They do not represent a physical storage system

  - They control the storage and retrieval policies

  - Examples:

    - Replication - Data replicated to all subtrees

    - Random - Data written to a random subtree

    - Compound - Has a cache and archive leaf

- Storage resources are the leaf nodes. They can represent POSIX filesystems, object stores (S3), or tape.
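The tree structure above can be modelled in a few lines. This is a toy model under stated assumptions, not how iRODS implements resource plugins: the class names `Leaf`, `Replication`, and `RandomChoice` and the `write` method are hypothetical, and only the write path is sketched.

```python
import random


class Leaf:
    """Storage resource: a leaf node that actually holds data."""

    def __init__(self, name):
        self.name = name
        self.objects = set()

    def write(self, obj):
        self.objects.add(obj)
        return [self.name]


class Replication:
    """Coordinating resource: writes the object to every child subtree."""

    def __init__(self, name, children):
        self.name = name
        self.children = children

    def write(self, obj):
        written = []
        for child in self.children:
            written += [f"{self.name};{path}" for path in child.write(obj)]
        return written


class RandomChoice:
    """Coordinating resource: writes the object to one randomly chosen subtree."""

    def __init__(self, name, children, rng=None):
        self.name = name
        self.children = children
        self.rng = rng or random.Random()

    def write(self, obj):
        child = self.rng.choice(self.children)
        return [f"{self.name};{path}" for path in child.write(obj)]


tree = Replication("replresc", [Leaf("resc1"), Leaf("resc2"), Leaf("resc3")])
print(tree.write("f1"))
# ['replresc;resc1', 'replresc;resc2', 'replresc;resc3']
```

The coordinating nodes hold no data themselves; they only decide which leaves receive it, which is why the policy can be changed by rearranging the tree.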

Secure Collaboration

iRODS allows for collaboration across administrative boundaries after deployment

- No need for common infrastructure

- No need for shared funding

- Affords temporary collaborations

iRODS provides the ability to federate namespaces across organizations without pre-coordinated funding or effort.

iRODS Capabilities

- Packaged and supported solutions

- Require configuration not code

- Derived from the majority of use cases observed in the user community

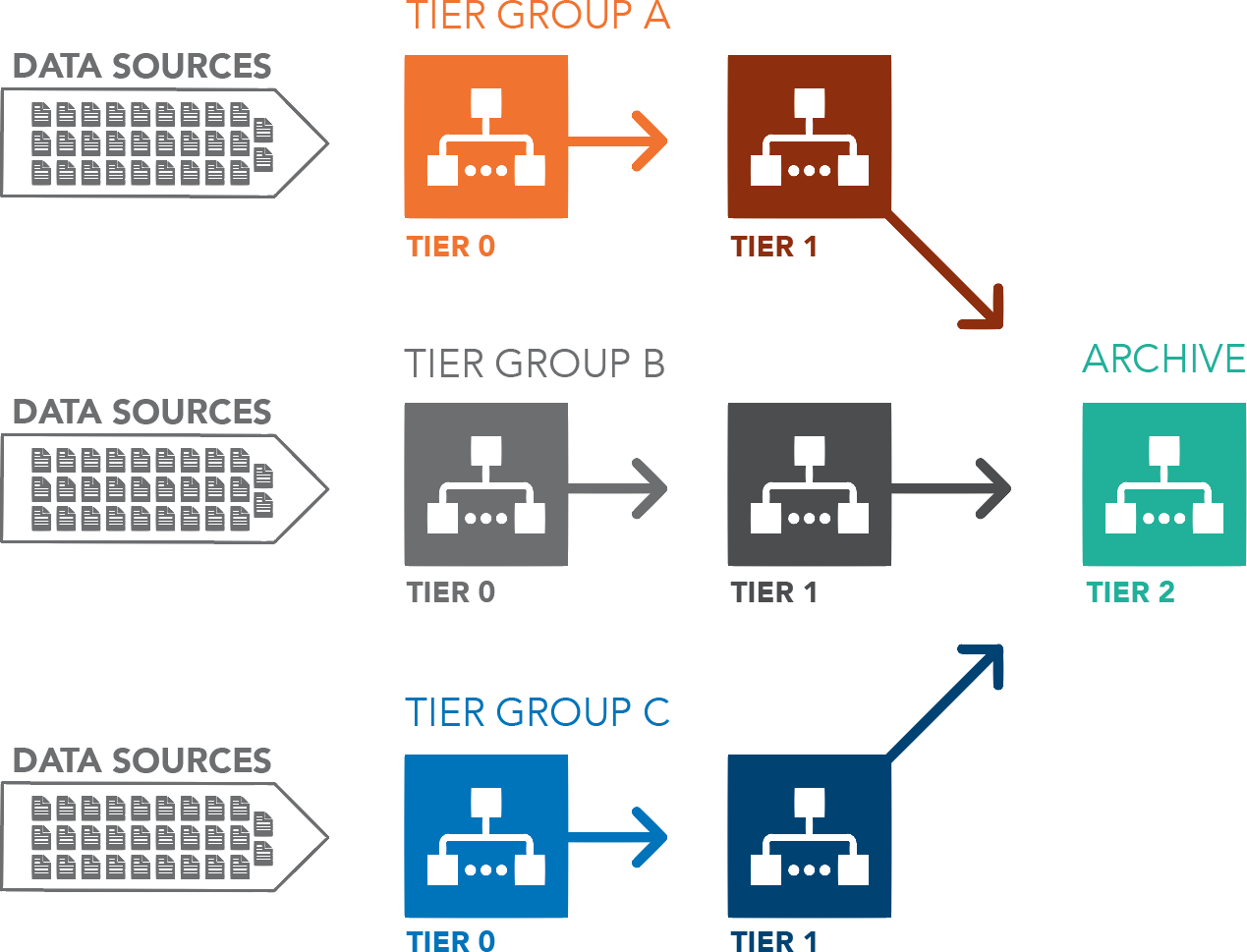

iRODS Capabilities Example - Storage Tiering


- Easy to Set Up

- Install the plugin (apt, yum)

- Enable the plugin

- Add resources (if they don't already exist)

- Add some metadata on the resources (configuration)

- Assign resource to tier group

- Set tier time constraints

- No code required although tier violation queries can be customized
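To make the idea of a tier violation concrete, here is a toy model. It assumes that a tier's time constraint is the maximum time an object may sit unaccessed on that tier before it becomes a candidate to move to the next one; the `Tier` and `DataObject` classes, field names, and values are hypothetical and do not reflect the plugin's actual configuration or queries.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    resource: str
    max_age_seconds: int  # assumed meaning of the tier time constraint


@dataclass
class DataObject:
    path: str
    resource: str
    last_access: float  # seconds since the epoch


def tier_violations(objects, tiers, now):
    """Return (path, next_resource) for objects that have outstayed their tier."""
    moves = []
    for i, tier in enumerate(tiers[:-1]):  # the last tier has nowhere to move to
        for obj in objects:
            age = now - obj.last_access
            if obj.resource == tier.resource and age > tier.max_age_seconds:
                moves.append((obj.path, tiers[i + 1].resource))
    return moves
```

For example, with a 60 second constraint on a `fast` tier, an object last accessed 120 seconds ago would be reported as due to move to the next tier, while one accessed 20 seconds ago would stay.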

iRODS Capabilities Example - Automated Ingest

Monitors a filesystem and copies or registers files into iRODS

- Also follows the "configuration, not code" paradigm

| Operation | New Files | Updated Files |
|---|---|---|
| Operation.REGISTER_SYNC (default) | registers in catalog | updates size in catalog |
| Operation.REGISTER_AS_REPLICA_SYNC | registers first or additional replica | updates size in catalog |
| Operation.PUT | copies file to target vault, and registers in catalog | no action |
| Operation.PUT_SYNC | copies file to target vault, and registers in catalog | copies entire file again, and updates catalog |
| Operation.PUT_APPEND | copies file to target vault, and registers in catalog | copies only appended part of file, and updates catalog |
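The table can be read as a lookup from operation and file state to an action. The sketch below encodes it that way; the `Operation` enum here is a local stand-in that mirrors the names in the table, not an import from the ingest tool, and `action_for` is a hypothetical helper.

```python
from enum import Enum, auto


class Operation(Enum):
    """Local stand-in mirroring the operation names in the table."""
    REGISTER_SYNC = auto()
    REGISTER_AS_REPLICA_SYNC = auto()
    PUT = auto()
    PUT_SYNC = auto()
    PUT_APPEND = auto()


PUT_NEW = "copies file to target vault, and registers in catalog"

# (action for a new file, action for an updated file), copied from the table.
ACTIONS = {
    Operation.REGISTER_SYNC: ("registers in catalog", "updates size in catalog"),
    Operation.REGISTER_AS_REPLICA_SYNC: (
        "registers first or additional replica",
        "updates size in catalog",
    ),
    Operation.PUT: (PUT_NEW, "no action"),
    Operation.PUT_SYNC: (PUT_NEW, "copies entire file again, and updates catalog"),
    Operation.PUT_APPEND: (
        PUT_NEW,
        "copies only appended part of file, and updates catalog",
    ),
}


def action_for(operation, already_in_catalog):
    """Look up what the chosen operation does for a new or an updated file."""
    new_action, updated_action = ACTIONS[operation]
    return updated_action if already_in_catalog else new_action


print(action_for(Operation.PUT, already_in_catalog=True))  # no action
```

The three PUT variants differ only in how they treat files that have changed since the last scan.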

The Data Management Model

Data Storage Demo

As a demonstration of the concepts I have introduced, we will start with a simple file replication example.

First, I will create a resource tree with only unix filesystem resources.

- Note that in a real system these resources would likely be on different geographically-separated servers.

$ iadmin mkresc resc1 unixfilesystem `hostname`:`pwd`/resc1

$ iadmin mkresc resc2 unixfilesystem `hostname`:`pwd`/resc2

$ iadmin mkresc resc3 unixfilesystem `hostname`:`pwd`/resc3

$ iadmin mkresc replresc replication

$ iadmin addchildtoresc replresc resc1

$ iadmin addchildtoresc replresc resc2

$ iadmin addchildtoresc replresc resc3

$ ilsresc

demoResc:unixfilesystem

replresc:replication

├── resc1:unixfilesystem

├── resc2:unixfilesystem

└── resc3:unixfilesystem

Data Storage Demo

Now let's put a few files into the system. Note that each file ends up with three replicas.

$ truncate --size 120M f1

$ truncate --size 120M f2

$ truncate --size 120M f3

$ iput -R replresc f1

$ iput -R replresc f2

$ iput -R replresc f3

$ ils -l

/tempZone/home/rods:

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f2

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f2

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f2

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f3

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f3

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f3

Data Storage Demo

But we really want to store data in the cloud.

Let's create an S3 resource:

$ iadmin mkresc s3resc s3 `hostname`:/justinkylejames-irods1/s3resc "S3_DEFAULT_HOSTNAME=s3.amazonaws.com;S3_AUTH_FILE=/var/lib/irods/amazon.keypair;S3_REGIONNAME=us-east-1;S3_RETRY_COUNT=3;S3_WAIT_TIME_SEC=3;S3_PROTO=HTTP;HOST_MODE=cacheless_attached;S3_ENABLE_MD5=1;S3_SIGNATURE_VERSION=4;S3_ENABLE_MPU=1;ARCHIVE_NAMING_POLICY=consistent;S3_CACHE_DIR=/var/lib/irods;CIRCULAR_BUFFER_SIZE=2;DEBUG_LOGGING=true;S3_STSDATE=both"

$ ilsresc

demoResc

s3resc:s3

replresc:replication

├── resc1:unixfilesystem

├── resc2:unixfilesystem

└── resc3:unixfilesystem

Data Storage Demo

Now let's create a couple of large files and write them to our S3 bucket.

$ truncate --size 1G f4

$ truncate --size 1G f5

$ iput -R s3resc f4

$ iput -R s3resc f5

$ ils -l

/tempZone/home/rods:

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f2

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f2

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f2

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f3

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f3

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f3

rods 0 s3resc 1073741824 2022-05-17.18:38 & f4

rods 0 s3resc 1073741824 2022-05-17.18:39 & f5
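The size column in the listings follows directly from the `truncate` arguments, which use binary units (`M` is 1024² bytes, `G` is 1024³ bytes). A quick check, with `size_in_bytes` as a hypothetical helper that handles only the single-letter suffixes used in this demo:

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def size_in_bytes(spec):
    """Convert a size such as '120M' or '1G' to bytes (binary units)."""
    return int(spec[:-1]) * UNITS[spec[-1]]


print(size_in_bytes("120M"))  # 125829120
print(size_in_bytes("1G"))    # 1073741824
```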

Data Storage Demo

But I would like f4 and f5 to also exist on the local resources so let's force a replication...

irods@cf4921416f3a:~$ irepl -R replresc f4

irods@cf4921416f3a:~$ irepl -R replresc f5

irods@cf4921416f3a:~$ ils -l

/tempZone/home/rods:

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f2

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f2

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f2

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f3

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f3

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f3

rods 0 s3resc 1073741824 2022-05-17.18:38 & f4

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f4

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f4

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f4

rods 0 s3resc 1073741824 2022-05-17.18:39 & f5

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f5

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f5

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f5

Data Storage Demo

Let's replicate f1 to S3.

$ irepl -R s3resc f1

$ ils -l

/tempZone/home/rods:

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1

rods 3 s3resc 125829120 2022-05-17.18:43 & f1

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f2

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f2

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f2

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f3

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f3

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f3

rods 0 s3resc 1073741824 2022-05-17.18:38 & f4

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f4

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f4

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f4

rods 0 s3resc 1073741824 2022-05-17.18:39 & f5

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f5

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f5

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f5

Data Storage Demo - Automatic Replication

But maybe we just want the S3 resource to automatically replicate to the others, and vice versa.

$ iadmin addchildtoresc replresc s3resc

$ ilsresc

demoResc:unixfilesystem

replresc:replication

├── resc1:unixfilesystem

├── resc2:unixfilesystem

├── resc3:unixfilesystem

└── s3resc:s3

Data Storage Demo

Some objects were created before we modified the tree, so we will rebalance replresc to make sure every object in that tree has a replica on every child resource.

irods@cf4921416f3a:~$ iadmin modresc replresc rebalance

irods@cf4921416f3a:~$ ils -l

/tempZone/home/rods:

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1

rods 3 replresc;s3resc 125829120 2022-05-17.18:43 & f1

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f2

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f2

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f2

rods 3 replresc;s3resc 125829120 2022-05-17.18:50 & f2

rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f3

rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f3

rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f3

rods 3 replresc;s3resc 125829120 2022-05-17.18:50 & f3

rods 0 replresc;s3resc 1073741824 2022-05-17.18:38 & f4

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f4

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f4

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f4

rods 0 replresc;s3resc 1073741824 2022-05-17.18:39 & f5

rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f5

rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f5

rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f5
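One way to confirm the rebalance result is to group the listing by object name and compare the leaf resources for each. The sketch below parses an excerpt of the listing above; it assumes the seven-column layout shown in this demo and object names without spaces, and `replicas_by_object` is a hypothetical helper rather than an iRODS tool.

```python
from collections import defaultdict

# Excerpt of the `ils -l` output above (f1 and f4 only).
LISTING = """\
/tempZone/home/rods:
rods 0 replresc;resc2 125829120 2022-05-17.18:23 & f1
rods 1 replresc;resc3 125829120 2022-05-17.18:23 & f1
rods 2 replresc;resc1 125829120 2022-05-17.18:23 & f1
rods 3 replresc;s3resc 125829120 2022-05-17.18:43 & f1
rods 0 replresc;s3resc 1073741824 2022-05-17.18:38 & f4
rods 1 replresc;resc2 1073741824 2022-05-17.18:41 & f4
rods 2 replresc;resc3 1073741824 2022-05-17.18:41 & f4
rods 3 replresc;resc1 1073741824 2022-05-17.18:41 & f4
"""


def replicas_by_object(listing):
    """Map each object name to the set of leaf resources holding a replica."""
    replicas = defaultdict(set)
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) != 7:  # skip the collection header and blank lines
            continue
        owner, number, hierarchy, size, modified, status, name = fields
        replicas[name].add(hierarchy.split(";")[-1])  # keep only the leaf
    return dict(replicas)


expected = {"resc1", "resc2", "resc3", "s3resc"}
for name, leaves in replicas_by_object(LISTING).items():
    print(name, "complete" if leaves == expected else "missing replicas")
```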

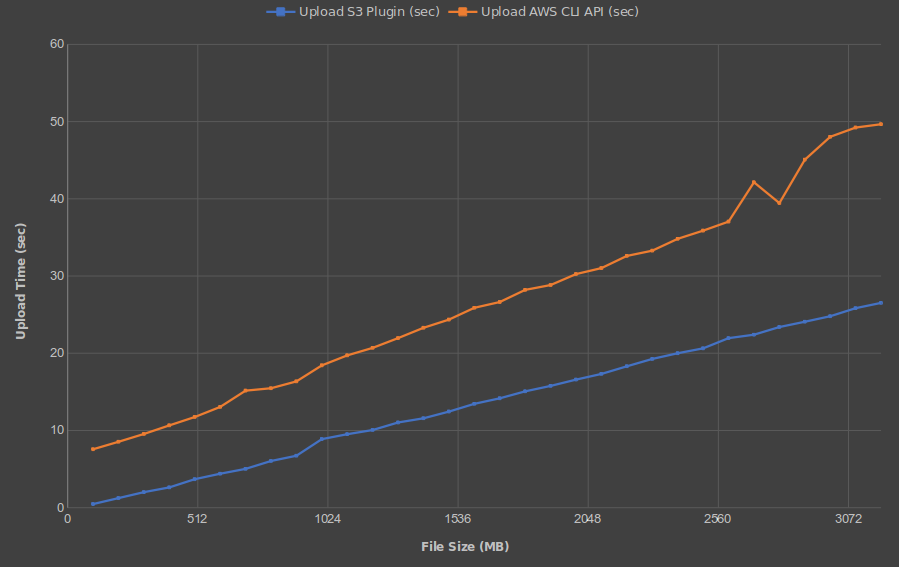

S3 Performance - Upload

- 10 transfer threads (each)

- Upload sizes from 100 MB to 3200 MB, in 100 MB steps

- Median time of 5 uploads shown for each size
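The measurement procedure described in these bullets can be sketched as a small harness. This is an assumption about the general shape of such a benchmark, not the script used to produce the plots; `transfer` is a hypothetical callable that performs one upload or download of the given size.

```python
import time
from statistics import median

# 100 MB to 3200 MB in 100 MB steps: 32 sizes in total.
SIZES_MB = range(100, 3201, 100)


def median_seconds(transfer, size_mb, repeats=5):
    """Time `transfer(size_mb)` several times and return the median duration."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        transfer(size_mb)
        timings.append(time.perf_counter() - start)
    return median(timings)
```

Reporting the median rather than the mean keeps a single slow transfer from skewing the result for that size.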

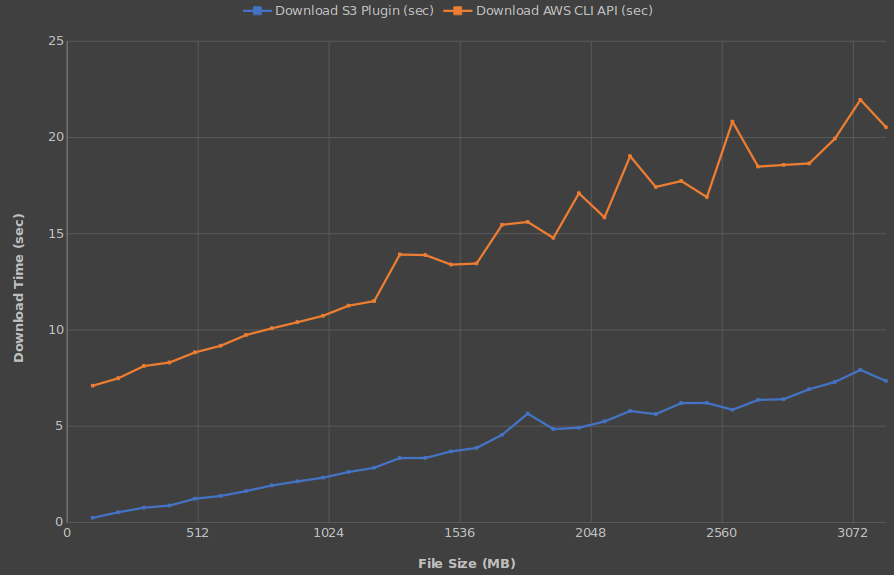

S3 Performance - Download

- 10 transfer threads (each)

- Download sizes from 100 MB to 3200 MB, in 100 MB steps

- Median time of 5 downloads shown for each size

GPN 2022 - Managing Petabytes of Data Using iRODS

By iRODS Consortium
