Brain Language Model

1

Massive data collection + Data pipeline

2

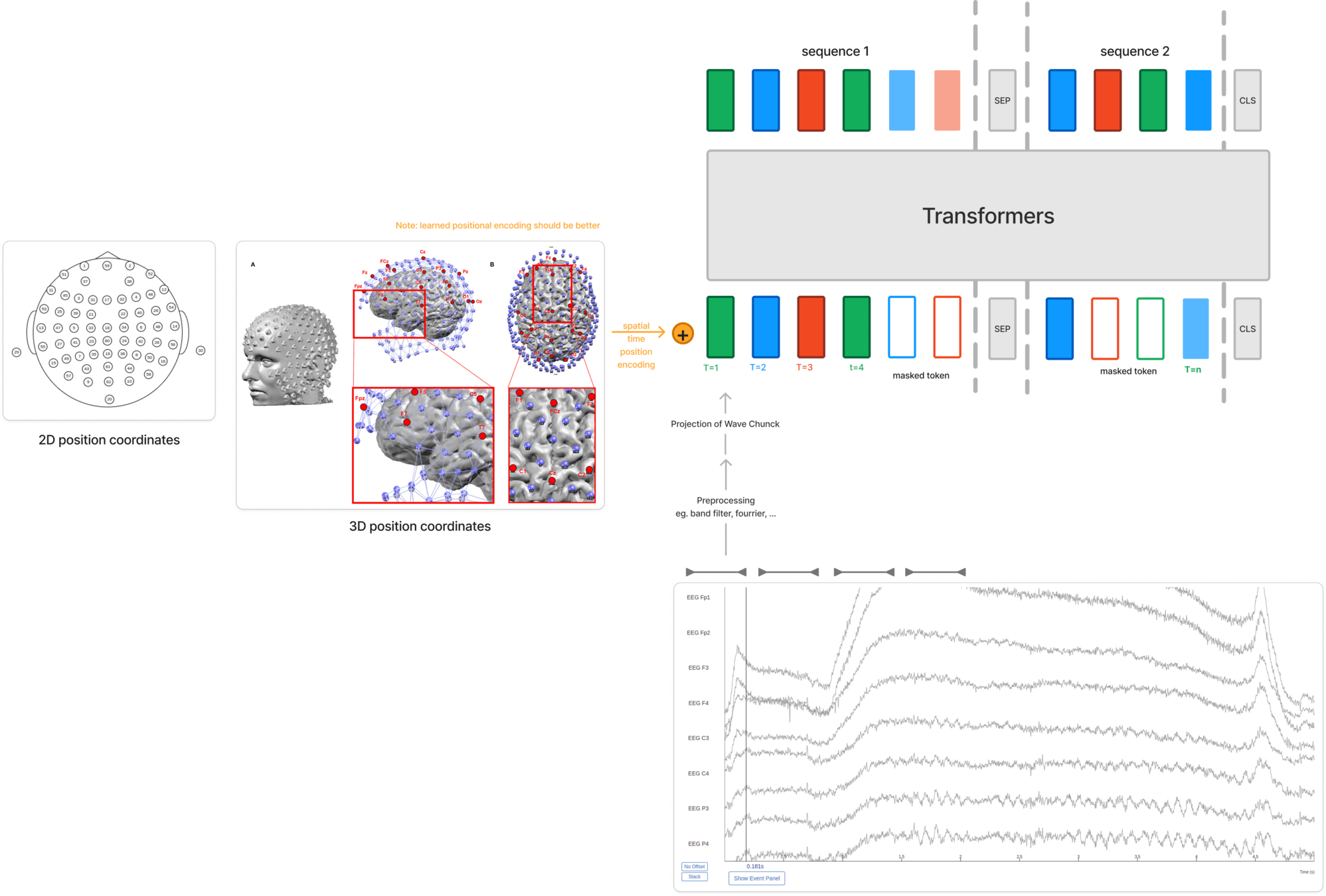

Build Baseline models for EEG

3

Build foundation model for EEG

- downstream tasks

- scaling laws

4

Foundational models for other Modalities (EEG, iEEG, MEG)

- Less noisy, more diverse

6

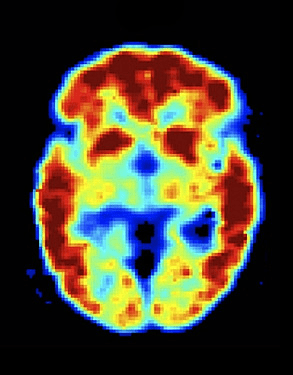

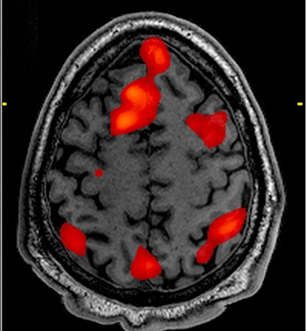

Foundational models for all modalities (MRI, PET, EEG, iEEG, MEG)

5

Foundational Models for (MRI, PET)

From Last week

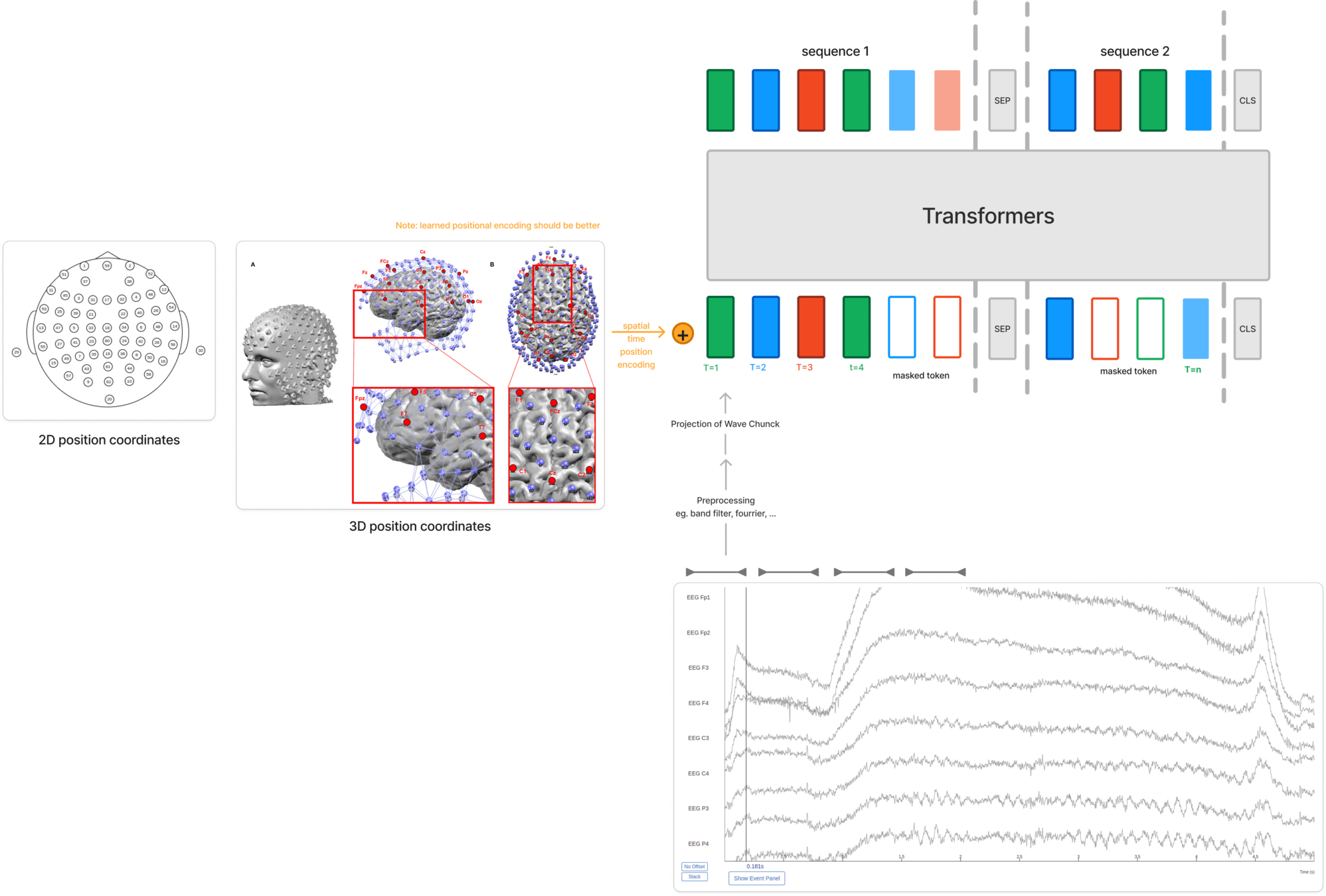

- Build more suitable architectures for EEG

- Build more suitable objectives functions

- Build better fine-tuning strategy

Literature

- LaBraM

- Neuro-GPT

- Others

Drawbacks

- Fail to handle a variable number of channels

- Evaluated on few downstream tasks

- No large performance improvements

- Focus on only one modality

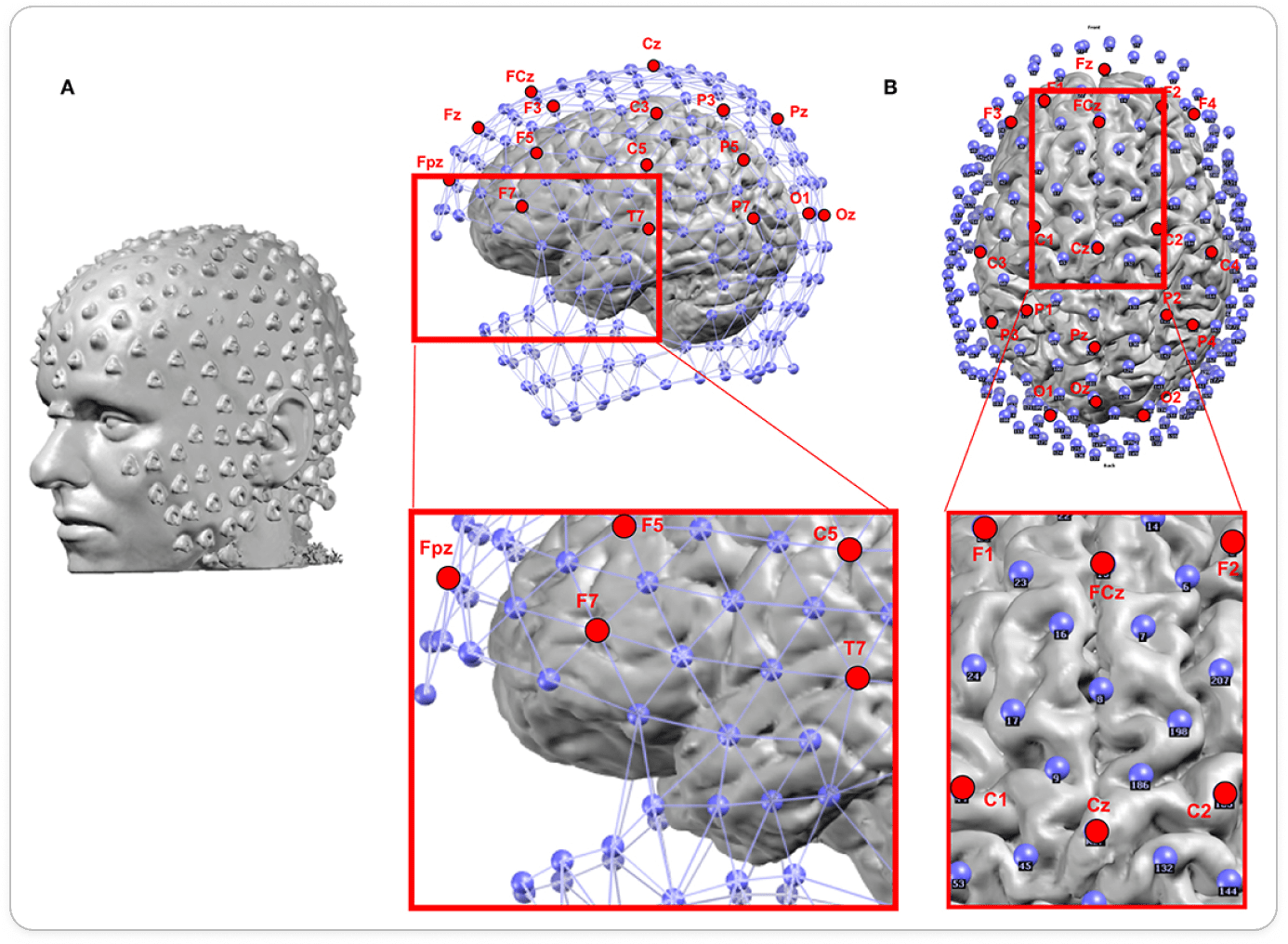

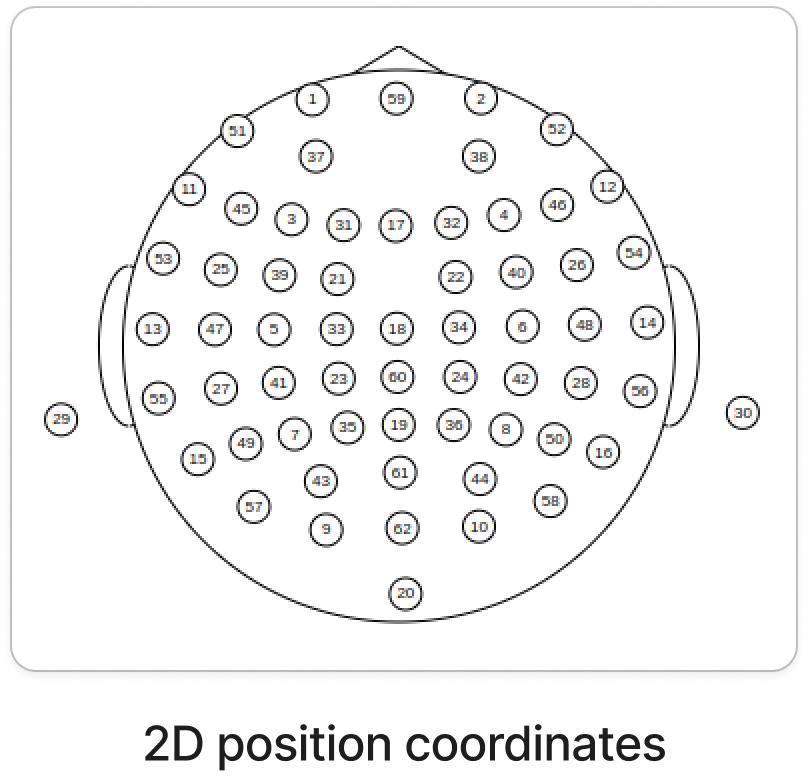

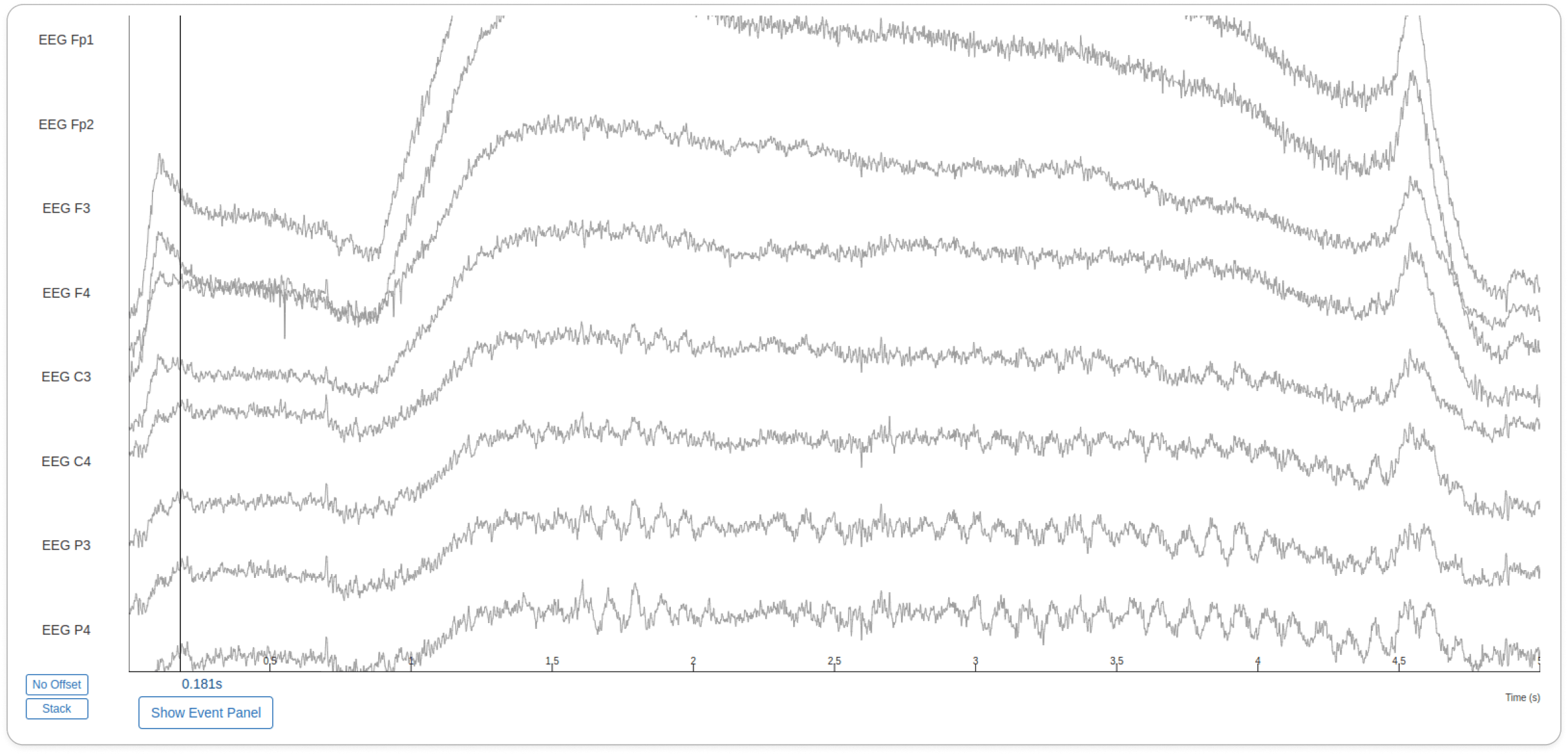

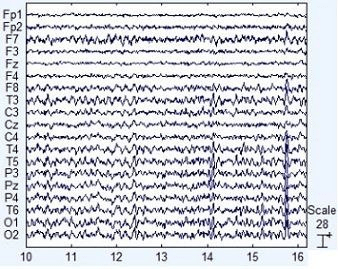

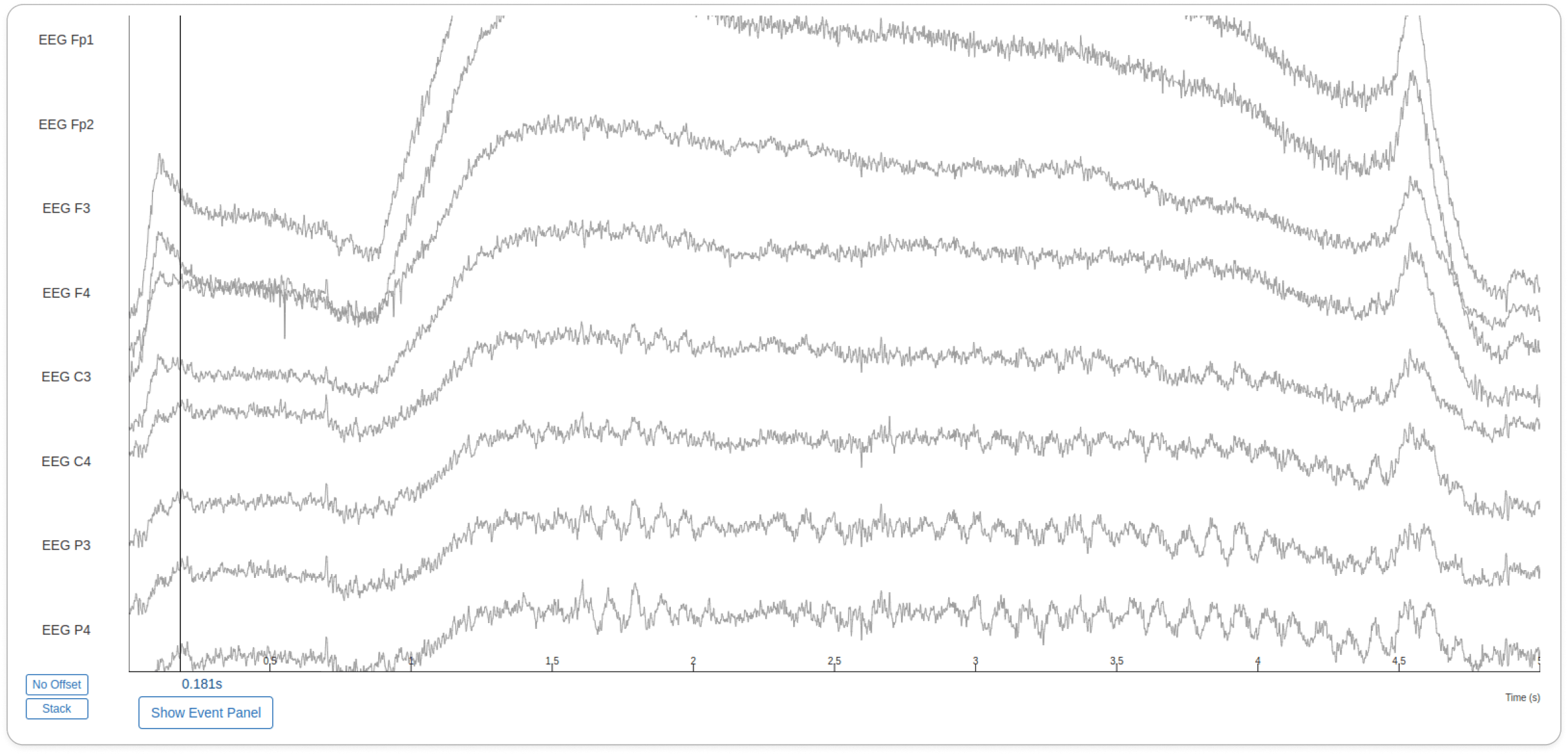

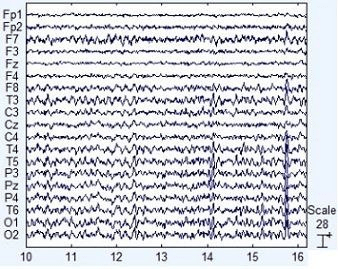

Issues with EEG Data

- Extremely Noisy

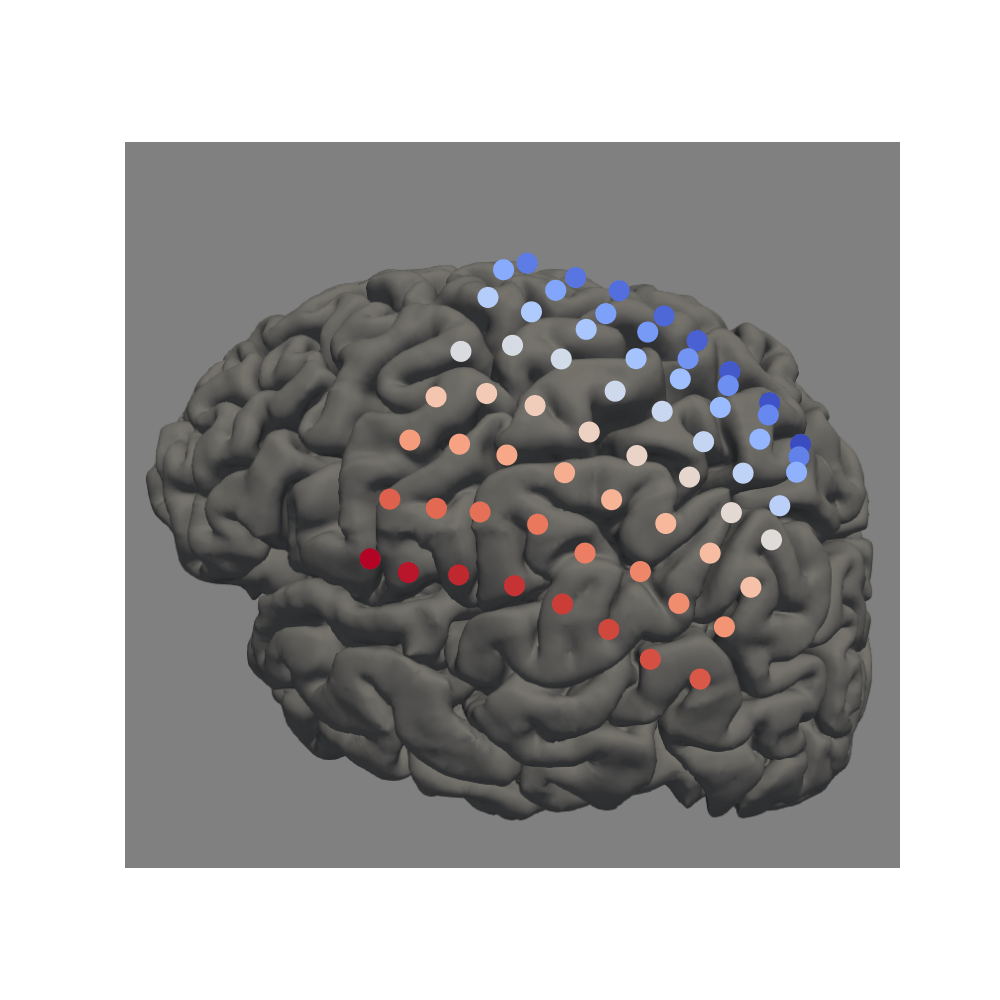

- Variable number of channels

- Continues signal (i.e. not discrete - harder for modeling)
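One common way to cope with a variable number of channels is to zero-pad every recording to a shared channel count and carry a boolean mask that marks the real channels. The sketch below illustrates that idea only; it is not the approach used in this project, and the function name `pad_channels` and the 19- and 64-channel example montages are assumptions made for illustration.

```python
import numpy as np


def pad_channels(recordings, max_channels=None):
    """Stack recordings with different channel counts into one batch.

    recordings: list of arrays shaped (n_channels, n_samples); all must
    share the same n_samples.
    Returns (batch, mask) where batch has shape
    (n_recordings, max_channels, n_samples) and mask has shape
    (n_recordings, max_channels), True for real channels.
    """
    if max_channels is None:
        max_channels = max(rec.shape[0] for rec in recordings)
    n_samples = recordings[0].shape[1]

    batch = np.zeros((len(recordings), max_channels, n_samples), dtype=np.float32)
    mask = np.zeros((len(recordings), max_channels), dtype=bool)

    for i, rec in enumerate(recordings):
        n_ch = rec.shape[0]
        if n_ch > max_channels:
            raise ValueError("recording has more channels than max_channels")
        if rec.shape[1] != n_samples:
            raise ValueError("all recordings must have the same number of samples")
        batch[i, :n_ch] = rec   # real channels first, zero padding after
        mask[i, :n_ch] = True   # padded rows stay False

    return batch, mask


# Hypothetical example: one 19-channel and one 64-channel window of random noise.
rng = np.random.default_rng(0)
recs = [rng.standard_normal((19, 256)), rng.standard_normal((64, 256))]
batch, mask = pad_channels(recs)
print(batch.shape, mask.sum(axis=1))
```

A downstream model would then use `mask` to ignore the padded channels, for example as an attention mask over channel tokens.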

What we have achieved so far?

-

Collected Massive EEG data:

-

Openneuro Corpus

-

Temple University Hospital (TUH) EEG corpus

-

Cuban Human Brain Mapping Corpus and Others ...

-

-

Over terabytes of data and thousands of hours of recordings

-

Extreme diversity in subjects and sessions

-

Implemented easy-to-plugin code

-

Implemented a very efficient data pipeline

-

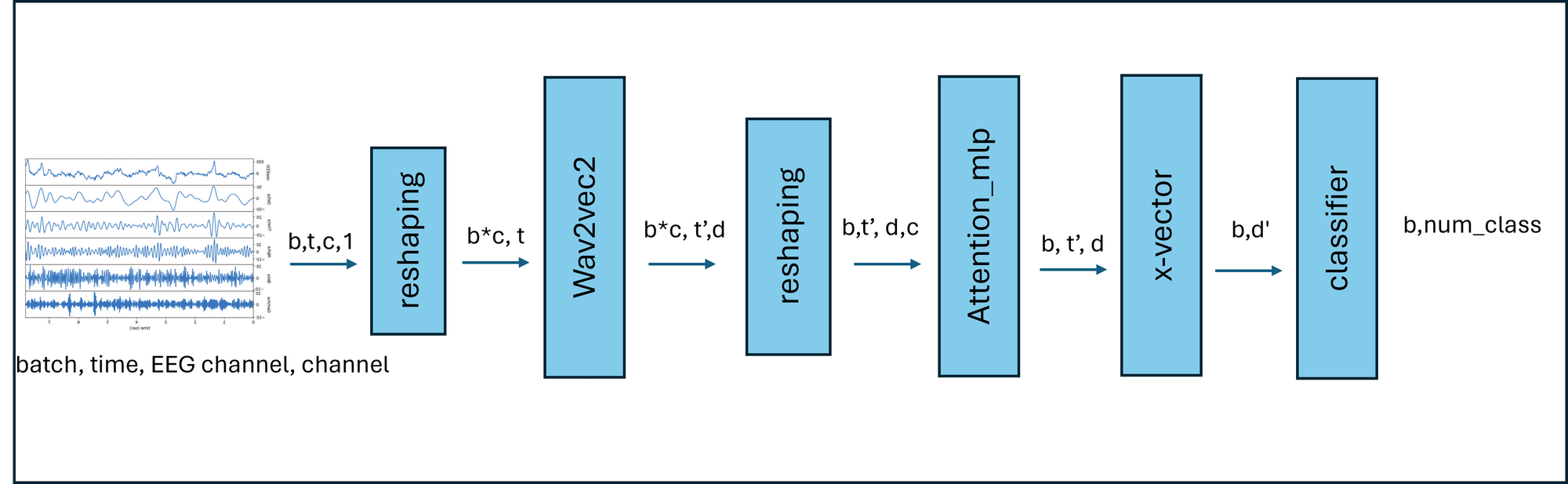

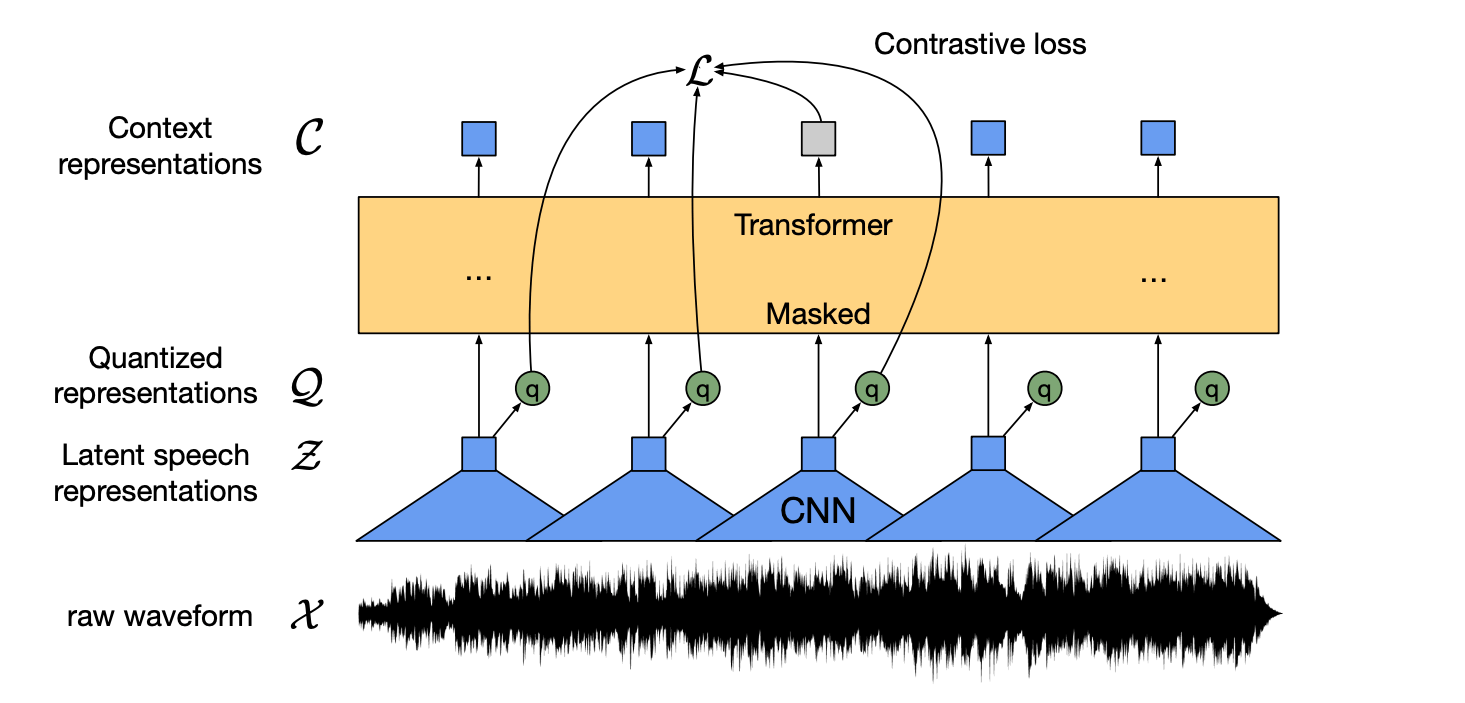

Implemented baseline models wav2vec2 architecture for pretraining

-

Tested various fine-tuning strategies (with MOABB benchmark)
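For context on the wav2vec 2.0 baseline: that architecture is pretrained by masking spans of latent time steps and asking the model to identify the true latent at each masked position. The sketch below shows only the span-masking step, in simplified form. It draws span starts with independent Bernoulli trials rather than sampling a fixed proportion without replacement, and the function name, the latent size of 32, and the zero placeholder for the learned mask embedding are assumptions for illustration. The defaults `start_prob=0.065` and `span_len=10` follow the values reported for wav2vec 2.0 on speech; suitable values for EEG may differ.

```python
import numpy as np


def sample_span_mask(n_steps, start_prob=0.065, span_len=10, rng=None):
    """Return a boolean mask of length n_steps with masked spans set to True.

    Each time step becomes a span start with probability start_prob, and the
    span_len steps from each start are masked. Spans may overlap and are
    clipped at the end of the sequence.
    """
    rng = np.random.default_rng() if rng is None else rng
    starts = rng.random(n_steps) < start_prob
    mask = np.zeros(n_steps, dtype=bool)
    for s in np.flatnonzero(starts):
        mask[s:s + span_len] = True
    return mask


# Hypothetical example: 500 latent time steps with 32 features each.
rng = np.random.default_rng(0)
latents = rng.standard_normal((500, 32))
mask = sample_span_mask(latents.shape[0], rng=rng)

# In a real model the mask embedding is a learned vector; zeros are a placeholder.
mask_embedding = np.zeros(latents.shape[1])
masked_latents = np.where(mask[:, None], mask_embedding, latents)
print(f"masked fraction: {mask.mean():.2f}")
```

The pretraining loss would then be computed only at positions where `mask` is True.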

Approaches used so far?

Next steps

- Build more suitable architectures for EEG

- Build more suitable objective functions

- Build a better fine-tuning strategy

Thank You!

Questions?


By Jama Hussein Mohamud