Tensors & Operators

James B. Wilson, Colorado State University

Follow the slides at your own pace.

Open your smartphone camera and point it at the QR code, or type in the URL directly:

https://slides.com/jameswilson-3/tensors-operators/#/

Major credit is owed to...

Uriya First, U. Haifa

Joshua Maglione, Bielefeld

Peter Brooksbank, Bucknell

- The National Science Foundation Grant DMS-1620454

- The Simons Foundation support for Magma CAS

- National Security Agency Mathematical Sciences Program grants

- U. Colorado Dept. Computer Science

- Colorado State U. Dept. Mathematics

Notation Choices

Below we explain in more detail.

Notation Motives

Mathematics Computation

Vect[K,a] = [1..a] -> K

$ v:Vect[Float,4] = [3.14,2.7,-4,9]

$ v(2) = 2.7

*:Vect[K,a] -> Vect[K,a] -> K

u * v = ( (i:[1..a]) -> u(i)*v(i) ).fold(_+_)

Matrix[K,a,b] = [1..a] -> Vect[K,b]

$ M:Matrix[Float,2,3] = [[1,2,3],[4,5,6]]

$ M(2)(1) = 4

*:Matrix[K,a,b] -> Vect[K,b] -> Vect[K,a]

M * v = (i:[1..a]) -> M(i) * v
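As a rough cross-check of the notation above, here is a Python sketch that models vectors literally as functions on 1-based indices. It reads Matrix[K,a,b] as a rows, each a Vect[K,b], which is what the literal [[1,2,3],[4,5,6]] and M(2)(1) = 4 require. The helper names vect, dot, matrix and mat_vec are hypothetical.

```python
from functools import reduce
from typing import Callable, List

# Vectors are functions on 1-based indices, mirroring Vect[K,a] = [1..a] -> K.
Vect = Callable[[int], float]

def vect(entries: List[float]) -> Vect:
    """Turn a list into the function i -> entries(i) for i in 1..len(entries)."""
    return lambda i: entries[i - 1]

def dot(u: Vect, v: Vect, a: int) -> float:
    """u * v = ((i:[1..a]) -> u(i)*v(i)).fold(_+_)"""
    return reduce(lambda s, t: s + t, (u(i) * v(i) for i in range(1, a + 1)))

def matrix(rows: List[List[float]]) -> Callable[[int], Vect]:
    """A matrix with a rows of length b: M(i) is the i-th row, a Vect of length b."""
    return lambda i: vect(rows[i - 1])

def mat_vec(M: Callable[[int], Vect], v: Vect, a: int, b: int) -> Vect:
    """M * v = (i:[1..a]) -> M(i) * v, a vector of length a."""
    values = [dot(M(i), v, b) for i in range(1, a + 1)]
    return vect(values)

v = vect([3.14, 2.7, -4, 9])
M = matrix([[1, 2, 3], [4, 5, 6]])
w = mat_vec(M, vect([1, 0, 1]), 2, 3)
print(v(2), M(2)(1), w(1), w(2))   # prints: 2.7 4 4 10
```

Modelling vectors as functions rather than lists keeps the code close to the type Vect[K,a] = [1..a] -> K; a production version would of course store arrays.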

Difference? Math has sets, computation has types.

But types are a mathematical invention (B. Russell); let's use types too.

Taste of Types

A term of a type stores an object plus how it was made.

Implications become functions

hypothesis (domain) to conclusion (codomain)

(Union, Or) becomes "+" of types

(Intersection,And) becomes dependent type

Types are honest about "="

Sets are the same if they have the same elements.

Are these sets the same?

We cannot always answer this, both because of the practical limits of computation and because some problems like these are undecidable (say, over the integers).

In types the above need not be a set.

Sets are types where a=b only by reducing b to a explicitly.

What got cut

- All things Tucker: a highly leveraged tensor decomposition in engineering, but tangential to the main themes here, and thankfully many good resources are already available.

- SVDs - likewise, covered well in other material.

- Data structures & Categories (2nd tutorial)

These are tensors, so what does that mean?

Explore below

See common goals below

Tensor Spaces

Versors: an introduction

Why the notation? Nice consequences to come, like...

Context: finite-dimensional vector spaces

Actually, versors can be defined categorically

Versors

1. An abelian group constructor

2. Together with a distributive "evaluation" function

3. Universal Mapping Property

Bilinear maps (bimaps)

Rewrite how we evaluate ("uncurry"):
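A small Python sketch of currying and uncurrying a bilinear map. It assumes the illustrative bimap b(u, v) = sum_ij u_i G_ij v_j built from a Gram matrix G; the helper names are hypothetical and the point is only the change in how evaluation is written.

```python
from typing import Callable, List

Vec = List[float]

def bimap_from_gram(G: List[List[float]]) -> Callable[[Vec, Vec], float]:
    """Uncurried bilinear map b(u, v) = sum_ij u_i G_ij v_j (G is an illustrative choice)."""
    def b(u: Vec, v: Vec) -> float:
        return sum(u[i] * G[i][j] * v[j] for i in range(len(u)) for j in range(len(v)))
    return b

def curry(b: Callable[[Vec, Vec], float]) -> Callable[[Vec], Callable[[Vec], float]]:
    """U x V -> K  becomes  U -> (V -> K): fixing u leaves a linear functional on V."""
    return lambda u: (lambda v: b(u, v))

def uncurry(f: Callable[[Vec], Callable[[Vec], float]]) -> Callable[[Vec, Vec], float]:
    """U -> (V -> K)  becomes  U x V -> K."""
    return lambda u, v: f(u)(v)

b = bimap_from_gram([[1, 2], [3, 4]])
print(b([1, 0], [0, 1]), curry(b)([1, 0])([0, 1]), uncurry(curry(b))([1, 1], [1, 1]))  # 2 2 10
```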

Practice

Practice with Notation

Multilinear maps (multimaps)

Evaluation

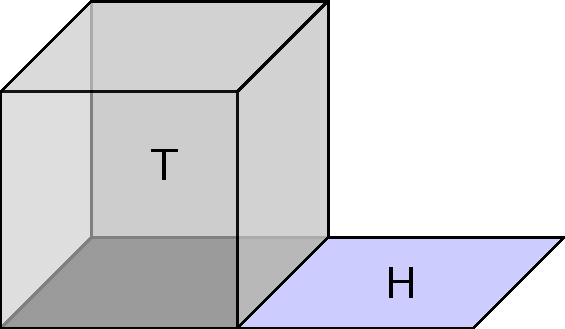

Definition. A tensor space is a vector space \(T\) equipped with a linear map \(\langle \cdot |\) into a space of multilinear maps, i.e.:

Tensors are elements of a tensor space.

The frame is

The axes are the

The valence is the size of the frame.

Fact.

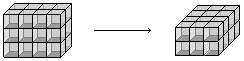

- Every tensor space is a tensor of valence one more (stacking).

- Every tensor is a tensor space of valence one less (slicing).

Example.

- A subspace is identified with a matrix by taking the columns (or rows) to be a basis.

- A matrix becomes a subspace by taking the span of the columns (or rows).
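In coordinates, with bases fixed, stacking and slicing just add or remove an outer index of an array. A Python sketch on nested lists for valence 3, using hypothetical helper names stack and slices:

```python
from typing import List

Matrix = List[List[float]]
Tensor3 = List[List[List[float]]]  # a valence-3 array indexed t[k][i][j]

def stack(mats: List[Matrix]) -> Tensor3:
    """Glue matrices of a common shape along a new outer axis (valence goes up by one)."""
    rows, cols = len(mats[0]), len(mats[0][0])
    if any(len(m) != rows or any(len(r) != cols for r in m) for m in mats):
        raise ValueError("all matrices must have the same shape")
    return [[list(r) for r in m] for m in mats]

def slices(t: Tensor3, axis: int) -> List[Matrix]:
    """Cut a valence-3 array into matrices by fixing the index on one axis."""
    K, I, J = len(t), len(t[0]), len(t[0][0])
    if axis == 0:
        return [[list(t[k][i]) for i in range(I)] for k in range(K)]
    if axis == 1:
        return [[[t[k][i][j] for j in range(J)] for k in range(K)] for i in range(I)]
    if axis == 2:
        return [[[t[k][i][j] for i in range(I)] for k in range(K)] for j in range(J)]
    raise ValueError("axis must be 0, 1 or 2")

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
t = stack([A, B])
print(slices(t, 0) == [A, B])   # True: slicing along the new axis undoes the stack
print(slices(t, 1)[0])          # fix i = 0: [[1, 2], [5, 6]]
print(slices(t, 2)[1])          # fix j = 1: [[2, 4], [6, 8]]
```

The span of the slices along one axis is the tensor space of valence one less mentioned above.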

Discussion (over time)

- Are tensor spaces enough to gather together the tensors found in your field?

- What about cotensor spaces? One model is to say that cotensor spaces are codomains of multilinear maps. Does that work?

- Can tensor spaces be formulated completely with equational laws (instead of representationally, as we have done)? That is, list the axioms as one does for vector spaces.

Tensors in computation

- Tensor spaces make for "black-box" tensors.

- When using black-box algebra you have two choices:

- Move to the promise hierarchy (Babai-Szemeredi): much is known, but it is not part of the P vs. NP inquiry.

- Stay in the traditional decision hierarchy by requiring all objects to provide proofs of axioms (Dietrich-W.).*

* This is actually where we need types. It would not have been possible even 5 years ago, as types did not then have the solid time-complexity footing they have now (Accattoli-Dal Lago).

Tensor Constructors

- Shuffle

- Stack

- Slice

- Product

- Contraction

Shuffle the Frames

These are basis independent (in fact functors),

E.g.:

Rule: If shuffling through index 0, collect a dual.

And so duals are applied in 0 and 1.
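In coordinates a shuffle is an axis permutation of the array. The sketch below (a hypothetical helper shuffle on nested lists) only tracks indices. It assumes dual bases are used, so the duals demanded by the rule above are invisible in the array; that invisibility is exactly why the bookkeeping is easy to lose.

```python
from itertools import product
from typing import List, Sequence, Tuple

Tensor3 = List[List[List[float]]]

def shape(t: Tensor3) -> Tuple[int, int, int]:
    return (len(t), len(t[0]), len(t[0][0]))

def shuffle(t: Tensor3, perm: Sequence[int]) -> Tensor3:
    """Permute axes: new axis a is old axis perm[a].

    Only the index permutation is tracked; duals are absorbed by using dual bases.
    """
    old = shape(t)
    new = tuple(old[p] for p in perm)
    s = [[[0] * new[2] for _ in range(new[1])] for _ in range(new[0])]
    for j in product(range(new[0]), range(new[1]), range(new[2])):
        i = [0, 0, 0]
        for a, p in enumerate(perm):
            i[p] = j[a]          # new index in slot a is the old index on axis perm[a]
        s[j[0]][j[1]][j[2]] = t[i[0]][i[1]][i[2]]
    return s

t = [[[100 * a + 10 * b + c for c in range(4)] for b in range(3)] for a in range(2)]
s = shuffle(t, (2, 0, 1))
print(shape(s), s[3][1][2])   # (4, 2, 3) 123
```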

Shuffle History

- In Levi-Civita index calculus this is "raising-lowering" an index.

- In algebra these are transposes (here we use dagger) and opposites ("ops"). Knuth & Liebler gave general interpretations.

Despite a long history, shuffles are often rediscovered independently, and sometimes the rediscovery fails to use proper duals!

DISCLAIMER

Whenever possible we shuffle problems to work on the 0 axis. We shall do so even without alerting the reader. We shall also work with single axes even when multiple axes are possible.

Side effects include confusion, loss of torsion, and incorrect statements in infinite dimensions. The presenter and his associates assume no blame for researchers harmed by these choices.

Stacks

Glue together tensors with a common set of axes.

Interprets systems of forms, see below...

Systems of Forms as Tensors

Commonly introduced with homogeneous polynomials.

E.g. quadratic forms.

where

Stack Gram matrices

because
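A sketch of stacking Gram matrices in Python. It assumes rational coefficients, so the coefficient of x_i x_j (i != j) can be split evenly between G_ij and G_ji; the forms q1, q2 and the helper names are illustrative only.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

Gram = List[List[Fraction]]

def gram(coeffs: Dict[Tuple[int, int], int], n: int) -> Gram:
    """Symmetric G with q(x) = x^T G x, for q = sum_{i<=j} c_ij x_i x_j.

    Off-diagonal coefficients are halved, so this assumes 2 is invertible.
    """
    G = [[Fraction(0)] * n for _ in range(n)]
    for (i, j), c in coeffs.items():
        if i == j:
            G[i][i] += c
        else:
            G[i][j] += Fraction(c, 2)
            G[j][i] += Fraction(c, 2)
    return G

def evaluate(stack: List[Gram], x: List[int]) -> List[Fraction]:
    """Evaluate every form in the stack at x: [x^T G_k x for each slice k]."""
    n = len(x)
    return [sum(x[i] * G[i][j] * x[j] for i in range(n) for j in range(n)) for G in stack]

q1 = {(0, 0): 1, (0, 1): 2, (1, 1): 3}   # x^2 + 2xy + 3y^2
q2 = {(0, 1): 1}                         # xy
T = [gram(q1, 2), gram(q2, 2)]           # a 2 x 2 x 2 array: one Gram matrix per form
print([int(val) for val in evaluate(T, [1, 2])])   # [17, 2]
```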

Slices

Cut a tensor along an axis.

Slices recover systems of forms, see this.

Products

Area = Length x Width

Volume = Length x Width x Height

Universality? Facing torsion? Look down.

this defines matrices as a tensor product
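A sketch of this in Python, assuming the standard identification of K^a (x) K^b with a x b matrices via u (x) v -> u v^T: the outer products e_i (x) e_j of standard basis vectors span all matrices, and the outer product is bilinear. Helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def outer(u: List[float], v: List[float]) -> Matrix:
    """u (x) v  ->  the a x b matrix u v^T."""
    return [[ui * vj for vj in v] for ui in u]

def add(A: Matrix, B: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(c: float, A: Matrix) -> Matrix:
    return [[c * x for x in row] for row in A]

def basis(n: int, i: int) -> List[int]:
    return [int(k == i) for k in range(n)]

def from_tensors(M: Matrix) -> Matrix:
    """Rebuild M as sum_{i,j} M[i][j] * (e_i (x) e_j)."""
    a, b = len(M), len(M[0])
    total = [[0] * b for _ in range(a)]
    for i in range(a):
        for j in range(b):
            total = add(total, scale(M[i][j], outer(basis(a, i), basis(b, j))))
    return total

M = [[1, 2, 3], [4, 5, 6]]
print(from_tensors(M) == M)          # True
print(outer([1, 2], [3, 4, 5]))      # [[3, 4, 5], [6, 8, 10]]
```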

Universal Mapping Property

There is an onto linear map:

Whitney products with torsion

Quotient by an ideal to get exact sequence of tensors.

(Rows are exact sequences, columns are Curried bilinear maps.)

Tensor Ideals

As with rings, ideals have quotients.

Fraction-inspired notation for known constructions, e.g.:

More accurately, there are natural maps, invertible if finite-dimensional over fields.

Contractions

Add the values along the axis.

Perhaps weight by another tensor

then add.

Simplest example is the standard dot-product.

Uses? Averages, weighted averages, matrix multiplication; let's explore one called "convolution"...
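Some of these contractions in plain Python (hypothetical helper names): the dot product, a weighted contraction used as an average, and matrix multiplication as the contraction of a shared axis.

```python
from fractions import Fraction
from typing import List, Sequence

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Contract a single shared axis: sum_i u_i v_i."""
    return sum(x * y for x, y in zip(u, v))

def weighted_contract(w: Sequence[float], u: Sequence[float], v: Sequence[float]) -> float:
    """Weight by another tensor, then add: sum_i w_i u_i v_i."""
    return sum(c * x * y for c, x, y in zip(w, u, v))

def matmul(A: List[List[float]], B: List[List[float]]) -> List[List[float]]:
    """Contract the column axis of A with the row axis of B: C_ik = sum_j A_ij B_jk."""
    return [[sum(A[i][j] * B[j][k] for j in range(len(B))) for k in range(len(B[0]))]
            for i in range(len(A))]

data = [2, 4, 9]
print(weighted_contract([Fraction(1, 3)] * 3, data, [1, 1, 1]))   # the average of data: 5
print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))                 # [[19, 22], [43, 50]]
```

Exact fractions are used for the average so the result is not subject to floating-point rounding.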

Convolution

Goal: sharpen an image, find edges, find textures, etc.

In the past it was for "photo-shop" (is this trademarked?).

Today it is image feature extraction for machine learning.

We turn the image into a (3 x 3 x ab)-tensor where every slice is a (3 x 3)-subimage.

Convolution with a target shape is a contraction on the (3 x 3)-face. The result is an ab-tensor (another (a x b)-image).

Do this with k targets and get an (a x b x k) tensor with the metadata of our image.

The machine learner tries to learn these tensors.
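A Python sketch of this patch-tensor view. It assumes zero padding at the border and the machine-learning convention of not flipping the kernel (strictly a cross-correlation). The kernels HORIZONTAL and VERTICAL and all helper names are illustrative choices, not taken from the slides.

```python
from typing import List

Image = List[List[float]]
Kernel = List[List[float]]

def patches(img: Image) -> List[Kernel]:
    """The (3 x 3 x ab) tensor: one zero-padded 3x3 neighbourhood per pixel, row-major."""
    a, b = len(img), len(img[0])

    def px(r: int, c: int) -> float:
        return img[r][c] if 0 <= r < a and 0 <= c < b else 0

    return [[[px(r + dr, c + dc) for dc in (-1, 0, 1)] for dr in (-1, 0, 1)]
            for r in range(a) for c in range(b)]

def convolve(img: Image, kernel: Kernel) -> Image:
    """Contract each 3x3 slice with the kernel, then reshape the ab values into an a x b image."""
    a, b = len(img), len(img[0])
    flat = [sum(p[i][j] * kernel[i][j] for i in range(3) for j in range(3)) for p in patches(img)]
    return [flat[r * b:(r + 1) * b] for r in range(a)]

def features(img: Image, kernels: List[Kernel]) -> List[List[List[float]]]:
    """k kernels give an (a x b x k) array out[r][c][t]."""
    maps = [convolve(img, K) for K in kernels]
    return [[[m[r][c] for m in maps] for c in range(len(img[0]))] for r in range(len(img))]

HORIZONTAL = [[1, 1, 1], [0, 0, 0], [-1, -1, -1]]   # bright above, dark below
VERTICAL = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]     # bright left, dark right

img = [[1, 1, 1], [1, 1, 1], [0, 0, 0], [0, 0, 0]]  # a horizontal edge between rows 1 and 2
print(features(img, [HORIZONTAL, VERTICAL])[1][1])  # [3, 0]
```

At pixel (1, 1) the horizontal-edge kernel fires while the vertical one gives 0, which is the kind of per-pixel feature vector the (a x b x k) tensor records.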

[Figure: an image overlaid with a kernel that is positive above and negative below.]

Some convolutions detect horizontal edges.

[Figure: the same image overlaid with a kernel that is positive on the left and negative on the right.]

Some convolutions detect vertical edges.

[Figure: the same image overlaid with a kernel that is positive in the middle and negative around it.]

Some convolutions are good at all edges.

Most important contraction: generalized matrix multiplication

Further Flavors... including our logo :)

Operators

Stuff an infinite sequence

in a finite-dimensional space,

you get a dependence.

So begins the story of annihilator polynomials and eigen values.
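For a single operator the dependence can be found by walking v, Av, A^2 v, ... until the first vector lies in the span of the earlier ones; the relation read off is the monic annihilating polynomial of v (its local minimal polynomial). A sketch with exact rational arithmetic; the helpers apply, express and annihilator are hypothetical names.

```python
from fractions import Fraction
from typing import List, Optional

Vec = List[Fraction]

def apply(A: List[List[int]], v: Vec) -> Vec:
    return [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]

def express(basis: List[Vec], target: Vec) -> Optional[Vec]:
    """Coefficients c with sum_k c_k basis[k] == target (basis independent), else None."""
    n, m = len(target), len(basis)
    rows = [[basis[k][i] for k in range(m)] + [target[i]] for i in range(n)]
    pivots, r = [], 0
    for col in range(m):                 # Gauss-Jordan elimination on the augmented matrix
        p = next((i for i in range(r, n) if rows[i][col] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        rows[r] = [x / rows[r][col] for x in rows[r]]
        for i in range(n):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    if any(rows[i][m] != 0 for i in range(r, n)):
        return None                      # target is not in the span
    c = [Fraction(0)] * m
    for i, col in enumerate(pivots):
        c[col] = rows[i][m]
    return c

def annihilator(A: List[List[int]], v: List[int]) -> List[Fraction]:
    """Monic p of least degree with p(A) v = 0, coefficients listed from degree 0 up."""
    seq = [[Fraction(x) for x in v]]
    if all(x == 0 for x in seq[0]):
        return [Fraction(1)]
    while True:                          # terminates: at most dim(V) independent vectors
        nxt = apply(A, seq[-1])
        c = express(seq, nxt)
        if c is not None:                # A^k v = sum_i c_i A^i v  =>  p(t) = t^k - sum_i c_i t^i
            return [-ci for ci in c] + [Fraction(1)]
        seq.append(nxt)

print([int(c) for c in annihilator([[1, 0], [0, 0]], [1, 1])])   # [0, -1, 1], i.e. t^2 - t
```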

An infinite lattice in finite-dimensional space makes even more dependencies.

(and the ideal these generate)

> M := Matrix(Rationals(), 2,3,[[1,0,2],[3,4,5]]);

> X := Matrix(Rationals(), 2,2,[[1,0],[0,0]] );

> Y := Matrix(Rationals(), 3,3,[[0,0,0],[0,1,0],[0,0,0]]);

> seq := [ < i, j, X^i * M * Y^j > : i in [0..2], j in [0..3]];

> U := Matrix( [ Eltseq(s[3]) : s in seq ] ); // flatten each X^i * M * Y^j into one row

i j X^i * M * Y^j

0 0 [ 1, 0, 2, 3, 4, 5 ]

1 0 [ 1, 0, 2, 0, 0, 0 ]

2 0 [ 1, 0, 2, 0, 0, 0 ]

0 1 [ 0, 0, 0, 0, 4, 0 ]

1 1 [ 0, 0, 0, 0, 0, 0 ]

2 1 [ 0, 0, 0, 0, 0, 0 ]

0 2 [ 0, 0, 0, 0, 4, 0 ]

1 2 [ 0, 0, 0, 0, 0, 0 ]

2 2 [ 0, 0, 0, 0, 0, 0 ]

0 3 [ 0, 0, 0, 0, 4, 0 ]

1 3 [ 0, 0, 0, 0, 0, 0 ]

2 3 [ 0, 0, 0, 0, 0, 0 ]

In detail

Step out the bi-sequence

> E, T := EchelonForm( U ); // E = T*U

0 0 [ 1, 0, 2, 3, 4, 5 ] 1

1 0 [ 1, 0, 2, 0, 0, 0 ] x

0 1 [ 0, 0, 0, 0, 4, 0 ] y

Choose pivots

Write null space rows as relations in pivots.

> A<x,y> := PolynomialRing( Rationals(), 2 );

> row2poly := func< k | &+[ T[k][1+i+3*j]*x^i*y^j :

i in [0..2], j in [0..3] ] >;

> polys := [ row2poly(k) : k in [(Rank(E)+1)..Nrows(E)] ];

2 0 [ 1, 0, 2, 0, 0, 0 ] x^2 - x

1 1 [ 0, 0, 0, 0, 0, 0 ] x*y

2 1 [ 0, 0, 0, 0, 0, 0 ] x^2*y

0 2 [ 0, 0, 0, 0, 4, 0 ] y^2 - y

1 2 [ 0, 0, 0, 0, 0, 0 ] x*y^2

2 2 [ 0, 0, 0, 0, 0, 0 ] x^2*y^2

0 3 [ 0, 0, 0, 0, 4, 0 ] y^3 - y

1 3 [ 0, 0, 0, 0, 0, 0 ] x*y^3

2 3 [ 0, 0, 0, 0, 0, 0 ] x^2*y^3

> ann := ideal< A | polys >;

> GroebnerBasis(ann);

x^2 - x,

x*y,

y^2 - y

Take Groebner basis of relation polynomials

Groebner bases in a bounded number of variables can be computed in polynomial time (Bardet-Faugere-Salvy).

Same tensor,

different operators,

can be different annihilators.

Different tensor,

same operators,

can be different annihilators.

Data

Action by polynomials

Resulting annihilating ideal
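A Python sketch of this action on the data from the Magma session above, assuming (as there) that x acts by X on the left and y by Y on the right, so p(x, y) . M = sum c_ij X^i M Y^j. It checks that the Groebner basis elements computed above annihilate M; the helper names are hypothetical.

```python
from typing import Dict, List, Tuple

Mat = List[List[int]]
Poly = Dict[Tuple[int, int], int]   # {(i, j): c} stands for sum c x^i y^j

def matmul(A: Mat, B: Mat) -> Mat:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def power(A: Mat, e: int) -> Mat:
    R = [[int(i == j) for j in range(len(A))] for i in range(len(A))]
    for _ in range(e):
        R = matmul(R, A)
    return R

def act(p: Poly, X: Mat, M: Mat, Y: Mat) -> Mat:
    """p(x, y) . M = sum c_ij X^i M Y^j: x acts on the left, y on the right."""
    out = [[0] * len(M[0]) for _ in M]
    for (i, j), c in p.items():
        term = matmul(matmul(power(X, i), M), power(Y, j))
        out = [[o + c * t for o, t in zip(ro, rt)] for ro, rt in zip(out, term)]
    return out

M = [[1, 0, 2], [3, 4, 5]]
X = [[1, 0], [0, 0]]
Y = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
groebner = {"x^2 - x": {(2, 0): 1, (1, 0): -1},
            "x*y": {(1, 1): 1},
            "y^2 - y": {(0, 2): 1, (0, 1): -1}}
for name, p in groebner.items():
    print(name, act(p, X, M, Y))   # each is the zero 2 x 3 matrix
print(act({(1, 0): 1}, X, M, Y))   # x alone does not annihilate: [[1, 0, 2], [0, 0, 0]]
```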

Could this be wild? Read below.

Annihilators

Mal'cev showed that the representations of 2-generated algebras are "wild", in the sense that their theory is undecidable.

However, we have two features: our variables commute, and our operators are transverse.

Still, maybe this is wild?

Transverse Operators

This prescribes a bimap

The image of this map we call the transverse operators.

These generate all other operators.

Explore a proof below or move on to more generality

Claim

Kernel is the annihilator of the operator.

Theorem. A Groebner basis for this annihilator can be computed in polynomial time.

Fact.

Annihilators General

Open

- Find more examples of matrices whose traits are parabolic (known), hyperbolic (known), elliptic (not known).

- Find a family of matrices with a trait that has genus; can all elliptic curves appear?

- Is every ideal possible as an annihilator?

Traits

Prime & Primary Factors

- Unique by Lasker-Noether (below)

- Computable by Groebner basis

- Generalize eigen theory of operators on a single vector space.

- Problem: primes are almost always determined by an axis and are often 0-dimensional, i.e. just eigen values.

A trait is an element of the Groebner basis of a prime decomposition of the annihilator.

Traits generalize eigen values.

In K[x]

Lasker-Noether: In K[X]

are all prime, and the minimal primes are unique.

where

As a variety we only see the radical, i.e. that x=0 and y=0.

There is nothing about the tensor in this.

Need to look at the scheme -- need to focus on the xy.

Isolating Traits

Examples

Ideals for operator sets

This ideal is the intersection of annihilators for each operator. So the dimension of the ideal grows.

This is how we isolate traits of high dimension -- use many operators.

Tensors with traits

T-sets are to traits what eigen vectors are to eigen values.

The Correspondence Theorem (First-Maglione-W.)

This is a ternary Galois connection.

Summary of Trait Theorems (First-Maglione-W.)

- Linear traits correspond to derivations.

- Monomial traits correspond to singularities

- Binomial traits are the only way to support groups.

For trinomial ideals, all geometries can arise so classification beyond this point is essentially impossible.

Derivations & Densors

Most influential Traits

Idea: study the hypersurface traits -- captures the largest swath of operators. Reciprocally, what we find applies to very few tensors, perhaps even just the one we study.

Fact: In projective geometry there is a well-defined notion of a generic degree-d hypersurface:

Seems tractable only for the linear case

Derivations & Densors

Treating 0 as contravariant, the natural hyperplane is:

That is, the generic linear trait is simply to say that operators are derivations!
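A finite sample check of the derivation condition, assuming the linear trait means D_0 <t|u, v> = <t|D_2 u, v> + <t|u, D_1 v> for a bimap. For the matrix-multiplication bimap, the commutator A -> ZA - AZ used on all three axes satisfies it (this is the Leibniz rule), while scaling by 2 does not. Testing a few pairs is evidence, not a proof; helper names are hypothetical.

```python
from typing import Callable, List

Mat = List[List[int]]

def matmul(A: Mat, B: Mat) -> Mat:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def add(A: Mat, B: Mat) -> Mat:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def sub(A: Mat, B: Mat) -> Mat:
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def ad(Z: Mat) -> Callable[[Mat], Mat]:
    """The commutator operator A -> ZA - AZ."""
    return lambda A: sub(matmul(Z, A), matmul(A, Z))

def double(C: Mat) -> Mat:
    return [[2 * x for x in row] for row in C]

def is_derivation(bimap, D2, D1, D0, pairs) -> bool:
    """Check D0 <t|u, v> == <t|D2 u, v> + <t|u, D1 v> on the given sample pairs."""
    return all(D0(bimap(u, v)) == add(bimap(D2(u), v), bimap(u, D1(v))) for u, v in pairs)

Z = [[0, 1], [2, 3]]
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
print(is_derivation(matmul, ad(Z), ad(Z), ad(Z), [(A, B)]))     # True: Leibniz rule
print(is_derivation(matmul, double, double, double, [(A, B)]))  # False: 2AB != 4AB
```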

However, the schemes Z(S,P) are not the same as Z(P), so generic here is not the same as generic there; work is required.

Derivations are Universal Linear Operators

Theorem (FMW). If

Then

(If 1 fails, extend the field; if 2 is affine, shift; if 3 fails, the result holds over the support of P.)

Unintended Consequences

Since Whitney's 1938 paper, tensors have been grounded in associative algebras.

Derivations form natural Lie algebras.

If associative operators define tensor products but Lie operators are universal, who is right?

Tensor products are naturally over Lie algebras

Theorem (FMW). If

Then in all but at most 2 values of a

In particular, to be an associative algebra we are limited to at most 2 coordinates. Whitney's definition is a fluke.

Module Sides no longer matter

- The Whitney tensor product pairs a right module with a left module, which often forces technical op-ring actions.

- Lie algebras are skew-commutative, so modules are both left and right; no unnatural op-rings are required.

Associative Laws no longer

- The Whitney tensor product is binary, so combining many modules consistently by associativity laws isn't always possible (different coefficient rings).

- Lie tensor products can be defined on arbitrary number of modules - no need for associative laws.

Missing operators

- The Whitney tensor product puts coefficients only between modules; it cannot operate at a distance.

As valence grows we act on a linear number of spaces but have exponentially many possible actions left out.

Lie tensor products act on all sides.

Densor

Densors are

- agnostic to sides of modules -- no comm. laws.

- n-ary needing no associative laws.

- operators are on all sides

- ...and Whitney products are actually just a special case of densors.

Monomials, Singularities, & simplicial complexes

Local Operators

I.e. operators that on the indices A are restricted to the U's.

Claim. Singularity at U if, and only if, monomial trait on A.

Singularities come with traits that are in bijection with Stanley-Reisner rings, and so with simplicial complexes.

Shaded regions are 0.

Binomials & Groups

Theorem (FMW). If for every S and P

then

If

then the converse holds.

(We speculate this is if, and only if.)

Implication

- Classifies the categories of tensors with transverse operators (there are as many as the valence).

Under symmetry...

In many settings a symmetry is required. The correspondence applies here but the classifications just given all evolve. Much to be learned here. E.g.

Application: Decompositions

Tensor & Their Operators

By James Wilson


Definitions and properties of tensors, tensor spaces, and their operators.
