Day 26:

Singular Value Decomposition

A new problem

Assume you have a collection of vectors \(\{v_{1},v_{2},\ldots,v_{n}\}\) in \(\R^{m}\). Given a subspace \(W\subset\R^{m}\), let \(P_{W}\) be the orthogonal projection onto \(W\).

We already know that \(P_{W}x\) is the vector in \(W\) that is closest to \(x\).

That is, \(\|P_{W}x-x\|\leq \|w-x\|\) for all \(w\in W\).
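As a reminder of how \(P_{W}\) can be computed, here is a minimal NumPy sketch. It assumes \(W\) is given as the column space of a full-column-rank matrix `A` (a hypothetical input, not from the notes). It builds an orthonormal basis `Q` of \(W\) with a QR factorization, uses \(P_{W} = QQ^{\top}\), and spot-checks the closest-point property against randomly chosen \(w\in W\).

```python
import numpy as np

def projection_matrix(A):
    """Orthogonal projection onto W = C(A), assuming A has full column rank."""
    Q, _ = np.linalg.qr(A)        # columns of Q: orthonormal basis of C(A)
    return Q @ Q.T                # P_W = Q Q^T

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 2))   # W is a 2-dimensional subspace of R^5
P = projection_matrix(A)
x = rng.standard_normal(5)

best = np.linalg.norm(P @ x - x)
# Any other w in W (here w = A c for a random c) is at least as far from x.
for _ in range(1000):
    w = A @ rng.standard_normal(2)
    assert best <= np.linalg.norm(w - x) + 1e-12
```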

Now, we want to find the subspace \(W\) so that each \(P_{W}v_{i}\) is as close as possible to \(v_{i}\), that is, \(\|P_{W}v_{i}-v_{i}\|\) is as small as possible for each \(i=1,2,\ldots,n\).

Trivial answer: Simply take \(W = \text{span}\{v_{1},\ldots,v_{n}\}\).

However, we also want the subspace to be as "small" as possible; that is, we want its dimension to be small.


Given a collection of vectors \(\{v_{1},v_{2},\ldots,v_{n}\}\) in \(\R^{m}\) and an integer \(k\), how do we choose a subspace \(W\subset\R^{m}\) of dimension \(k\) that minimizes all of the distances \(\|P_{W}v_{i} - v_{i}\|\) simultaneously?

WE CAN'T!

Example. If \(v_{1} = \left[\begin{array}{r} 1\\ 0\end{array}\right]\), \(v_{2} = \left[\begin{array}{r} 1\\ 2\end{array}\right]\), \(v_{3} = \left[\begin{array}{r} 0\\ 1\end{array}\right]\), then there is no \(1\)-dimensional subspace \(W\subset \R^{2}\) for which the numbers \(\|P_{W}v_{i}-v_{i}\|\) (\(i=1,2,3\)) are all as small as possible: making \(\|P_{W}v_{1}-v_{1}\|=0\) forces \(W=\text{span}\{v_{1}\}\), while making \(\|P_{W}v_{3}-v_{3}\|=0\) forces \(W=\text{span}\{v_{3}\}\).
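The conflict in this example can be seen numerically. The sketch below computes all three distances for the two candidate lines \(W=\text{span}\{v_{1}\}\) and \(W=\text{span}\{v_{3}\}\); each line makes one distance zero at the cost of another. The helper name `dist_to_line` is hypothetical, chosen only for this illustration.

```python
import numpy as np

def dist_to_line(x, w):
    """||P_W x - x|| for the line W = span{w}."""
    w = w / np.linalg.norm(w)                               # unit vector spanning W
    return float(np.linalg.norm(np.dot(w, x) * w - x))      # P_W x = (w . x) w

v1, v2, v3 = np.array([1.0, 0.0]), np.array([1.0, 2.0]), np.array([0.0, 1.0])

for name, w in (("span{v1}", v1), ("span{v3}", v3)):
    print(name, [round(dist_to_line(v, w), 4) for v in (v1, v2, v3)])
# span{v1} [0.0, 2.0, 1.0]
# span{v3} [1.0, 1.0, 0.0]
```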

What do we do?

Minimize one of the following:

\[\sum_{i=1}^{n}\|P_{W}v_{i} - v_{i}\|^{2},\quad \sum_{i=1}^{n}\|P_{W}v_{i} - v_{i}\|,\quad\text{or}\quad \max_{i}\|P_{W}v_{i} - v_{i}\|\]
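For the first criterion (the sum of squares) there is a standard answer, not yet proved in these notes and closely related to the Eckart-Young theorem: the best \(k\)-dimensional \(W\) is spanned by the first \(k\) left singular vectors of the matrix \(A\) whose columns are the \(v_{i}\). The sketch below checks this for \(k=1\) on the example above by brute force over lines \(W=\text{span}\{(\cos t,\sin t)\}\), using that for a unit vector \(w\), \(\sum_{i}\|P_{W}v_{i}-v_{i}\|^{2} = \sum_{i}\|v_{i}\|^{2} - \sum_{i}(w\cdot v_{i})^{2}\). The grid size is an arbitrary choice.

```python
import numpy as np

# Columns of A are v1, v2, v3 from the example above.
A = np.array([[1.0, 1.0, 0.0],
              [0.0, 2.0, 1.0]])

# Parametrize 1-dimensional subspaces W = span{(cos t, sin t)}, 0 <= t <= pi.
t = np.linspace(0.0, np.pi, 180001)
W = np.stack([np.cos(t), np.sin(t)])              # shape (2, N), unit columns

# For unit w: sum_i ||P_W v_i - v_i||^2 = sum_i ||v_i||^2 - sum_i (w . v_i)^2
cost = np.sum(A**2) - np.sum((W.T @ A) ** 2, axis=1)
k = np.argmin(cost)
w_best = W[:, k]

U, s, Vt = np.linalg.svd(A)
u1 = U[:, 0]                                       # first left singular vector
print("grid minimum:", cost[k], "at w =", w_best)
print("|w_best . u1| =", abs(w_best @ u1))         # close to 1: same line
print("sum ||v_i||^2 - sigma_1^2 =", np.sum(A**2) - s[0] ** 2)
```

For this data \(AA^{\top} = \begin{bmatrix}2 & 2\\ 2 & 5\end{bmatrix}\) has eigenvalues \(6\) and \(1\), so the best line is \(\text{span}\{(1,2)\} = \text{span}\{v_{2}\}\) and the minimum sum of squared distances is \(7-6=1\).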

Theorem (Singular Value Decomposition - Outer product form). If \(B\) is an \(m\times n\) matrix and \(p=\min\{m,n\}\), then there are orthonormal bases \(\{u_{1},\ldots,u_{m}\}\) for \(\R^{m}\) and \(\{v_{1},\ldots,v_{n}\}\) for \(\R^{n}\), and scalars \(\sigma_{1}\geq\sigma_{2}\geq\cdots\geq\sigma_{p}\geq 0\) such that

\[B = \sum_{i=1}^{p}\sigma_{i}u_{i}v_{i}^{\top}.\]

Proof. Let \(v_{1},\ldots,v_{n}\) be an orthonormal basis of eigenvectors of \(B^{\top}B\) with associated eigenvalues \(\lambda_{1}\geq \lambda_{2}\geq\ldots\geq \lambda_{n}\geq 0.\)

Let \(r\) be the rank of \(B\). Since \(\text{rank}(B^{\top}B) = \text{rank}(B) = r\), we have \(\lambda_{r}>0\) and \(\lambda_{r+1}=\cdots=\lambda_{n}=0\) (if \(r<n\)).

For each \(i\in\{1,\ldots,r\}\) define \[u_{i} = \frac{Bv_{i}}{\sqrt{\lambda_{i}}}.\] Note that \[u_{i}\cdot u_{j} = \frac{1}{\sqrt{\lambda_{i}\lambda_{j}}}(Bv_{i})^{\top}(Bv_{j}) = \frac{1}{\sqrt{\lambda_{i}\lambda_{j}}}v_{i}^{\top}B^{\top}Bv_{j} = \frac{1}{\sqrt{\lambda_{i}\lambda_{j}}}v_{i}^{\top}\lambda_{j}v_{j}\]

Proof continued. \[u_{i}\cdot u_{j} = \frac{1}{\sqrt{\lambda_{i}\lambda_{j}}}v_{i}^{\top}\lambda_{j}v_{j} = \frac{\lambda_{j}}{\sqrt{\lambda_{i}\lambda_{j}}} v_{i}\cdot v_{j} = \begin{cases} 1 & i=j,\\ 0 & i\neq j\end{cases}\]

From this we see that \(\{u_{1},\ldots,u_{r}\}\) is an orthonormal set. Next, note that

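\[BB^{\top} u_{i} = \frac{BB^{\top}Bv_{i}}{\sqrt{\lambda_{i}}} = \frac{B\lambda_{i}v_{i}}{\sqrt{\lambda_{i}}} = \lambda_{i}u_{i}.\] Thus each \(u_{i}\) is an eigenvector of \(BB^{\top}\) with eigenvalue \(\lambda_{i}>0\), so \(u_{i} = BB^{\top}(u_{i}/\lambda_{i})\in C(BB^{\top})\). Since \(r=\text{rank}(B)=\text{rank}(BB^{\top})\), the \(r\) orthonormal vectors \(\{u_{1},\ldots,u_{r}\}\) form an orthonormal basis for \(C(BB^{\top})\). We can complete this to an orthonormal basis \(\{u_{1},\ldots,u_{m}\}\) for \(\R^{m}\). By the definition of \(u_{i}\), for \(i=1,\ldots,r\) we have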

\[Bv_{i} = \sqrt{\lambda_{i}}u_{i}\]

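Set \(\sigma_{i} = \sqrt{\lambda_{i}}\) for \(i=1,\ldots,r\), so that \(Bv_{i}=\sigma_{i}u_{i}\). For \(i>r\) we have \(\|Bv_{i}\|^{2} = v_{i}^{\top}B^{\top}Bv_{i} = \lambda_{i} = 0\), so \(Bv_{i}=0\). Since \(\{v_{1},\ldots,v_{n}\}\) is an orthonormal basis, \(\sum_{i=1}^{n}v_{i}v_{i}^{\top} = I\), and therefore

\[B = \sum_{i=1}^{n}(Bv_{i})v_{i}^{\top} = \sum_{i=1}^{r}\sigma_{i}u_{i}v_{i}^{\top}.\]

Setting \(\sigma_{i}=0\) for \(r<i\leq p\) gives the stated form, and this completes the proof. \(\Box\)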
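The construction in the proof can be mirrored in a few lines of NumPy. This is a sketch for illustration only: the function name `outer_product_svd` and the rank tolerance `tol` are assumptions, and the test matrix is a random rank-\(2\) matrix of size \(4\times 3\), so \(r<p\). Library routines such as `numpy.linalg.svd` (which calls LAPACK) do not form \(B^{\top}B\), because that squares the condition number and loses accuracy in the small singular values.

```python
import numpy as np

def outer_product_svd(B, tol=1e-10):
    """Follow the proof: eigen-decompose B^T B, then set u_i = B v_i / sqrt(lambda_i).

    Returns sigma (length r), U_r (m x r), V_r (n x r) with B = sum_i sigma_i u_i v_i^T.
    """
    lam, V = np.linalg.eigh(B.T @ B)          # B^T B is symmetric: orthonormal eigenvectors
    order = np.argsort(lam)[::-1]              # sort so lambda_1 >= lambda_2 >= ... >= 0
    lam, V = np.clip(lam[order], 0.0, None), V[:, order]
    r = int(np.sum(lam > tol * max(lam[0], 1.0)))   # numerical rank r
    sigma = np.sqrt(lam[:r])                   # sigma_i = sqrt(lambda_i)
    U_r = (B @ V[:, :r]) / sigma               # column i is u_i = B v_i / sigma_i
    return sigma, U_r, V[:, :r]

rng = np.random.default_rng(1)
B = rng.standard_normal((4, 2)) @ rng.standard_normal((2, 3))   # rank 2, size 4 x 3
sigma, U_r, V_r = outer_product_svd(B)
recon = sum(s * np.outer(u, v) for s, u, v in zip(sigma, U_r.T, V_r.T))
print(len(sigma), np.allclose(recon, B), np.allclose(U_r.T @ U_r, np.eye(len(sigma))))
```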

Example. Consider the matrix

\[B = \begin{bmatrix} 7 & 3 & 7 & 3\\ 3 & 7 & 3 & 7\end{bmatrix}\]

We compute

\[B^{\top}B = \begin{bmatrix} 58 & 42 & 58 & 42\\ 42 & 58 & 42 & 58\\ 58 & 42 & 58 & 42\\ 42 & 58 & 42 & 58\end{bmatrix}\]

Since this matrix is symmetric, it has a spectral decomposition. It is positive semidefinite (as is every matrix of the form \(B^{\top}B\)) but not positive definite, since \(0\) is an eigenvalue:

\[B^{\top}B = \frac{1}{2}\begin{bmatrix} 1 & 1 & 1 & 1\\ 1 & -1 & 1 & -1\\ 1 & 1 & -1 & -1\\ 1 & -1 & -1 & 1\end{bmatrix}\begin{bmatrix} 200 & 0 & 0 & 0\\ 0 & 32 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0\end{bmatrix}\frac{1}{2}\begin{bmatrix} 1 & 1 & 1 & 1\\ 1 & -1 & 1 & -1\\ 1 & 1 & -1 & -1\\ 1 & -1 & -1 & 1\end{bmatrix}\]

Note that the spectral decomposition is not unique. The eigenvalue \(0\) has a two-dimensional eigenspace, and choosing a different orthonormal basis for it gives another spectral decomposition:

\[B^{\top}B = \frac{1}{2}\begin{bmatrix} 1 & 1 & \sqrt{2} & 0\\ 1 & -1 & 0 & \sqrt{2}\\ 1 & 1 & -\sqrt{2} & 0\\ 1 & -1 & 0 & -\sqrt{2}\end{bmatrix}\begin{bmatrix} 200 & 0 & 0 & 0\\ 0 & 32 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0\end{bmatrix}\frac{1}{2}\begin{bmatrix} 1 & 1 & 1 & 1\\ 1 & -1 & 1 & -1\\ \sqrt{2} & 0 & -\sqrt{2} & 0\\ 0 & \sqrt{2} & 0 & -\sqrt{2} \end{bmatrix}\]
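Both spectral decompositions of \(B^{\top}B\) given above can be checked directly. The sketch calls the two orthogonal matrices `Q1` and `Q2` (names chosen here) and verifies \(Q^{\top}Q=I\) and \(QDQ^{\top}=B^{\top}B\) for each. Note that `Q1` is symmetric, which is why the same matrix appears on both sides in the first decomposition.

```python
import numpy as np

B = np.array([[7, 3, 7, 3],
              [3, 7, 3, 7]], dtype=float)
BtB = B.T @ B

s2 = np.sqrt(2.0)
Q1 = 0.5 * np.array([[1, 1, 1, 1],
                     [1, -1, 1, -1],
                     [1, 1, -1, -1],
                     [1, -1, -1, 1]])
Q2 = 0.5 * np.array([[1, 1, s2, 0],
                     [1, -1, 0, s2],
                     [1, 1, -s2, 0],
                     [1, -1, 0, -s2]])
D = np.diag([200.0, 32.0, 0.0, 0.0])

for Q in (Q1, Q2):
    assert np.allclose(Q.T @ Q, np.eye(4))    # columns are orthonormal
    assert np.allclose(Q @ D @ Q.T, BtB)      # a spectral decomposition of B^T B
print("both decompositions check out")
```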

Example continued. Let

\[V = \frac{1}{2}\begin{bmatrix} 1 & 1 & 1 & 1\\ 1 & -1 & 1 & -1\\ 1 & 1 & -1 & -1\\ 1 & -1 & -1 & 1\end{bmatrix}\]

and let \(v_{i}\) denote the \(i\)th column of \(V\).

The singular values are \(\sigma_{1} = \sqrt{200} = 10\sqrt{2}\) and \(\sigma_{2} = \sqrt{32} = 4\sqrt{2}\), and the left singular vectors are

\[u_{1} = \frac{1}{10\sqrt{2}}Bv_{1} = \frac{1}{\sqrt{2}}\begin{bmatrix}1\\ 1\end{bmatrix}\quad\text{and}\quad u_{2} = \frac{1}{4\sqrt{2}}Bv_{2} = \frac{1}{\sqrt{2}}\begin{bmatrix}1\\ -1\end{bmatrix}\]

\[B = \sigma_{1}u_{1}v_{1}^{\top} + \sigma_{2}u_{2}v_{2}^{\top}=\begin{bmatrix} 5 & 5 & 5 & 5\\ 5 & 5 & 5 & 5\end{bmatrix} + \begin{bmatrix} 2 & -2 & 2 & -2\\ -2 & 2 & -2 & 2\end{bmatrix}\]
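The result can be compared with a library SVD. `numpy.linalg.svd` returns the singular values in decreasing order; its singular vectors may differ from the ones above by a sign, but each term \(\sigma_{i}u_{i}v_{i}^{\top}\) is unchanged when \(u_{i}\) and \(v_{i}\) are both negated, and here \(\sigma_{1}\neq\sigma_{2}\), so the two rank-one terms should match the ones computed by hand.

```python
import numpy as np

B = np.array([[7, 3, 7, 3],
              [3, 7, 3, 7]], dtype=float)
U, s, Vt = np.linalg.svd(B)      # s is sorted in decreasing order
print(s)                          # should be close to [10*sqrt(2), 4*sqrt(2)]

# Outer-product expansion: term i is sigma_i u_i v_i^T.
terms = [s[i] * np.outer(U[:, i], Vt[i, :]) for i in range(len(s))]
print(np.round(terms[0], 10))
print(np.round(terms[1], 10))
```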

Example. Let

\[B = \begin{bmatrix} 2 & -1\\ 2 & 1\end{bmatrix}.\]

\[B^{\top}B = \begin{bmatrix} 8 & 0\\ 0 & 2\end{bmatrix} = \begin{bmatrix} 1 & 0\\ 0 & 1\end{bmatrix}\begin{bmatrix} 8 & 0\\ 0 & 2\end{bmatrix}\begin{bmatrix} 1 & 0\\ 0 & 1\end{bmatrix}\]

\[v_{1} = \begin{bmatrix}1\\ 0\end{bmatrix}\quad\text{and}\quad v_{2} = \begin{bmatrix} 0\\ 1\end{bmatrix}\]

\[u_{1} = \frac{1}{\sqrt{2}}\begin{bmatrix}1\\ 1\end{bmatrix}\quad\text{and}\quad u_{2} = \frac{1}{\sqrt{2}}\begin{bmatrix} -1\\ 1\end{bmatrix}\]

\[B = 2\sqrt{2}\left(\frac{1}{\sqrt{2}}\begin{bmatrix} 1 & 0\\ 1 & 0\end{bmatrix}\right) + \sqrt{2}\left(\frac{1}{\sqrt{2}}\begin{bmatrix} 0 & -1\\ 0 & 1\end{bmatrix}\right)\]

\[B = \frac{1}{\sqrt{2}}\begin{bmatrix} 1 & -1\\ 1 & 1\end{bmatrix}\begin{bmatrix} 2\sqrt{2} & 0\\ 0 & \sqrt{2}\end{bmatrix}\begin{bmatrix}1 & 0\\ 0 & 1\end{bmatrix}\]
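The outer-product form and the matrix form are the same factorization: expanding \(U\Sigma V^{\top}\) column by column gives \(\sum_{i}\sigma_{i}u_{i}v_{i}^{\top}\). The sketch below builds \(U\), \(\Sigma\), and \(V\) exactly as in this example and checks that both forms reproduce \(B\).

```python
import numpy as np

r2 = np.sqrt(2.0)
B = np.array([[2.0, -1.0],
              [2.0, 1.0]])
U = np.array([[1.0, -1.0],
              [1.0, 1.0]]) / r2          # columns u1, u2
Sigma = np.diag([2.0 * r2, r2])          # sigma1 = 2*sqrt(2), sigma2 = sqrt(2)
V = np.eye(2)                            # columns v1, v2

matrix_form = U @ Sigma @ V.T
outer_form = sum(Sigma[i, i] * np.outer(U[:, i], V[:, i]) for i in range(2))
print(np.allclose(matrix_form, B), np.allclose(outer_form, B))  # True True
```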

Matrix form:

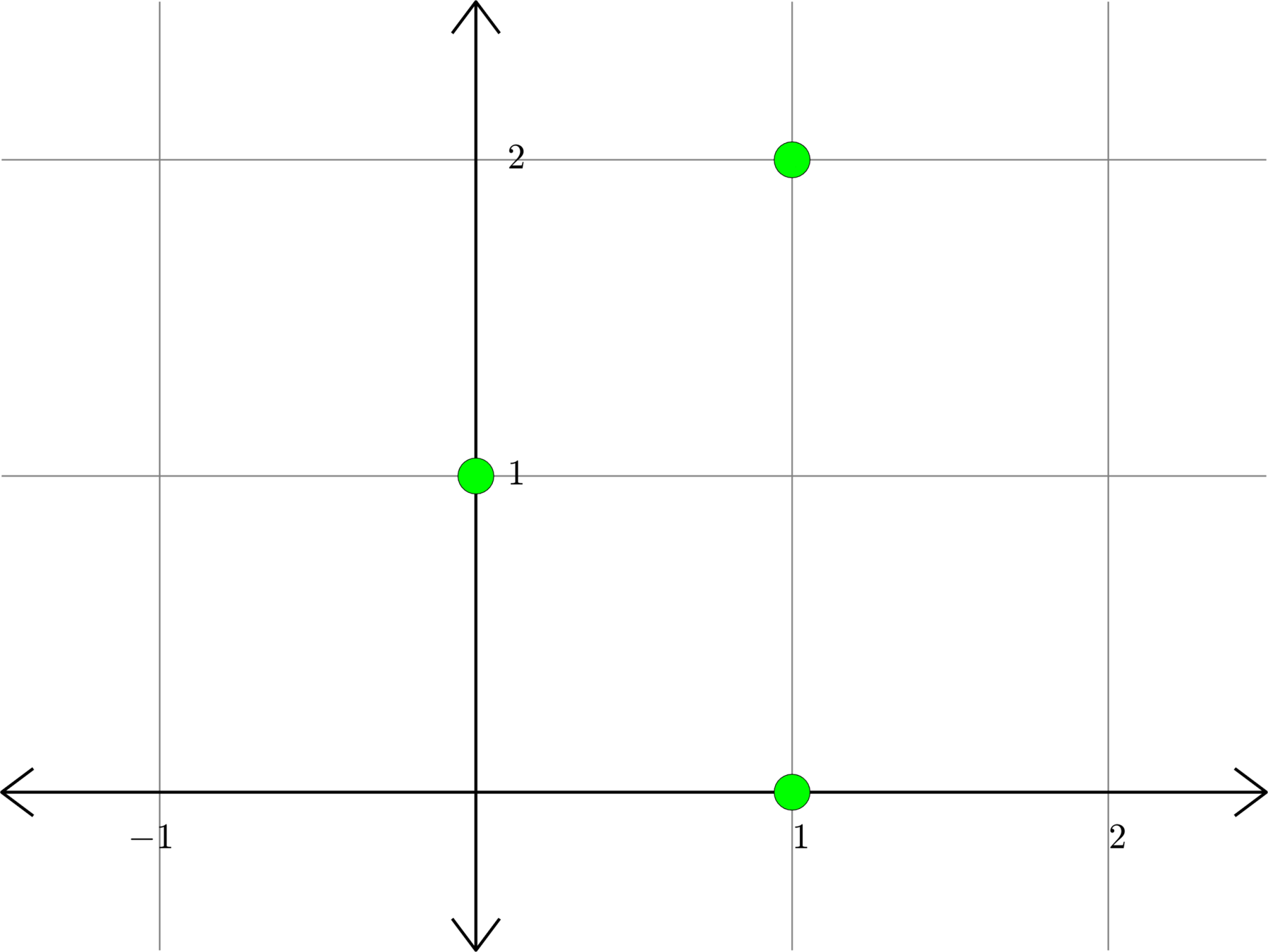

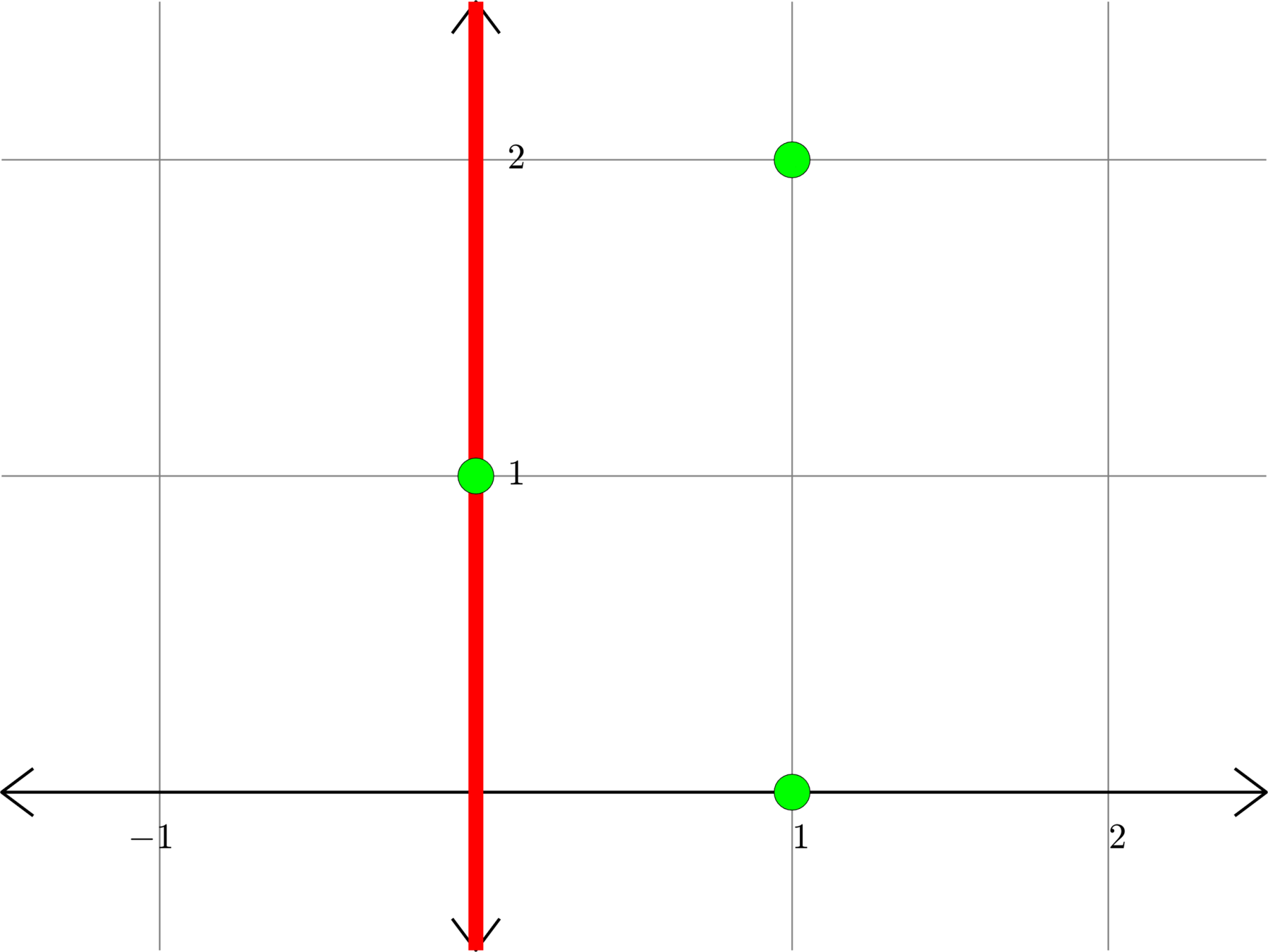

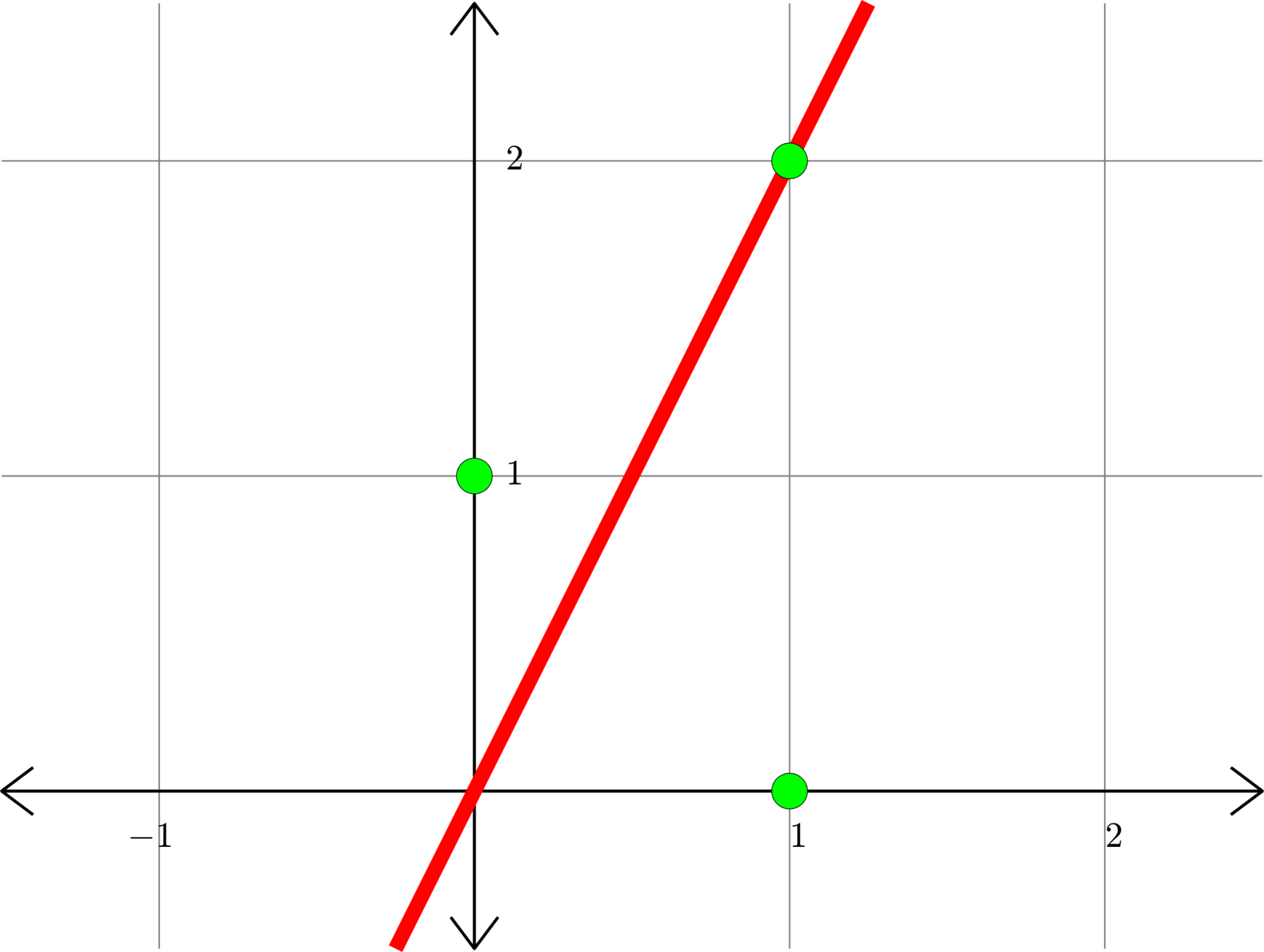

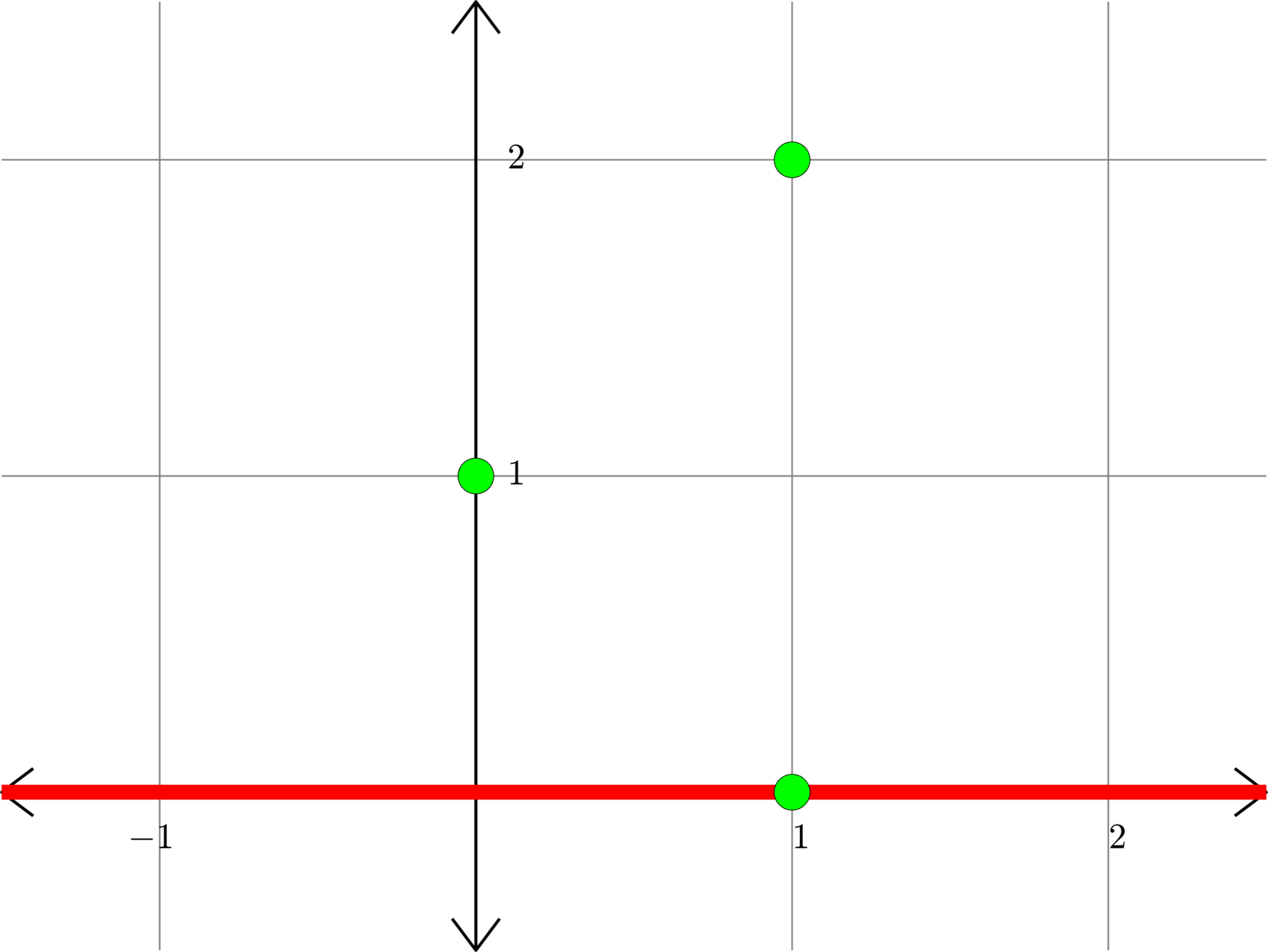

Example continued. Let \(\displaystyle{B = \begin{bmatrix} 2 & -1\\ 2 & 1\end{bmatrix}.}\)

In the figure, \(B(\text{blue vector}) = \text{red vector}\), and the blue vectors range over all vectors of length \(1\).
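The picture can be reproduced numerically. The sketch maps many unit vectors through \(B\) and checks that the longest and shortest images have lengths \(\sigma_{1}=2\sqrt{2}\) and \(\sigma_{2}=\sqrt{2}\). It also checks a standard consequence of \(B=U\Sigma V^{\top}\) that is not stated on the slide: in the coordinates \((a,b)\) given by \(u_{1},u_{2}\), the red vectors satisfy \((a/\sigma_{1})^{2}+(b/\sigma_{2})^{2}=1\), i.e. they trace an ellipse with semi-axes \(\sigma_{1}u_{1}\) and \(\sigma_{2}u_{2}\). The number of sample points is an arbitrary choice.

```python
import numpy as np

r2 = np.sqrt(2.0)
B = np.array([[2.0, -1.0],
              [2.0, 1.0]])
U = np.array([[1.0, -1.0],
              [1.0, 1.0]]) / r2               # columns u1, u2 from the example
sigma = np.array([2.0 * r2, r2])

t = np.linspace(0.0, 2.0 * np.pi, 3601)
X = np.stack([np.cos(t), np.sin(t)])          # "blue vectors": unit vectors in R^2
Y = B @ X                                     # "red vectors": B applied to each

lengths = np.linalg.norm(Y, axis=0)
print(lengths.max(), lengths.min())           # close to 2*sqrt(2) and sqrt(2)

# In the coordinates of u1, u2 the red vectors satisfy (a/sigma1)^2 + (b/sigma2)^2 = 1.
a, b = U.T @ Y
print(np.allclose((a / sigma[0]) ** 2 + (b / sigma[1]) ** 2, 1.0))   # True
```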

Linear Algebra Day 26

By John Jasper
