Real Analysis

Part 1:

Sets

(See Chapter 1 in Book of Proof)

The central objects in mathematics are sets. A set is a collection of things. The things in a set are called elements.

Sets

Often, we denote a set by simply writing all the elements between \(\{\}\) ("curly braces") separated by commas. For example,

\[\{1,2,4,5\}\]

is the set with four elements, these elements being \(1,2,4,\) and \(5\).

We often give sets names, frequently a single capital Roman letter. For example, \[B = \{1,2,4,5\}.\]

To express the statement "\(x\) is an element of \(A\)" we write \(x\in A\). Thus, given the set \(B\) defined above, we see that \(1\in B\) is a true statement, and \(3\in B\) is a false statement.

We will also write \(x\notin A\) for the statement "\(x\) is not an element of \(A\)."

Equality of sets

Two sets \(A\) and \(B\) are equal (they are the same set) if they contain exactly the same elements, that is, the following two statements hold:

If \(x\in A\), then \(x\in B\).

If \(x\in B\), then \(x\in A\).

In this case we write \(A=B\).

Important example: Consider the sets \(A=\{1,2,2,3\}\) and \(B=\{1,2,3\}\). We can easily see that every element of \(A\) is an element of \(B\), and every element of \(B\) is an element of \(A\). Thus

\[\{1,2,2,3\}=\{1,2,3\}.\]

An element is either in a set or it is not. Sets cannot contain multiple copies of an element.

Subsets

Given two sets \(A\) and \(B\), if every element of \(A\) is also an element of \(B\), then we say that \(A\) is a subset of \(B\), and write \(A\subset B\) or \(A\subseteq B\) (these both mean the exact same thing). For example,

\[\{1,2\}\subset\{1,2,4,5\}\]

but \(\{1,2,3\}\) is not a subset of \(\{1,2,4,5\}\), since \(3\in\{1,2,3\}\) and \(3\notin\{1,2,4,5\}\).

Note that the statement "\(A=B\)" can be rewritten as "\(A\subset B\) and \(B\subset A.\)" This will be very useful later.
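On finite sets, the definitions of subset and set equality can be checked mechanically. The following is a minimal sketch using Python's built-in `set` type; the helper names `is_subset` and `sets_equal` are illustrative choices and do not come from the notes.

```python
def is_subset(A, B):
    """Return True if every element of A is also an element of B."""
    return all(x in B for x in A)


def sets_equal(A, B):
    """A = B exactly when A is a subset of B and B is a subset of A."""
    return is_subset(A, B) and is_subset(B, A)


B = {1, 2, 4, 5}
print(is_subset({1, 2}, B))     # True
print(is_subset({1, 2, 3}, B))  # False, because 3 is not in B

# Listing an element twice does not create a second copy.
print(sets_equal({1, 2, 2, 3}, {1, 2, 3}))  # True
print(len({1, 2, 2, 3}))                    # 3
```

Checking equality through two subset checks mirrors the "\(A\subset B\) and \(B\subset A\)" strategy used in the proofs later on.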

Ellipsis notation

The set of integers is denoted \(\mathbb{Z}\). The set of positive integers, also called the natural numbers or the naturals, is denoted \(\mathbb{N}\) or \(\mathbb{Z}^{+}\).

We will sometimes write sets like \(\mathbb{N}\) and \(\mathbb{Z}\) with ellipsis notation, as follows,

\[\N=\{1,2,3,\ldots\}\qquad \Z = \{\ldots,-2,-1,0,1,2,3,\ldots\}\]

In this notation, we list the first several elements of a set, until there is an apparent pattern, then write an ellipsis (...). After the ellipsis we write the final element in the pattern. If no element is written after the ellipsis, then the list of elements goes on without end.

Examples.

- \(\{2,4,6,8,\ldots\}\) is the set of even positive integers.

- \(\{3,7,11,15,\ldots,43\}=\{3,7,11,15,19,23,27,31,35,39,43\}\)

Problems with ellipsis notation

Consider the set

\[C:=\{2,4,8,\ldots\}.\]

Questions:

Is \(16\in C\)? Is \(14\in C\)?

What is the pattern? Is the \(n\)th term in the sequence \(2^{n}\) or \(n^2-n+2\) or something else?

It's not clear!

Suppose \(N\in\mathbb{N}\) and define the set

\[D:=\{2,4,6,\ldots,2N\}.\] If \(N=5\), then

\[D = \{2,4,6,\ldots,10\}=\{2,4,6,8,10\}\]

but if \(N=2\), then

\[D = \{2,4,6,\ldots,4\}(?)\]

We will interpret this set as \(\{2,4\}\).

Set builder notation

Let \(A\) be a set, and \(S(x)\) denote a statement whose truth value depends on \(x\in A\). The set of \(x\in A\) such that \(S(x)\) is true is denoted

\[\{x\in A : S(x)\}\quad\text{or}\quad \{x\in A\mid S(x)\}\quad\text{or}\quad \{x : x\in A\text{ and }S(x)\}.\]

Both the colon and the vertical line are read as "such that" or "with the property that."

Example. Consider the set of even positive integers:

\[\{x\in\mathbb{N} : x\text{ is even}\}.\]

This would be read:

"The set of \(x\) in the natural numbers such that \(x\) is even."

Using the notation from the above definition, we have \(A=\N\), and \(S(x)\) is the statement "\(x\) is even."

More generally, let \(A\) be a set. For each \(x\in A\) let \(f(x)\) be an expression that depends on \(x\) and \(S(x)\) denote a statement whose truth value depends on \(x\in A\). The set of all elements of the form \(f(x)\) with the property that \(x\in A\) and \(S(x)\) is true is denoted

\[\{f(x) : x\in A\text{ and }S(x)\}.\]

Example. Consider the set of even positive integers:

\[\{2x: x\in \N\}.\]

In the notation of the above definition we have \(A=\N\), \(f(x) = 2x\), and \(S(x)\) is true for all \(x\in\N\).

Using set builder notation we can disambiguate the two possible readings of the set \(C=\{2,4,8,\ldots\}\) considered earlier:

\[\{2^{n} : n\in\mathbb{N}\}\quad\text{and}\quad\{n^2-n+2 : n\in\mathbb{N}\}.\]
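To see why the ellipsis was ambiguous, one can tabulate both candidate rules. This is a small sketch; the function names `power_rule` and `quadratic_rule` are made up for the illustration.

```python
def power_rule(n):
    """n-th term under the reading 2^n."""
    return 2 ** n


def quadratic_rule(n):
    """n-th term under the reading n^2 - n + 2."""
    return n * n - n + 2


first_terms = range(1, 4)
print([power_rule(n) for n in first_terms])      # [2, 4, 8]
print([quadratic_rule(n) for n in first_terms])  # [2, 4, 8]

# The two rules agree on the listed elements and first differ at n = 4.
print(power_rule(4), quadratic_rule(4))          # 16 14
```

Both rules produce \(2,4,8\), so the listed elements alone cannot decide whether \(16\) or \(14\) belongs to the set.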

Examples.

- \(\{2n-1 : n\in\N\text{ and }n\leq 5\} = \{1,3,5,7,9\}\)

- \(\{25-4n : n\in\N\text{ and }n\leq 10\text{ and } n>5\} = \{1,-3,-7,-11,-15\}\)
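Python's set comprehensions follow set builder notation closely, so the two examples above can be reproduced directly. This is only a sketch: since both conditions cap \(n\) at \(10\), a finite range stands in for \(\N\), and the variable names are illustrative.

```python
# n ranges over the natural numbers; both conditions bound n by 10,
# so the finite range 1..10 is enough here.
naturals_up_to_10 = range(1, 11)

first = {2 * n - 1 for n in naturals_up_to_10 if n <= 5}
second = {25 - 4 * n for n in naturals_up_to_10 if n <= 10 and n > 5}

print(sorted(first))                 # [1, 3, 5, 7, 9]
print(sorted(second, reverse=True))  # [1, -3, -7, -11, -15]
```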

Consider the set

\[\{x\in\mathbb{R} : x^{2}+1 = 0\}.\]

There does not exist a real number \(x\) such that \(x^{2}+1=0\), and hence this set does not contain any elements!

The set containing no elements is called the empty set. The empty set is denoted \(\varnothing\) or \(\{\}\).

Part 2:

Proofs and Logic

(See Chapters 2,4,5 & 6 in Book of Proof)

Proposition 1. \(\{n\in\mathbb{Z} : n+1\text{ is odd}\} = \{2n : n\in\mathbb{Z}\}.\)

Proving sets are equal.

Proof. First we will show that \(\{n\in\mathbb{Z} : n+1\text{ is odd}\} \subset \{2n : n\in\mathbb{Z}\}.\) Let \(m\in\{n\in\mathbb{Z} : n+1\text{ is odd}\}\) be arbitrary. This implies that \(m+1\) is odd, that is, there exists \(k\in\Z\) such that \(m+1=2k+1.\) Subtracting \(1\) from both sides we deduce \(m=2k\), and hence \(m\in \{2n : n\in\Z\}.\)

Next, we will show \(\{2n:n\in\mathbb{Z}\}\subset\{n\in\mathbb{Z} : n+1\text{ is odd}\}\).

Let \(p\in\{2n:n\in\mathbb{Z}\}\) be arbitrary. Then, there exists \(q\in\mathbb{Z}\) such that \(p=2q\), and hence \[p+1 = 2q+1.\] Since \(q\in\mathbb{Z}\), we conclude that \(p+1\) is odd, and hence

\(p\in\{n\in\mathbb{Z} : n+1\text{ is odd}\}.\) \(\Box\)
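A finite computation cannot replace the proof, but it is a useful sanity check of Proposition 1. The sketch below compares both sets inside a window of integers; the window size `LIMIT` is an arbitrary choice made for this illustration.

```python
LIMIT = 50  # hypothetical window size, chosen only for illustration
half = LIMIT // 2

window = range(-LIMIT, LIMIT + 1)

# {n in Z : n + 1 is odd}, restricted to the window
left = {n for n in window if (n + 1) % 2 == 1}

# {2n : n in Z}, restricted to the same window
right = {2 * n for n in range(-half, half + 1)}

print(left == right)  # True
```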

Definition. An integer \(n\) is even if there exists an integer \(k\) such that \(n=2k\). An integer \(n\) is odd if there exists an integer \(p\) such that \(n=2p+1\).

Proposition 2. If \(n\in\Z\) is odd, then \(n^{2}\) is odd.

Proving implications (Direct proof)

\(A : n\) is odd

\(B : n^{2}\) is odd

We wish to prove the implication \(A\Rightarrow B\). We will use a direct proof, that is, we will assume \(A\) and argue directly to \(B\).

Proof. Suppose \(n\in\Z\) is odd. By definition, this means that there exists \(k\in\Z\) such that \(n=2k+1\). This implies

\[n^{2} = (2k+1)^{2} = 4k^{2}+4k+1 = 2(2k^{2}+2k)+1.\]

Since \(2k^{2}+2k\) is an integer, we conclude that \(n^{2}\) is odd. \(\Box\)

Proposition 3. Suppose \(n\in\Z\). If \(n^{2}\) is even, then \(n\) is even.

Proving implications (Contrapositive proof)

\(A : n^{2}\) is even

\(B : n\) is even

We wish to prove \(A\Rightarrow B\). Instead, we can prove the equivalent statement \((\sim\! B)\Rightarrow(\sim\!A)\), where \(\sim\!A\) denotes the negation of the statement \(A\). The statement \((\sim\! B)\Rightarrow(\sim\!A)\) is called the contrapositive of the statement \(A\Rightarrow B\).

Proof. Suppose \(n\in\Z\) is not even. This implies that \(n\) is odd. By the previous proposition we see that \(n^{2}\) is odd, and therefore \(n^{2}\) is not even. \(\Box\)

In words, the contrapositive of the above proposition takes the form:

Suppose \(n\in\Z\). If \(n\) is not even, then \(n^{2}\) is not even.

Proposition 4. Suppose \(a,b,c\in\Z\). If \(a^{2}+b^{2}=c^{2}\), then either \(a\) is even or \(b\) is even.

Proving implications (Proof by contradiction)

\(A : a^{2}+b^{2}=c^{2}\)

\(B : a\) is even or \(b\) is even

We wish to prove \(A\Rightarrow B\). Instead, we can prove the equivalent implication \(A\wedge(\sim\!B)\Rightarrow C\wedge(\sim\!C)\) for any(!) statement \(C\). That is, we assume \(A\) and \(\sim\!B\), and we argue that some statement \(C\) and its negation \(\sim\!C\) both hold. This is called proof by contradiction.

The statement we will actually prove is:

If there are integers \(a,b,c\) such that both \(a\) and \(b\) are odd, and \(a^{2}+b^{2}=c^{2}\), then \(4\) divides \(2\). Since it is obvious that \(4\) does not divide 2, we are proving \[A\wedge(\sim\!B)\Rightarrow C\wedge(\sim\!C)\] where \(C:\) 4 divides 2.

(Note that \(A\Rightarrow B\) is the same as \((\sim\!A)\vee B\), hence, in proof by contradiction, we begin by assuming the negation \(A\wedge(\sim\!B)\) of the statement we are trying to prove.)

Proof. Assume toward a contradiction that there are integers \(a,b,c\) such that \(a\) and \(b\) are both odd, and \(a^{2}+b^{2}=c^{2}\). By definition there exist integers \(k,p\in\Z\) such that \(a=2k+1\) and \(b=2p+1\). Note that

\[c^{2} = a^{2}+b^{2} = (2k+1)^{2}+(2p+1)^{2} = 4k^{2}+4k+4p^{2}+4p+2.\] From this we see that \(c^{2}\) is even, and by the previous proposition \(c\) is even. By definition there exists \(m\in\Z\) such that \(c=2m\). Using the above equation we see that

\[4(k^{2}+k+p^{2}+p)+2 = c^{2} = (2m)^{2} = 4m^{2}.\] Rearranging we have

\[4(m^{2}-k^{2}-k-p^{2}-p) = 2.\]

This last equation implies that \(2\) is divisible by \(4\). Since we know that \(2\) is not divisible by \(4\), this gives the desired contradiction.\(\Box\)
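The key arithmetic fact in this proof, that a sum of two odd squares leaves remainder \(2\) on division by \(4\) while a perfect square leaves remainder \(0\) or \(1\), can be observed numerically. The following is a sketch only; the search bound and the function name `odd_odd_solutions` are assumptions made for the illustration, and a finite search is evidence, not a proof.

```python
from math import isqrt


def odd_odd_solutions(limit):
    """List (a, b, c) with a, b odd, 1 <= a <= b <= limit, and a^2 + b^2 = c^2."""
    found = []
    for a in range(1, limit + 1, 2):
        for b in range(a, limit + 1, 2):
            total = a * a + b * b
            c = isqrt(total)
            if c * c == total:
                found.append((a, b, c))
    return found


# No solutions with both a and b odd, as Proposition 4 predicts.
print(odd_odd_solutions(200))  # []

# Sum of two odd squares modulo 4, and perfect squares modulo 4.
odd_sum_remainders = {(a * a + b * b) % 4 for a in range(1, 50, 2) for b in range(1, 50, 2)}
square_remainders = {(c * c) % 4 for c in range(100)}
print(odd_sum_remainders)  # {2}
print(square_remainders)   # {0, 1}
```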

Contrapositive vs. Contradiction

Proposition 3. Suppose \(n\in\Z\). If \(n^{2}\) is even, then \(n\) is even.

Proof. Assume toward a contradiction that \(n\) is not even and \(n^{2}\) is even. This implies that \(n\) is odd, that is \(n=2k+1\) for some \(k\in\Z\). Squaring, we obtain

\[n^{2} = 4k^{2}+4k+1=2(2k^{2}+2k)+1.\]

Since \(2k^{2}+2k\) is an integer, this shows that \(n^{2}\) is odd. This contradicts the fact that \(n^{2}\) is even.\(\Box\)

Proof by contradiction: \(A\wedge(\sim\!B)\Rightarrow C\wedge(\sim\!C)\)

Contrapositive: \((\sim\!B)\Rightarrow(\sim\!A)\)

Fake proof by contradiction: \(A\wedge(\sim\!B)\Rightarrow A\wedge(\sim\!A)\)

Moral: If your proof by contradiction concludes with \(\sim\!A\), then you should use proof by contrapositive instead!

Universal quantifier

\(A : n^{2}\) is even

\(B : n\) is even

Note that \(A\) and \(B\) above are not statements. Their truth value depends on \(n\).

These are more precisely called open sentences (or predicates or propositional functions). They should really have variables:

\(A(n) : n^{2}\) is even

\(B(n) : n\) is even

Recall the proposition:

Proposition 3. Suppose \(n\in\Z\). If \(n^{2}\) is even, then \(n\) is even.

Now, Proposition 3 can be more precisely written as \[\forall\,n\in\Z,\ A(n)\Rightarrow B(n).\]

Here the symbol \(\forall\) stands for "for all" or "for every," and is called the universal quantifier.

Note that \(A(n) : n^{2}\) is even, is false unless \(n\in\Z\).

Recall that for false statements \(A\), the statement \(A\Rightarrow B\) is true for any statement \(B\). (In this case \(A\Rightarrow B\) is called a vacuously true statement.)

Thus, in the statement \(\forall\,n\in\Z,\ A(n)\Rightarrow B(n)\) the universal quantifier isn't really necessary. It would be very common to see Proposition 3 written as

Proposition 3. If \(n^{2}\) is even, then \(n\) is even.

The universal quantifier "For all \(n\in\Z\)" is there; it's just not written.

Moral: In math we often omit the universal quantifier before an implication.

Existential quantifier

To say that an open sentence \(A(x)\) is true for all \(x\) in a set \(X\) we use the universal quantifier:

\[\forall\,x\in X,\ A(x).\]

In math, we would write this using words:

"For all \(x\in X\), \(A(x)\) is true."

To say that an open sentence \(A(x)\) is true for at least one \(x\) in a set \(X\) we use the existential quantifier \[\exists\, x\in X,\ A(x).\] In words:

"There exists \(x\in X\) such that \(A(x)\) is true."

Universally quantified statements may be more common, but here is an example of a statement that is not:

"The polynomial \(x^{5}+5x^3+10\) has a real root."

Do you see that this statement is of the form \(\exists\, x\in X,\ A(x)\)? What are the set \(X\) and the open sentence \(A(x)\)?

Order of quantifiers

Consider the following two statements:

\(A:\ \) For every even \(n\in\N\) there exists an odd \(m\in\N\) such that \(m>n\).

\(B:\ \) There exists odd \(m\in\N\) such that for all even \(n\in\N\), it is true that \(m>n\).

Because it sounds better in English, we will often write \(B\) as

\(B:\ \) There exists odd \(m\in\N\) such that \(m>n\) for all even \(n\in\N\).

Let \(E\) denote the set of even natural numbers, and let \(D\) denote the set of odd natural numbers. Using symbols, we can write \(A\) and \(B\) as follows: \[A: \forall\, n\in E,\ \exists\,m\in D,\ m>n\]

\[B: \exists\,m\in D,\ \forall\, n\in E,\ m>n\]

Moral: \(A\) is true, and \(B\) is false. The order of the quantifiers matters. You must think hard to figure out the meaning of a statement; there is no shortcut!
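One way to internalize the difference is to think of witnesses. In \(A\) the odd number \(m\) may depend on \(n\); in \(B\) a single \(m\) would have to beat every even \(n\). The sketch below encodes this with two hypothetical helper functions (`odd_above` and `even_above` are names invented here) and checks them on a finite sample.

```python
def odd_above(n):
    """Witness for A: given an even natural n, return an odd natural m with m > n."""
    return n + 1


def even_above(m):
    """Refutes B: given an odd natural m, return an even natural n that m does not exceed."""
    return m + 1


evens = range(2, 101, 2)
odds = range(1, 101, 2)

# For A: every sampled even n has an odd witness larger than it.
print(all(odd_above(n) % 2 == 1 and odd_above(n) > n for n in evens))  # True

# For B: every sampled odd m fails against the even number m + 1.
print(all(even_above(m) % 2 == 0 and not (m > even_above(m)) for m in odds))  # True
```

The witness in the first check changes with \(n\), which is exactly what the quantifier order in \(A\) allows and the order in \(B\) forbids.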

Proving universally quantified statements

Every universally quantified statement can be converted to an implication:

\[\forall\, x\in X,\ A(x)\]

is the same as

\[(x\in X)\Rightarrow A(x)\]

Thus, to prove this statement we take an "arbitrary" element \(x\in X\), then reason that \(A(x)\) is true for that \(x\). The proof looks like

Proof. Let \(x\in X\) be arbitrary.

\[\vdots\]

\[\vdots\]

Therefore, \(A(x)\). \(\Box\)

For example, let's say we want to prove the following statement:

Proposition. For every even number \(n\in\N\), the number \(n^2+1\) is odd.

We might start checking cases:

If \(n=2\), then \(n^2+1=5\). (\(n=2\cdot 1\), then \(n^2+1=2\cdot 2+1\))

If \(n=4\), then \(n^{2}+1=17\). (\(n=2\cdot 2\), then \(n^2+1=2\cdot 8+1\))

\(\vdots\)

But this will never prove the claim.

Instead, we take an arbitrary even number \(n\in\N\).

Now, using only our assumptions that \(n\) is an even natural number, we must argue that \(n^2+1\) is odd.

First, note that there exists \(k\in\N\) such that \(n=2k\).

Next, note that \(n^2+1 = 4k^2+1 = 2(2k^2)+1\).

Since \(2k^2\) is an integer, we conclude that \(n^2+1\) is odd.

Proof. Let \(n\in\N\) be an arbitrary even number. This implies that \(n=2k\) for some integer \(k\), and thus

\[n^2+1 = (2k)^{2}+1 = 4k^{2}+1 = 2(2k^{2})+1.\]

Since \(2k^2\) is an integer, we conclude that \(n^2+1\) is odd. \(\Box\)

Proving quantified statements

The following is an important property of the natural numbers and the real numbers. We will prove it later, but for now we will assume it is true.

Theorem 1.18. For every positive real number \(x\) there exists a natural number \(n\) such that \(n>x.\)

Proposition. For every \(\varepsilon>0\) there exists \(m\in\N\) such that \(\dfrac{1}{m}<\varepsilon\).

Proof. Let \(\varepsilon>0\) be arbitrary. Note that \(1/\varepsilon\) is a positive real number. By Theorem 1.18 there exists a natural number \(m\in\N\) such that \(m>\frac{1}{\varepsilon}\). This implies

\[\frac{1}{m}<\varepsilon.\]

\(\Box\)
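For a concrete \(\varepsilon\), a suitable \(m\) can be computed explicitly. The sketch below is an illustration rather than part of the proof: it assumes \(\varepsilon\) is a positive rational number (so that exact arithmetic is available), and the choice \(m=\lfloor 1/\varepsilon\rfloor+1\) is one convenient witness among many. The function name `witness` is hypothetical.

```python
from fractions import Fraction
from math import floor


def witness(eps):
    """Return a natural number m with 1/m < eps, for a positive rational eps."""
    # floor(1/eps) + 1 is a natural number strictly greater than 1/eps.
    return floor(1 / eps) + 1


for eps in (Fraction(5, 2), Fraction(1, 3), Fraction(1, 1000)):
    m = witness(eps)
    print(eps, m, Fraction(1, m) < eps)
```

The smaller \(\varepsilon\) is, the larger \(m\) must be, which is why the proposition is stated as "for every \(\varepsilon\) there exists \(m\)" and not the other way around.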

What would it look like if we tried to prove this statement by contradiction?

Statement: \(\forall\,\varepsilon>0,\ \exists\,m\in\N,\ \dfrac{1}{m}<\varepsilon.\)

Negation: \(\exists\,\varepsilon>0,\ \forall\,m\in\N,\ \dfrac{1}{m}\geq\varepsilon.\)

In words, the negation might read:

There exists \(\varepsilon>0\) such that \(\varepsilon\leq \dfrac{1}{m}\) for all \(m\in\mathbb{N}\).

Proposition. For every \(\varepsilon>0\) there exists \(m\in\N\) such that \(\dfrac{1}{m}<\varepsilon\).

Proof. Assume toward a contradiction that there exists \(\varepsilon>0\) such that \(\varepsilon\leq \dfrac{1}{m}\) for all \(m\in\mathbb{N}\). This implies that \(\dfrac{1}{\varepsilon}\geq m\) for all \(m\in\N\). By Theorem 1.18 there is a natural number \(k\in\N\) such that \(k>\dfrac{1}{\varepsilon}\). This is a contradiction. \(\Box\)

Part 3:

Real numbers

(See sections 1.1-1.19 in Apostol)

The Real Numbers

The real numbers form a set, denoted \(\mathbb{R}\), equipped with two binary operations, addition and multiplication, and a relation \(<\), that satisfy the following ten axioms.

Axiom 1 (commutative laws). \(x+y=y+x\), \(xy=yx\)

Axiom 2 (associative laws). \(x+(y+z)=(x+y)+z\), \(x(yz)=(xy)z\)

Axiom 3 (distributive law). \(x(y+z) = xy+xz\)

Axiom 4. For all \(x,y\in\mathbb{R}\), there exists a unique number \(z\in\mathbb{R}\) such that \(x+z=y\). This number \(z\) is denoted \(y-x\), and \(x-x\) is denoted \(0\). We also write \(-x\) for \(0-x\).

Proposition 1. If \(x,y\in\mathbb{R}\), then \(x-x=y-y\).

Proof. Note that \(y+x=x+y\) and \(y+x+(y-y)=x+y+(y-y) = x+y\). This shows that \(x\) and \(x+(y-y)\) are two numbers that can be added to \(y\) to obtain \(x+y\). By the uniqueness in Axiom 4, this shows that \(x=x+(y-y)\). Applying the uniqueness in Axiom 4 again we see that \(y-y=x-x\). \(\Box\)

Axiom 5. There exists at least one real number \(x\neq 0\). If \(x\) and \(y\) are two real numbers with \(x\neq 0\), then there exists a unique real number \(z\) such that \(xz=y\). This \(z\) is denoted by \(y/x\), and \(x/x\) is denoted \(1\).

One can similarly show that \(x/x=y/y\) for all \(x,y\in\mathbb{R}\setminus\{0\}\), that is, \(1\) is well-defined in Axiom 5.

The order axioms

Axiom 6. For \(x,y\in\R\) exactly one of the following is true:

\[x<y,\ y<x,\text{ or }x=y.\]

Axiom 7. If \(x<y\), then \(x+z<y+z\) for all \(z\in\mathbb{R}\).

Axiom 8. If \(x>0\) and \(y>0\), then \(xy>0\).

Axiom 9. If \(x>y\) and \(y>z\), then \(x>z\).

Note that \(x>y\) means \(y<x\). (That had to be stated?! Yep!) If \(x>0\) then we say that \(x\) is positive. We write \(x\leq y\) for the statement

\[x<y\text{ or }x=y,\]

and similarly for \(x\geq y\). If \(x\geq 0\) then we say that \(x\) is nonnegative. If \(x<0\) then we say that \(x\) is negative. If \(x\leq 0\) then we say that \(x\) is nonpositive.

Before we state Axiom 10, the completeness axiom, let's look at some consequences of the first nine axioms.

Proposition 2. If \(x,y,z\in\mathbb{R}\), then \(x(y-z)=xy-xz\).

Proof. By the distributive law

\[xz+x(y-z) = x(z+(y-z)) = x(y) = xy.\]

Also,

\[xz+(xy-xz) = xy.\]

By the uniqueness in Axiom 4 we see that \(x(y-z)=xy-xz\). \(\Box\)

Proposition 3. If \(x\in\mathbb{R}\), then \(x\cdot 0=0\).

Proof. \[x\cdot 0=x(x-x)=x^2-x^2=0.\quad \Box\]

Proposition 4. \(1\neq 0\).

Proof. By Axiom 5 there exists a real number \(x\neq 0\). Assume toward a contradiction that \(1=0\). Then \[0 = x\cdot0 = x\cdot 1 = x\neq 0,\] which is a contradiction. \(\Box\)

Proposition 5. \(1>0\).

Proof. By the previous proposition \(1\neq 0\). By Axiom 6, either \(1>0\) or \(1<0\). Assume toward a contradiction that \(1<0\). By Axiom 7 we have

\[0 = 1+(-1)<0+(-1) = -1,\]

that is, \(-1>0\). By Axiom 8, we see that \((-1)(-1)>0\). Next, note that

\[(-1)+(-1)^{2} = (-1)\cdot 1+(-1)^{2} = (-1)(1+(-1)) = (-1)\cdot 0 = 0,\]

and also \((-1)+1 = 0\). By the uniqueness in Axiom 4 we see that \((-1)^{2}=1\). Thus we have shown that both \(1<0\) and \(1>0\). This contradicts Axiom 6, and hence \(1>0\). \(\Box\)

Now, we define the natural numbers or positive integers to be the set \[\mathbb{N}:=\{1,1+1,1+1+1,\ldots\}\]

We write \(2\) for \(1+1\), \(3\) for \(1+1+1\), and so on...

By Axioms 7 and 9 we see that all natural numbers are positive.

(See Section 1.6 for a slightly more precise definition of \(\mathbb{N}\).)

Proposition 6. If \(a<b\) and \(c>0\), then \(ac<bc\).

Proof. Adding \(-a\) to both sides of \(a<b\) we obtain \(b-a>0\). By Axiom 8 we see that \(0<c(b-a)=cb-ca\). Adding \(ca\) to both sides we obtain the desired result. \(\Box\)

Proposition 7. If \(x>0\), then \(\dfrac{1}{x}>0\).

Proof. First, \(1/x\neq 0\), since otherwise \(1=x(1/x)=x\cdot 0=0\), contradicting Proposition 4. By Proposition 6 we see that if \(1/x<0\), then \[0=x\cdot 0>x(1/x)=1,\] contradicting Proposition 5. By Axiom 6 this shows that \(1/x>0\). \(\Box\)

Theorem 1.1. Let \(a,b\in\mathbb{R}\). If \(a\leq b+\varepsilon\) for all \(\varepsilon>0\), then \(a\leq b\).

Proof. We prove the contrapositive: if \(a>b\), then there exists \(\varepsilon>0\) such that \(a>b+\varepsilon\). Assume \(a>b\). Set \(\varepsilon = \frac{a-b}{2}\). Note that \(\varepsilon>0\) and

\[b+\varepsilon = b+\frac{a-b}{2} = \frac{a+b}{2}<\frac{a+a}{2} = a.\]

Thus, there exists \(\varepsilon>0\) such that \(b+\varepsilon<a\). \(\Box\)

The rational numbers

The integers are the numbers in the set

\[\mathbb{Z}:=\N\cup\{0\}\cup\{-n : n\in\mathbb{N}\}.\]

The rational numbers are the numbers in the set

\[\mathbb{Q} = \left\{\frac{m}{n} : m,n\in\Z\text{ and }n\neq 0\right\}.\]

If \(x\in\mathbb{R}\setminus\mathbb{Q}\), that is, \(x\) is a real number which is not a rational number, then \(x\) is called an irrational number.

Theorem. There does not exist \(x\in\mathbb{Q}\) such that \(x^{2} = 2\).

Proof. See Theorem 1.10 in the text. \(\Box\)

Note that it would be incorrect at this point to say that \(\sqrt{2}\notin\mathbb{Q}\) since we do not know that there is a real number \(x\) such that \(x^{2} = 2\).

Upper bounds

Definition 1.12. Let \(S\) be a set of real numbers. If there is a real number \(b\) such that \(x\leq b\) for all \(x\in S\), then \(b\) is called an upper bound for \(S\) and we say that \(S\) is bounded above by \(b\). If \(S\) has no upper bound, then we say that \(S\) is unbounded above.

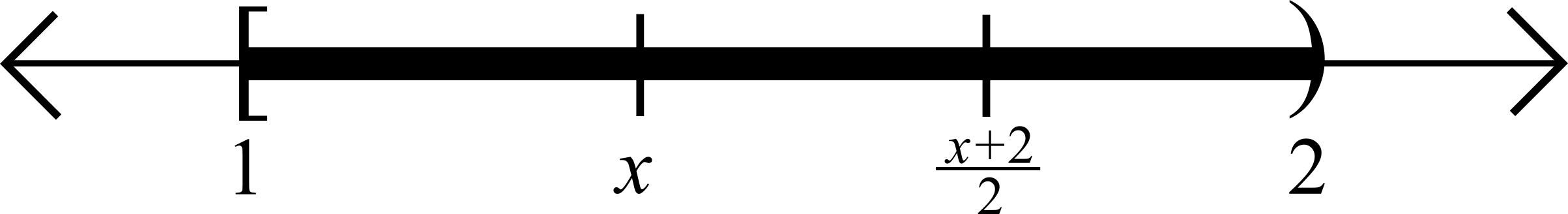

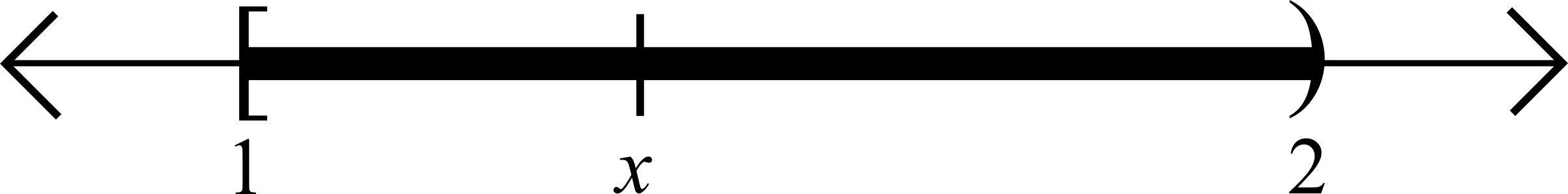

The interval \[[1,2)=\{x : 1\leq x<2\}\] is bounded above by \(2\).

It is also bounded above by \(138\).

It is not bounded above by \(3/2,\) since \(7/4\in[1,2),\) but \(7/4>3/2\).

Note that \(2\) is an upper bound, but any number less than \(2\) is not an upper bound. So \(2\) is the least upper bound for \([1,2)\).

In fact, a number \(x\) is an upper bound for \([1,2)\) if and only if \(x\geq 2\). Thus, the set \([1,2)\) does not contain any upper bounds for itself.

Maximum elements

If \(S\subset\mathbb{R}\) and there exists \(b\in S\) such that \(b\) is an upper bound for \(S\), then \(b\) is called the maximum element or largest element of \(S\). In this case we write

\[b=\max S.\]

Examples.

- The set \([1,2)\) does not have a maximum element.

- The set \((1,2]\) does have a maximum element, namely, \(\max(1,2]=2.\)

- The empty set \(\varnothing\) has no maximum element, even though every real number is an upper bound for it.

Proposition 8. If \(S\subset\mathbb{R}\) has a maximum element, then it is unique.

Proof. Suppose \(x\) and \(y\) are both maximum elements of \(S\). This implies that \(x\) and \(y\) are both upper bounds for \(S\), and both \(x\) and \(y\) are elements of \(S\). If \(x<y\), then \(x\) is not an upper bound, since \(y\in S\). If \(y<x\), then \(y\) is not an upper bound, since \(x\in S\). Thus, by Axiom 6 of the real numbers, \(x=y\). \(\Box\)

Proposition 9. \([1,2)\) does not have a maximum element.

Here's one way to parse this statement:

For all \(x\in\mathbb{R}\), either \(x\notin[1,2)\) or there exists \(y\in[1,2)\) such that \(y>x\).

\(A(x) : x\in[1,2)\) and \(B(x) : \exists\,y\in[1,2),\ y>x\)

With this notation the statement becomes \(\forall\,x\in\mathbb{R}, (\sim\!A(x))\vee B(x)\), which is equivalent to \(A(x)\Rightarrow B(x)\), so the proposition can be rephrased: If \(x\in[1,2)\), then there exists \(y\in[1,2)\) such that \(y>x\).

Proof. We will show that if \(x\in[1,2)\), then \(x\) is not an upper bound for \([1,2)\). Let \(x\in [1,2)\) be arbitrary. Define the number \[y=\dfrac{x+2}{2}.\]

Note that

\[x=\frac{x+x}{2}<\frac{x+2}{2}<\frac{2+2}{2}=2\]

and

\[\frac{x+2}{2}\geq \frac{1+2}{2}=\frac{3}{2}.\]

This shows that \(y\in[1,2)\) and \(y>x\). \(\Box\)
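The construction in this proof is explicit: \(y\) is the midpoint of \(x\) and \(2\). The sketch below evaluates it for a sample point using exact rational arithmetic; the function name `larger_element` and the sample value are assumptions made for the illustration.

```python
from fractions import Fraction


def larger_element(x):
    """Given x in [1, 2), return the midpoint of x and 2."""
    return (x + 2) / 2


x = Fraction(199, 100)   # a point of [1, 2) that is already close to 2
y = larger_element(x)

print(y)                   # 399/200
print(1 <= y < 2, y > x)   # True True
```

However close \(x\) is to \(2\), the midpoint still lies strictly between \(x\) and \(2\), so no element of \([1,2)\) can be the largest.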

Supremum and infimum

Definition 1.13. Let \(S\subset\mathbb{R}\) be bounded above. A real number \(b\) is called a least upper bound for \(S\) if it has the following two properties:

a) \(b\) is an upper bound for \(S\).

b) No number less than \(b\) is an upper bound for \(S\).

A least upper bound for \(S\) is also called a supremum. If \(b\) is a supremum for \(S\), then we write \[b=\sup S.\]

Proposition 10. If \(S\subset\mathbb{R}\) has a supremum, then it is unique.

Proof. Suppose \(a\) and \(b\) are both least upper bounds for \(S\). If \(a\) and \(b\) are not equal, then either \(a<b\) or \(b<a\). If \(a<b\), then \(a\) is an upper bound for \(S\) that is less than \(b\), contradicting property (b) of Definition 1.13 for \(b\). Similarly, \(b<a\) contradicts property (b) for \(a\). Therefore \(a=b\). \(\Box\)

Proposition 11. \(\sup[1,2)=2\).

Proof. It is clear that \(2\) is an upper bound for \([1,2)\). Let \(b<2\) be arbitrary. If \(b<1\), then \(b\) is not an upper bound since \(1\in[1,2)\). For \(b\geq 1\), as in the proof of Proposition 9 we see that \(y=\frac{b+2}{2}\in[1,2)\) and \(y>b\). This shows that \(b\) is not an upper bound for \([1,2)\), that is, there is no upper bound for \([1,2)\) less than \(2\). \(\Box\)

Homework: Show \(\displaystyle{\sup\left\{1-\frac{1}{n} : n\in\N\right\} = 1}.\)

Caution: If \(b<1\), then it is not obvious that \[\dfrac{b+1}{2}\in\displaystyle{\left\{1-\frac{1}{n} : n\in\N\right\} }.\] So you have to be more careful to show that there is some \[y\in \displaystyle{\left\{1-\frac{1}{n} : n\in\N\right\} }\] such that \(y>b\).

The completeness axiom

Axiom 10. Every nonempty set \(S\subset\mathbb{R}\) which is bounded above has a supremum.

Theorem 1.14 (Approximation property). Let \(S\) be a nonempty set of real numbers with a supremum, say \(b=\sup S\). Then, for every \(a<b\) there exists \(x\in S\) such that \(a<x\leq b\).

Proof. (On the board and in the text).

Theorem 1.15 (Additive property). Given nonempty sets \(A,B\subset\mathbb{R}\), let \(C\) denote the set

\[C:=\{x+y : x\in A,\ y\in B\}.\]

If \(A\) and \(B\) both have supremums, then \(C\) has a supremum, and

\[\sup C = \sup A+\sup B\]

Proof. (On the board and in the text).

Theorem 12. There is a positive real number \(b\) such that \(b^{2}=2\). We will call this number \(\sqrt{2}\).

Proof. (On the board). \(\Box\)

We have already shown that \(\sqrt{2}\) is not rational, thus there exist numbers in \(\R\setminus\mathbb{Q}\). Such numbers are called irrational numbers.

Theorem 1.10. If \(n\) is a positive integer which is not a perfect square, then \(\sqrt{n}\) is irrational.

In the book it is proven that there is no rational number \(a\) such that \(a^{2} = n\). It can be proven by a similar argument to the above theorem that there is a real number \(a\) such that \(a^{2}=n\).

Theorem 1.17. The set \(\mathbb{N}\) of positive integers is unbounded above.

Theorem 1.18. For every positive real number \(x\) there exists a natural number \(n\) such that \(n>x.\)

Theorem 1.19 (The Archimedean property of \(\mathbb{R}\)). If \(x>0\) and \(y\in\mathbb{R}\), then there exists \(n\in\mathbb{N}\) such that \(nx>y\).

Integers and completeness

Assuming Theorem 1.17, we see that Theorem 1.18 is essentially a restatement of Theorem 1.17.

Theorem 1.19 follows from Theorem 1.18: given \(x>0\) and \(y\in\mathbb{R}\), if \(y\leq 0\) then \(n=1\) works; otherwise we apply Theorem 1.18 to \(y/x>0\) to obtain \(n\in\mathbb{N}\) such that \(n>y/x\), then multiply both sides by \(x\).
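The reduction of the Archimedean property to Theorem 1.18 can be traced on concrete numbers. The following sketch assumes rational inputs so the arithmetic is exact; the function name `archimedean_n` and the use of the floor function to pick \(n>y/x\) are illustrative choices, not part of the notes.

```python
from fractions import Fraction
from math import floor


def archimedean_n(x, y):
    """Return a natural number n with n * x > y, assuming x > 0."""
    if y <= 0:
        return 1                 # n = 1 works because x > 0 >= y
    return floor(y / x) + 1      # a natural number strictly greater than y / x


pairs = [
    (Fraction(2), Fraction(7)),
    (Fraction(1, 100), Fraction(3)),
    (Fraction(1), Fraction(-5)),
]
for x, y in pairs:
    n = archimedean_n(x, y)
    print(x, y, n, n * x > y)
```

In words: no matter how small the step \(x\) is, finitely many steps eventually pass any target \(y\).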

Theorem 13. Every nonempty set \(X\subset\mathbb{N}\) has a minimal element.

Proof. Since \(X\) is nonempty and bounded below by \(1\), by HW3 Problem 1 we see that \(X\) has an infimum, say \(m=\inf X\).

By the analogue of Theorem 1.14 for infima there is a number \(n\in X\) such that \(n<m+1\), and hence \(n-1<m\). Since \(m\) is a lower bound for \(X\) we also see that \(m\leq n.\) This implies that

\[0\leq n-m<1.\]

Assume toward a contradiction that \(m<n\). Then \(n\) is not a lower bound for \(X\), so there is some \(k\in X\) such that \(m\leq k<n.\) This implies

\[0<n-k<m+1-k = (m-k)+1\leq 0+1 =1.\]

This is a contradiction, since \(n-k\in\Z\). Therefore \(m=n\), so \(m\in X\) and \(m\) is the minimal element of \(X\). \(\Box\)

Absolute value and the triangle inequality

Given a real number \(x\), the absolute value of \(x\), denoted by \(|x|\) is given by

\[|x| = \begin{cases} x & x\geq 0,\\ -x & x\leq 0.\end{cases}\]

It's often useful to be able to convert an inequality involving absolute values into one that does not involve absolute values:

Theorem 1.21. If \(a\geq 0\), then \(|x|\leq a\) if and only if \(-a\leq x\leq a\).

Example. Consider the set \(A=\{x\in\mathbb{R} : |2x-5|\leq 3\}\). Using Theorem 1.21 we see that \(x\in A\) if and only if \(-3\leq 2x-5\leq 3\), which holds if and only if \(1\leq x\leq 4\), that is

\[A = \{x\in\mathbb{R} : 1\leq x\leq 4\} = [1,4]\]
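The equivalence used in this example can be spot-checked on a grid of rational points. This is a sketch under the assumption that a sample of points in \([-5,10]\) with step \(1/10\) is representative; the helper names `in_A` and `in_interval` are invented for the illustration.

```python
from fractions import Fraction


def in_A(x):
    """Membership test for A = {x : |2x - 5| <= 3}."""
    return abs(2 * x - 5) <= 3


def in_interval(x):
    """Membership test for the closed interval [1, 4]."""
    return 1 <= x <= 4


# Rational grid -5.0, -4.9, ..., 10.0 (exact arithmetic, no rounding).
grid = [Fraction(k, 10) for k in range(-50, 101)]
print(all(in_A(x) == in_interval(x) for x in grid))  # True
```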

Theorem 1.22 (The triangle inequality). If \(x,y\in\mathbb{R}\), then \[|x+y|\leq |x|+|y|.\]

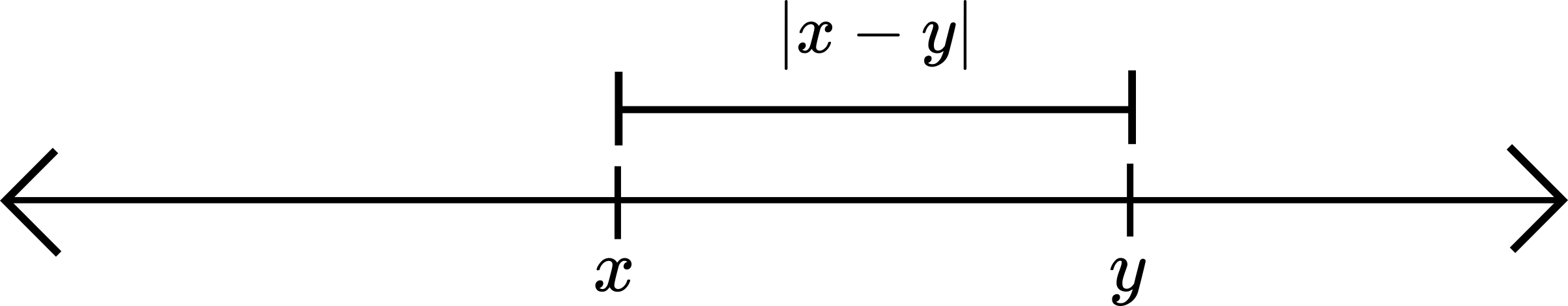

The absolute value gives us a notion of distance, in particular, we define the distance between two real numbers \(x\) and \(y\) to be the quantity \(|x-y|\).

One property that distance should satisfy is the following: Given three points \(a,b,\) and \(c\), the distance from \(a\) to \(c\) should not be greater than the distance from \(a\) to \(b\) plus the distance from \(b\) to \(c\). For this distance, this statement takes the following form:

\[|a-c|\leq |a-b|+|b-c|.\]

If we set \(x=a-b\) and \(y=b-c\), then \(a-c=x+y\), and the above inequality becomes

\[|x+y|\leq |x|+|y|.\]

This last inequality is called the triangle inequality.

Example. If \(x\) is a real number such that \(|x-4|<2\), then how large can \(|x+4|\) be?

Using the triangle inequality we have

\[|x+4| = |x-4+8|\leq |x-4|+|8| = |x-4|+8<2+8=10.\]

So, it appears that \(|x+4|<10\).

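Corollary (the reverse triangle inequality). If \(x,y\in\mathbb{R}\), then \[|x+y|\geq \big||x|-|y|\big|.\]

Proof. First, \(|x| = |x-y+y|\leq |x-y|+|y|\), so \(|x|-|y|\leq |x-y|\). Swapping \(x\) and \(y\) gives \(|y|-|x|\leq |x-y|\), and hence \(\big||x|-|y|\big|\leq |x-y|\). Replacing \(y\) with \(-y\) yields the stated inequality. \(\Box\)

Using the reverse triangle inequality in the above example, we see

\[|x+4| = |x-4+8|\geq \big||x-4|-8\big| = 8-|x-4|> 8-2 =6.\]

Together with the upper bound, this gives \(6<|x+4|<10\).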
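The bounds obtained for the example with \(|x-4|<2\) can be checked on sample points. The sketch below uses an arbitrary rational grid inside the interval \(2<x<6\); the variable name `samples` and the grid spacing are choices made only for the illustration.

```python
from fractions import Fraction

# Sample points x with |x - 4| < 2, that is, 2 < x < 6.
samples = [Fraction(k, 100) for k in range(201, 600)]

print(all(abs(x - 4) < 2 for x in samples))       # True
print(all(6 < abs(x + 4) < 10 for x in samples))  # True
```

The triangle inequality supplies the upper bound \(10\) and the reverse triangle inequality supplies the lower bound \(6\).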

Part 4:

Topology

(See sections 3.1-3.15 in Apostol)

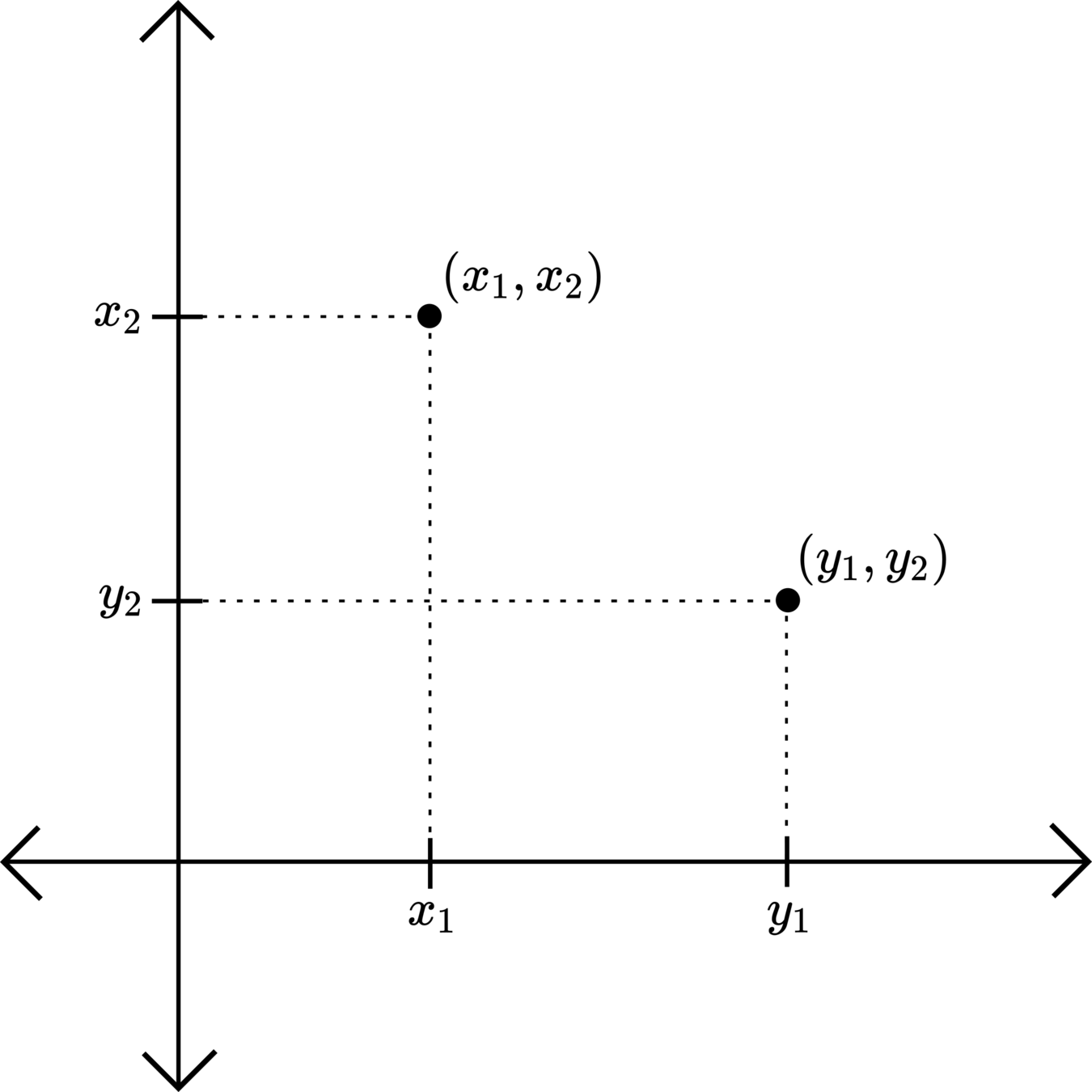

Definition 3.1. Let \(n>0\) be an integer. An ordered set of \(n\) real numbers is called an \(n\)-tuple, an \(n\)-dimensional point or a vector with \(n\) components. Points will be denoted with bold-face letters, for example:

\[\mathbf{x} = (x_{1},x_{2},\ldots,x_{n}).\]

The number \(x_{k}\) above is called the \(k\)th coordinate of the point \(\mathbf{x}\). The set of all \(n\)-dimensional points is called \(n\)-dimensional Euclidean space, and is denoted by \(\mathbb{R}^{n}\).

Below are graphical depictions of \(\mathbb{R}^{1}=\mathbb{R}\) and \(\mathbb{R}^{2}\).

Definition 3.2. Let \(\mathbf{x} = (x_{1},x_{2},\ldots,x_{n})\) and \(\mathbf{y} = (y_{1},y_{2},\ldots,y_{n})\) be in \(\mathbb{R}^{n}\). We define

a) \(\mathbf{x} = \mathbf{y}\) if and only if \(x_{i}=y_{i}\) for all \(i=1,2,\ldots,n\).

b) \(\mathbf{x}+\mathbf{y} = (x_{1}+y_{1},x_{2}+y_{2},\ldots,x_{n}+y_{n})\)

c) For \(a\in\mathbb{R}\), \(a\mathbf{x} = (ax_{1},ax_{2},\ldots,ax_{n})\)

d) \(\mathbf{x}-\mathbf{y} = \mathbf{x}+(-1)\mathbf{y}\)

e) The zero vector or origin is the point

\[\mathbf{0} = (0,0,\ldots,0).\]

f) The inner product or dot product of \(\mathbf{x}\) and \(\mathbf{y}\) is the scalar:

\[\mathbf{x}\cdot\mathbf{y} = \sum_{k=1}^{n}x_{k}y_{k}.\]

g) The norm or length of a vector \(\mathbf{x}\) is the nonnegative number

\[\|\mathbf{x}\| = (\mathbf{x}\cdot\mathbf{x})^{1/2}.\]

The number \(\|\mathbf{x}-\mathbf{y}\|\) is the distance between \(\mathbf{x}\) and \(\mathbf{y}\).

Length and distance in \(\mathbb{R}\) and \(\mathbb{R}^{2}\)

Theorem 3.3. Let \(\mathbf{x}\) and \(\mathbf{y}\) be points in \(\mathbb{R}^{n}\). Then,

a) \(\|\mathbf{x}\|\geq 0\), and \(\|\mathbf{x}\|=0\) if and only if \(\mathbf{x} = \mathbf{0}\).

b) \(\|a\mathbf{x}\| = |a|\|\mathbf{x}\|\) for all \(a\in\mathbb{R}.\)

c) \(\|\mathbf{x} - \mathbf{y}\| = \|\mathbf{y} - \mathbf{x}\|\)

d) (Cauchy-Schwarz inequality) \(|\mathbf{x}\cdot\mathbf{y}|\leq \|\mathbf{x}\|\|\mathbf{y}\|\)

e) (triangle inequality) \(\|\mathbf{x} +\mathbf{y}\| \leq \|\mathbf{x}\| + \|\mathbf{y}\|\)
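The dot product and norm from Definition 3.2 are short to implement, which makes it easy to test parts (d) and (e) on examples. This is a minimal sketch: vectors are represented as Python tuples, the names `dot` and `norm` are illustrative, and the sample vectors are arbitrary.

```python
from math import sqrt


def dot(x, y):
    """Inner product: sum of x_k * y_k over all coordinates."""
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    """Length of x, defined as the square root of x . x."""
    return sqrt(dot(x, x))


x = (3, 4)
y = (1, 2)
print(norm(x))  # 5.0

# Cauchy-Schwarz, compared after squaring so integer arithmetic stays exact.
print(dot(x, y) ** 2 <= dot(x, x) * dot(y, y))  # True (121 <= 125)

# Triangle inequality for the same pair of vectors.
total = tuple(a + b for a, b in zip(x, y))
print(norm(total) <= norm(x) + norm(y))  # True
```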

Definition 3.4. The unit coordinate vector \(\mathbf{u}_{k}\) in \(\mathbb{R}^{n}\) is the vector whose \(k\)th component is \(1\) and the remaining components are zero. That is,

\[\mathbf{u}_{1} = (1,0,\ldots,0),\quad \mathbf{u}_{2} = (0,1,0,\ldots,0),\ \ldots\ \mathbf{u}_{n} = (0,\ldots,0,1).\]

Note that if \(\mathbf{x} = (x_{1},x_{2},\ldots,x_{n}),\) then \(\mathbf{x} = x_{1}\mathbf{u}_{1} + x_{2}\mathbf{u}_{2} + \cdots + x_{n}\mathbf{u}_{n}.\)

These vectors are also called standard basis vectors.

Also note that for any \(j,k\in\{1,2,\ldots,n\}\) such that \(k\neq j\) we have \(\mathbf{u}_{j}\cdot\mathbf{u}_{k}=0\). Any two vectors whose dot product is zero are said to be orthogonal.

Open sets and open balls

Let \(\mathbf{x}\) be a point in \(\mathbb{R}^{n}\). For \(r>0\) consider the set of all points in \(\mathbb{R}^{n}\) whose distance from \(\mathbf{x}\) is less than \(r\).

This set is called an open ball of radius \(r\) and center \(\mathbf{x}\), and is denoted

\[B(\mathbf{x};r)=\{\mathbf{y}\in\mathbb{R}^{n} : \|\mathbf{x}-\mathbf{y}\|<r\}.\]

In \(\mathbb{R}^{1}\), note that

\[B(x;r) = (x-r,x+r).\]

Definition 3.5. Let \(S\subset \mathbb{R}^{n}\). A point \(\mathbf{a}\in S\) is called an interior point of \(S\) if there exists \(r>0\) such that \(B(\mathbf{a};r)\subset S\).

Definition 3.6. A set \(S\subset\mathbb{R}^{n}\) is called open if every point \(\mathbf{a}\in S\) is an interior point of \(S\). That is, \(S\) is open if for every \(\mathbf{a}\in S\) there exists a positive number \(r>0\) such that if \(\|\mathbf{a}-\mathbf{x}\|<r\), then \(\mathbf{x}\in S\).

An open set in \(\mathbb{R}^{2}:\)

Example. The set \(\{\mathbf{x}\in\mathbb{R}^{2} : \|\mathbf{x}\|\leq 1\}\) is not open:

All of the blue points are interior points.

But for points on the boundary there is no ball around them that is contained in the set.

No matter how small the radius is.

Proposition 14. An open ball is an open set.

Idea:

Take \(s=r-\|\mathbf{x}-\mathbf{y}\|\).

Proof. Let \(\mathbf{x}\in\mathbb{R}^{n}\) and \(r>0\). Let \(\mathbf{y}\in B(\mathbf{x};r)\), that is, \(\mathbf{y}\in\mathbb{R}^{n}\) and \(\|\mathbf{x} - \mathbf{y}\|<r\).

Set \[s=r-\|\mathbf{x}-\mathbf{y}\|.\] Note that \(s>0\).

Next, take \(\mathbf{z}\in B(\mathbf{y};s)\), and observe \[\|\mathbf{x} - \mathbf{z}\| = \|\mathbf{x} - \mathbf{y} + \mathbf{y} - \mathbf{z}\| \leq \|\mathbf{x} - \mathbf{y}\| + \|\mathbf{y} - \mathbf{z}\|<\|\mathbf{x} - \mathbf{y}\| + s = r.\]

This shows that \(\mathbf{z}\in B(\mathbf{x};r)\), and thus \(B(\mathbf{y};s)\subset B(\mathbf{x};r).\) This shows that an arbitrary point \(\mathbf{y}\in B(\mathbf{x};r)\) is an interior point of \(B(\mathbf{x};r)\), and thus every point in \(B(\mathbf{x};r)\) is an interior point, and thus \(B(\mathbf{x};r)\) is open. \(\Box\)
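As a sanity check on the choice \(s=r-\|\mathbf{x}-\mathbf{y}\|\), here is a small Python sketch that samples points of \(B(\mathbf{y};s)\) in \(\mathbb{R}^{2}\) and confirms that each one lies in \(B(\mathbf{x};r)\). The helper names (`dist`, `inner_radius`, `sample_ball`) and the particular center and radius are assumptions made only for this illustration.

```python
import math
import random

def dist(p, q):
    """Euclidean distance ||p - q||."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def inner_radius(x, r, y):
    """Radius s = r - ||x - y|| from the proof; requires y in B(x; r)."""
    d = dist(x, y)
    if d >= r:
        raise ValueError("y is not in B(x; r)")
    return r - d

def sample_ball(center, radius, rng):
    """Rejection-sample one point of the open ball B(center; radius)."""
    while True:
        p = [c + rng.uniform(-radius, radius) for c in center]
        if dist(p, center) < radius:
            return p

rng = random.Random(0)
x, r = (0.0, 0.0), 1.0
y = (0.6, 0.3)
s = inner_radius(x, r, y)
points = [sample_ball(y, s, rng) for _ in range(1000)]
# Every sampled z in B(y; s) should also satisfy ||x - z|| < r.
print(all(dist(x, z) < r for z in points))
```

A finite sample cannot replace the proof, but a failed check would indicate a mistake in the choice of \(s\).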

Proposition 15. Let \(\mathbf{x}\) and \(\mathbf{y}\) be points in \(\mathbb{R}^{n}\) and \(r,s>0\). If \(\|\mathbf{x}-\mathbf{y}\|<r+s\) then there exists \(\mathbf{z}\in\mathbb{R}^{n}\) and \(\delta>0\) such that \[B(\mathbf{z};\delta)\subset B(\mathbf{x};r)\cap B(\mathbf{y};s)\]

Idea: with \(\mathbf{u}\) the unit vector pointing from \(\mathbf{x}\) to \(\mathbf{y}\), take

\(\displaystyle{\delta = \frac{r+s-\|\mathbf{x} - \mathbf{y}\|}{2}}\)

\(\displaystyle{\mathbf{z} = \mathbf{x}+(r-\delta)\mathbf{u}}\)

Also

\(\displaystyle{\mathbf{z} = \mathbf{y}-(s-\delta)\mathbf{u}}\)

We can assume without loss of generality that \(r\geq s\), but what if \(r\gg s\) (\(r\) is much bigger than \(s\))?

If \(r\geq \|\mathbf{x}-\mathbf{y}\|+s\), then \(B(\mathbf{y};s)\subset B(\mathbf{x};r)\), so we can take \(\mathbf{z} = \mathbf{y}\) and \(\delta=s\).

So, we will look at two cases:

Case 1. \(r\geq s+\|\mathbf{x}-\mathbf{y}\|\)

Case 2. \(r< s+\|\mathbf{x}-\mathbf{y}\|\)

Is our picture generic enough?

Proof. We may assume without loss of generality that \(r\geq s\).

Case 1. Suppose that \(r\geq s+\|\mathbf{x}-\mathbf{y}\|\) (note that this includes the case that \(\mathbf{x} = \mathbf{y}\)). Set

\[\delta = s\quad\text{and}\quad \mathbf{z} = \mathbf{y}.\]

For \(\mathbf{w}\in B(\mathbf{z};\delta)\) we see that \(\|\mathbf{y}-\mathbf{w}\|<\delta = s\), that is, \(\mathbf{w}\in B(\mathbf{y};s)\). We also see that

\[\|\mathbf{x} - \mathbf{w}\| = \|\mathbf{x} - \mathbf{y}+\mathbf{y}-\mathbf{w}\|\leq \|\mathbf{x} - \mathbf{y}\|+\|\mathbf{y}-\mathbf{w}\|< (r-s)+s=r.\]

This shows that \(\mathbf{w}\in B(\mathbf{x};r)\).

Thus, for arbitrary \(\mathbf{w}\in B(\mathbf{z};\delta)\), we have shown that \(\mathbf{w}\) is in both \(B(\mathbf{y};s)\) and \(B(\mathbf{x};r)\). This shows that \(B(\mathbf{z};\delta)\subset B(\mathbf{y};s)\) and \(B(\mathbf{z};\delta)\subset B(\mathbf{x};r)\), that is, \[B(\mathbf{z};\delta)\subset B(\mathbf{y};s)\cap B(\mathbf{x};r).\]

Proof continued. Case 2. Suppose that \(r\leq s+\|\mathbf{x}-\mathbf{y}\|.\)

Define

\[\delta = \frac{r+s-\|\mathbf{x} - \mathbf{y}\|}{2}\quad \text{and}\quad \mathbf{u} = \frac{\mathbf{y}-\mathbf{x}}{\|\mathbf{x}-\mathbf{y}\|}\quad\text{and}\quad\mathbf{z} = \mathbf{x}+(r-\delta)\mathbf{u}.\]

Also note that

\[2\delta+\|\mathbf{x}-\mathbf{y}\| = r+s,\]

which implies

\[\|\mathbf{x} - \mathbf{y}\| = (r-\delta)+(s-\delta).\]

Using this we see that \[\mathbf{z}-\big(\mathbf{y}-(s-\delta)\mathbf{u}\big) = \mathbf{x}-\mathbf{y}+[(r-\delta)+(s-\delta)]\mathbf{u} = \mathbf{0},\]

from which we deduce that

\[\mathbf{z} = \mathbf{y} - (s-\delta)\mathbf{u}.\]

Using our assumptions that \(\|\mathbf{x}-\mathbf{y}\|<r+s\) and \(r\leq s+\|\mathbf{x}-\mathbf{y}\|\) we see that

\[0 = \frac{\|\mathbf{x}-\mathbf{y}\|-\|\mathbf{x}-\mathbf{y}\|}{2}< \frac{r+s-\|\mathbf{x}-\mathbf{y}\|}{2}=\delta\]

and

\[\delta =\frac{r+s-\|\mathbf{x}-\mathbf{y}\|}{2}\leq \frac{s+\|\mathbf{x}-\mathbf{y}\|+s-\|\mathbf{x}-\mathbf{y}\|}{2} = s.\]

Moreover, our assumption that \(r\geq s\) implies

\[\delta =\frac{r+s-\|\mathbf{x}-\mathbf{y}\|}{2}\leq \frac{r+r-\|\mathbf{x}-\mathbf{y}\|}{2}\leq r.\]

Thus, we see that \(s-\delta\) and \(r-\delta\) are nonnegative.

To complete the proof, let \(\mathbf{w}\in B(\mathbf{z};\delta)\), then

\[\|\mathbf{x}-\mathbf{w}\| = \|\mathbf{x}-\mathbf{z}+\mathbf{z}-\mathbf{w}\|\leq \|\mathbf{x}-\mathbf{z}\|+\|\mathbf{z}-\mathbf{w}\|\]

\[=\|-(r-\delta)\mathbf{u}\| +\|\mathbf{z}-\mathbf{w}\| = r-\delta + \|\mathbf{z}-\mathbf{w}\|<r-\delta+\delta=r.\]

Proof. We may assume without loss of generality that \(r\geq s\). Define

\[\delta = \min\left\{s,\frac{r+s-\|\mathbf{x} - \mathbf{y}\|}{2}\right\},\quad \mathbf{u} = \frac{\mathbf{y}-\mathbf{x}}{\|\mathbf{x}-\mathbf{y}\|}\]

and

\[\mathbf{z} = \mathbf{x}+(r-\delta)\mathbf{u}.\]

Observe that

\[\mathbf{y} - (s-\delta)\mathbf{u} = \mathbf{y} - \frac{s-\delta}{\|\mathbf{x}-\mathbf{y}\|}(\mathbf{y}-\mathbf{x}) = \mathbf{x} + (\mathbf{y} - \mathbf{x}) - \frac{s-\delta}{\|\mathbf{x}-\mathbf{y}\|}(\mathbf{y}-\mathbf{x})\]

\[ = \mathbf{x} + \left(1-\frac{s-\delta}{\|\mathbf{x}-\mathbf{y}\|}\right)(\mathbf{y}-\mathbf{x}) = \mathbf{x} + \frac{\|\mathbf{x} - \mathbf{y}\| - s + \delta}{\|\mathbf{x} - \mathbf{y}\|}(\mathbf{y} - \mathbf{x})\]

\[ = \mathbf{x} + (\|\mathbf{x}-\mathbf{y}\|-s+\delta)\mathbf{u}.\]

Proof continued. Case 2. Suppose that \(r< s+\|\mathbf{x}-\mathbf{y}\|.\)

Define

\[\delta = \frac{r+s-\|\mathbf{x} - \mathbf{y}\|}{2}\quad \text{and}\quad \mathbf{u} = \frac{\mathbf{y}-\mathbf{x}}{\|\mathbf{x}-\mathbf{y}\|}\quad\text{and}\quad\mathbf{z} = \mathbf{x}+(r-\delta)\mathbf{u}.\]

Since \(\|\mathbf{x}-\mathbf{y}\|<r+s\) we see that \(\delta>0\).

Let \(\mathbf{w}\in B(\mathbf{z};\delta)\), that is, \(\|\mathbf{z}-\mathbf{w}\|<\delta.\) We must show that \(\mathbf{w}\in B(\mathbf{x};r)\) and \(\mathbf{w}\in B(\mathbf{y};s).\) Note that since \(r\geq s\) we have

\[r-\delta = r-\frac{r+s-\|\mathbf{x} - \mathbf{y}\|}{2}=\frac{r-s+\|\mathbf{x} - \mathbf{y}\|}{2}\geq \frac{\|\mathbf{x} - \mathbf{y}\|}{2}\geq 0\]

Therefore,

\(\|\mathbf{x}-\mathbf{w}\| = \|\mathbf{x}-\mathbf{z}+\mathbf{z}-\mathbf{w}\|\leq \|\mathbf{x}-\mathbf{z}\|+\|\mathbf{z}-\mathbf{w}\|\)

\(\displaystyle{<\|-(r-\delta)\mathbf{u}\| +\delta = |-(r-\delta)|\|\mathbf{u}\| +\delta = r-\delta+\delta = r.}\)

This shows that \(\mathbf{w}\in B(\mathbf{x};r).\)

Note that our assumption that \(r< s+\|\mathbf{x}-\mathbf{y}\|\) is equivalent to \(s-r+\|\mathbf{x}-\mathbf{y}\|> 0.\) Thus, we have

\(\displaystyle{0<\frac{s-r+\|\mathbf{x}-\mathbf{y}\|}{2} = s-\delta.}\)

And, finally, since \(\mathbf{y}-\mathbf{z} = (s-\delta)\mathbf{u}\),

\(\|\mathbf{y}-\mathbf{w}\| = \|\mathbf{y}-\mathbf{z}+\mathbf{z}-\mathbf{w}\|\leq \|\mathbf{y}-\mathbf{z}\|+\|\mathbf{z}-\mathbf{w}\|\)

\(= \|(s-\delta)\mathbf{u}\|+\|\mathbf{z}-\mathbf{w}\|<(s-\delta) + \delta = s.\)

This shows that \(\mathbf{w}\in B(\mathbf{y};s).\) \(\Box\)
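The two cases of the proof translate directly into a recipe for computing \(\mathbf{z}\) and \(\delta\). Below is a hedged Python sketch of that recipe; the function name `ball_in_intersection` is hypothetical, and swapping the roles of the two balls when \(r<s\) is our way of implementing the "without loss of generality" step.

```python
import math

def dist(p, q):
    """Euclidean distance ||p - q||."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def ball_in_intersection(x, r, y, s):
    """Return (z, delta) with B(z; delta) inside B(x; r) and B(y; s)."""
    if r < s:
        # Without loss of generality r >= s: swap the roles of the balls.
        return ball_in_intersection(y, s, x, r)
    d = dist(x, y)
    if d >= r + s:
        raise ValueError("need ||x - y|| < r + s")
    if r >= s + d:
        # Case 1: the smaller ball already lies inside the larger one.
        return tuple(y), s
    # Case 2: here d > r - s >= 0, so d > 0 and u is well defined.
    delta = (r + s - d) / 2
    u = [(yk - xk) / d for xk, yk in zip(x, y)]
    z = tuple(xk + (r - delta) * uk for xk, uk in zip(x, u))
    return z, delta

z, delta = ball_in_intersection((0.0, 0.0), 2.0, (3.0, 0.0), 2.0)
print(z, delta)  # (1.5, 0.0) 0.5
```

In this example \(\|\mathbf{x}-\mathbf{z}\|+\delta = 2 = r\) and \(\|\mathbf{y}-\mathbf{z}\|+\delta = 2 = s\), which matches the estimates at the end of the proof.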

Proof. Note that

\[\mathbf{z} = \mathbf{x}+(r-\delta)\mathbf{u} = \mathbf{y}-(\mathbf{y}-\mathbf{x}) + \frac{r-\delta}{\|\mathbf{x}-\mathbf{y}\|}(\mathbf{y}-\mathbf{x})\]

\[=\mathbf{y} - \left(1-\frac{r-\delta}{\|\mathbf{x}-\mathbf{y}\|}\right)(\mathbf{y}-\mathbf{x}) = \mathbf{y} - \left(\|\mathbf{x}-\mathbf{y}\|-r+\delta\right)\mathbf{u}\]

\[= \mathbf{y}-(s-\delta)\mathbf{u}\]

Theorem 3.7. The union of any collection of open sets is open.

Consider a collection of open sets.

The union is the collection of all of the points in all of the sets.

Take a point in the union.

The point is in (at least) one of the sets in the collection of open sets.

That set is open, so there exists a ball around the point that is contained in the open set.

That set is contained in the union, so the ball is contained in the union as well.

Theorem 3.8. The intersection of any finite collection of open sets is open.

Take a finite collection of open sets.

The intersection is the set of points that are in all of the sets.

Take a point in the intersection.

This is a point in each of the sets.

In each set find an open ball around the point, contained in the set.

Take the smallest radius. (We can do this because the collection is finite!)

The ball with this radius is contained in each of the sets.

So, that ball is contained in the intersection

Example. For each \(n\in\mathbb{N}\) define the set

\[I_{n} = \left(-\frac{1}{n},1+\frac{1}{n}\right).\]

The intersection is

\[\bigcap_{n=1}^{\infty}I_{n} = [0,1]\]

which is not open.
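To see why the intersection is exactly \([0,1]\), it can help to test membership in \(I_{n}\) with exact rational arithmetic. The sketch below is only an illustration; `in_I` and `first_excluding_index` are names we made up, and the search is cut off at an arbitrary `limit`.

```python
from fractions import Fraction

def in_I(x, n):
    """Membership test for I_n = (-1/n, 1 + 1/n)."""
    return Fraction(-1, n) < x < 1 + Fraction(1, n)

def first_excluding_index(x, limit=1000):
    """Smallest n <= limit with x not in I_n, or None if there is none."""
    for n in range(1, limit + 1):
        if not in_I(x, n):
            return n
    return None

print(first_excluding_index(Fraction(-1, 100)))  # 100
print(first_excluding_index(Fraction(0)))        # None
print(first_excluding_index(Fraction(1)))        # None
```

A point slightly outside \([0,1]\) is eventually excluded by some \(I_{n}\), while \(0\) and \(1\) belong to every \(I_{n}\) that was tested.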

Definition 3.12. A set \(S\subset\mathbb{R}^{n}\) is called closed if its complement \(\mathbb{R}^{n}\setminus S\) is open.

Note: The book uses the following notation for set complements: If \(X\) and \(Y\) are sets, then

\[X - Y = \{x\in X : x\notin Y\}.\]

We will often use the notation \(X\setminus Y\) for the same set.

Example. Given \(a\leq b\), the closed interval

\[[a,b]=\{x\in\mathbb{R} : a\leq x\leq b\}\]

is a closed set, since

\[\mathbb{R}\setminus[a,b] = (-\infty,a)\cup (b,\infty)\]

is the union of two open intervals.

Example. The set \(K=\{\mathbf{x}\in\mathbb{R}^{2} : \|\mathbf{x}\|\leq 1\}\) is closed:

Note that the complement of \(K\) is the set

\[\mathbb{R}^{2}\setminus K = \{\mathbf{x}\in\mathbb{R}^{2} : \|\mathbf{x}\|>1\}.\]

If \(\mathbf{z}\in\mathbb{R}^{2}\setminus K\), then we let \(r\in(0,\|\mathbf{z}\|-1).\) It is straightforward to show that \(B(\mathbf{z};r)\subset \mathbb{R}^{2}\setminus K.\)

Example. The set \((0,1]\) is neither open nor closed.

Example. The set \(\mathbb{Q}\) is neither open nor closed.

Theorem 3.13. The union of a finite collection of closed sets is closed, and the intersection of any collection of closed sets is closed.

Theorem 3.13 follows from Theorems 3.7 and 3.8 and De Morgan's laws: Given a collection of sets \(\{A_{x}\}_{x\in X}\) which are all subsets of \(\mathbb{A}\) we have

\[\mathbb{A}\setminus\bigcup_{x\in X}A_{x} = \bigcap_{x\in X}(\mathbb{A}\setminus A_{x})\]

and

\[\mathbb{A}\setminus\bigcap_{x\in X}A_{x} = \bigcup_{x\in X}(\mathbb{A}\setminus A_{x})\]

Theorem 3.14. If \(A\) is open and \(B\) is closed, then \(A\setminus B\) is open, and \(B\setminus A\) is closed.

Proof. Observe that

\[A\setminus B = A\cap (\mathbb{R}^{n}\setminus B)\]

Hence, by De Morgan's law

\[\mathbb{R}^{n}\setminus(A\setminus B) = (\mathbb{R}^{n}\setminus A)\cup B.\]

This is the union of two closed sets, and hence closed. This shows that \(A\setminus B\) is open.

The other proof is similar. (You should try it!) \(\Box\)

Definition 3.15. Let \(S\subset \mathbb{R}^{n}\) and \(\mathbf{x}\in\mathbb{R}^{n}\). The point \(\mathbf{x}\) is called an adherent point of \(S\) if every open ball \(B(\mathbf{x};r)\) contains at least one point of \(S\). That is, for every \(r>0\) there exists a point \(\mathbf{z}\in B(\mathbf{x};r)\cap S\).

Example. Consider the set \(S=(0,1]\cup\{2\}\). Every point of \(S\) is an adherent point of \(S\), since each ball centered at a point of \(S\) contains that point. Note that \(0\notin S\), but \(0\) is an adherent point of \(S\). The set \(S\) has no other adherent points. Thus, the set of adherent points of \(S\) is \([0,1]\cup\{2\}\).

Example. Consider the set

\[T = \left\{\frac{1}{n} : n\in\N\right\}\]

The set of adherent points of \(T\) is the set \(T\cup\{0\}\).

Observe that given \(S\subset\mathbb{R}^{n}\), every point in \(S\) is an adherent point of \(S\).

Example. Consider the set \(\mathbb{Q}\). The set of adherent points of \(\mathbb{Q}\) is \(\mathbb{R}\).

Definition 3.16. Let \(S\subset \mathbb{R}^{n}\) and \(\mathbf{x}\in\mathbb{R}^{n}\). The point \(\mathbf{x}\) is called an accumulation point of \(S\) if every open ball \(B(\mathbf{x};r)\) contains at least one point of \(S\) distinct from \(\mathbf{x}\). That is, for every \(r>0\) there exists a point \(\mathbf{z}\in (B(\mathbf{x};r)\setminus\{\mathbf{x}\})\cap S\).

Example. Consider the set \(S=(0,1]\cup\{2\}\). The set of accumulation points of \(S\) is \([0,1]\).

Example. Consider the set

\[T = \left\{\frac{1}{n} : n\in\N\right\}\]

The set of accumulation points of \(T\) is the set \(\{0\}\).

Observe that given \(S\subset\mathbb{R}^{n}\), points in \(S\) may or may not be accumulation points of \(S\). Points of \(S\) which are not accumulation points of \(S\) are called isolated points of \(S\).

Example. Consider the set \(\mathbb{Q}\). The set of accumulation points of \(\mathbb{Q}\) is \(\mathbb{R}\).

Theorem 3.17. If \(\mathbf{x}\) is an accumulation point of \(S\), then every ball \(B(\mathbf{x};r)\) contains infinitely many points of \(S\).

Proof. On the board and in the book.

Definition 3.19. The set of all adherent points of a set \(S\) is called the closure of \(S\) and is denoted by \(\overline{S}\).

Theorem 3.18, 3.20 & 3.22. Given \(S\subset\mathbb{R}^{n}\), the following are equivalent:

- \(S\) is closed.

- \(S\) contains all of its adherent points, that is, \(\overline{S}\subset S\)

- \(S = \overline{S}\).

- \(S\) contains all of its accumulation points.

Proof. On the board and in the book.

Definition 3.23. A set \(S\subset\mathbb{R}^{n}\) is said to be bounded if there exists \(r>0\) and \(\mathbf{x}\in\mathbb{R}^{n}\) such that \(S\subset B(\mathbf{x};r).\)

Examples:

- Any open ball is bounded.

- The set of natural numbers \(\mathbb{N}\subset\mathbb{R}\) is not bounded

- The set of integers \(\mathbb{Z}\subset\mathbb{R}\) is not bounded.

- The whole space \(\mathbb{R}^{n}\) is not bounded.

- Any finite set is bounded:

Proof. If \(X=\{\mathbf{x}_{1},\mathbf{x}_{2},\ldots,\mathbf{x}_{N}\}\subset\mathbb{R}^{n}\), then let

\[r =1+\max \{\|\mathbf{x}_{1}\|,\|\mathbf{x}_{2}\|,\ldots,\|\mathbf{x}_{N}\|\}.\] Then, for each \(i\in\{1,2,\ldots,N\}\) we observe that

\[\|\mathbf{0}-\mathbf{x}_{i}\| = \|\mathbf{x}_{i}\|\leq \max \{\|\mathbf{x}_{1}\|,\|\mathbf{x}_{2}\|,\ldots,\|\mathbf{x}_{N}\|\}<r\]

and hence \(X\subset B(\mathbf{0};r)\). \(\Box\)

Theorem 3.24 (Bolzano-Weierstrass). If \(S\subset\mathbb{R}^{n}\) is bounded and infinite, then there is at least one point of \(\mathbb{R}^{n}\) which is an accumulation point of \(S\).

Proof. On the board and in the text.

Notes:

- The set \(S\) need not contain any accumulation points: \[S=\left\{\frac{1}{n} : n\in\mathbb{N}\right\}\] has only one accumulation point, namely, \(0\), but \(0\notin S\).

- The assumption that \(S\) is bounded is necessary. The set of integers \(\mathbb{Z}\) is infinite, but not bounded, and no point in \(\mathbb{R}\) is an accumulation point of \(\mathbb{Z}\).

Theorem 3.25 (Cantor intersection theorem). Let \(\{Q_{1},Q_{2},\ldots\}\) be a countable collection of nonempty sets in \(\mathbb{R}^{n}\) such that

i) \(Q_{k+1}\subset Q_{k}\) for each \(k\in\mathbb{N}\)

ii) \(Q_{k}\) is closed and bounded for each \(k\in\mathbb{N}\).

Then, the intersection \[\bigcap_{k=1}^{\infty}Q_{k}\] is closed and nonempty.

Note: The assumptions that the \(Q_{k}\)'s are both closed and bounded are both necessary:

The sets \(Q_{k} = [k,\infty)\) are closed, but not bounded, and \[\bigcap_{k=1}^{\infty}[k,\infty)=\varnothing\]

The sets \(Q_{k} = (0,\frac{1}{k})\) are bounded but not closed, and\[\bigcap_{k=1}^{\infty}\left(0,\tfrac{1}{k}\right) = \varnothing\]

Proof. Set

\[Q = \bigcap_{k=1}^{\infty}Q_{k}.\]

Since each of the sets \(Q_{k}\) is closed, the intersection is closed by Theorem 3.13.

For each \(k\in\mathbb{N}\) let \(\mathbf{a}_{k}\in Q_{k}\) and set

\[A: = \{\mathbf{a}_{1},\mathbf{a}_{2},\ldots\}.\]

If \(A\) is finite, then there exists \(\mathbf{a}\in A\) such that \(\mathbf{a}_{k}=\mathbf{a}\) for infinitely many \(k\in\N\). Thus, if \(j\in\N\), there exists \(k\geq j\) such that \[\mathbf{a}=\mathbf{a}_{k}\in Q_{k}\subset Q_{k-1}\subset\cdots\subset Q_{j}.\]

This shows that \(\mathbf{a}\in Q_{j}\) for each \(j\in\N\), and thus \(\mathbf{a}\in Q\).

Now, we may assume \(A\) is an infinite set. Since \(Q_{k}\subset Q_{1}\) for all \(k\in\N\) we see that \(\mathbf{a}_{k}\in Q_{1}\) for each \(k\in\N\), and thus \(A\subset Q_{1}\). Since \(Q_{1}\) is bounded, this implies \(A\) is bounded.

Proof continued. By the Bolzano-Weierstrass theorem the set \(A\) has an accumulation point, call it \(\mathbf{a}\).

By Theorem 3.17, every open ball around \(\mathbf{a}\) contains infinitely many points of \(A\).

Let \(k\in\N\) be arbitrary, and note that \[\{\mathbf{a}_{k},\mathbf{a}_{k+1},\ldots\}\subset Q_{k}.\]

Every open ball around \(\mathbf{a}\) contains infinitely many points of \(A\), and at most \(k-1\) of them lie outside \(\{\mathbf{a}_{k},\mathbf{a}_{k+1},\ldots\}\). From this we deduce that every open ball around \(\mathbf{a}\) also contains infinitely many points of \(Q_{k}\). This implies that \(\mathbf{a}\) is an accumulation point of \(Q_{k}\), and since \(Q_{k}\) is closed, we see that \(\mathbf{a}\in Q_{k}\). Since \(k\in\N\) was arbitrary, we conclude that \(\mathbf{a}\in Q_{k}\) for all \(k\in\N\), and thus \[\mathbf{a}\in \bigcap_{k=1}^{\infty}Q_{k}.\]

\(\Box\)

Definition 3.26. Given a set \(S\subset\mathbb{R}^{n}\), a collection of sets \(F\) is called a covering of \(S\) if

\[S\subset \bigcup_{A\in F}A.\]

If \(F\) is a covering of \(S\) and each set in \(F\) is an open set, then \(F\) is called an open covering (or open cover) of \(S\).

Examples.

- The collection \[F=\{(0.9,1.1),(1.9,2.1),\ldots,(k-\tfrac{1}{10},k+\tfrac{1}{10}),\ldots\}\] is an open cover of \(\mathbb{N}\).

- For each rational number \(q\in\mathbb{Q}\) select a positive number \(r_{q}>0\). Then, the set \[F = \{(q-r_{q},q+r_{q}):q\in\mathbb{Q}\}\] is an open cover of \(\mathbb{Q}\).

- More generally, if \(S\subset\mathbb{R}^{n}\), then for each \(\mathbf{x}\in S\) select a positive number \(r_{\mathbf{x}}>0\), then the set \(F=\{B(\mathbf{x};r_{\mathbf{x}}) : \mathbf{x}\in S\}\) is an open cover of \(S\).

Definition 3.30. A set \(S\subset\mathbb{R}^{n}\) is said to be compact if every open cover of \(S\) contains a finite subcover, that is, if \(F\) is an open cover of \(S\), then there is a finite subset of \(F\) which is also a cover of \(S\).

Proposition 16. For \(a\leq b\), the set \([a,b]\subset\mathbb{R}\) is compact.

Proof. If \(a=b\), then \([a,b]=\{a\}\). It is clear that any open cover of \(\{a\}\) has a finite subcover, so we will now suppose that \(a<b\).

Suppose that \(F\) is an open cover of \([a,b]\). Since \[a\in\bigcup_{U\in F}U,\]

there is an open set \(U\in F\) such that \(a\in U\). Since \(U\) is open, there exists \(r>0\) such that \(B(a;r)\subset U\), and by shrinking \(r\) if necessary we may assume that \(a+\frac{r}{2}\leq b\). Thus, \([a,a+\frac{r}{2}]\subset U\). Define the set

\[A=\{s\in[a,b] : \text{a finite subset of \(F\) covers } [a,s]\}.\]

We have already seen that \(a+\frac{r}{2}\in A\), so \(A\neq \varnothing\), and by definition \(A\) is bounded above, so we set

\[c = \sup A.\]

Proof continued.

Case 1. Suppose \(c=b\). There exists \(V\in F\) such that \(b\in V\). Since \(V\) is open, there exists \(\varepsilon>0\) such that \((b-\varepsilon,b+\varepsilon)\subset V\). Since \(b=\sup A\), there exists \(s\in A\) with \(s>b-\varepsilon\), and so \([a,b-\varepsilon]\subset[a,s]\) can be covered by a finite subset \(X\subset F\). Thus \(X\cup \{V\}\) is a finite subset of \(F\) that covers \([a,b]\).

Case 2. Suppose \(c<b\). There exists \(W\in F\) such that \(c\in W\). Since \(W\) is open, there exists \(\delta>0\) such that \((c-\delta,c+\delta)\subset W\), and by shrinking \(\delta\) if necessary we may assume that \(c+\frac{\delta}{2}\leq b\). Since \(c-\delta<c=\sup A\), there exists \(d\in A\) with \(d>c-\delta\), that is, \([a,d]\) can be covered by a finite subset \(Y\subset F\). Then \(Y\cup \{W\}\) is a finite subset of \(F\) that covers \([a,c+\frac{\delta}{2}]\), so \(c+\frac{\delta}{2}\in A\), which contradicts \(c=\sup A\). This contradiction shows that \(c=b\), that is, we are reduced to Case 1 where we have already seen that the desired conclusion holds. \(\Box\)

Theorem 3.29 (the Heine-Borel theorem). A set \(S\subset \mathbb{R}^{n}\) is compact if and only if it is closed and bounded.

Proposition 17. If \(K\subset\mathbb{R}^{n}\) is compact and \(L\subset K\) is closed, then \(L\) is compact.

Proof. Let \(F\) be an open cover of \(L\). The set \(F_{1} = F\cup\{\mathbb{R}^{n}\setminus L\}\) is an open cover of \(K\). By definition, there is a finite subset \(G\subset F_{1}\) that covers \(K\), and hence also covers \(L\). The set \(G\) might contain \(\mathbb{R}^{n}\setminus L\), but the rest of \(G\) is still a finite subcover that covers \(L\). \(\Box\)

Proof sketch of Theorem 3.29 (closed and bounded implies compact). Using Proposition 16 and induction we can show that for any \(a\geq 0\), the set \([-a,a]^{n}\) is compact in \(\mathbb{R}^{n}\).

Since \(S\) is bounded, there exists \(a>0\) such that \(S\subset[-a,a]^{n}\).

The theorem follows from Proposition 17. \(\Box\)

Metric spaces

Definition 3.32. A metric space is a nonempty set \(M\) (the elements of this set are called points) and a function \(d:M\times M\to\mathbb{R}\) (called a metric) such that for any \(x,y,z\in M\) the following four properties hold:

1. \(d(x,x)=0\)

2. \(d(x,y)>0\) for \(x\neq y\)

3. \(d(x,y)=d(y,x)\)

4. \(d(x,y)\leq d(x,z)+d(z,y).\)

When we want to be clear, we denote a metric space as a pair \((M,d).\)

Examples.

1) The most important example of a metric space is \(\mathbb{R}^{n}\) with

\[d(\mathbf{x},\mathbf{y}) = \|\mathbf{x}-\mathbf{y}\|.\]

2) Consider the set \(\mathbb{Q}\) with \(d(x,y)=|x-y|\), this is also a metric space. More generally, any subset \(M\subset \mathbb{R}^{n}\) with metric \(d(\mathbf{x},\mathbf{y}) = \|\mathbf{x}-\mathbf{y}\|\) is a metric space.

More examples. An example of a metric space often worth considering is the set \([0,1]\subset \mathbb{R}\) together with the metric \(d(x,y)=|x-y|\).

There are other metrics that can be defined on \(\mathbb{R}^{n}\). Given

\(\mathbf{x} = (x_{1},x_{2},\ldots,x_{n})\) and \(\mathbf{y} = (y_{1},y_{2},\ldots,y_{n})\) in \(\mathbb{R}^{n}\) the following functions are metrics on \(\mathbb{R}^{n}\):

\[d(\mathbf{x},\mathbf{y}) = \sum_{i=1}^{n}|x_{i}-y_{i}|,\]

\[d(\mathbf{x},\mathbf{y}) = \max\{|x_{1}-y_{1}|,|x_{2}-y_{2}|,\ldots,|x_{n}-y_{n}|\},\]

for any \(p\geq 1\)

\[d(\mathbf{x},\mathbf{y})=\left(\sum_{i=1}^{n}|x_{i}-y_{i}|^{p}\right)^{1/p}.\]
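The three formulas above are simple to compute, which makes it easy to compare the metrics on concrete points. The following Python sketch is illustrative only: the names `d_sum`, `d_max`, `d_p`, and `triangle_holds` are ours, and the tolerance in `triangle_holds` is an assumption to absorb floating-point rounding.

```python
def d_sum(x, y):
    """Sum metric: sum of |x_i - y_i|."""
    return sum(abs(a - b) for a, b in zip(x, y))

def d_max(x, y):
    """Max metric: largest |x_i - y_i|."""
    return max(abs(a - b) for a, b in zip(x, y))

def d_p(x, y, p):
    """p-metric for p >= 1: (sum of |x_i - y_i|^p)^(1/p)."""
    if p < 1:
        raise ValueError("p must be at least 1")
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)

def triangle_holds(d, x, y, z, tol=1e-12):
    """Check d(x, y) <= d(x, z) + d(z, y) up to a rounding tolerance."""
    return d(x, y) <= d(x, z) + d(z, y) + tol

x, y, z = (0.0, 0.0), (3.0, 4.0), (1.0, -2.0)
print(d_sum(x, y))  # 7.0
print(d_max(x, y))  # 4.0
print(triangle_holds(lambda a, b: d_p(a, b, 3), x, y, z))
```

With \(p=2\) the function `d_p` agrees, up to rounding, with the Euclidean distance \(\|\mathbf{x}-\mathbf{y}\|\).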

Part 5:

Limits and continuity

(See sections 4.1-4.5, 4.7-4.9, 4.11-4.15 in Apostol)

Functions

Definition. Given two sets \(X\) and \(Y\), a subset

\[F\subset X\times Y=\{(x,y) : x\in X,\ y\in Y\}\]

is called a function if it satisfies the following two properties:

- for each \(x\in X\) there exists \(y\in Y\) such that \((x,y)\in F\)

- if \((x,y)\in F\) and \((x,z)\in F\), then \(y=z\).
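For finite sets, the two properties in this definition can be checked mechanically. Here is a minimal Python sketch, assuming \(X\), \(Y\), and \(F\) are given as Python sets, with \(F\) a set of pairs; the name `is_function` is hypothetical.

```python
def is_function(F, X, Y):
    """Check that F is a subset of X x Y satisfying both properties."""
    # F must consist of pairs (x, y) with x in X and y in Y.
    if not all(x in X and y in Y for (x, y) in F):
        return False
    for x in X:
        outputs = {y for (a, y) in F if a == x}
        # Exactly one output: existence and uniqueness together.
        if len(outputs) != 1:
            return False
    return True

X = {1, 2, 3}
Y = {"a", "b"}
F = {(1, "a"), (2, "a"), (3, "b")}
print(is_function(F, X, Y))                # True
print(is_function(F | {(1, "b")}, X, Y))   # False: 1 has two outputs
print(is_function(F - {(3, "b")}, X, Y))   # False: 3 has no output
```

Requiring exactly one output for each \(x\in X\) combines the existence property and the uniqueness property into a single test.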

Some more function terminology:

- The set \(X\) is called the domain of \(F\).

- The set \(Y\) is called the codomain of \(F\).

- The inclusion \((x,y)\in F\) is written \(F(x) = y\), and read "\(F\) of \(x\) equals \(y\)."

- The set \[\operatorname{im}(F) = \{F(x) : x\in X\}\] is called the range or image of \(F\).

- To denote \(F\) is a function with domain \(X\) and codomain \(Y\) we write \(F:X\to Y\).

- The elements of the domain \(X\) are sometimes called inputs and the elements of the range \(\operatorname{im}(F)\) are called outputs.

- The set of all functions with domain \(X\) and codomain \(Y\) is sometimes denoted \(Y^{X}\).

- A function \(F:X\to Y\) is called onto or a surjection if \(\operatorname{im}(F)=Y\).

- A function \(F:X\to Y\) is called one-to-one or an injection if \(F(x)=F(y)\) implies \(x=y\).

Defining a function:

The most common way to define a function is by a rule:

Let \(f:\mathbb{R}\to \mathbb{R}\) be given by \(f(x) = x^{2}\) for all \(x\in\mathbb{R}\).

Another example of a rule, is a so-called piecewise definition:

Let \(g:(-\infty,0)\cup(0,\infty)\to\mathbb{R}\) be given by \(g(x) = \begin{cases} \frac{1}{x} & x>0,\\ \frac{-1}{x} & x<0.\end{cases}\)

More on defining functions:

If the domain of a function is finite, then we can simply specify each output for a given input:

Let \(h:\{1,2,3\}\to\mathbb{R}\) be given by

\[h(1)=-3,\ h(2) = \pi,\ h(3)=0.\]

Showing that two functions are equal:

Suppose that we have two functions \(f:X\to Y\) and \(g:X\to Y\)

What does it mean to say that \(f=g\)?

For each \(x\in X\), \(f(x) = g(x)\).

A function is a special type of set; it is not an equation.

Sequences

Definition. Let \(X\) be a set. A sequence in \(X\) is a function \(x:\mathbb{N}\to X\).

Definition 4.1. A sequence \(\{x_{n}\}\) of points in a metric space \((S,d)\) is said to converge if there is a point \(p\in S\) with the following property:

For every \(\varepsilon>0\) there exists \(N\in\mathbb{N}\) such that \[d(x_{n},p)<\varepsilon\quad\text{for all }n\geq N.\]

In this case we say that \(\{x_{n}\}\) converges to \(p\), and we write \(x_{n}\to p\) as \(n\to\infty\), or just \(x_{n}\to p\). If there is no such \(p\in S\), then we say that \(\{x_{n}\}\) diverges or does not converge.

For a sequence \(x:\mathbb{N}\to X\) we write \(x_{n}\) instead of \(x(n)\), and the function is denoted \(\{x_{n}\}\), or \(\{x_{n}\}_{n=1}^{\infty}\).

For example, we could define a sequence in \(\mathbb{R}\) by saying the following:

Let \(\{x_{n}\}\) be given by \(x_{n} = \dfrac{n}{n+1}\quad\text{for each }n\in\mathbb{N}.\)

If \(\{x_{n}\}\) is a real-valued sequence, then \(d(x_{n},p)\) becomes \(|x_{n}-p|\).

Proposition. The sequence \(\{\frac{1}{n}\}\) converges to \(0\).

Proof. Let \(\varepsilon>0\) be given. Fix \(N\in\N\) such that \(N>\frac{1}{\varepsilon}.\) For \(n\geq N\), we see that \(n>\frac{1}{\varepsilon}\), and thus

\[\left|\frac{1}{n}-0\right| = \frac{1}{n}<\varepsilon.\]

\(\Box\)

Proposition. The sequence \(\{\frac{n}{n+1}\}\) converges to \(1\).

Proof. Let \(\varepsilon>0\) be given. Fix \(N\in\N\) such that \(N>\frac{1}{\varepsilon}.\) For \(n\geq N\), we see that \(n+1>\frac{1}{\varepsilon}\), and thus

\[\left|\frac{n}{n+1}-1\right| = \left|\frac{-1}{n+1}\right| = \frac{1}{n+1}<\varepsilon.\]

\(\Box\)

Proposition. \(\displaystyle{\frac{n}{2n+1}\to\frac{1}{2}}\).

Proof. Let \(\varepsilon>0\) be given. By the Archimedean Property there is a natural number \(N\in\N\) such that

\[N> \frac{1}{4}\left(\frac{1}{\varepsilon}-2\right).\]

Let \(n\in\N\) such that \(n\geq N\) be arbitrary. Our choice of \(N\) implies \(n> \frac{1}{4}\left(\frac{1}{\varepsilon}-2\right).\) This inequality is equivalent to

\[\frac{1}{4n+2}<\varepsilon.\]

Finally, for this \(n\) we have

\[\left|\frac{n}{2n+1}-\frac{1}{2}\right| = \left|\frac{2n}{4n+2}-\frac{2n+1}{4n+2}\right| = \left|\frac{-1}{4n+2}\right| = \frac{1}{4n+2}<\varepsilon.\ \Box\]

Scratch work. We wish to show that

\[\left|\frac{n}{2n+1}-\frac{1}{2}\right|<\varepsilon.\]

So, we simplify this inequality as follows:

\[\varepsilon>\left|\frac{n}{2n+1}-\frac{1}{2}\right| = \left|\frac{2n}{4n+2}-\frac{2n+1}{4n+2}\right| = \left|\frac{-1}{4n+2}\right| = \frac{1}{4n+2}\]

Now, we see

\[\frac{1}{4n+2}<\varepsilon\ \Leftrightarrow\ \frac{1}{\varepsilon}<4n+2 \ \Leftrightarrow\ \frac{1}{4}\left(\frac{1}{\varepsilon}-2\right)<n\]

Thus, it seems that we could take \(N\) to be a natural number greater than \(\frac{1}{4}\left(\frac{1}{\varepsilon}-2\right)\). Such a natural number exists by the Archimedean Property.
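The scratch work gives an explicit recipe for \(N\) in terms of \(\varepsilon\), and we can test that recipe numerically. The sketch below uses exact rational arithmetic; the function name `choose_N` is our own, and it returns the smallest natural number satisfying the inequality, which is only one of many valid choices.

```python
import math
from fractions import Fraction

def choose_N(eps):
    """Smallest natural number N with N > (1/eps - 2)/4, for eps > 0."""
    bound = (1 / Fraction(eps) - 2) / 4
    return max(1, math.floor(bound) + 1)

eps = Fraction(1, 100)
N = choose_N(eps)
print(N)  # 25

# Check |n/(2n+1) - 1/2| < eps for a range of n >= N.
ok = all(abs(Fraction(n, 2 * n + 1) - Fraction(1, 2)) < eps
         for n in range(N, N + 1000))
print(ok)  # True
```

Checking finitely many \(n\) does not prove convergence, but it is a quick way to catch an algebra mistake in the scratch work.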

Proposition. \(\displaystyle{\frac{2n^{2}+n+1}{n^2+1}\to 2}\).

Scratch work. We wish to show that

\[\left|\frac{2n^2+n+1}{n^2+1}-2\right|<\varepsilon.\]

So, we simplify this inequality as follows:

\[\varepsilon>\left|\frac{2n^2+n+1}{n^2+1}-2\right| = \left|\frac{n-1}{n^{2}+1}\right| = \frac{n-1}{n^{2}+1},\]

and hence we want

\[\frac{n-1}{n^{2}+1}<\varepsilon.\]

Instead, let's look for a sequence \(\{x_{n}\}\) which is more obviously converging to zero, but such that

\[\frac{n-1}{n^{2}+1}<x_{n}.\]

For example,

\[\frac{n-1}{n^{2}+1}<\frac{n}{n^{2}} = \frac{1}{n}.\]

So, it would suffice to take \(n\in\N\) large enough that

\[\frac{1}{n}<\varepsilon.\]

Proposition. \(\displaystyle{\frac{2n^{2}+n+1}{n^2+1}\to 2}\).

Proof. Let \(\varepsilon>0\) be given. Choose \(N\in\N\) such that \(N>\frac{1}{\varepsilon}\). Let \(n\geq N\). We first observe that \[\frac{1}{n}\leq\frac{1}{N}<\varepsilon.\]

Next, we observe that

\[\left|\frac{2n^{2}+n+1}{n^{2}+1}-2\right| = \left|\frac{n-1}{n^{2}+1}\right| = \frac{n-1}{n^{2}+1}<\frac{n}{n^{2}} = \frac{1}{n}<\varepsilon.\]

\(\Box\)

Proposition. \(\displaystyle{\frac{n^2+n-1}{n^2-2}\to 1}\).

Scratch work. We wish to show that

\[\left|\frac{n^2+n-1}{n^2-2}-1\right|<\varepsilon.\]

So, we simplify this inequality as follows:

\[\varepsilon>\left|\frac{n^2+n-1}{n^2-2}-1\right| = \left|\frac{n+1}{n^{2}-2}\right|\]

For \(n\geq 2\) we see that \(\dfrac{n+1}{n^{2}-2}>0\), and hence we want

\[\frac{n+1}{n^{2}-2}<\varepsilon.\]

Again, we look for a sequence which is bigger, but more obviously converging to zero:

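\[\frac{n+1}{n^{2}-2}\leq\frac{n+n}{n^{2}-2} = \frac{2n}{n^{2}-2}\leq\frac{2n}{n^{2}-n} = \frac{2}{n-1}\]

The first inequality uses that \(n\geq 1\). For the second inequality we suppose \(n\geq 2\), so that \(n^{2}-2\geq n^{2}-n>0\).

So, we will pick \(N\in\N\) such that \[N>1+\frac{2}{\varepsilon},\]

which guarantees that for all \(n\geq N\)

\[\frac{2}{n-1}<\varepsilon.\]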

Proposition. \(\displaystyle{\frac{n^2+n-1}{n^2-2}\to 1}\).

Proof. Let \(\varepsilon>0\) be given. Choose \(N\in\N\) such that

\[N>1+\frac{2}{\varepsilon}.\]

This implies

\[\frac{2}{N-1}<\varepsilon.\]

If \(n\geq N\) then we see that

\[\frac{2}{n-1}\leq\frac{2}{N-1}<\varepsilon.\]

Since \(N>1\) and \(N\in\N\), we deduce that \(n\geq 2\). Finally, we have

\[\left|\frac{n^2+n-1}{n^2-2}-1\right| = \frac{n+1}{n^{2}-2}\leq \frac{n+n}{n^{2}-2} = \frac{2n}{n^{2}-2}\leq \frac{2n}{n^{2}-n} = \frac{2}{n-1}<\varepsilon.\]

\(\Box\)

Proposition. Let \(\{\mathbf{x}_{n}\}\) and \(\{\mathbf{y}_{n}\}\) be sequences in \(\mathbb{R}^{n}\). If \(\mathbf{x}_{n}\to \mathbf{a}\) and \(\mathbf{y}_{n}\to\mathbf{b}\), then \(\mathbf{x}_{n}+\mathbf{y}_{n}\to \mathbf{a}+\mathbf{b}\).

Proof. Let \(\varepsilon>0\) be given. There exists \(N_{1}\in\mathbb{N}\) such that

\[\|\mathbf{x}_{n}-\mathbf{a}\|<\frac{\varepsilon}{4}\quad\text{for all }n\geq N_{1},\]

and there exists \(N_{2}\in\mathbb{N}\) such that

\[\|\mathbf{y}_{n}-\mathbf{b}\|<\frac{\varepsilon}{4}\quad\text{for all }n\geq N_{2}.\]

Let \(N=\max\{N_{1},N_{2}\}\) and let \(n\geq N\) be arbitrary. This implies \(n\geq N_{1}\) and \(n\geq N_{2}\), and thus

\[\|(\mathbf{x}_{n} +\mathbf{y}_{n}) - (\mathbf{a}+\mathbf{b})\| = \|\mathbf{x}_{n} - \mathbf{a} +\mathbf{y}_{n}-\mathbf{b}\| \leq \|\mathbf{x}_{n} - \mathbf{a}\| +\|\mathbf{y}_{n}-\mathbf{b}\|\]

\[<\frac{\varepsilon}{4}+\frac{\varepsilon}{4} = \frac{\varepsilon}{2}<\varepsilon.\]

\(\Box\)

Proposition. Let \(\{x_{n}\}\) and \(\{y_{n}\}\) be sequences in \(\mathbb{R}\). If \(x_{n}\to a\) and \(y_{n}\to b\), then \(x_{n}y_{n}\to ab\).

First presentation:

Hints:

- First show that there is some \(M\) such that \(|x_{n}|\leq M\) for all \(n\in\N\).

- Use the fact that for any \(n\in\N\) \[x_{n}y_{n} - ab = x_{n}y_{n}-x_{n}b+x_{n}b-ab = x_{n}(y_{n}-b)+(x_{n}-a)b.\]

Definition. Let \(\{x_{n}\}\) be a sequence in a metric space \((S,d)\), and let \(\{n_{k}\}\) be a strictly increasing sequence of natural numbers, that is, \(n_{k}\in\N\) for each \(k\in\N\), and

\[n_{1}<n_{2}<n_{3}<\cdots<n_{k}<\cdots.\]

The sequence \(\{x_{n_{k}}\}_{k=1}^{\infty}\) is called a subsequence of \(\{x_{n}\}\).

Theorem 4.5. A sequence \(\{x_{n}\}\) in a metric space \((S,d)\) converges to \(p\in S\) if and only if every subsequence of \(\{x_{n}\}\) converges to \(p\).

Proof. (In the text and on the board)

Subsequences

Example. Consider the sequence \(\{x_{n}\}\) where \(x_{n} = n^{2}\). If we list out the terms, we have \[1,4,9,16,\ldots,n^{2},\ldots\] If \(n_{k} = 3k\) for each \(k\in\N\), then \(\{n_{k}\}\) is a strictly increasing sequence of natural numbers, and \(\{x_{n_{k}}\}\) is the subsequence with terms

\[9,36,81,\ldots,9k^2,\ldots\]
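The example above can be reproduced in a few lines of Python. This is only an illustrative sketch; the function names `x` and `n_k` are chosen here to mirror the notation \(x_{n}\) and \(n_{k}\).

```python
def x(n):
    """The sequence x_n = n^2."""
    return n ** 2

def n_k(k):
    """The strictly increasing index sequence n_k = 3k."""
    return 3 * k

# First five terms of the subsequence x_{n_k}, for k = 1, ..., 5.
subsequence = [x(n_k(k)) for k in range(1, 6)]
print(subsequence)  # [9, 36, 81, 144, 225]
```

A subsequence is just the composition of the original sequence with the index sequence, which is why each term equals \(9k^{2}\).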

Definition 4.7. A sequence \(\{x_{n}\}\) in a metric space \((S,d)\) is called a Cauchy sequence if for every \(\varepsilon>0\) there exists \(N\in\N\) such that

\[d(x_{n},x_{m})<\varepsilon\quad\text{for all }m\geq N\text{ and }n\geq N.\]
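To see the definition in action, consider the sequence \(x_{n} = 1/n\) in \(\mathbb{R}\), an example assumed here for illustration. For \(m,n\geq N\) we have \(|1/n-1/m|<1/N\), so any \(N\) with \(1/N<\varepsilon\) works. The sketch below, with hypothetical helper names, tests this on a finite window of indices only.

```python
import math
from itertools import product

def cauchy_N(eps):
    """For x_n = 1/n: a natural number N with 1/N < eps."""
    return math.floor(1 / eps) + 1

def is_cauchy_on_window(eps, width=200):
    """Check |x_n - x_m| < eps for all n, m in {N, ..., N + width - 1}."""
    N = cauchy_N(eps)
    idx = range(N, N + width)
    return all(abs(1 / n - 1 / m) < eps for n, m in product(idx, idx))

for eps in (0.5, 0.01, 0.003):
    print(eps, cauchy_N(eps), is_cauchy_on_window(eps))
```

Note that the condition involves pairs of terms of the sequence only; no candidate limit appears anywhere in the check.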

Theorem 4.6. If \(\{x_{n}\}\) is a convergent sequence in a metric space \((S,d)\), then \(\{x_{n}\}\) is a Cauchy sequence.

Proof. (In the text and on the board)

Cauchy Sequences

Theorem 4.8. If \(\{x_{n}\}\) is a Cauchy sequence in \(\mathbb{R}^{k}\), then \(\{x_{n}\}\) converges.

Proof. (In the text and on the board)

Key Takeaway: In \(\mathbb{R}^{k}\), a sequence converges (to something) if and only if it is a Cauchy sequence.

Limits of functions

Suppose \((S,d_{S})\) and \((T,d_{T})\) are metric spaces, and \(A\subset S\). Let \(f:A\to T\) be a function with domain \(A\) and codomain \(T\).

For example, we could have \(S=\mathbb{R}^{2}\) and \(T=\mathbb{R}\) with their usual metrics. Then we define the function \(f: B(\mathbf{0};1)\to\mathbb{R}\) by setting

\[f(x,y) = \frac{1-x^2}{1-x^{2}-y^{2}}\quad\text{for all }(x,y)\in B(\mathbf{0};1).\]

Definition 4.11. If \(p\) is an accumulation point of \(A\) and \(b\in T\), then

\[\lim_{x\to p}f(x) = b\]

is defined to mean the following: For every \(\varepsilon>0\), there exists \(\delta>0\) such that

\[d_{T}(f(x),b)<\varepsilon\quad\text{for all }x\in (B(p;\delta)\setminus \{p\})\cap A.\]

In this case, we say that "\(b\) is the limit of \(f(x)\) as \(x\) tends to \(p\)."

In the most common special cases, the statement

\[\lim_{x\to p}f(x) = b\]

reads as follows.

- For \(f:\mathbb{R}\to\mathbb{R}\): for every \(\varepsilon>0\) there exists \(\delta>0\) such that \[\text{if }0<|x-p|<\delta,\text{ then }|f(x) - b|<\varepsilon.\]

- For \(f:\mathbb{R}^{n}\to\mathbb{R}^{m}\): for every \(\varepsilon>0\) there exists \(\delta>0\) such that \[\text{if }0<\|x-p\|<\delta,\text{ then }\|f(x) - b\|<\varepsilon.\]

- For \(f:[c,d]\to\mathbb{R}\): for every \(\varepsilon>0\) there exists \(\delta>0\) such that \[\text{if }0<|x-p|<\delta\text{ and }x\in[c,d],\text{ then }|f(x) - b|<\varepsilon.\]

Proposition. \(\displaystyle{\lim_{x\to 0}x = 0}.\)

Scratch work. Suppose \(\varepsilon>0\). We wish to find \(\delta>0\) such that if \[0<|x-0|<\delta,\] then \[|x-0|<\varepsilon.\] We should take \(\delta=\varepsilon\).

Proof. Let \(\varepsilon>0\) be given. Set \(\delta=\varepsilon\). For \(x\in\mathbb{R}\) such that \(|x-0|<\delta\), it is clear that \(|x-0|<\varepsilon.\) \(\Box\)

Proposition. \(\displaystyle{\lim_{x\to 0}x^2 = 0}.\)

Scratch work. Suppose \(\varepsilon>0\). We wish to find \(\delta>0\) such that if \[0<|x-0|<\delta,\] then \[|x^2-0|<\varepsilon.\] Since \(|x^{2}|=x^{2}\) it is enough to take \(\delta=\sqrt{\varepsilon}\).

Proof. Let \(\varepsilon>0\) be given. Set \(\delta=\sqrt{\varepsilon}\). For \(x\in\mathbb{R}\) such that \(|x-0|<\delta\), we see that

\[|x^{2} - 0| = |x|^{2}<\delta^{2} = \varepsilon.\ \Box\]

Proposition. For \(a\in\mathbb{R}\), \(\displaystyle{\lim_{x\to a}x^2 = a^2}.\)

Scratch work. Suppose \(\varepsilon>0\). We wish to find \(\delta>0\) such that if \[0<|x-a|<\delta,\] then \[|x^2-a^2|<\varepsilon.\] We can simplify the expression that we are trying to bound:

\[|x^{2}-a^{2}| = |x-a||x+a|.\]

As long as \(|x-a|<1\), which we can guarantee by choosing \(\delta\leq 1\), then by the triangle inequality \[|x+a|\leq |x-a|+|2a|<1+2|a|.\]

Thus, it should be enough to take \[\delta=\min\left\{1,\frac{\varepsilon}{1+2|a|}\right\}.\]

Proof. Let \(\varepsilon>0\) be given. Set \[\delta=\min\left\{1,\frac{\varepsilon}{1+2|a|}\right\}.\]

Let \(x\in\mathbb{R}\) satisfy \(0<|x-a|<\delta\). Since \(\delta\leq 1\), by the triangle inequality

\[|x+a|\leq |x-a|+|2a|<\delta+|2a|\leq 1+2|a|.\]

Since \(\delta\leq \frac{\varepsilon}{1+2|a|}\) we have

\[|x^{2}-a^{2}| = |x-a||x+a|\leq \frac{\varepsilon}{1+2|a|}|x+a|<\frac{\varepsilon}{1+2|a|}(1+2|a|) = \varepsilon. \ \Box\]
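The choice \(\delta=\min\{1,\varepsilon/(1+2|a|)\}\) can also be tested numerically. The sketch below uses hypothetical helper names and samples finitely many points of the punctured interval, so it is a sanity check under those assumptions and not a substitute for the proof.

```python
def delta_for_square(a, eps):
    """The delta chosen in the proof that x^2 -> a^2 as x -> a."""
    return min(1.0, eps / (1 + 2 * abs(a)))

def check_square_limit(a, eps, samples=2000):
    """Test |x^2 - a^2| < eps at sample points with 0 < |x - a| < delta."""
    d = delta_for_square(a, eps)
    for i in range(samples):
        # Midpoints of a uniform grid, strictly inside (a - d, a + d).
        x = a - d + 2 * d * (i + 0.5) / samples
        if x != a and not abs(x * x - a * a) < eps:
            return False
    return True

for a in (-3.0, 0.0, 0.5, 10.0):
    for eps in (5.0, 1.0, 1e-3):
        print(a, eps, delta_for_square(a, eps), check_square_limit(a, eps))
```

Notice how \(\delta\) shrinks as \(|a|\) grows: unlike the case \(a=0\), the same \(\delta\) does not work for every point \(a\).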

Proposition. For \(a\in\mathbb{R}\setminus\{0\}\), \(\displaystyle{\lim_{x\to a}\dfrac{1}{x} = \dfrac{1}{a}}.\)

Proof. Let \(\varepsilon>0\) be given. Set

\[\delta = \min\left\{\frac{1}{2}|a|,\frac{a^{2}\varepsilon}{4}\right\}.\]

Take \(x\in\mathbb{R}\setminus\{0\}\) such that \(0<|x-a|<\delta.\) By the reverse triangle inequality, we have

\[|x|\geq |a| - |x-a|>|a|-\delta\geq |a| - \frac{1}{2}|a| = \frac{1}{2}|a|.\]

Using this, we have

\[\left|\frac{1}{x}-\frac{1}{a}\right| = \frac{|x-a|}{|a||x|}<\frac{|x-a|}{|a|\frac{1}{2}|a|} = \frac{2}{a^{2}}|x-a| < \frac{2}{a^{2}}\delta\leq \frac{2}{a^{2}}\frac{a^{2}\varepsilon}{4} = \frac{\varepsilon}{2}<\varepsilon.\ \Box\]
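No scratch work is shown for this proposition, so here is one way the choice of \(\delta\) could have been found, offered as a sketch in the style of the earlier scratch work. For \(x\neq 0\) we have

\[\left|\frac{1}{x}-\frac{1}{a}\right| = \frac{|x-a|}{|a||x|},\]

so we need to keep \(|x|\) away from zero. If \(|x-a|<\frac{1}{2}|a|\), which we can guarantee by choosing \(\delta\leq\frac{1}{2}|a|\), then \(|x|>\frac{1}{2}|a|\) by the reverse triangle inequality, and therefore

\[\frac{|x-a|}{|a||x|}\leq\frac{2}{a^{2}}|x-a|.\]

The right-hand side is less than \(\varepsilon\) whenever \(|x-a|<\frac{a^{2}\varepsilon}{2}\). The proof uses the smaller value \(\frac{a^{2}\varepsilon}{4}\), which works just as well and leaves extra room.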

Theorem 4.12. Using the notation from Definition 4.11, if \(p\) is an accumulation point of \(A\) and \(b\in T\), then

\[\lim_{x\to p}f(x) = b\]

if and only if the sequence \(\{f(x_{n})\}\) converges to \(b\) for every sequence \(\{x_{n}\}\) in \(A\setminus\{p\}\) which converges to \(p\).
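One common use of Theorem 4.12 is to show that a limit does not exist: it is enough to find two sequences converging to \(p\) along which \(f\) has different limits. The sketch below illustrates this with \(f(x)=x/|x|\) on \(A=\mathbb{R}\setminus\{0\}\) and \(p=0\). This function and both sequences are examples assumed here, not taken from the notes.

```python
def f(x):
    """f(x) = x / |x| on R minus {0}; chosen for illustration."""
    return x / abs(x)

# Two sequences in A = R minus {0}, both converging to p = 0.
right = [f(1 / n) for n in range(1, 8)]    # along x_n = 1/n
left = [f(-1 / n) for n in range(1, 8)]    # along x_n = -1/n

print(right)
print(left)
```

The values along the first sequence are constantly \(1\), and along the second constantly \(-1\). No single \(b\) can be the limit of both, so by the theorem \(\lim_{x\to 0}f(x)\) does not exist.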

Theorem 4.14. If

\[\lim_{x\to p}f(x) = \mathbf{a}\quad\text{and}\quad\lim_{x\to p}g(x) = \mathbf{b},\]

then

a) \(\displaystyle{\lim_{x\to p}[f(x)+g(x)] = \mathbf{a}+\mathbf{b}}\)

b) \(\displaystyle{\lim_{x\to p}\lambda f(x) = \lambda\mathbf{a}}\) for any scalar \(\lambda\).

Now, suppose that \(T=\mathbb{R}^{k}\) with the usual metric. (Of particular interest is the case of \(k=1\).)

c) \(\displaystyle{\lim_{x\to p}f(x)\cdot g(x) = \mathbf{a}\cdot\mathbf{b}}\)

d) \(\displaystyle{\lim_{x\to p}\|f(x)\| = \|\mathbf{a}\|}\).

Proposition. Let \(A\subset \R^{k}\), and let \(f,g:A\to\mathbb{R}^{n}.\) If \[\lim_{x\to p}f(x) = \mathbf{a}\quad\text{and}\quad\lim_{x\to p}g(x) = \mathbf{b},\]

then \[\lim_{x\to p}f(x)\cdot g(x) = \mathbf{a}\cdot\mathbf{b}.\]

Second presentation:

Hints:

- First, suppose \(A=S=\mathbb{R}\) with the usual metric and \(n=1\). The proof in this case will be quite similar to the first presentation, namely, you should use the following observations:

\[|f(x)g(x) - ab| = |f(x)g(x) - f(x)b+f(x)b-ab|\]

\[\leq |f(x)g(x)-f(x)b|+|f(x)b-ab|\]

\[= |f(x)||g(x)-b|+|b||f(x)-a|\]

- Show that for some \(r>0\) the set \(\{f(x): x\in B(p;r)\setminus\{p\}\}\) is bounded.

Continuous Functions

Definition 4.15. A function \(f:S\to T\), where \(S\) and \(T\) are metric spaces, is said to be continuous at a point \(p\) in \(S\) if for every \(\varepsilon>0\) there exists \(\delta>0\) such that

\[d_{T}(f(x),f(p))<\varepsilon\quad\text{for all }x\text{ satisfying }d_{S}(x,p)<\delta.\]

If \(f\) is continuous at all \(p\) in some set \(A\subset S\), then we say that \(f\) is continuous on \(A\).

Definition 4.15 (For functions from \(\mathbb{R}^{m}\to\mathbb{R}^{k}\)). A function \(f:\mathbb{R}^{m}\to \mathbb{R}^{k}\) is said to be continuous at a point \(\mathbf{p}\) in \(\mathbb{R}^{m}\) if for every \(\varepsilon>0\) there exists \(\delta>0\) such that

\[\|f(\mathbf{x})-f(\mathbf{p})\|<\varepsilon\quad\text{for all }\mathbf{x}\text{ satisfying }\|\mathbf{x}-\mathbf{p}\|<\delta.\]

Definition 4.15 (For functions from \(\mathbb{R}\to\mathbb{R}\)). A function \(f:\mathbb{R}\to \mathbb{R}\) is said to be continuous at a point \(p\) in \(\mathbb{R}\) if for every \(\varepsilon>0\) there exists \(\delta>0\) such that

\[|f(x)-f(p)|<\varepsilon\quad\text{for all }x \text{ satisfying }|x-p|<\delta.\]

Proposition. For any \(p\in\mathbb{R}\), the function \(f:\mathbb{R}\to\mathbb{R}\) given by \(f(x) = x\) is continuous at \(p\). Thus \(f\) is continuous on \(\mathbb{R}\).

Proof. Let \(p\in\mathbb{R}\) and \(\varepsilon>0\) be given. Set \(\delta=\varepsilon\), and let \(x\in(p-\delta,p+\delta)\). Observe that \[|f(x) - f(p)| = |x-p|<\delta = \varepsilon.\ \Box\]

Proposition. For any \(p\in\mathbb{R}\), the function \(f:\mathbb{R}\to\mathbb{R}\) given by \(f(x) = 1\) is continuous at \(p\). Thus \(f\) is continuous on \(\mathbb{R}\).

Proof. Let \(p\in\mathbb{R}\) and \(\varepsilon>0\) be given. Set \(\delta=1\), then for any \(x\in(p-\delta,p+\delta)\) we have

\[|f(x) - f(p)| = |1-1|=0<\varepsilon.\ \Box\]

Corollary. Let \(a_{0},a_{1},\ldots,a_{n}\) be real numbers. Define the function \(f:\mathbb{R}\to\mathbb{R}\) by

\[f(x) = a_{0}+a_{1}x+a_{2}x^{2} + \cdots + a_{n}x^{n}\]

for each \(x\in\mathbb{R}\). The function \(f\) is continuous on \(\mathbb{R}\). Such functions are called polynomials. The degree of a polynomial is the largest \(k\) such that \(a_{k}\neq 0\).

Proof. Let \(p\in\mathbb{R}\) be arbitrary. Let \(f_{0}:\mathbb{R}\to\mathbb{R}\) and \(f_{1}:\mathbb{R}\to\mathbb{R}\) be given by

\[f_{0}(x) = 1\quad\text{and}\quad f_{1}(x) = x.\]

We have already seen that \(f_{0}\) and \(f_{1}\) are continuous on \(\mathbb{R}\), and hence

\[\lim_{x\to p}f_{0}(x) = 1\quad\text{and}\quad \lim_{x\to p}f_{1}(x) = p.\]

If \(f\) is a degree 1 polynomial, that is, \(f(x) = a_{0}+a_{1}x\), then we see that \(f(x) = a_{0}f_{0}(x)+a_{1}f_{1}(x)\). By Theorem 4.14 we have

\[\lim_{x\to p}f(x) = \lim_{x\to p}a_{0}f_{0}(x)+\lim_{x\to p}a_{1}f_{1}(x) = a_{0}\lim_{x\to p}f_{0}(x)+a_{1}\lim_{x\to p}f_{1}(x) \]

\[= a_{0}\cdot 1 + a_{1}\cdot p = f(p).\]

Thus, we have shown the desired result for polynomials of degree 1. Now assume it is true for polynomials of degree \(\leq n\). Let

\[f(x) = a_{0}+a_{1}x+a_{2}x^{2}+\cdots+a_{n}x^{n}+a_{n+1}x^{n+1}.\]

Set

\[g(x) = a_{n+1}x^{n}\quad\text{and}\quad h(x) = f(x) - xg(x).\]

Both of these are polynomials of degree \(\leq n\), and hence we have

\[\lim_{x\to p}g(x) = g(p)\quad\text{and}\quad \lim_{x\to p}h(x) = h(p) = f(p)-p\cdot g(p).\]

By Theorem 4.14 (c) we also have

\[\lim_{x\to p}xg(x) = \left(\lim_{x\to p}x\right)\left(\lim_{x\to p}g(x)\right) = pg(p) = a_{n+1}p^{n+1}.\]

Finally, by Theorem 4.14 (a) we have

\[\lim_{x\to p}f(x) = \lim_{x\to p}[h(x) + xg(x)] = \lim_{x\to p}h(x) + \lim_{x\to p}xg(x) \]

\[= f(p)-p\cdot g(p) + pg(p) = f(p).\ \Box\]
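The corollary says that \(f(x)\) approaches \(f(p)\) as \(x\) approaches \(p\). A short numerical illustration follows, as a sketch only. The polynomial \(1-2x+3x^{3}\) and the point \(p=2\) are hypothetical choices. The evaluation uses Horner's rule, which builds the polynomial from repeated "multiply by \(x\), then add a constant" steps; this is related to, but not the same as, the decomposition \(f(x) = h(x)+xg(x)\) used in the induction.

```python
def horner(coeffs, x):
    """Evaluate a_0 + a_1 x + ... + a_n x^n, with coeffs = [a_0, ..., a_n]."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * x + a
    return result

coeffs = [1, -2, 0, 3]   # hypothetical example: 1 - 2x + 3x^3
p = 2.0

# |f(p + h) - f(p)| for h = 10^-1, ..., 10^-6.
diffs = [abs(horner(coeffs, p + 10.0 ** -k) - horner(coeffs, p))
         for k in range(1, 7)]
for k, d in zip(range(1, 7), diffs):
    print(f"h = 1e-{k}: |f(p+h) - f(p)| = {d:.3e}")
```

The differences shrink toward zero as \(h\) does, consistent with \(\lim_{x\to p}f(x) = f(p)\).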

Theorem 4.31. Let \(S\subset\mathbb{R}\) be an interval, and let \(f:S\to\mathbb{R}\). If \(f\) is continuous at \(c\in S\) and \(f(c)\neq 0\), then there exists \(\delta>0\) such that \(f(x)\) has the same sign as \(f(c)\) for all \(x\in(c-\delta,c+\delta)\cap S\).

Proof. Assume without loss of generality that \(f(c)>0\). Since \(f\) is continuous at \(c\), there exists \(\delta>0\) such that

\[|f(x)-f(c)|<\frac{f(c)}{2}\quad\text{for all }x\in (c-\delta,c+\delta)\cap S.\]

Hence, for any \(x\in(c-\delta,c+\delta)\cap S\) we have

\[f(x)-f(c)>-\frac{f(c)}{2}\]

and thus

\[f(x)>\frac{f(c)}{2}>0.\ \Box\]

This theorem is sometimes called "the bump theorem." Can you see why?
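The theorem can be illustrated numerically, as a sketch under stated assumptions: the function \(f(x)=x^{2}\) and the point \(c=1\) are chosen here for illustration, and \(\delta\) is taken from the earlier proposition on \(\lim_{x\to a}x^{2}=a^{2}\) with \(\varepsilon=f(c)/2\). Only finitely many sample points are checked.

```python
def f(x):
    """Example function f(x) = x^2, chosen for illustration."""
    return x * x

c = 1.0
eps = f(c) / 2                              # the epsilon used in the proof
delta = min(1.0, eps / (1 + 2 * abs(c)))    # delta from the x^2 limit proposition

def sample_points(c, delta, count=1000):
    """Midpoints of a uniform grid, all strictly inside (c - delta, c + delta)."""
    return [c - delta + 2 * delta * (i + 0.5) / count for i in range(count)]

values = [f(x) for x in sample_points(c, delta)]
print(delta, min(values) > f(c) / 2)
```

Every sampled value stays above \(f(c)/2\), so the graph of \(f\) remains on the same side of the axis as \(f(c)\) throughout the interval.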