AI Fairness 360 Toolkit: Identify & Remove Bias From AI Models

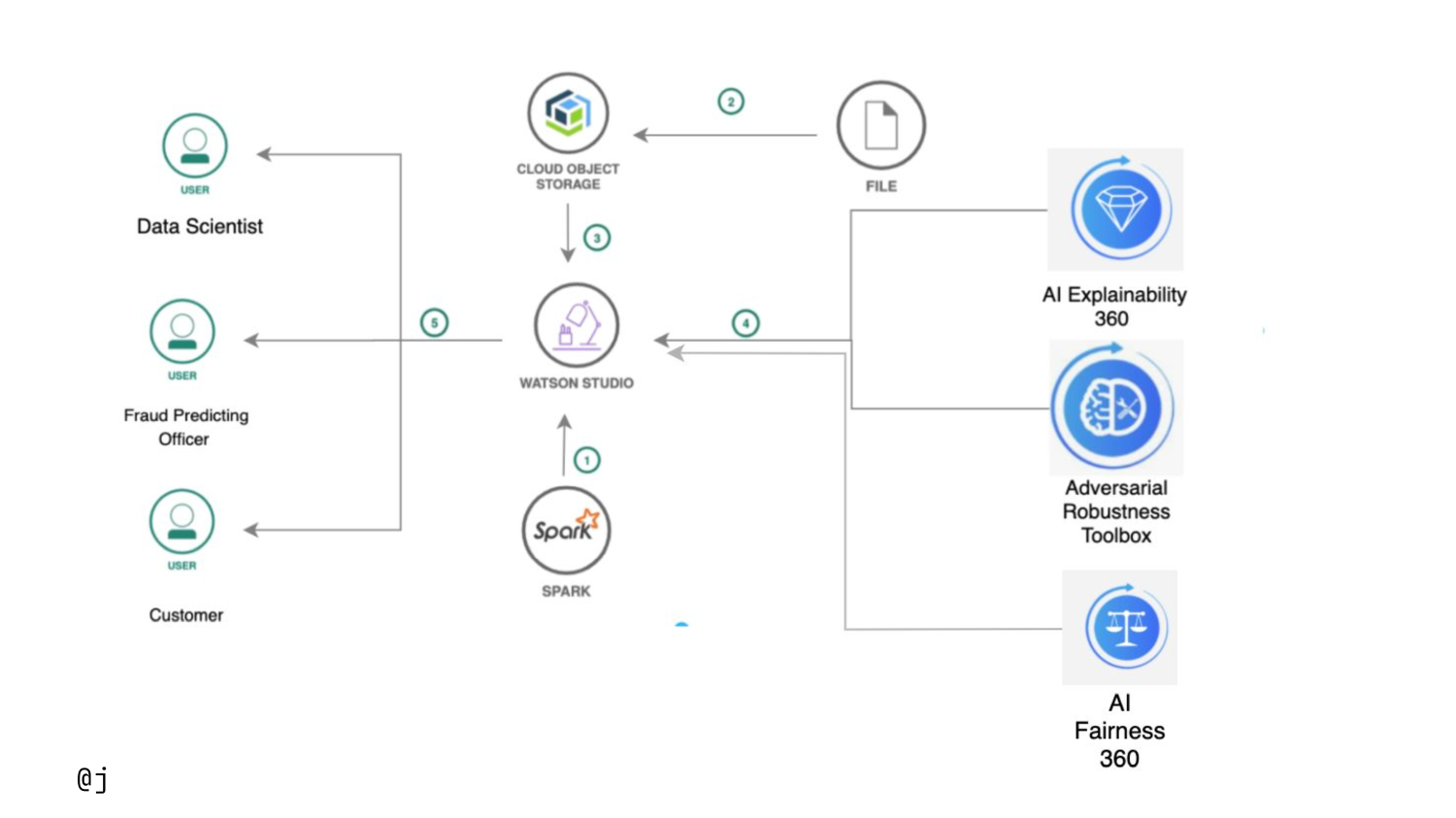

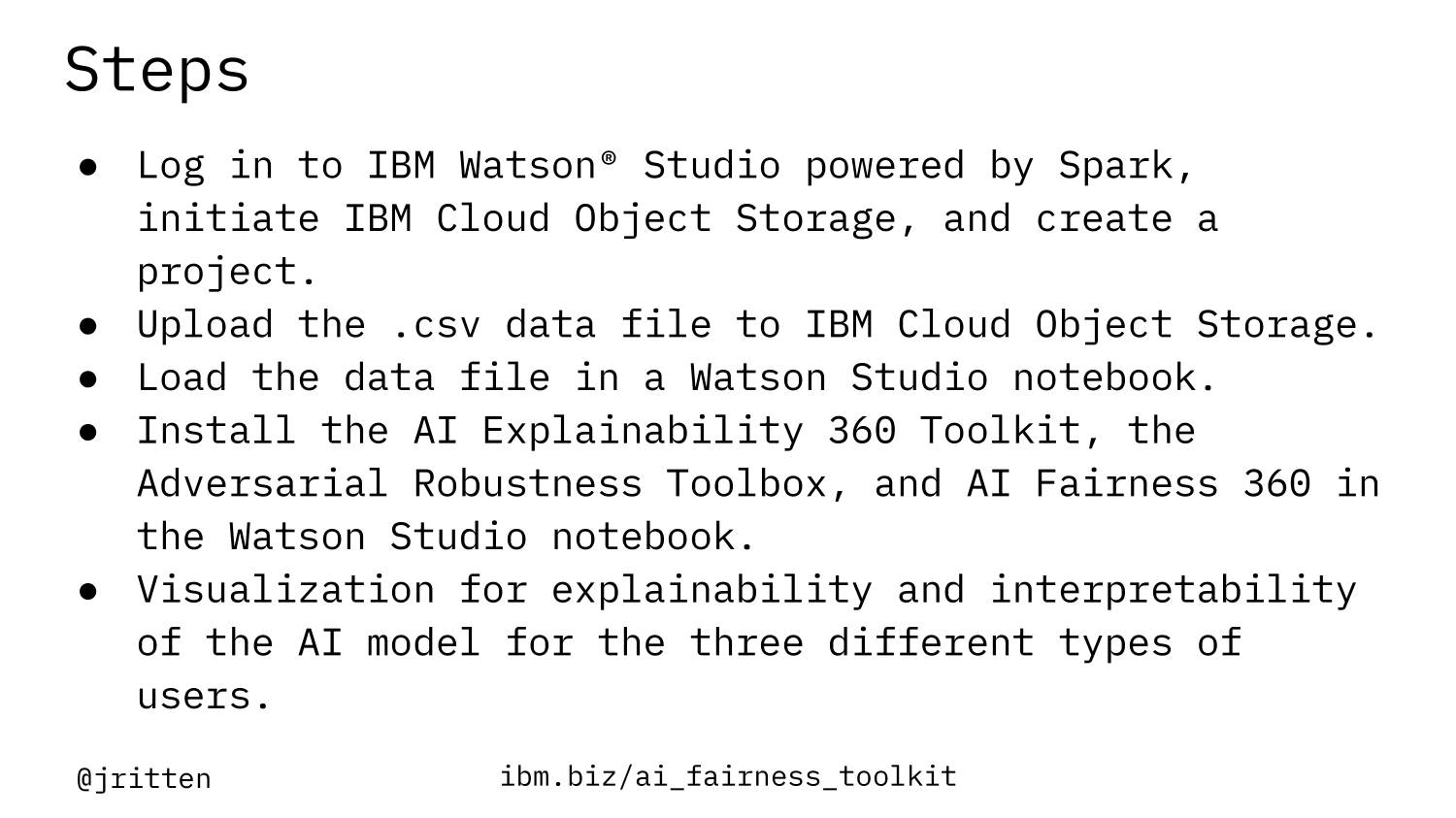

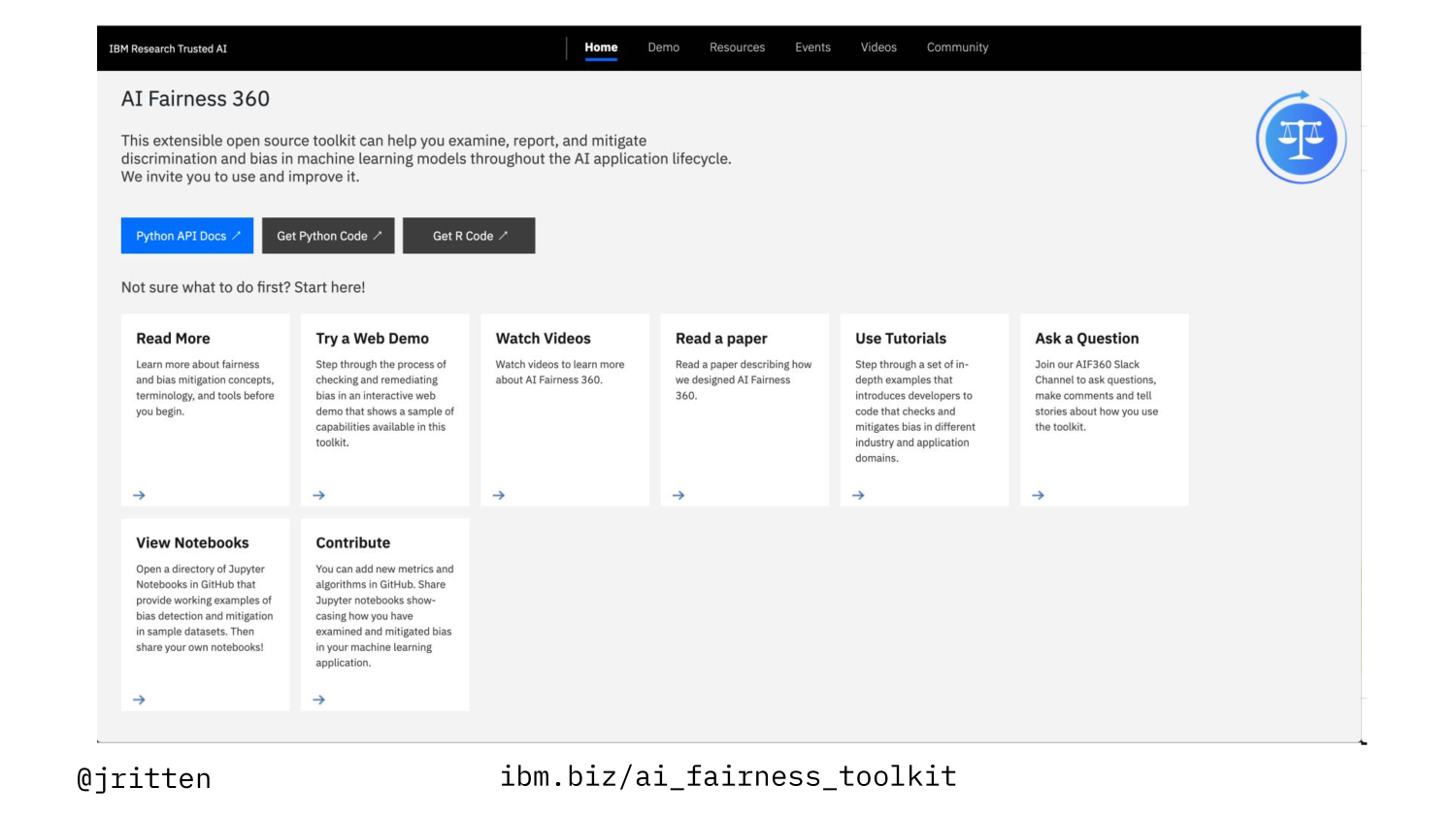

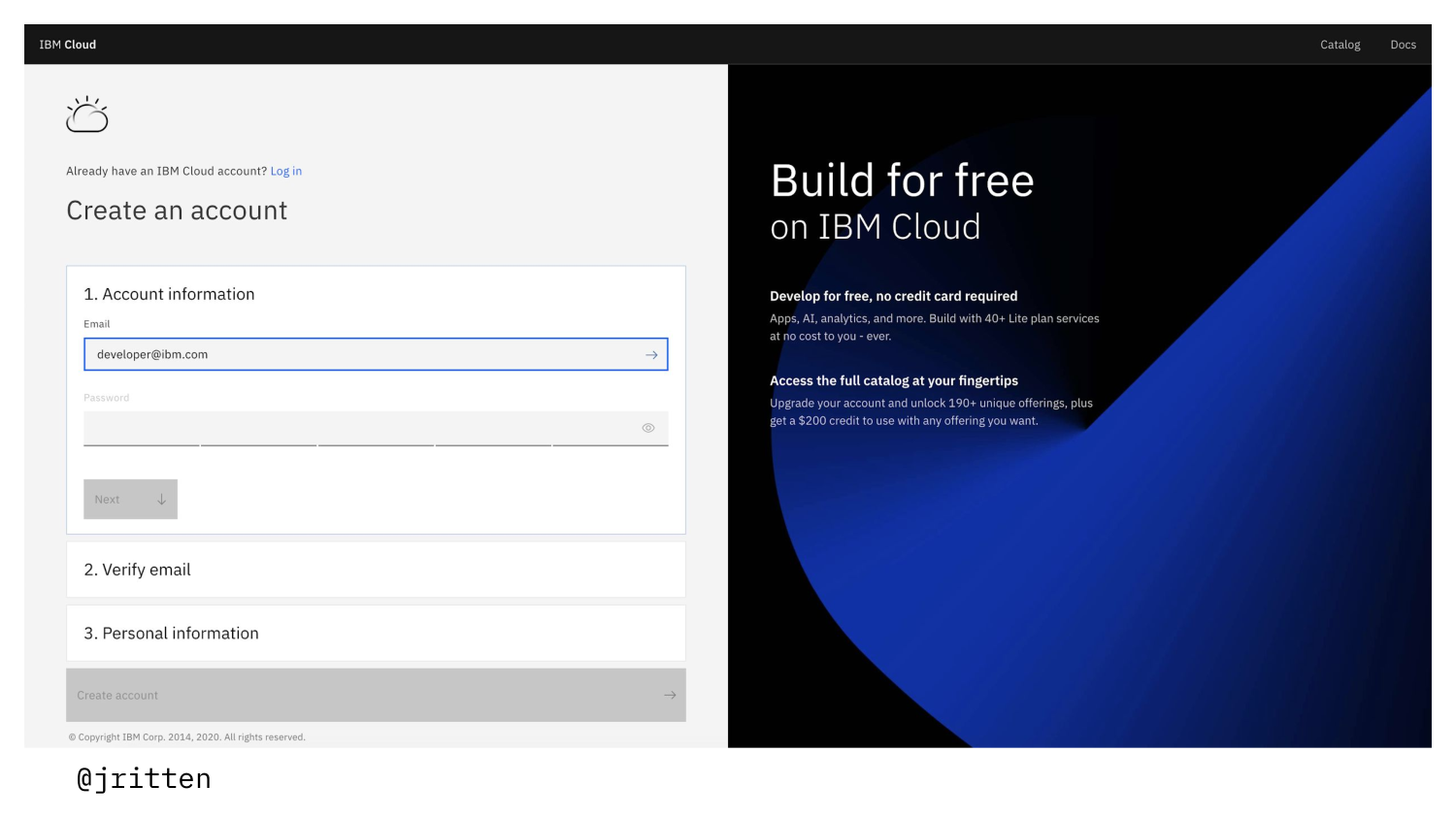

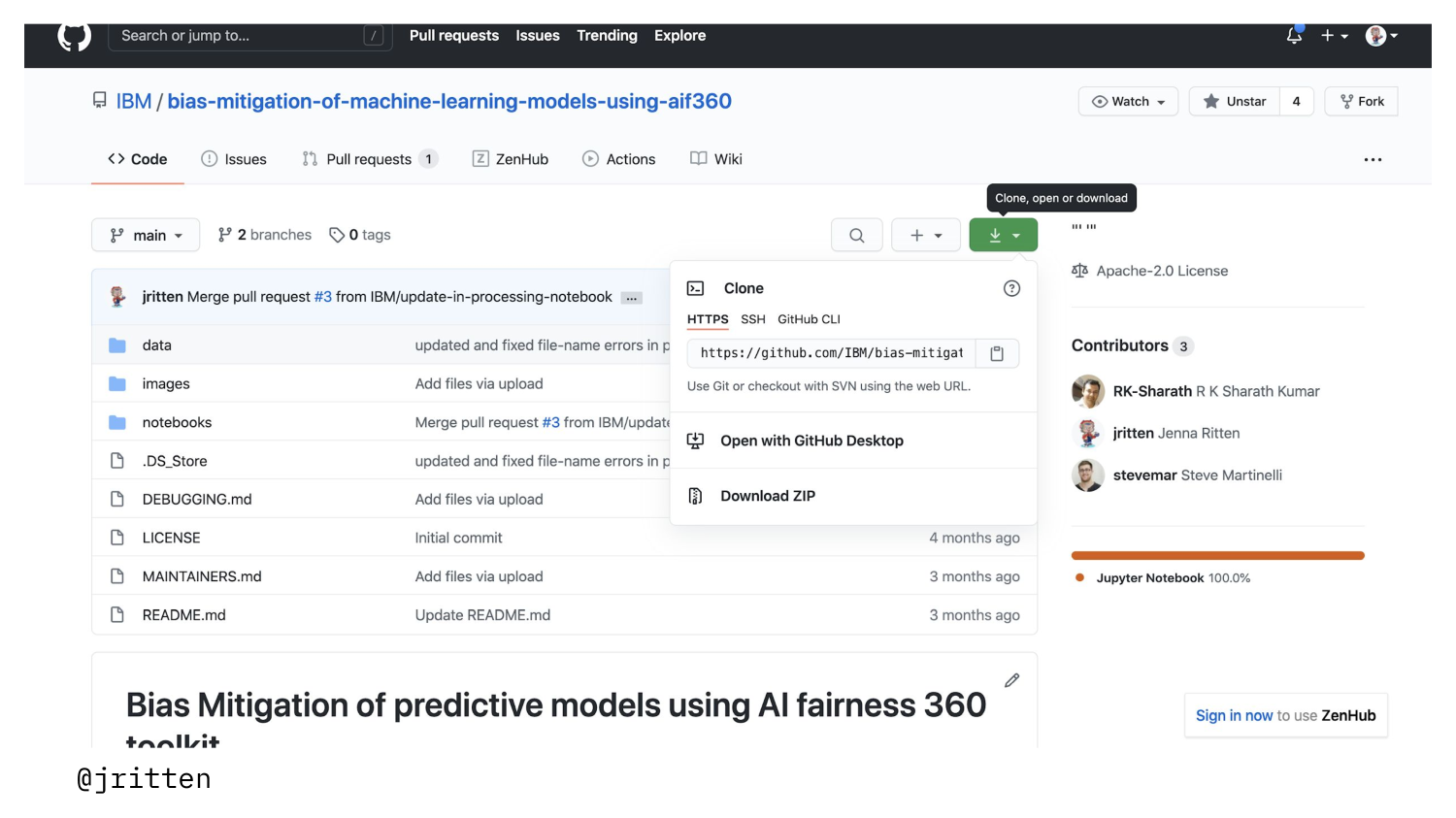

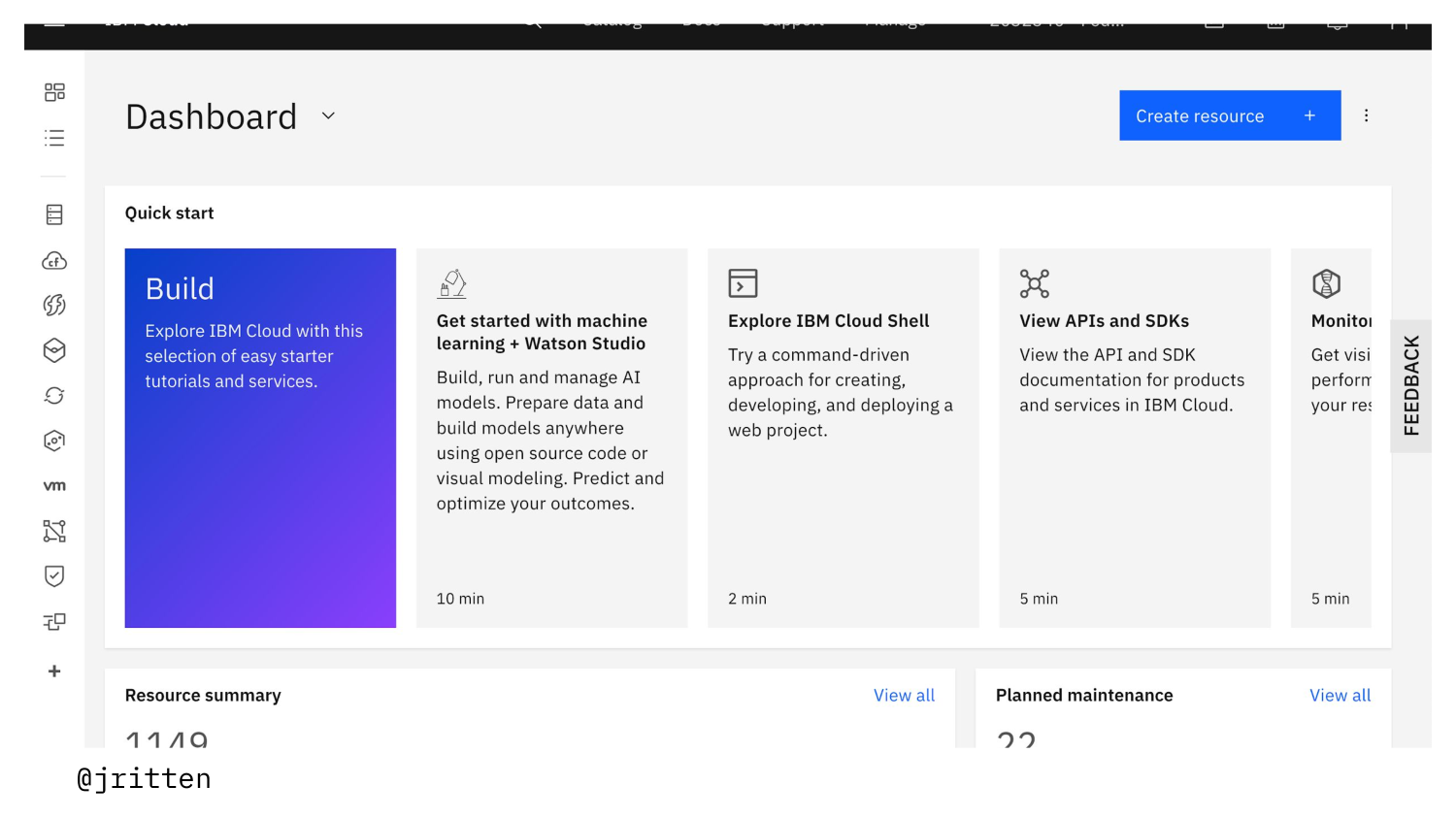

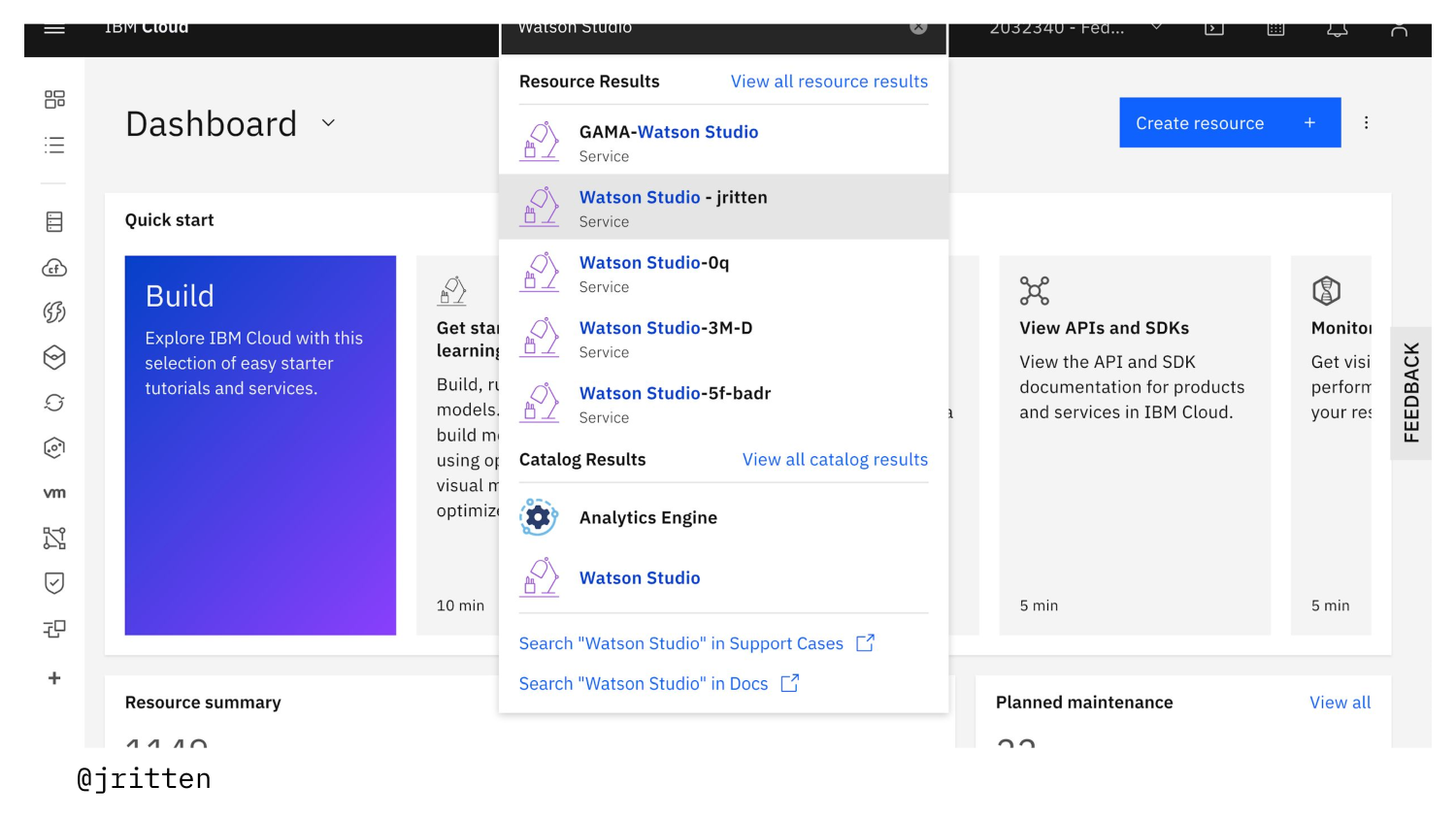

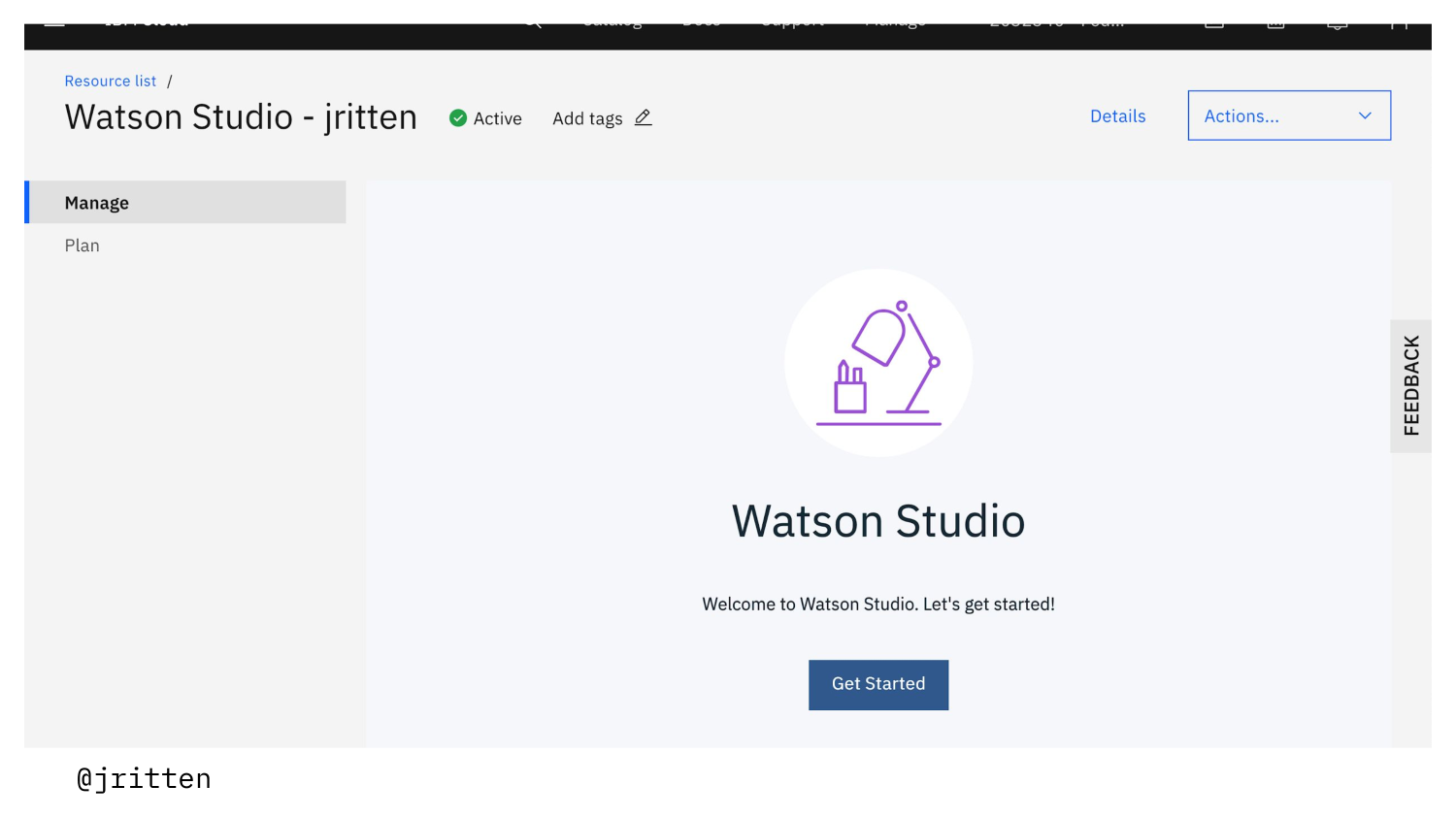

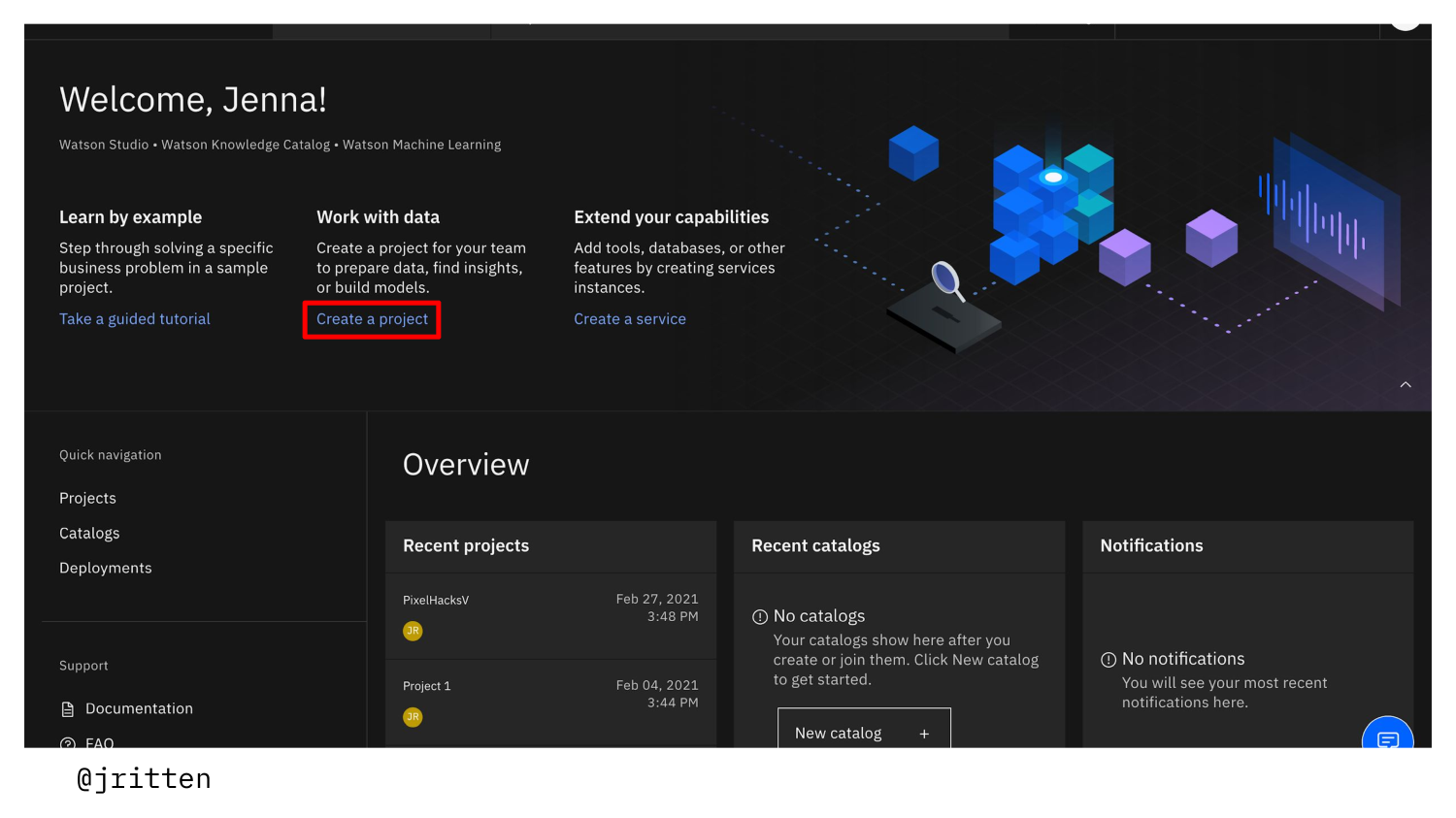

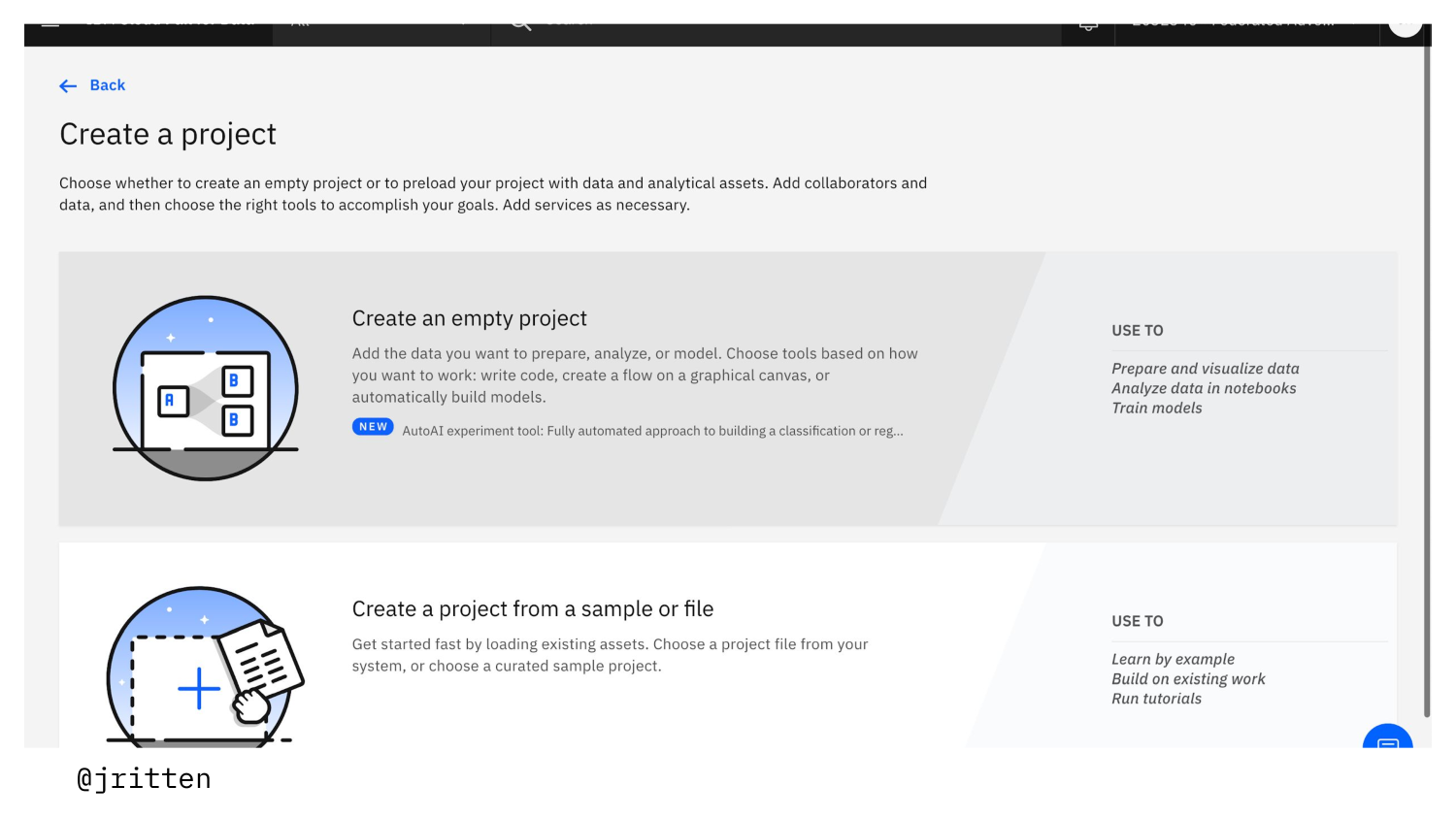

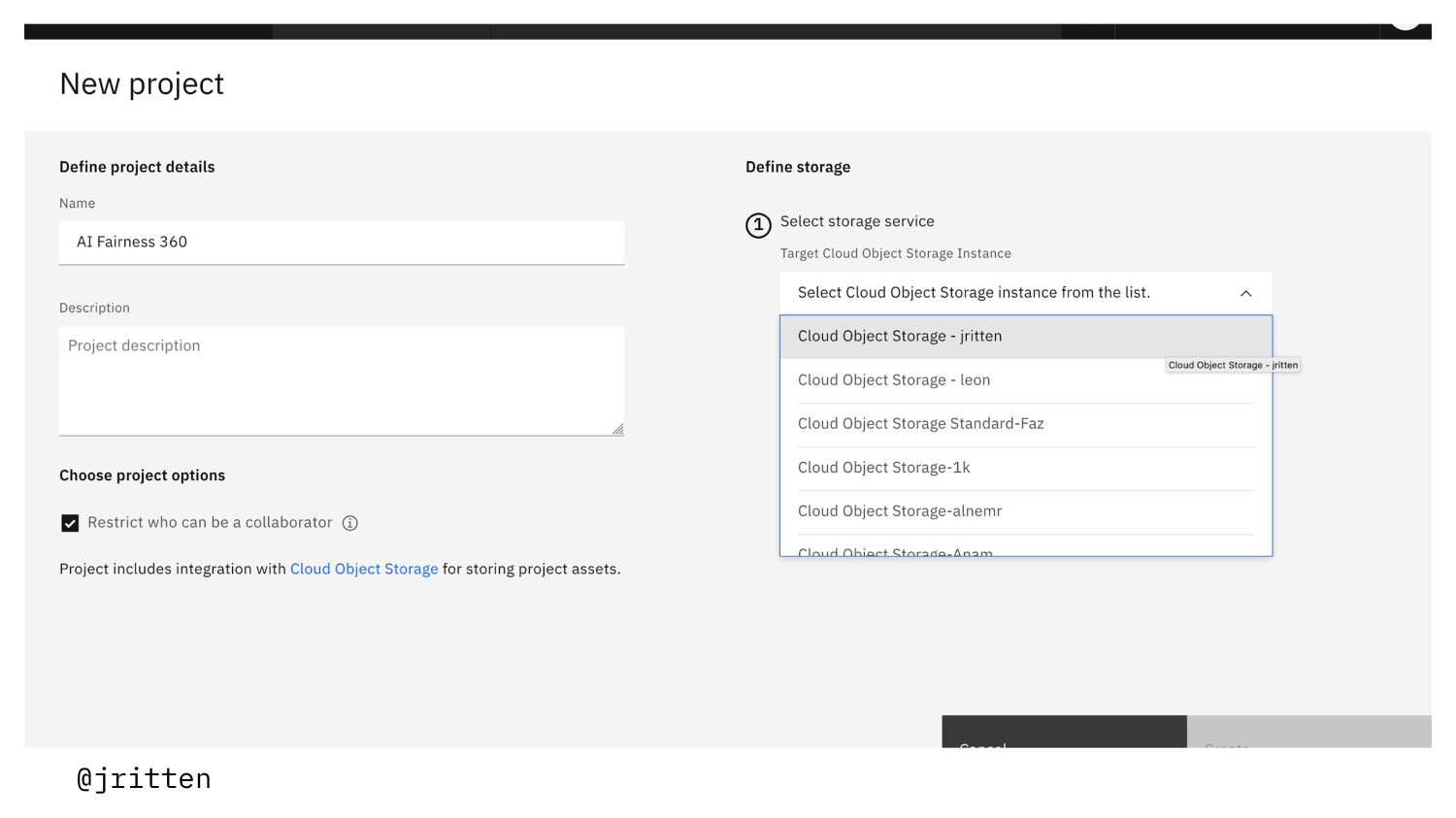

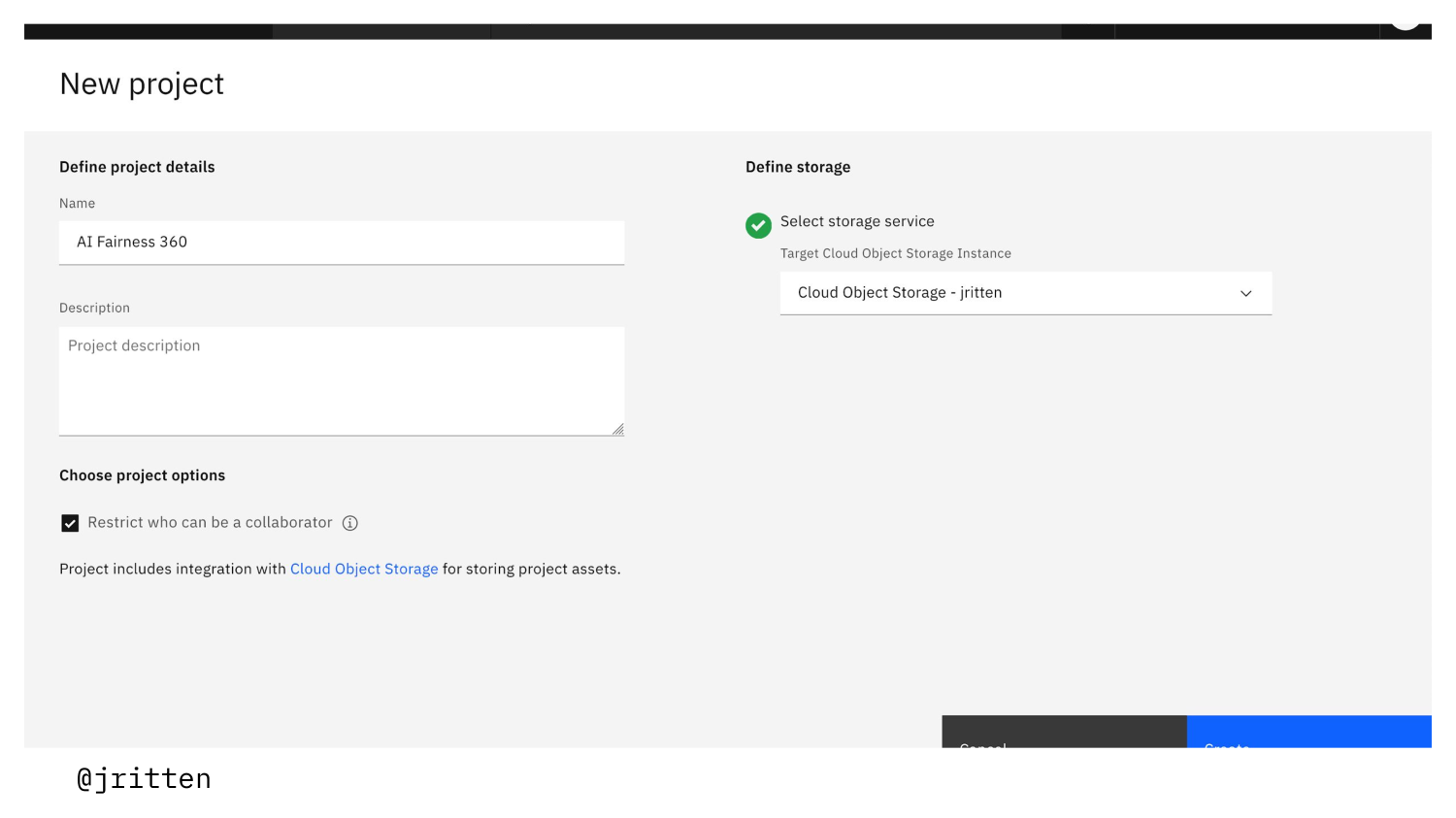

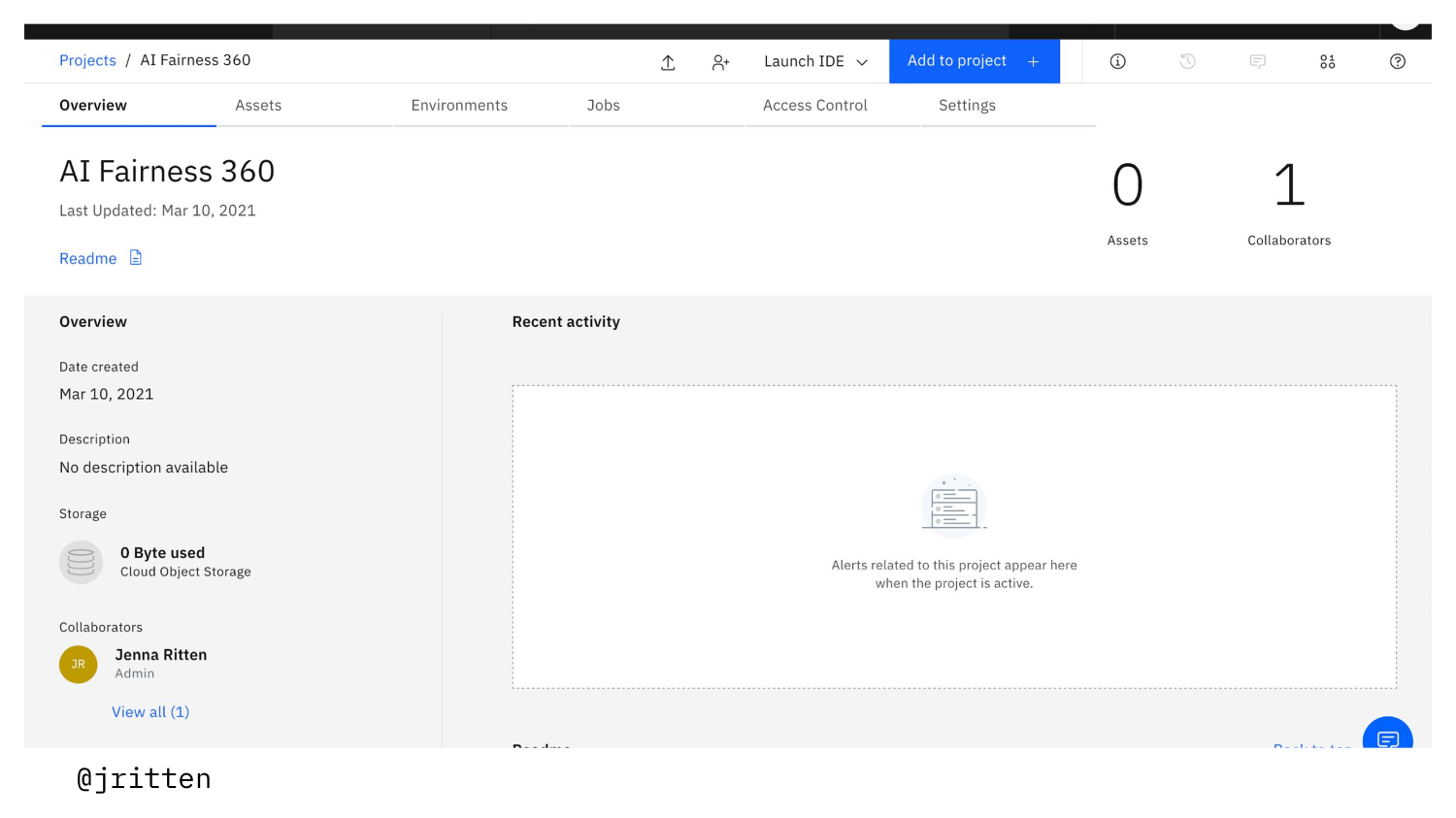

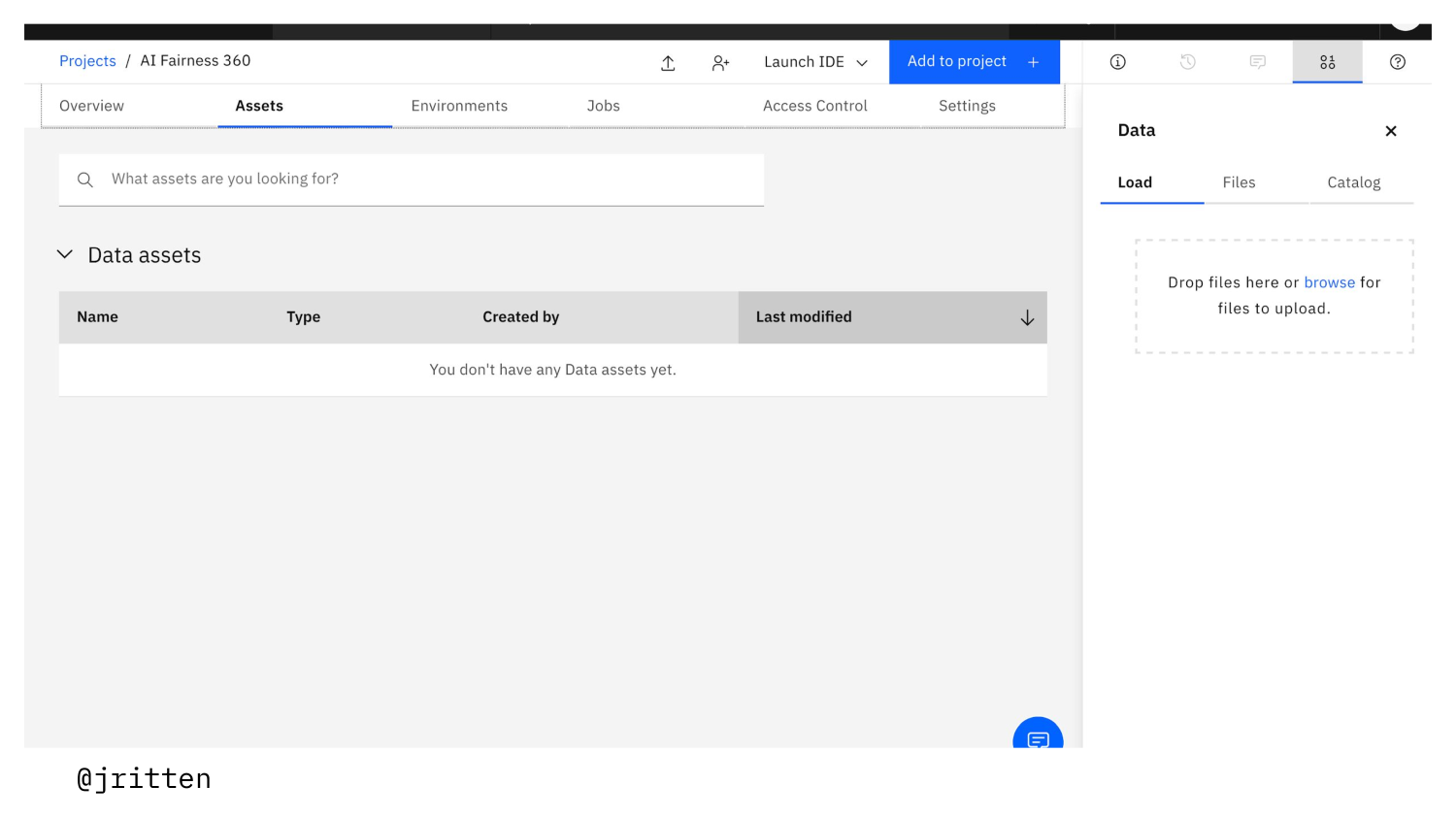

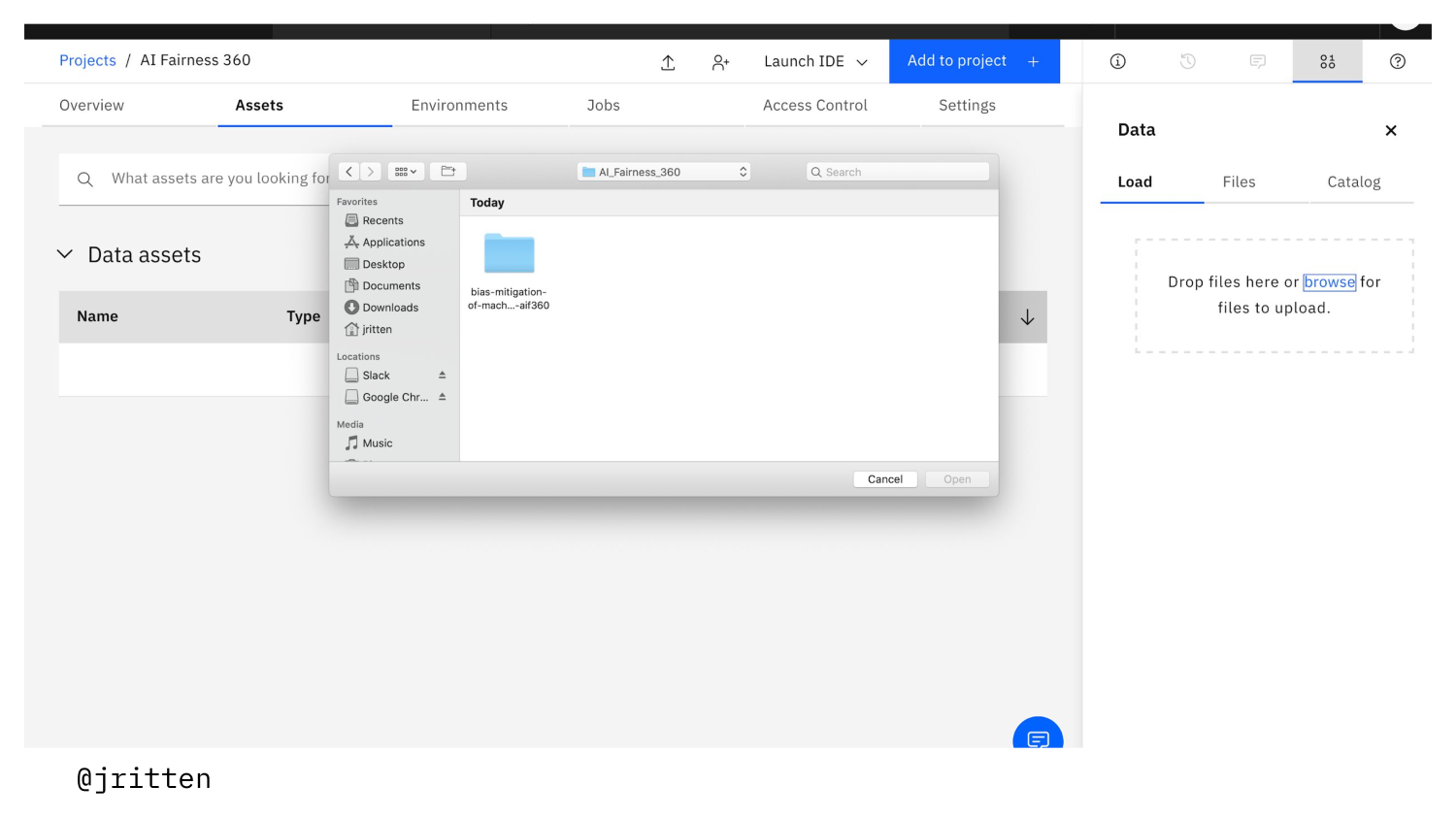

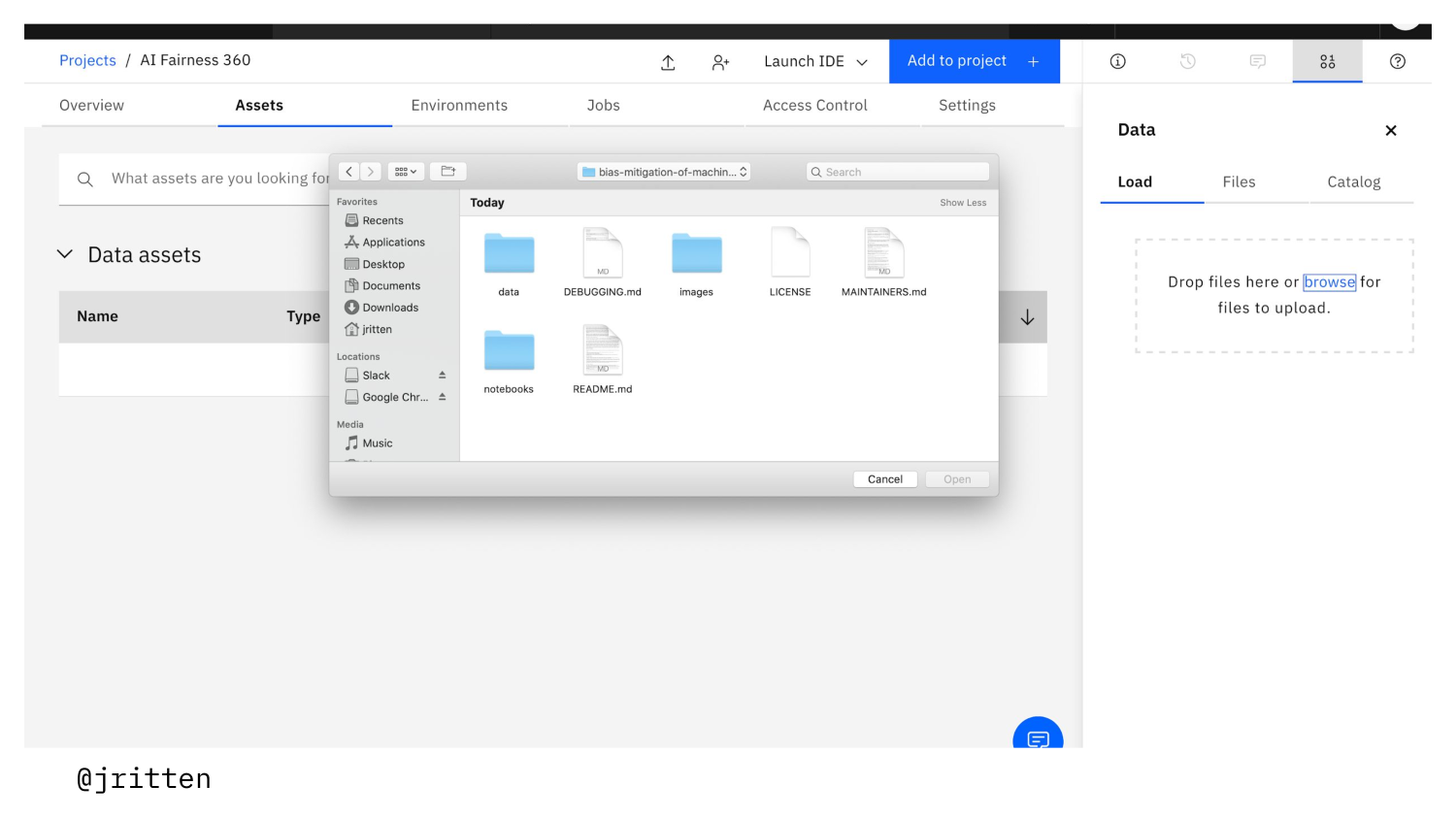

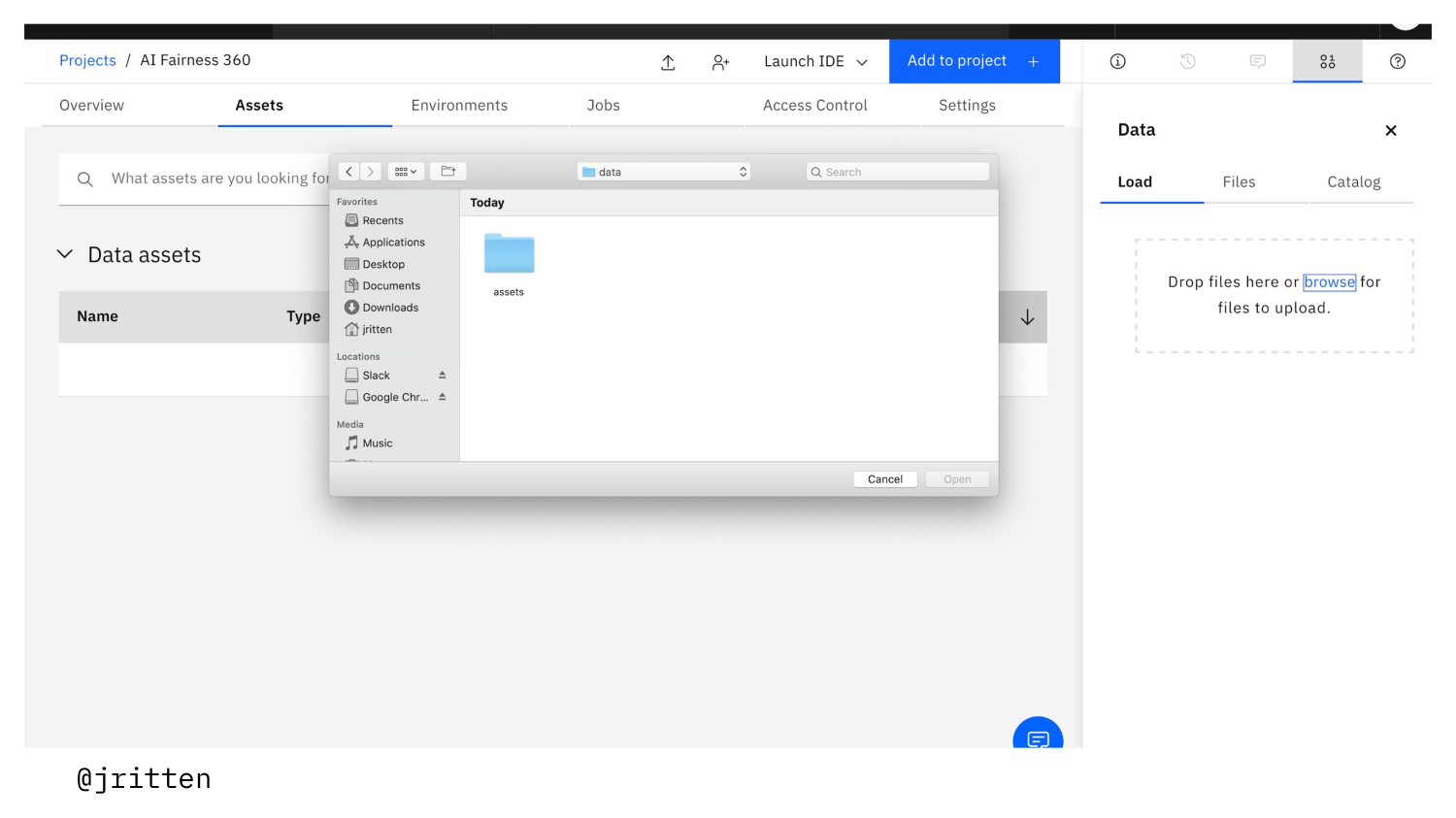

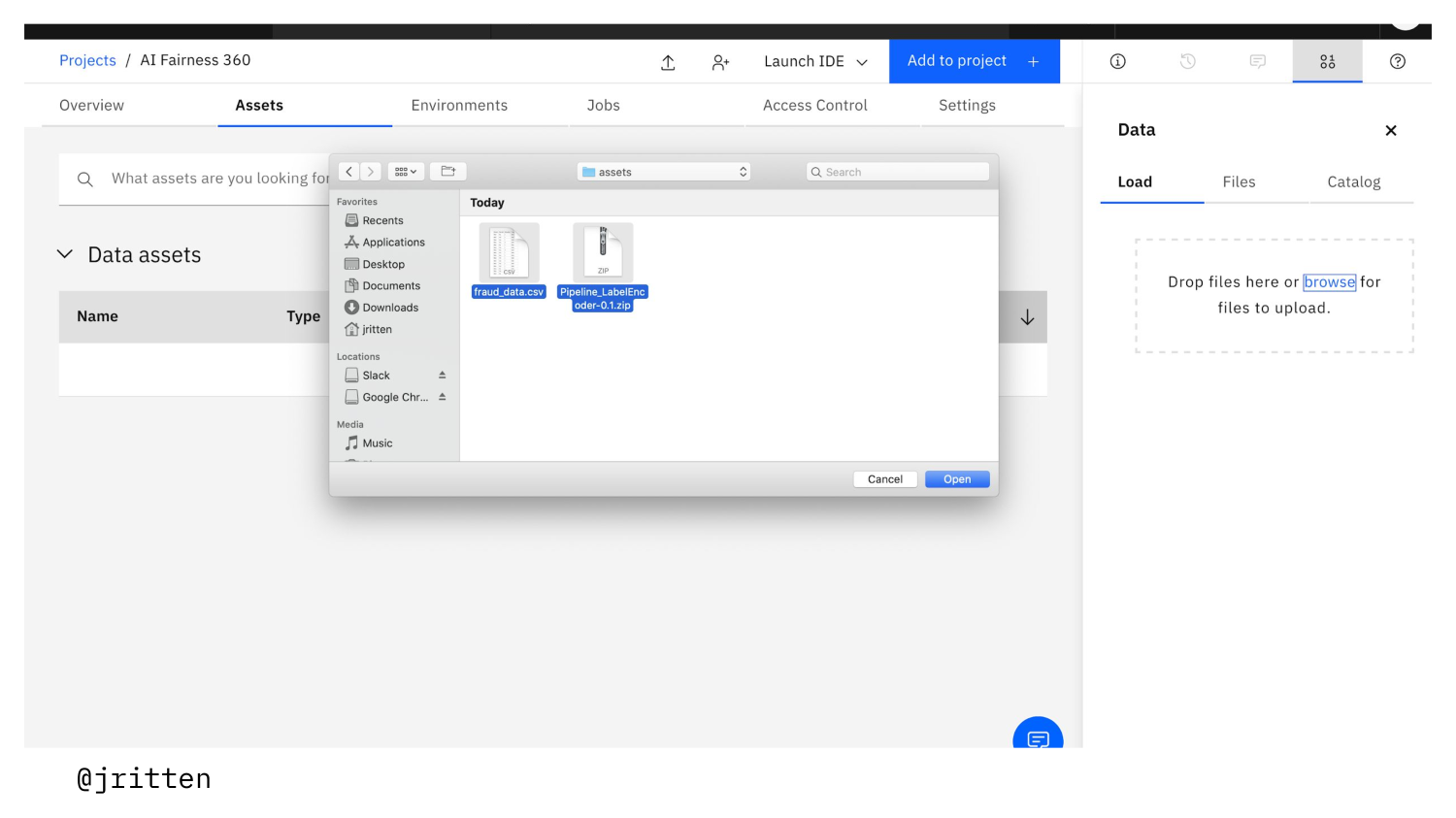

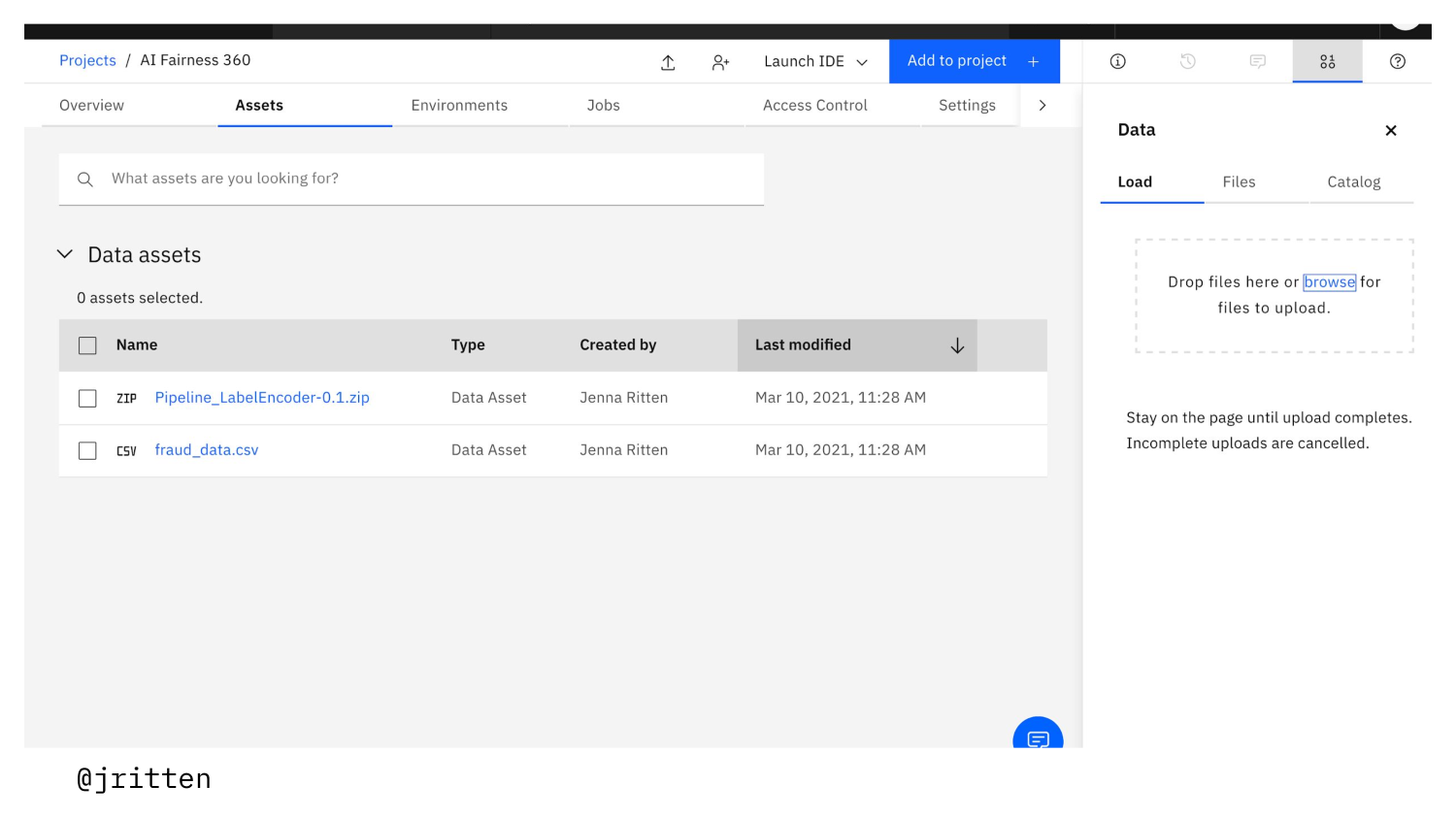

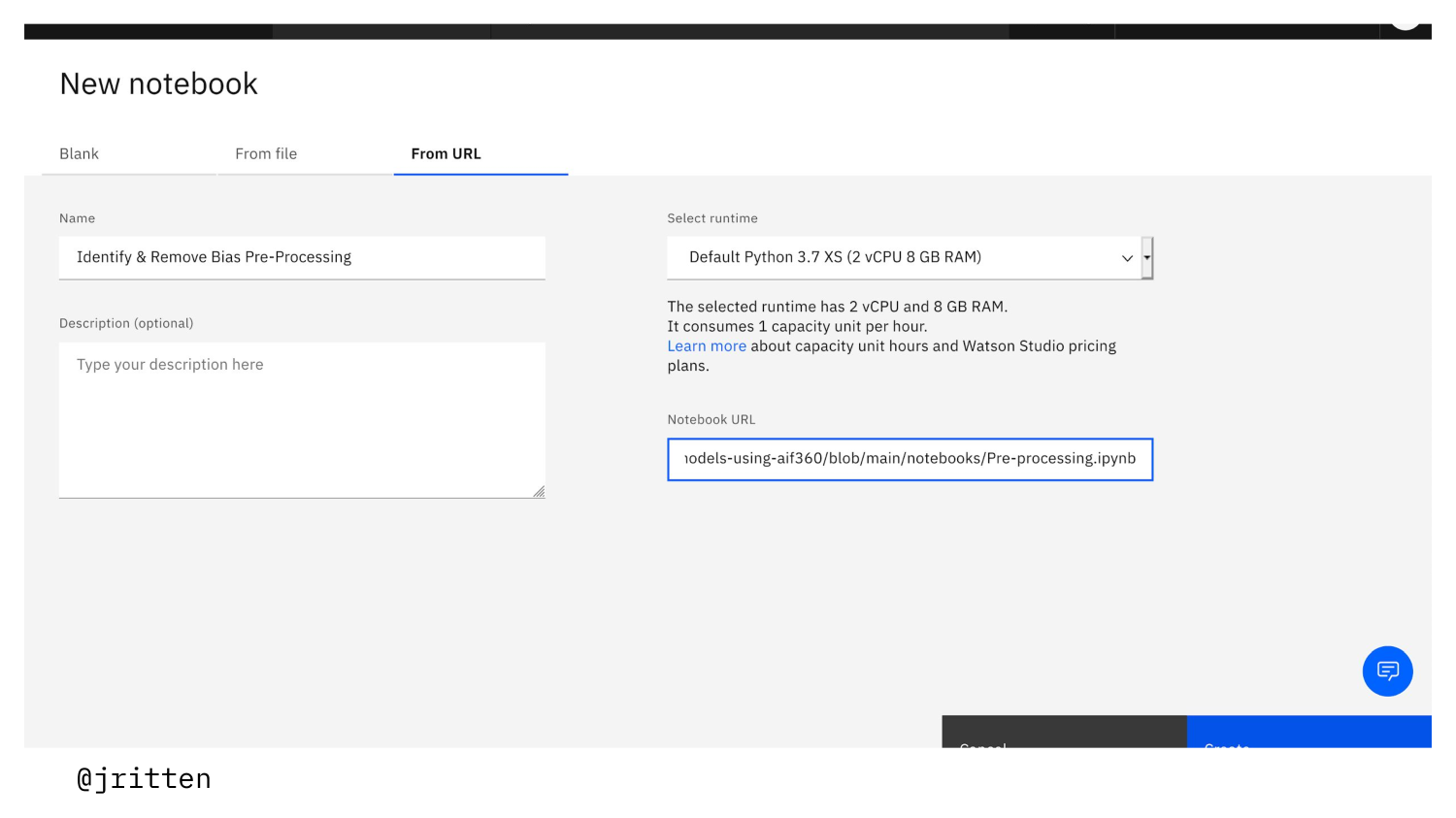

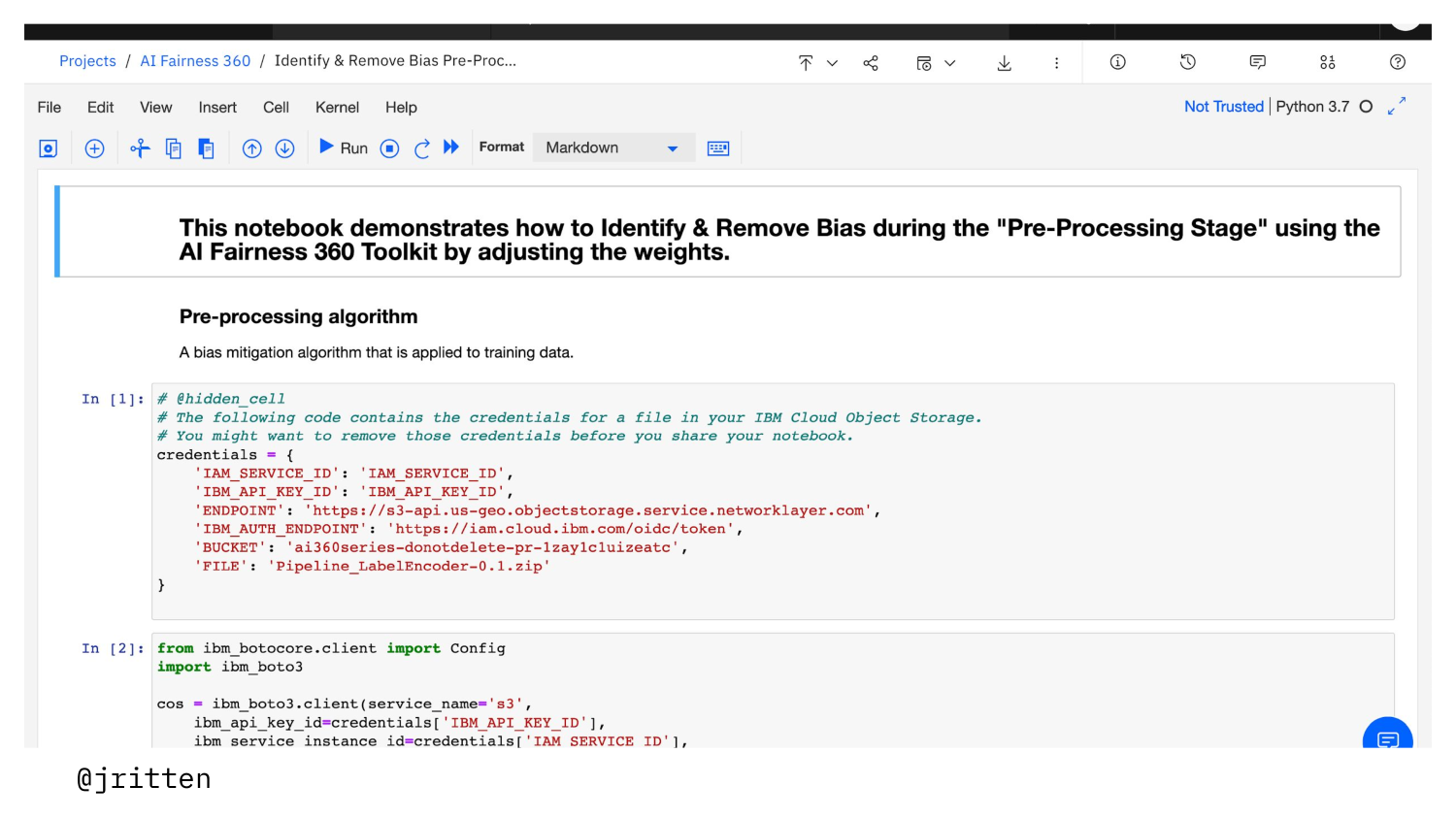

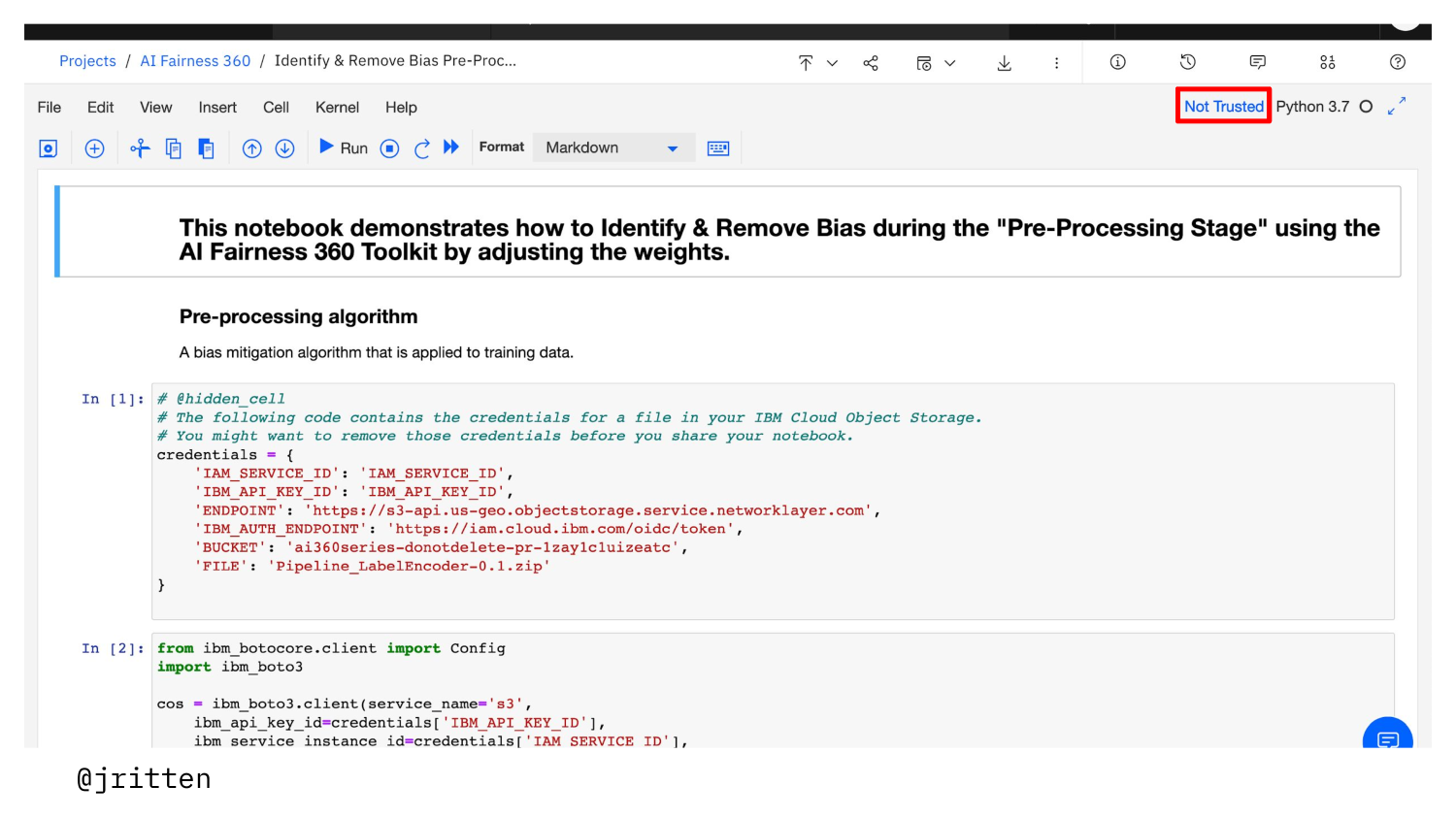

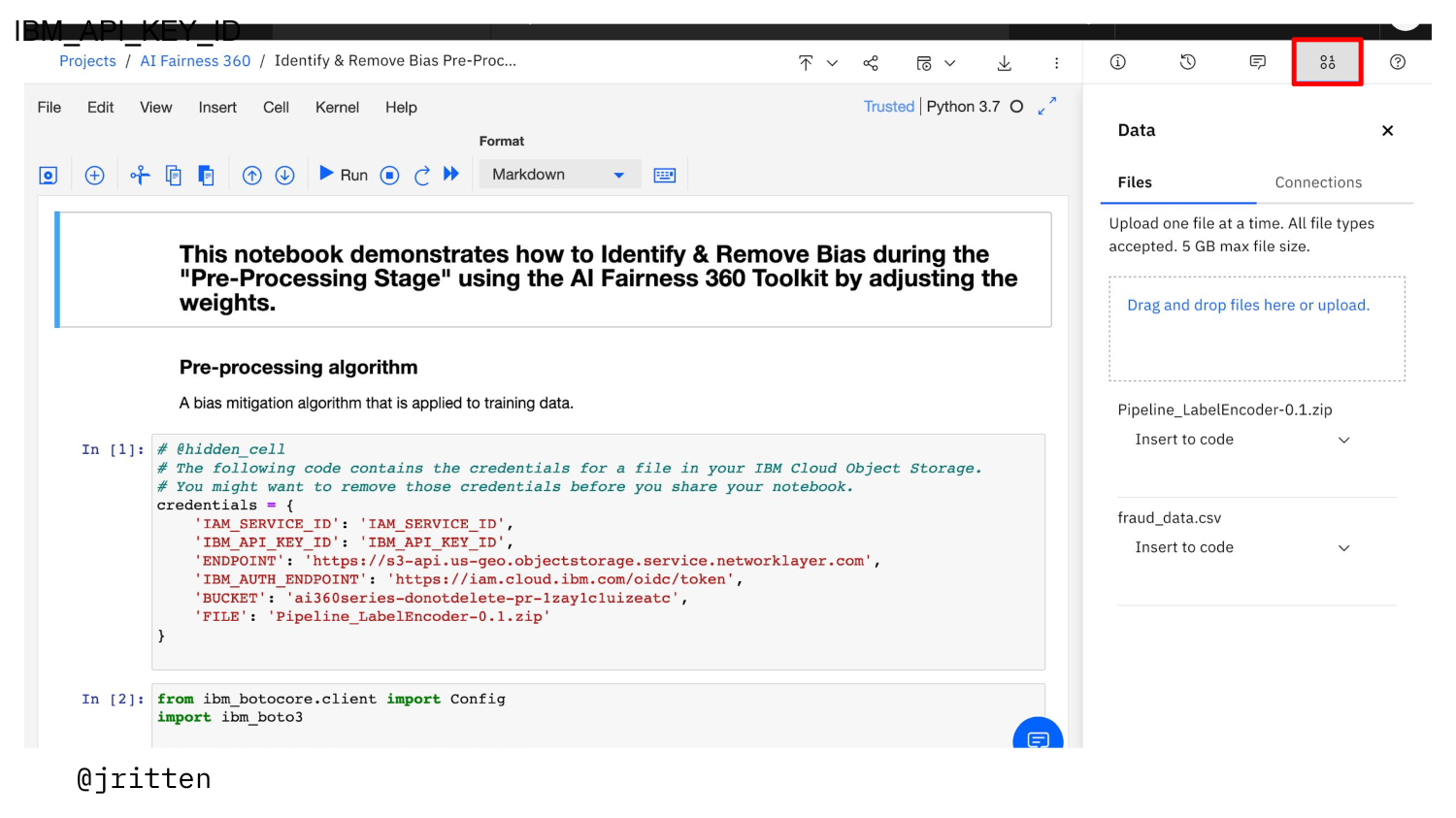

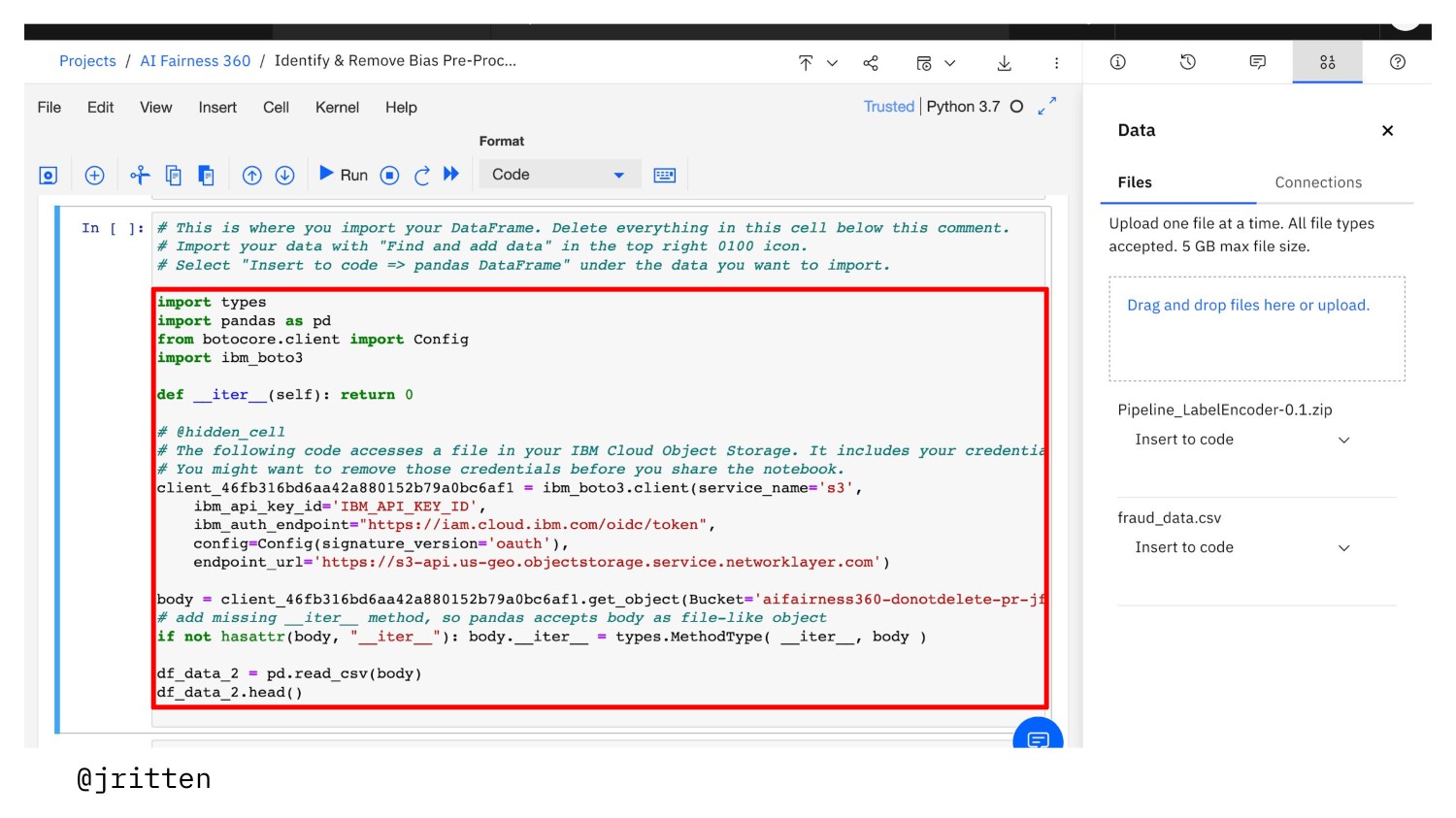

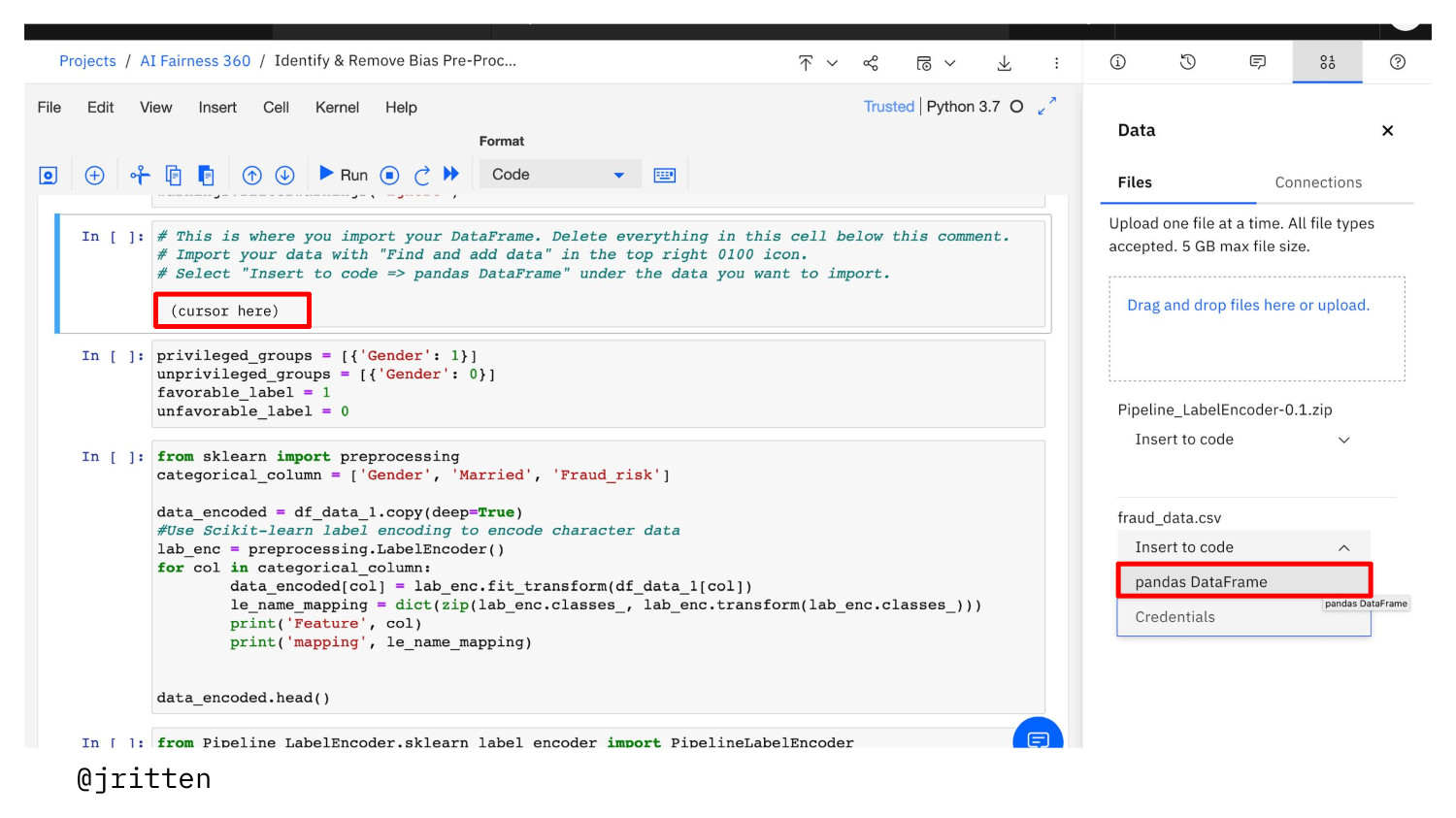

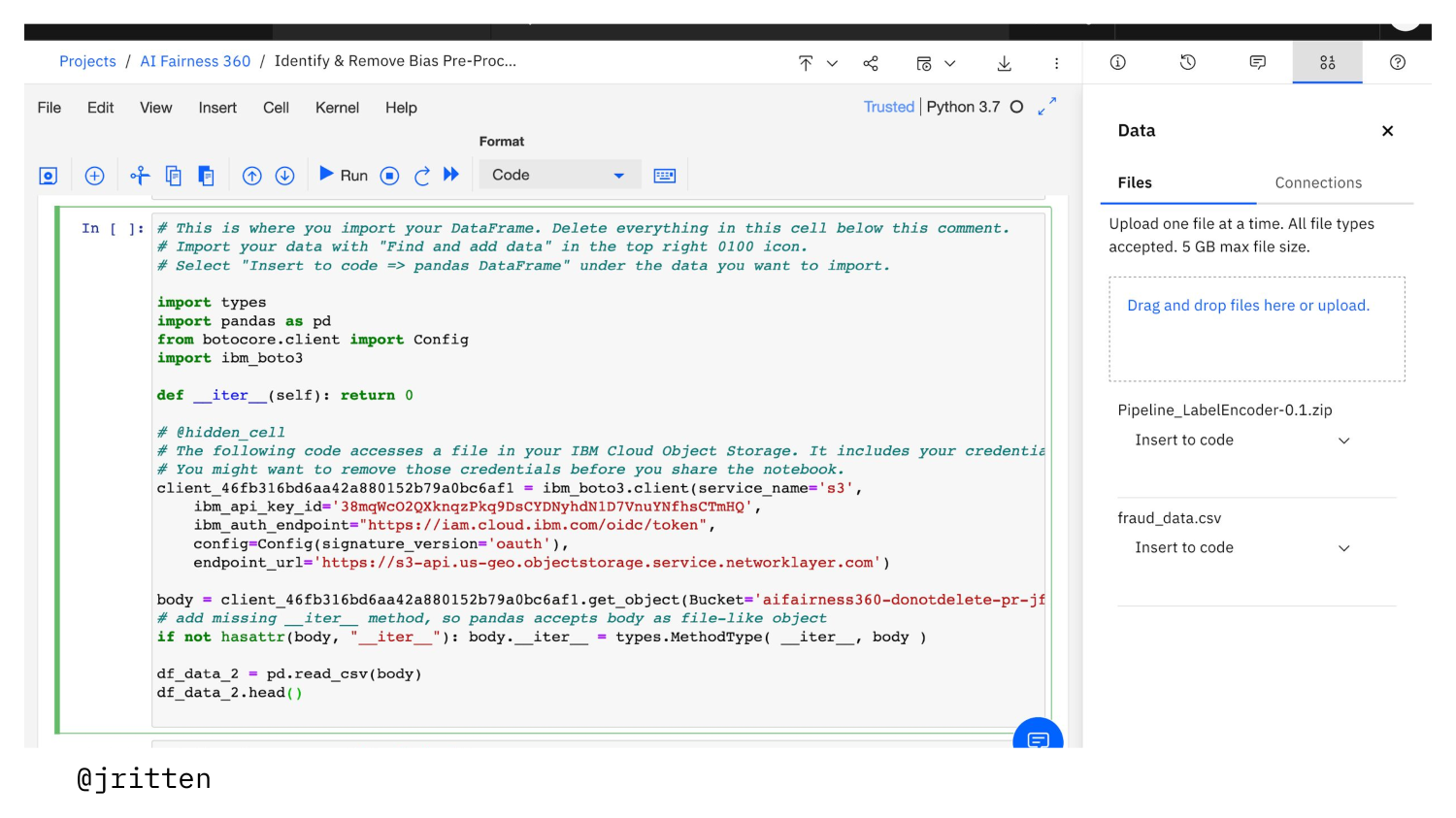

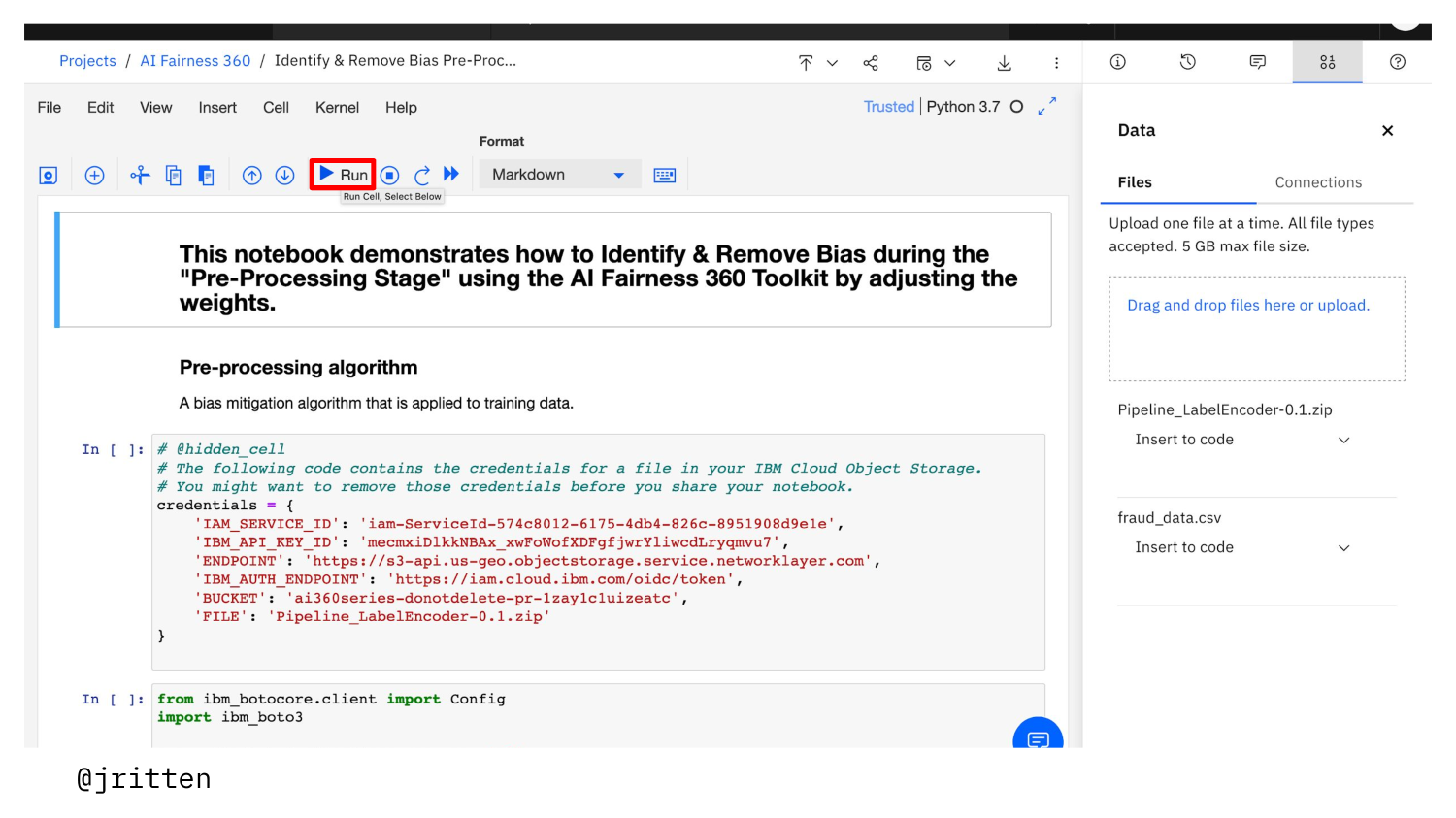

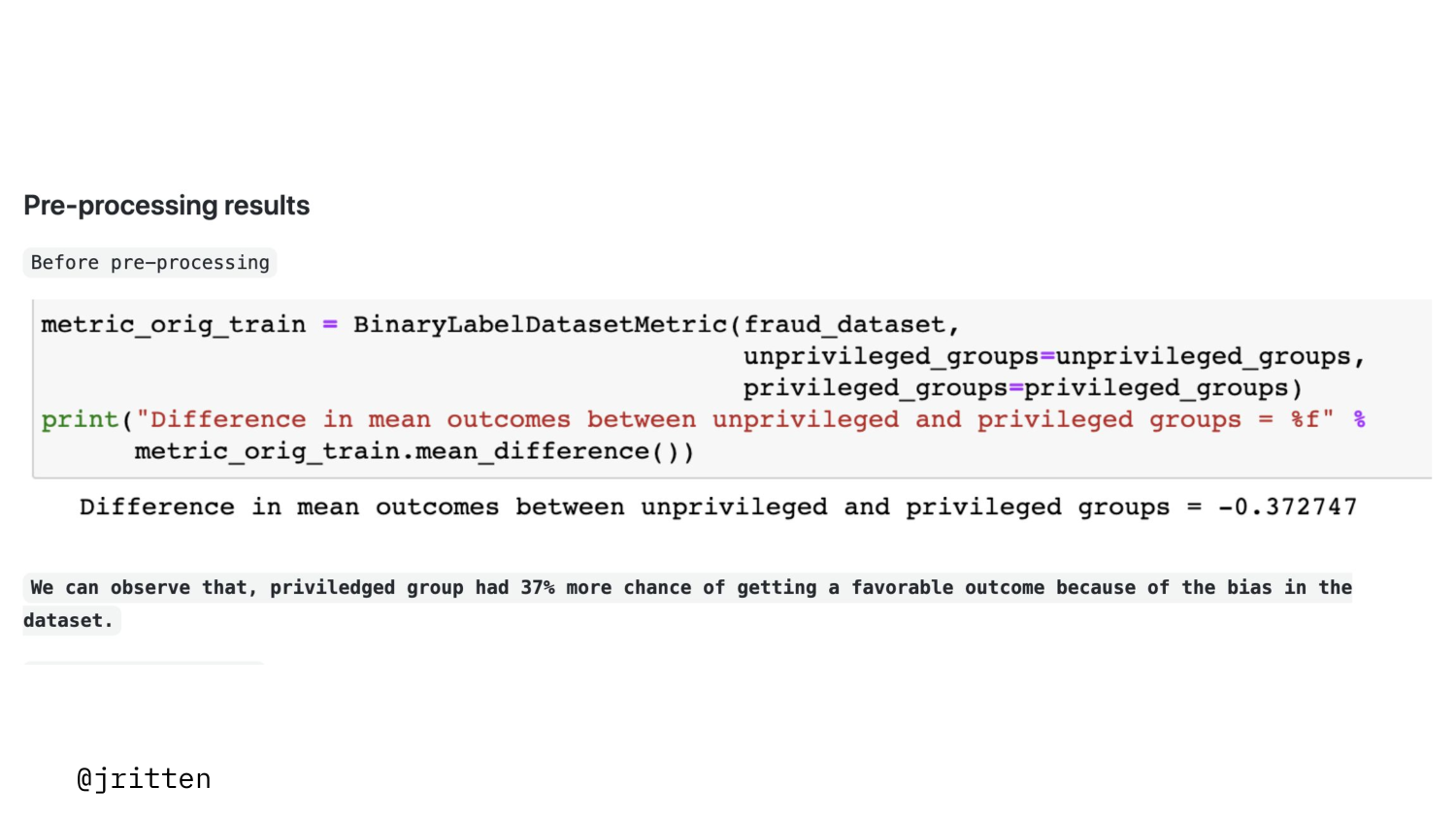

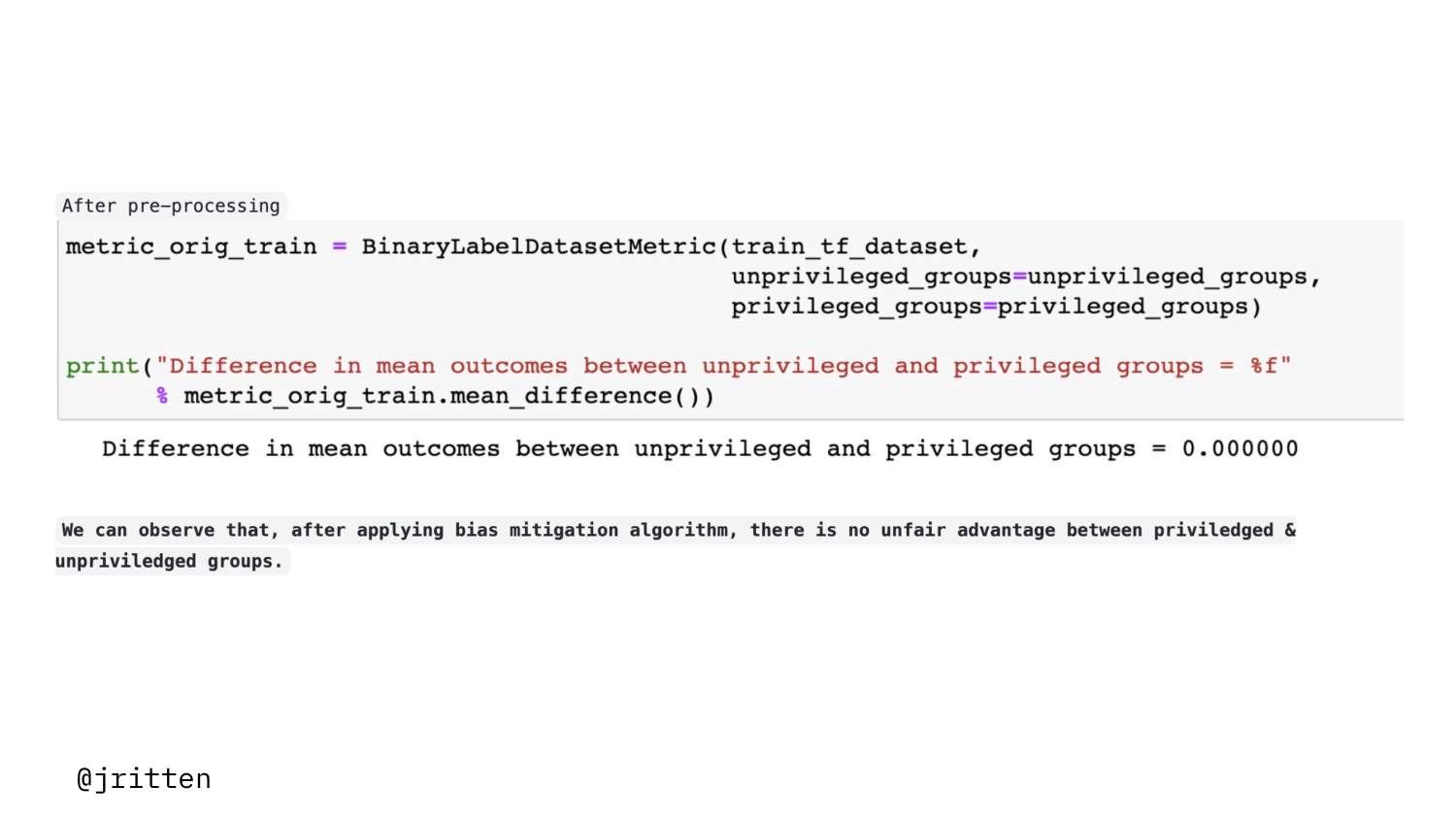

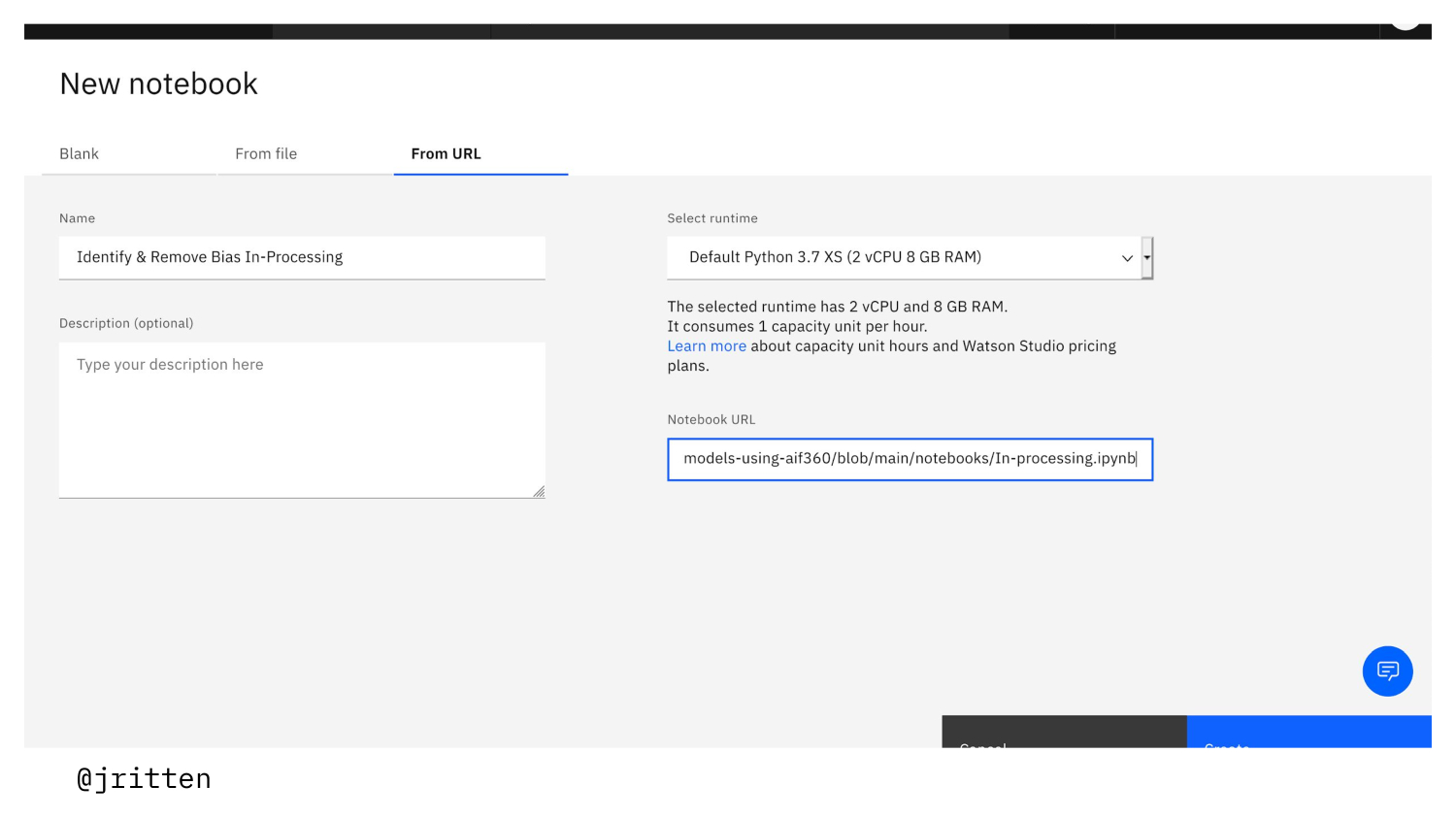

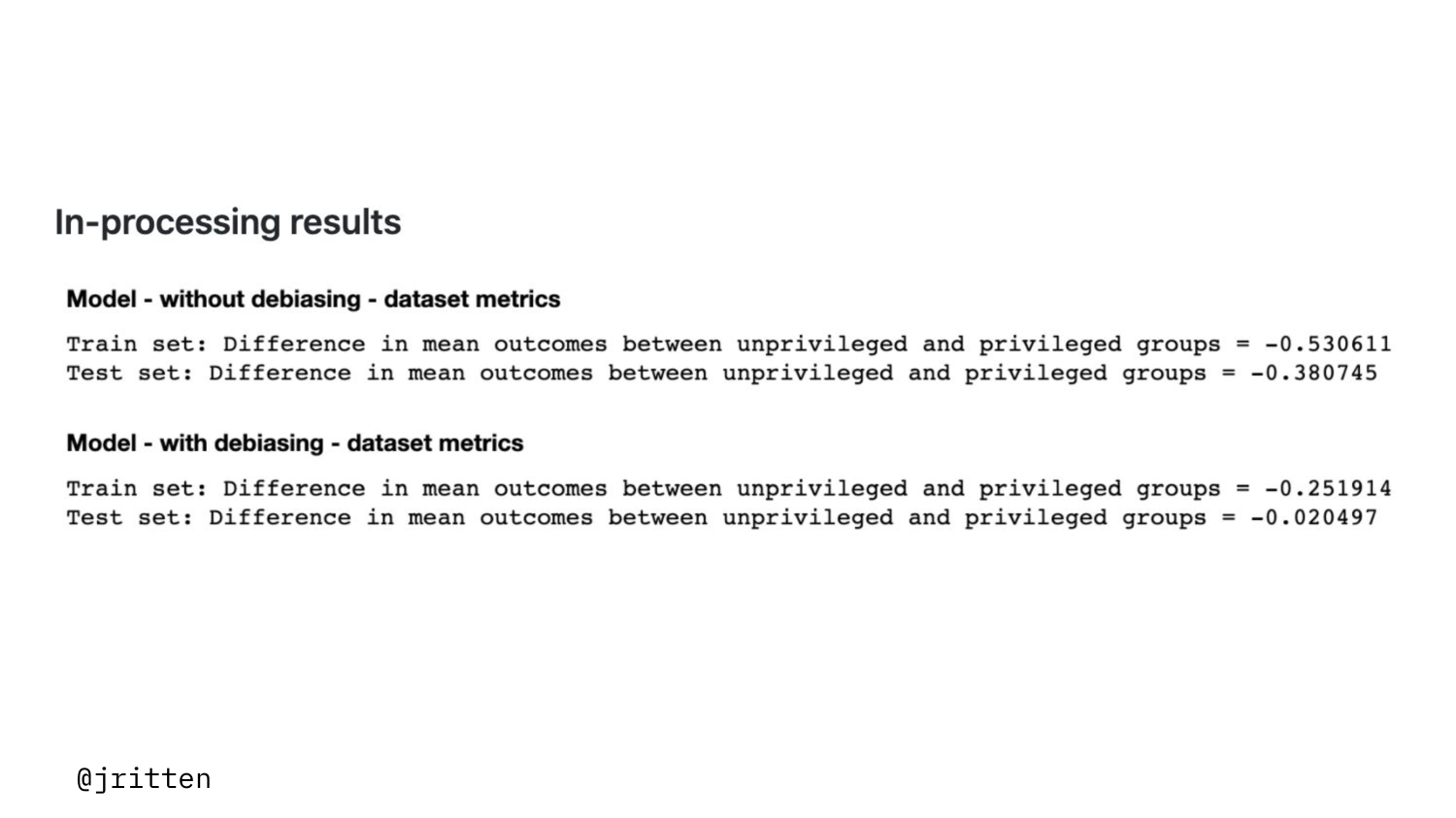

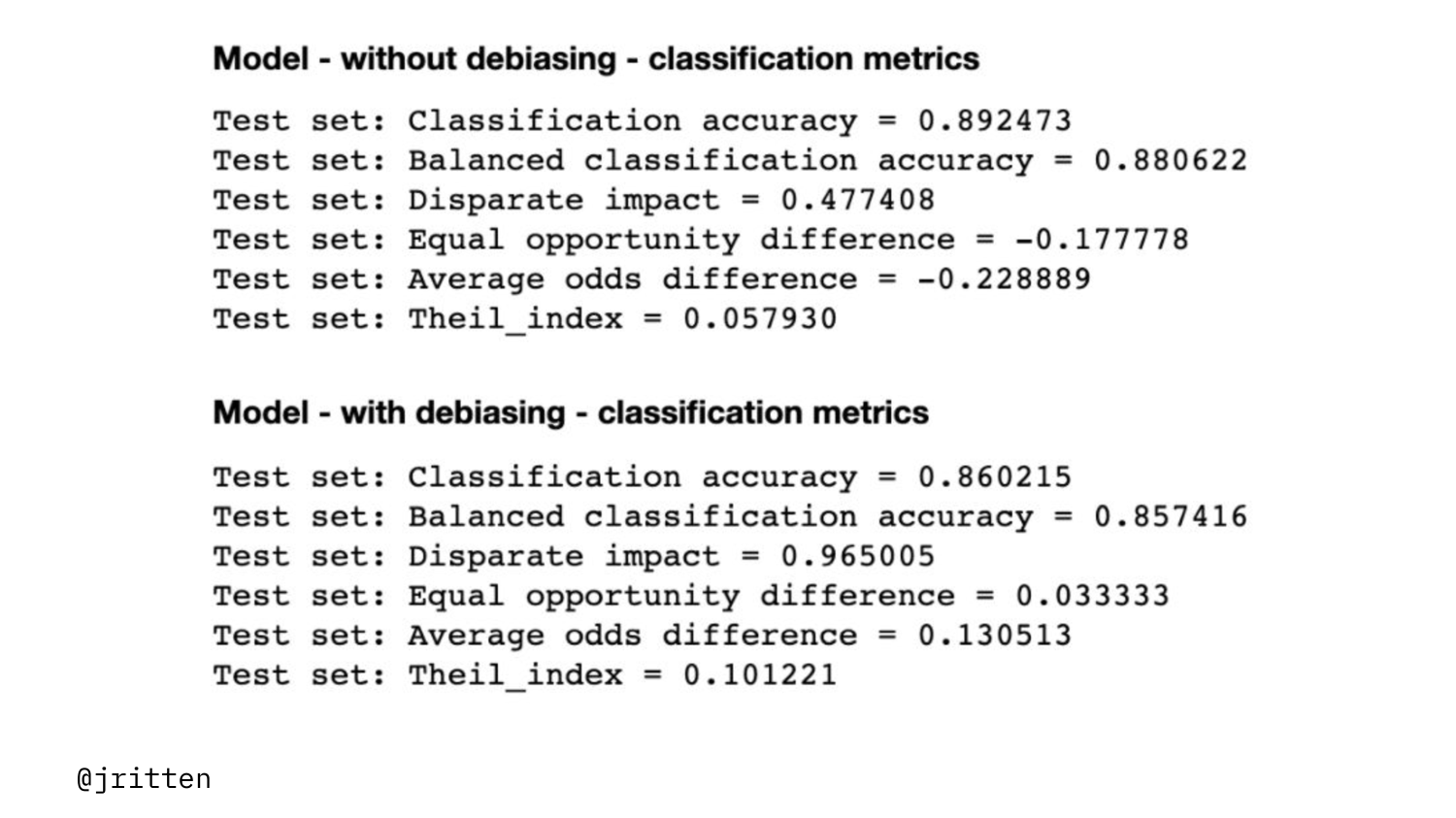

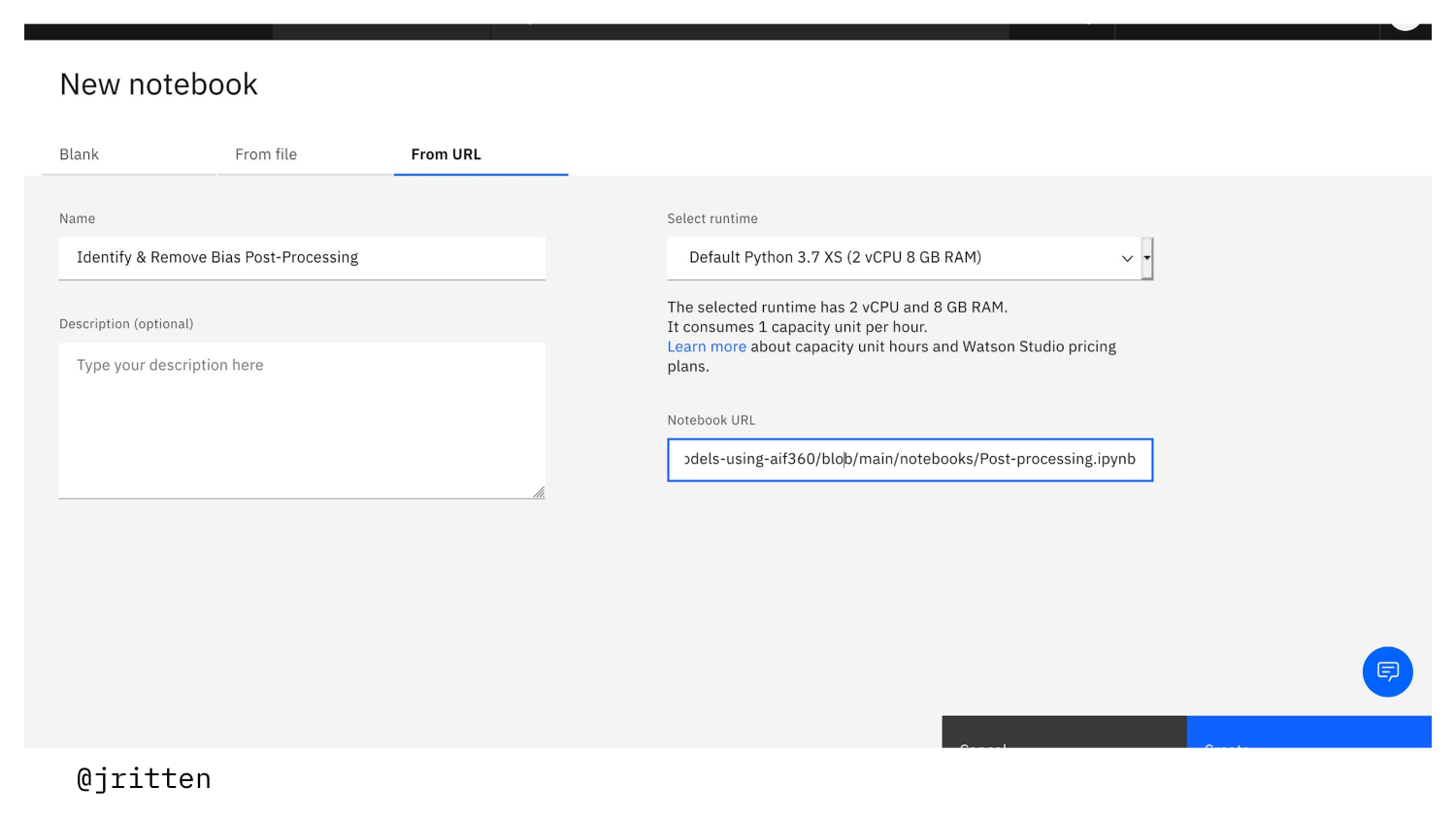

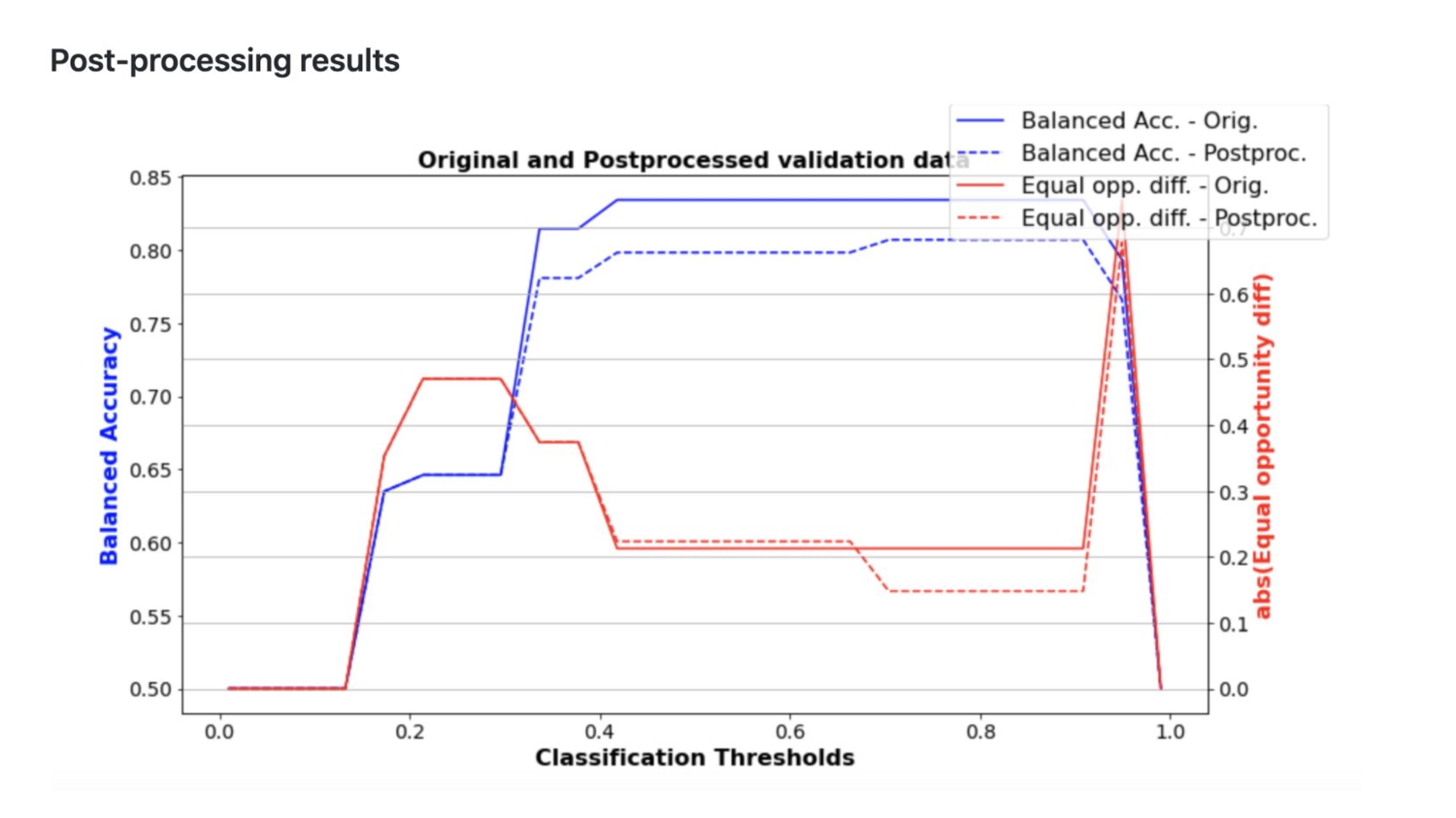

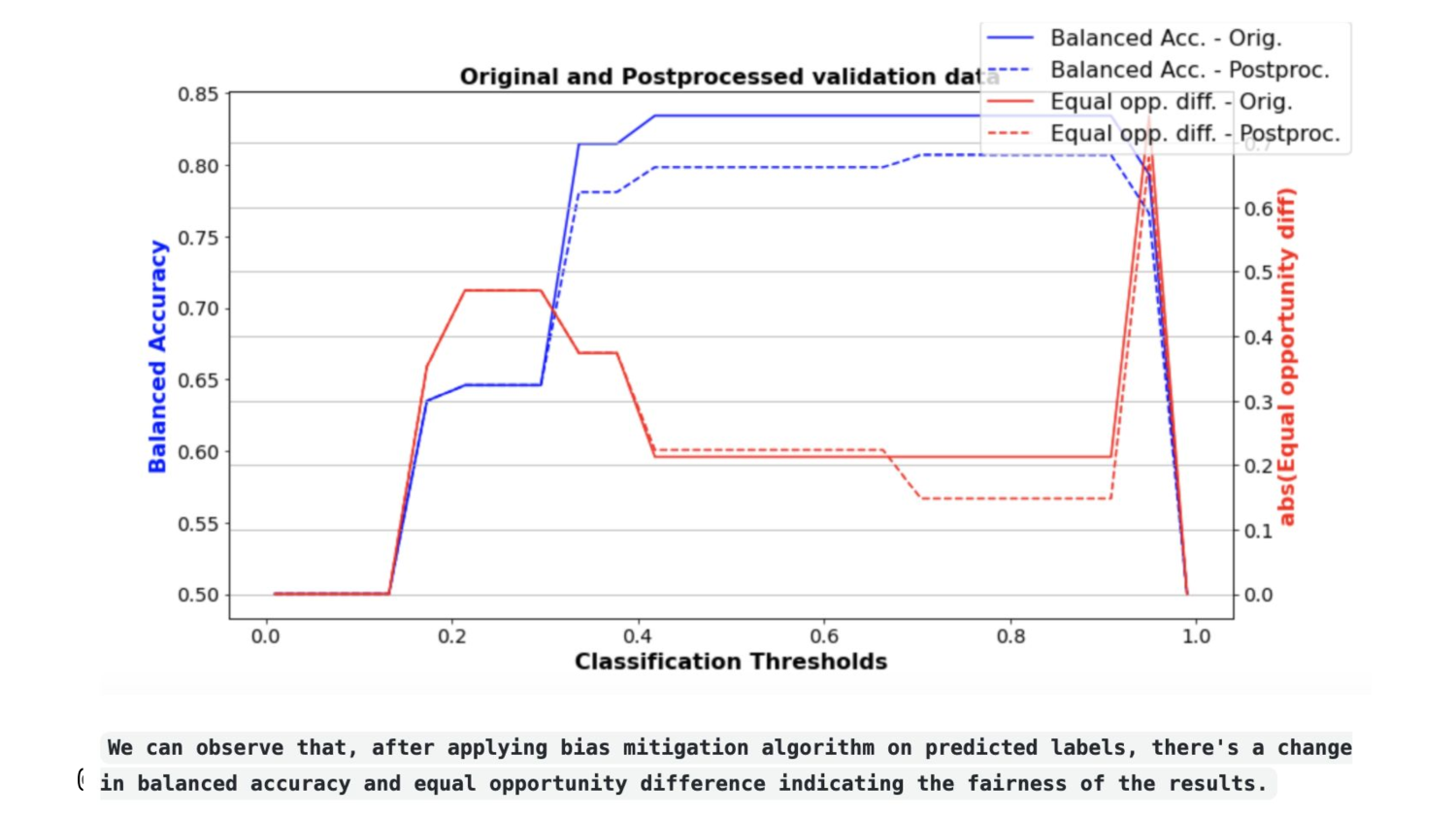

🔍 How do you remove bias from machine learning models and ensure that their predictions are fair? What are the three stages at which a bias mitigation solution can be applied? In this workshop we will answer these questions to help you make informed decisions when consuming the results of predictive models.

🔍 Fairness in data and machine learning algorithms is critical to building safe and responsible AI systems. While accuracy is one metric for evaluating a machine learning model, fairness gives you a way to understand the practical implications of deploying the model in a real-world situation.

🔑 In this workshop, you will use a diabetes data set to predict whether a person is prone to diabetes. You’ll use IBM Watson Studio, IBM Cloud Object Storage, and the AI Fairness 360 Toolkit to create the data, apply the bias mitigation algorithm, and then analyze the results.

After completing this workshop, you will understand how to:

- Create a project using Watson Studio
- Use the AI Fairness 360 Toolkit
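To make the idea of measuring and mitigating bias concrete, here is a minimal sketch in plain Python. It does not call the AI Fairness 360 API and it is not the workshop's notebook. It assumes a tiny, invented data set (the `records` list is hypothetical, not the diabetes data) with one binary protected attribute and one binary label. It computes two common group fairness metrics, statistical parity difference and disparate impact, and then applies reweighing, a technique from the pre-processing stage. Bias mitigation algorithms are commonly grouped into pre-processing, in-processing, and post-processing stages.

```python
from collections import Counter

# Hypothetical toy records: (group, label).
# group 1 = privileged, group 0 = unprivileged; label 1 = favorable outcome.
# These values are invented for illustration only.
records = [
    (1, 1), (1, 1), (1, 1), (1, 0),
    (0, 1), (0, 0), (0, 0), (0, 0),
]


def favorable_rate(rows, group, weights=None):
    """Weighted share of favorable labels within one group."""
    if weights is None:
        weights = [1.0] * len(rows)
    total = sum(w for (g, _), w in zip(rows, weights) if g == group)
    favorable = sum(
        w for (g, y), w in zip(rows, weights) if g == group and y == 1
    )
    return favorable / total


def statistical_parity_difference(rows, weights=None):
    """Unprivileged favorable rate minus privileged favorable rate (0 is parity)."""
    return favorable_rate(rows, 0, weights) - favorable_rate(rows, 1, weights)


def disparate_impact(rows, weights=None):
    """Unprivileged favorable rate divided by privileged favorable rate (1 is parity)."""
    return favorable_rate(rows, 0, weights) / favorable_rate(rows, 1, weights)


def reweighing_weights(rows):
    """Per-row weight w(g, y) = P(g) * P(y) / P(g, y).

    Under these weights, group and label are statistically independent
    in the weighted data.
    """
    n = len(rows)
    group_counts = Counter(g for g, _ in rows)
    label_counts = Counter(y for _, y in rows)
    joint_counts = Counter(rows)
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in rows
    ]


if __name__ == "__main__":
    weights = reweighing_weights(records)
    print("SPD before:", statistical_parity_difference(records))
    print("SPD after: ", statistical_parity_difference(records, weights))
    print("DI before: ", disparate_impact(records))
    print("DI after:  ", disparate_impact(records, weights))
```

On this toy data the unprivileged group starts with a lower favorable rate than the privileged group, and the reweighed data brings the two rates level. A real workflow would pass such weights to a model that accepts sample weights and then re-check the metrics on its predictions.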

Jenna Ritten (@jritten) is a cloud software developer turned developer advocate for IBM Cloud. She is an advocate for non-traditional people in tech, much like herself, and provides support by building communities for underrepresented people in tech.