CEPH

Introduction and Beyond

# whoami

- Author of "Learning Ceph", Packt Publishing, January 2015

- http://karan-mj.blogspot.fi/ : tune in for the latest Ceph blog posts

Karan Singh

System Specialist, Cloud

CSC - IT Center for Science, Espoo

karan.singh@csc.fi

CSC - IT Center for Science

- Founded in 1971

- Finnish, non-profit organization

- Funded by the Ministry of Education

- Connected Finland to the Internet in 1988

- Most powerful academic computing facility in the Nordics

- ISO 27001:2013 certified

- Production cloud: http://pouta.csc.fi

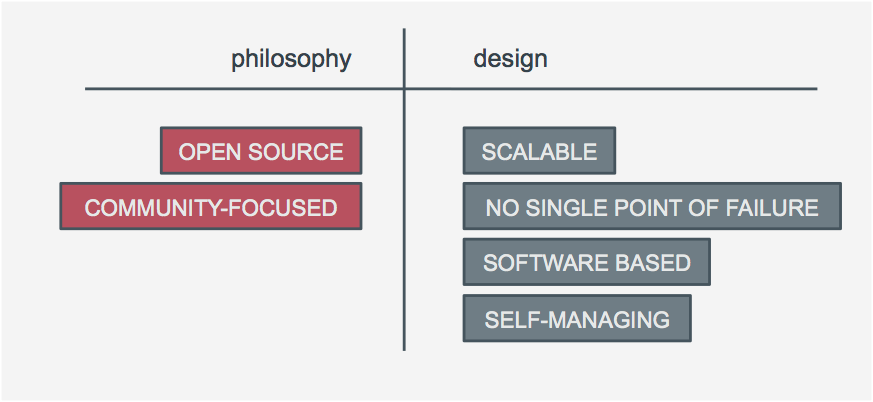

Our Need for a Storage System

- Build our own storage, not buy it

- Software-defined storage

- Unified: file, block, and object

- Open source

- Ultra-scalable, with no single point of failure (SPOF)

- Cloud-ready

- No hardware lock-in

- Low cost and future-ready

Challenges with proprietary systems

- Really expensive

- Vendor lock-in

- Block, file, and object: mostly separate product lines

- Difficult to scale out

- Mostly closed source

- Hardware & Software tied together

How do we overcome these challenges?

Ceph is the future of storage

and the answer to your storage problems

Ceph :: Philosophy & Design

The History

- 2003: Sage Weil developed Ceph as a PhD project

- 2003-2007: Research period for Ceph

- 2006: Open-sourced under the LGPL

- 2007-2011: DreamHost incubated Ceph

- April 2012: Sage Weil founded Inktank

- April 2014: Red Hat acquired Inktank

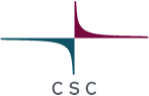

Ceph releases

- Quick release cycles

- Every other release is a long-term support (LTS) release

Ceph :: Requirements

Servers with several disks

Networking between these servers

An operating system

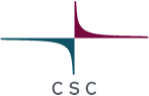

What the Ceph stack looks like

Ceph :: Building Blocks

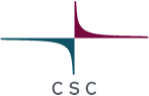

Ceph :: Simplified

Ceph is software-defined storage, and every component in Ceph is a daemon:

* Ceph MON (Monitor)

* Ceph OSD (Object Storage Device)

* Ceph MDS (Metadata Server, for the Ceph Filesystem)

* Ceph RGW (RADOS Gateway, the object storage service)

Ceph Components :: Quick Overview

MON

- Tracks and monitors the health of the entire cluster

- Maintains the map of the cluster state

- Does "not" store data

OSD

- Stores the actual data on physical disks

- One OSD per HDD (recommended)

- Stores everything in the form of objects

MDS

- The filesystem part of Ceph

- Tracks the file hierarchy and stores metadata

- Provides a distributed filesystem of any size

RGW

- The object storage part of Ceph

- Provides a RESTful gateway to the Ceph cluster

- Amazon S3 and OpenStack Swift compatible API

Live Demonstration

Getting your first Ceph cluster up and running is wicked easy

Step 1: # git clone https://github.com/ksingh7/Try-Ceph.git

Step 2: # cd Try-Ceph && vagrant up

And you are done

A recorded session is available at http://youtu.be/lki1fQptIRk

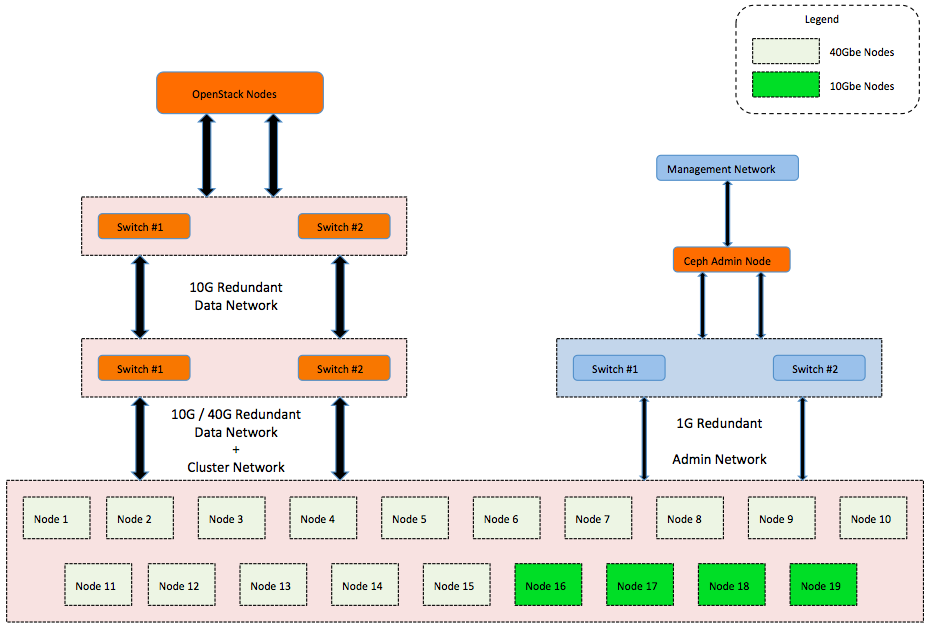

How Do We Do Ceph @ CSC - [1]

- 15 x HP DL 380e

- Intel Xeon 2.10 GHz

- 24 GB memory

- 12 x 3 TB SATA disks

- 40 Gb InfiniBand

- 4 x HP SL4540

- Intel Xeon 2 x 2.30 GHz

- 192 GB memory

- 60 x 4 TB SATA disks

- 10 Gb InfiniBand

- 2 x Mellanox IB switches

- Ceph Firefly 0.80.7

- Erasure coding

- CentOS 6.5, kernel 3.10.x

- OpenStack ICH

- Ganglia monitoring

- Ansible for deployment and management

Total Raw Capacity

1.5 PB
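As a rough cross-check of the capacity figures above, the short Python sketch below multiplies nodes x disks x disk size for the two node types listed on this slide and, assuming one OSD per HDD, counts the resulting OSDs. The slide does not say which redundancy scheme or erasure-code profile the pools use, so the 3-way replication and the k=4, m=2 erasure-code profile in the code are hypothetical examples, chosen only to show how usable capacity differs from raw capacity.

```python
# Hardware figures taken from the "How Do We Do Ceph @ CSC - [1]" slide.
# Each tuple: (number of nodes, disks per node, TB per disk).
NODE_GROUPS = {
    "HP DL380e": (15, 12, 3),
    "HP SL4540": (4, 60, 4),
}


def raw_capacity_tb(groups):
    """Sum nodes * disks * disk size over all node groups (decimal TB)."""
    return sum(n * d * tb for n, d, tb in groups.values())


def osd_count(groups):
    """Assumes one OSD per HDD, as recommended on the components slide."""
    return sum(n * d for n, d, _ in groups.values())


def usable_replicated(raw_tb, size):
    """Usable capacity with `size`-way replication (ignores free-space headroom)."""
    return raw_tb / size


def usable_erasure_coded(raw_tb, k, m):
    """Usable capacity with k data chunks + m coding chunks per object."""
    return raw_tb * k / (k + m)


raw = raw_capacity_tb(NODE_GROUPS)
print(f"raw capacity: {raw} TB ({raw / 1000} PB)")
print(f"OSDs (one per disk): {osd_count(NODE_GROUPS)}")
# Hypothetical redundancy settings, for illustration only:
print(f"usable, 3x replication: {usable_replicated(raw, 3):.0f} TB")
print(f"usable, EC k=4 m=2: {usable_erasure_coded(raw, 4, 2):.0f} TB")
```

The SL4540 group alone gives 4 x 60 x 4 TB = 960 TB, the same raw capacity as the cluster on the next slide. The usable figures ignore the free-space headroom a real cluster needs, so treat them as upper bounds.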

How Do We Do Ceph @ CSC - [2]

- 4 x HP SL4540

- Intel Xeon 2 x 2.30 GHz

- 192 GB memory

- 60 x 4 TB SATA disks

- 10 GbE

- 2 x 10 GbE switches

- Ceph Firefly 0.80.7

- CentOS 6.5, kernel 3.10.x

- OpenStack ICH

- Ansible for deployment and management

Total Raw Capacity

960 TB

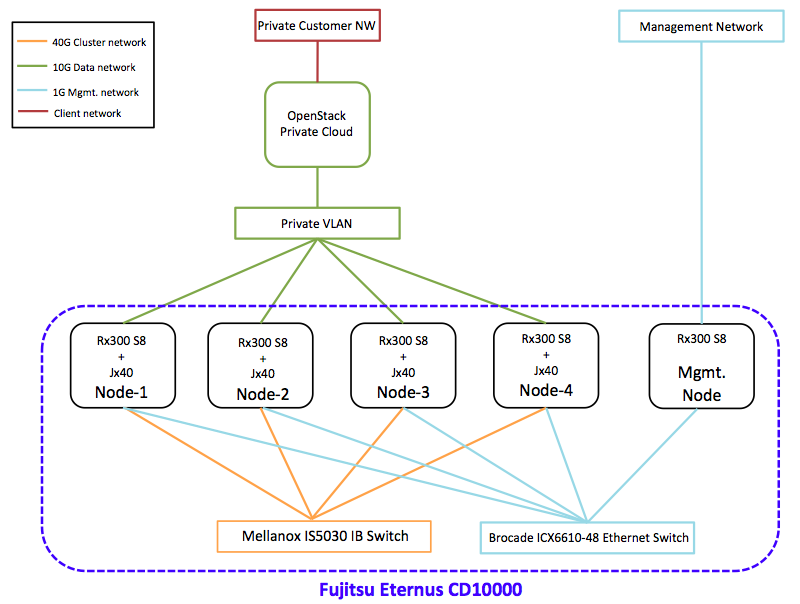

How Do We Do Ceph @ CSC - [3]

- 4 x Primergy RX300 Storage Nodes

- 4 x Eternus JX40 JBOD

- 1 x Primergy Management Node

- 1 x Brocade 1 Gb management switch

- 1 x Mellanox 40 Gb InfiniBand cluster network switch

Total Raw Capacity

250 TB

Fujitsu Storage ETERNUS CD10000

- Hyperscale Storage System

- Hardware by Fujitsu, Ceph software from Red Hat

- Fujitsu's GUI/CLI storage management software

- CSC is the first company to use this product for proof-of-concept (POC) testing

- The product comes with Fujitsu enterprise support

For more information on this product, see

http://www.fujitsu.com/global/products/computing/storage/eternus-cd/index.html

Lessons Learnt

- Never use RAID underneath Ceph OSDs. Ceph provides its own redundancy through replication or erasure coding.

- Use smaller machines, and use as many of them as you can

- Design your cluster for I/O, not for raw TB

- Test recovery scenarios: go and literally pull the power cables

- Reboot your machines regularly: an uptime of XXXX days is no longer cool

- Use SSDs if you can
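The advice to use smaller machines, and many of them, can be illustrated with simple arithmetic. The Python sketch below assumes data is spread evenly across identical nodes, that a single node is the failure domain, and it ignores any headroom Ceph itself reserves. It compares the 4-node SL4540 layout with the 15-node DL380e layout from the CSC slides; the `Cluster` class and the 60% fill ratio are hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical model: identical nodes, data spread evenly across them."""
    nodes: int          # number of identical storage nodes
    tb_per_node: float  # raw TB per node

    def share_lost_per_node(self) -> float:
        """Fraction of raw capacity that disappears when one node fails."""
        return 1 / self.nodes

    def max_safe_fill(self) -> float:
        """Highest fill ratio at which the surviving nodes can still absorb
        the failed node's data (ignores Ceph's own safety headroom)."""
        return (self.nodes - 1) / self.nodes

    def extra_tb_per_survivor(self, fill: float) -> float:
        """Raw TB each surviving node must take on during recovery at `fill`."""
        return self.tb_per_node * fill / (self.nodes - 1)


# Node layouts from the CSC hardware slides.
clusters = {
    "4 x SL4540 (60 x 4 TB)": Cluster(nodes=4, tb_per_node=60 * 4),
    "15 x DL380e (12 x 3 TB)": Cluster(nodes=15, tb_per_node=12 * 3),
}

FILL = 0.6  # hypothetical fill ratio, chosen only for illustration

for label, c in clusters.items():
    print(
        f"{label}: one node = {c.share_lost_per_node():.1%} of raw capacity, "
        f"max safe fill {c.max_safe_fill():.1%}, "
        f"{c.extra_tb_per_survivor(FILL):.1f} TB extra per surviving node "
        f"at {FILL:.0%} full"
    )
```

Under these assumptions, losing one SL4540 node removes 25% of raw capacity and the cluster can be at most 75% full before the survivors can no longer absorb its data, while losing one DL380e node removes about 6.7% and allows filling to about 93%.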

Questions ?

- Email: Karan.Singh@csc.fi, Karan_Singh1@live.com

- Twitter: @karansingh0101

- GitHub: https://github.com/ksingh7

- Blog: http://karan-mj.blogspot.fi/

Want more about Ceph? Read the book:

https://www.packtpub.com/big-data-and-business-intelligence/learning-ceph

Ceph Introduction, Bootstrap, and Learnings

By Karan Singh
