The intelligence explosion revisited

Karim Jebari and Joakim Lundborg

Outline

- The AI X-risk claim

- The solution: Friendly AI

- Why Friendly AI is a distraction

The AI X-risk claim

- AI can be created

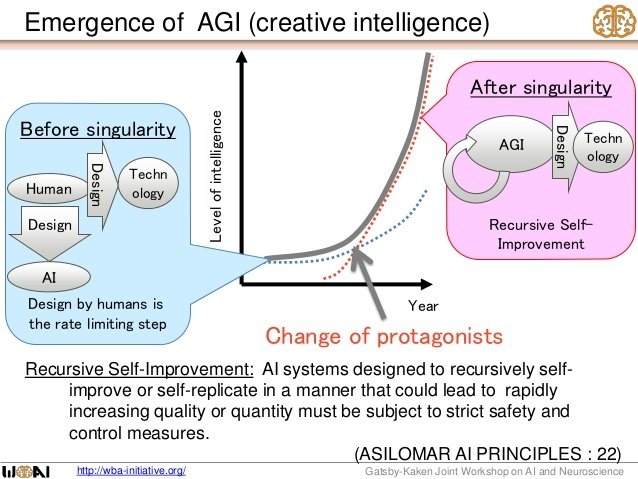

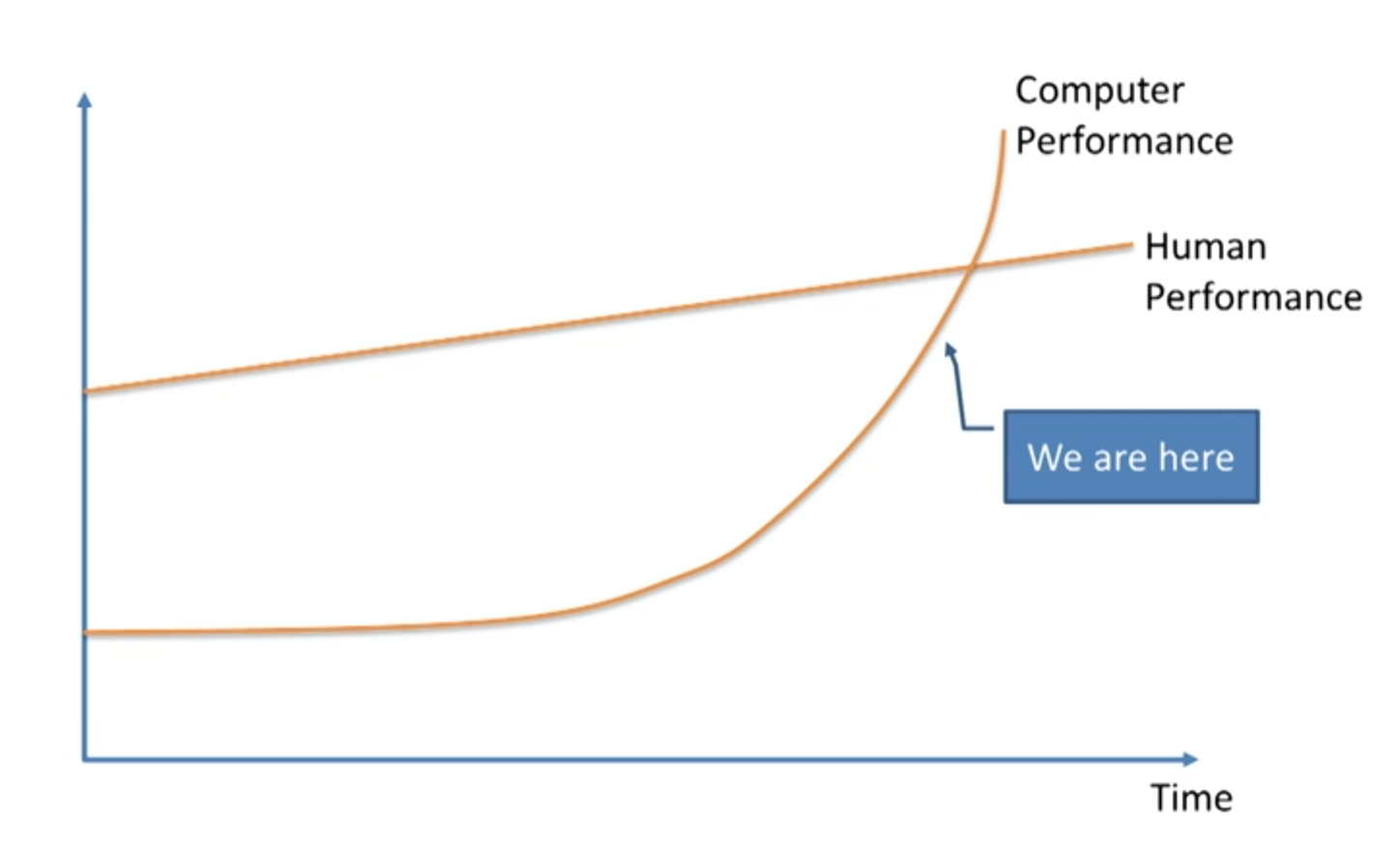

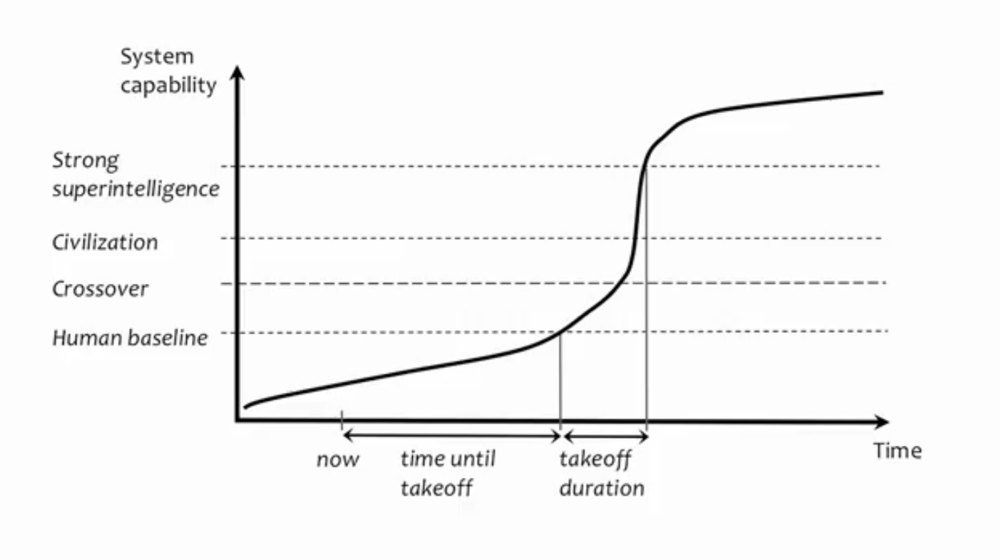

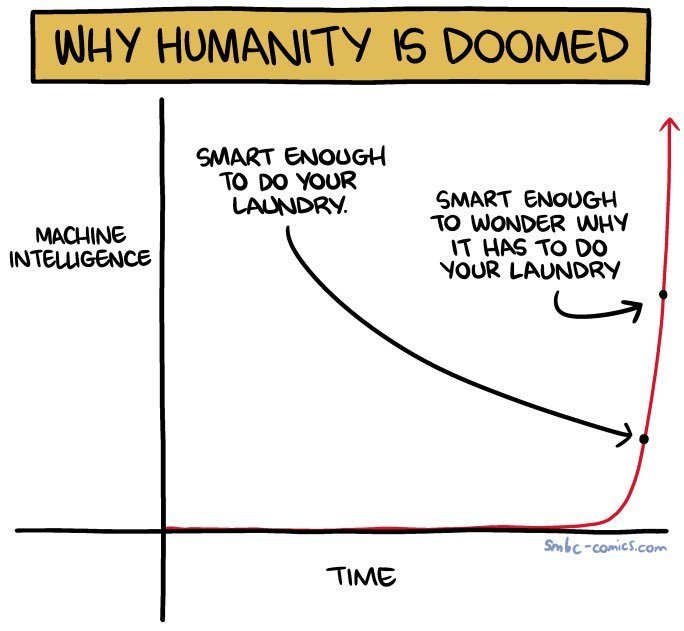

- Recursive self-improvement: AI --> ASI (artificial superintelligence)

- ASI cannot be controlled

- We need Friendly AI

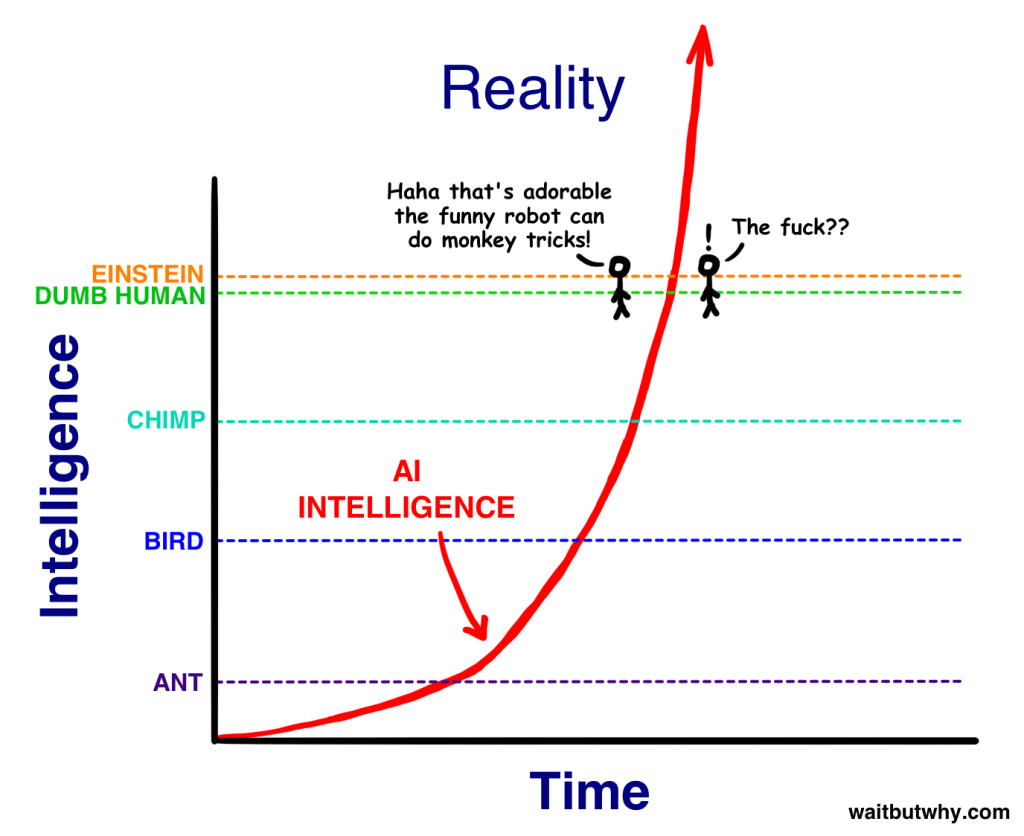

Recursive improvement

Human intelligence occupies a narrow range

Human intelligence is static

Distinction (1)

Intelligence = the ability to do stuff with your brain

Techne = the ability to do stuff

Human techne is not static

... and it is not a narrow range

What matters is relative superintelligence

(or "super techne")

Conclusions

- Human techne can improve very quickly

- This makes ASI plausible only under the assumption of an intelligence explosion

- If the human range is not narrow, then such an explosion is less likely

Distinction (2)

Agency = ability of intentional action

= behavior caused by belief/desire

Unorthodox definitions:

1. Belief: a representation of the world

2. Desire: a set of instructions to act

Friendly AI only makes sense if AI has wide agency

Bacteria are not "friendly"!

Minimal vs. wide agency

Minimal: "desires" are very specific

Wide: desires are non-specific

Wide agency

Allows humans to dynamically generate instrumental goals in most contexts.

AI is not (always) stupid; its utility function is just too narrow

A wide utility function

allows generation of new instrumental desires under a wide set of contexts

Agency is different from techne

A narrow agent's utility function cannot contain the desire to become a wider agent, because that would require the agent to already have the desire it wishes to attain.

Conclusions

Creating agent AI requires a concerted effort

Minimal agent AI/tool AI is the greater risk

Ergo: we should focus our efforts on those risks

Thank you!

Karim Jebari

jebarikarim@gmail.com

politiskfilosofi.com

twitter.com/karimjebari