Mathematical Analysis of Ensemble Kalman Filter

Kota Takeda (1, 2), Takashi Sakajo (1)

1. Department of Mathematics, Kyoto University

2. Data assimilation research team, RIKEN R-CCS

17th EASIAM Annual Conference, Jun. 30, 2024

I'm Kota Takeda

President of SIAM SC Kyoto, Japan

Kota Takeda

Position

Research Topic

- Ph.D. student at Kyoto University

- Junior Research Associate at RIKEN

- President of SIAM SC Kyoto

- Mathematical Fluid Mechanics

- Uncertainty Quantification

- Data Assimilation

SIAM SC Kyoto

Activities

- 1 or 2 student symposiums per year

- Monthly seminar

Members

13 students (doctoral and master's) from mathematics, engineering, life science, etc.

If you want to come to Kyoto...

Please let me know!

(we can collaborate in any way)

Data Assimilation (DA)

Seamless integration of data into numerical models

Model

Dynamical systems and their numerical simulations

Data

Obtained from experiments or measurements

×

Setup

Model dynamics

Observation

Aim

Unknown

Given

Construct

estimate

Quality Assessment

Given

Model

Obs.

Obs.

unknown

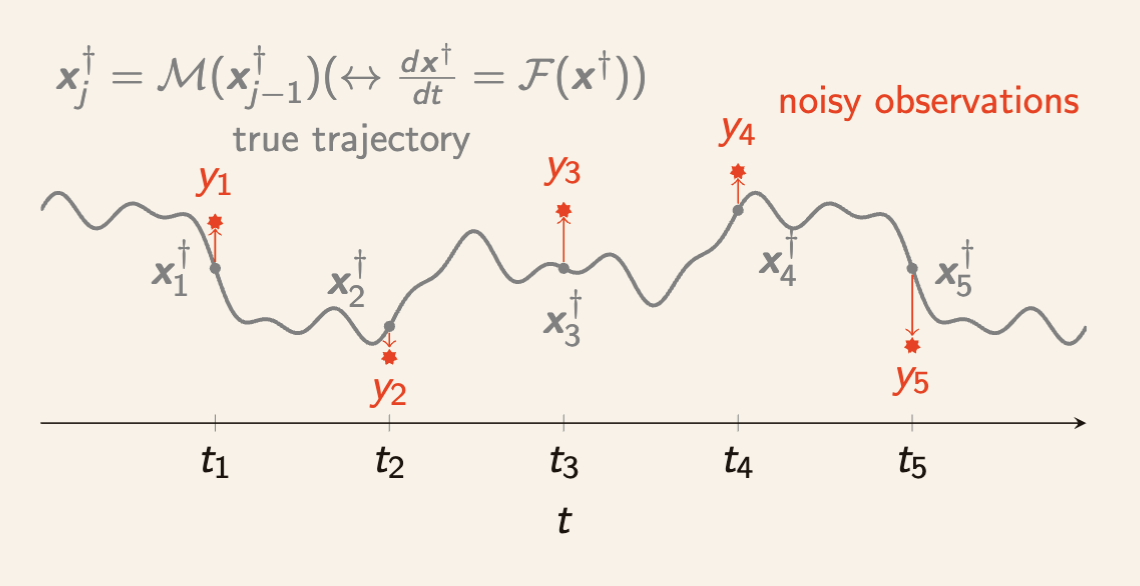

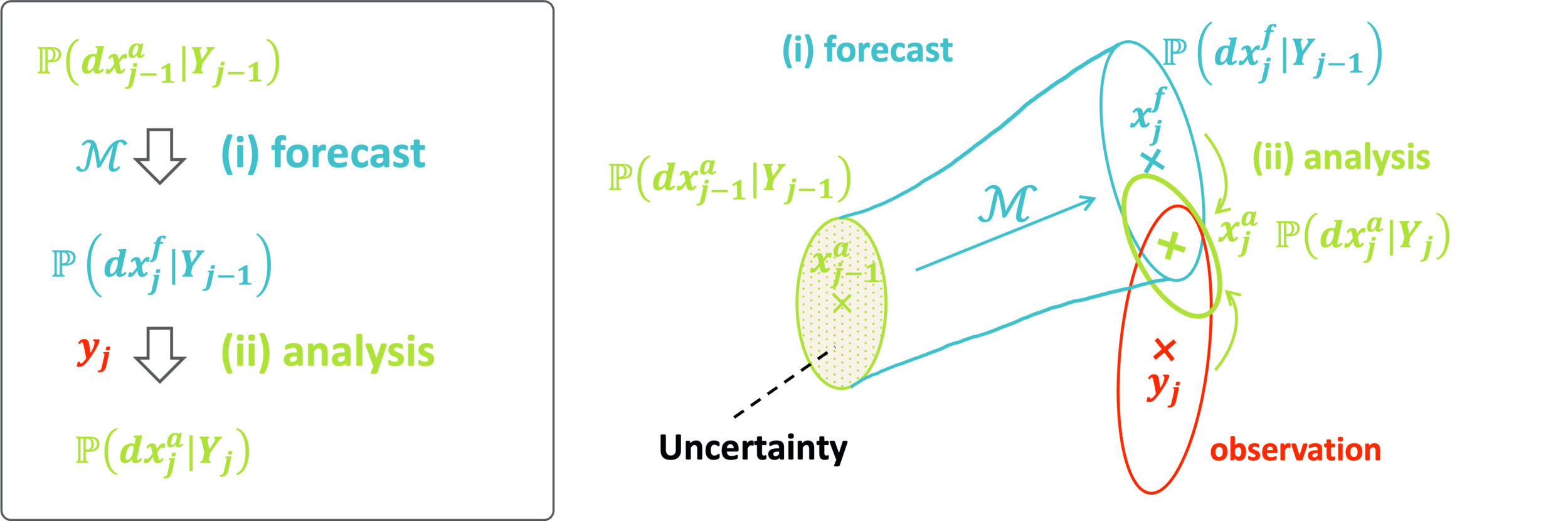
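The formulas of the Setup slide are not recoverable from the text. A common discrete-time formulation that matches the labels (model dynamics, observation, aim, quality assessment) is sketched here. The symbols $\Psi$, $H$, $\xi_n$, $R$, $Y_n$, and $v_n$ are assumed notation, not taken from the slide.

```latex
\begin{aligned}
&\text{Model dynamics:} && u_n = \Psi(u_{n-1}), \qquad u_0 \ \text{unknown},\\
&\text{Observation:}    && y_n = H u_n + \xi_n, \qquad \xi_n \sim N(0, R),\\
&\text{Aim:}            && \text{given } Y_n = (y_1, \dots, y_n), \ \text{construct an estimate } v_n \text{ of } u_n,\\
&\text{Quality assessment:} && \mathbb{E}\,\lvert v_n - u_n \rvert^2 .
\end{aligned}
```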

Sequential Data Assimilation

→ Next: approximate this numerically

Prediction: by model

Update: by Bayes' rule

We can construct the exact distribution in two steps at each time step.
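In standard notation, the two steps take the following form. This is the textbook Bayesian filtering recursion, written with assumed symbols: $u_n$ for the state, $y_n$ for the observation, and $Y_n = (y_1, \dots, y_n)$ for the accumulated data.

```latex
\begin{aligned}
&\text{Prediction (by model):} &&
p(u_n \mid Y_{n-1}) = \int p(u_n \mid u_{n-1})\, p(u_{n-1} \mid Y_{n-1})\, \mathrm{d}u_{n-1},\\
&\text{Update (by Bayes' rule):} &&
p(u_n \mid Y_n) \propto p(y_n \mid u_n)\, p(u_n \mid Y_{n-1}).
\end{aligned}
```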

Data Assimilation Algorithms

We focus only on approximate Gaussian algorithms

Kalman filter (KF) [Kalman 1960]

Assume: linear F, Gaussian noise

- All distributions are Gaussian

- Update of mean and covariance

Ensemble Kalman filter (EnKF)

Non-linear extension by Monte Carlo

- Update of ensemble

- Two major implementations:

  - Perturbed Observation (PO): stochastic

  - Ensemble Transform Kalman Filter (ETKF): deterministic
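As a point of reference for the ensemble methods, the Kalman filter's mean and covariance update can be sketched in a few lines of NumPy. This is a minimal sketch under the linear-Gaussian assumption. The function names and the symbols `Q` (model noise covariance), `H` (observation matrix), and `R` (observation noise covariance) are illustrative assumptions, not notation from the slides.

```python
import numpy as np

def kf_predict(m, C, F, Q):
    """Prediction: push mean and covariance through the linear model F."""
    m_hat = F @ m
    C_hat = F @ C @ F.T + Q
    return m_hat, C_hat

def kf_update(m_hat, C_hat, y, H, R):
    """Update: condition the Gaussian forecast on the observation y."""
    S = H @ C_hat @ H.T + R               # innovation covariance
    K = np.linalg.solve(S, H @ C_hat).T   # Kalman gain K = C_hat H^T S^{-1}
    m = m_hat + K @ (y - H @ m_hat)
    C = (np.eye(len(m_hat)) - K @ H) @ C_hat
    return m, C
```

The EnKF replaces `m_hat` and `C_hat` with the sample mean and sample covariance of an ensemble, which is what allows a non-linear model in the prediction step.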

Regularized Least Squares

Linear F

Bayes' rule

Regularized Least Squares

approx.

ensemble

predict

update

PO (stochastic EnKF)

Stochastic!

(Burgers+1998) G. Burgers, P. J. van Leeuwen, and G. Evensen, Analysis Scheme in the Ensemble Kalman Filter, Mon. Weather Rev., 126 (1998), pp. 1719–1724.
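The stochastic character of PO comes from giving each ensemble member its own randomly perturbed copy of the observation. The following is a minimal sketch of one analysis step, assuming a linear observation matrix `H`, noise covariance `R`, and an ensemble stored column-wise. All names are illustrative, and the slide's own formulas are not reproduced here.

```python
import numpy as np

def po_update(V_hat, y, H, R, rng):
    """Perturbed-observation analysis step.

    V_hat : (N_x, m) forecast ensemble, one member per column.
    y     : (N_y,) observation.
    rng   : numpy.random.Generator used to draw the perturbations.
    """
    m = V_hat.shape[1]
    dV = V_hat - V_hat.mean(axis=1, keepdims=True)
    C_hat = dV @ dV.T / (m - 1)              # sample forecast covariance
    S = H @ C_hat @ H.T + R
    K = np.linalg.solve(S, H @ C_hat).T      # ensemble Kalman gain
    # Each member is updated with its own perturbed observation y + noise.
    noise = rng.multivariate_normal(np.zeros(len(y)), R, size=m).T
    Y = y[:, None] + noise
    return V_hat + K @ (Y - H @ V_hat)
```

A call such as `po_update(V_hat, y, H, R, np.random.default_rng(0))` returns an analysis ensemble of the same shape as `V_hat`.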

ETKF (deterministic EnKF)

Deterministic!

(Bishop+2001) C. H. Bishop, B. J. Etherton, and S. J. Majumdar, Adaptive Sampling with the Ensemble Transform Kalman Filter. Part I: Theoretical Aspects, Mon. Weather Rev., 129 (2001), pp. 420–436.
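The ETKF avoids random perturbations: it updates the ensemble mean with the Kalman gain and applies a deterministic transform to the ensemble perturbations so that the sample covariance matches the Kalman update. The sketch below uses the symmetric square root of the transform matrix. This is one common variant, and the names and the choice of square root are assumptions rather than the slide's definition.

```python
import numpy as np

def etkf_update(V_hat, y, H, R):
    """ETKF analysis step with a symmetric square-root transform.

    V_hat : (N_x, m) forecast ensemble, one member per column.
    """
    m = V_hat.shape[1]
    mean = V_hat.mean(axis=1, keepdims=True)
    X = (V_hat - mean) / np.sqrt(m - 1)        # scaled perturbations, C_hat = X X^T
    Yb = H @ X                                 # perturbations in observation space
    A = np.eye(m) + Yb.T @ np.linalg.solve(R, Yb)
    # Mean update: equivalent to mean + K (y - H mean) with K = X A^{-1} Yb^T R^{-1}.
    d = y - (H @ mean).ravel()
    w = np.linalg.solve(A, Yb.T @ np.linalg.solve(R, d))
    mean_a = mean + (X @ w)[:, None]
    # Transform T = A^{-1/2} via the eigendecomposition of the symmetric matrix A.
    evals, U = np.linalg.eigh(A)
    T = U @ np.diag(evals ** -0.5) @ U.T
    return mean_a + np.sqrt(m - 1) * (X @ T)
```

Because the vector of ones is an eigenvector of `A` with eigenvalue 1, the symmetric square root keeps the transformed perturbations centered, so the analysis ensemble mean equals `mean_a`.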

Inflation (numerical technique)

Issue: Underestimation of covariance through the DA cycle.

→ Poor state estimation.

→ (idea) inflating covariance before the update step.

Two basic methods of (covariance) inflation: additive and multiplicative
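The two inflation methods can be sketched as follows. The parameter name `alpha` and the exact scaling (adding `alpha**2` times the identity, or stretching perturbations by `alpha`) are assumptions chosen for illustration; the slide's own definitions are not recoverable from the text.

```python
import numpy as np

def additive_inflation(C_hat, alpha):
    """Additive inflation: add alpha^2 * I to the forecast covariance."""
    return C_hat + alpha**2 * np.eye(C_hat.shape[0])

def multiplicative_inflation(V_hat, alpha):
    """Multiplicative inflation: stretch the perturbations about the mean by alpha >= 1.

    The sample covariance of the returned ensemble is alpha^2 times the original.
    """
    mean = V_hat.mean(axis=1, keepdims=True)
    return mean + alpha * (V_hat - mean)
```

Additive inflation acts on the covariance matrix and fits PO naturally, while multiplicative inflation acts on the ensemble itself, which suits the perturbation-based form of the ETKF.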

Literature review

- Consistency (Mandel+2011, Kwiatkowski+2015)

- Sampling errors (Al-Ghattas+2024)

- Error analysis (full observation)

  - PO (Kelly+2014)

  - ETKF (T.+2024) → current

- Error analysis (partial observation) → future

(Kelly+2014) D. T. B. Kelly, K. J. H. Law, and A. M. Stuart, Well-posedness and accuracy of the ensemble Kalman filter in discrete and continuous time, Nonlinearity, 27 (2014), pp. 2579–260.

(Al-Ghattas+2024) O. Al-Ghattas and D. Sanz-Alonso, Non-asymptotic analysis of ensemble Kalman updates: Effective dimension and localization, Information and Inference: A Journal of the IMA, 13 (2024).

(Kwiatkowski+2015) E. Kwiatkowski and J. Mandel, Convergence of the square root ensemble Kalman filter in the large ensemble limit, SIAM/ASA J. Uncertain. Quantif., 3 (2015), pp. 1–17.

(Mandel+2011) J. Mandel, L. Cobb, and J. D. Beezley, On the convergence of the ensemble Kalman filter, Appl. Math., 56 (2011), pp. 533–541.

How does EnKF approximate KF?

How does EnKF approximate the true state?

Mathematical analysis of EnKF

Assumptions on model

(Reasonable)

Assumption on observation

(Strong)

Assumptions for analysis

Assumption (obs-1)

full observation

Strong!

Assumption (model-1)

Assumption (model-2)

Assumption (model-2')

Reasonable

Dissipative dynamical systems

Lorenz '63 and '96 equations, widely used in geoscience

Example (Lorenz 96)

non-linear conserving

linear dissipating

forcing

Assumption (model-1, 2, 2')

hold
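The three labels (non-linear conserving, linear dissipating, forcing) correspond to the three terms of the Lorenz 96 vector field, du_i/dt = (u_{i+1} - u_{i-2}) u_{i-1} - u_i + F with periodic indices. The sketch below writes this out and is a minimal illustration. The default `forcing=8.0` is a commonly used value and is an assumption here, not a value taken from the slide.

```python
import numpy as np

def lorenz96_rhs(u, forcing=8.0):
    """Right-hand side of the Lorenz 96 equations with periodic indices."""
    # Non-linear term: conserves the energy |u|^2 / 2 (its inner product with u vanishes).
    nonlinear = (np.roll(u, -1) - np.roll(u, 2)) * np.roll(u, 1)
    # Linear term -u dissipates energy; the constant forcing injects it.
    return nonlinear - u + forcing
```

Taking the inner product with `u` shows the dissipative structure: the non-linear term drops out, leaving d/dt (|u|^2 / 2) = -|u|^2 + F * sum(u).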

Example (Lorenz 63)

(incompressible 2D Navier-Stokes equations in infinite-dim. setting)

Error analysis of PO (prev.)

Theorem (Kelly+2014)

variance of observation noise

uniformly in time

Error analysis of ETKF (our)

Theorem (T.+2024)

Summary

ETKF with multiplicative inflation (our)

PO with additive inflation (prev.)

accurate observation limit

Two approximate Gaussian filters (EnKF) are assessed in terms of the state estimation error.

due to

due to

Take home message

Data Assimilation is a seamless integration of

Data into Model.

Statistics/

Probability

Fluid Mechanics/

Other Dynamics

Numerical Analysis

There are still many problems in DA, and

we need to cooperate with a wide range of fields.

Optimization

Engineering

Machine Learning

Computing

Thank you for listening!

Please feel free to contact me.

Any Questions?

ensemble Kalman filter

(SIAM SC Kyoto)

Data Assimilation

Fluid mechanics

Future plans

Issue: partial observation

Partially observed

The full-observation requirement in Assumption (obs-1) is too strong for real-world applications.

In general, we can obtain only partial information about the state.

Example

(full observation),
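To make the distinction concrete, a linear observation operator that measures only selected state components can be built by selecting rows of the identity matrix. This is a hypothetical illustration: the helper name `observation_operator` and the choice of observed indices are assumptions, not the example on the slide.

```python
import numpy as np

def observation_operator(n_x, observed):
    """Return H that picks the state components listed in `observed`."""
    return np.eye(n_x)[observed, :]

# Full observation: every component is measured, so H is the identity.
H_full = observation_operator(4, [0, 1, 2, 3])

# Partial observation: only components 0 and 2 are measured.
H_part = observation_operator(4, [0, 2])

# H^T H is the orthogonal projection onto the observed components.
P = H_part.T @ H_part
```

With `H_part`, the filter receives no direct information about the unobserved components, which is why an error bound has to exploit properties of the model dynamics.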

Future plan

Error analysis with projection

Difficult to obtain the error bound with projection

→ need to use detailed properties of model dynamics.

Related studies (DA with noiseless observations)

If the observations are noiseless, we can reconstruct the true state in the large-time limit with

for Lorenz 63,

for Lorenz 96 (40-dim.).

(Law+2015) K. J. H. Law, A. M. Stuart, and K. C. Zygalakis. Data Assimilation: A Mathematical Introduction. Springer, 2015.

(Law+2016) K. J. H. Law, D. Sanz-Alonso, A. Shukla, and A. M. Stuart. Filter accuracy for the Lorenz 96 model: Fixed versus adaptive observation operators. Phys. D: Nonlinear Phenom., 325:1–13, 2016.
