Deploying Serverless Docker applications on AWS

Andrew Ang

Harvard IT Summit 2019

May 14, 2019

Harvard VPAL Research Group

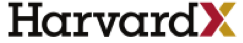

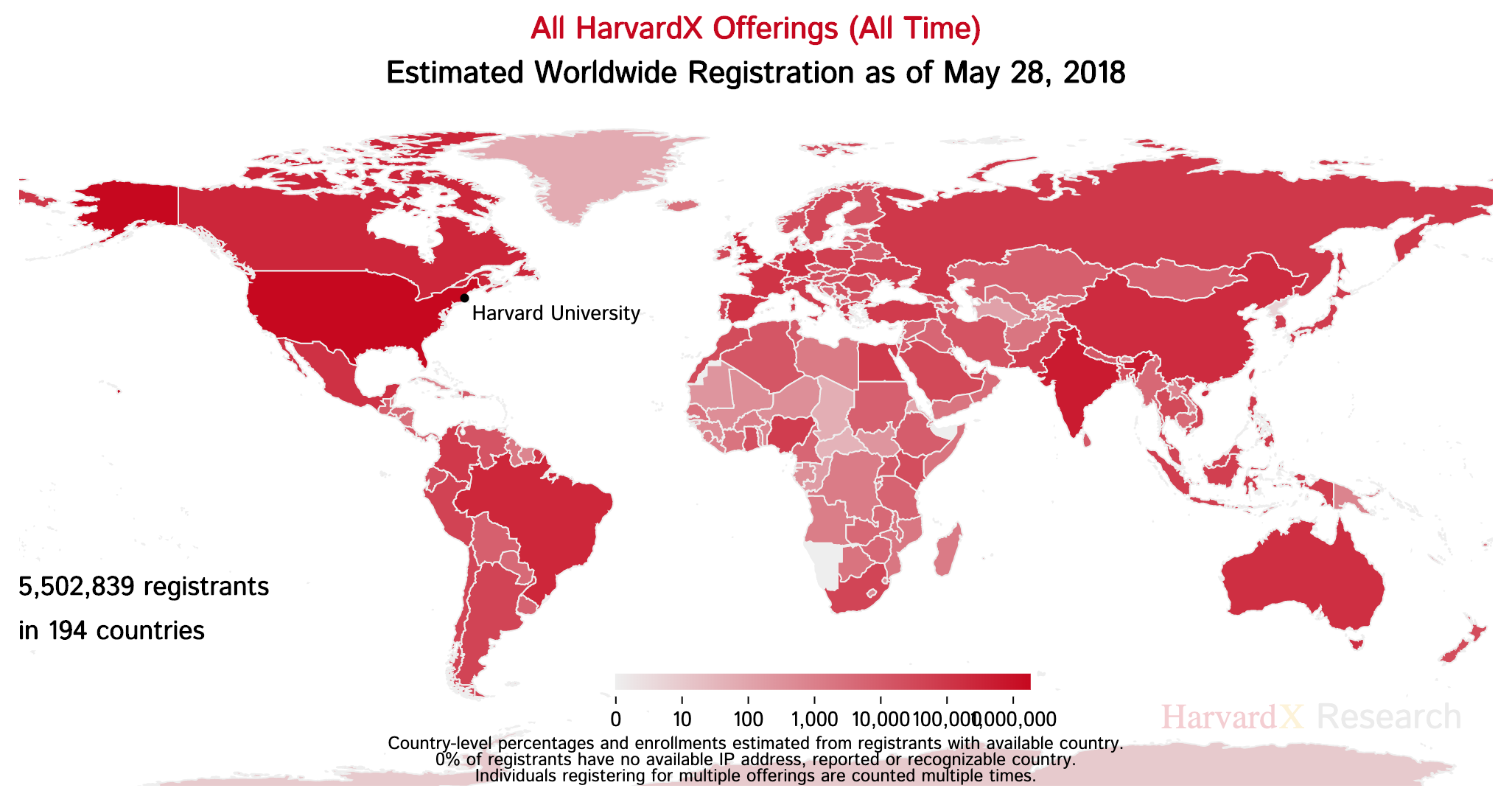

Learning about learning, at scale, using MOOCs ...

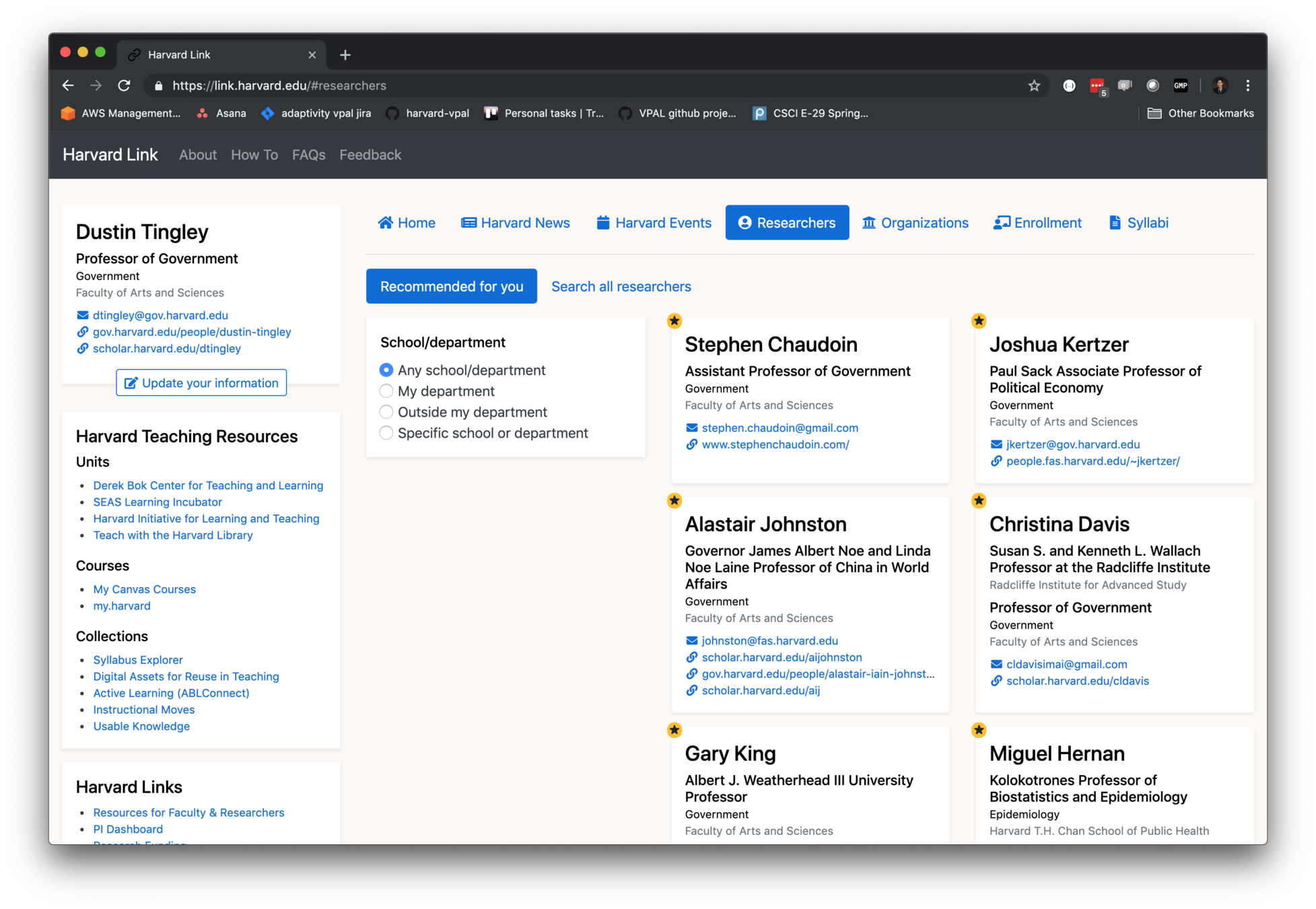

… and bringing research and technology innovations back to campus ...

… to create engaging learning experiences

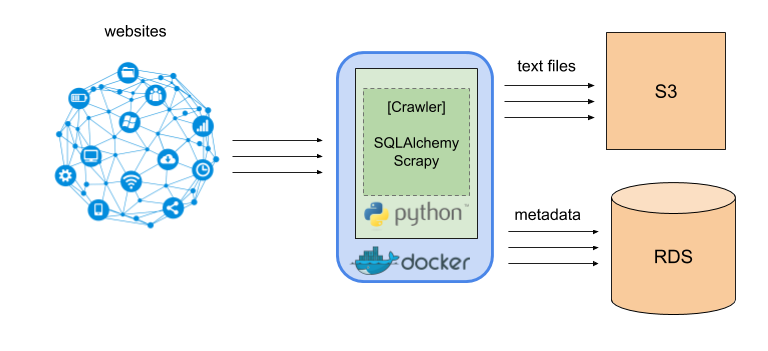

Our use cases for dockerized applications in education research + technology

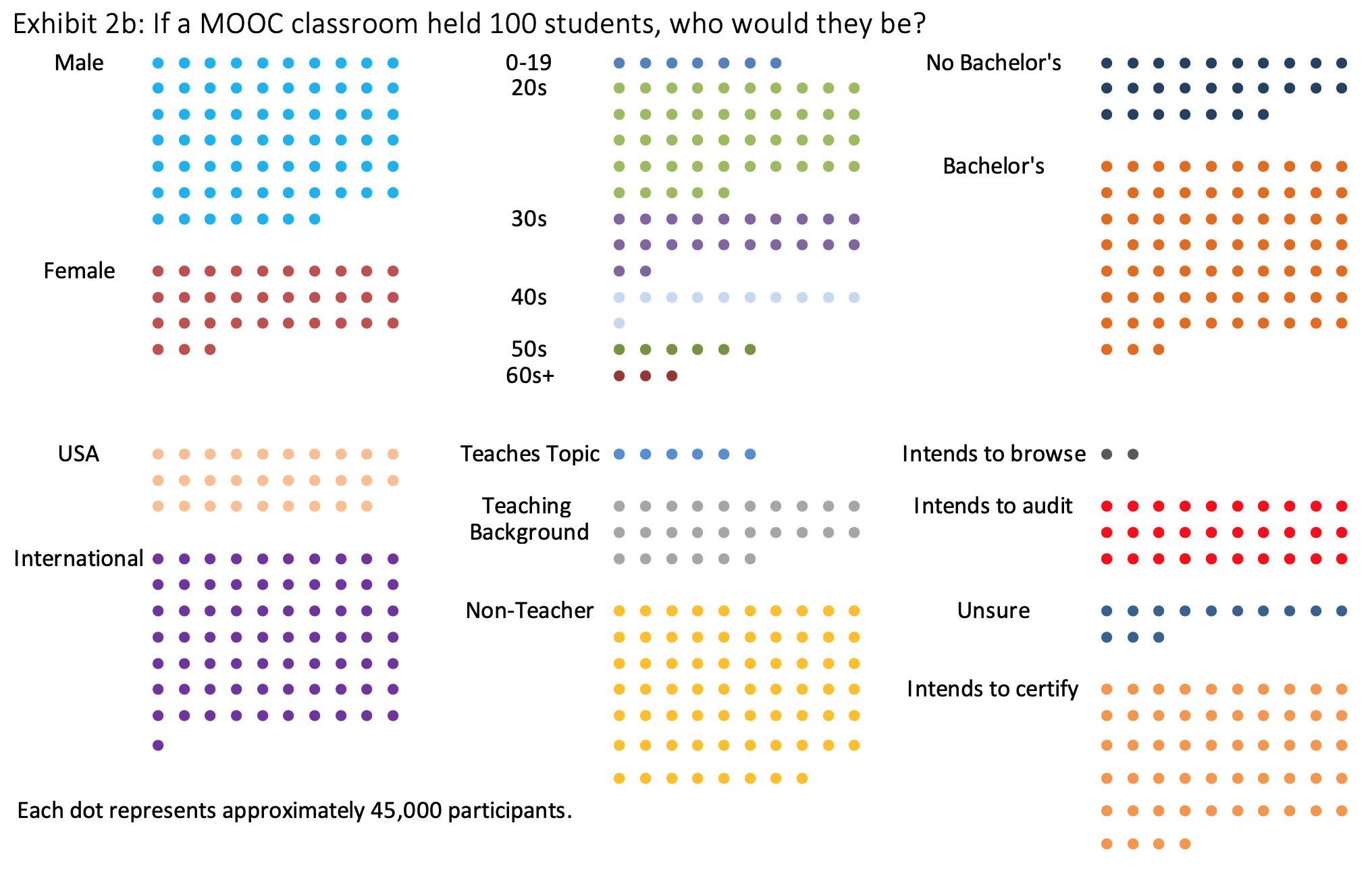

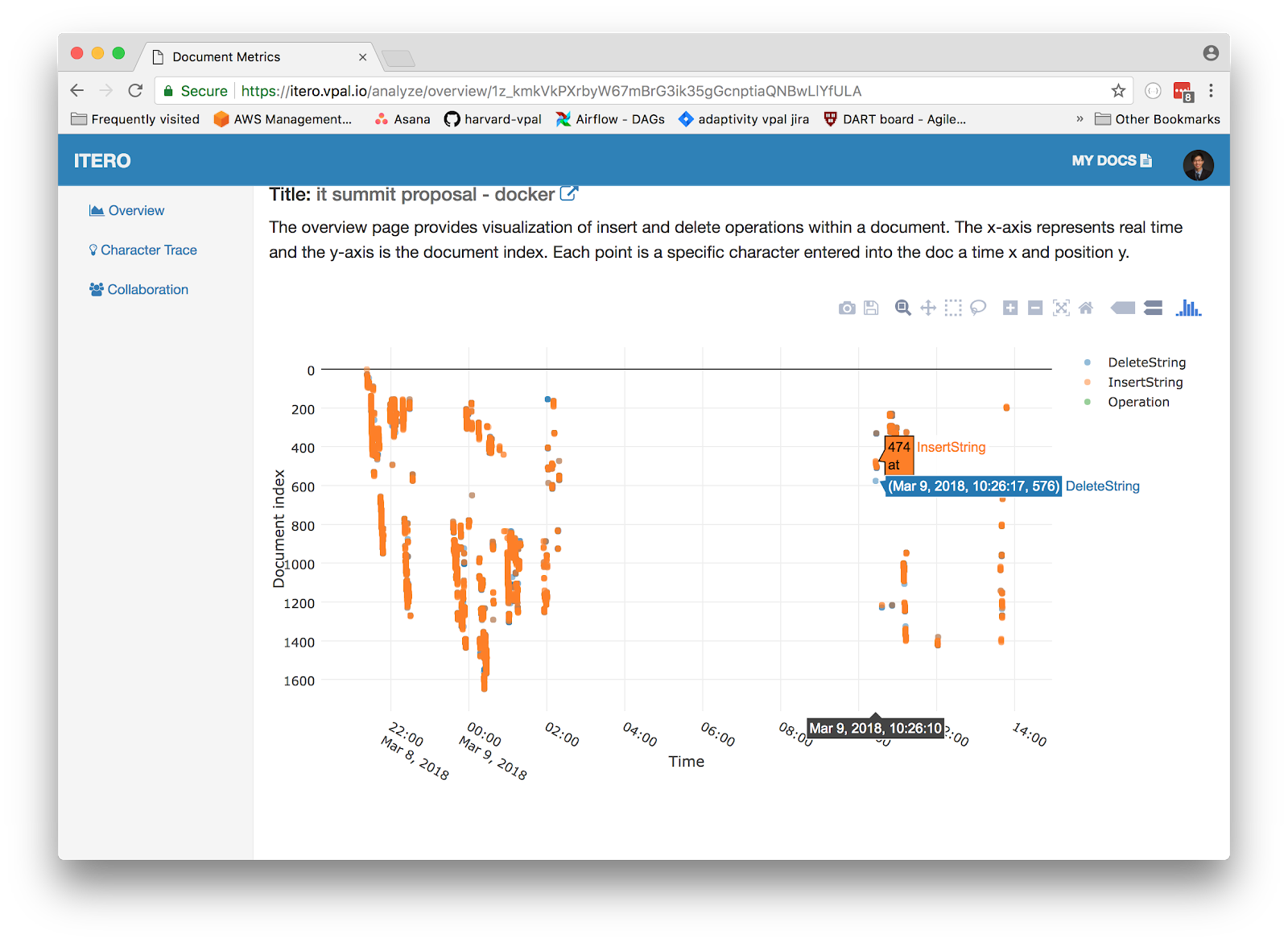

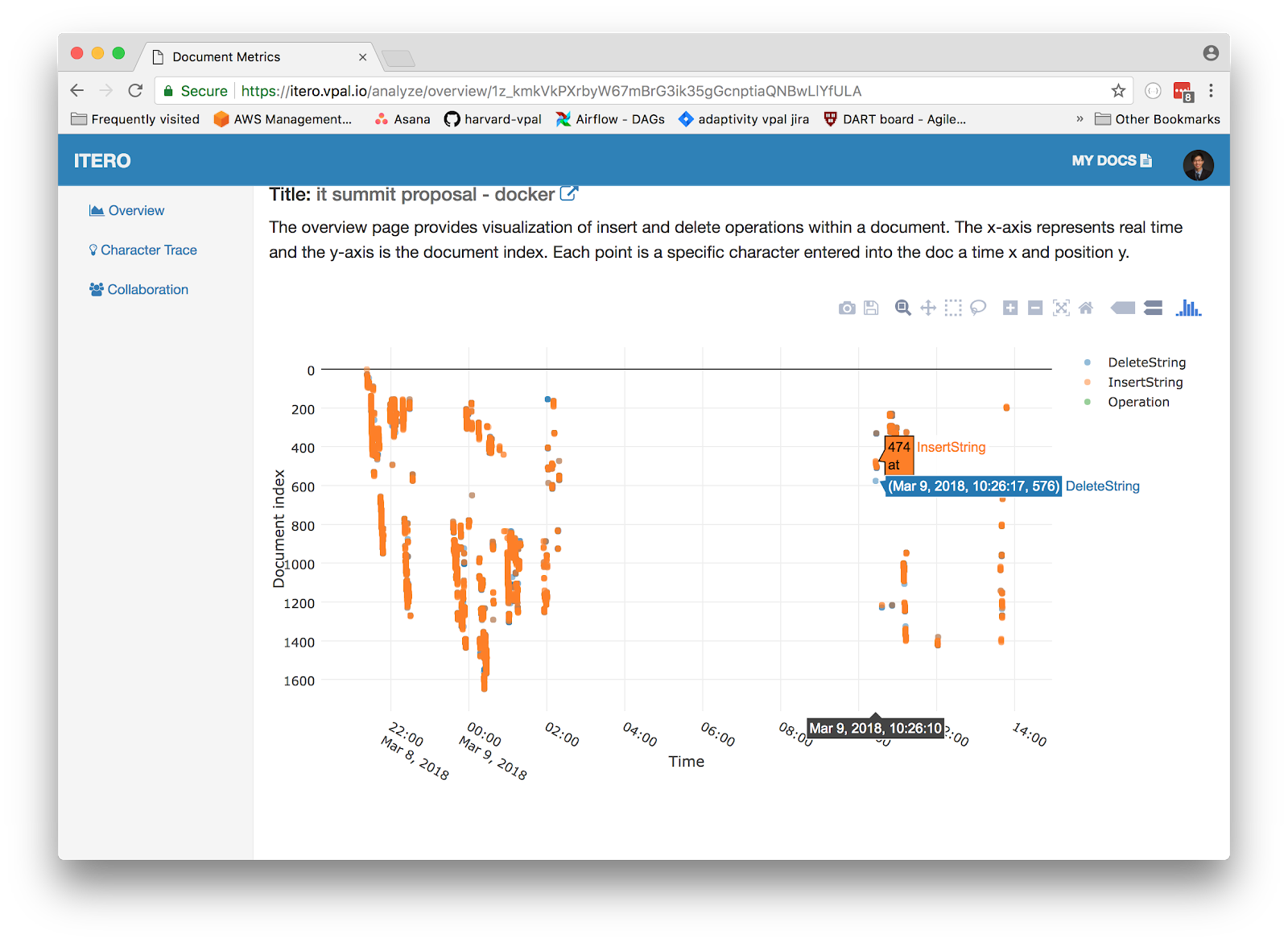

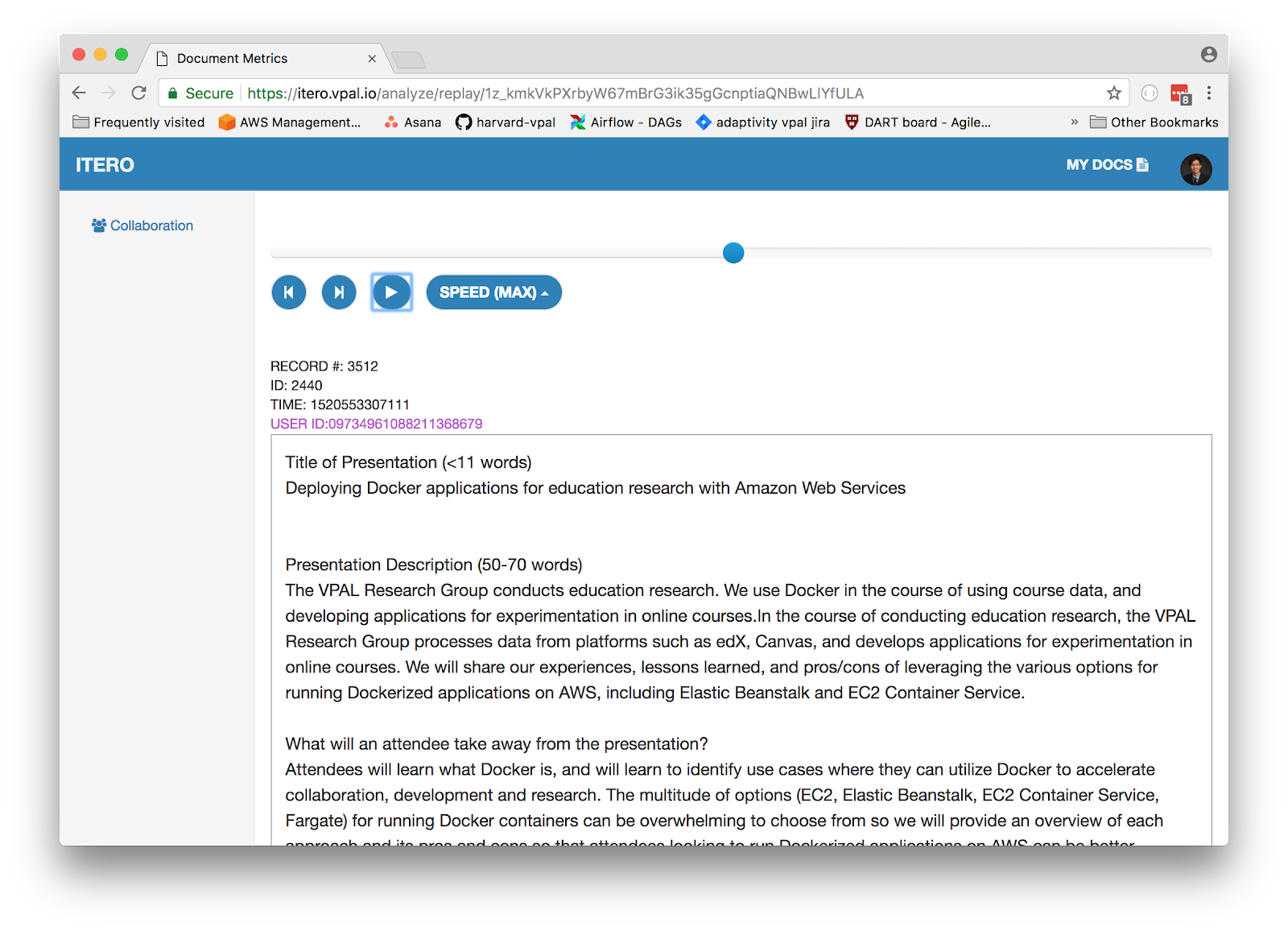

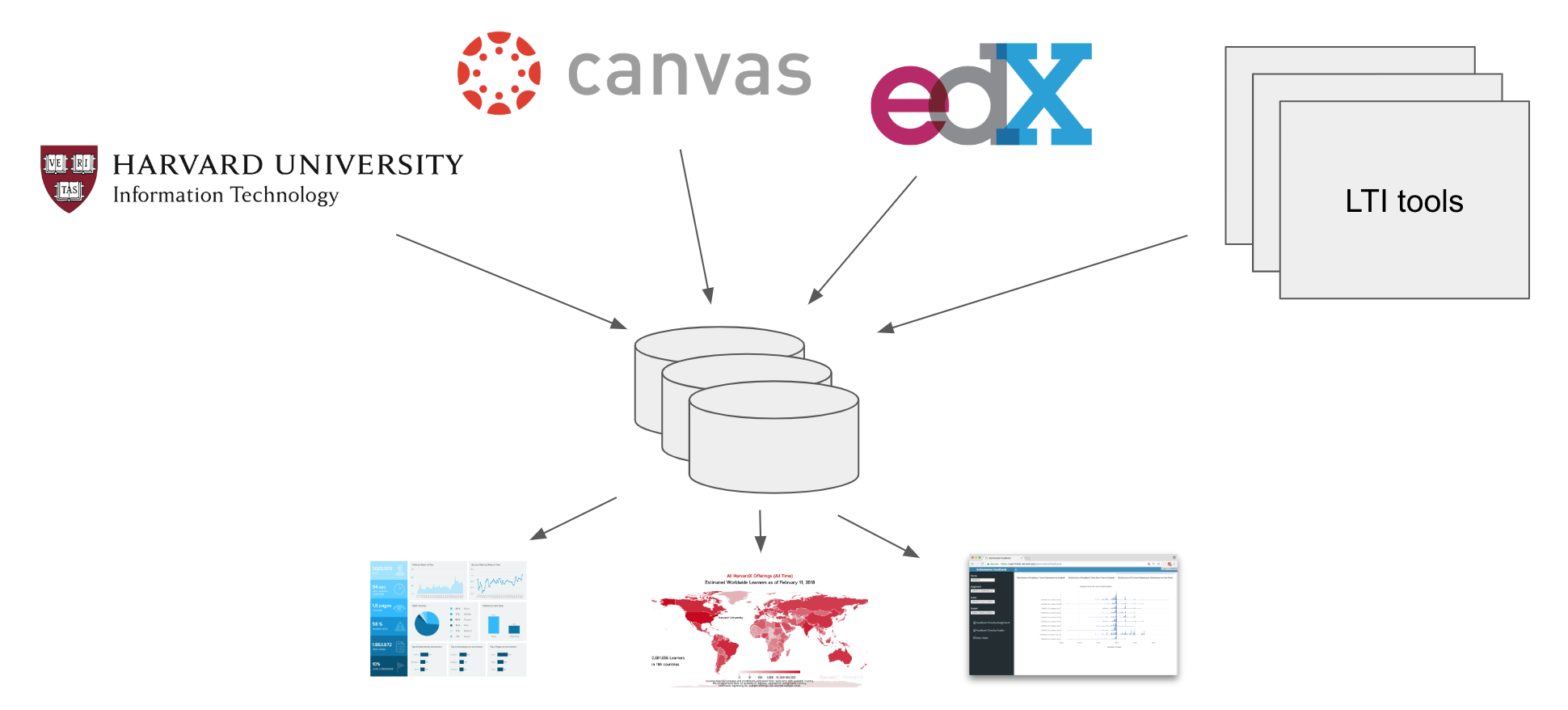

Research and analytics with education data

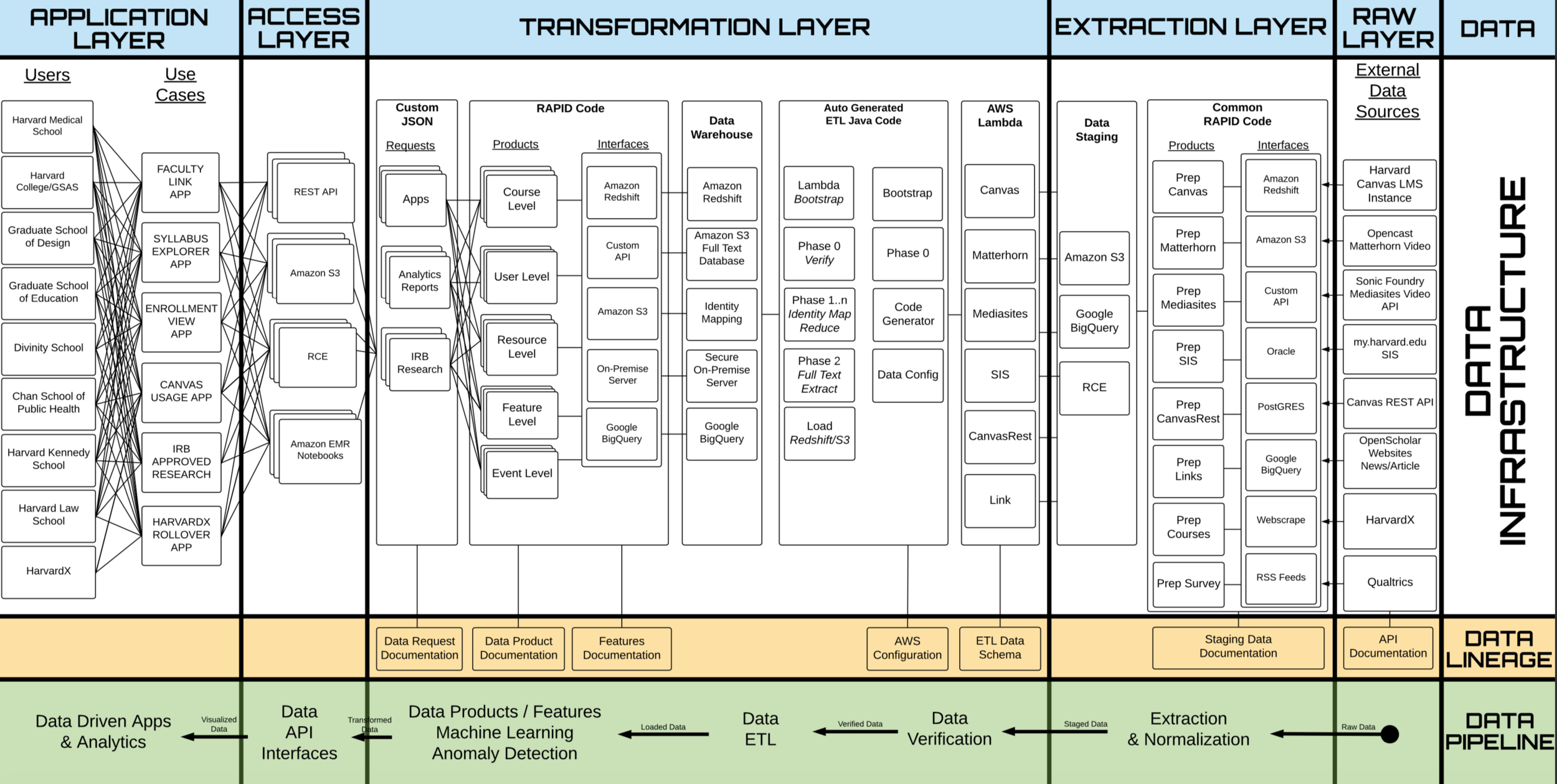

Data pipelines

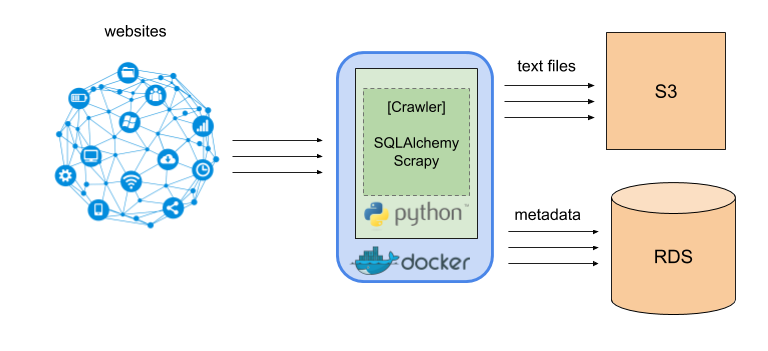

API scraper, web crawler, data ETL

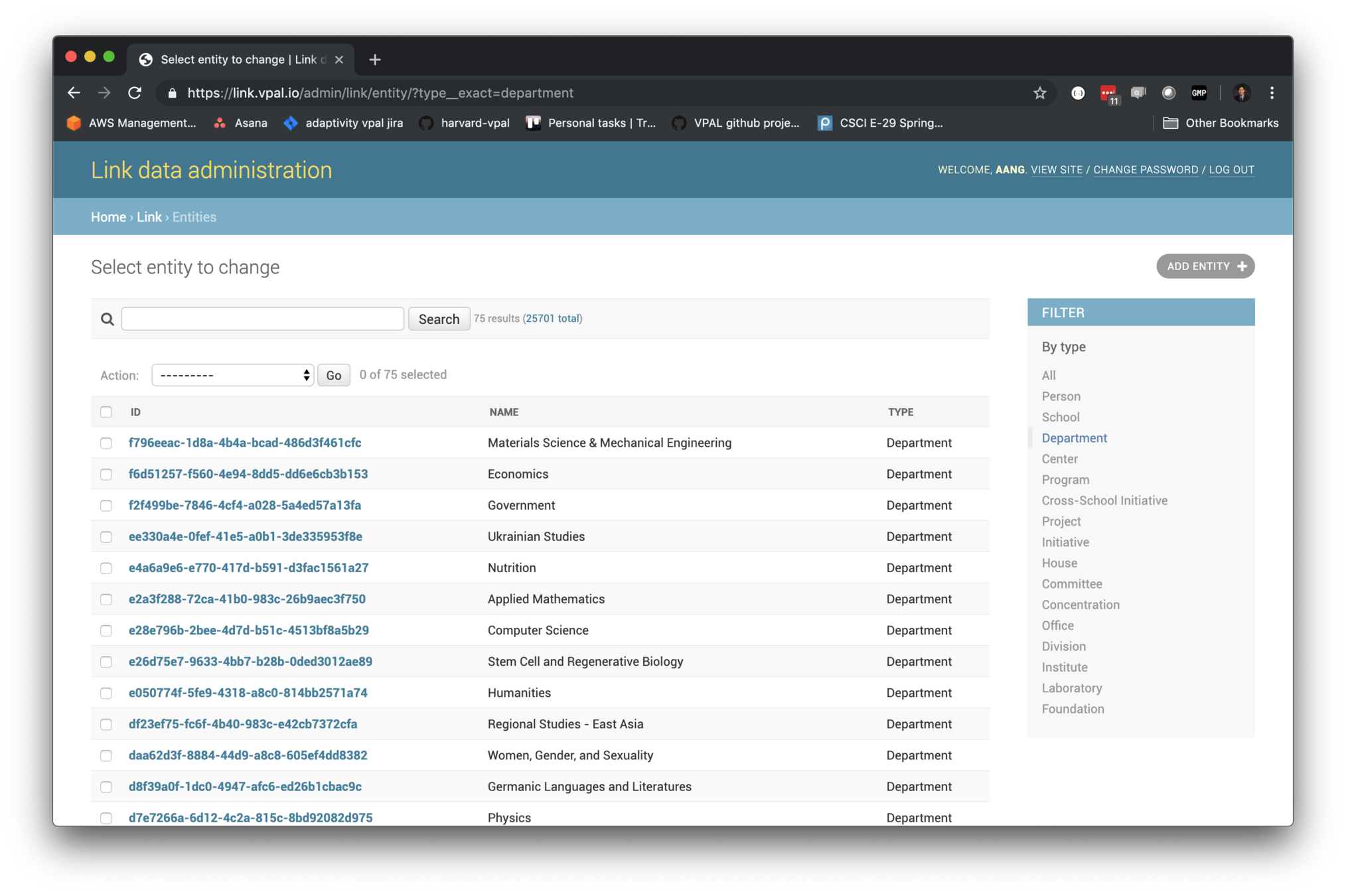

Dashboards - Django admin panel for data pipeline diagnostics

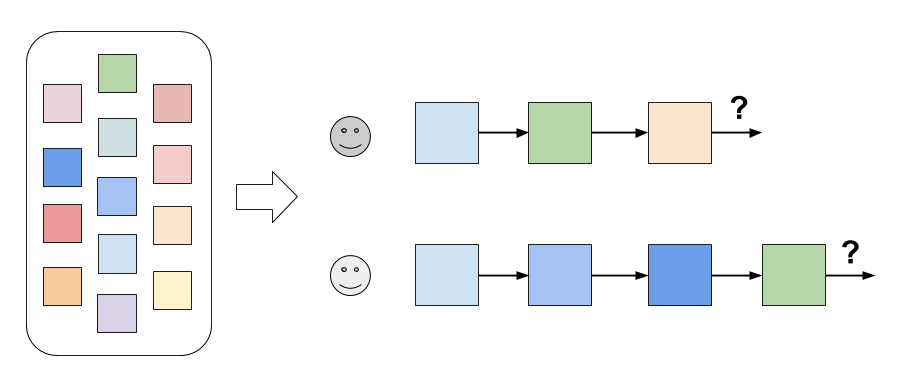

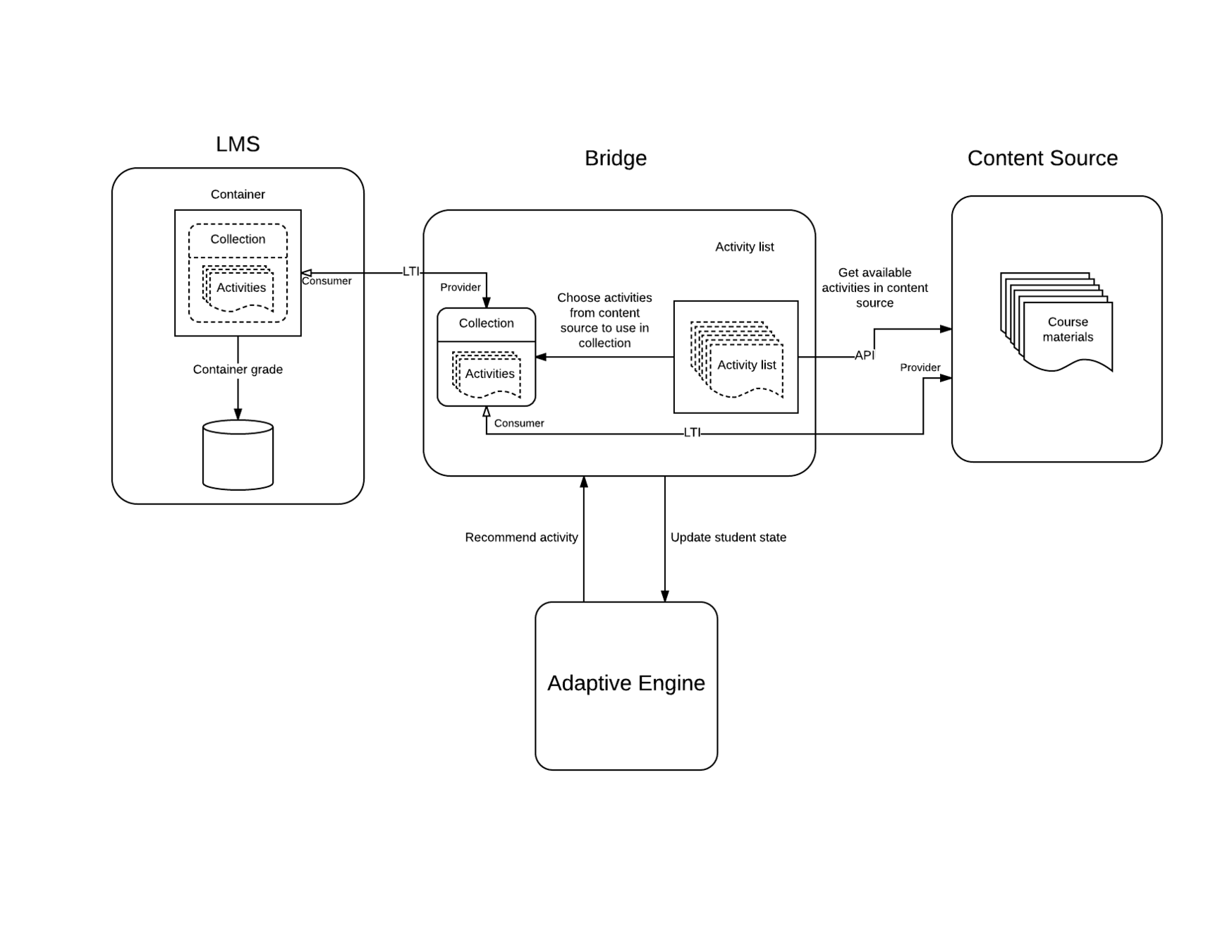

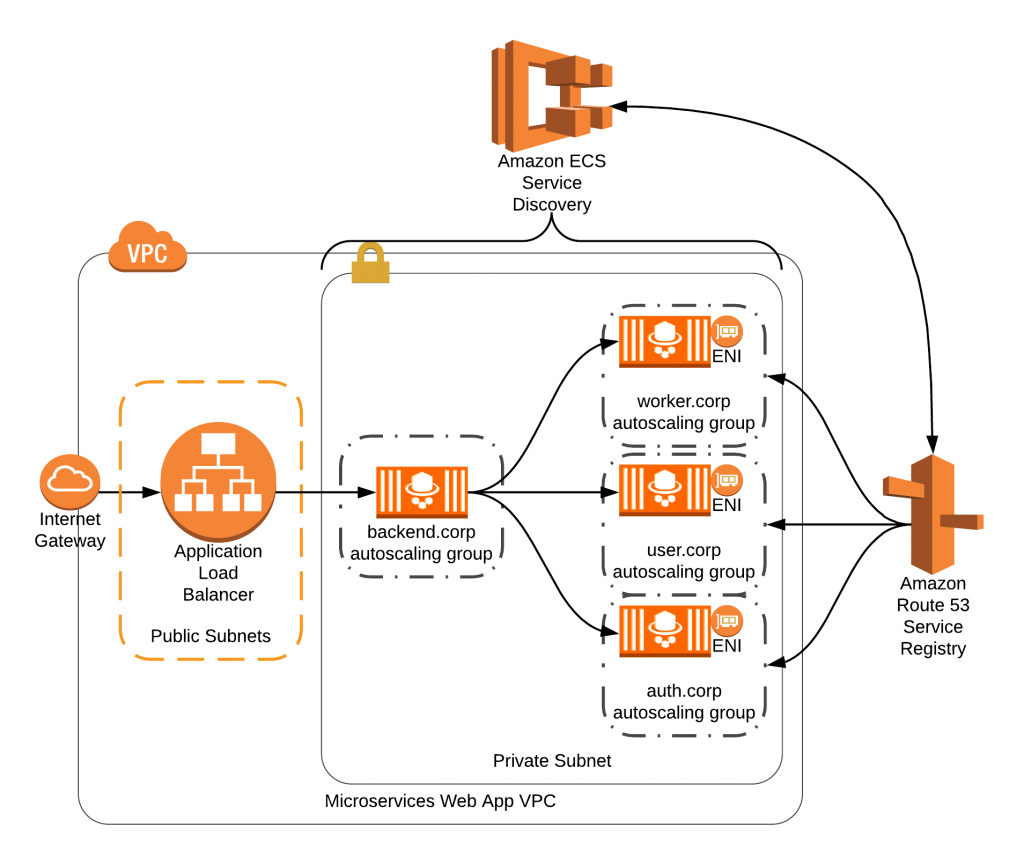

Adaptivity - service architecture

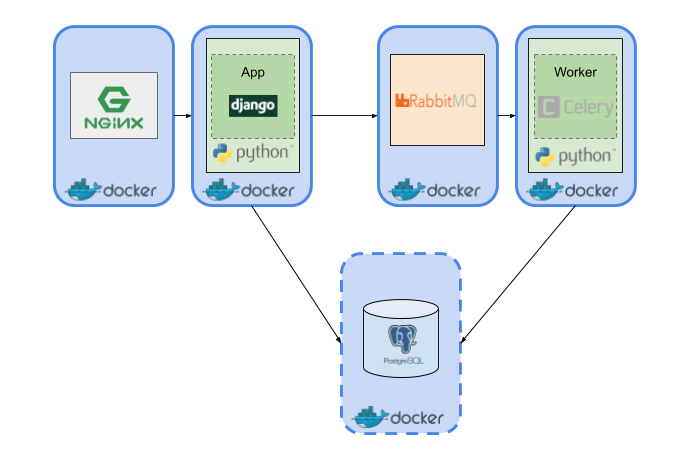

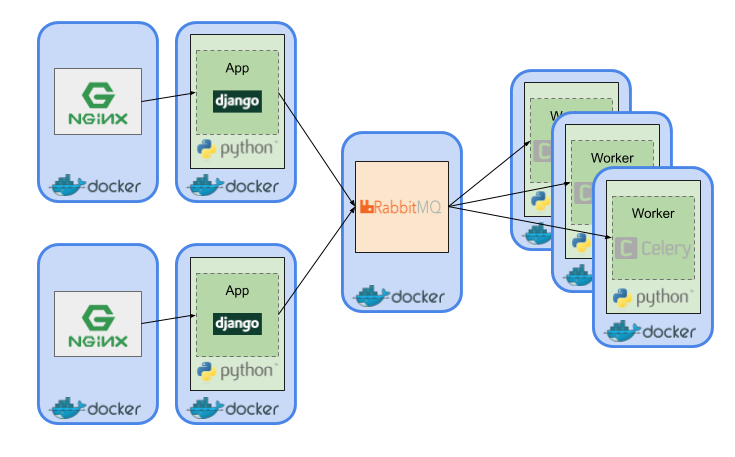

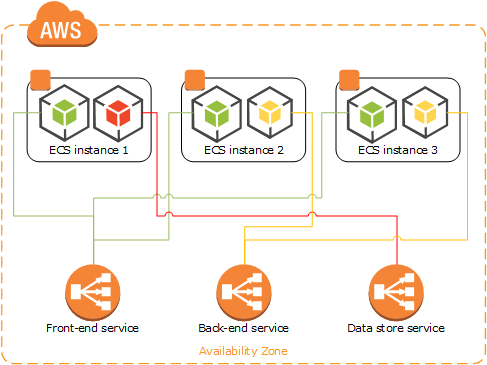

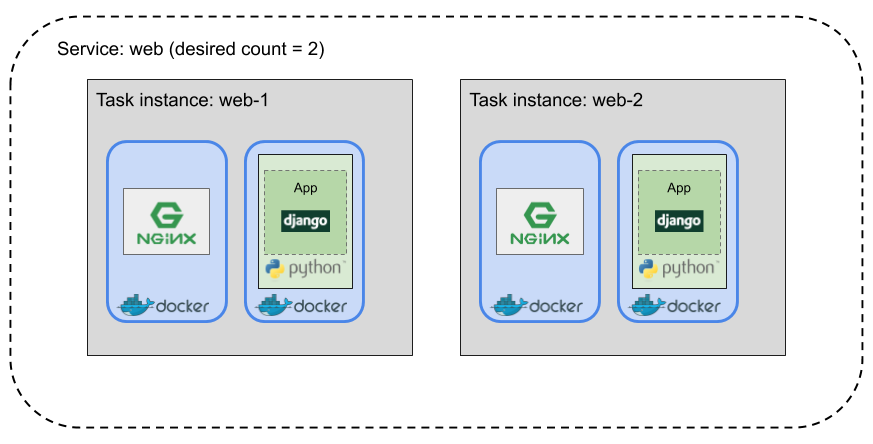

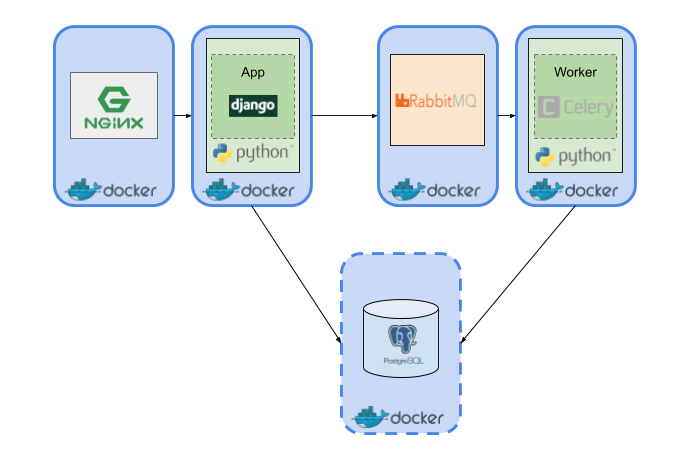

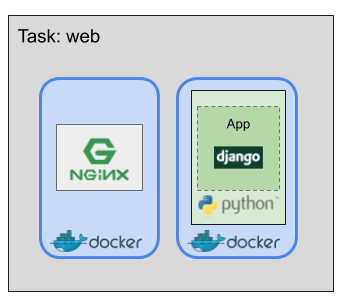

Typical dockerized web application architecture

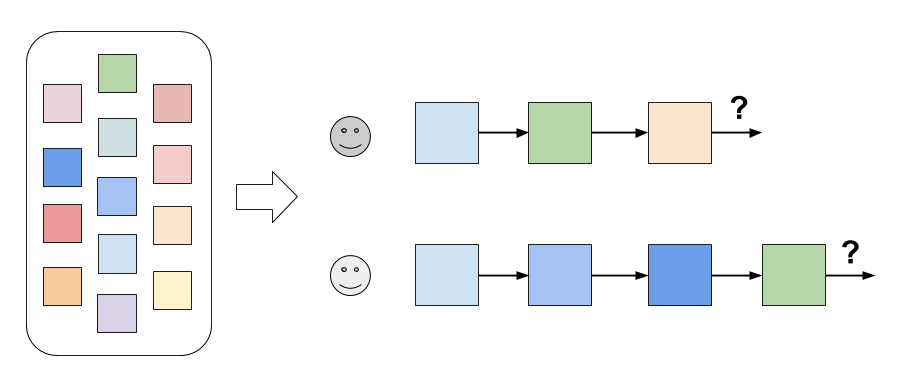

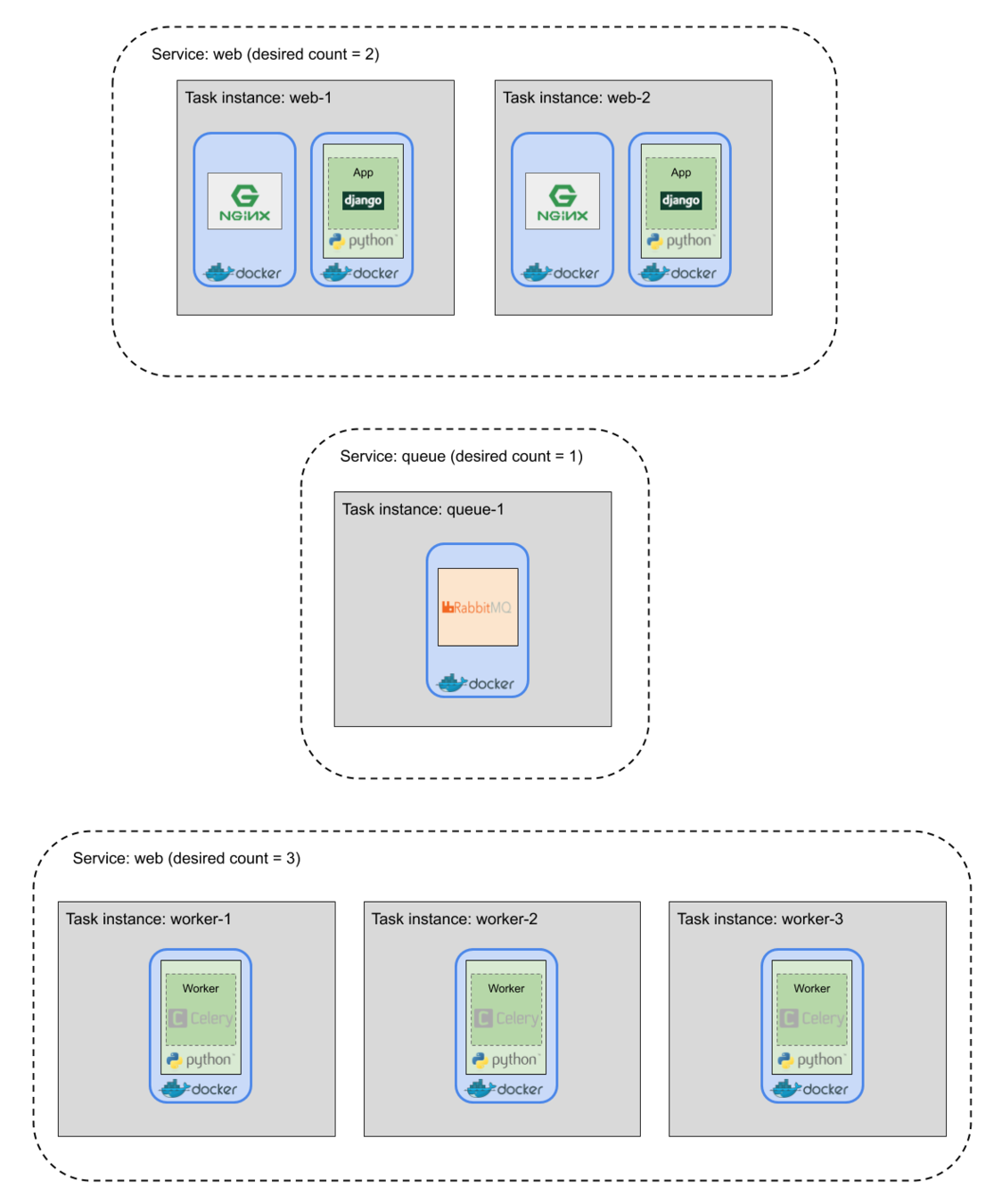

Ideal service scaling

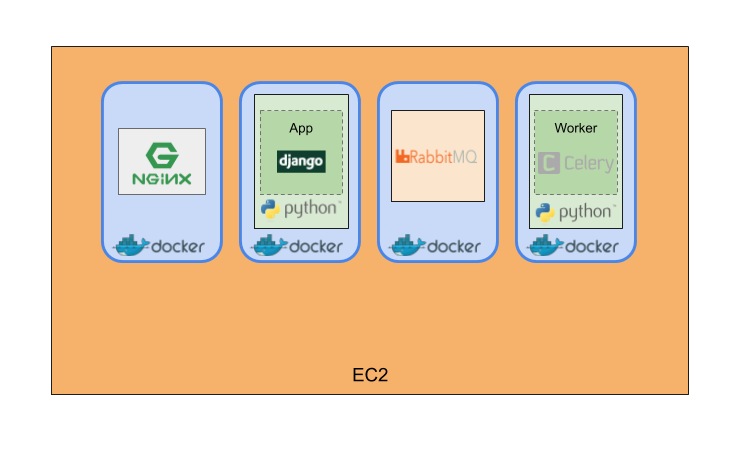

Deployment solution #1:

EC2 + docker-compose

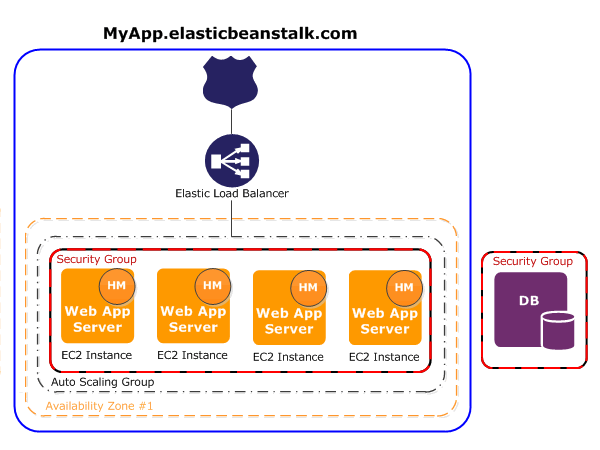

Deployment solution #2a:

Elastic Beanstalk

Previous deployment solution #2b:

Elastic Beanstalk

(Multicontainer Docker)

Previous deployment (attempt) #3:

ECS - Elastic Container Service

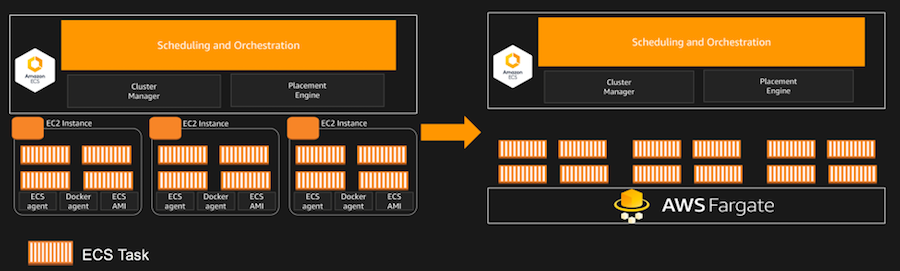

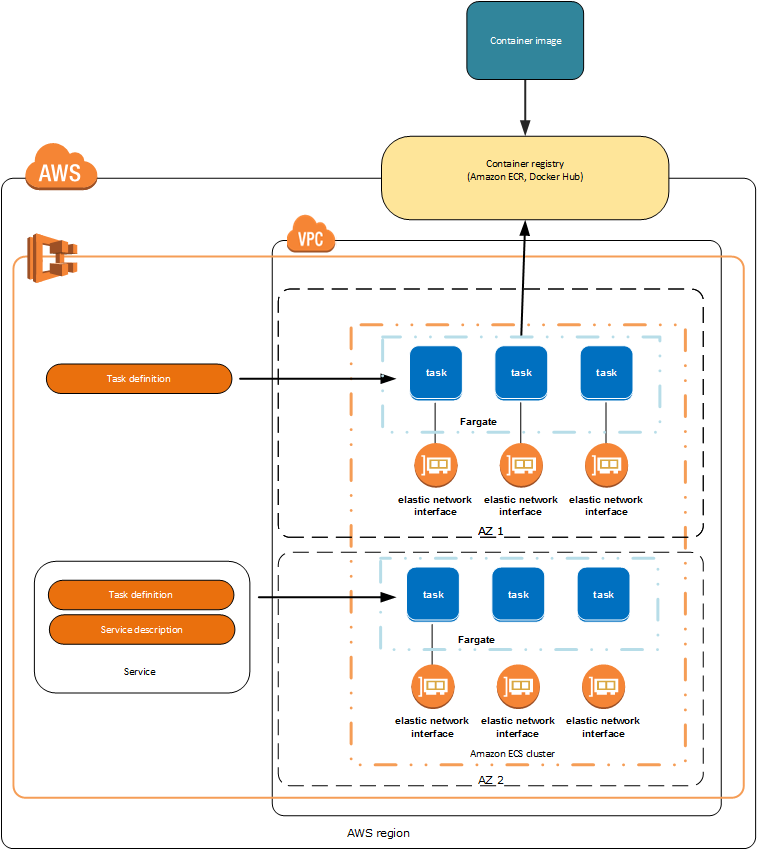

Fargate introduced in Nov 2017

“Fargate is like EC2 but instead of giving you a virtual machine you get a container.”

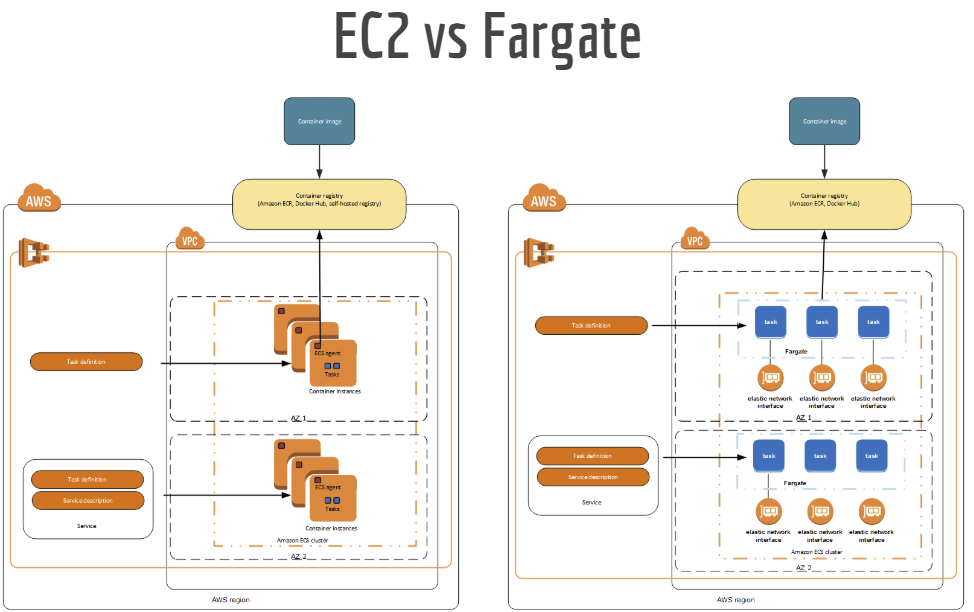

ECS launch types:

EC2 vs. Fargate

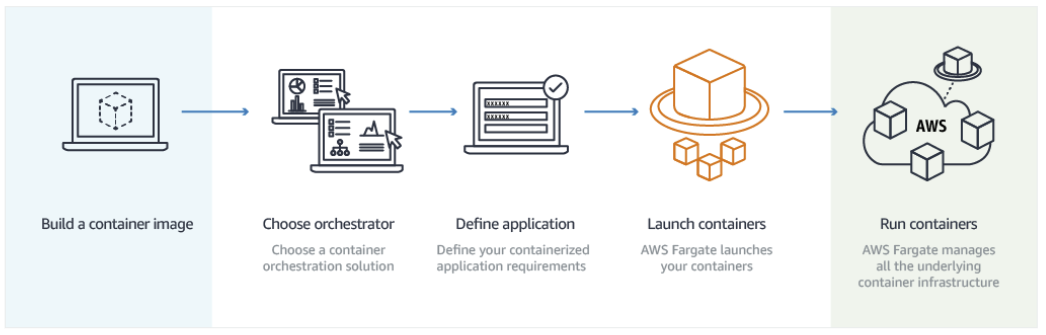

Workflow

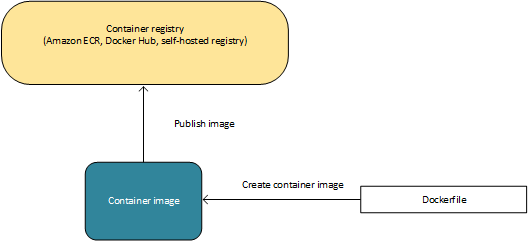

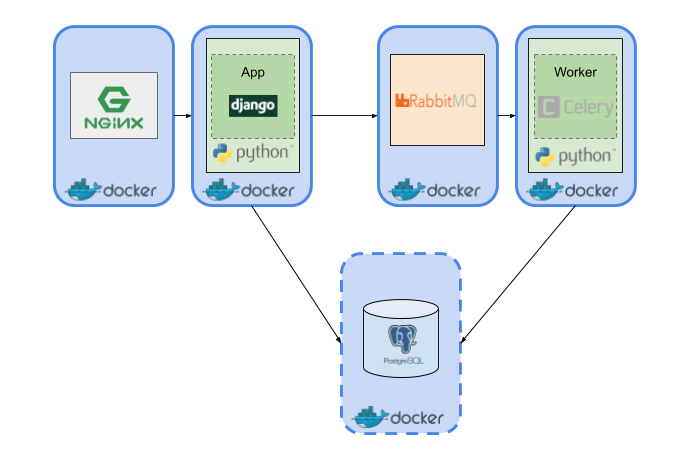

Workflow:

Build containers and upload to registry

Workflow:

Pull containers from registry and run

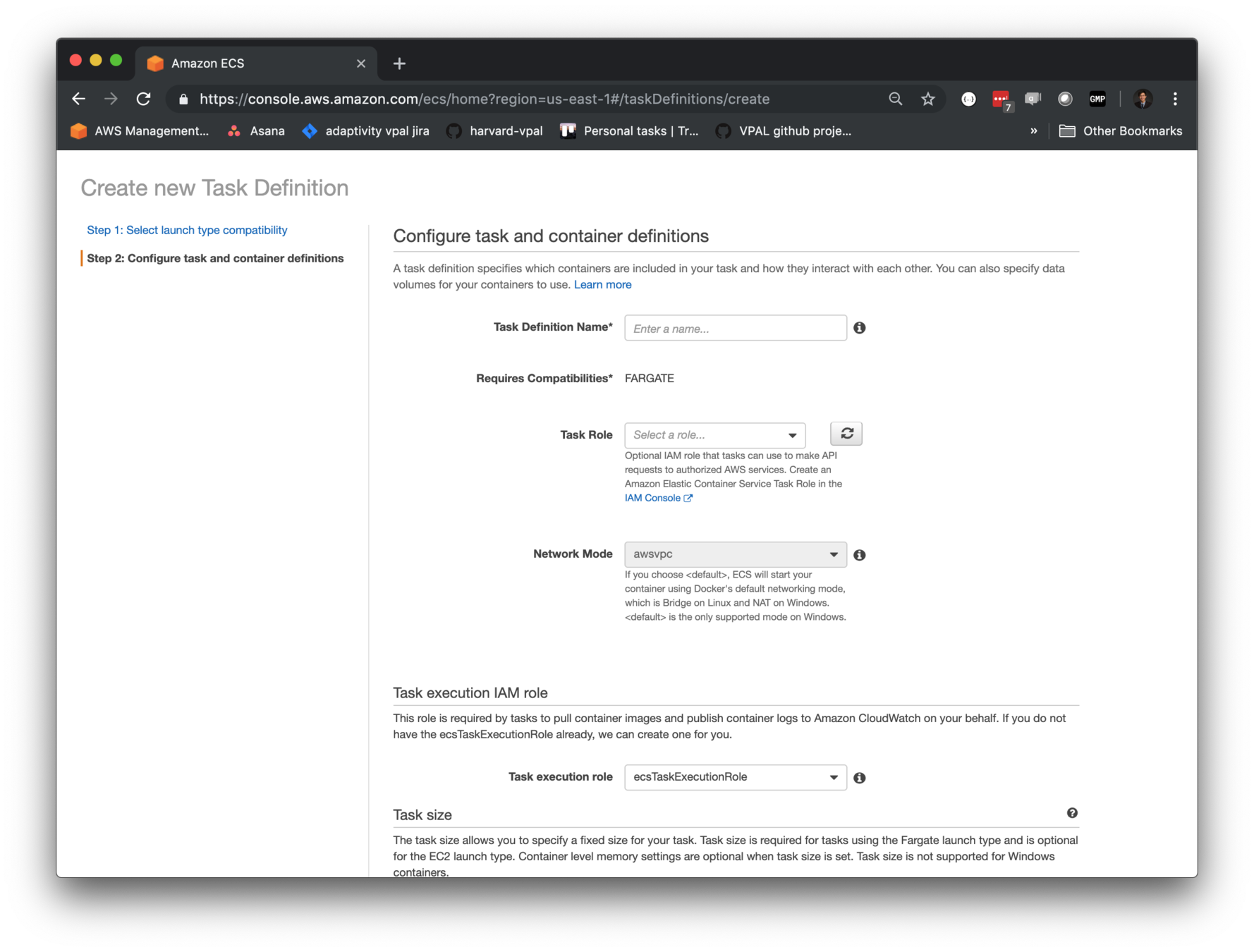

Workflow:

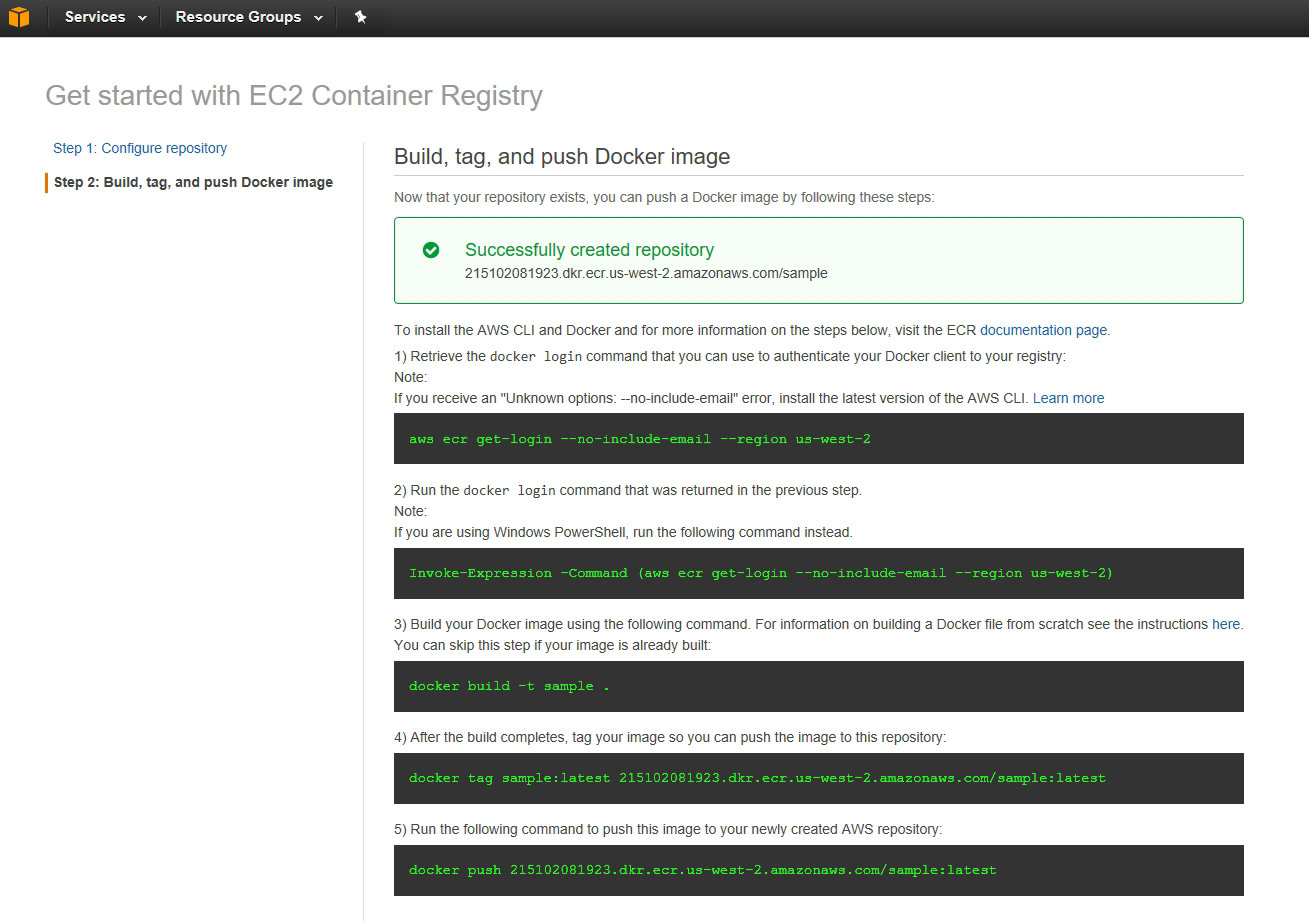

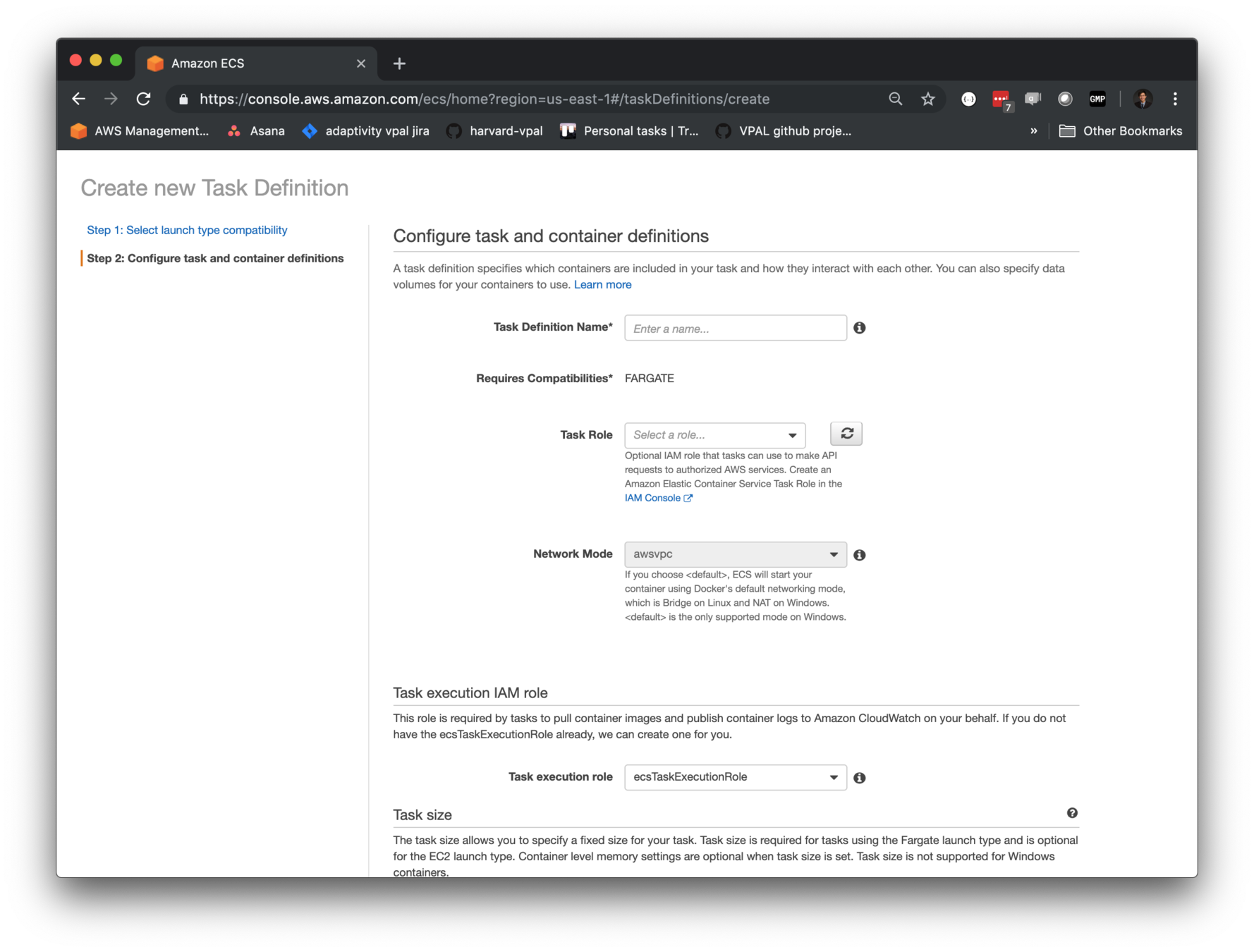

Task definitions

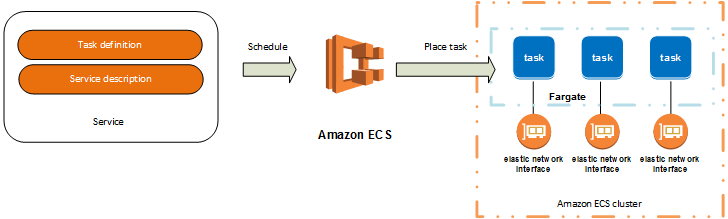

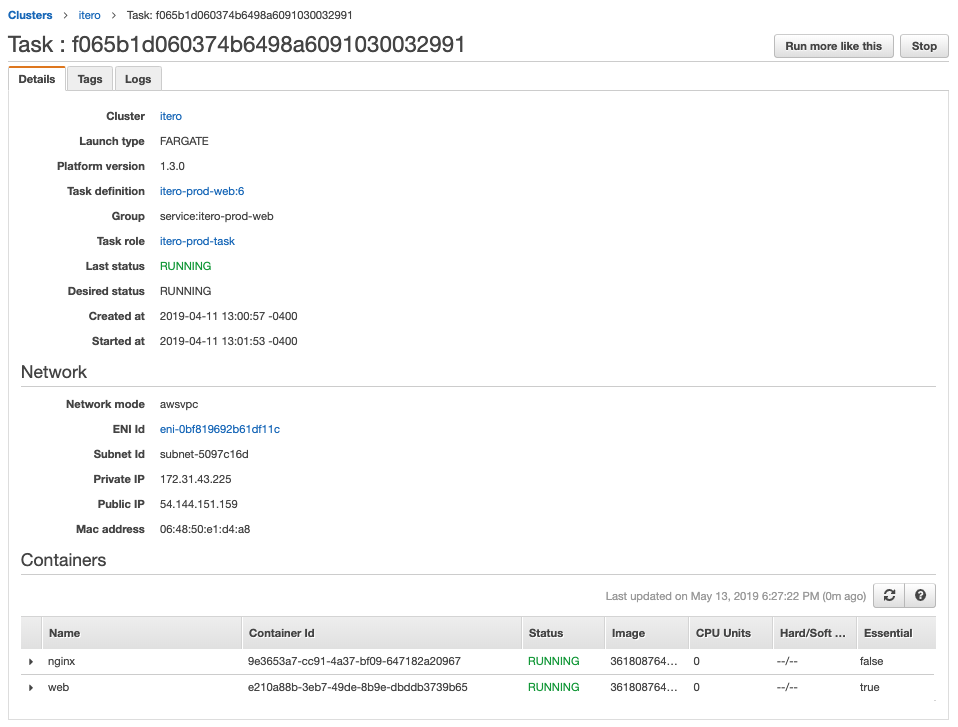

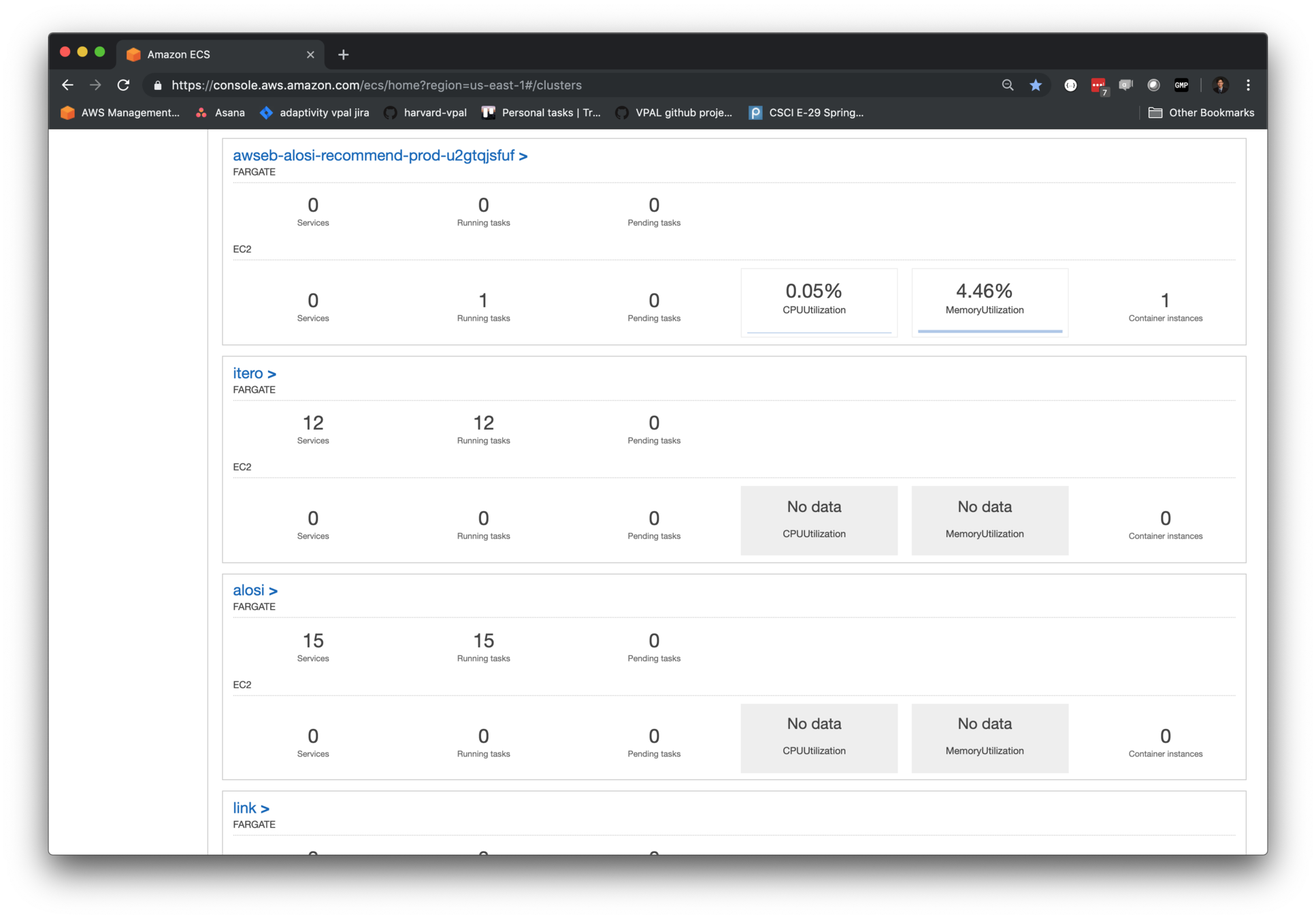

Task: scalable unit of ECS

{

"family": "web",

"taskRoleArn": "arn:...",

"networkMode": "awsvpc",

"containerDefinitions": [

{

"name": "web",

"image": "${web_image}",

"essential": true,

"memory": 256,

"portMappings": [

{

"containerPort": 8000

}

],

"command": [

"/usr/local/bin/gunicorn",

"config.wsgi:application",

"-w=2",

"-b=:8000",

"--log-file=-",

"--access-logfile=-"

],

"environment": [

{"name": "DJANGO_SETTINGS_MODULE", "value": "${DJANGO_SETTINGS_MODULE}"},

{"name": "ENV_LABEL", "value": "${env_label}"},

{"name": "HOST", "value": "${domain_name}"}

],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${log_group_name}",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

}

},

{

"name": "nginx",

"image": "${nginx_image}",

"essential": false,

"memory": 256,

"portMappings": [

{

"containerPort": 80

}

],

...

}

]

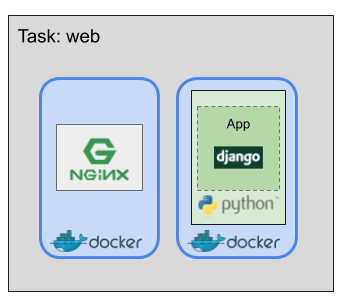

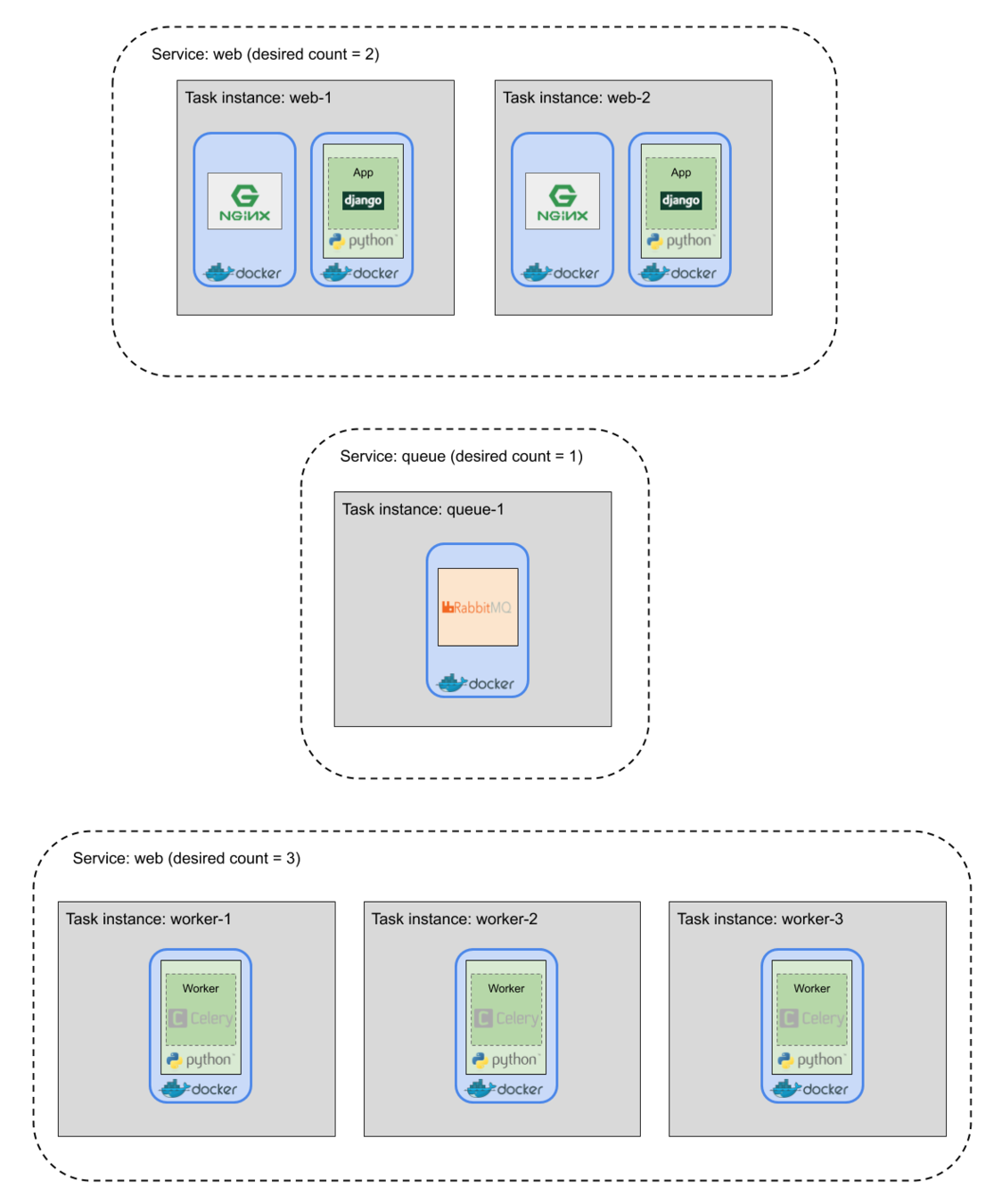

}Tasks can have one or more containers.

Task instance: instantiation of a task

Default settings from task definition such as command, memory allocation, etc. can be overridden when instantiating a task.

Each task instantiation from a task definition has the same set of containers.
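As a rough sketch of what overriding looks like in practice, the snippet below builds the keyword arguments for the ECS `RunTask` call (exposed in boto3 as `run_task` on the ECS client). The cluster name, subnet and security group IDs, and the override command are hypothetical placeholders, not values from this deployment.

```python
def build_run_task_request(cluster, task_definition, container_name, command,
                           subnets, security_groups, memory=None):
    """Build keyword arguments for boto3's ECS client run_task().

    The task definition supplies the defaults; anything listed under
    "containerOverrides" replaces the default for this one task instance.
    """
    container_override = {"name": container_name, "command": list(command)}
    if memory is not None:
        container_override["memory"] = memory  # hard limit, in MiB
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "count": 1,
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                "assignPublicIp": "ENABLED",
            }
        },
        "overrides": {"containerOverrides": [container_override]},
    }


# Hypothetical values for illustration only.
request = build_run_task_request(
    cluster="example-cluster",
    task_definition="web",
    container_name="web",
    command=["python", "manage.py", "migrate"],
    subnets=["subnet-00000000"],
    security_groups=["sg-00000000"],
    memory=512,
)

# With AWS credentials configured, the request could be submitted with:
#   import boto3
#   boto3.client("ecs").run_task(**request)
print(request["overrides"])
```

The container set itself cannot be changed this way; only per-container settings such as the command, environment, and resource limits are overridden.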

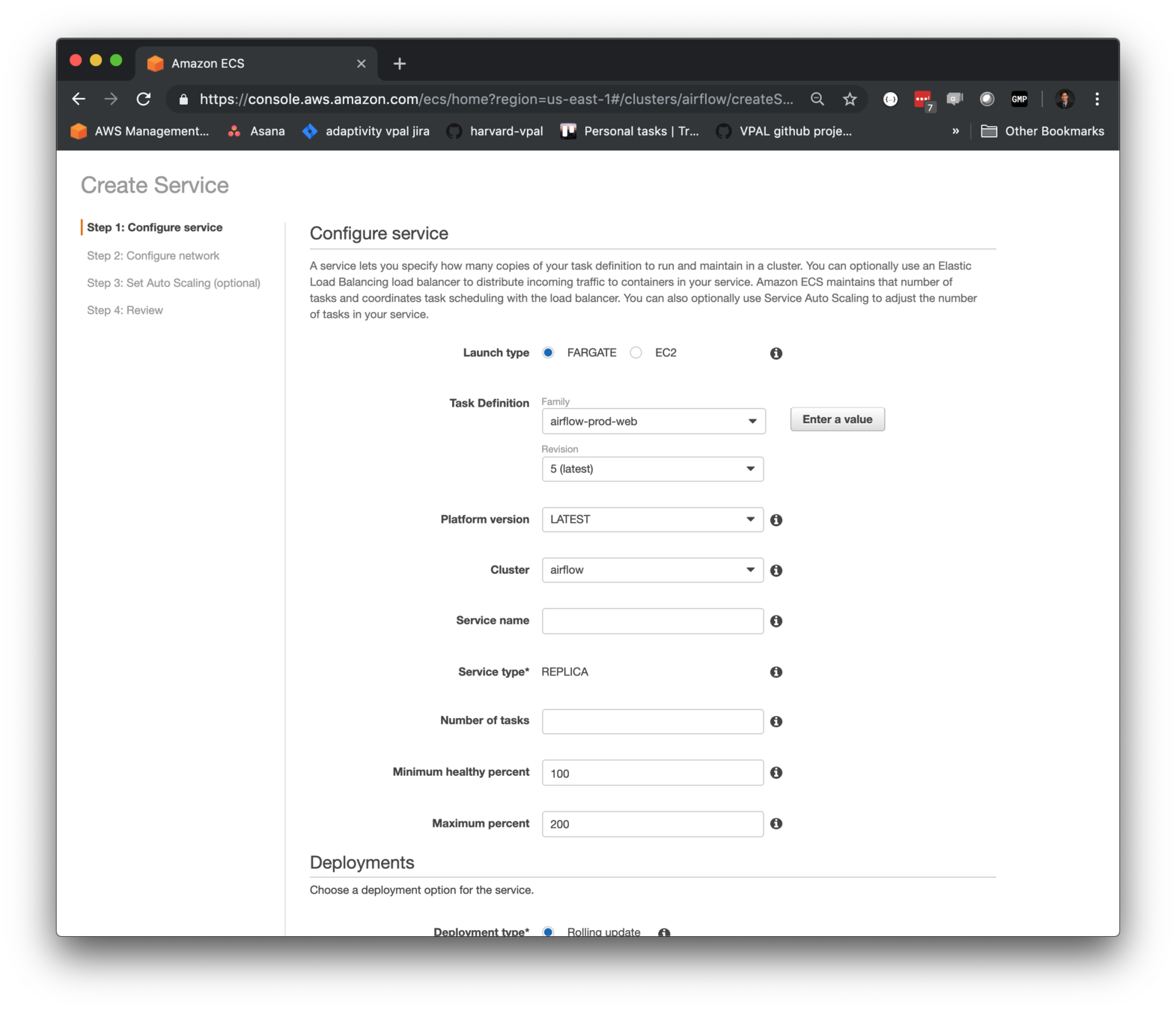

Service: an "auto scaling group" for tasks

Services are used for tasks that run indefinitely

(e.g. web service)

Cluster: a logical grouping of tasks/services

ECS abstractions

| ECS term | description / analog |
|---|---|
| container definition | docker-compose |
| task definition | docker-compose + AWS config |
| task instance | instantiation of a task definition |
| service | auto-scaling group for task instances |
| cluster | grouping of tasks/services |

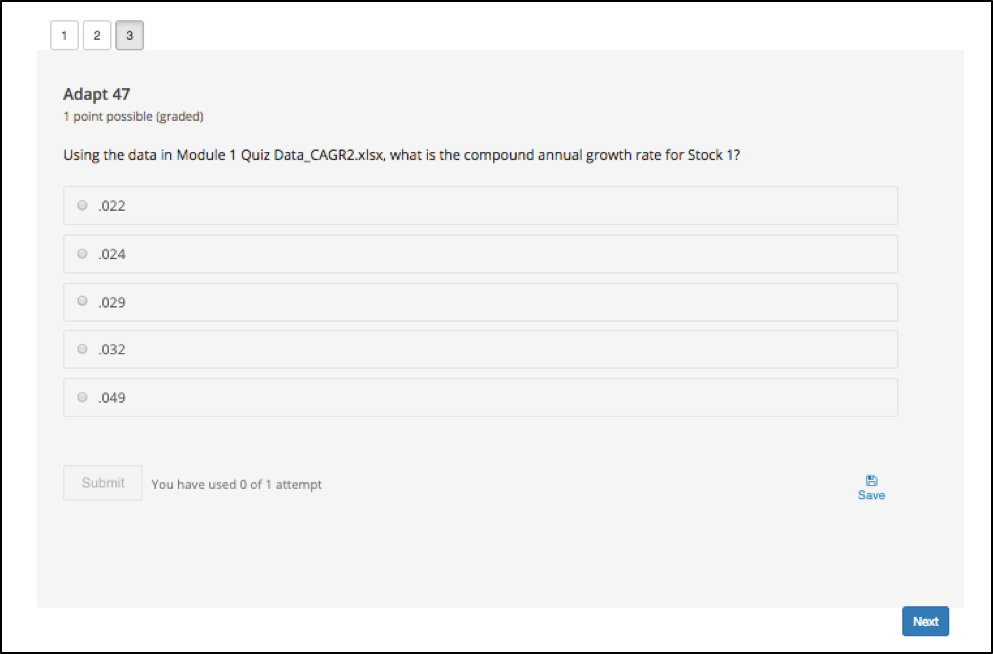

Example application 1

web microservice

(adaptive learning recommendation engine)

Workflow

- build with docker-compose

- push image to ECR

- define ECS tasks and services

- setup additional infrastructure

Build images

e.g. `docker-compose build`

    # docker-compose.yml
    version: '2'

    services:
      bridge:
        container_name: BFA
        build:
          context: .
          dockerfile: Dockerfile
        image: bridge_adaptivity
        command: bash -c "./prod_run.sh"
        volumes:
          - .:/bridge_adaptivity
          - static:/www/static
        ports:
          - "8000:8000"
        links:
          - postgres

      # Celery worker
      worker:
        image: bridge_adaptivity
        environment:
          DJANGO_SETTINGS_MODULE: config.settings.prod
        command: bash -c "sleep 5 && celery -A config worker -l info"
        volumes:
          - .:/bridge_adaptivity
        links:
          - rabbit
          - postgres
        depends_on:
          - bridge

      rabbit:
        container_name: rabbitmq
        image: rabbitmq
        env_file: ./envs/rabbit.env

      nginx:
        container_name: nginx_BFA
        build: ./nginx
        ports:
          - "80:80"
          - "443:443"
        volumes_from:
          - bridge
        volumes:
          - /etc/nginx/ssl/:/etc/nginx/ssl/
        links:
          - bridge

      postgres:
        container_name: postgresql_BFA
        image: postgres
        env_file: ./envs/pg.env
        volumes:
          - pgs:/var/lib/postgresql/data/
        ports:
          - "5432:5432"

Push images

e.g. `docker-compose push`

Task definition: web

{

"family": "web",

"taskRoleArn": "arn:...",

"networkMode": "awsvpc",

"containerDefinitions": [

{

"name": "web",

"image": "123456789.dkr.ecr.us-east-1.amazonaws.com/namespace/app",

"essential": true,

"memory": 256,

"portMappings": [

{

"containerPort": 8000

}

],

"command": [

"/usr/local/bin/gunicorn",

"config.wsgi:application",

"-w=2",

"-b=:8000",

"--log-file=-",

"--access-logfile=-"

],

"environment": [

{"name": "DJANGO_SETTINGS_MODULE", "value": "${DJANGO_SETTINGS_MODULE}"},

{"name": "ENV_LABEL", "value": "${env_label}"},

{"name": "HOST", "value": "${domain_name}"}

],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${log_group_name}",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

}

},

{

"name": "nginx",

"image": "${nginx_image}",

"essential": false,

"memory": 256,

"portMappings": [

{

"containerPort": 80

}

],

...

}

]

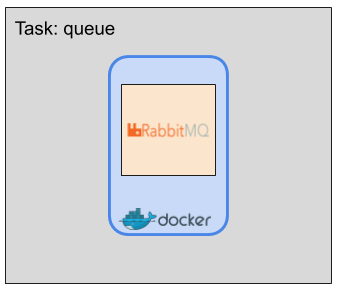

}Task definition: queue

    {
      "family": "queue",
      "taskRoleArn": "arn:...",
      "networkMode": "awsvpc",
      "containerDefinitions": [
        {
          "name": "rabbit",
          "image": "rabbitmq",
          "essential": true,
          "memory": 256,
          "environment": [
            {"name": "RABBITMQ_DEFAULT_PASS", "value": "${celery_password}"},
            {"name": "RABBITMQ_DEFAULT_USER", "value": "${celery_user}"}
          ],
          "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
              "awslogs-group": "${log_group_name}",
              "awslogs-region": "us-east-1",
              "awslogs-stream-prefix": "ecs"
            }
          }
        }
      ]
    }

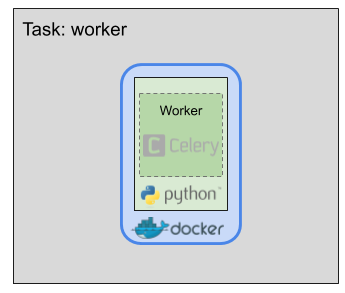

Task definition: worker

    {
      "family": "worker",
      "taskRoleArn": "arn:...",
      "networkMode": "awsvpc",
      "containerDefinitions": [
        {
          "name": "worker",
          "image": "123456789.dkr.ecr.us-east-1.amazonaws.com/namespace/app",
          "essential": true,
          "memory": 256,
          "command": ["celery", "-A", "config", "worker", "-l", "info"],
          "environment": [
            {"name": "DJANGO_SETTINGS_MODULE", "value": "${DJANGO_SETTINGS_MODULE}"}
          ],
          "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
              "awslogs-group": "${log_group_name}",
              "awslogs-region": "us-east-1",
              "awslogs-stream-prefix": "ecs"
            }
          }
        }
      ]
    }

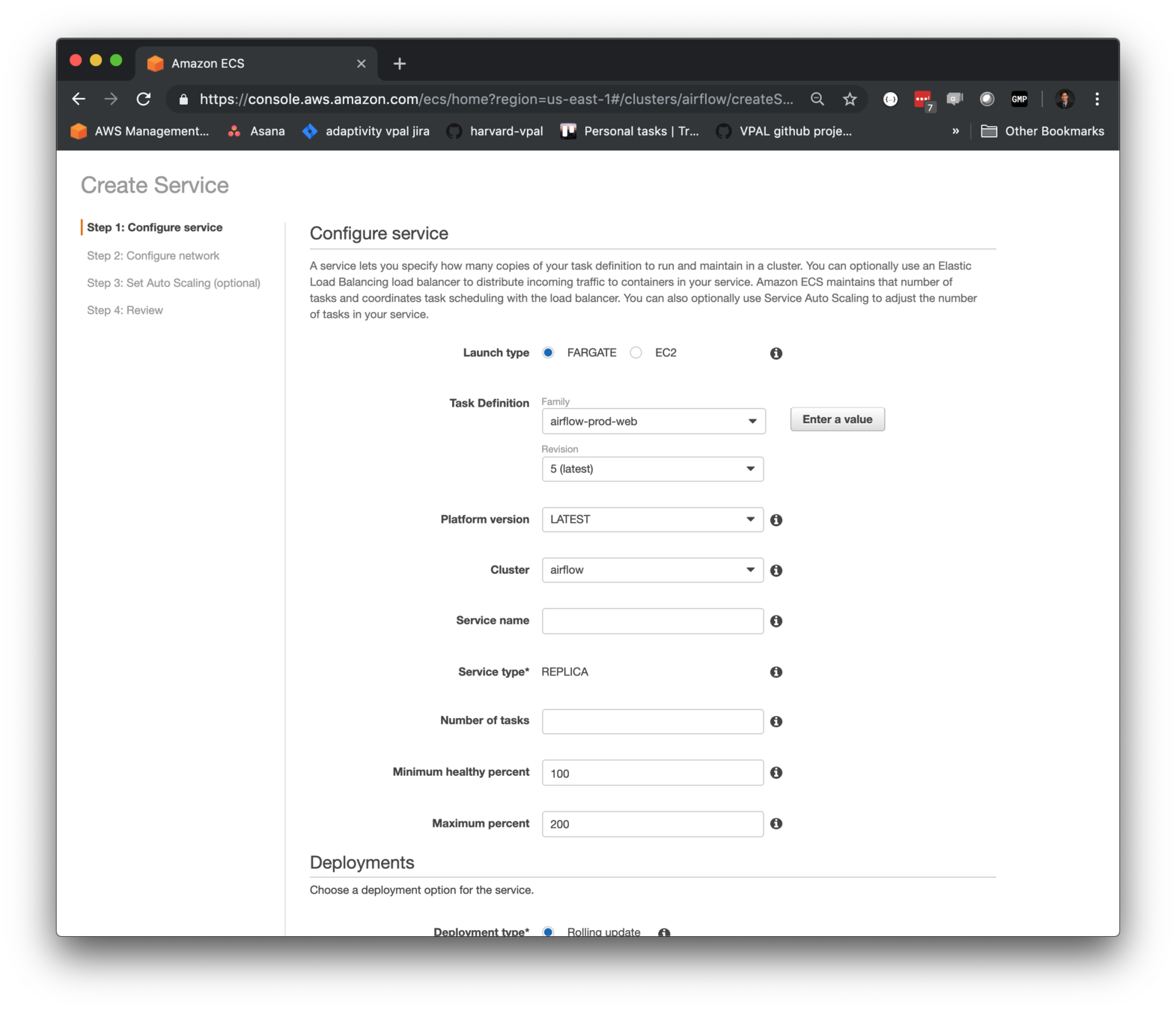

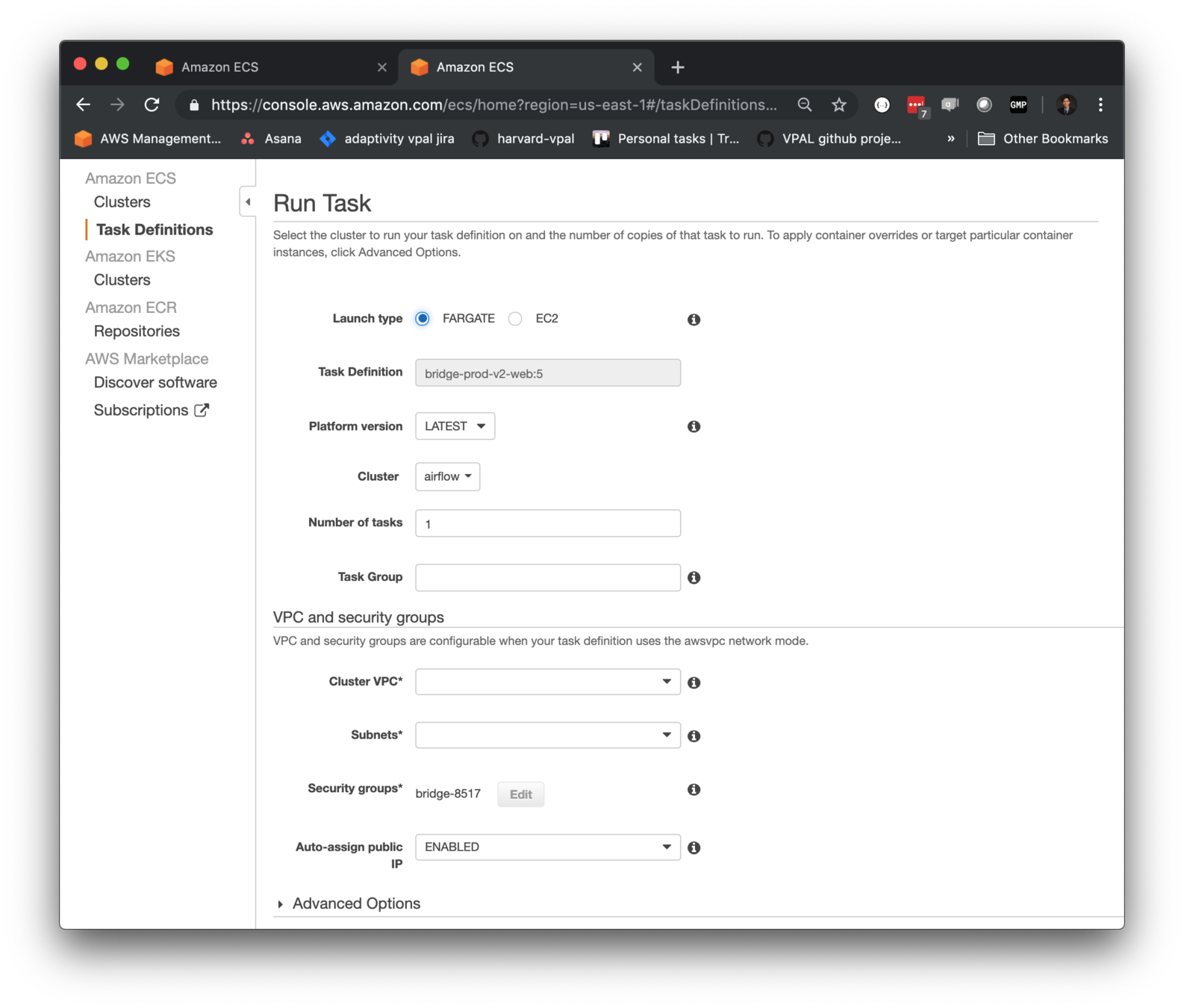

Define services

Additional infrastructure

- database, associated security group
- task IAM role
- ECS service discovery
- application load balancer
- Route 53 zone

Example application #2:

Long-running (~ few days) web crawler that triggers on a scheduled basis, or in response to new records

Run scripts with finite execution time as tasks, instead of services

e.g. data processing jobs, api scraper, web crawler

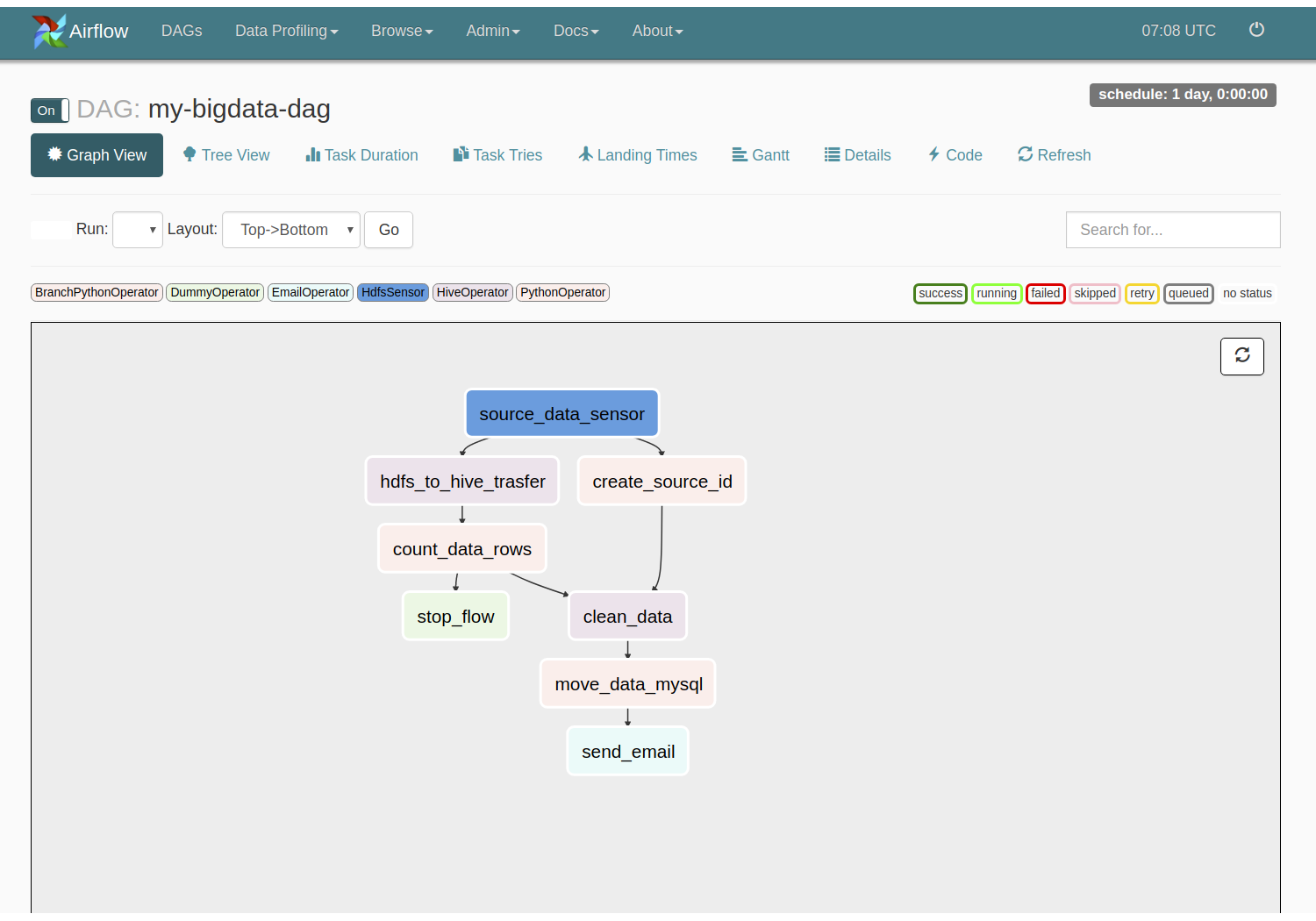

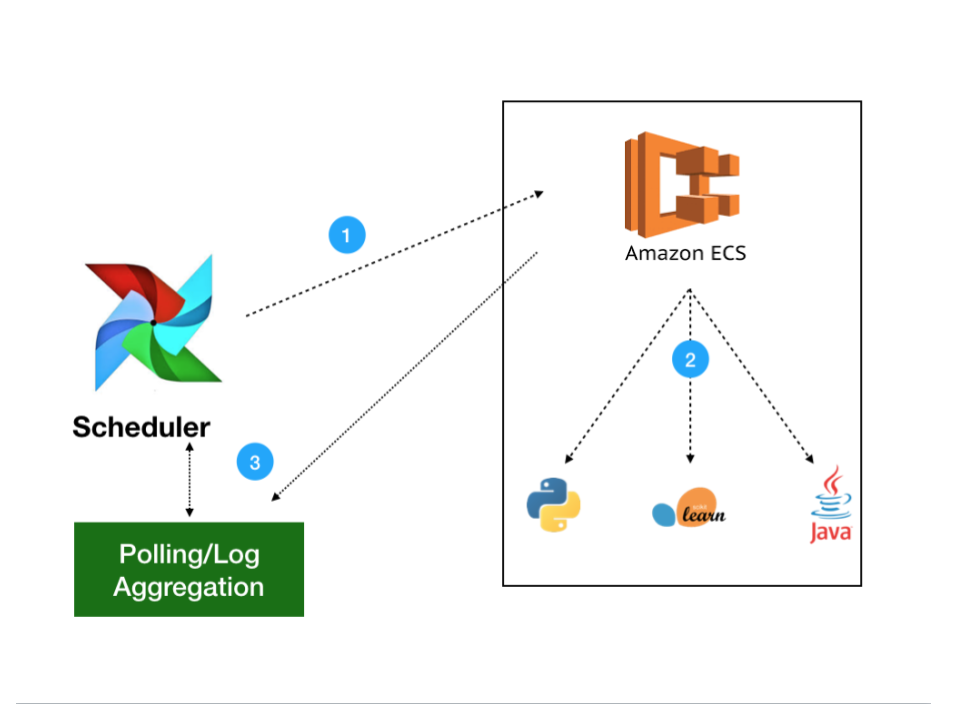

Scheduling jobs with Airflow

Task dependencies expressed as a DAG (Directed Acyclic Graph)
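To make the DAG idea concrete, here is a minimal sketch using Python's standard-library `graphlib` (Python 3.9+), not Airflow itself. The job names are hypothetical; the point is only that a scheduler derives a valid execution order from declared dependencies and rejects cycles.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a job; its value is the set of jobs that must finish first.
# Job names are hypothetical examples.
dependencies = {
    "scrape_api": set(),
    "crawl_web": set(),
    "transform": {"scrape_api", "crawl_web"},
    "load": {"transform"},
}


def execution_order(deps):
    """Return one valid run order, or raise ValueError if deps contain a cycle."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        raise ValueError(f"dependencies are not acyclic: {exc.args[1]}") from exc


order = execution_order(dependencies)
print(order)
```

Jobs with no dependency between them (here `scrape_api` and `crawl_web`) may come out in either order, which is what allows a scheduler to run them in parallel.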

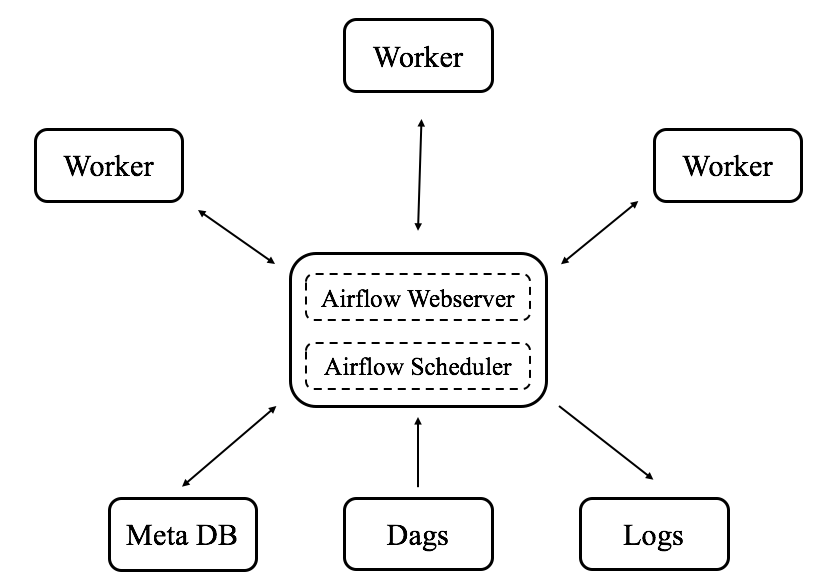

Typical Airflow cluster setup

Scheduling ECS tasks with Airflow

DevOps considerations and streamlining deployment tasks

DevOps considerations

- Secrets management

- Access control

- Application configuration

- Versioning

- Build/Deploy Automation

- Cost

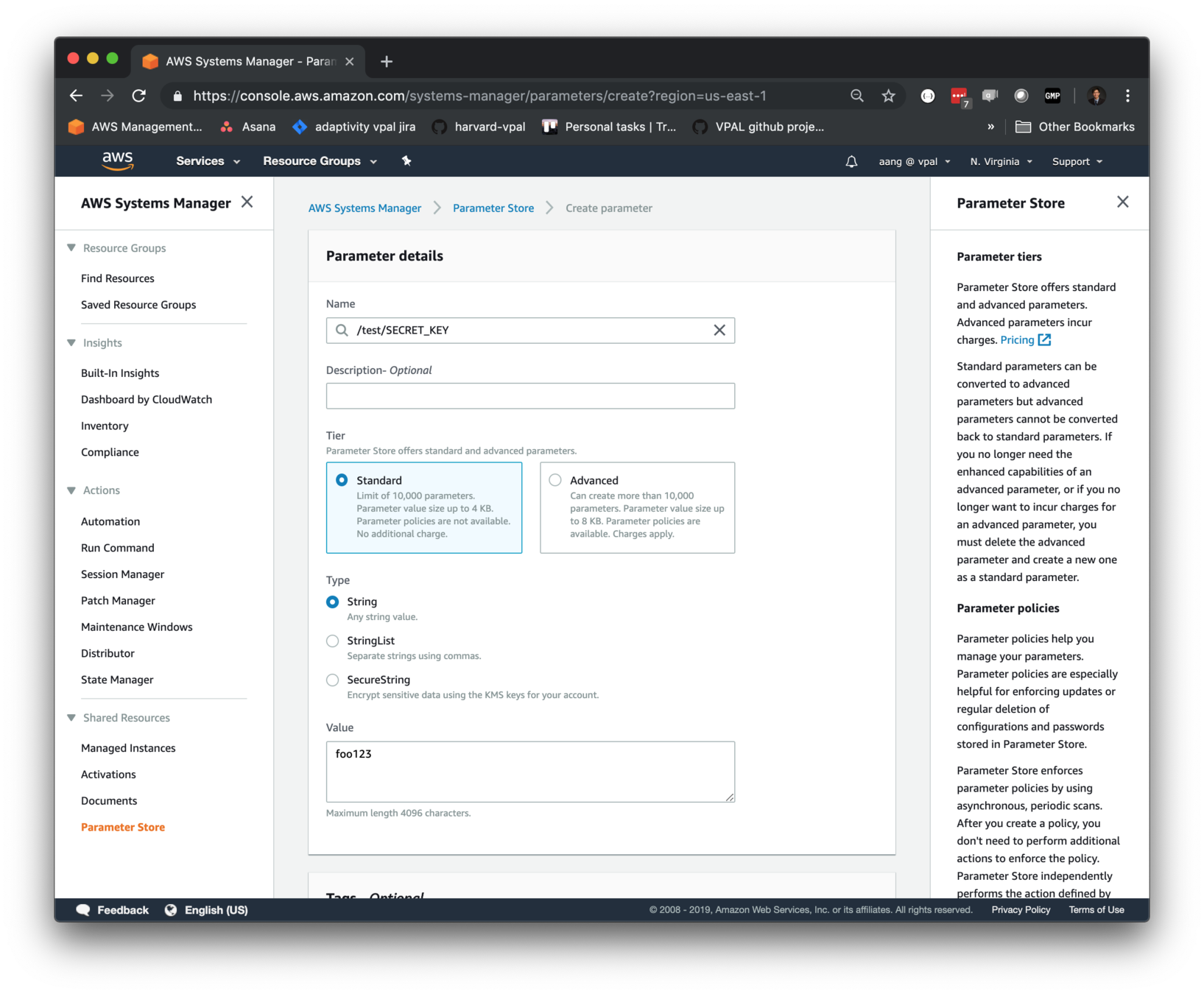

Secrets management:

Reference values from SSM Parameter Store in the task definition; ECS injects them into the container as environment variables (available since Nov 2018)

    {
      "family": "web",
      "taskRoleArn": "arn:...",
      "networkMode": "awsvpc",
      "containerDefinitions": [
        {
          "name": "web",
          "image": "${web_image}",
          ...
          "environment": [
            {"name": "DJANGO_SETTINGS_MODULE", "value": "${DJANGO_SETTINGS_MODULE}"},
            {"name": "HOST", "value": "${domain_name}"}
          ],
          "secrets": [
            {
              "name": "SECRET_KEY",
              "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/app/${env_label}/SECRET_KEY"
            },
            {
              "name": "DATABASE_CONNECTION",
              "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/app/${env_label}/DATABASE_CONNECTION"
            }
          ]
        },
        ...
      ]
    }

Secrets management:

Populate values in SSM Parameter Store; access to them is controlled via IAM
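On the application side, values injected this way show up as ordinary environment variables. A minimal sketch of reading them at startup and failing fast when one is missing follows; the helper name `load_required_settings` is hypothetical, and the variable names are the ones used in the task definition above.

```python
import os


def load_required_settings(names, environ=None):
    """Read injected secrets from environment variables.

    Raises RuntimeError listing every missing name, so a misconfigured
    task fails at startup instead of at first use.
    """
    environ = os.environ if environ is None else environ
    missing = [name for name in names if name not in environ]
    if missing:
        raise RuntimeError(
            "Missing required environment variables: " + ", ".join(missing)
        )
    return {name: environ[name] for name in names}


# Simulated container environment for illustration (values are fake).
fake_environ = {
    "SECRET_KEY": "not-a-real-key",
    "DATABASE_CONNECTION": "postgres://user:pass@db.example.invalid:5432/app",
}
settings = load_required_settings(["SECRET_KEY", "DATABASE_CONNECTION"], fake_environ)
print(sorted(settings))
```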

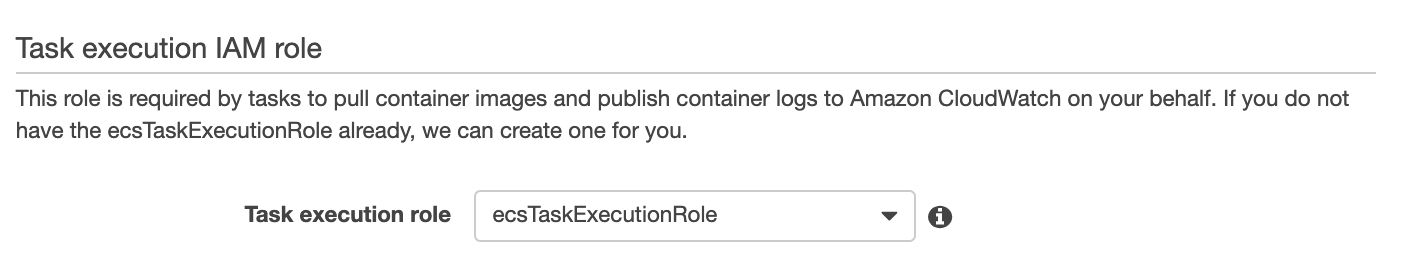

Access control:

Control access to AWS resources on startup with the Task Execution Role

Useful for controlling access to secrets, ECR repos
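For instance, the execution role needs permission to read the referenced parameters before the container starts. The sketch below generates an IAM policy document granting `ssm:GetParameters` on one parameter path; the function name is hypothetical, the account ID and path are the placeholders used elsewhere in these slides, and a real role would likely also need ECR and CloudWatch Logs permissions.

```python
import json


def ssm_read_policy(region, account_id, parameter_prefix):
    """Return an IAM policy document allowing reads under one SSM parameter path."""
    prefix = parameter_prefix.strip("/")
    resource = f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ssm:GetParameters"],
                "Resource": [resource],
            }
        ],
    }


# Placeholder account ID and path, matching the examples in this deck.
policy = ssm_read_policy("us-east-1", "123456789", "app/dev")
print(json.dumps(policy, indent=2))
```

Scoping the resource to a per-environment path means a dev task cannot read prod secrets, even though both use the same task definition template.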

Security groups:

Define security group for task to be associated with

Useful for giving access to IP-restricted databases

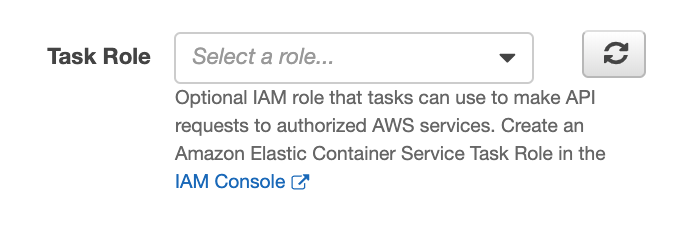

Access control:

Control access to AWS resources at task runtime with Task Role

Useful for controlling access to data sources / destinations

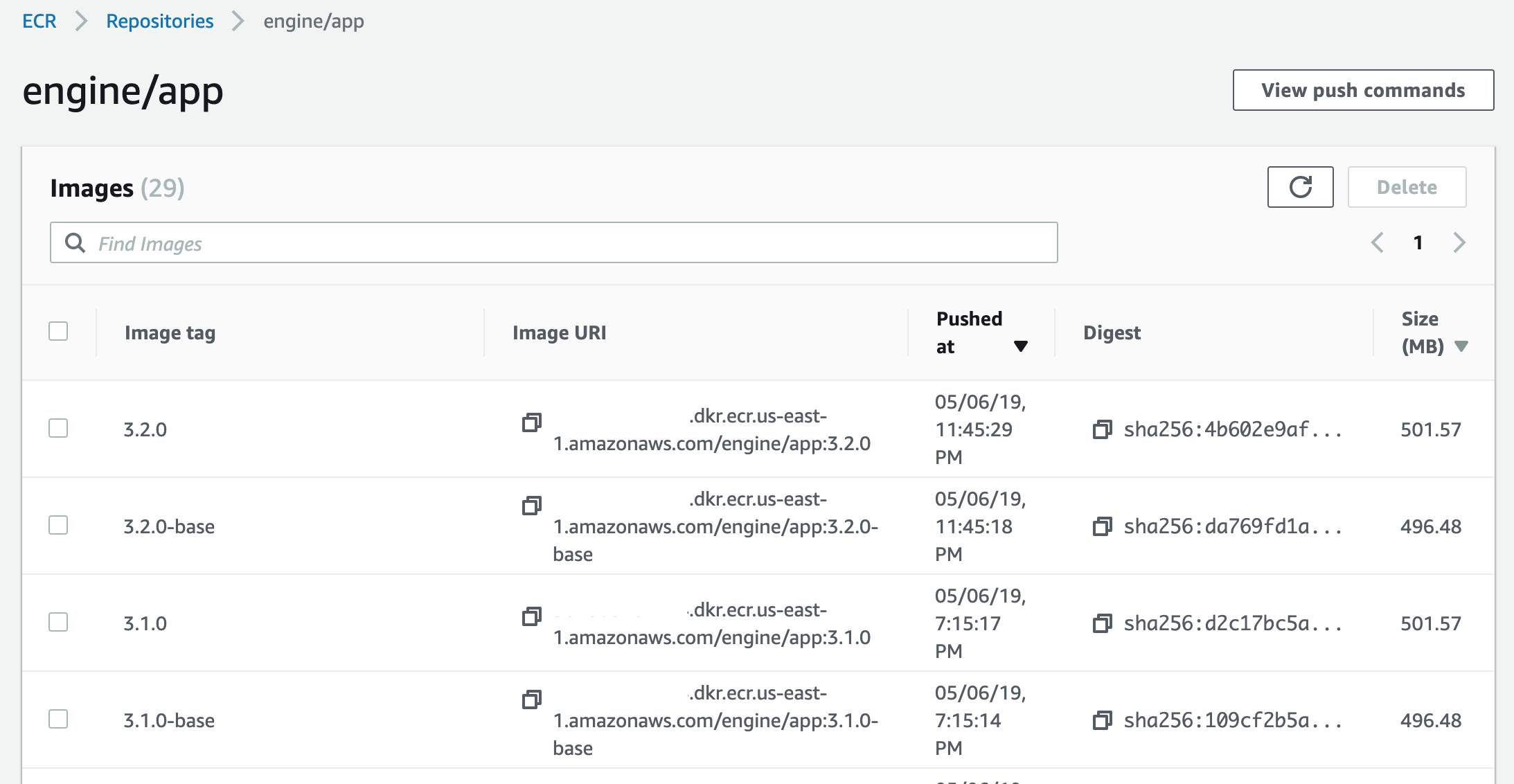

Versioning:

Tag ECR images with version at build/upload
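A small sketch of how a versioned image reference can be assembled and turned into tag/push commands. The helper names are hypothetical; the account ID, region, and repository are the placeholders used in the task definitions in this deck.

```python
def ecr_image_uri(account_id, region, repository, tag):
    """Build a fully qualified, versioned ECR image reference."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def tag_and_push_commands(local_image, target_uri):
    """Return the docker CLI invocations that tag a local image and push it."""
    return [
        ["docker", "tag", local_image, target_uri],
        ["docker", "push", target_uri],
    ]


uri = ecr_image_uri("123456789", "us-east-1", "namespace/app", "3.1.2")
for command in tag_and_push_commands("bridge_adaptivity", uri):
    print(" ".join(command))
```

Pinning an explicit version tag, rather than relying on an implicit `latest`, makes it clear which build a given task definition revision runs.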

Versioning:

Reference image tags in task definition

    {
      "family": "app",
      "networkMode": "awsvpc",
      "containerDefinitions": [
        {
          "name": "web",
          "image": "123456789.dkr.ecr.us-east-1.amazonaws.com/namespace/app:3.1.2",
          ...
        }
      ]
    }

Build / deploy automation

- Build
  - Build versioned docker image
  - Add additional config if applicable
- Deploy
  - Push version to image repo
  - Create infrastructure
    - load balancer, security groups and policies, ECS task definitions and services
  - Apply infrastructure changes
    - Deploy app (image) version
    - Scale up/down
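The steps above can be sketched as an ordered list of commands. This is an illustrative outline, not how the ecs-utils CLI is actually implemented: the `docker-compose` and `terraform` invocations are the ones that appear in this deck, while passing the tag as an `app_tag` Terraform variable and naming the var-file after the environment are assumptions.

```python
import os
import subprocess


def deploy_steps(tag, env):
    """Return (command, extra_env) pairs for a build, push, and apply cycle."""
    compose_env = {"APP_TAG": tag}  # read by the docker-compose file
    return [
        (["docker-compose", "build"], compose_env),
        (["docker-compose", "push"], compose_env),
        (["terraform", "workspace", "select", env], {}),
        (["terraform", "apply", f"-var-file={env}.tfvars", "-var", f"app_tag={tag}"], {}),
    ]


def run_steps(steps):
    """Execute each step in order, stopping at the first failure."""
    for command, extra_env in steps:
        subprocess.run(command, env={**os.environ, **extra_env}, check=True)


steps = deploy_steps(tag="1.0.0", env="dev")
for command, _ in steps:
    print(" ".join(command))  # dry run: print instead of calling run_steps(steps)
```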

docker-compose file for deploy builds

Alternate docker-compose file for versioned/custom builds: uses the APP_TAG environment variable and builds from the GitHub source

    version: '3'

    services:
      # base app image
      app_base:
        image: ${APP_IMAGE}:${APP_TAG}-base
        build:
          dockerfile: Dockerfile_opt
          # context: app_base/src/bridge_adaptivity # if building from local version; ensure volume mount is configured in other docker-compose
          # build from github, using reference APP_TAG and bridge_adaptivity subdirectory
          context: https://github.com/harvard-vpal/bridge-adaptivity.git#${APP_TAG}:bridge_adaptivity

      # copy custom settings into base app image (see Dockerfile)
      app:
        build:
          context: app
          args:
            - APP_IMAGE=${APP_IMAGE}:${APP_TAG}-base
        image: ${APP_IMAGE}:${APP_TAG}
        environment:
          - DJANGO_SETTINGS_MODULE=config.settings.custom

      # custom nginx image build that collects static assets from app image and copies to nginx image
      nginx:
        build:
          context: nginx
          args:
            - APP_IMAGE=${APP_IMAGE}:${APP_TAG}
        image: ${NGINX_IMAGE}:${APP_TAG}

Extending an app image

Adding custom settings

    # Dockerfile that derives from base app image and adds some custom settings

    # Base app image:tag to use
    ARG APP_IMAGE
    FROM ${APP_IMAGE} as app

    WORKDIR /bridge_adaptivity

    # copy custom settings into desired location
    COPY settings/custom.py config/settings/custom.py
    COPY settings/collectstatic.py config/settings/collectstatic.py

    # generate staticfiles.json even if app image is not serving static images directly
    RUN python manage.py collectstatic -c --noinput --settings=config.settings.collectstatic

Custom image build

ECS-specific settings - a django example

    # django custom settings (custom.py)
    import requests

    from config.settings.base import *  # noqa: F401,F403 (provides ALLOWED_HOSTS)


    def get_ecs_task_ips():
        """
        Retrieve the internal ip address(es) for task, if running with AWS ECS and awsvpc networking mode
        """
        ip_addresses = []
        try:
            # ECS task metadata endpoint; only reachable from inside a running task
            r = requests.get("http://169.254.170.2/v2/metadata", timeout=0.01)
        except requests.exceptions.RequestException:
            return []
        if r.ok:
            task_metadata = r.json()
            for container in task_metadata['Containers']:
                for network in container['Networks']:
                    if network['NetworkMode'] == 'awsvpc':
                        ip_addresses.extend(network['IPv4Addresses'])
        return list(set(ip_addresses))


    ecs_task_ips = get_ecs_task_ips()
    if ecs_task_ips:
        # ALLOWED_HOSTS comes from config.settings.base
        ALLOWED_HOSTS.extend(ecs_task_ips)

Managing infrastructure state with Terraform

"Define infrastructure as code"

    resource "aws_ecs_task_definition" "main" {
      family = "${var.name}"
      container_definitions = "${var.container_definitions}"
      execution_role_arn = "${var.execution_role_arn}" # required for awslogs
      task_role_arn = "${var.role_arn}"
      network_mode = "awsvpc"
      memory = "${var.memory}"
      cpu = "${var.cpu}"
      requires_compatibilities = ["FARGATE"]
    }

Manage multiple environments (dev/stage/prod) with respective config values

    terraform workspace select dev
    terraform apply -var-file="dev.tfvars"

ECS resources in Terraform

    resource "aws_ecs_task_definition" "main" {
      family = "${var.name}"
      container_definitions = "${var.container_definitions}"
      execution_role_arn = "${var.execution_role_arn}" # required for awslogs
      task_role_arn = "${var.role_arn}"
      network_mode = "awsvpc"
      memory = "${var.memory}"
      cpu = "${var.cpu}"
      requires_compatibilities = ["FARGATE"]
    }

    resource "aws_ecs_service" "main" {
      name = "${var.name}"
      cluster = "${var.cluster_name}"
      task_definition = "${aws_ecs_task_definition.main.arn}"
      desired_count = "${var.count}"
      launch_type = "FARGATE"

      load_balancer {
        target_group_arn = "${var.target_group_arn}"
        container_name = "${var.load_balancer_container_name}"
        container_port = "${var.load_balancer_container_port}"
      }

      network_configuration {
        subnets = ["${data.aws_subnet_ids.main.ids}"]
        security_groups = ["${var.security_group_id}"]
        assign_public_ip = true
      }
    }

Other AWS resources in Terraform

route53 record, load balancer, target groups, ...

    resource "aws_alb" "main" {
      name = "${var.project}-${var.env_label}"
      subnets = ["${data.aws_subnet_ids.main.ids}"]
      security_groups = ["${var.security_group_id}"]
    }

    resource "aws_alb_listener" "main" {
      load_balancer_arn = "${aws_alb.main.id}"
      port = "443"
      protocol = "HTTPS"
      ssl_policy = "ELBSecurityPolicy-2016-08"
      certificate_arn = "${var.ssl_certificate_arn}"

      default_action {
        type = "fixed-response"

        fixed_response {
          content_type = "text/plain"
          message_body = "Service Temporarily Unavailable (ALB Default Action)"
          status_code = "503"
        }
      }
    }

    resource "aws_route53_record" "main" {
      zone_id = "${data.aws_route53_zone.main.zone_id}"
      name = "${var.domain_name}"
      type = "A"

      alias {
        name = "${aws_alb.main.dns_name}"
        zone_id = "${aws_alb.main.zone_id}"
        evaluate_target_health = false
      }
    }

    ## Assumes only one service is being load balanced; it may make sense to move these to service modules if that is not the case
    resource "aws_alb_target_group" "main" {
      name_prefix = "${var.short_project_label}" # name_prefix is used instead of name because of the create_before_destroy option
      port = "${var.container_port}"
      protocol = "HTTP"
      target_type = "ip" # required for use of awsvpc task networking mode
      vpc_id = "${var.vpc_id}"

      health_check {
        path = "${var.health_check_path}"
      }

      # Resolves: (Error deleting Target Group: Target group is currently in use by a listener or a rule)
      lifecycle {
        create_before_destroy = true
      }

      # Resolves: The target group does not have an associated load balancer
      depends_on = ["aws_alb.main"]
    }

    resource "aws_alb_listener_rule" "main" {
      listener_arn = "${aws_alb_listener.main.arn}"

      action {
        target_group_arn = "${aws_alb_target_group.main.id}"
        type = "forward"
      }

      condition {
        field = "path-pattern"
        values = ["*"]
      }
    }

Terraform modules in ecs-app-utils repo

    # creates load balancer, security group, route 53 records, and target groups
    module "network" {
      source = "git::https://github.com/harvard-vpal/ecs-app-utils.git//terraform/network/public?ref=2.3.0"
      vpc_id = "${var.vpc_id}"
      ssl_certificate_arn = "${var.ssl_certificate_arn}"
      hosted_zone = "${var.hosted_zone}"
      domain_name = "${var.domain_name}"
      env_label = "${var.env_label}"
      project = "${var.project}"
      short_project_label = "${var.short_project_label}"
    }

Available terraform modules in ecs-app-utils

- execution role (IAM role)
- network
  - base
  - public (base + open inbound security group)
- service
  - load balanced (e.g. web)
  - discoverable (e.g. queue)
  - generic (e.g. worker)

Container definitions are application-specific

Use of templating to pass in environment-specific variables or version tags

    data "template_file" "container_definitions_web" {
      template = "${file("./container_definitions_web.tpl")}"

      vars {
        web_image = "${var.app_image}:${var.app_tag}"
        nginx_image = "${var.nginx_image}:${var.app_tag}"
        project = "${var.project}"
        env_label = "${var.env_label}"
        log_group_name = "${aws_cloudwatch_log_group.main.name}"
        DJANGO_SETTINGS_MODULE = "${var.DJANGO_SETTINGS_MODULE}"
        domain_name = "${var.domain_name}"
      }
    }

    module "web_service" {
      source = "git::https://github.com/harvard-vpal/ecs-app-utils.git//terraform/services/load_balanced?ref=3.2.0"
      vpc_id = "${var.vpc_id}"
      cluster_name = "${var.cluster_name}"
      role_arn = "${aws_iam_role.task.arn}"
      execution_role_arn = "${module.execution_role.arn}"
      security_group_id = "${aws_security_group.ecs_service.id}"
      name = "${var.project}-${var.env_label}-web"
      container_definitions = "${data.template_file.container_definitions_web.rendered}"
      target_group_arn = "${module.network.target_group_arn}"
      count = "${var.web_count}"
      cpu = 512
      memory = 1024
    }

Template file `container_definitions_web.tpl`:

    [
      {
        "name": "web",
        "image": "${web_image}",
        "essential": true,
        "portMappings": [
          {"containerPort": 8000}
        ],
        "command": [
          "/usr/local/bin/gunicorn",
          "itero.wsgi:application",
          "-w=2",
          "-b=:8000",
          "--log-level=debug",
          "--log-file=-",
          "--access-logfile=-"
        ],
        "environment": [
          {"name": "DJANGO_SETTINGS_MODULE", "value": "${DJANGO_SETTINGS_MODULE}"},
          {"name": "HOST", "value": "${domain_name}"}
        ],
        "logConfiguration": {
          "logDriver": "awslogs",
          "options": {
            "awslogs-group": "${log_group_name}",
            "awslogs-region": "us-east-1",
            "awslogs-stream-prefix": "ecs"
          }
        },
        "secrets": [
          {
            "name": "SECRET_KEY",
            "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/itero/${env_label}/SECRET_KEY"
          },
          {
            "name": "DATABASE_CONNECTION",
            "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/itero/${env_label}/DATABASE_CONNECTION"
          },
          {
            "name": "CELERY_BROKER_URL",
            "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/itero/${env_label}/CELERY_BROKER_URL"
          },
          {
            "name": "GOOGLE_PICKER_CLIENT_ID",
            "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/itero/${env_label}/GOOGLE_CLIENT_ID"
          },
          {
            "name": "GOOGLE_PICKER_APP_ID",
            "valueFrom": "arn:aws:ssm:us-east-1:123456789:parameter/itero/common/GOOGLE_APP_ID"
          }
        ]
      },
      {
        "name": "nginx",
        "image": "${nginx_image}",
        "essential": false,
        "portMappings": [
          {"containerPort": 80}
        ],
        "logConfiguration": {
          "logDriver": "awslogs",
          "options": {
            "awslogs-group": "${log_group_name}",
            "awslogs-region": "us-east-1",
            "awslogs-stream-prefix": "ecs"
          }
        }
      }
    ]
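The templating step can be tried out locally without Terraform. The sketch below uses Python's `string.Template`, which happens to share the `${name}` placeholder syntax for simple variables, to render a cut-down version of the template and check that the result is valid JSON. It is a stand-in for illustration, not Terraform's own renderer, and the variable values are hypothetical.

```python
import json
from string import Template

# Cut-down container definitions template using the same ${...} placeholders.
TEMPLATE = Template("""
[
  {
    "name": "web",
    "image": "${web_image}",
    "essential": true,
    "environment": [
      {"name": "HOST", "value": "${domain_name}"}
    ]
  }
]
""")


def render_container_definitions(template, variables):
    """Substitute variables and parse the result, so bad JSON fails early."""
    text = template.substitute(variables)  # KeyError if a variable is missing
    return json.loads(text)                # ValueError if the JSON is malformed


# Hypothetical values for a dev environment.
rendered = render_container_definitions(
    TEMPLATE,
    {
        "web_image": "123456789.dkr.ecr.us-east-1.amazonaws.com/namespace/app:1.0.0",
        "domain_name": "dev.example.invalid",
    },
)
print(rendered[0]["image"])
```

Parsing the rendered output is a cheap way to catch template mistakes such as a missing comma between two entries before they reach `terraform apply`.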

ecs-utils

CLI for common ecs build/deploy tasks

Supports image versioning and multiple environments (dev/stage/prod)

    # Check out the app code at the specified version and tag the image with that tag
    deploy build --tag 1.0.0

    # Push images with the specified tag to ECR repositories
    deploy push --tag 1.0.0

    # Run 'terraform apply' with specified image tag against 'dev' environment
    deploy apply --tag 1.0.0 --env dev

    # Build, push, and apply
    deploy all --tag 1.0.0 --env dev

    # Redeploy services (force restart of specific services, even if no config changes)
    deploy redeploy --env dev web worker
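One way a forced restart can be expressed against the ECS API is an `UpdateService` call with `forceNewDeployment` set, which replaces running tasks even when the task definition is unchanged. The sketch below only builds the request arguments; whether the `deploy redeploy` command works this way internally is an assumption, and the cluster and project names are hypothetical. The service naming follows the `project-env-service` pattern used in the Terraform module above.

```python
def redeploy_requests(cluster, project, env_label, services):
    """Build kwargs for boto3's ECS client update_service(), one per service."""
    return [
        {
            "cluster": cluster,
            "service": f"{project}-{env_label}-{name}",
            "forceNewDeployment": True,  # restart tasks even without config changes
        }
        for name in services
    ]


# Hypothetical cluster and project names.
requests_to_send = redeploy_requests("example-cluster", "bridge", "dev", ["web", "worker"])

# With AWS credentials configured, each request could be submitted with:
#   import boto3
#   ecs = boto3.client("ecs")
#   for kwargs in requests_to_send:
#       ecs.update_service(**kwargs)
for kwargs in requests_to_send:
    print(kwargs["service"])
```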

Comparisons with other AWS services

Fargate vs EC2

| Fargate | EC2 |
|---|---|
| Easy, automatic scalability; built-in failure recovery; no need to think about instance provisioning | Build your own scaling, failure recovery, and container orchestration (if using Docker) |
| | If not using Docker, dependency management/setup may be complex, depending on library/system dependencies |
| More expensive | Cheaper |
| Compute/memory capacity flexible within a limited range (max 4 vCPU / 30 GB memory) | More high-end options for compute/memory configurations |

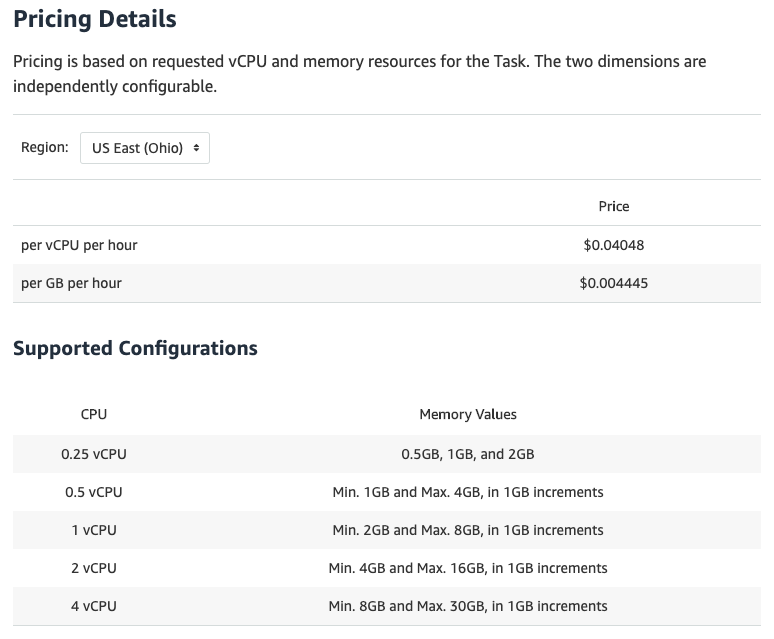

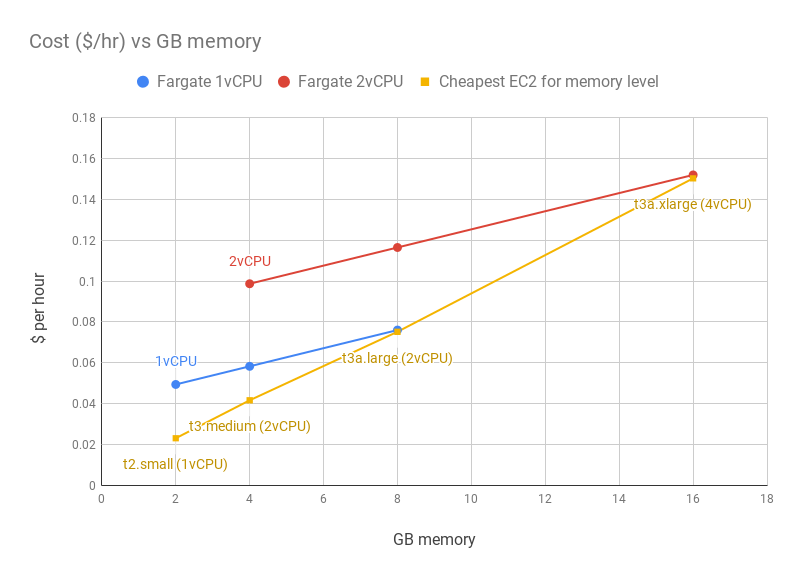

Fargate pricing

Fargate vs EC2 cost

For memory-limited use cases (e.g. in-memory data processing/transformation), Fargate can be a better option than upgrading to the next EC2 instance tier
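A back-of-the-envelope comparison can be sketched as below. Fargate bills per vCPU-hour and per GB-hour of the task's configured size, while EC2 bills per instance-hour. All rates in the example are made-up placeholders, not actual AWS prices; substitute current figures from the pricing pages.

```python
def fargate_cost(vcpu, memory_gb, hours, rate_per_vcpu_hour, rate_per_gb_hour):
    """Cost of one Fargate task: billed on configured vCPU and memory."""
    return hours * (vcpu * rate_per_vcpu_hour + memory_gb * rate_per_gb_hour)


def ec2_cost(hours, rate_per_instance_hour):
    """Cost of one EC2 instance: billed per instance-hour regardless of usage."""
    return hours * rate_per_instance_hour


# Placeholder rates for illustration only (NOT real AWS prices).
job_hours = 100
fargate = fargate_cost(vcpu=0.5, memory_gb=8, hours=job_hours,
                       rate_per_vcpu_hour=0.04, rate_per_gb_hour=0.004)
ec2 = ec2_cost(hours=job_hours, rate_per_instance_hour=0.10)

print(f"Fargate: {fargate:.2f}  EC2: {ec2:.2f}")
```

The point of the comparison is that a Fargate task can pair a small CPU allocation with a relatively large memory allocation, whereas on EC2 more memory usually means moving to a larger instance type that also includes more CPU.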

Fargate vs Lambda

| Fargate | Lambda |
|---|---|
| High level of control over library and system dependencies with Docker | No Docker support; 50 MB (zipped) deployment package size limit, barely enough for a basic Python data science stack (numpy / pandas / sklearn / statsmodels) |
| Slower startup time (30 sec - 1 min) | Fast startup time |
| Can be used for long-running applications | 900-second execution time limit |

Fargate vs Kubernetes

| Fargate | Kubernetes |
|---|---|
| No need to consider instance provisioning | Managing your own K8s cluster is complex |
| Tight integration with other AWS resources (task IAM roles, SSM secrets, CloudWatch logs) | Open source; community plug-ins (e.g. canary deployments) |
| | Abstractions are more complex (imo) |
| | EKS is more expensive (need to run the control plane: $0.20 / hour) |

Thanks!

Questions

Slides:

https://bit.ly/itsummit-fargate

ecs-utils

https://github.com/harvard-vpal/ecs-app-utils

andrew_ang@harvard.edu


Sharing our experience and DevOps considerations in using AWS Fargate to support a variety of containerized applications for education data science.
