DuckDB

A nontraditional database

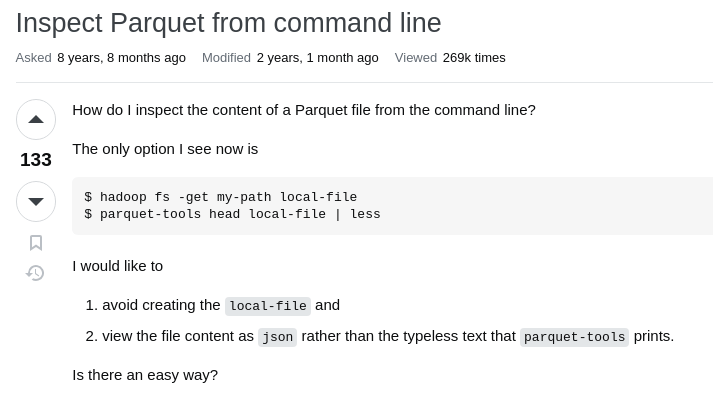

How have I found DuckDB?

How have I found DuckDB?

select *

from read_parquet()

limit 100;

select count(*)

from read_parquet()

limit 100;

-- Inferred schema based on select

describe select *

from read_parquet();

-- Actual parquet file schema

select *

from parquet_schema();

select *

from parquet_metadata();Demo

Usage from IDE

Demo

- Parquet examples

- CSV

- Json examples

- Creating views

- Creating tables

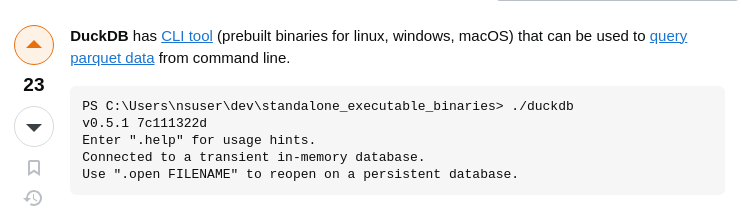

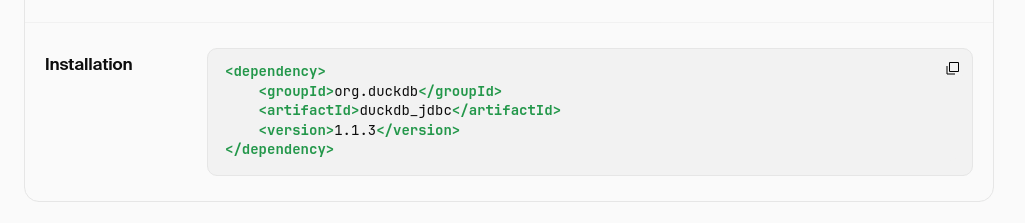

DuckDB Modes

1. In-memory

2. Persistent

jdbc:duckdb:

jdbc:duckdb:memory:

jdbc:duckdb:/path/to/file.duckdb

In-memory

create view my_view as

select *

from read_json_auto('...');

select *

from my_view; -- actually reads a file

create table my_table -- copies data to memory

select *

from read_json_auto('...');

select *

from my_table; -- reads data from memory

Persistent

create view my_view as

select *

from read_json_auto('...');

select *

from my_view; -- actually reads a file

create table my_table -- copies data to DB file

select *

from read_json_auto('...');

select *

from my_table; -- reads data from DB file

SQL Dialect

- Arrays/List

- Lambda functions

- Pattern Matching + Regular Expressions

- Struct (json/kv)

- Text Functions

- Text Similarity Functions

- Window Functions

- Pivot/Unpivot

- Filter

- Grouping sets

What about writing?

Inserts work as expected:

For in-memory mode insert copies data to in-memory table.

For persistent mode insert copies data to table in DB file.

Extensions

+----------------+------------------------------------------------------------------------+

|extension_name |description |

+----------------+------------------------------------------------------------------------+

|arrow |A zero-copy data integration between Apache Arrow and DuckDB |

|autocomplete |Adds support for autocomplete in the shell |

|aws |Provides features that depend on the AWS SDK |

|azure |Adds a filesystem abstraction for Azure blob storage to DuckDB |

|delta |Adds support for Delta Lake |

|excel |Adds support for Excel-like format strings |

|fts |Adds support for Full-Text Search Indexes |

|httpfs |Adds support for reading and writing files over a HTTP(S) connection |

|iceberg |Adds support for Apache Iceberg |

|icu |Adds support for time zones and collations using the ICU library |

|inet |Adds support for IP-related data types and functions |

|jemalloc |Overwrites system allocator with JEMalloc |

|json |Adds support for JSON operations |

|motherduck |Enables motherduck integration with the system |

|mysql_scanner |Adds support for connecting to a MySQL database |

|parquet |Adds support for reading and writing parquet files |

|postgres_scanner|Adds support for connecting to a Postgres database |

|spatial |Geospatial extension that adds support for working with spatial data... |

|sqlite_scanner |Adds support for reading and writing SQLite database files |

|substrait |Adds support for the Substrait integration |

|tpcds |Adds TPC-DS data generation and query support |

|tpch |Adds TPC-H data generation and query support |

|vss |Adds indexing support to accelerate Vector Similarity Search |

+----------------+------------------------------------------------------------------------+Extensions

DuckDB as an ETL tool

- File formats

- Parquet

- JSON

- CSV

- S3 support

- MySQL + PostgreSQL support

DuckDB as an ETL tool

Source

Target

DuckDB

Local machine

EC2 instance

Lambda

DuckDB as an ETL tool

Lambda Demo

- README

- lambda_function.py

- invoke.py

- invoke_template.py

- justfile

- Airflow

- redshift/import/from_rds.py

- common/duckdb.py

DuckDB downsides

1. Single-node processing

2. Sharing

Kinda solvable with duckdb file + sql script

Summary

- Amazing tool with a lot of use-cases

- Reading/writing local files

- Ad-hoc data transformations

- Data analysis

- ETL

- Extremely fast

- It just works

Thank you!

duckdb

By Yury Badalyants

duckdb

Internal Presentation for Parrot Analytics

- 261