Investigative reporting with databases

By Luke Rosiak

The Daily Caller News Foundation

This presentation:

slides.com/lukerosiak/

"You see Lucille there? You ought to see her when they play 'Tequila Makes Her Clothes Fall Off,'" Mr. Byron says.

Data can be your secret weapon to find stories others miss, and work faster than your competitors.

- Data is just a window into the real world. Use it to generate story leads, and then report them out.

- Newspaper articles aren't academic papers. Don't bog them down with numbers.

- Your anecdotal leads will actually mean something, and your trend pieces will represent actual trends instead of just something a writer noticed.

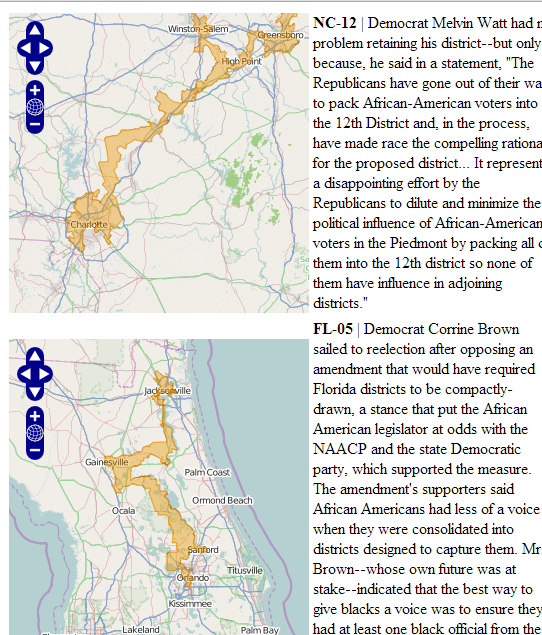

I used math and GIS (spatial software) to identify the most-gerrymandered Congressional districts. Lets you be authoritative, not subjective--and lets you automate redundant tasks.

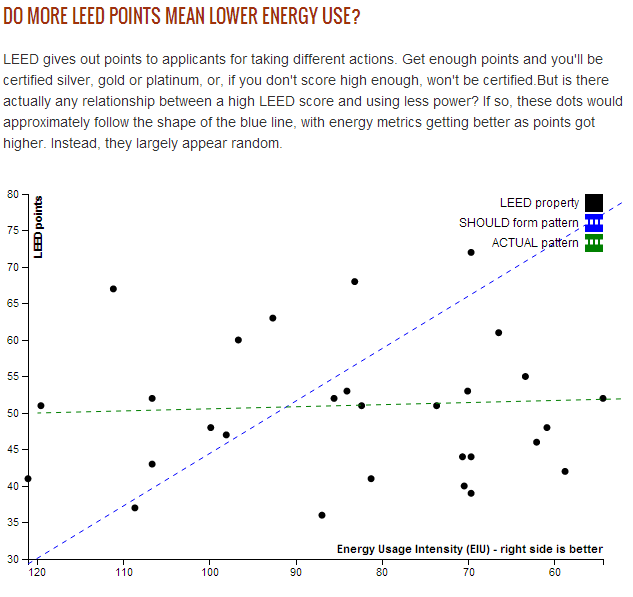

Challenging assumptions

- Data can test conventional wisdom

- Anyone can have an ideology-driven opinion, but data helps you get at the truth

- Spokesmen and anyone else can manipulate traditional statements, but if they don't know how to make sense of data, they don't know what they should try to censor or hide from you.

Recognize patterns, create methodologies and apply them en masse

-

Data is especially helpful in writing at national publications with readers everywhere.

- You write some formula that could show something interesting, run it on every member of a group at once (all members of Congress, all counties, etc.).

- The odds are good that at least some will be outliers, and you can write about them.

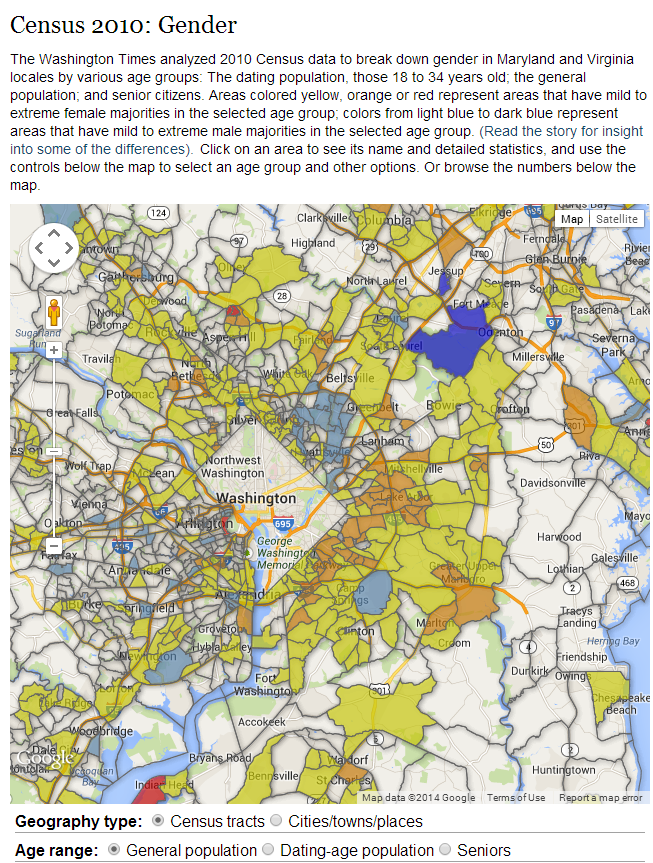

- If you can make web apps, you can make something that allows readers across the country to localize your findings--essentially writing 50 or more stories at once.

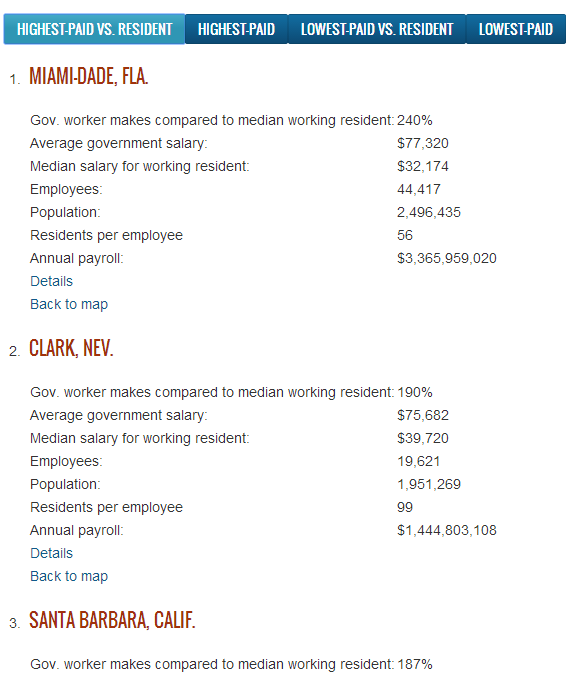

Government salaries

layers of computer work

- Simply pulling up facts from online databases using their online navigation system as part of your stories.

- Coding (optional): Python. Gathering data by scraping websites, pre-processing it.

- Data analysis: SQL or spreadsheets. Linking multiple data sources together and running filters and totals.

- Display (optional): Javascript. Displaying data visualizations in the browser.

Don't be afraid

- Journalists are experts at quickly learning stuff. You can do it.

- There's a lot to learn, but you do it a little at a time, to solve immediate problems that you have in front of you.

- There is a great email list full of helpful people, called NICAR-L. You can sign up at ire.org.

- There are TONS of jobs for journalists with data and programming skills, so it's worth learning.

Finding DATA: NATIONAL POLITICS

- Legislation: Federal: govtrack.us State: openstates.org

- Campaign finance and lobbying: Federal: OpenSecrets.org State: followthemoney.org

- Federal contracts: USASpending.gov

- Census: censusreporter.org

- Nationwide info about local governments: https://www.census.gov/govs/cog/

- Nonprofits: CitizenAudit.org

- Unions: UnionFacts.org

- Farm subsidies: farm.ewg.org

- Search for miscellaneous federal data: Data.gov

- Federal court records: PACER.gov

GETTING DATA: Creating it

- Create your own: Take notes in Excel or Google Spreadsheets, using columns appropriately, when information takes a certain format.

- This can be information from interviews, web research, or documents. Staying organized and working in a structured way is important.

- Numbers in their own columns

- Define and apply categories... Make sure the spelling is the exact same each time so you can group by them.

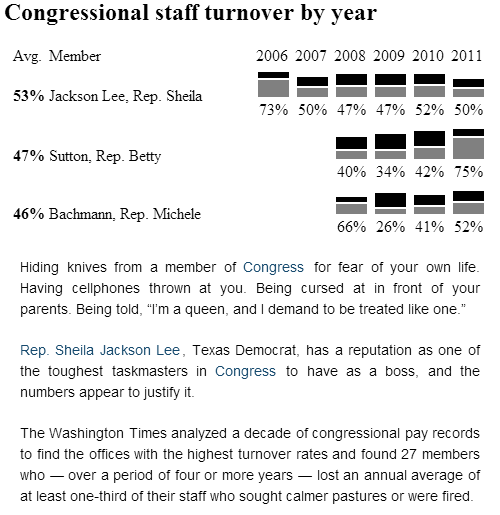

mean bosses in congress

GETTING DATA

-

The best format to get it in is the simplest, CSV, because it can be opened by any program. The WORST is PDFs.

- Governments have stuff electronically (spreadsheets) so don't settle for scanned pieces of paper or PDFs.

- If you find a blank government form that would contain interesting information, then you know there's a dataset somewhere.

Spreadsheets

- A spreadsheet is one table, a database is multiple tables interacting with each other.

- Google Docs' spreadsheets is very similar to Excel, and more convenient

- Formulas: =sum(a2:a100), =average(b2:b100)

- "Pivot Table" under the Data menu is where things get powerful. "Group" records to lump names together, and run totals on them. Drag the column with names in it into the "row" section, the column you want to run totals on into the "column" section, and pick whether you want to see the number of records (count), sum, etc.

- Demo

things to learn: Databases.

- Databases--the SQL language--is the next step.

-

Microsoft Access is a visual version of SQL that you may have on your computer as part of Microsoft Office, and if you do, you can do 95% of computer-assisted reporting there.

- Google Fusion Tables has some SQL features and also allows you to easily display info on the web, including maps (spatial), which is called GIS.

SQL concepts

Regardless of whether you're writing SQL commands manually or using a tool like Microsoft Access, the commands are SELECT columns, filtering with WHERE, ORDERing, GROUPing and JOINING.

Computing the honor roll:

- SELECT firstname, lastname FROM students WHERE gpa>3 ORDER BY gpa DESC;

Wildcard "LIKE"s to show students whose names start with JA:

SELECT firstname, lastname FROM students WHERE name LIKE 'ja*';

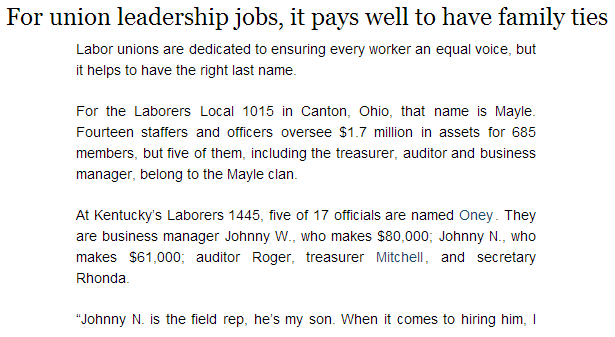

USING 'GROUP BY" TO SHOW NEPOTISM

SELECT union_name, last_name, COUNT(*)

FROM union_salaries

GROUP BY union_name, last_name

ORDER BY COUNT(*);

-

Grouping by is also called 'aggregating.' You can do it in Excel with the Pivot Tables function, or in Google Fusion Tables with the Summarize function.

- It means lumping things together into groups based on one or more values, and computing some number for the whole group, such as averaging or totaling a numeric value, or just giving you the number of rows in that category.

- Big tables won't seem so overwhelming when you realize that you'll probably want to condense them into much smaller and more illuminating summary tables this way.

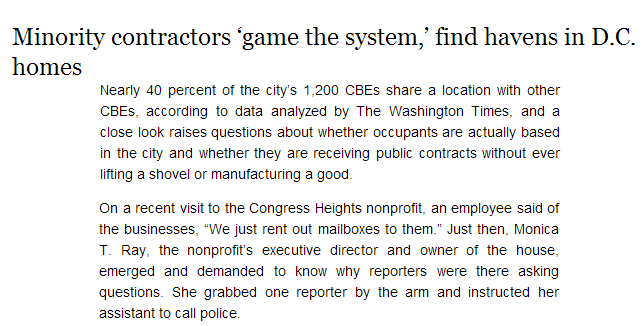

Finding fake mailing addresses used by hundreds of companies to gain preferences:

SELECT address, city, COUNT(*) FROM contracts GROUP BY address, city ORDER BY COUNT(*) DESC LIMIT 10;

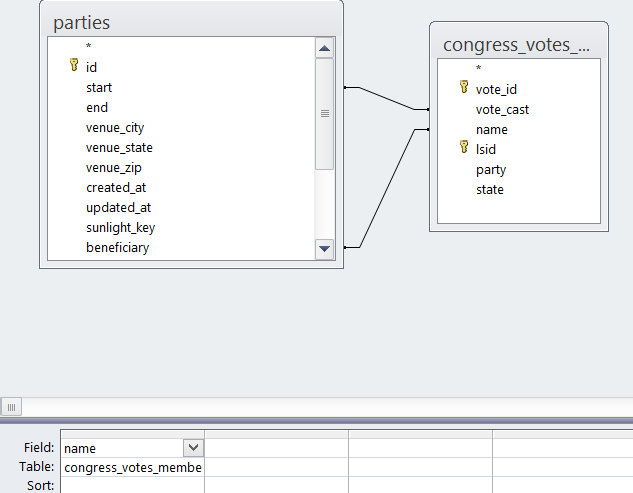

JOINING

JUST JOIN TWO LISTS TOGETHER TO FIND SECRET CONNECTIONS! #1 THING YOU CAN DO, AND EASY!

For example, a list of votes where members of Congress were absent, and a list of fundraisers that they attended. If the lists are from seemingly unrelated sources, you'll find stuff no one knows about.

It's important to have a unique identifier for concepts... in other words, if both tables represent members of Congress, do they format the names the same way? Ideally they'll both use the same numerical ID.

SELECT member_id, member_name, date, vote_name, fundraiser_location

FROM missed_votes INNER JOIN fundraisers

ON (missedvotes.member_id=fundraisers.member_id AND missedvotes.date=fundraisers.date)

Or in Microsoft Access:

SQL specifics

- Definitely use Microsoft Access if you have it. Import external data and drag the tables onto the pane, and go to the Design tab. Select your fields and put in WHERE and ORDER criteria at the bottom, and visually join fields on different tables together to do JOINs.

- Otherwise, use SqLite, which you can download as a Mozilla Firefox plug-in and other easy ways.

- More robust version is called PostgreSQL. But all SQL is basically the same

-

Here's a SQL tutorial: http://www.peteraldhous.com/CAR/Database_SQLite.pdf

with great power comes great responsibility

- Data is often messy. You may have to clean it up. For example, phone company employees listing their jobs as AT&T, A T T, AT+T, A T and T. The computer will not know these are the same. Use the tool Open Refine and its Cluster tool to clean up different spellings mostly automatically.

- You need to learn about the topic the data is representing. That means asking questions, calling people, acquiring domain knowledge.

- If something seems so extreme that it would make for a crazy story, there's a good chance it's some kind of error instead.

OPTIONAL THINGS TO LEARN: PROGRAMMING

- The Python language. learnpython.org

- Automate redundant tasks. It's like having 100s of interns!

- Programming primarily comes in handy to get data into a format good for databases.

- "Scraping" means hitting different pages of a web site thousands of times and using patterns to extract the data from HTML and put it into fields.

- You can use advanced algorithms to detect suspicious things.

Optional things to learn: FRONT-END WEB DESIGN

- This means HTML and Javascript, often with the help of Javascript libraries like D3.js

- Interactive graphics

You can do this

- All you need to know is Pivot Tables in Google Docs and "joins" in Access. You can forget everything else.

- Just pay attention to the existence of data and how it can play into your beat, and learn what you need to do a specific story. Do that a bunch of times and you know everything!

- You will be able to get lots of jobs if you have data and/or programming skills.

- But that's not why you should do it, you should do it because you can root out fraud and send people to jail with it.

Computer-assisted reporting

By Luke Rosiak

Computer-assisted reporting

- 1,235