CASSANDRA SUMMIT 2015

Mario Lazaro

September 24th 2015

#CassandraSummit2015

Multi-Region Cassandra in AWS

WHOAMI

- Mario Cerdan Lazaro
- Big Data Engineer
- Born and raised in Spain
- Joined GumGum 18 months ago
- About a year and a half of experience with Cassandra

- #5 Ad Platform in the U.S.
- 10B impressions / month
- 2,000 brand-safe premium publisher partners
- 1B+ global unique visitors
- Daily inventory impressions processed: 213M
- Monthly image impressions processed: 2.6B
- 123 employees in seven offices

Agenda

- Old cluster
- International Expansion
- Challenges
- Testing
- Modus Operandi
- Tips
- Questions & Answers

OLD C* CLUSTER - MARCH 2015

- 25-node cluster using classic (single-token) nodes
- 1 region / 1 rack hosted in AWS EC2 US East
- Version 2.0.8
- DataStax CQL driver
- GumGum's metadata, including visitors, images, pages, and ad performance
- Usage: realtime data access and analytics (MR jobs)

OLD C* CLUSTER - REALTIME USE CASE

- Billions of rows
- Heavy read workload (60/40)
- TTLs everywhere, hence tombstones
- Heavy and critical use of counters
- RTB (real-time bidding): read latency constraints (total execution time ~50 ms)

OLD C* CLUSTER - ANALYTICS USE CASE

- Daily ETL jobs to extract / join data from C*
- Hadoop MR jobs
- Ad hoc queries with Presto

International expansion

First Steps

- Start C* test datacenters in US East & EU West and test how C* multi-region works in AWS
- Run capacity/performance tests. We expect 3x more traffic in Q4 2015

First thoughts

- Use AWS Virtual Private Cloud (VPC)
- Cassandra & VPC present some connectivity challenges
- Replicate all data with the same number of replicas

TOO GOOD TO BE TRUE ...

CHALLENGES

- Problems between Cassandra in EC2 Classic / VPC and the DataStax Java driver
- Ec2MultiRegionSnitch uses public IPs, but EC2 instances do not have a network interface bound to their public IP address, so instances in the same region cannot connect to each other using public IPs
- Region-to-region connectivity will use public IPs: trust those IPs or use a software/hardware VPN
- Your application needs to connect to C* using private IPs: custom EC2 translator

import java.net.InetSocketAddress;
import java.util.List;

import javax.annotation.PostConstruct;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.amazonaws.services.ec2.model.Instance;
import com.datastax.driver.core.policies.AddressTranslater;
// Cluster and ClusterService are GumGum-internal types; their imports are not shown on the slide.

/**
 * Implementation of {@link AddressTranslater} used by the driver that
 * translates external (public) IPs to internal (private) IPs.
 *
 * @author Mario <mario@gumgum.com>
 */
public class Ec2ClassicTranslater implements AddressTranslater {

    private static final Logger LOGGER = LoggerFactory.getLogger(Ec2ClassicTranslater.class);

    private ClusterService clusterService;

    private Cluster cluster;

    // EC2 instances of the C* cluster, each with its public and private IP
    private List<Instance> publicDnss;

    @PostConstruct
    public void build() {
        publicDnss = clusterService.getInstances(cluster);
    }

    /**
     * Translates a Cassandra {@code rpc_address} to another address if necessary.
     *
     * @param address the address of a node as returned by Cassandra.
     * @return the private address of the matching EC2 instance, or {@code address}
     *         unchanged if no instance has that public IP.
     */
    @Override
    public InetSocketAddress translate(InetSocketAddress address) {
        for (final Instance server : publicDnss) {
            // Compare from the address side: VPC instances may have no public IP (null)
            if (address.getHostString().equals(server.getPublicIpAddress())) {
                LOGGER.info("IP address: {} translated to {}", address.getHostString(), server.getPrivateIpAddress());
                return new InetSocketAddress(server.getPrivateIpAddress(), address.getPort());
            }
        }
        // No match: keep the address as-is instead of returning null (the driver expects a usable address)
        return address;
    }

    public void setClusterService(ClusterService clusterService) {
        this.clusterService = clusterService;
    }

    public void setCluster(Cluster cluster) {
        this.cluster = cluster;
    }
}


CHALLENGES

- DataStax Java driver load balancing
- Multiple choices
- DCAware + TokenAware + ?

Clients in one AZ attempt to always communicate with C* nodes in the same AZ. We call this zone-aware connections. This feature is built into Astyanax, Netflix’s C* Java client library.

CHALLENGES

- Zone-aware connection:
- Webapps in 3 different AZs: 1A, 1B, and 1C
- C* datacenter spanning 3 AZs with 3 replicas

[Diagram: webapps in AZs 1A, 1B and 1C connecting to C* replicas in the same AZs]

CHALLENGES

- We added it! Rack/AZ awareness in the TokenAware policy
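The ordering idea behind the rack/AZ-aware TokenAware policy can be modelled without the driver. The sketch below is a hypothetical standalone model (class `ZoneAwareOrdering`, record `Node`, and the example IPs and DC names are all illustrative). It is neither GumGum's patch nor the DataStax `LoadBalancingPolicy` API. Given the replicas that own a partition, it tries the replica in the client's own AZ first, then the rest of the local DC, then remote DCs. It needs Java 16+ for records.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of zone-aware replica ordering; not the DataStax driver API. */
public class ZoneAwareOrdering {

    /** A replica as seen by the client: address, datacenter and rack (the AZ under the EC2 snitches). */
    record Node(String address, String dc, String rack) {}

    /**
     * Orders the replicas of one partition: same DC and same AZ first,
     * then the rest of the local DC, then remote DCs as a last resort.
     */
    static List<Node> queryPlan(List<Node> replicas, String localDc, String localRack) {
        List<Node> sameRack = new ArrayList<>();
        List<Node> sameDc = new ArrayList<>();
        List<Node> remote = new ArrayList<>();
        for (Node n : replicas) {
            if (!n.dc().equals(localDc)) {
                remote.add(n);
            } else if (n.rack().equals(localRack)) {
                sameRack.add(n);
            } else {
                sameDc.add(n);
            }
        }
        List<Node> plan = new ArrayList<>(sameRack);
        plan.addAll(sameDc);
        plan.addAll(remote);
        return plan;
    }

    public static void main(String[] args) {
        // Hypothetical replicas of one partition: 3 in the local DC, 1 in a remote DC.
        List<Node> replicas = List.of(
            new Node("10.0.2.7", "us-east", "1b"),
            new Node("10.1.0.4", "eu-west", "1a"),
            new Node("10.0.1.5", "us-east", "1a"),
            new Node("10.0.3.9", "us-east", "1c"));
        // A webapp running in us-east AZ 1a tries 10.0.1.5 first.
        for (Node n : queryPlan(replicas, "us-east", "1a")) {
            System.out.println(n);
        }
    }
}
```

With 3 replicas spread over 3 AZs, every partition has one replica in each AZ. A client in any AZ can therefore be served without cross-AZ traffic as long as that replica is up.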

CHALLENGES

- Third datacenter: Analytics
- Do not impact realtime data access
- Spark on top of Cassandra
- Spark-Cassandra DataStax connector
- Replicate specific keyspaces
- Fewer nodes with larger disk space
- Settings are different
- e.g., bloom filter false-positive chance

CHALLENGES

- Third datacenter: Analytics

[Diagram: Cassandra-only DC for realtime; Cassandra + Spark DC for analytics]

Challenges

- Upgrade from 2.0.8 to 2.1.5

- Counters implementation is buggy in pre-2.1 versions

My code never has bugs. It just develops random unexpected features

CHALLENGES

To choose, or not to choose VNodes. That is the question.

(M. Lazaro, 1990 - 2500)

- Previous DC using classic nodes
  - Works with MR jobs
  - Complexity for adding/removing nodes
  - Token ranges managed manually
- New DCs will use VNodes
  - Apache Spark + Spark Cassandra DataStax connector
  - Easy to add/remove nodes as traffic increases
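With classic (single-token) nodes, each node's `initial_token` has to be chosen by hand. Below is a minimal sketch of the usual even-spacing rule, assuming the default Murmur3Partitioner (token range -2^63 to 2^63 - 1): token_i = i * (2^64 / N) - 2^63. The class name `InitialTokens` is hypothetical. It only computes the values that would go into each node's `cassandra.yaml`.

```java
import java.math.BigInteger;

/** Hypothetical helper: evenly spaced Murmur3Partitioner tokens for a single-token-per-node ring. */
public class InitialTokens {

    private static final BigInteger TWO_63 = BigInteger.ONE.shiftLeft(63);
    private static final BigInteger TWO_64 = BigInteger.ONE.shiftLeft(64);

    /** token_i = i * (2^64 / N) - 2^63, for i = 0 .. N-1. */
    static long[] evenTokens(int nodeCount) {
        long[] tokens = new long[nodeCount];
        BigInteger step = TWO_64.divide(BigInteger.valueOf(nodeCount));
        for (int i = 0; i < nodeCount; i++) {
            // Always < 2^63, so it fits in a signed long
            tokens[i] = step.multiply(BigInteger.valueOf(i)).subtract(TWO_63).longValueExact();
        }
        return tokens;
    }

    public static void main(String[] args) {
        long[] ring = evenTokens(25); // the old 25-node cluster
        for (int i = 0; i < ring.length; i++) {
            System.out.println("node " + i + " initial_token: " + ring[i]);
        }
    }
}
```

Under this scheme, going from 25 to 26 evenly balanced nodes changes every token except the first. That is why growing a classic-token cluster usually means `nodetool move` operations or doubling the node count. VNodes avoid this.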

TESTING

- Testing requires creating and modifying many C* nodes
- Creating and configuring a C* cluster is a time-consuming, repetitive task
- We created a fully automated process for creating/modifying/destroying Cassandra clusters with Ansible

# Ansible settings for provisioning the EC2 instance
---
ec2_instance_type: r3.2xlarge
ec2_count:
  - 0 # How many in us-east-1a ?
  - 7 # How many in us-east-1b ?
ec2_vpc_subnet:
  - undefined
  - subnet-c51241b2
  - undefined
  - subnet-80f085d9
  - subnet-f9138cd2
ec2_sg:
  - va-ops
  - va-cassandra-realtime-private
  - va-cassandra-realtime-public-1
  - va-cassandra-realtime-public-2
  - va-cassandra-realtime-public-3

TESTING - Performance

- Performance tests using the new Cassandra 2.1 stress tool:
  - Recreate GumGum metadata / schemas
  - Recreate the workload and make it 3 times bigger
  - Try to find limits / saturate clients

# Keyspace name
keyspace: stresscql

#keyspace_definition: |
#  CREATE KEYSPACE stresscql WITH replication = {'class': 'NetworkTopologyStrategy', 'us-eastus-sandbox': 3, 'eu-westeu-sandbox': 3};

### Column Distribution Specifications ###
columnspec:
  - name: visitor_id
    size: gaussian(32..32)       # visitor IDs are 32 characters long
    population: uniform(1..999M) # up to 999M possible visitor IDs to pick from
  - name: bidder_code
    cluster: fixed(5)
  - name: bluekai_category_id
  - name: bidder_custom
    size: fixed(32)
  - name: bidder_id
    size: fixed(32)
  - name: bluekai_id
    size: fixed(32)
  - name: dt_pd
  - name: rt_exp_dt
  - name: rt_opt_out

### Batch Ratio Distribution Specifications ###
insert:
  partitions: fixed(1)  # Our partition key is the visitor_id, so only insert one per batch
  select: fixed(1)/5    # We have 5 bidder_code values per visitor, so 1/5 allows 1 bidder_code per batch
  batchtype: UNLOGGED   # Unlogged batches

#
# A list of queries you wish to run against the schema
#
queries:
  getvisitor:
    cql: SELECT bidder_code, bluekai_category_id, bidder_custom, bidder_id, bluekai_id, dt_pd, rt_exp_dt, rt_opt_out FROM partners WHERE visitor_id = ?
    fields: samerow

TESTING - Performance

- Main worry:
  - Latency and replication overseas
  - Use LOCAL_X consistency levels (e.g., LOCAL_ONE, LOCAL_QUORUM) in your client
  - For each remote DC, the coordinator sends replicas/mutations to only one C* node in that DC, which forwards them to the other replicas there

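LOCAL_X consistency levels keep latency local because of how quorums are counted. QUORUM needs a majority of the replicas summed over all DCs, whereas LOCAL_QUORUM needs a majority of the coordinator's own DC only. The arithmetic sketch below uses the migration's RF 3:3:3:1. The class name and the datacenter labels are illustrative.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Quorum sizes for NetworkTopologyStrategy replication (illustrative helper). */
public class QuorumMath {

    /** Majority of n replicas. */
    static int quorum(int replicas) {
        return replicas / 2 + 1;
    }

    /** QUORUM: majority of the replicas summed over every datacenter. */
    static int globalQuorum(Map<String, Integer> rfByDc) {
        int total = 0;
        for (int rf : rfByDc.values()) {
            total += rf;
        }
        return quorum(total);
    }

    /** LOCAL_QUORUM: majority of the replicas in the coordinator's own datacenter only. */
    static int localQuorum(Map<String, Integer> rfByDc, String localDc) {
        return quorum(rfByDc.get(localDc));
    }

    public static void main(String[] args) {
        // RF 3:3:3:1 during the migration (datacenter labels are illustrative).
        Map<String, Integer> rf = new LinkedHashMap<>();
        rf.put("old US East", 3);
        rf.put("US East Realtime", 3);
        rf.put("EU West Realtime", 3);
        rf.put("US East Analytics", 1);

        int global = globalQuorum(rf);                     // 10 replicas -> 6 acks
        int local = localQuorum(rf, "EU West Realtime");   // 3 replicas  -> 2 acks
        System.out.println("QUORUM needs " + global + " acks; LOCAL_QUORUM needs " + local);
        // More acks than the 3 local replicas can give: QUORUM always waits on another DC.
        System.out.println("QUORUM must wait on a remote DC: " + (global > rf.get("EU West Realtime")));
    }
}
```

For a coordinator in EU West, QUORUM needs at least 3 acknowledgements from US datacenters, so every request pays the transatlantic round trip. LOCAL_QUORUM is satisfied by 2 of the 3 EU replicas.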

TESTING - Instance type

- Test all kinds of instance types. We decided to go with r3.2xlarge machines for our cluster:
  - 60 GB RAM
  - 8 cores
  - 160 GB ephemeral SSD storage for commit logs and saved caches
  - RAID 0 over 4 SSD EBS volumes for data
- Performance / cost and the GumGum use case make r3.2xlarge the best option
- Disclosure: the I2 instance family is the best if you can afford it

TESTING - Upgrade

- Upgrade C* datacenter from 2.0.8 to 2.1.5
  - Both versions can cohabit in the same DC
- New settings and features tried:
  - DateTieredCompactionStrategy: compaction for time-series data
  - Incremental repairs
  - New counters architecture

MODUS OPERANDI

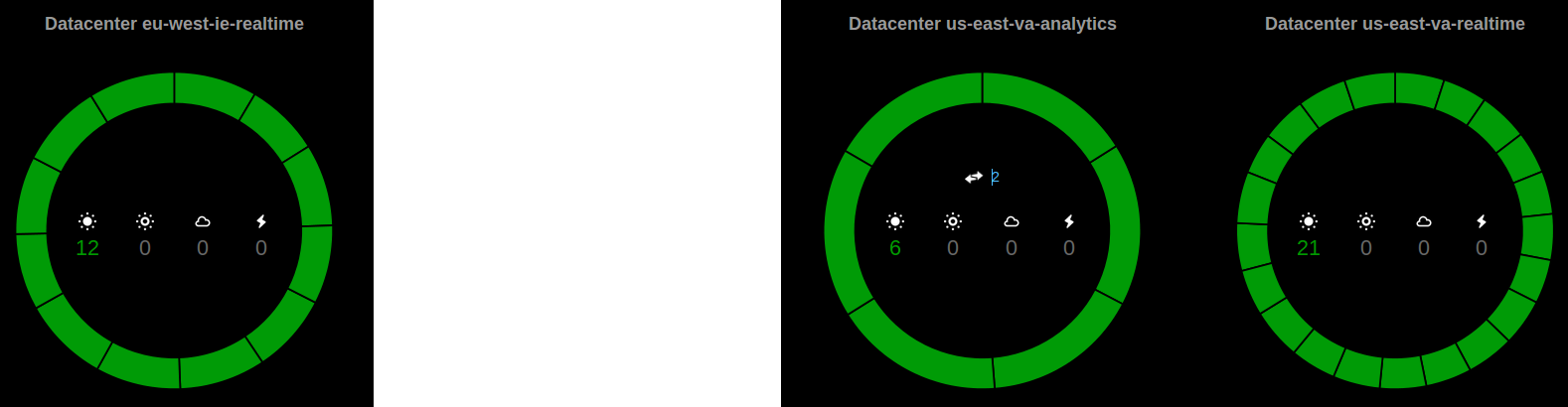

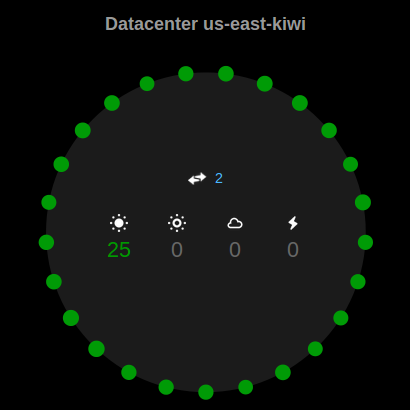

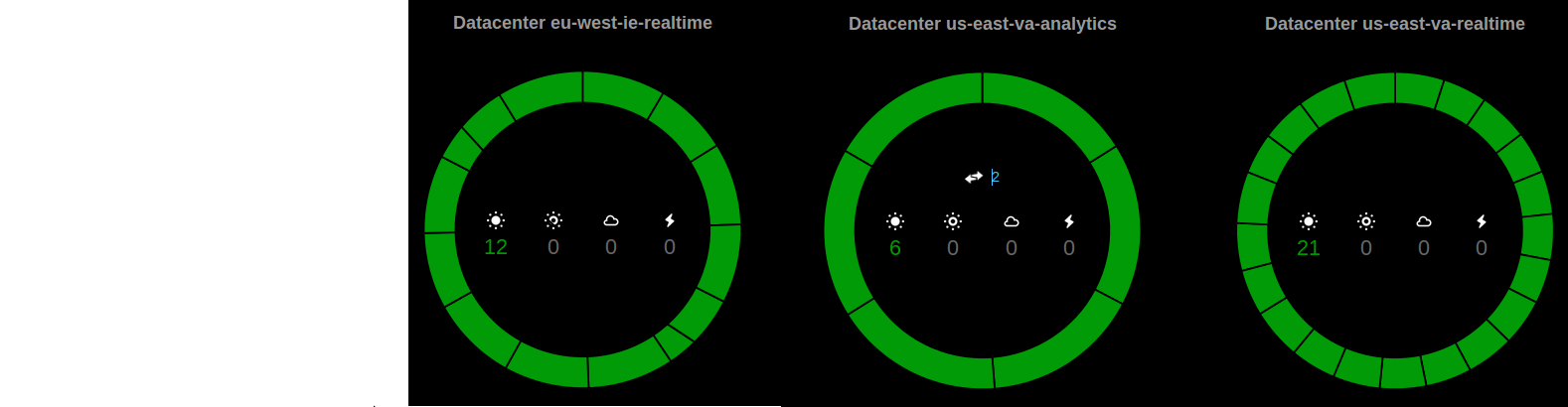

MODUS OPERANDI

- Sum up

- From: One cluster / One DC in US East

- To: One cluster / Two DCs in US East and one DC in EU West

MODUS OPERANDI

- First step:

- Upgrade old cluster snitch from Ec2Snitch to Ec2MultiRegionSnitch
- Upgrade clients to handle it (aka translators)
- Make sure your clients do not lose connection to upgraded C* nodes (DataStax JIRA JAVA-809)
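Both EC2 snitches name datacenters and racks from the instance's availability zone. That is why the old Ec2Snitch cluster and a new Ec2MultiRegionSnitch DC in Virginia collide unless `dc_suffix` (set in `cassandra-rackdc.properties`) is used. The sketch below mirrors the naming rule in Cassandra 2.x's Ec2Snitch as we understand it. The rack is the last `-` token of the AZ. The DC is the region name with a trailing `-1` dropped, followed by `dc_suffix`. The class name is hypothetical. The suffix `-va-realtime` is inferred from the DC name `us-east-va-realtime` used elsewhere in this deck.

```java
/** Hypothetical re-implementation of the EC2 snitch naming rule, for illustration only. */
public class Ec2DcNaming {

    /** Returns {datacenter, rack} for an EC2 availability zone plus an optional dc_suffix. */
    static String[] dcAndRack(String availabilityZone, String dcSuffix) {
        String[] parts = availabilityZone.split("-");
        String rack = parts[parts.length - 1];                                          // "us-east-1b" -> "1b"
        String region = availabilityZone.substring(0, availabilityZone.length() - 1);   // -> "us-east-1"
        if (region.endsWith("1")) {
            region = availabilityZone.substring(0, availabilityZone.length() - 3);      // -> "us-east"
        }
        return new String[] {region + dcSuffix, rack};
    }

    public static void main(String[] args) {
        print("us-east-1b", "");              // old Virginia DC: no suffix
        print("us-east-1b", "-va-realtime");  // new Virginia DC (assumed dc_suffix)
        print("eu-west-1a", "");
        print("us-west-2a", "");              // region number other than 1 is kept
    }

    private static void print(String az, String suffix) {
        String[] dcRack = dcAndRack(az, suffix);
        System.out.println(az + " -> dc=" + dcRack[0] + ", rack=" + dcRack[1]);
    }
}
```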

MODUS OPERANDI

- Second step:

- Upgrade old datacenter from 2.0.8 to 2.1.5
  - nodetool upgradesstables (multiple nodes at a time)
  - Not possible to rebuild a 2.1.X C* node from a 2.0.X C* datacenter. The rebuild fails with:

WARN [Thread-12683] 2015-06-17 10:17:22,845 IncomingTcpConnection.java:91 - UnknownColumnFamilyException reading from socket; closing
org.apache.cassandra.db.UnknownColumnFamilyException: Couldn't find cfId=XXX

MODUS OPERANDI

- Third step:
  - Start EU West and new US East DCs within the same cluster
  - Replication factor in new DCs: 0
  - Use dc_suffix to differentiate the new Virginia DC from the old one
  - Clients do not talk to new DCs. Only C* knows they exist
- Set replication factor to 3 on all new DCs except analytics (RF 1)
  - Start receiving new data
- nodetool rebuild <old-datacenter>
  - Old data
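The RF changes in this step are plain `ALTER KEYSPACE` statements on a NetworkTopologyStrategy keyspace. Below is a minimal sketch that prints them for each phase. Several names are assumptions: the keyspace name `my_keyspace` and the EU DC name `eu-west-realtime` are hypothetical, the old DC is assumed to be named `us-east` (Ec2 snitch naming without a suffix), and `us-east-va-realtime` / `us-east-va-analytics` are the DC names that appear in this deck.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical helper that prints the replication changes of the migration. */
public class ReplicationPhases {

    /** Builds an ALTER KEYSPACE statement for NetworkTopologyStrategy from a DC -> RF map. */
    static String alterKeyspace(String keyspace, Map<String, Integer> rfByDc) {
        StringJoiner replication = new StringJoiner(", ", "{", "}");
        replication.add("'class': 'NetworkTopologyStrategy'");
        for (Map.Entry<String, Integer> e : rfByDc.entrySet()) {
            replication.add("'" + e.getKey() + "': " + e.getValue());
        }
        return "ALTER KEYSPACE " + keyspace + " WITH replication = " + replication + ";";
    }

    /** DC -> RF map in a fixed order: old US East, new US East realtime, EU West, analytics. */
    static Map<String, Integer> rf(int oldUsEast, int usEastRealtime, int euWest, int analytics) {
        Map<String, Integer> m = new LinkedHashMap<>();
        m.put("us-east", oldUsEast);               // assumed name of the old DC
        m.put("us-east-va-realtime", usEastRealtime);
        m.put("eu-west-realtime", euWest);         // hypothetical EU DC name
        m.put("us-east-va-analytics", analytics);
        return m;
    }

    public static void main(String[] args) {
        String ks = "my_keyspace"; // hypothetical keyspace
        // 1. New DCs join with RF 0: part of the cluster, but they hold no data.
        System.out.println(alterKeyspace(ks, rf(3, 0, 0, 0)));
        // 2. RF 3:3:3:1: new writes reach every DC; then, on each new node:
        //    nodetool rebuild us-east   (streams the old data)
        System.out.println(alterKeyspace(ks, rf(3, 3, 3, 1)));
        // 3. Once clients use the new DCs: RF 0:3:3:1 before decommissioning the old DC.
        System.out.println(alterKeyspace(ks, rf(0, 3, 3, 1)));
    }
}
```

Raising RF before running `nodetool rebuild` means writes arriving during the rebuild already land in the new DCs. The rebuild then only has to stream the data written before the change.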

MODUS OPERANDI

[Diagram: clients on the old US East DC; replication goes from RF 3 to RF 3:0:0:0 to RF 3:3:3:1 as the US East Realtime, EU West Realtime and US East Analytics DCs are added and rebuilt from the old DC]

MODUS OPERANDI

From 39d8f76d9cae11b4db405f5a002e2a4f6f764b1d Mon Sep 17 00:00:00 2001

From: mario <mario@gumgum.com>

Date: Wed, 17 Jun 2015 14:21:32 -0700

Subject: [PATCH] AT-3576 Start using new Cassandra realtime cluster

---

src/main/java/com/gumgum/cassandra/Client.java | 30 ++++------------------

.../com/gumgum/cassandra/Ec2ClassicTranslater.java | 30 ++++++++++++++--------

src/main/java/com/gumgum/cluster/Cluster.java | 3 ++-

.../resources/applicationContext-cassandra.xml | 13 ++++------

src/main/resources/dev.properties | 2 +-

src/main/resources/eu-west-1.prod.properties | 3 +++

src/main/resources/prod.properties | 3 +--

src/main/resources/us-east-1.prod.properties | 3 +++

.../CassandraAdPerformanceDaoImplTest.java | 2 --

.../asset/cassandra/CassandraImageDaoImplTest.java | 2 --

.../CassandraExactDuplicatesDaoTest.java | 2 --

.../com/gumgum/page/CassandraPageDoaImplTest.java | 2 --

.../cassandra/CassandraVisitorDaoImplTest.java | 2 --

13 files changed, 39 insertions(+), 58 deletions(-)

Start using new Cassandra DCs

MODUS OPERANDI

[Diagram: clients switch to the new US East Realtime and EU West Realtime DCs; replication goes from RF 3:3:3:1 to RF 0:3:3:1]

MODUS OPERANDI

[Diagram: the old US East DC (RF 0:3:3:1) is decommissioned, leaving RF 3:3:1 across US East Realtime, EU West Realtime and US East Analytics]

TIPS

TIPS - Automated maintenance

Maintenance in a multi-region C* cluster:

- Ansible + Cassandra maintenance keyspace + email report = zero human intervention!

CREATE TABLE maintenance.history (
    dc text,
    op text,
    ts timestamp,
    ip text,
    kscf text,  -- column required by history_kscf_idx below
    PRIMARY KEY ((dc, op), ts)
) WITH CLUSTERING ORDER BY (ts ASC) AND
    bloom_filter_fp_chance=0.010000 AND
    caching='{"keys":"ALL", "rows_per_partition":"NONE"}' AND
    comment='' AND
    dclocal_read_repair_chance=0.100000 AND
    gc_grace_seconds=864000 AND
    read_repair_chance=0.000000 AND
    compaction={'class': 'SizeTieredCompactionStrategy'} AND
    compression={'sstable_compression': 'LZ4Compressor'};

CREATE INDEX history_kscf_idx ON maintenance.history (kscf);

gumgum@ip-10-233-133-65:/opt/scripts/production/groovy$ groovy CassandraMaintenanceCheck.groovy -dc us-east-va-realtime -op compaction -e mario@gumgum.com

TIPS - Spark

- Number of Spark workers above the total number of C* nodes in the analytics DC
- Each worker uses:
  - 1/4 of the cores of each instance
  - 1/3 of the total available RAM of each instance
- Cassandra-Spark connector:
  - spanBy
  - .joinWithCassandraTable(:x, :y)
  - spark.cassandra.output.batch.size.bytes
  - spark.cassandra.output.concurrent.writes
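On the r3.2xlarge instances described earlier (8 cores, 60 GB RAM), the worker-sizing rule above works out to 2 cores and 20 GB per worker. Below is a small arithmetic sketch, assuming Spark standalone mode, where these values would go into `SPARK_WORKER_CORES` / `SPARK_WORKER_MEMORY` in `spark-env.sh`. The class and method names are hypothetical.

```java
/** Hypothetical helper applying the deck's worker-sizing rule (1/4 cores, 1/3 RAM). */
public class SparkWorkerSizing {

    /** Cores for one Spark worker: 1/4 of the instance's cores (at least 1). */
    static int workerCores(int instanceCores) {
        return Math.max(1, instanceCores / 4);
    }

    /** Memory in GB for one Spark worker: 1/3 of the instance's RAM. */
    static int workerMemoryGb(int instanceRamGb) {
        return instanceRamGb / 3;
    }

    public static void main(String[] args) {
        // r3.2xlarge figures from the deck: 8 cores, 60 GB RAM.
        System.out.println("SPARK_WORKER_CORES=" + workerCores(8));
        System.out.println("SPARK_WORKER_MEMORY=" + workerMemoryGb(60) + "g");
    }
}
```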

TIPS - spark

import org.apache.spark.SparkConf

val conf = new SparkConf()
  .set("spark.cassandra.connection.host", cassandraNodes) // contact points, defined elsewhere
  .set("spark.cassandra.connection.local_dc", "us-east-va-analytics")
  .set("spark.cassandra.connection.factory", "com.gumgum.spark.bluekai.DirectLinkConnectionFactory") // "translator"
  .set("spark.driver.memory", "4g")
  .setAppName("Cassandra presidential candidates app")

- Create a "translator" if using Ec2MultiRegionSnitch
  - spark.cassandra.connection.factory

Since C* in EU West ...

US West Datacenter!

[Diagram: EU West DC, US East DC, Analytics DC and the new US West DC]

Q&A

GumGum is hiring!

Cassandra Summit 2015 - GumGum

By mario2
