MARTIN SCHUHFUSS

@usefulthink

m.schuhfuss@gmail.com

WebGL Learning Curve

hey, we can do this thing in 3D!

let's find a simpler solution :(

const container = document.querySelector('.container');

const canvas = document.createElement('canvas');

canvas.width = container.offsetWidth;

canvas.height = container.offsetHeight;

container.appendChild(canvas);

const gl = canvas.getContext('webgl');

// ---- setup viewport size

gl.viewport(0, 0, canvas.width, canvas.height);

// ---- clear screen with black

gl.clearColor(0.0, 0.0, 0.0, 1.0);

gl.clear(gl.COLOR_BUFFER_BIT);

// ---- create the shader objects

const vs = gl.createShader(gl.VERTEX_SHADER);

const fs = gl.createShader(gl.FRAGMENT_SHADER);

// ---- upload and compile GLSL shaders

gl.shaderSource(vs, `

attribute vec3 position;

void main() { gl_Position = vec4(position, 1.0); }

`);

gl.shaderSource(fs, `

void main() { gl_FragColor = vec4(1.0, 1.0, 1.0, 1.0); }

`);

gl.compileShader(vs);

gl.compileShader(fs);

let's draw a triangle...

// [... getContext, init viewport and clear]

// [... create and compile shaders]

// ---- link a program out of the two shaders

const shaderProgram = gl.createProgram();

gl.attachShader(shaderProgram, vs);

gl.attachShader(shaderProgram, fs);

gl.linkProgram(shaderProgram);

gl.useProgram(shaderProgram);

// ---- create a buffer with vertex-data

const vertexBuffer = gl.createBuffer();

gl.bindBuffer(gl.ARRAY_BUFFER, vertexBuffer);

const vertices = new Float32Array([

-1.0, 0.0, 0.0,

1.0, 0.0, 0.0,

0.0, 1.0, 0.0

]);

gl.bufferData(gl.ARRAY_BUFFER, vertices, gl.STATIC_DRAW);

// ---- bind the buffer to the vertex-shader attribute

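const positionAttr = gl.getAttribLocation(shaderProgram, "position");

// the attribute array has to be enabled, otherwise nothing is drawn
gl.enableVertexAttribArray(positionAttr);

gl.vertexAttribPointer(positionAttr, 3, gl.FLOAT, false, 0, 0);

// ---- draw it. finally.

// the count is the number of vertices: 9 floats / 3 components each
gl.drawArrays(gl.TRIANGLES, 0, vertices.length / 3);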
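The code above never checks whether compiling or linking actually worked, and WebGL fails silently by default. A minimal sketch of what such checks could look like follows. The helper names `compileShaderChecked` and `linkProgramChecked` are made up for illustration; the `gl.*` calls are the regular WebGL 1 API.

```javascript
// Hypothetical helpers (not part of the original slides).

// Compile a shader and throw with the driver's info log on failure.
function compileShaderChecked(gl, type, source) {
  const shader = gl.createShader(type);
  gl.shaderSource(shader, source);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    const log = gl.getShaderInfoLog(shader);
    gl.deleteShader(shader);
    throw new Error('shader compilation failed: ' + log);
  }
  return shader;
}

// Link a program from two compiled shaders and throw on failure.
function linkProgramChecked(gl, vertexShader, fragmentShader) {
  const program = gl.createProgram();
  gl.attachShader(program, vertexShader);
  gl.attachShader(program, fragmentShader);
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    const log = gl.getProgramInfoLog(program);
    gl.deleteProgram(program);
    throw new Error('program linking failed: ' + log);
  }
  return program;
}
```

With these, a typo in the GLSL source shows up as an exception with a readable message instead of a blank canvas.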

No really, it's just a triangle

WTF?

I think there's a better way to explain 3D rendering

...and we won't need any more code for this

the hard way

Computer graphics and 3D rendering

- almost 50 years of research

- probably one of the best-financed areas of research ($100bn/yr in games, several hundred more in VFX)

- unbelievably huge scientific field: maths, physics, numerics, radiometry, photometry, colorimetry, ...

WHAT IS 3D RENDERING?

use a virtual camera to take pictures of a virtual environment/scene

FIGURE OUT HOW LIGHT BEHAVES AND WHICH LIGHT ENDS UP ON THE CAMERA SENSOR

simulate how photons would bounce around and find those that hit the camera sensor

problem: there are just way too many photons

rendering techniques

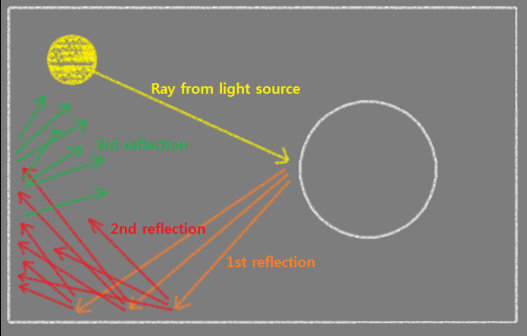

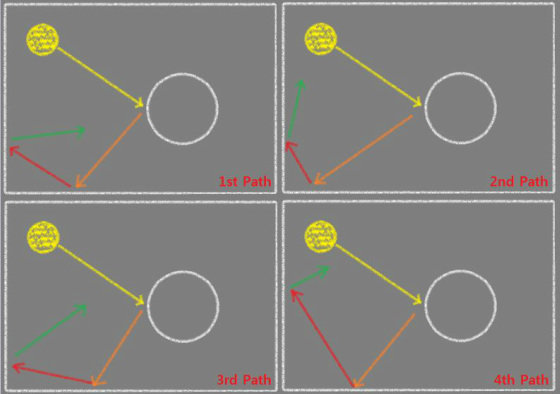

RAYTRACING / PATH-TRACING

http://ilchoi.weebly.com/monte-carlo-path-tracing.html

RAYTRACING / PATH-TRACING

http://docs.chaosgroup.com/display/VRAY2SKETCHUP/Basic+Ray+Tracing

RAYTRACING / PATH-TRACING

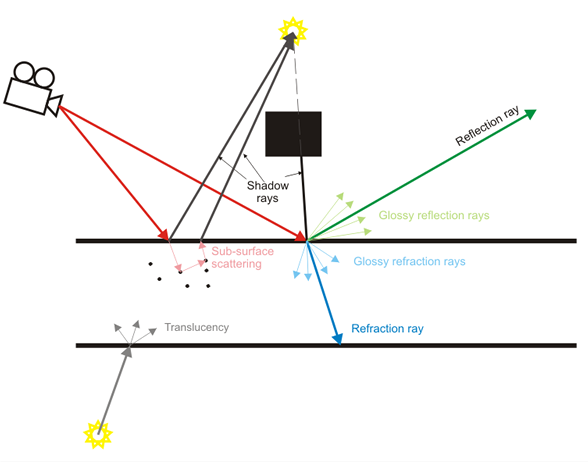

- very accurate rendering

- supports special light behaviors: reflection, refraction, caustics and global illumination

- used by almost everything that doesn't need to be realtime (e.g. VFX in movies or static renderings)

- GPUs are not yet fast enough to do this in realtime

(Toy Story 3: each final frame took 2‑15 hours to render)
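The basic building block of a raytracer is the question "what does this ray hit first?". As a rough sketch, here is that test for a single sphere. The function name and the array-based vectors are illustrative choices, and the ray direction is assumed to be normalized.

```javascript
// Illustrative sketch: names and data layout are assumptions.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];

// Returns the distance along the ray to the nearest hit in front of
// the origin, or null if the ray misses the sphere.
// `dir` is assumed to have length 1.
function intersectSphere(origin, dir, center, radius) {
  const oc = sub(origin, center);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - radius * radius;
  const discriminant = b * b - c;
  if (discriminant < 0) return null; // ray misses the sphere entirely

  const root = Math.sqrt(discriminant);
  const tNear = -b - root;
  const tFar = -b + root;
  // If the origin is inside the sphere, tNear is negative; use tFar.
  const t = tNear > 0 ? tNear : tFar;
  return t > 0 ? t : null;
}

// A ray looking down the negative z-axis at a sphere 5 units away.
console.log(intersectSphere([0, 0, 0], [0, 0, -1], [0, 0, -5], 1)); // 4
```

A path tracer repeats this kind of test for every object, for every bounce, for many rays per pixel, which is where the hours per frame come from.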

WEBGL PATH-TRACING DEMOS

RASTERIZATION / scanline rendering

- used by most realtime applications today

- GPUs are designed for this

- every major game-engine and WebGL implement scanline-rendering

RASTERIZATION / scanline rendering

- objects made from triangles

- triangles are projected onto the screen

- pixel-colors are computed only from data provided for the triangle

MATH

(doesn't hurt, I promise)

(just kidding...)

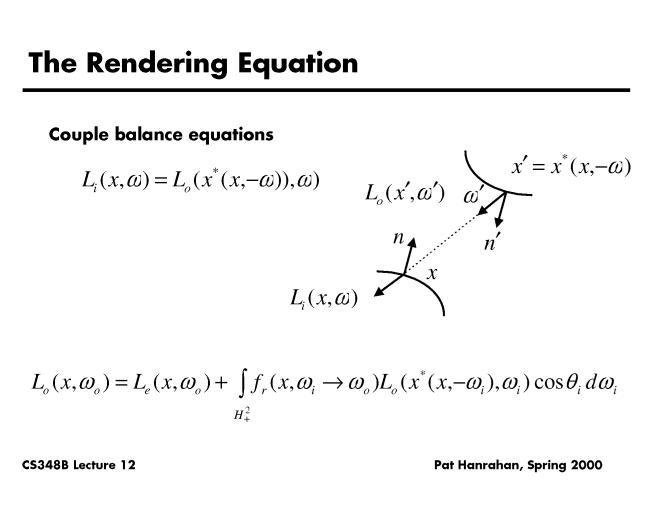

https://graphics.stanford.edu/courses/cs348b-00/lectures/lecture12/walk006.html

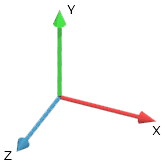

COORDINATE SPACES

- frame of reference for vertices

- a point of origin and three axes (x, y, z)

- "right handed" system

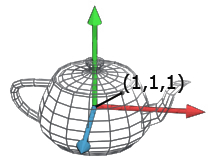

VERTICES

- a vertex describes a point in a coordinate-space

- three components: (x, y, z)

TRANSFORMING VERTICES / MATRIX MULTIPLICATION

- vectors can be transformed (rotation, translation, scaling etc.) using matrix-multiplication

- the matrix is a "recipe" for converting a vector from one coordinate space into another

- they are everywhere (remember css transform: matrix3d(...)?)
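To make the "recipe" idea concrete, here is a small sketch of a matrix-vector multiplication in plain JavaScript. The function names are made up for this example; the column-major layout is the one WebGL and `matrix3d(...)` use.

```javascript
// Illustrative sketch: function names are assumptions, not a library API.

// Multiply a 4x4 matrix (flat array, column-major) with a 4-component vector.
function transformVec4(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// Column-major translation matrix: the offset lives in the last column.
function translation(tx, ty, tz) {
  return [
    1, 0, 0, 0,
    0, 1, 0, 0,
    0, 0, 1, 0,
    tx, ty, tz, 1,
  ];
}

// Column-major scaling matrix: the factors live on the diagonal.
function scaling(sx, sy, sz) {
  return [
    sx, 0, 0, 0,
    0, sy, 0, 0,
    0, 0, sz, 0,
    0, 0, 0, 1,
  ];
}

console.log(transformVec4(translation(2, 0, -5), [1, 1, 1, 1])); // [3, 1, -4, 1]
console.log(transformVec4(scaling(2, 2, 2), [1, 1, 1, 1]));      // [2, 2, 2, 1]
```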

homogeneous coordinates

or, "why are there 4 dimensional coordinates everywhere?"

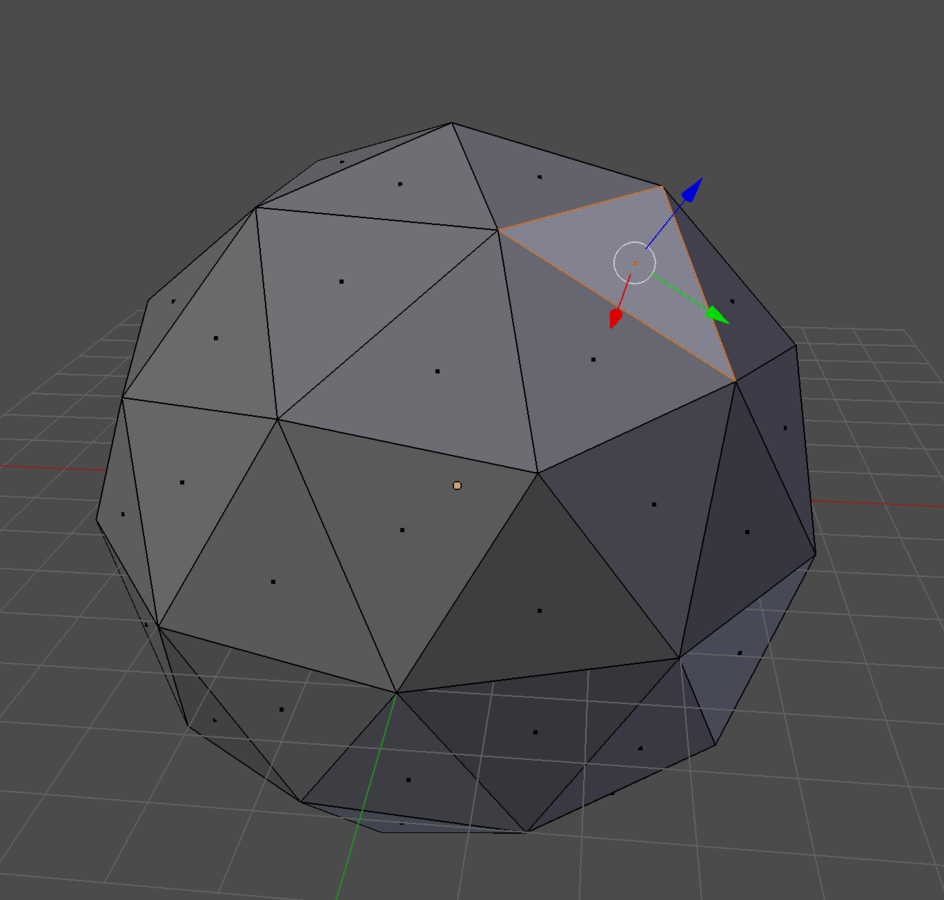
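One practical answer to the "why 4 dimensions" question: the fourth component w decides whether a translation applies. A rough sketch with illustrative helper names, where points carry w = 1 and directions carry w = 0:

```javascript
// Illustrative sketch: helper names are assumptions.

// Same result as multiplying with a 4x4 translation matrix:
// the offset is scaled by the w component of the vector.
function applyTranslation(offset, v) {
  const [x, y, z, w] = v;
  return [x + offset[0] * w, y + offset[1] * w, z + offset[2] * w, w];
}

// Convert homogeneous coordinates back to plain 3D by dividing by w.
function toCartesian([x, y, z, w]) {
  return [x / w, y / w, z / w];
}

const point = [1, 2, 3, 1];     // w = 1: a position
const direction = [0, 1, 0, 0]; // w = 0: a direction

console.log(applyTranslation([10, 0, 0], point));     // [11, 2, 3, 1]
console.log(applyTranslation([10, 0, 0], direction)); // [0, 1, 0, 0]
console.log(toCartesian([2, 4, 6, 2]));               // [1, 2, 3]
```

The division by w in `toCartesian` is the same division that later produces the perspective effect.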

GEOMETRIES

- defined using triangles* / faces

- all faces together form the object-surface

- vertices for all triangles are defined in object-space

* some 3D APIs (e.g. legacy OpenGL) also allow quads as primitives; WebGL only draws points, lines and triangles

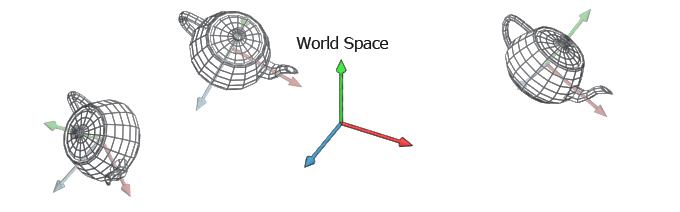

OBJECTS / Meshes

- created from a geometry and material-information

- provide the coordinate space used by the geometry (object-space)

- manage transforms relative to world-space

- hierarchical: objects can contain other objects that are then relative to the parent object's coordinate space
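The hierarchy can be sketched in a few lines: every object stores a matrix relative to its parent, and its world matrix is the parent's world matrix multiplied with its own. The `SceneObject` class below is a made-up minimal version, not the API of any particular library.

```javascript
// Illustrative sketch: class and function names are assumptions.

// Multiply two 4x4 matrices (flat arrays, column-major): result = a * b.
function multiply(a, b) {
  const out = new Array(16).fill(0);
  for (let col = 0; col < 4; col++) {
    for (let row = 0; row < 4; row++) {
      for (let k = 0; k < 4; k++) {
        out[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
      }
    }
  }
  return out;
}

function translation(tx, ty, tz) {
  return [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, tx, ty, tz, 1];
}

class SceneObject {
  constructor(name, localMatrix) {
    this.name = name;
    this.localMatrix = localMatrix; // transform relative to the parent
    this.parent = null;
    this.children = [];
  }

  add(child) {
    child.parent = this;
    this.children.push(child);
    return child;
  }

  // Object-space to world-space: walk up the parent chain.
  worldMatrix() {
    return this.parent
      ? multiply(this.parent.worldMatrix(), this.localMatrix)
      : this.localMatrix;
  }
}

const scene = new SceneObject('scene', translation(0, 0, 0));
const car = scene.add(new SceneObject('car', translation(10, 0, 0)));
const wheel = car.add(new SceneObject('wheel', translation(1, 0, 2)));

const m = wheel.worldMatrix();
console.log(m[12], m[13], m[14]); // 11 0 2
```

Moving the car moves the wheel with it, without touching the wheel's own matrix.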

SCENE-GRAPH

- root-object - parent to all rendered objects

- hosts the global coordinate space (world space)

- also contains the camera and lights, which are special types of objects

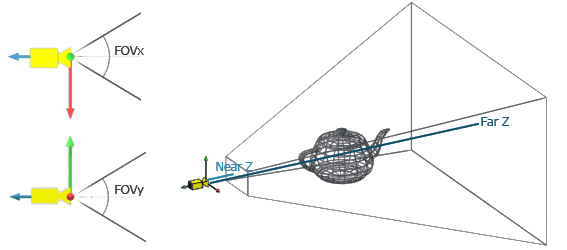

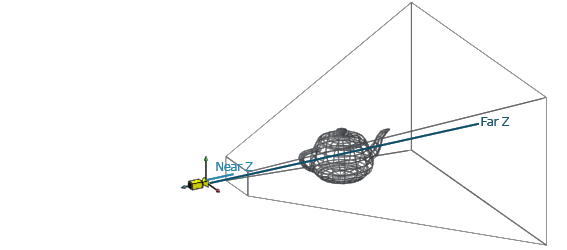

Camera

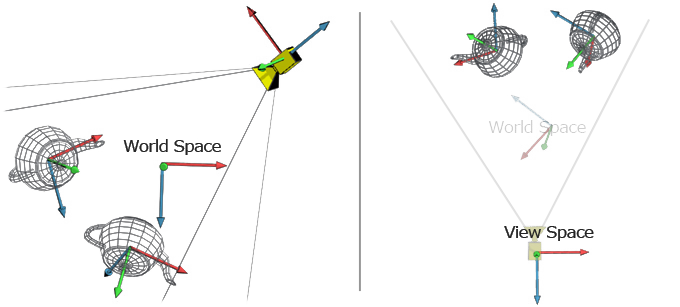

- just another object with its own coordinate space (view-space)

- is positioned/oriented in the scene

- "field of view"-angles (fov), near- and far-plane define the view-frustum

model-, world- AND view-Space

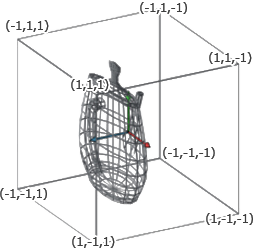

PERSPECTIVE TRANSFORM

- vertices are transformed from world-space into view-space

- multiplying with a special projection-matrix transforms coordinates into the canonical view volume or clip-space

- after dividing by w, everything outside the range [-1, 1] is invisible to the camera and will be clipped
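A sketch of what such a projection does, in plain JavaScript. The matrix below follows the common OpenGL-style convention (camera looks down the negative z-axis); the function names are made up for this example. Real GPUs clip in clip-space before dividing by w; testing after the division is a simplification.

```javascript
// Illustrative sketch: function names are assumptions.

// OpenGL-style perspective matrix (flat array, column-major).
function perspective(fovY, aspect, near, far) {
  const f = 1 / Math.tan(fovY / 2);
  return [
    f / aspect, 0, 0, 0,
    0, f, 0, 0,
    0, 0, (far + near) / (near - far), -1,
    0, 0, (2 * far * near) / (near - far), 0,
  ];
}

function transformVec4(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// Perspective divide: clip-space to normalized device coordinates.
function toNdc(clip) {
  return [clip[0] / clip[3], clip[1] / clip[3], clip[2] / clip[3]];
}

// Visible only if all three coordinates are within [-1, 1].
function isInsideViewVolume(ndc) {
  return ndc.every((c) => c >= -1 && c <= 1);
}

const projection = perspective(Math.PI / 3, 16 / 9, 1, 100);
const inFront = toNdc(transformVec4(projection, [0, 0, -10, 1]));
const tooClose = toNdc(transformVec4(projection, [0, 0, -0.5, 1]));

console.log(isInsideViewVolume(inFront));  // true
console.log(isInsideViewVolume(tooClose)); // false (in front of the near-plane)
```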

The rendering algorithm

- project all vertices into canonical view volume

- assemble triangles from sets of three vertices

- rasterize triangles

- compute the color-value for every fragment

- blend color-value with value stored in frame-buffer for the pixel
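The last step, blending, can be sketched as follows. This shows the common "source over" blend, the result you would get for the color channels with `gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA)`; the function name is made up, and without blending enabled the new color simply replaces the stored one.

```javascript
// Illustrative sketch: the function name is an assumption.

// Blend a fragment color [r, g, b, a] over the RGB color already
// stored in the frame-buffer. All channels are in the range 0..1.
function blendSourceOver(fragmentColor, storedColor) {
  const alpha = fragmentColor[3];
  return [
    fragmentColor[0] * alpha + storedColor[0] * (1 - alpha),
    fragmentColor[1] * alpha + storedColor[1] * (1 - alpha),
    fragmentColor[2] * alpha + storedColor[2] * (1 - alpha),
  ];
}

// Half-transparent red over blue.
console.log(blendSourceOver([1, 0, 0, 0.5], [0, 0, 1])); // [0.5, 0, 0.5]
```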

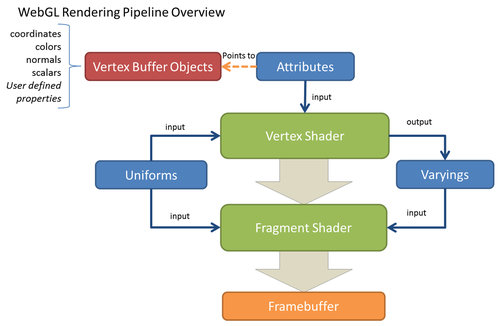

WebGL rendering pipeline

primitive assembly

rasterization

color-blending

VERTEX-SHADER

- computes the clip-space coordinates

- vertices (object-space coordinates) and other per-vertex data (colors, texture-coordinates, ...) are passed in as attributes

- constants can be passed as uniforms

- can declare special variables (varying) for the fragment-shader

The vertex-shader is run on the GPU for each and every vertex, without any information about other vertices.

PRIMITIVE ASSEMBLY / Rasterization

- every sequence of three vertices forms a triangle*

- those triangles are then rasterized, or divided based on where exactly display-pixels are covered by the triangle

- these affected pixels are called fragments

* there are other rendering-modes like triangle-strips and fans, but those are ignored here
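What the rasterizer does can be approximated in a few lines of JavaScript. This is a simplified sketch using edge functions on pixel centers; the function names are made up, and real GPUs add details such as fill rules for shared edges that are left out here.

```javascript
// Illustrative sketch: function names are assumptions.

// Signed area test: tells on which side of the edge a -> b the point p lies.
function edge(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);
}

// Returns one fragment per pixel whose center is covered by the triangle.
// Vertices are given in pixel coordinates as [x, y].
function rasterize(v0, v1, v2, width, height) {
  const fragments = [];
  const area = edge(v0, v1, v2);
  if (area === 0) return fragments; // degenerate triangle covers nothing

  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const p = [x + 0.5, y + 0.5]; // sample at the pixel center
      const w0 = edge(v1, v2, p);
      const w1 = edge(v2, v0, p);
      const w2 = edge(v0, v1, p);
      const inside = area > 0
        ? w0 >= 0 && w1 >= 0 && w2 >= 0
        : w0 <= 0 && w1 <= 0 && w2 <= 0;
      if (inside) {
        // Barycentric weights: used to interpolate per-vertex data.
        fragments.push({ x, y, weights: [w0 / area, w1 / area, w2 / area] });
      }
    }
  }
  return fragments;
}

const fragments = rasterize([0, 0], [4, 0], [0, 4], 4, 4);
console.log(fragments.length); // 10
```

The weights are what the GPU uses to interpolate the varying values between the three vertices before handing them to the fragment-shader.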

FRAGMENT-SHADER

- can only access the varying-values defined by the vertex-shader and the uniforms defined for this drawcall

- implements lighting equations and various forms of texturing

The fragment-shader is run on the GPU once for every fragment of every triangle, and outputs a color-value for the fragment.

Physical MATERIALS

- absorption: parts of the spectrum of incoming light are absorbed

- reflection: an incoming light-ray is bounced off the surface

- refraction: the light-ray slightly changes its direction and continues its path through the object

Materials describe the optical properties of stuff objects are made of and how they interact with light-sources.

MATERIALS

- hold all the information required to calculate the color for a given fragment

- any object needs to have at least one material to be rendered.

- there can be up to one material per triangle

SHADING-MODELS

- Basic / Uniform: always the same color, regardless of light-influence

- Lambert: very simple shading-equation, only diffuse reflection.

- Blinn-Phong: most commonly used, computes diffuse and specular reflection.

- ...and a lot more.

The shading-model is the rendering-equation used to compute the fragment-color from material-properties and light-sources.
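For illustration, here are the Lambert and Blinn-Phong terms written as plain JavaScript instead of GLSL. This is a sketch for a single light; the function names and the shape of the `material` object are assumptions made for this example.

```javascript
// Illustrative sketch: names and the material layout are assumptions.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (v) => {
  const length = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / length, v[1] / length, v[2] / length];
};

// Lambert: brightness depends only on the angle between
// the surface normal and the direction towards the light.
function lambert(normal, lightDir) {
  return Math.max(dot(normalize(normal), normalize(lightDir)), 0);
}

// Blinn-Phong specular term: uses the half-vector between
// the light direction and the view direction.
function blinnPhongSpecular(normal, lightDir, viewDir, shininess) {
  const n = normalize(normal);
  const l = normalize(lightDir);
  const v = normalize(viewDir);
  if (dot(n, l) <= 0) return 0; // light is behind the surface
  const h = normalize([l[0] + v[0], l[1] + v[1], l[2] + v[2]]);
  return Math.pow(Math.max(dot(n, h), 0), shininess);
}

// Combine both terms into an RGB color, clamped to 0..1.
function shade(material, normal, lightDir, viewDir) {
  const diffuse = lambert(normal, lightDir);
  const specular = blinnPhongSpecular(normal, lightDir, viewDir, material.shininess);
  return material.diffuseColor.map((channel, i) =>
    Math.min(1, channel * diffuse + material.specularColor[i] * specular));
}

const red = { diffuseColor: [1, 0, 0], specularColor: [1, 1, 1], shininess: 32 };
console.log(shade(red, [0, 0, 1], [0, 1, 1], [0, 0, 1]));
```

In WebGL the same math runs in the fragment-shader, once per fragment.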

TEXTURE-MAPS

- use images (textures) to add detail to objects by controlling material-parameters with image-data

- most common: control the diffuse color of the material (diffuse-mapping)

- other uses: bump-mapping, environment-mapping, alpha-mapping, ...

- UV-mapping: mapping the 2D image onto the object's geometry
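A texture lookup itself is not much more than an array access. Here is a rough sketch of nearest-neighbor sampling, assuming a texture stored as a flat RGBA byte array with (u, v) = (0, 0) at the first pixel; the function name and texture layout are made up for this example.

```javascript
// Illustrative sketch: function name and texture layout are assumptions.

// Look up the texel closest to the given UV coordinate (each in 0..1).
// texture.data holds RGBA bytes, row by row.
function sampleNearest(texture, u, v) {
  const { width, height, data } = texture;
  const x = Math.min(width - 1, Math.max(0, Math.floor(u * width)));
  const y = Math.min(height - 1, Math.max(0, Math.floor(v * height)));
  const i = (y * width + x) * 4;
  return [data[i], data[i + 1], data[i + 2], data[i + 3]];
}

// A 2x2 checkerboard: white, black / black, white.
const checker = {
  width: 2,
  height: 2,
  data: new Uint8Array([
    255, 255, 255, 255,   0, 0, 0, 255,
    0, 0, 0, 255,         255, 255, 255, 255,
  ]),
};

console.log(sampleNearest(checker, 0.25, 0.25)); // [255, 255, 255, 255]
console.log(sampleNearest(checker, 0.75, 0.25)); // [0, 0, 0, 255]
```

For diffuse-mapping, the returned color would replace the constant diffuse color of the material for that fragment.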

QUESTIONS?

please feel free to contact me later for chatting or questions...

FIND ME OUTSIDE FOR QUESTIONS AND COMMENTS

(I probably ran out of time by now...)

THANKS FOR LISTENING!

welcome to 3d – jsunconf

By Martin Schuhfuss
