20th of November, 2024

presentation by Matias Guijarro, AWI dept


Control System Comparison and Perspectives

SLS 2.0 Beamline Scientist Expert Group

About the speaker

Software Engineer

20-year career at ESRF, Beamline Control Unit

BLISS project responsible: 2017-2023

On sabbatical leave since January 2024

Joined BEC Development Team at Science IT Infrastructure and Services Department, PSI

Breaking news

January, 2025: will start at

Talk outline

Control System comparison

- Architecture

- Technical choices

- Configuration

- Scans

- Graphical Interfaces

- Data Saving

- Data Management

- Data Processing

Perspectives

- Symbiosis

- Introducing Blissdata

- Proposal

Control systems comparison

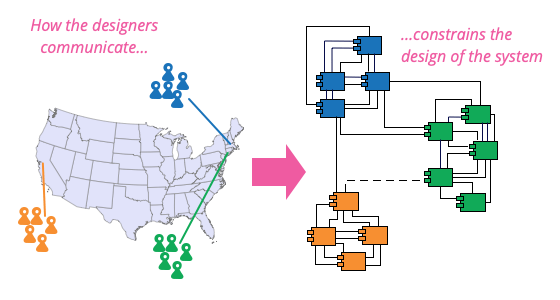

Conway's law

From "How Do Committees Invent?" (1968)

Organizations which design systems [...] are constrained to produce designs which are copies of the communication structures of these organizations.

Melvin Conway (photos: circa 1968 and more recently)

Architecture comparison

VS.

Architecture


Main components running within the same process (think of SPEC)

Hardware control is also part of it, mostly as direct connection or through

Architecture

Device Server

Scan Server

Scan Bundler Service

Scihub Service

BEC shell

BEC core library

Main components running as services (multiple processes)

Hardware control is delegated to Ophyd (Bluesky project), mostly through

Written in Python

I/O based on gevent, cooperative multi-tasking

Technical choices

Built-in direct hardware control, or

Centralized architecture

ptpython shell

Written in Python

Services-oriented architecture

Multi-threading

Devices control via Ophyd (Bluesky)

Buffer and settings DB

Buffer and message broker

IPython shell

Qt graphical interfaces

Qt and web graphical interfaces

Human-readable, editable YAML configuration (both)

HDF5 file saving format (both)

Scan API comparison

framework

library

Scan stubs

A scan class must be written, with the methods:

open, stage, pre_scan, core loop, complete, unstage, close

Maximum flexibility
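The stage ordering listed above can be illustrated with a minimal sketch, assuming each stage is a generator of instructions that a scan server would execute. The names `ScanSketch`, `StepScan`, `core_loop`, the instruction strings and the motor name `samx` are hypothetical and are not the BEC scan API; the point is only that the framework fixes the order of the stages while each scan overrides the ones it needs.

```python
from typing import Iterator, List


class ScanSketch:
    """Hypothetical scan base class; each stage yields instructions."""

    def open(self) -> Iterator[str]:
        yield "open_scan"

    def stage(self) -> Iterator[str]:
        yield "stage_devices"

    def pre_scan(self) -> Iterator[str]:
        yield "pre_scan"

    def core_loop(self) -> Iterator[str]:
        raise NotImplementedError  # every concrete scan defines its own loop

    def complete(self) -> Iterator[str]:
        yield "complete_devices"

    def unstage(self) -> Iterator[str]:
        yield "unstage_devices"

    def close(self) -> Iterator[str]:
        yield "close_scan"

    def run(self) -> Iterator[str]:
        # The order of the stages is fixed; subclasses override single stages.
        for step in (self.open, self.stage, self.pre_scan, self.core_loop,
                     self.complete, self.unstage, self.close):
            yield from step()


class StepScan(ScanSketch):
    """Hypothetical step scan moving one motor through fixed positions."""

    def __init__(self, motor: str, positions: List[float]):
        self.motor = motor
        self.positions = positions

    def core_loop(self) -> Iterator[str]:
        for pos in self.positions:
            yield f"move {self.motor} {pos}"
            yield "trigger"
            yield "read"


steps = list(StepScan("samx", [0.0, 1.0]).run())
# steps[:6] == ["open_scan", "stage_devices", "pre_scan",
#               "move samx 0.0", "trigger", "read"]
```

Writing scans as plain code in this style is what gives the "maximum flexibility": any stage can be replaced, at the cost of each scan author having to write a class.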

Acquisition chain (tree)

Node devices trigger acquisition

Leaf node devices take data

Channels push data to Redis

Core loop is always the same
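The acquisition chain can be pictured as a tree walked once per point. A minimal sketch, assuming plain Python objects: `Node`, `Leaf`, `run_chain` and the device names are hypothetical, not the BLISS classes, and a dictionary stands in for the channels that would push data to Redis.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """Hypothetical node device: triggers acquisition on its children."""
    name: str
    children: List["Node"] = field(default_factory=list)

    def trigger(self, point: int, channels: Dict[str, List[float]]) -> None:
        for child in self.children:
            child.trigger(point, channels)


@dataclass
class Leaf(Node):
    """Hypothetical leaf device: takes data when it is triggered."""
    gain: float = 1.0

    def trigger(self, point: int, channels: Dict[str, List[float]]) -> None:
        # Fake reading proportional to the point index; a real leaf device
        # would read its hardware here and push to its channel.
        channels.setdefault(self.name, []).append(self.gain * point)


def run_chain(root: Node, npoints: int) -> Dict[str, List[float]]:
    # The core loop never changes: only the tree (the configuration) does.
    channels: Dict[str, List[float]] = {}
    for point in range(npoints):
        root.trigger(point, channels)
    return channels


# Hypothetical chain: a timer triggering a diode and, via a multiplexer, a counter.
chain = Node("timer", [Leaf("diode", gain=2.0), Node("mux", [Leaf("counter")])])
data = run_chain(chain, 3)
# data == {"diode": [0.0, 2.0, 4.0], "counter": [0.0, 1.0, 2.0]}
```

Here the scan behaviour comes from the shape of the tree rather than from code, which matches the "configuration" side of the comparison.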

configuration

code

Graphical User Interfaces

"one size fits all" online visualization

Has to be used with the BLISS shell:

Region of Interest selection, basic user interaction

No scan launching, no device tweaking (motors...), no parameter forms

Flint

Daiquiri

Custom applications

Developed by engineers, adapted for the beamline technique/experiments

General concepts: device tweaking, scan launching, queuing, forms, logging, etc.

ESRF GUI strategy: towards web apps

Graphical User Interfaces

Blissterm: enhanced web shell

(Diagram: blissterm server with ptpython interpreters and an API; blissterm web application built on xterm.js, the Daiquiri UI components library and application logic; Socket.IO; blissdata)

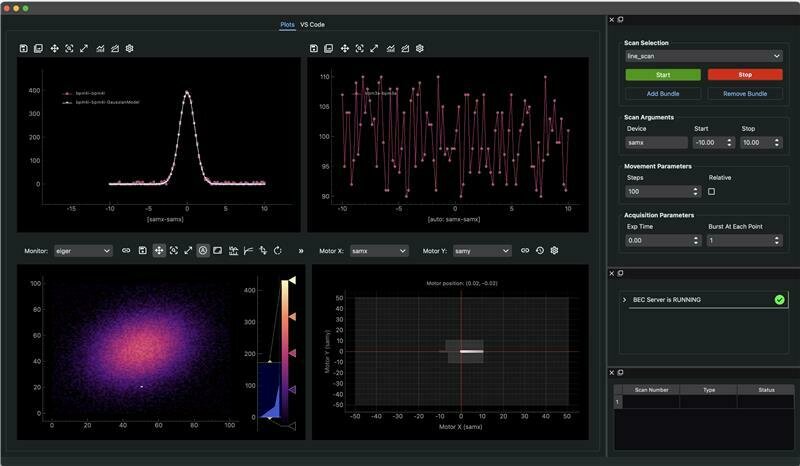

BEC Graphical User Interfaces

Complete set of widgets and helpers to build advanced applications

BEC Widgets

BEC Designer, to quickly build applications with BEC widgets

Enable developers or power users to make their own UI with reusable components, connected to the control system

General-purpose applications, e.g. beamline alignment

BEC Graphical User Interfaces

BEC dock areas bring a better user experience, allowing users to arrange widgets as they like

Data saving comparison

Both systems run writers as external processes that take data and metadata from the acquisition and write NeXus-compliant files

The ESRF NeXus flavour is intended to be compatible with the European consensus (ALBA, DESY, MAX IV, SOLEIL, ...)

BLISS does not implement NeXus application definitions yet

At the ESRF, the file structure is unified at the facility level; conversion to other formats can happen if absolutely necessary

The BEC file structure can be adapted through plugins

Data saving: note about HDF5 limitations

Current SWMR (Single Writer Multiple Reader) mode has shortcomings:

- While writing in SWMR mode, HDF5 groups, datasets, attributes and links cannot be created

- Only POSIX-compliant file systems are supported, which excludes network file systems such as NFS

- SWMR is only supported from HDF5 1.10.0 onward; MATLAB and IDL are often stuck on older HDF5 versions (typically 1.8.x)

- A SWMR reader has to wait until the writer enables SWMR mode before it can open the file

HDF5 is best for archiving data; it is not well suited to reading while writing

Writers should lock the files they write; readers should disable locking and retry reading when a read fails or segfaults:

- the silx library provides utility functions for this

- h5recover can sometimes save corrupted files (worst case!)
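The "retry when a read fails" advice can be sketched as a small decorator, assuming the read function re-opens the file on each attempt so that a half-written state seen once does not stick. `retry_read` is a hypothetical helper, not the silx utility; the commented h5py usage assumes h5py 3.5 or later for the `locking` argument, and the dataset path is made up. A segfault cannot be caught this way: that would require running the read in a separate process.

```python
import functools
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_read(retries: int = 5, delay: float = 0.1,
               errors: Tuple[Type[BaseException], ...] = (OSError, KeyError),
               ) -> Callable[[Callable[..., T]], Callable[..., T]]:
    """Retry a read function up to `retries` times when it raises `errors`."""
    if retries < 1:
        raise ValueError("retries must be at least 1")

    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(1, retries + 1):
                try:
                    return func(*args, **kwargs)
                except errors:
                    if attempt == retries:
                        raise  # give up: propagate the last error
                    time.sleep(delay)  # give the writer time to progress
            raise AssertionError("unreachable")
        return wrapper
    return decorator


# Possible use with h5py (hypothetical dataset path), reader not locking:
#
# @retry_read(retries=10, delay=0.5)
# def read_frame(path, index):
#     with h5py.File(path, "r", locking=False) as f:
#         return f["entry/data"][index]
```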

Users can interact with the ESRF Data Portal directly from the BLISS command line

Proposal

Datasets

Metadata

- acquisition parameters

- sample parameters

Gallery

- images and plots associated with a dataset

Tape interface

newcollection("sample1")

Data management

IH-LS-3167: elemental distributions in microalgae

BLISS commands to submit comments, plots, execution output, logs

elog_add("...")

Automatic notifications from the control system (in case of errors, beamline events, ...)

Manually editable from the Data Portal, and searchable

Data management


The Scilog API can be called to push entries to the electronic logbook

(no high-level shell command or automatic mechanism yet)

SciCat integration can be done as for SwissFEL, with automatic archiving after each experiment

Data Processing

Processing data is hard and takes time!

What processing do I need to do?

Where is my data?

What software do I need to install? And how?

There is too much data!

I will never be able to process it all!

Oh god, I forgot to apply the mask!

The software is not working and the PhD student/postdoc who wrote it has left!

Data Processing

How can we improve the data processing experience, and apply processing as early as possible?

- To make informed decisions during acquisition

- To provide users with tools to help/encourage further data analysis

i.e., to go from this...

to this:

Data Processing Workflows

Workflows are data processing pipelines composed of several steps (tasks)

EWOKS project: Esrf WOrKflowS

Tasks can be rearranged or reused in other workflows without deep knowledge of the task content

Acquisition control

Execute workflow on

- local machines (immediate feed)

- Slurm nodes

Upload results to the Data Portal

Persist results for further analysis

Visualization, feedback
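To make "pipelines composed of tasks" concrete, here is a minimal sketch of a dependency-ordered task runner built on Python's standard `graphlib` (3.9+). It is not the EWOKS API: `run_workflow`, the `links` format and the task names are hypothetical, and everything runs locally in one process (dispatching to Slurm nodes is out of scope). Because each task only sees the inputs wired to it, the same task can be reconnected in another workflow without touching its code.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict


def run_workflow(tasks: Dict[str, Callable[..., Any]],
                 links: Dict[str, Dict[str, str]]) -> Dict[str, Any]:
    """Run `tasks` in dependency order and return every task's result.

    links[task] maps each keyword argument of `task` to the name of the
    upstream task whose result feeds it.
    """
    graph = {name: set(links.get(name, {}).values()) for name in tasks}
    results: Dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        kwargs = {arg: results[src] for arg, src in links.get(name, {}).items()}
        results[name] = tasks[name](**kwargs)
    return results


# Hypothetical tasks: load raw values, mask outliers, integrate.
tasks = {
    "load": lambda: [1.0, 5.0, 3.0, 7.0],
    "mask": lambda data: [v for v in data if v < 6.0],
    "integrate": lambda data: sum(data),
}
results = run_workflow(tasks, {"mask": {"data": "load"},
                               "integrate": {"data": "mask"}})
# results["integrate"] == 9.0

# Reuse "integrate" unchanged, wired directly to the raw data this time.
raw = run_workflow({"load": tasks["load"], "integrate": tasks["integrate"]},
                   {"integrate": {"data": "load"}})
# raw["integrate"] == 16.0
```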

EWOKS workflow example

Raw parameter space

Workflow based on xraylarch

Parameter space in which scientific decisions are made

EXAFS visualization

Data processing

Live fitting of data using LMFIT

Plugins for BEC DAP can be written for more advanced processing
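As an illustration of refitting while points arrive, the sketch below fits a Gaussian peak after every new point. It deliberately swaps LMFIT's nonlinear least squares for a simpler closed-form technique (Caruana's method: a least-squares parabola fitted to ln(y)), so that it needs only the standard library. `fit_gaussian` is a hypothetical helper, not part of BEC DAP, and this method is more sensitive to noise in the peak tails than a proper nonlinear fit.

```python
import math
from typing import List, Tuple


def fit_gaussian(xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """Return (amplitude, center, sigma) using Caruana's method.

    ln(y) = a + b*x + c*x**2 is linear in (a, b, c), so an ordinary
    least-squares parabola through ln(y) gives the Gaussian parameters.
    Only samples with y > 0 are used.
    """
    pts = [(x, math.log(y)) for x, y in zip(xs, ys) if y > 0]
    if len(pts) < 3:
        raise ValueError("need at least 3 positive samples")
    s = [sum(x ** k for x, _ in pts) for k in range(5)]
    t = [sum(x ** k * ly for x, ly in pts) for k in range(3)]
    # Augmented normal-equation matrix for the unknowns (a, b, c).
    m = [[s[0], s[1], s[2], t[0]],
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    for col in range(3):  # Gaussian elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    coef = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        coef[r] = (m[r][3] - sum(m[r][k] * coef[k] for k in range(r + 1, 3))) / m[r][r]
    a, b, c = coef
    if c >= 0:
        raise ValueError("data does not look like a peak")
    center = -b / (2 * c)
    sigma = math.sqrt(-1 / (2 * c))
    amplitude = math.exp(a - b * b / (4 * c))
    return amplitude, center, sigma


# Simulated live scan of a noise-free peak: refit each time a point arrives.
xs: List[float] = []
ys: List[float] = []
for i in range(21):
    x = -5.0 + 0.5 * i
    xs.append(x)
    ys.append(10.0 * math.exp(-(x - 1.0) ** 2 / (2 * 1.5 ** 2)))
    if len(xs) >= 3:
        amplitude, center, sigma = fit_gaussian(xs, ys)
```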

Perspectives

Symbiosis

In which areas could BEC and BLISS benefit from each other?

Where is collaborating worthwhile? ("return on investment")

What would be beneficial for the control system users?

BLISS relies on blissdata to publish acquisition data to Redis

Introducing Blissdata

blissdata is a separate package with limited dependencies, easy to integrate in any Python project

Blissdata publishes data (producer)

Blissdata can also retrieve previously published data (consumers)

Supporting technology: Redis, with the RedisJSON and RediSearch extensions

Blissdata defines a model to represent scans, and provides acquisition streams to push data
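The producer/consumer pattern described above can be sketched with an in-memory stand-in for a Redis stream: an append-only log where each entry gets an increasing id, so a consumer can read everything already published, or only what arrived after the last id it saw (the role played by the Redis XADD and XREAD commands). `Stream`, `Scan`, the scan name and the channel names are hypothetical; this is not the blissdata API.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Tuple


@dataclass
class Stream:
    """In-memory stand-in for a Redis stream (append-only, id-indexed)."""
    entries: List[Tuple[int, Any]] = field(default_factory=list)
    _counter: Iterator[int] = field(default_factory=itertools.count, repr=False)

    def add(self, value: Any) -> int:
        # Producer side, comparable to Redis XADD.
        entry_id = next(self._counter)
        self.entries.append((entry_id, value))
        return entry_id

    def read(self, after: int = -1) -> List[Tuple[int, Any]]:
        # Consumer side, comparable to Redis XREAD from a last-seen id.
        return [(i, v) for i, v in self.entries if i > after]


@dataclass
class Scan:
    """Hypothetical scan model: metadata plus one stream per channel."""
    name: str
    info: Dict[str, Any]
    streams: Dict[str, Stream] = field(default_factory=dict)

    def stream(self, channel: str) -> Stream:
        return self.streams.setdefault(channel, Stream())


scan = Scan("ascan_1", {"motor": "samx", "npoints": 3})
diode = scan.stream("diode")
for value in (0.1, 0.4, 0.2):
    diode.add(value)

late = [v for _, v in diode.read()]  # late consumer: everything published
last_id = 1                          # live consumer already saw ids 0 and 1
new = diode.read(after=last_id)      # only the newer entries
```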

Blissdata model

For big data (mainly 2D), only references are published

Pushing and retrieving data

Two cases when pushing data:

1. Data can be shipped with the event: 0D, 1D and small 2D data go directly into the Redis stream

2. Data is too large, or is stored by the acquisition device in its own file: JSON events are emitted, and a Blissdata plugin is needed to reference (and de-reference) the data

Retrieving data:

- ideally from Redis: circumvents the HDF5 problems, no file I/O

- for referenced data, the corresponding Blissdata plugin determines what to do

- h5py-like API, transparent access to data
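The two pushing cases and the plugin-based de-referencing can be sketched as follows. Everything here is hypothetical: the event fields, the `MAX_INLINE_ITEMS` threshold, the `RESOLVERS` registry, the file path and the dictionary standing in for a detector file only illustrate the idea; they are not the blissdata event format or plugin interface.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical threshold: data with more elements than this is referenced.
MAX_INLINE_ITEMS = 1024

# Hypothetical plugin registry: one resolver per kind of reference.
RESOLVERS: Dict[str, Callable[[Dict[str, Any]], Any]] = {}


def encode_event(channel: str, index: int, data: List[Any],
                 file_path: str, dataset: str) -> str:
    """Build a JSON event: data inline if small, a reference otherwise."""
    if len(data) <= MAX_INLINE_ITEMS:
        payload = {"kind": "inline", "data": data}  # case 1: in the stream
    else:
        # Case 2: the data lives in the device's own file; only a pointer
        # travels through the stream.
        payload = {"kind": "reference", "plugin": "file",
                   "file": file_path, "dataset": dataset}
    return json.dumps({"channel": channel, "index": index, **payload})


def decode_event(raw: str) -> Any:
    event = json.loads(raw)
    if event["kind"] == "inline":
        return event["data"]
    return RESOLVERS[event["plugin"]](event)  # plugin de-references the data


# A dictionary stands in for the detector file in this sketch.
FAKE_FILES = {("/data/scan1.h5", "entry/frames"): {0: "frame-0"}}
RESOLVERS["file"] = lambda ev: FAKE_FILES[(ev["file"], ev["dataset"])][ev["index"]]

small = encode_event("diode", 0, [0.1, 0.2], "/data/scan1.h5", "entry/frames")
big = encode_event("detector", 0, [0] * 2048, "/data/scan1.h5", "entry/frames")
```

A consumer calling `decode_event` gets the same kind of access in both cases, which is the "transparent access" idea: only the plugin knows where referenced data actually lives.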

Start of a collaboration around Blissdata

The ESRF relies on Blissdata to give all its sub-systems access to acquisition data: archiving, display, processing, ...

There are ongoing initiatives at ALBA and DESY to bring Blissdata to their existing control systems

- interoperability with ESRF tools

There are even some attempts at converting Bluesky documents to the Blissdata model

Proposal

Device Server

Scan Server

blissdata

PyMca: a tool designed to assist with XRF data analysis and beyond

Publishing BEC scans with blissdata would give access to the ESRF HDF5 writer, display and processing tools

Ending remarks

Through the eyes of a visitor...

French political thinker from the Age of Enlightenment

Fictional letters exchanged between Persian travelers and their friends to offer a satirical and critical perspective on French society, politics, and culture, exposing its contradictions and absurdities through the eyes of outsiders.

Through the eyes of a visitor...

Absence of a dedicated Product Owner for BEC

Product Owner roles:

- define the product vision and its alignment with business goals

- manage the backlog: priorities & features

- communicate with stakeholders, gather feedback, ensure the development team understands needs

Through the eyes of a visitor...

Lack of unified 2D detector control software: a challenge for integration

- AreaDetector, camera server, std-daq, Jungfraujoch

- 4 APIs, leading to inconsistencies in user experience and integration workflows

- Hard to ensure compatibility between systems

Through the eyes of a visitor...

Absence of a dedicated Integrator role: a coordination gap

- Separation of responsibilities between BEC developers and control engineers

- While each group performs its tasks effectively, no dedicated role ensures seamless integration between hardware and software components

- The task is taken on by one group or the other, or by the beamline scientist, which can lead to misalignments, delays, and inefficiencies

Through the eyes of a visitor...

Technical responsibilities of Beamline Scientists

- A broad range of technical responsibilities falls on SLS beamline scientists, such as creating data acquisition sequences and navigating the complexity of the control system

Disclaimer: gut feeling, after 20 years at the ESRF

- This approach fosters expertise, autonomy, and flexible solutions, but can also divert scientists from their primary focus on research and beamline optimization

Conclusion


PSI is a fantastic workplace, with a very stimulating environment

I am very grateful to have had the opportunity to participate in the development of BEC, and to have worked with such talented developers and committed professionals

Most features are there, but there is still a lot of work to do; the team would benefit from a Product Owner

Small development teams, existing software: let's pool data handling, visualization, processing, and archiving tools! Or at least aim for interoperability, to better serve the user community

BSEG meeting 20/11/2024

By Matias Guijarro
