Safe AI-Enabled Systems through Collective Intelligence

<WORKSHOP>

<DATE>

Rafael Kaufmann, Thomas Kopinski, Justice Sefas, Michael Walters

Topics ahead

From AI safety to "Everything safety"

AI safety is a piece of the Metacrisis:

Everything is connected (climate, food, security, biodiversity)

× Billions of highly-capable intelligent agents

× Coordination failure ("Moloch")

= Total risk for humanity and biosphere

Schmachtenberger's hypothesized attractors:

- Chaotic breakdown

- Oppressive dystopian control

Before this:

Today's AI Risks: Misspecification + coordination failure = compounded catastrophic risk


We'll have exponentially more of this:

Perverse instantiation in AI systems ranges from the benign upward:

- Game-playing agents find bizarre techniques

- Social media algorithms optimized into dopamine machines

- Out-of-distribution (OOD) issues can express social bias, etc., in production

AI in the wild

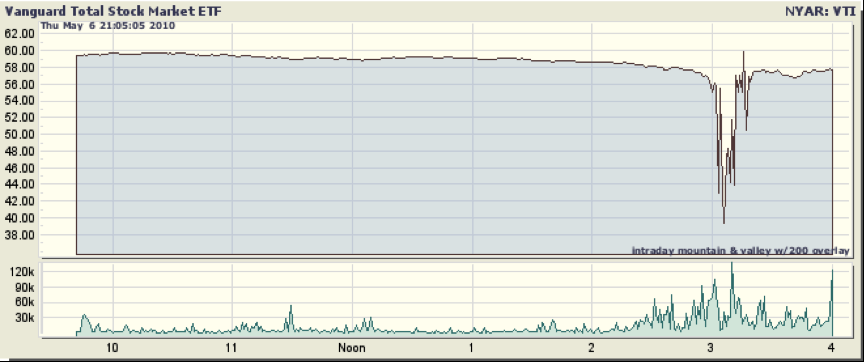

- Hyperfast algorithmic trading can run amok (2010 Flash Crash)

Active Inference proposes a model of intelligence at all scales, from microbes to macro agents.

Entities continuously accumulate evidence for a generative model of their sensed world ("self-evidencing"), which builds Bayesian inference directly into how an entity operates.
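As a rough illustration of self-evidencing, here is a minimal sketch in which an agent holds a discrete generative model (a prior over hidden states and a likelihood of observations given states), updates its beliefs by Bayes' rule after each observation, and accumulates log model evidence, i.e. the negative of its total surprise. The two-state "calm/stormy" model and its numbers are hypothetical, the hidden state is assumed static, and exact Bayesian updating stands in for the variational approximation Active Inference normally uses.

```python
import math


def bayes_update(prior, likelihood, obs):
    """Return (posterior, evidence) after observing `obs`.

    prior[s] is p(s); likelihood[s][o] is p(o | s). The evidence p(o) is the
    normalizing constant of Bayes' rule.
    """
    joint = [prior[s] * likelihood[s][obs] for s in range(len(prior))]
    evidence = sum(joint)
    posterior = [j / evidence for j in joint]
    return posterior, evidence


def self_evidence(prior, likelihood, observations):
    """Update beliefs sequentially and accumulate log model evidence.

    Assumes the hidden state does not change between observations.
    """
    beliefs = list(prior)
    log_evidence = 0.0
    for obs in observations:
        beliefs, evidence = bayes_update(beliefs, likelihood, obs)
        log_evidence += math.log(evidence)  # = -(surprise of this observation)
    return beliefs, log_evidence


# Hypothetical model: hidden state 0 = "calm", 1 = "stormy";
# observation 0 = "no waves", 1 = "waves".
prior = [0.5, 0.5]
likelihood = [[0.9, 0.1], [0.2, 0.8]]

beliefs, log_ev = self_evidence(prior, likelihood, [1, 1, 0, 1])
print([round(b, 3) for b in beliefs], round(log_ev, 3))
```

The accumulated log evidence equals the log of the joint marginal likelihood of the whole observation sequence, so an agent whose model fits its world better collects more of it.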

Arguments from control theory also posit that physical systems contain structures that are homomorphic to their outer environment.

The result is AI that “scales up” the way nature does: by aggregating individual intelligences and their locally contextualized knowledge bases, within and across ecosystems, into “nested intelligences”—rather than by merely adding more data, parameters, or layers to a machine learning architecture.

- K. Friston et al. (2024)

Intelligence from first principles


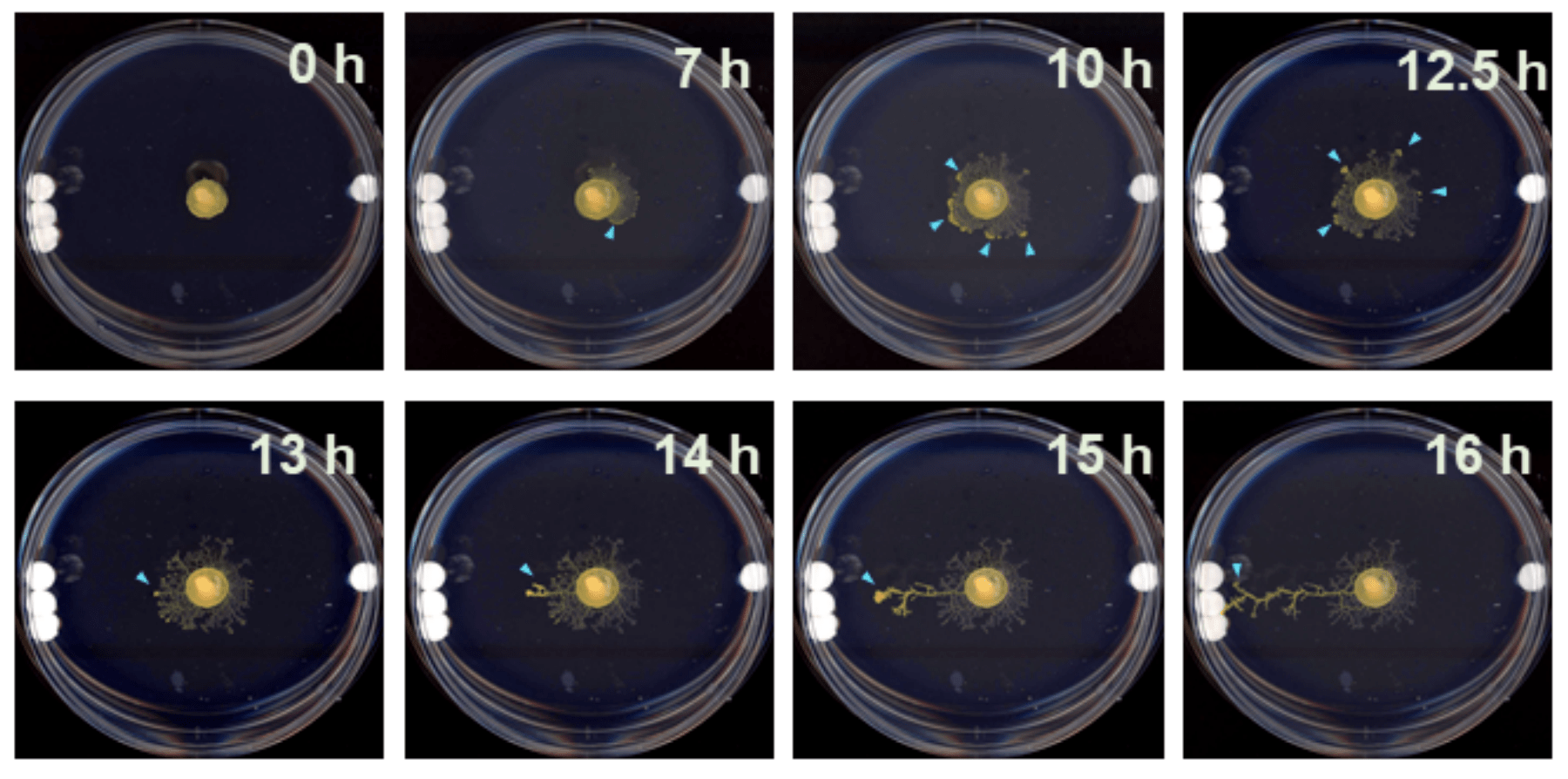

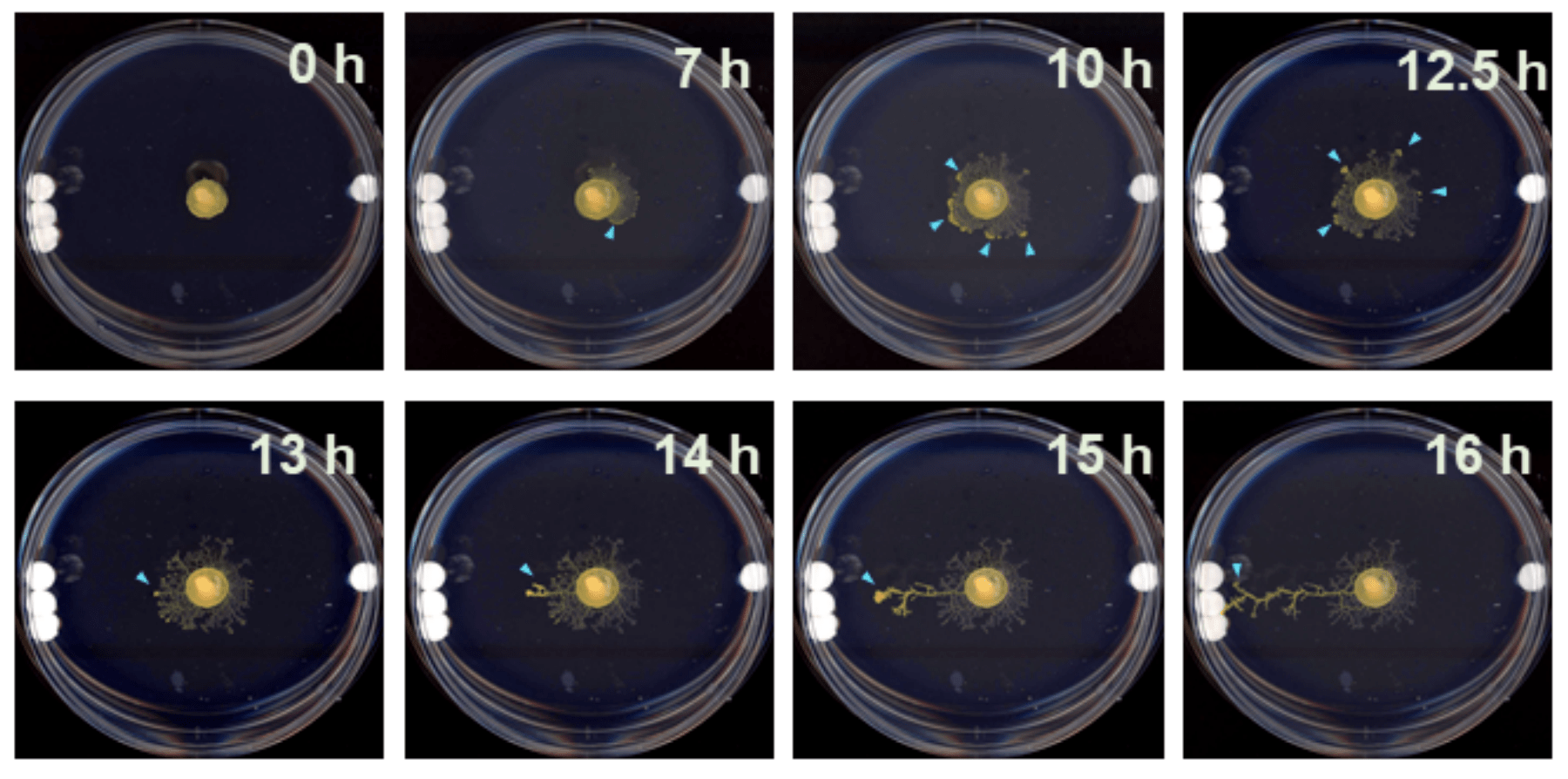

Nirosha J. Murugan et al. (2020)

Aneural organism Physarum polycephalum responding to the physics of its environment


Under ActInf, shared narratives (world models) and communication are mutually beneficial for updating generative models.

The future amalgamation of humans and artificial agents stands to produce a higher order collective intelligence.

But we want to maintain our values and safety...

Bayesianism and ActInf seem like a sound approach to value learning within our world models. Simulation will help us gatekeep and evaluate proposed actions.

Human-AI Collective

Risk exposure as free energy

"Free energy of the future" (Millidge et al., 2021), based on variational free energy.

A lower bound on expected model evidence; maximizes reward while minimizing exploration.

In a fully observable setting this simplifies to:

Cf. conditional expected shortfall (finance), KL control (control theory)

preference prior
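One way to work out the fully observable case, assuming the FEF is defined as in Millidge et al. (2021) with outcomes o, hidden states s, the agent's predictive distribution q under policy π, and preference prior p̃. This is a reconstruction under that assumption, not necessarily the exact form used on the original slide. When s = o, the posterior q(s | o) is a point mass whose log is zero for discrete states, so only the preference term survives, and it splits into an entropy plus a KL divergence from the preference prior:

```latex
\begin{aligned}
\mathrm{FEF}(\pi) &= \mathbb{E}_{q(o,s\mid\pi)}\big[\ln q(s\mid o) - \ln \tilde p(o,s)\big] \\
&= \mathbb{E}_{q(o\mid\pi)}\big[-\ln \tilde p(o)\big] \qquad \text{(fully observable: } s = o\text{)} \\
&= \mathrm{H}\big[q(o\mid\pi)\big] + D_{\mathrm{KL}}\big[q(o\mid\pi)\,\|\,\tilde p(o)\big]
\end{aligned}
```

The KL term is the usual KL-control objective, which is one reading of the comparison to control theory above.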

Towards "Everything Safety"

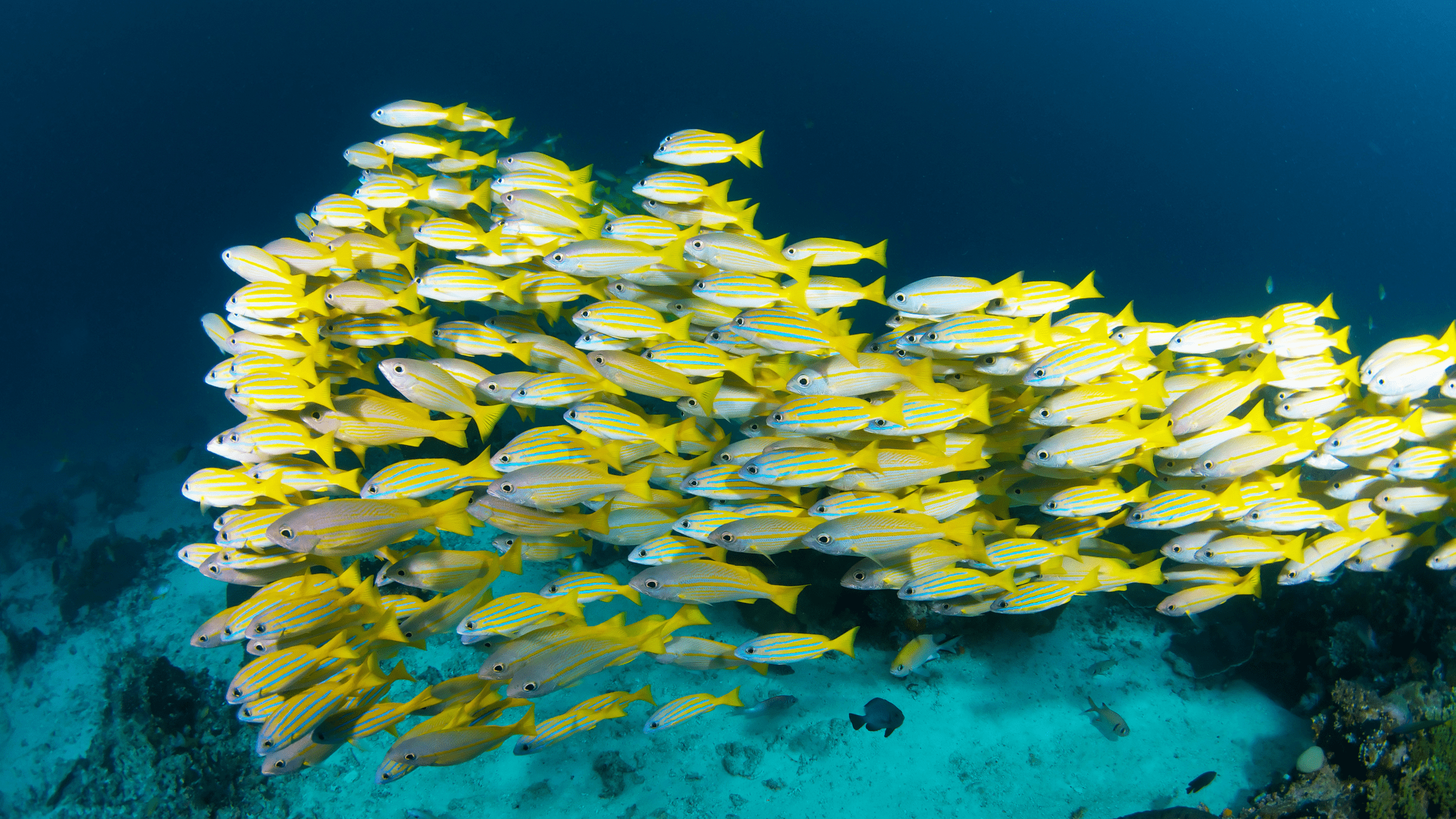

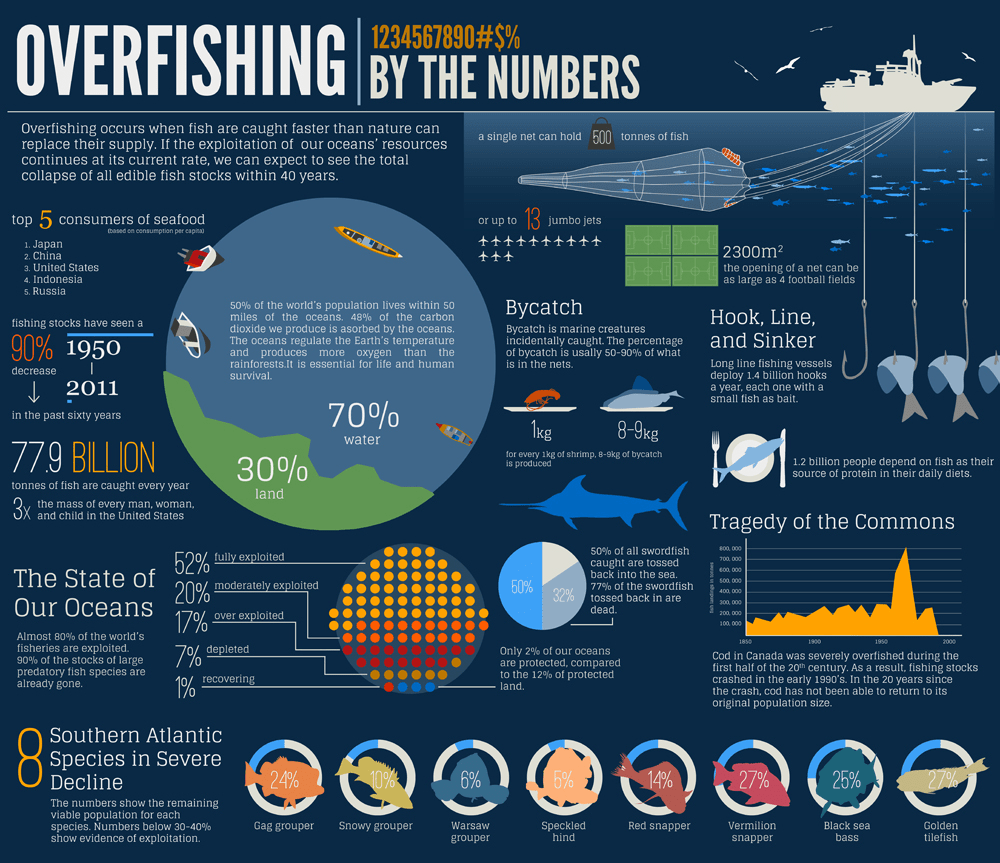

Case study: managing the risk of AI-accelerated overharvesting and ecosystem collapse

Overfishing Case study

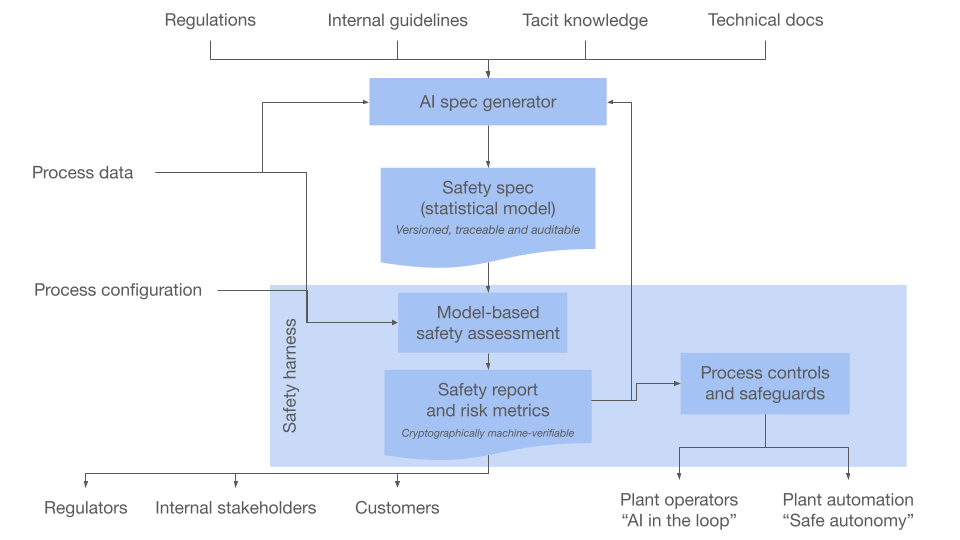

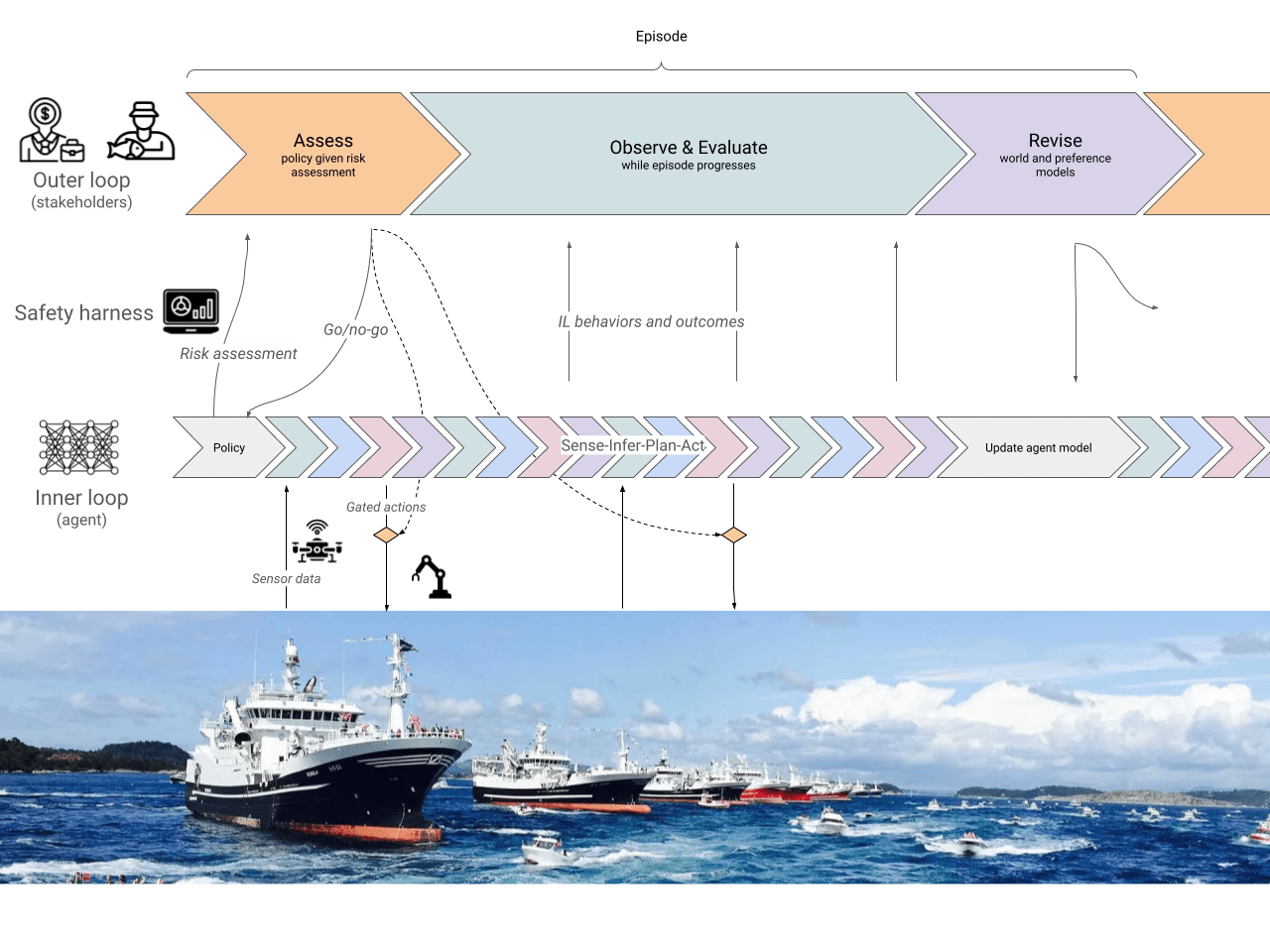

Overfishing: architecture at a glance

Population evolution

Cost | Revenue | Profit

Loss

- Bounded from above (satisficing)

- Indifferent among all profit-taking scenarios

- Futures discounted via w
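A minimal sketch of these pieces, under assumptions of ours: the population follows logistic growth with rate r and carrying capacity K, the catch is proportional to fishing effort and stock (a Schaefer-style surplus-production model), profit is price times catch minus a per-unit-effort cost, and the per-season loss is an indicator that profit fell below zero. The indicator loss is one form that has all three listed properties (bounded above, indifferent among profitable outcomes, discounted by w); it is not necessarily the authors' loss. The function names `simulate` and `discounted_loss` and all parameter values are hypothetical.

```python
def simulate(effort, n0, r, K, q, price, cost):
    """Schaefer-style fishery: logistic growth minus a catch of q * E * N.

    `effort` holds one entry per season (the harvesting policy).
    Returns a list of (population_after_season, profit) pairs.
    """
    n = n0
    history = []
    for e in effort:
        catch = min(q * e * n, n)            # can't land more fish than exist
        profit = price * catch - cost * e    # revenue minus effort cost
        n = max(n + r * n * (1 - n / K) - catch, 0.0)
        history.append((n, profit))
    return history


def discounted_loss(profits, w):
    """Hypothetical loss: sum over seasons t of w**t * 1[profit_t < 0].

    - bounded from above by 1 / (1 - w) when 0 <= w < 1 (satisficing)
    - zero for any season with non-negative profit, however large
    - later seasons are discounted by w
    """
    return sum(w**t for t, p in enumerate(profits) if p < 0)


params = dict(n0=500.0, r=0.4, K=1000.0, q=0.01, price=1.0, cost=2.0)
heavy = simulate([60.0] * 20, **params)   # aggressive constant effort
light = simulate([15.0] * 20, **params)   # moderate constant effort
print(discounted_loss([p for _, p in heavy], w=0.9),
      discounted_loss([p for _, p in light], w=0.9))
```

With these made-up numbers the aggressive policy harvests faster than the stock can regrow, so profits turn negative within a few seasons and keep accruing loss, while the moderate policy settles near a sustainable population and never incurs any.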


Preference Prior

Stakeholder-accepted probability of loss L*

Risk
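A sketch of how a risk score could follow from such a preference prior. Two readings here are assumptions: L* is a loss threshold, and the preference prior is a Bernoulli distribution that puts a stakeholder-accepted probability ε on the event "loss ≥ L*". If, in the fully observable setting, risk is the expected negative log preference prior, −E_q[ln p̃(o)], it becomes the cross-entropy between the agent's Monte Carlo estimate of that event's probability and ε. The function names are hypothetical.

```python
import math


def exceedance_probability(losses, threshold):
    """Monte Carlo estimate of P(loss >= threshold) from sampled trajectories."""
    return sum(1 for x in losses if x >= threshold) / len(losses)


def risk_exposure(losses, threshold, eps):
    """Expected negative log preference prior, -E_q[ln p~(o)].

    Hypothetical preference prior: Bernoulli with p~(loss >= threshold) = eps.
    The score grows with the predicted exceedance probability whenever eps < 0.5.
    """
    p = exceedance_probability(losses, threshold)
    return -(p * math.log(eps) + (1 - p) * math.log(1 - eps))


sampled_losses = [0.0, 0.0, 0.73, 1.4]   # made-up per-trajectory losses
print(risk_exposure(sampled_losses, threshold=0.5, eps=0.05))
```

Even when no sampled trajectory exceeds L*, the score is −ln(1 − ε) rather than zero; that floor is the cost of the outcome the stakeholders already accept.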


- Sample world parameters, e.g. E, r, ...

- Run a world simulation of fishing, agent policy selection, etc. for each sample

- At each episode, the agent draws Monte Carlo samples of trajectories of fish population changes, profits, costs, ...

- Cumulative risk measured along trajectories

- , based on pref. prior

- , based on pref. prior

- Inform (or not) policy
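The loop above might look like the following sketch. Everything concrete is an assumption: a Schaefer-style fishery with multiplicative noise on growth, uniform priors over the growth rate r and carrying capacity K, a menu of constant-effort policies, an indicator loss discounted by w, and a rule that keeps only policies whose estimated P(loss ≥ L*) is at most ε, then picks the one with the highest expected discounted profit. The names `rollout` and `choose_policy` and all parameter ranges are hypothetical.

```python
import random


def rollout(effort, r, K, n0=500.0, q=0.01, price=1.0, cost=2.0,
            seasons=20, w=0.9, noise=0.1, rng=random):
    """One stochastic trajectory; returns (discounted profit, discounted loss)."""
    n, profit_sum, loss = n0, 0.0, 0.0
    for t in range(seasons):
        catch = min(q * effort * n, n)
        profit = price * catch - cost * effort
        profit_sum += w**t * profit
        loss += w**t * (1.0 if profit < 0 else 0.0)  # bounded, satisficing loss
        growth = r * n * (1 - n / K) * (1 + rng.gauss(0.0, noise))
        n = max(n + growth - catch, 0.0)
    return profit_sum, loss


def choose_policy(efforts, l_star, eps, n_worlds=50, n_rollouts=20, seed=0):
    """Pick the most profitable effort with P(loss >= L*) <= eps, else None."""
    rng = random.Random(seed)
    # Sample world parameters (r, K) once, shared by all candidate policies.
    worlds = [(rng.uniform(0.3, 0.5), rng.uniform(800.0, 1200.0))
              for _ in range(n_worlds)]
    best, best_profit = None, float("-inf")
    for effort in efforts:
        profits, exceed = [], 0
        for r, K in worlds:
            for _ in range(n_rollouts):
                p, l = rollout(effort, r, K, rng=rng)
                profits.append(p)
                exceed += l >= l_star
        total = len(profits)
        if exceed / total > eps:
            continue  # too risky under the preference prior
        mean_profit = sum(profits) / total
        if mean_profit > best_profit:
            best, best_profit = effort, mean_profit
    return best


print(choose_policy([10.0, 20.0, 30.0, 40.0, 60.0], l_star=0.5, eps=0.05))
```

Returning `None` when no candidate passes is the "or not" branch: the risk estimate can veto every proposed policy rather than merely rank them.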


Overfishing Case study

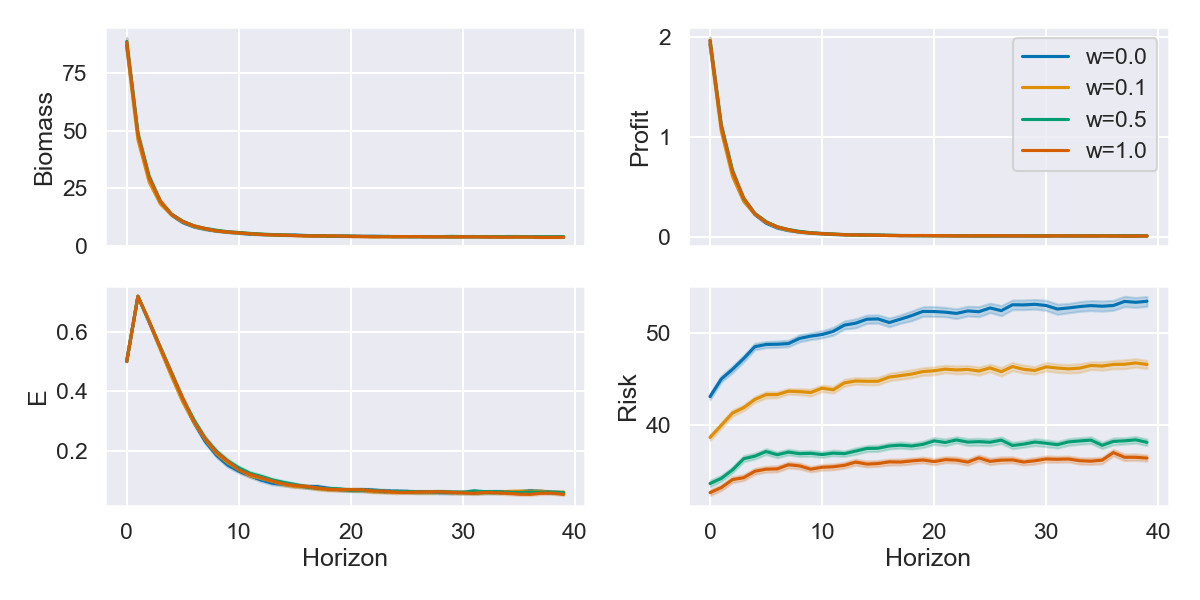

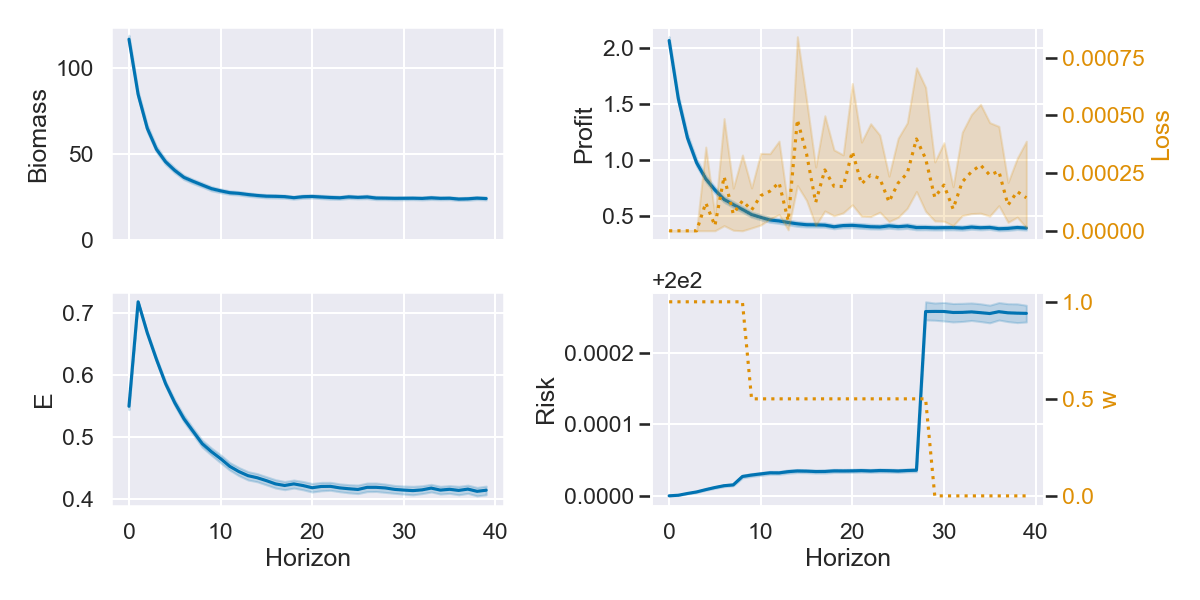

Myopic (single-season) profit-maximizing agents deplete the population (and their profits)

Less time-discounting = higher perceived risk, earlier

Overfishing Case study

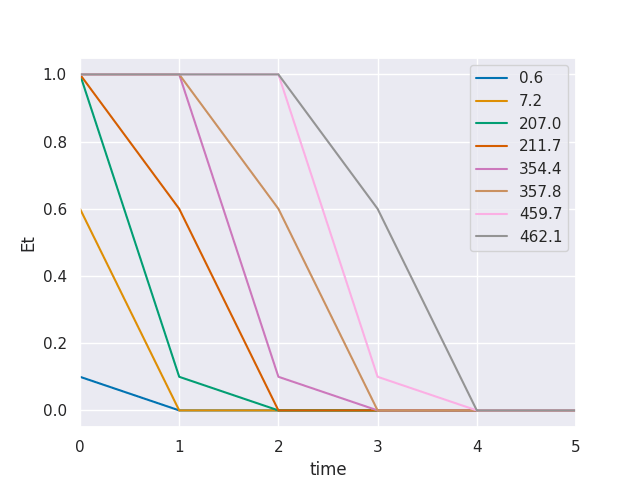

Constrained policy for various ε

Overfishing Case study

CRE responds to evolving stakeholder preferences for far-sightedness

Safely decommissioning with AI

- Background: Germany is advancing its energy transition, and one main goal is decommissioning all of its nuclear power plants

- This is a complex process with many challenges, foremost among them:

  - Radioactive waste management

  - Dismantling of building parts

  - Recycling of materials

  - Knowledge management

- To ensure correct procedures and reduce the risk of failure, AI components are being introduced into these processes incrementally

- AI tools have been successfully deployed in, e.g.:

  - Robotics: automatic scanning of building elements for transport, via computer vision

  - Knowledge management: teaching and onboarding new staff with AR/VR and NLP

  - Human resources: using NLP and LLMs for person-to-job fitting

Nuclear Decommissioning Process in Germany’s Energy Transition

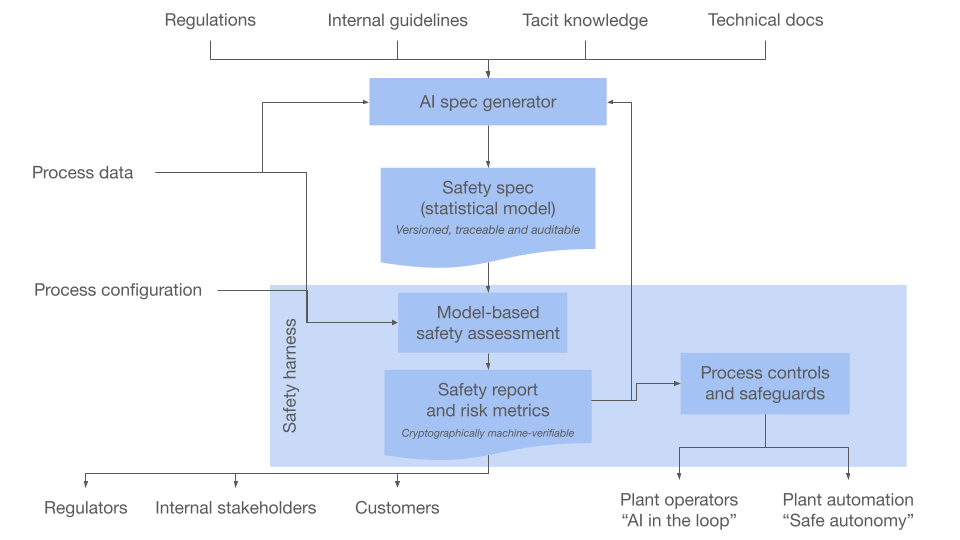

- How can we adapt this system to nuclear decommissioning?

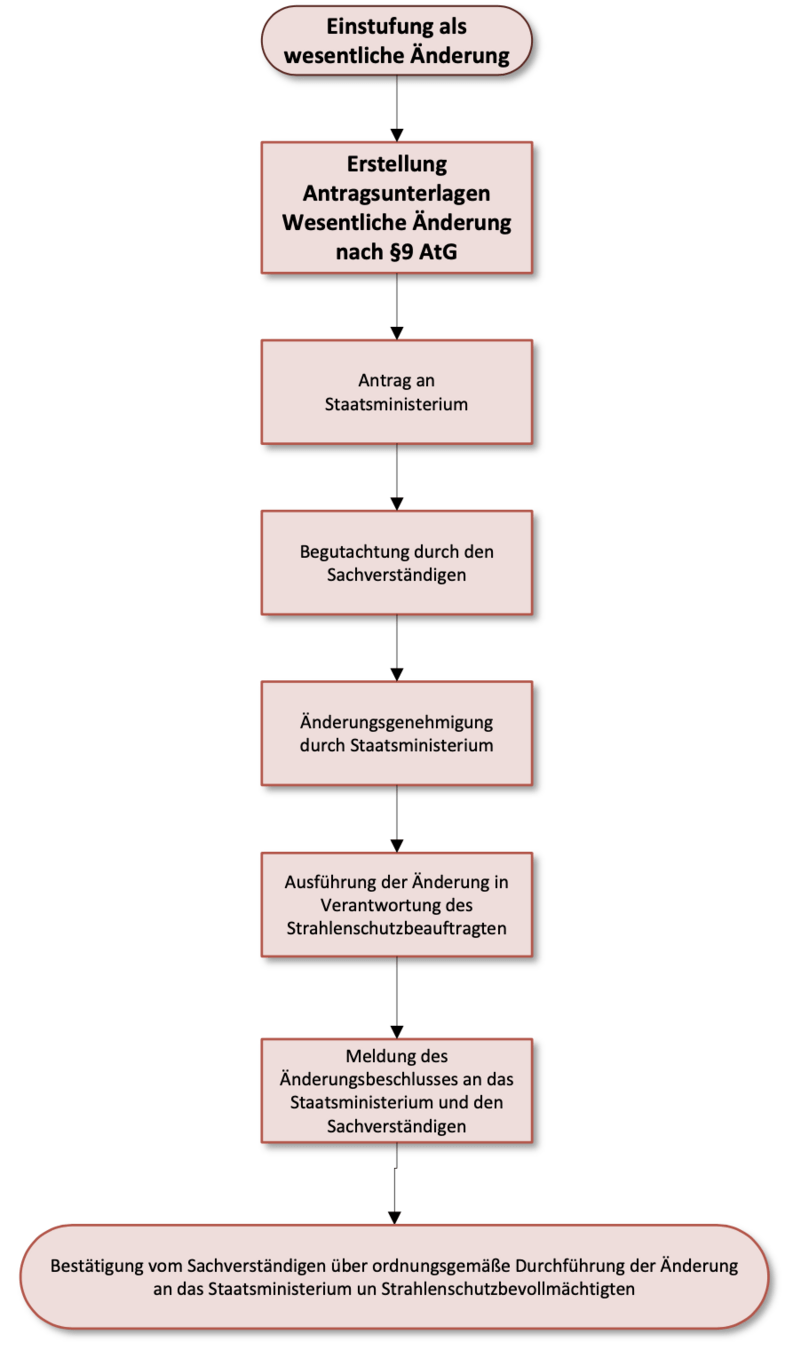

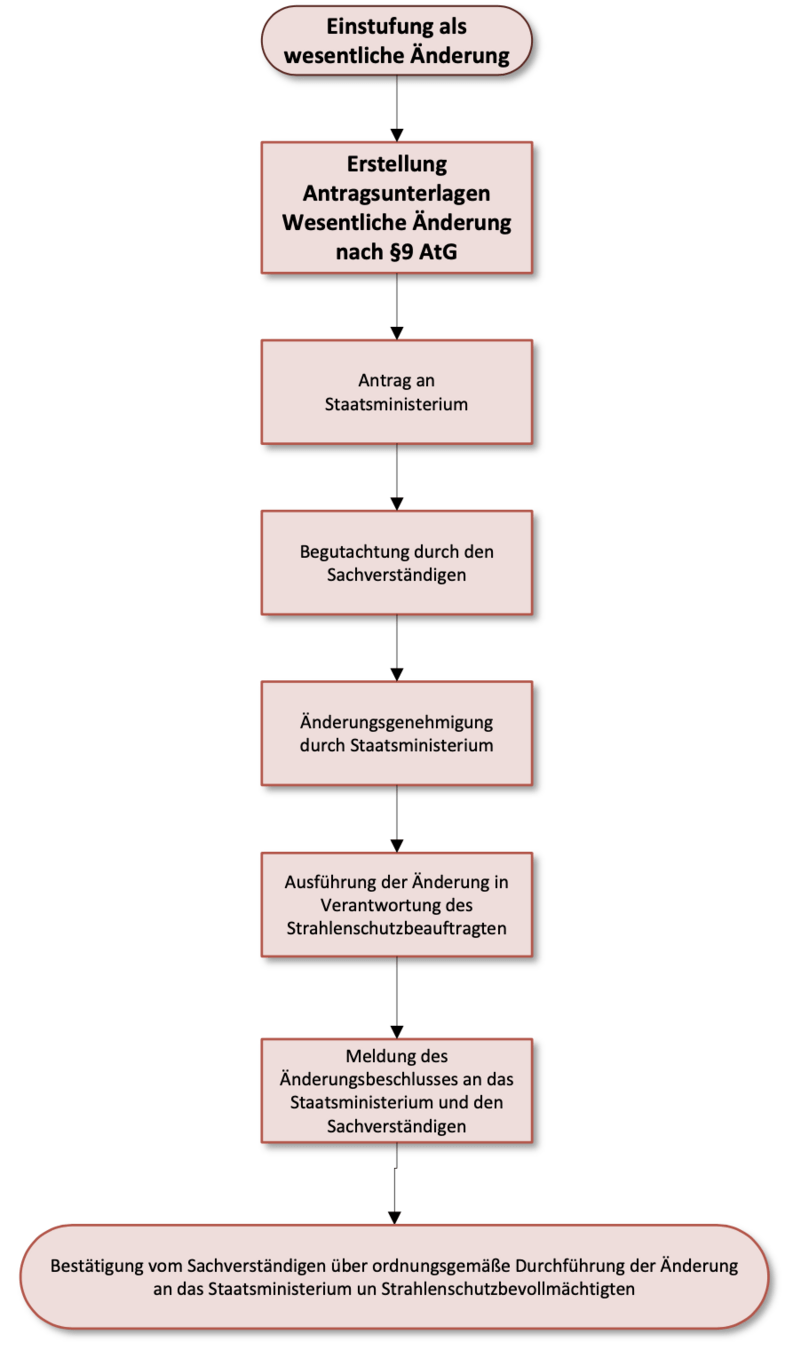

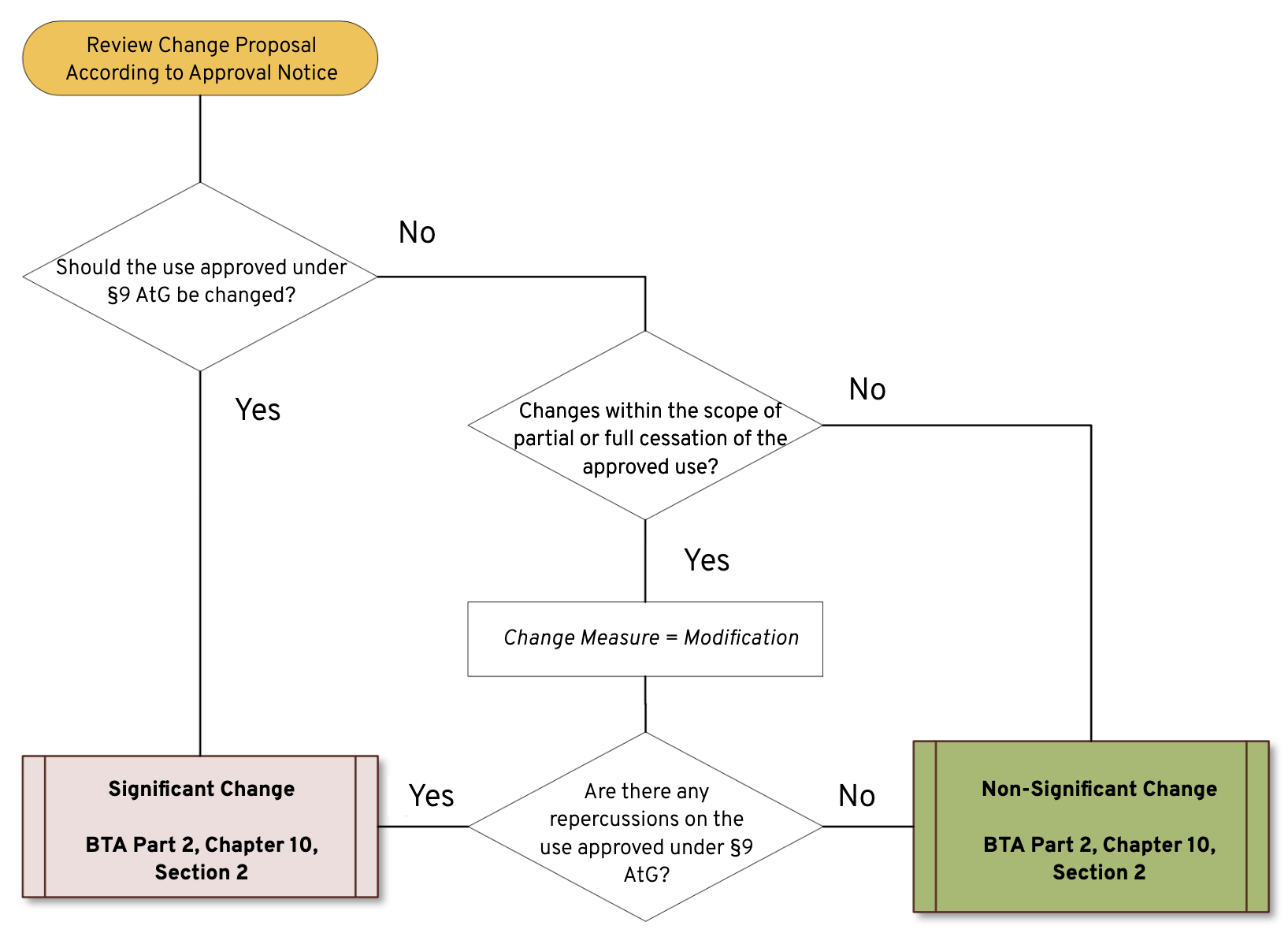

Workflow for a major change under §9 AtG (diagram). Stages shown:

- Classification as Major Change

- Preparation of Documents for Major Change under §9 AtG

- Confirmation from the Expert about Proper Execution of the Change to the State Ministry and the Radiation Protection Officer

- Application to State Ministry

- Review by Expert

- Change Approval by State Ministry

- Application to State Ministry

- Supervised Execution of Change by RPO

- Notification of Change to State Ministry and Expert

Nothing happens fast and each action has multiple stages of review and approval


Coordination is required across many separate agents and groups, forming a complex—often non-linear—web of dependency and communication


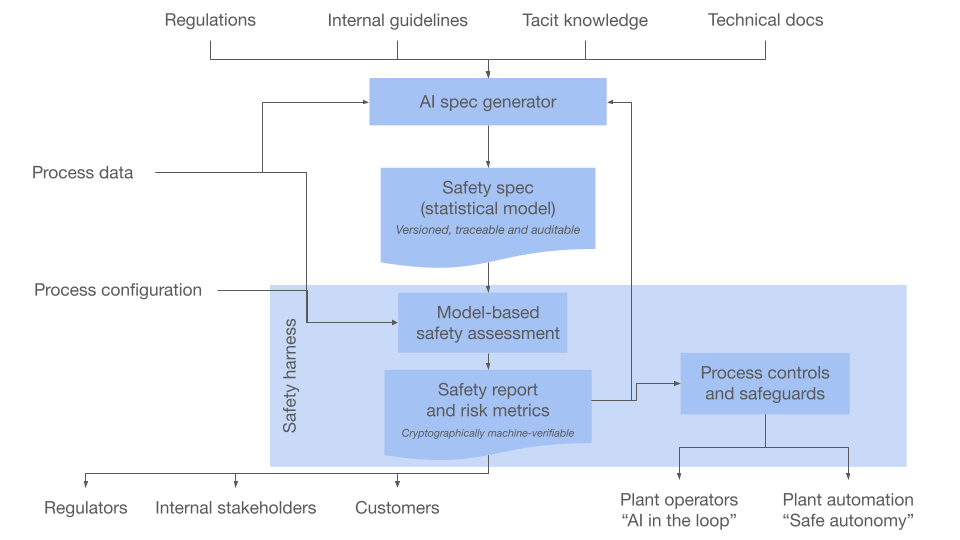


No single person can oversee and consider all processes and applications

Leverage language models and other AI tools to parse collective information, recommend actions, and flag issues

Better-informed decisions reduce accidents, costs, and harm


Knowledge Base | Optimization Engine / Safety Harness

- Regulators, human experts, etc. query the Knowledge Base (LLM-mediated) for up-to-date statuses, procedures, and more.

- Autonomous agents take or advise on actions and interface with humans while maintaining a synchronized connection to the network.

- As new data is introduced into the Knowledge Base, generative world models are updated.

- Simulations on these models are carried out to compute risk metrics and optimal decision actions.

- Automated LLM tools digest an array of documents beyond human capability, tracking statuses, updating records, and flagging potential issues.

Inputs: Active processes | Safety & Regulatory Requirements | Tech Specs | .... | Logs

Flows: Update world models | Update KB
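A toy sketch of this update loop, with every name hypothetical: a knowledge base that stores incoming records, one world model per process (here just a Beta-Bernoulli belief about how often a step raises an issue), a Monte Carlo "safety harness" that turns that belief into a risk metric, and a flag raised when the metric crosses a threshold. In the system described above, LLM tools would extract these records from documents; that step is assumed away here by feeding already-structured records.

```python
import random
from dataclasses import dataclass, field


@dataclass
class WorldModel:
    """Beta(alpha, beta) belief about the probability a step raises an issue."""
    alpha: float = 1.0
    beta: float = 1.0

    def update(self, issue: bool) -> None:
        if issue:
            self.alpha += 1
        else:
            self.beta += 1


@dataclass
class KnowledgeBase:
    """Stores records and keeps one world model per process (hypothetical)."""
    risk_threshold: float = 0.2
    records: list = field(default_factory=list)
    models: dict = field(default_factory=dict)
    flags: list = field(default_factory=list)

    def ingest(self, process: str, issue: bool, seed: int = 0) -> float:
        """Update KB, update the world model, re-run the simulation, maybe flag."""
        self.records.append((process, issue))                 # update KB
        model = self.models.setdefault(process, WorldModel())
        model.update(issue)                                    # update world model
        risk = self.simulate_risk(model, seed=seed)
        if risk > self.risk_threshold:
            self.flags.append((process, risk))                 # flag for humans
        return risk

    @staticmethod
    def simulate_risk(model: WorldModel, steps: int = 10,
                      samples: int = 2000, seed: int = 0) -> float:
        """MC estimate of P(at least 2 issues in the next `steps` steps)."""
        rng = random.Random(seed)
        hits = 0
        for _ in range(samples):
            p = rng.betavariate(model.alpha, model.beta)
            issues = sum(rng.random() < p for _ in range(steps))
            hits += issues >= 2
        return hits / samples

    def query(self, process: str) -> dict:
        """What a regulator might ask for: record count and mean issue rate."""
        model = self.models.get(process, WorldModel())
        return {"records": sum(1 for p, _ in self.records if p == process),
                "issue_rate": model.alpha / (model.alpha + model.beta)}


kb = KnowledgeBase()
for _ in range(20):
    kb.ingest("waste_handling", issue=False)
for issue in [True, True, False, True, True, True]:
    kb.ingest("concrete_cutting", issue=issue)
print(kb.query("concrete_cutting"), kb.flags[-1])
```

Because the risk comes from the full posterior rather than a point estimate, a process with only a few records can be flagged on uncertainty alone, which is arguably the conservative behavior a safety harness should have.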


The Gaia Network

A WWW of world/decision models

Goal: Help agents (people, AI, organizations) with:

- Making sense of a complex world

- Grounding decisions, dialogues, and negotiations

How: Decentralized, crowdsourced, model-based prediction and assessment

Where: Applications in:

- Safe AI for automation in the physical world (today's focus)

- Climate policy and investment

- Participatory planning for cities

- Sustainability and risk mitigation in supply chains

- Etc

Let's discuss!

- Can you think of other systems and applications that would benefit from CRE/ActInf and these tools?

- Consider any issues, improvements, or pitfalls. What might need to happen to evolve this for collective intelligence (CI)? E.g., is there room for theory of mind (ToM)?

- How might (or might not) this fit into the long view of AI safety and alignment, e.g., ASI?

- Did the Panthers deserve the cup?

Some of our contributors (so far)!

Research Template

By Michael Walters
