The Web Audio API

Synthesis and Visualization

Mithun Selvaratnam

Audio in the Web Browser

- <bgsound> (Internet Explorer) & <embed> (Netscape) let sites play background music when a page was loaded

- Adobe Flash was the first cross-browser way of playing back audio; its biggest drawback was that it required a plug-in

- HTML5's <audio> element provides native audio playback in all modern browsers

Why not just use <audio>?

If simple playback is all you need, go for it! However, <audio> has several limitations that keep it from being reliable for audio-intensive applications.

// HTML

<audio src="audio.mp3" id="demo" preload="auto"></audio>

// JavaScript

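var audio = document.getElementById('demo');

// play() returns a Promise; the browser may reject it if playback was not started by a user gesture
audio.play().catch(function (err) { console.warn('Playback blocked:', err); });

Limitations of <audio>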

- no precise timing; unreliable latency

- limited number of samples can be played at once

- no real-time effects

- no audio synthesis

- no way to analyze sounds or create visualizations

The Web Audio API is a JavaScript API built into browsers for advanced audio processing

- gives developers access to features found in specialized applications like digital audio workstations and game audio engines, such as:

  - Synthesis: generating sounds, building instruments, and manipulating sound with effects

  - Analysis: monitoring frequency and time data, the raw material for tasks such as pitch/rhythm detection or speech recognition

  - Visualization: using frequency and time data to draw graphs that update dynamically during playback

  - Spatialization: simulating acoustics in a 2D or 3D environment and adjusting audio based on the positions of the listener and sound sources; typically used in games

How does it work?

Audio Context

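// webkitAudioContext covers older Safari versions
var audioCtx = new (window.AudioContext || window.webkitAudioContext)();

// source node: an oscillator (defaults to a 440 Hz sine wave)
var oscillator = audioCtx.createOscillator();

// route the source to the output (the speakers), then start it
oscillator.connect(audioCtx.destination);
oscillator.start();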

Think of the audio context as a flowchart of nodes, starting with a sound source and ending with an output (here, audioCtx.destination, i.e. the speakers).
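A slightly larger graph than the one above, sketched for a browser with Web Audio support: each connect() call returns the node it was connected to, so an oscillator, filter, gain, destination chain can be written as one expression. `buildSynthGraph` is a hypothetical helper name; createOscillator(), createBiquadFilter(), createGain() and connect() are standard Web Audio API calls.

```javascript
// Sketch: oscillator -> low-pass filter -> gain -> speakers.
// buildSynthGraph is a hypothetical helper, not part of the Web Audio API.
function buildSynthGraph(audioCtx) {
  const oscillator = audioCtx.createOscillator(); // sound source
  const filter = audioCtx.createBiquadFilter();   // tone shaping
  const amp = audioCtx.createGain();              // volume control

  filter.type = 'lowpass';

  // AudioNode.connect(node) returns its argument, so calls can be chained
  oscillator.connect(filter).connect(amp).connect(audioCtx.destination);

  return { oscillator, filter, amp };
}

// Browser usage (a context created before a user gesture may start "suspended"):
// const audioCtx = new AudioContext();
// const { oscillator } = buildSynthGraph(audioCtx);
// oscillator.start();
// oscillator.stop(audioCtx.currentTime + 1); // stop after one second
```

Returning the nodes lets later code adjust their parameters (pitch, cutoff, volume) while the sound plays.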

Hold on...oscillator? filter? gain? What does all of it MEAN?

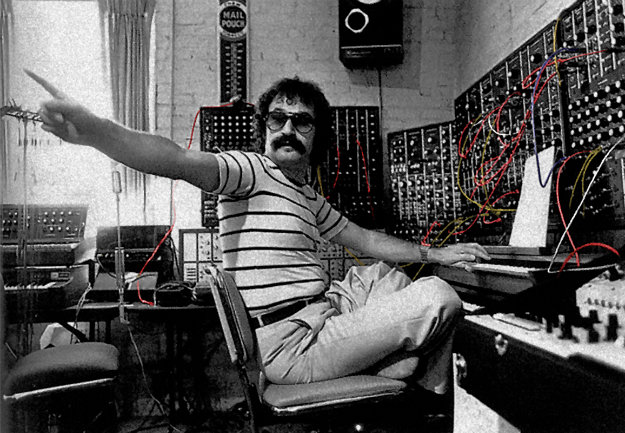

A Brief Intro to Synths

- oscillator: generates a waveform of a certain shape (e.g. sine, square, sawtooth, triangle) at a certain pitch, i.e. creates the sound

- filter: shapes the tone of a sound by attenuating certain frequencies

  - cutoff: the frequency at which the filter starts attenuating (e.g. everything above it, for a low-pass filter)

  - resonance (Q): how much to boost the signal around the cutoff point

- amplifier: controls how much of the signal passes to the output, i.e. volume or gain
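To make "a waveform of a certain shape at a certain pitch" concrete, here is a simplified offline sketch of what an oscillator followed by an amplifier computes: for each sample it advances a phase by frequency / sampleRate, evaluates one cycle of the chosen shape, then scales the result by a gain. The shape names mirror OscillatorNode's `type` values, but these naive formulas are an illustration only, not how browsers implement OscillatorNode (real oscillators avoid aliasing). `waveValue` and `renderTone` are hypothetical names.

```javascript
// Naive waveform shapes; phase is in [0, 1), i.e. one full cycle.
// waveValue and renderTone are hypothetical illustration helpers.
function waveValue(type, phase) {
  switch (type) {
    case 'sine':
      return Math.sin(2 * Math.PI * phase);
    case 'square':
      return phase < 0.5 ? 1 : -1;
    case 'sawtooth':
      return 2 * phase - 1;                 // ramps from -1 up towards 1
    case 'triangle':
      return 1 - 4 * Math.abs(phase - 0.5); // -1 at phase 0, +1 at phase 0.5
    default:
      throw new Error('unknown waveform type: ' + type);
  }
}

// "Oscillator + amplifier": frequency sets the pitch, gain scales the level.
function renderTone(type, frequency, sampleRate, numSamples, gain) {
  const samples = new Float32Array(numSamples);
  let phase = 0;
  for (let i = 0; i < numSamples; i++) {
    samples[i] = gain * waveValue(type, phase);
    phase += frequency / sampleRate;
    phase -= Math.floor(phase); // wrap back into [0, 1)
  }
  return samples;
}

// Example: 4 samples per cycle of a square wave at half volume.
// renderTone('square', 1000, 4000, 4, 0.5) -> [0.5, 0.5, -0.5, -0.5]
```

A filter would then reshape this signal; in the Web Audio API that role is played by BiquadFilterNode, whose `frequency` and `Q` parameters correspond to the cutoff and resonance described above.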

Audio Analysis

var waveAnalyser = audioCtx.createAnalyser();

waveAnalyser.fftSize = 2048;

// feed a source into the analyser so it has audio to measure
oscillator.connect(waveAnalyser);

// frequencyBinCount is half of fftSize (FFT = Fast Fourier Transform)

// the FFT converts a block of time-domain samples into frequency-domain data

// dataArray is an array of 8-bit unsigned integers (0-255), one per bin

var dataArray = new Uint8Array(waveAnalyser.frequencyBinCount);

// fills dataArray with the waveform: amplitude samples over time, where 128 means silence

waveAnalyser.getByteTimeDomainData(dataArray);

// fills dataArray with the magnitude (0-255) of each frequency bin, scaled between the
// analyser's minDecibels and maxDecibels; this overwrites the time-domain values above

waveAnalyser.getByteFrequencyData(dataArray);
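The raw bytes need a little interpretation before they are useful. A sketch with hypothetical helper names, assuming the analyser settings above: bin i of the frequency data corresponds to roughly i × sampleRate / fftSize Hz, and time-domain bytes are centered on 128 (silence), so (v - 128) / 128 maps them to about -1 to 1. `drawWaveform` uses the standard Canvas 2D path methods (beginPath, moveTo, lineTo, stroke); in a browser it would run once per animation frame after getByteTimeDomainData.

```javascript
// Hypothetical helpers for interpreting AnalyserNode byte data.

// Frequency (Hz) associated with frequency bin `index`.
function binFrequency(index, sampleRate, fftSize) {
  return (index * sampleRate) / fftSize;
}

// Map a time-domain byte (0-255, 128 = silence) to roughly -1..1.
function byteToSample(value) {
  return (value - 128) / 128;
}

// Frequency (Hz) of the loudest bin in getByteFrequencyData output.
function dominantFrequency(freqData, sampleRate, fftSize) {
  let loudest = 0;
  for (let i = 1; i < freqData.length; i++) {
    if (freqData[i] > freqData[loudest]) loudest = i;
  }
  return binFrequency(loudest, sampleRate, fftSize);
}

// Draw getByteTimeDomainData output as a line across a canvas.
function drawWaveform(canvasCtx, timeData, width, height) {
  canvasCtx.clearRect(0, 0, width, height);
  canvasCtx.beginPath();
  const step = width / (timeData.length - 1);
  for (let i = 0; i < timeData.length; i++) {
    // sample +1 maps to the top of the canvas, -1 to the bottom
    const y = (1 - byteToSample(timeData[i])) * (height / 2);
    if (i === 0) canvasCtx.moveTo(0, y);
    else canvasCtx.lineTo(i * step, y);
  }
  canvasCtx.stroke();
}

// Browser usage (assumes waveAnalyser and dataArray from above and a
// <canvas id="scope"> element; "scope" is a hypothetical id):
// const canvas = document.getElementById('scope');
// const c2d = canvas.getContext('2d');
// (function frame() {
//   waveAnalyser.getByteTimeDomainData(dataArray);
//   drawWaveform(c2d, dataArray, canvas.width, canvas.height);
//   requestAnimationFrame(frame);
// })();
```

Keeping the math in small pure functions separates "what the numbers mean" from "how they are drawn", so the same helpers could feed a bar graph or a pitch display instead.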

The values in dataArray can then be passed to a visualization tool such as <canvas>.

Conclusion

- The Web Audio API facilitates advanced audio tasks in the browser, like sound synthesis and analysis

- Audio analysis can be combined with visualization tools for both practical and aesthetically pleasing results

- Many JavaScript audio libraries are built on top of it, e.g. Tone.js and Flocking.js; they streamline certain features but rely on the same underlying concepts


Fullstack 1609 Tech Talk