Around the cluster in 80 ms

The journey of a packet

Monica Gangwar

About me

Not just your average Software Developer ...

Not just your average Devops Engineer ...

To save the cluster... I had to become something else... someone else ...

And I became Jugadu Engineer as well ...

Full stack Engineer @

A long time ago ...

Terminology

- Kubernetes

- Pod

- Service

Problem

Service A wants to send a packet to Service B

Simple easy path :D

If only it was that easy

Each component has the tendency to either add latency or block the packet altogether!

The route not taken:

Packet traces explained

...

Kernel

DNS Resolver

Kube Proxy

CNI

Voila!

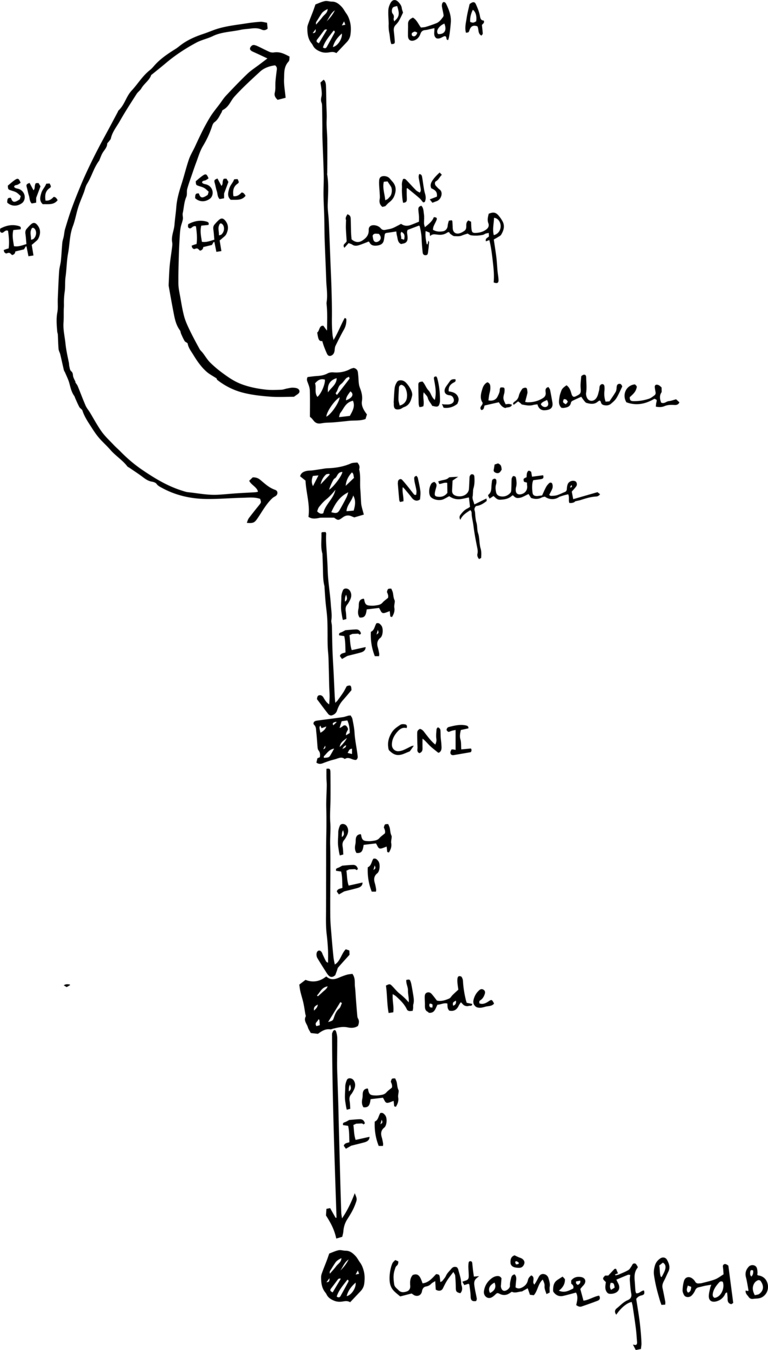

The Treacherous path: First stop

Service IP

Pod IP

DNS lookup

Pod IP

Objective : Send packet to serviceB

Kernel

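Latencies in DNS lookups

- DNS lookups for serviceB are performed by the C library (glibc or musl)

- The A (IPv4) and AAAA (IPv6) queries are sent in parallel from the same socket

- The two UDP packets can race while the kernel's conntrack sets up the DNAT/SNAT entry, and one of them may be dropped

- The resolver then waits for its timeout and retries, which adds latency to the lookup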

"I don't have any authority over Linux other than this notion that I know what I'm doing."

- Linus Torvalds

- Monica Gangwar

Kernel (contd)

How to debug it

- tcpdump port 53

- conntrack -S
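When chasing this race, a rising insert_failed counter in the conntrack statistics is the usual hint. Below is a minimal sketch that sums one counter across the per-CPU lines; the sample text and its numbers are made up for illustration, and the exact field names are an assumption that may differ between conntrack-tools versions.

```python
import re

# Sample text in the shape printed by `conntrack -S` (one line per CPU).
# The numbers are invented for this example.
SAMPLE = """\
cpu=0 found=12 invalid=3 ignore=40 insert=0 insert_failed=7 drop=7 early_drop=0 error=0 search_restart=2
cpu=1 found=9 invalid=1 ignore=22 insert=0 insert_failed=5 drop=5 early_drop=0 error=0 search_restart=0
"""


def sum_counter(conntrack_stats: str, counter: str) -> int:
    """Add up one named counter across all per-CPU lines."""
    # \b keeps "drop" from matching inside "early_drop".
    pattern = re.compile(r"\b" + re.escape(counter) + r"=(\d+)")
    return sum(int(m.group(1)) for m in pattern.finditer(conntrack_stats))


print("insert_failed total:", sum_counter(SAMPLE, "insert_failed"))
```

Running it twice a few seconds apart on real output and comparing the totals shows whether inserts are still failing while DNS traffic flows.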

How to avoid it

- single-request-reopen

- grpc dns resolver

    # linux
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    spec:
      template:
        spec:
          dnsConfig:
            options:
            - name: single-request-reopen

    # grpc on linux
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    spec:
      template:
        spec:
          containers:
          - env:
            - name: GRPC_DNS_RESOLVER
              value: native
            name: sample-grpc-app
          dnsConfig:
            options:
            - name: single-request-reopen


The Treacherous path: Second stop


Objective : Resolve serviceB to service IP

DNS resolver

| Parameters | kube-dns | CoreDNS |
|---|---|---|
| Negative caching | Absent | Present |
| CPU | Single threaded in C | Multi threaded in Go |
| Memory | Multiple containers | Single container |
| Latency | Better for internal DNS | Better for external DNS |

Overall : CoreDNS wins!

DNS resolver (contd)

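Avoid latency due to DNS resolver

- The ndots option that Kubernetes sets in each pod's resolv.conf makes the resolver try a name against every search domain before querying it as-is, so one lookup turns into several queries

- Use a fully qualified name with a trailing dot to skip the search list: serviceB.default.svc.cluster.local -> serviceB.default.svc.cluster.local.

- Enable caching in the resolver

- Specify an internal zone for the internal CIDR, e.g. mindtickle.com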
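The ndots effect is easier to see with the candidate names written out. This is a rough sketch of the usual stub-resolver search-list behaviour, not the actual glibc or musl code; the search list shown is the one I assume for a pod in the default namespace, and ndots defaults to 5 as Kubernetes sets it.

```python
# Assumed search list for a pod in the "default" namespace.
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_queries(name: str, search: list[str], ndots: int = 5) -> list[str]:
    """Return the fully qualified names a stub resolver would try, in order."""
    if name.endswith("."):
        return [name]  # absolute name: the search list is skipped entirely
    as_is = name + "."
    via_search = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        return [as_is] + via_search  # enough dots: try the name itself first
    return via_search + [as_is]      # too few dots: search domains first


for q in candidate_queries("serviceB.default.svc.cluster.local", SEARCH):
    print(q)
print(candidate_queries("serviceB.default.svc.cluster.local.", SEARCH))
```

The name without the trailing dot has only four dots, so it yields four candidates, and each candidate may be queried for both A and AAAA. The version with the trailing dot yields exactly one.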

    apiVersion: v1
    data:
      Corefile: |
        .:53 {
            log
            health
            errors
            reload
            cache 30
            prometheus :9153
            kubernetes cluster.local 100.64.0.0/13 {
                pods verified
                resyncperiod 1m
            }
            autopath @kubernetes
            forward . /etc/resolv.conf
        }
    kind: ConfigMap
    metadata:
      name: coredns
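To make the `cache 30` line and the negative-caching row concrete, here is a toy cache that caps every TTL at 30 seconds and also remembers "name does not exist" answers. It is a hypothetical illustration of the idea only; `TinyDnsCache` and its methods are invented names and have nothing to do with how CoreDNS implements its cache plugin.

```python
import time


class TinyDnsCache:
    """Hypothetical in-memory DNS cache with a TTL cap; not CoreDNS code."""

    def __init__(self, max_ttl: float = 30.0, clock=time.monotonic):
        self.max_ttl = max_ttl
        self.clock = clock    # injectable so tests can control time
        self._entries = {}    # name -> (expires_at, answer); answer None = NXDOMAIN

    def put(self, name, answer, ttl):
        """Store an answer, never keeping it longer than max_ttl seconds."""
        expires_at = self.clock() + min(ttl, self.max_ttl)
        self._entries[name] = (expires_at, answer)

    def get(self, name):
        """Return (hit, answer); answer is None for a cached negative reply."""
        entry = self._entries.get(name)
        if entry is None:
            return (False, None)
        expires_at, answer = entry
        if self.clock() >= expires_at:
            del self._entries[name]   # expired: drop it and report a miss
            return (False, None)
        return (True, answer)


cache = TinyDnsCache()
cache.put("serviceB.default.svc.cluster.local.", "100.65.207.102", ttl=300)
cache.put("serviceB.default.svc.cluster.local.cluster.local.", None, ttl=5)
print(cache.get("serviceB.default.svc.cluster.local."))
print(cache.get("serviceB.default.svc.cluster.local.cluster.local."))
```

The second entry is the interesting one: with negative caching, the failed search-list expansions are answered from memory instead of going upstream every time.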


The Treacherous path: Third stop


Objective : Resolve service IP to Pod IP

Kube Proxy

- Runs in one of three modes: userspace, iptables, ipvs

- userspace (obsolete)
  - kube-proxy itself acts as the proxy
  - higher latency due to switching between the kernel and the Go binary

- iptables (default)
  - rules added for NAT rerouting via packet manipulation
  - picks a backend at random with equal probability; rules are evaluated one after another, so matching has complexity O(n)

- ipvs
  - programs the kernel's IPVS virtual servers (built on netfilter) instead of adding iptables rules
  - can load-balance using multiple schemes, with lookup complexity O(1)
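The O(n) versus O(1) difference can be pictured with a toy model: a list of rules walked in order versus a single hash lookup. This is only an analogy in Python, not kernel code; the 10.0.x.y addresses and `svc-N` names are invented for the example.

```python
def match_linear(rules, dest):
    """Walk rules in order, the way a chain of iptables rules is evaluated."""
    for checked, (rule_dest, target) in enumerate(rules, start=1):
        if rule_dest == dest:
            return target, checked
    return None, len(rules)


def match_hashed(table, dest):
    """One dictionary lookup, standing in for a hash-table based lookup."""
    return table.get(dest), 1


# 2000 made-up services, each identified by (virtual IP, port).
services = [((f"10.0.{i // 256}.{i % 256}", 8080), f"svc-{i}") for i in range(2000)]
table = dict(services)
last = services[-1][0]

print(match_linear(services, last))  # rules checked grows with the service count
print(match_hashed(table, last))     # a single lookup regardless of count
```

The service that happens to sit last in the chain pays for every rule ahead of it, which is where the iptables mode starts to hurt on large clusters.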

Debug Kube proxy

- userspace (obsolete)
  - kube-proxy logs

- iptables (default)
  - conntrack -L
  - iptables-save
  - bottleneck when load ~ 5000 nodes * 2000 services * 10 pods each

- ipvs
  - dummy interface in each node
  - IPVS virtual addresses for each service
  - ipvsadm -ln

Kube proxy (iptables) in action

iptables-save

    -A KUBE-SERVICES -d 100.65.207.102/32 -p tcp -m tcp --dport 8080 -j KUBE-SVC-ZTQKF64YG2DSI7SY
    -A KUBE-SVC-ZTQKF64YG2DSI7SY -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-A7VB3EEHPCZHU76Q
    -A KUBE-SVC-ZTQKF64YG2DSI7SY -j KUBE-SEP-KPI6I3B54SPPUXJ2

    :KUBE-SEP-A7VB3EEHPCZHU76Q - [0:0]
    -A KUBE-SEP-A7VB3EEHPCZHU76Q -s 100.125.192.6/32 -j KUBE-MARK-MASQ
    -A KUBE-SEP-A7VB3EEHPCZHU76Q -p tcp -m tcp -j DNAT --to-destination 100.125.192.6:8080

    :KUBE-SEP-KPI6I3B54SPPUXJ2 - [0:0]
    -A KUBE-SEP-KPI6I3B54SPPUXJ2 -s 100.98.136.0/32 -j KUBE-MARK-MASQ
    -A KUBE-SEP-KPI6I3B54SPPUXJ2 -p tcp -m tcp -j DNAT --to-destination 100.98.136.0:8080
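The 0.5 probability on the first KUBE-SVC rule followed by an unconditional second rule gives each of the two endpoints half the traffic. As far as I understand it, with n endpoints the rules carry probabilities 1/n, 1/(n-1), and so on, with the last rule unconditional. The sketch below works out the effective share per endpoint under that assumption; the function names are made up for this example.

```python
from fractions import Fraction


def rule_probabilities(n_endpoints: int) -> list[Fraction]:
    """Per-rule match probability: 1/n, 1/(n-1), ..., 1 (last rule always matches)."""
    return [Fraction(1, n_endpoints - i) for i in range(n_endpoints)]


def effective_shares(rule_probs: list[Fraction]) -> list[Fraction]:
    """Chance that each rule is the one that finally takes the packet."""
    shares, remaining = [], Fraction(1)
    for p in rule_probs:
        shares.append(remaining * p)  # packet got this far and matched here
        remaining *= 1 - p            # packet fell through to the next rule
    return shares


# The two-endpoint service from the iptables-save output above.
print(effective_shares([Fraction(1, 2), Fraction(1)]))
# A three-endpoint service under the assumed 1/3, 1/2, 1 scheme.
print(effective_shares(rule_probabilities(3)))
```

Both cases come out as equal shares, which is why the per-rule probabilities are not simply 1/n on every line.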


The Treacherous path: Last stop ... finally


Objective : Reach the correct container on the correct node using the Pod IP

CNI - Container Network Interface

In depth article here

CNI - How to troubleshoot ?

Logs, logs and logs ...

A wide variety of add-ons is available for CNI, and each has its own way of implementing the network

Conclusion

- Kubernetes is not foolproof

- Kubernetes is not that complex either

- Networking is hard

- Networking is fun

- Abstracted Networking sucks

Thanks, I'm out!
