How Siemens SW Hub increased their test productivity by 38% using Cypress

Gleb Bahmutov

VP of Engineering

@bahmutov

WEBCAST

Murat Ozcan

Siemens SW Hub Test Lead

Contents

- Siemens Building Operator Cloud App

- Product, Test & Pipeline architectures

- Cypress plugins

- Recording fixtures

- Visual testing with Percy

- Retries

- API testing with cy.api

- cy.pipe and cy.waitUntil

- App actions

- Dashboard cost savings

- CI cost savings: Combinatorial configs with Cypress

- Key Benefits & Metrics

- Q & A sli.do event #cysiemens

Q & A

Ask your question and up-vote questions at:

https://www.sli.do/

Event code: #cysiemens

or use direct link: https://app.sli.do/event/2pc077rg

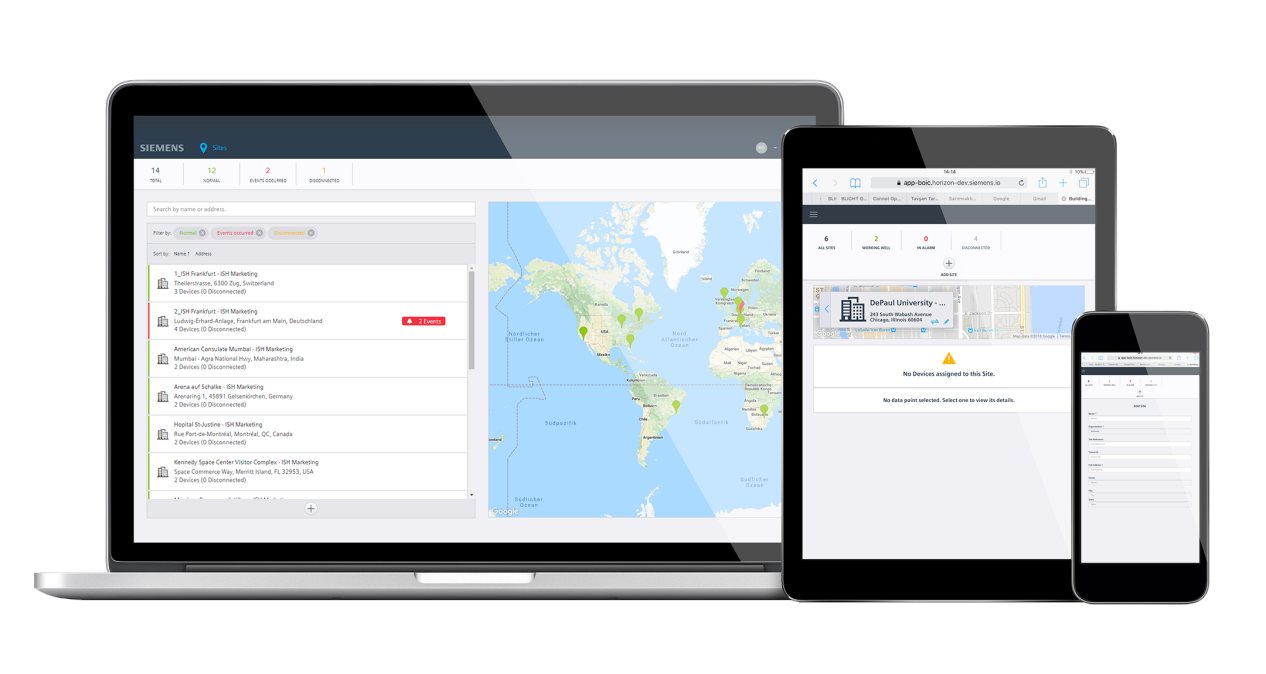

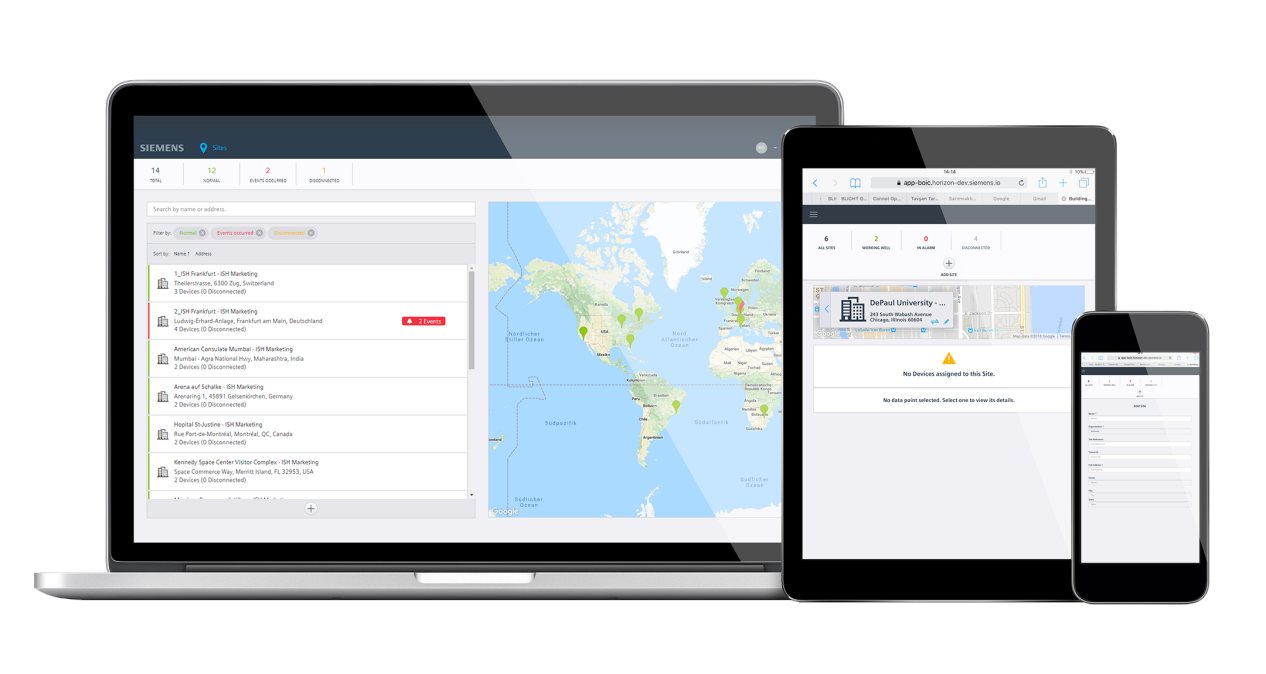

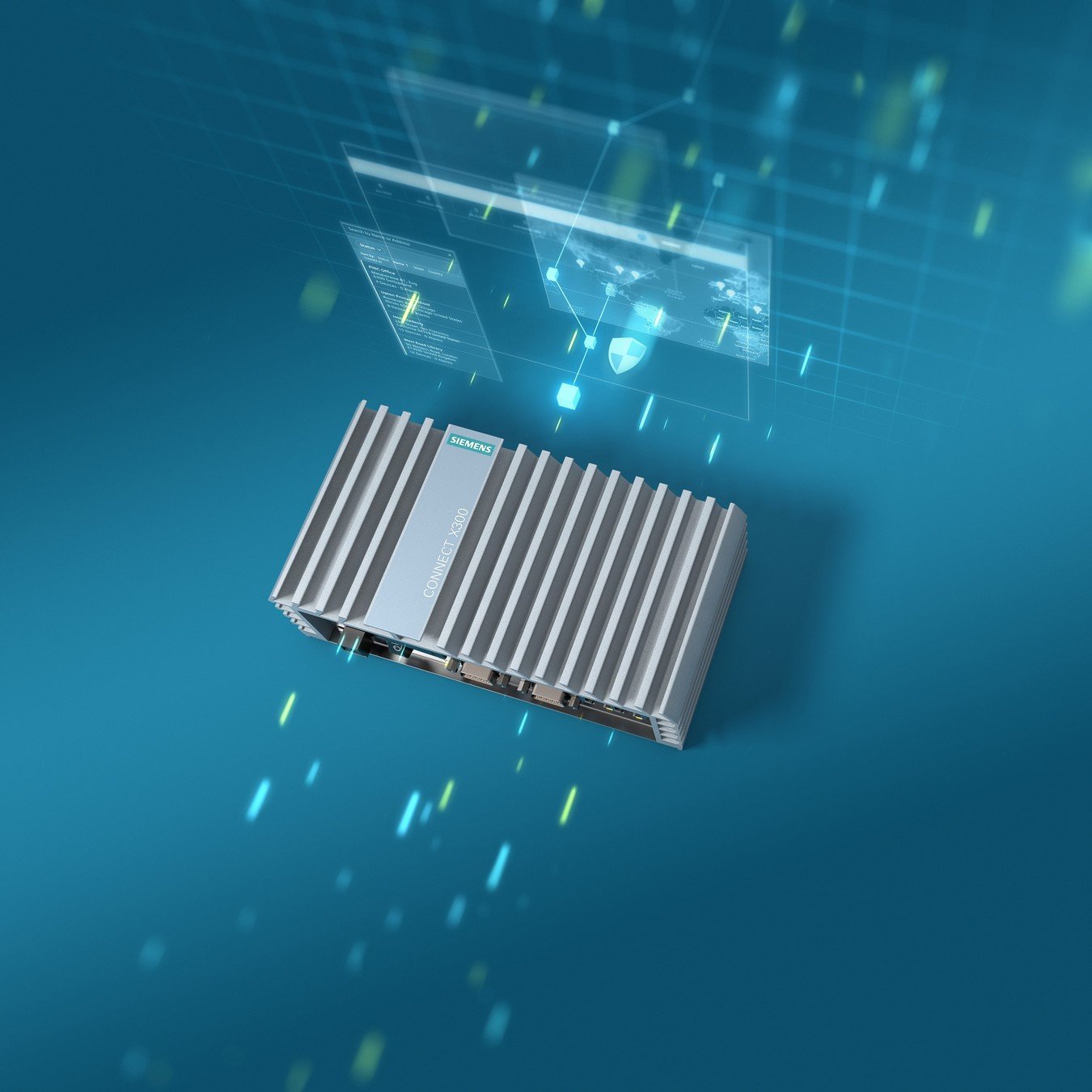

Flagship cloud application of Siemens Smart Infrastructure

SaaS solution

Remotely monitor, operate and troubleshoot buildings

(system under test)

Building Technologies Domain

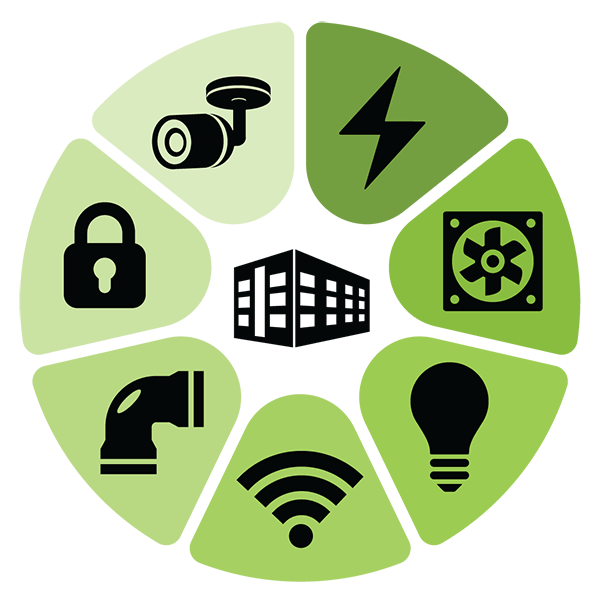

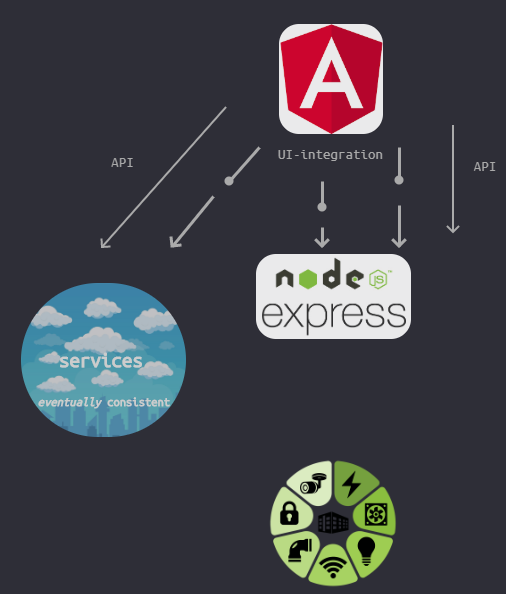

Product Architecture

services

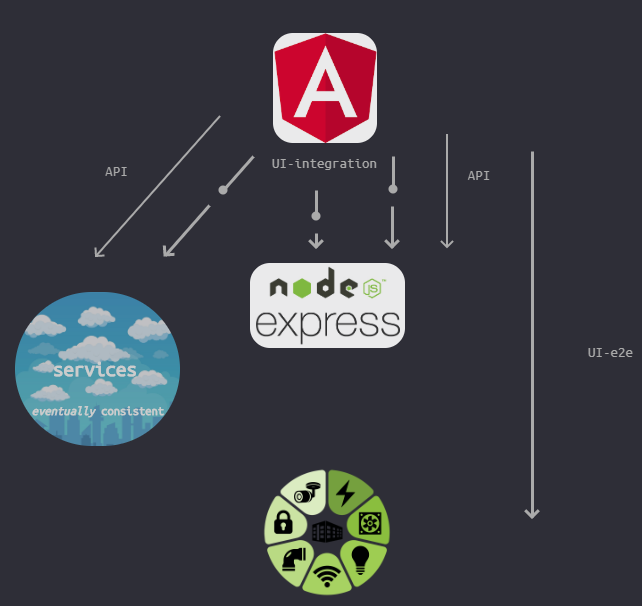

Test Architecture

Diagram layers: UI-integration (fixtures), UI-e2e (no fixtures), API tests, services

Test Architecture - prior to Cypress

Diagram layers: UI-e2e only

"We are not testing anymore; our job is maintaining scripts..."

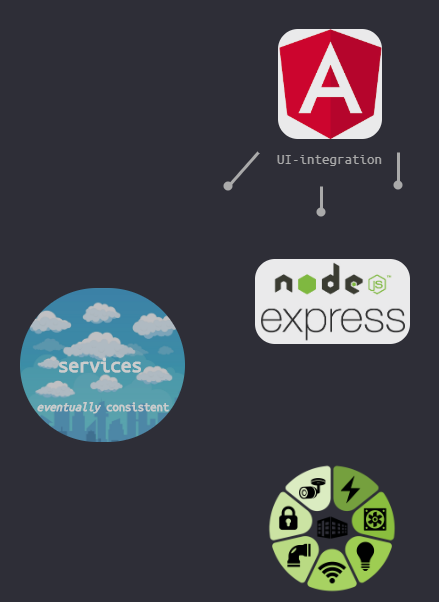

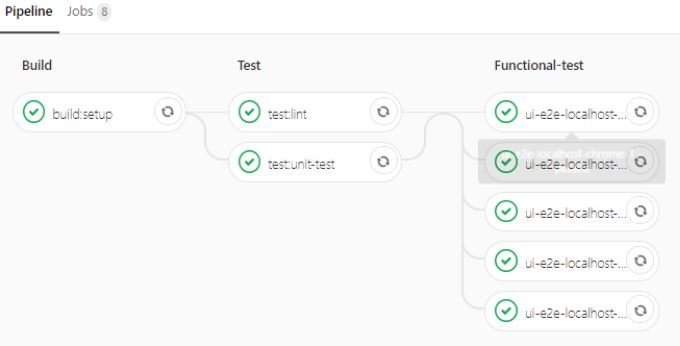

Pipeline Architecture

Keep calm & Gleb

Conditional fixtures

UI-integration (fixtures) vs. UI-e2e (no fixtures)

```javascript
// stub-services.js
export default function () {
  cy.server();
  cy.route('/api/../me', 'fx:services/me.json').as('me_stub');
  cy.route('/api/../permissions', 'fx:services/permissions.json')
    .as('permissions_stub');
  // Lots of fixtures ...
}
```

```javascript
// spec file
import stubServices from '../../support/stub-services';

/** isLocalHost, isDev, isInt etc. are config environment checkers */
const isLocalHost = () => Cypress.env('ENVIRONMENT') === 'localhost';

// ...

// Cypress commands can only be queued from inside a hook or a test
beforeEach(() => {
  // stub the services only when the UI runs on localhost
  if (isLocalHost()) {
    stubServices();
  }
});
```

Environments: branches, development, staging, production

How do we control what we stub? Check out cypress-skip-test.
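The spec comment mentions a family of environment checkers (isLocalHost, isDev, isInt, ...). A minimal sketch of generating them from one place, assuming the `ENVIRONMENT` values `localhost` and `development` used in this deck's Cypress config files; `makeEnvCheckers` and its `getEnv` parameter are hypothetical names, and an `isInt` checker would need the int environment's value, which the deck does not show.

```javascript
// Hypothetical helper, not part of Cypress: builds isLocalHost / isDev style
// checkers from one env getter, so each spec does not repeat string comparisons.
function makeEnvCheckers(getEnv) {
  // each checker reads the env lazily, at the moment it is called
  const is = (name) => () => getEnv('ENVIRONMENT') === name;
  return {
    isLocalHost: is('localhost'),
    isDev: is('development'),
  };
}

// A plain object stands in for Cypress.env here; in a spec you would pass
// (key) => Cypress.env(key) instead.
const fakeEnv = { ENVIRONMENT: 'localhost' };
const env = makeEnvCheckers((key) => fakeEnv[key]);
console.log(env.isLocalHost(), env.isDev()); // true false
```

Because the checkers read the environment when called rather than when created, the same object stays correct if a config file changes `ENVIRONMENT`.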

Individual Branches (Pull Requests)

package.json (scripts)

```json
{
  "localhost-cy:run-ci": "server-test remote-dev http-get://localhost:4200 cypress:run-ci",
  "remote-dev": "ng serve -o --proxy-config proxy.config.remote-dev.json --aot",
  "cypress:run-ci": "cypress run --record --parallel --group localhost --config-file localhost.json"
}
```

server-test (from start-server-and-test) starts the server, waits for the URL, then runs the test command.

localhost.json (Cypress config file)

```json
{
  "baseUrl": "http://localhost:4200",
  "env": {
    "ENVIRONMENT": "localhost"
  },
  "testFiles": ["ui-tests/*"]
}
```

.gitlab-ci-tests.yml

```yaml
## PARALLEL ui tests on branches (localhost in pipeline)
.job_template: &ui-localhost
  image: cypress/browsers:node12.6.0-chrome77
  stage: functional-test
  only:
    - branches
  script:
    - yarn cypress:verify
    - yarn localhost-cy:run-ci

# actual job definitions
ui-localhost-1:
  <<: *ui-localhost

ui-localhost-2:
  <<: *ui-localhost
# ...
```


Development - runs all tests (no hardware)

package.json (scripts)

```json
{
  "cypress:record-parallel-all": "cypress run --record --parallel"
}
```

development.json (Cypress config file)

```json
{
  "baseUrl": "https://app-dev-url.cloud",
  "env": {
    "ENVIRONMENT": "development"
  },
  "testFiles": ["ui-tests/*", "service-tests/*"]
}
```

.gitlab-ci-tests.yml

```yaml
## PARALLEL ui tests on development (replace 'development' with 'staging'...)
.job_template: &ui-e2e-development
  image: cypress/browsers:node12.6.0-chrome77
  stage: functional-test
  only:
    - master
  script:
    - yarn cypress:verify
    - yarn cypress:record-parallel-all --config-file development.json --group ui-and-services

# actual job definitions
ui-e2e-dev-1:
  <<: *ui-e2e-development

ui-e2e-dev-2:
  <<: *ui-e2e-development
```

Hardware & Cron jobs

Issue Isolation!


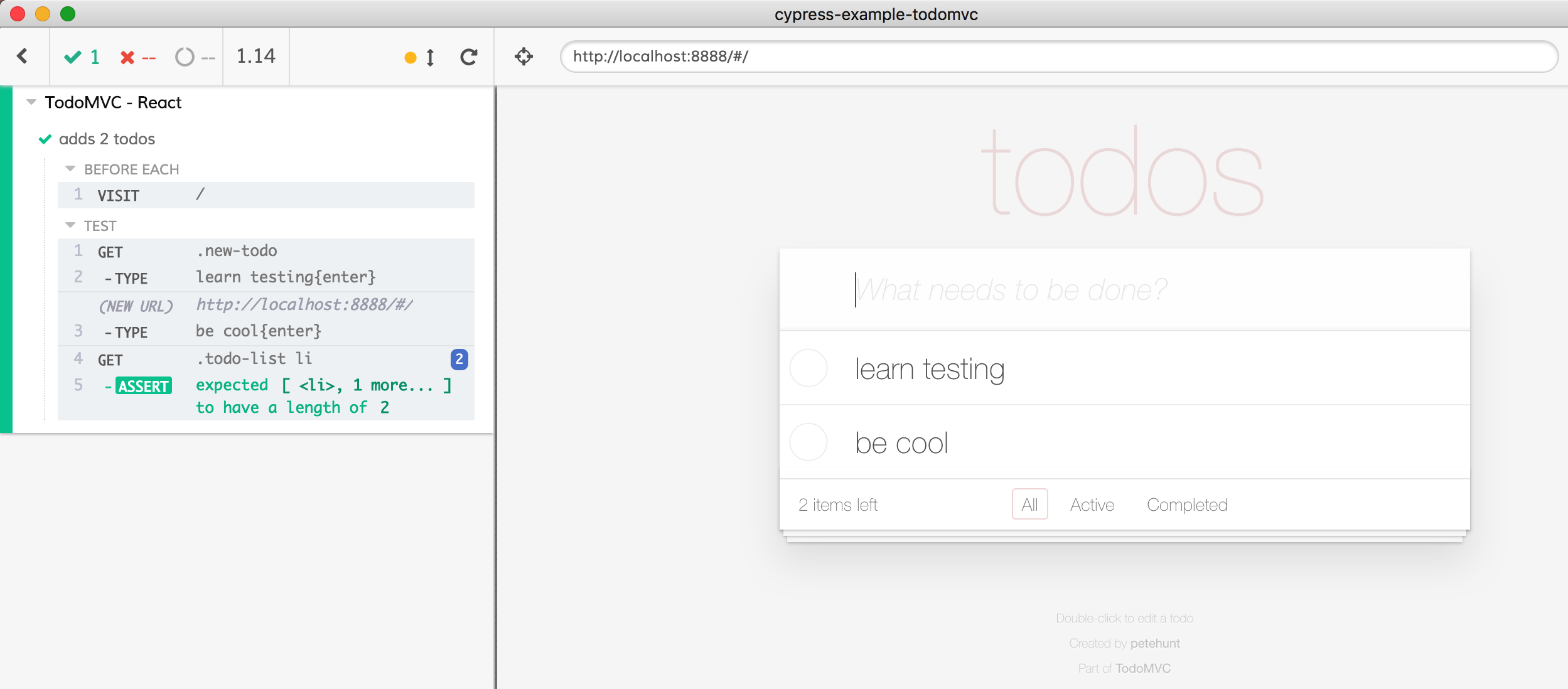

Recording fixtures

Where do we get all our mocks for fixtures?

We do not want to manually copy and save them

We want to record them as the test runs against a real API

Recording fixtures

```javascript
describe('top describe block', function () {
  before(function () { /* setup */ });

  const isRecord = false; // optional boolean flag for recording
  const xhrData = []; // an empty array to hold the recorded data
  const path = './cypress/fixtures/gateway/yourFixtureName.json';

  // if recording, after the tests run, create a fixture file with the recorded data
  // (hooks must be registered at the describe level, not inside an it())
  if (isRecord) {
    after(function () {
      cy.writeFile(path, xhrData);
      cy.log(`Wrote ${xhrData.length} XHR responses to local file ${path}`);
    });
  }

  it('your test', function () {
    cy.server({
      // if recording, save the response data in the array
      onResponse: (response) => {
        if (isRecord) {
          const url = response.url;
          const method = response.method;
          const data = response.response.body;
          // push a new entry into the xhrData array
          xhrData.push({ url, method, data });
        }
      },
    });

    if (isRecord) {
      // this route acts as a 'filter' for the data you want to record:
      // you can specify the methods and routes that are recorded
      cy.log('recording!');
      cy.route({
        method: 'GET',
        url: '*',
      });
    } else {
      // (optional) replay: use the pre-recorded fixtures;
      // once fixtures are recorded, you are free to use cy.route however you want
      cy.fixture('gateway/yourFixtureName').then((recorded) => {
        recorded.forEach(({ method, url, data }) => cy.route(method, url, data));
      });
    }

    // this is where your test goes
  });
});
```
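The record/replay bookkeeping above is plain data handling, so it can be exercised outside Cypress. A minimal sketch, assuming the `{ url, method, data }` entry shape used in the spec and fake XHR objects in place of real ones; `createRecorder` and `latestByRoute` are hypothetical names, and de-duplicating by method + URL is our own addition rather than part of the original recipe.

```javascript
// Hypothetical helpers (not part of Cypress) mirroring the record/replay
// bookkeeping in the spec, so the data handling can be tried outside the browser.

// Returns an onResponse-style callback plus the array it fills.
function createRecorder(isRecord) {
  const entries = [];
  const onResponse = (xhr) => {
    if (isRecord) {
      // same entry shape as the spec: { url, method, data }
      entries.push({ url: xhr.url, method: xhr.method, data: xhr.response.body });
    }
  };
  return { entries, onResponse };
}

// Keeps only the last recorded response per method + url, so a URL requested
// several times during recording is replayed with a single route.
function latestByRoute(entries) {
  const byKey = new Map();
  for (const entry of entries) {
    byKey.set(`${entry.method} ${entry.url}`, entry);
  }
  return [...byKey.values()];
}

// Usage with fake XHR objects:
const recorder = createRecorder(true);
recorder.onResponse({ url: '/api/points', method: 'GET', response: { body: { state: 'activated' } } });
recorder.onResponse({ url: '/api/points', method: 'GET', response: { body: { state: 'updated' } } });
recorder.onResponse({ url: '/api/me', method: 'GET', response: { body: { name: 'test' } } });
const routes = latestByRoute(recorder.entries);
console.log(recorder.entries.length, routes.length); // 3 2
```

Keeping only the last response is a design choice: for state-change tests such as the activate & update challenge, separate fixture files per state may serve better.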

challenge: activate & update - hardware state change


```javascript
it('Activated -> update -> Updated', () => {
  mockActivatedState();
  /* Use UI to Update */
  mockUpdatedState();
  /* Assert network and UI */
});
```

challenge: update - hardware state change

Visual AI with Percy.io

```yaml
visual-ai-test:
  stage: nightly-test
  only:
    - schedules
    - tags
  image: cypress/browsers:node12.6.0-chrome77
  script:
    - yarn cypress:verify
    # specify test specs in the config json file
    - yarn percy exec cypress run --record --group visual-ai --config-file vis-test-suite.json
```

Check out https://on.cypress.io/visual-testing

How do you visually verify how a page actually looks?

example: Chart zoom factor

```javascript
cy.get('.track').invoke('attr', 'width').then((trackWidth) => {
  zoomIn(2);
  // non-visual tests always run
  checkScrollBarWidth(trackWidth, 2);
  // percy tests get ignored in regular CI jobs,
  // and get executed in Visual AI CI jobs
  cy.percySnapshot('chart zoomed in 2x');
});
```
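`checkScrollBarWidth` is not shown in the deck. A minimal sketch of the arithmetic such a non-visual check could perform, assuming the scrollbar thumb shows the visible fraction of the chart (track width ÷ zoom factor); `expectedThumbWidth`, `isThumbWidthOk` and the 1 px tolerance are hypothetical.

```javascript
// Hypothetical arithmetic for a helper like checkScrollBarWidth, assuming the
// scrollbar thumb width equals the track width divided by the zoom factor.
function expectedThumbWidth(trackWidth, zoomFactor) {
  // .invoke('attr', 'width') yields a string, so convert before dividing
  return Number(trackWidth) / zoomFactor;
}

// Allow a small pixel tolerance for rounding in the rendered chart.
function isThumbWidthOk(actualWidth, trackWidth, zoomFactor, tolerancePx = 1) {
  return Math.abs(Number(actualWidth) - expectedThumbWidth(trackWidth, zoomFactor)) <= tolerancePx;
}

console.log(expectedThumbWidth('800', 2)); // 400
console.log(isThumbWidthOk('400.5', '800', 2)); // true
```

A numeric check like this catches a wrong zoom level in every CI run, while the Percy snapshot covers what the numbers cannot, such as how the chart actually looks.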


How do you address test flake and ensure test confidence through growing pains?

How do you address false negatives caused by the pipeline, infrastructure, shared resources and lack of control?

How do you reveal sporadic defects?

```javascript
// retry only the test
it('should get a response 200 when it sends a request', function () {
  // mind the function scope and use of 'this'
  this.retries(2);
  // cy...( … );
});

// retry a full spec
describe('top level describe block', () => {
  // can use lexical scope with this one
  Cypress.env('RETRIES', 2);

  before(() => { // or beforeEach
    // these do not get repeated
  });

  // your tests...
});
```
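Conceptually, `this.retries(2)` means a failing test gets up to two extra attempts, three in total. A plain-JavaScript sketch of that loop, written as our own illustration and not how Cypress or its retry plugin implements it; `runWithRetries` and `flaky` are hypothetical.

```javascript
// Conceptual sketch only: run a test body, re-running it up to `retries`
// extra times if it throws, and report which attempt passed.
function runWithRetries(testFn, retries) {
  let lastError;
  for (let attempt = 1; attempt <= retries + 1; attempt++) {
    try {
      return { attempt, result: testFn(attempt) };
    } catch (err) {
      lastError = err; // remember the failure and try again
    }
  }
  throw lastError; // every attempt failed
}

// A flaky "test" that fails on its first two attempts.
const flaky = (attempt) => {
  if (attempt < 3) throw new Error(`sporadic failure on attempt ${attempt}`);
  return 'passed';
};

console.log(runWithRetries(flaky, 2)); // { attempt: 3, result: 'passed' }
```

Logging which attempt finally passed is what turns retries into a detector for sporadic defects rather than a way to hide them.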

consistency tests with cron jobs

$$$ failures !!

Sporadic defects

cy.request has retry-ability for 4 seconds

What if you need more insight from CI executions?

What if you need to retry for longer?

```javascript
it('your test', function () {
  cy.api(
    {
      method: 'GET',
      url: apiEndpoint(),
      headers: { Authorization: bearerToken },
      retryOnStatusCodeFailure: true, // under the hood Cypress retries the request up to 4 times
    },
    'should get a list', // can specify a sub-test name
    { timeout: 20000 } // and a timeout
  )
    .its('body.length')
    .should('be.greaterThan', 0); // will retry the assertion for 20 seconds
});
```

What cy.api adds: screenshots & video, sub-test names, timeouts.

Do not use it with a running UI; use cy.request for that.

Cypress has built-in Retry-ability for idempotent requests

How do you address race conditions and retry the actions that change application state? See www.cypress.io/blog/when-can-the-test-click/

```javascript
/* querySelectorAll() returns a static NodeList of DOM elements.
 * We use it to get the filtered list of elements without asserting on them. */
const getElements = (doc) => doc.querySelectorAll('.list-item');

/* If the function passed to pipe
 * resolves synchronously (doesn't contain Cypress commands)
 * AND returns a jQuery element,
 * AND pipe is followed by a cy.should,
 * then the function will be retried until the assertion passes or times out */
cy.document()
  .pipe(getElements)
  .should((filteredItems) => {
    expect(filteredItems[0]).to.be.visible;
  });
```

cy.pipe() is great for addressing client-side race conditions between the app and the test runner.

What if a race condition has an API request dependency?

Retry a button click using cy.pipe()

```javascript
// Ideal solution: if the button gets grayed out while the command is sent, use cy.pipe
// set up a jQuery function for cy.pipe(); $el here can be the command (check) button
const click = ($el) => $el.click();

// click the button; pipe retries the click until the assertion passes
cy.get('.btn-command')
  .pipe(click)
  .should(($el) => {
    expect($el).to.not.be.visible; // or grayed out, etc.
  });
```

When the button does not get grayed out, the test needs to be XHR-aware.

cy.waitUntil can help

```javascript
let requestStarted = false; // set the flag: did the command go out?

cy.route({
  method: 'POST',
  url: '**/pointcommands',
  // the flag is set to true IF the POST goes out, otherwise it stays false
  onRequest: () => (requestStarted = true),
}).as('sendCommand');

// waitUntil expects a function that returns a boolean result; we use the flag requestStarted
cy.waitUntil(
  () =>
    cy
      .get('.btn-command')
      .click() // we get the button and click it
      .then(() => requestStarted), // false until the POST goes out
  {
    timeout: 10000,
    interval: 1000,
    errorMsg: 'POST not sent within time limit',
  }
);

// if waitUntil is fulfilled, we wait for the POST alias at XHR level
cy.wait('@sendCommand');
```
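The `timeout`, `interval` and `errorMsg` options describe a polling loop: try, wait `interval`, try again, and give up with `errorMsg` once `timeout` has elapsed. A simplified, synchronous model of that loop on a simulated clock (no real waiting happens); `waitUntilSimulated` is a hypothetical name and this is not the cypress-wait-until implementation.

```javascript
// Simplified model of the waitUntil options (timeout, interval, errorMsg).
// Time is simulated: each loop iteration stands for one attempt, e.g. one click.
function waitUntilSimulated(check, { timeout, interval, errorMsg }) {
  for (let elapsed = 0; elapsed <= timeout; elapsed += interval) {
    if (check()) {
      return elapsed; // simulated milliseconds until the condition became true
    }
  }
  throw new Error(errorMsg);
}

// Example: the POST only goes out on the 4th click.
let clicks = 0;
const elapsedMs = waitUntilSimulated(
  () => {
    clicks += 1;
    return clicks >= 4; // stands in for the requestStarted flag
  },
  { timeout: 10000, interval: 1000, errorMsg: 'POST not sent within time limit' }
);
console.log(clicks, elapsedMs); // 4 3000
```

With `timeout: 10000` and `interval: 1000`, the model allows at most 11 attempts (at 0, 1000, ..., 10000 ms) before failing with the error message.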


Diagram layers: Tests / Page Objects / HTML UI / Application code (API ..., DB ...)

Cypress tests have direct access to your app: DOM, storage, location, cookies, network, app code

App actions: setup

```typescript
// Angular Component file example
/* setup:
   0. Identify the component in the DOM:
      inspect and find the corresponding <app.. tag,
      then find the component in src
   1. Insert the conditional */
constructor(
  /* ... */
) {
  /* if running inside Cypress tests, set the component
     (may need // @ts-ignore initially) */
  if (window.Cypress) {
    window.yourComponent = this;
  }
}
```

```typescript
// ../../support/app-actions.ts

/** yields the data property on your component */
export const getSomeListData = () =>
  yourComponent().should('have.property', 'data');

/** yields window.yourComponent */
export const yourComponent = () =>
  cy.window().should('have.property', 'yourComponent');
```

DevTools: see which properties the component exposes.

Component code: see which functions you can .invoke().
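The `window.Cypress` guard in the component constructor is what keeps app actions out of normal use. A minimal, framework-free sketch of the same guard, assuming plain objects in place of the browser `window`; `exposeForTests` is a hypothetical name, not an Angular or Cypress API.

```javascript
// Hypothetical helper mirroring the constructor conditional: expose a component
// instance on the window object only when the app runs inside Cypress.
function exposeForTests(win, name, component) {
  if (win.Cypress) {
    win[name] = component;
    return true;
  }
  return false; // normal users never get the handle
}

// Plain objects stand in for the browser window in this sketch.
const prodWindow = {};
const testWindow = { Cypress: {} };
const component = { data: [1, 2, 3] };
exposeForTests(prodWindow, 'yourComponent', component);
exposeForTests(testWindow, 'yourComponent', component);
console.log('yourComponent' in prodWindow, 'yourComponent' in testWindow); // false true
```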

challenge: component list

const invalidIcons = [ .. ];

const validIcons = [ .. ];

/** sets icons in the component based on the array*/

const setIcons = (icons) => {

appAction.getList().then(list => {

// we only want to display the array of icons

list.length = icons.length;

// set the component according to the array

for (let i = 0; i < list.length; i++) {

list[i].iconAttr = icon[i];

}

});

};

it('should check valid icons', () => {

// setup Component

setIcons(validIcons);

// assert DOM

cy.get(...);

// assert visuals

cy.percySnapshot('valid icons');

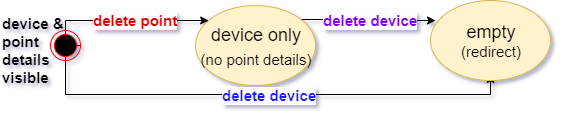

});challenge 2: deletion; hardware state change

Screen layout: left pane (Device & points), right pane (point details)

```javascript
it('Component test: delete right pane and then left', () => {
  /* tests a SEQUENCE not covered with UI tests
   * tests a COMBINATION of components */
  appAction.deleteRightPane();
  cy.window().should('not.have.property', 'rightPaneComponent');
  cy.window().should('have.property', 'leftPaneComponent');

  appAction.deleteLeftPane();
  cy.window().should('not.have.property', 'leftPaneComponent');
  cy.window().should('not.have.property', 'rightPaneComponent');
  cy.url().should('match', redirectRoute);
});
```

App Actions: powerful, but make sure you are still testing the user interface
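The `setIcons` helper in the component-list challenge truncates the component's list in place with `list.length = icons.length`; if there are more icons than list items, that leaves empty slots and `list[i].iconAttr` throws a TypeError. A hypothetical pure alternative (our own, not from the deck) that builds a new array and fails with a clear message instead; you would still need to assign the result back to the component.

```javascript
// Hypothetical pure alternative to setIcons: returns a new list with one entry
// per icon instead of truncating the component's list in place.
function withIcons(list, icons) {
  if (icons.length > list.length) {
    // the in-place version would hit an empty slot here and throw a TypeError
    throw new Error(`need ${icons.length} list items, got ${list.length}`);
  }
  // copy each item and set its icon, leaving the input list untouched
  return icons.map((icon, i) => ({ ...list[i], iconAttr: icon }));
}

const list = [{ id: 'a' }, { id: 'b' }, { id: 'c' }];
const shown = withIcons(list, ['ok', 'warn']);
console.log(shown.length, list.length); // 2 3
```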


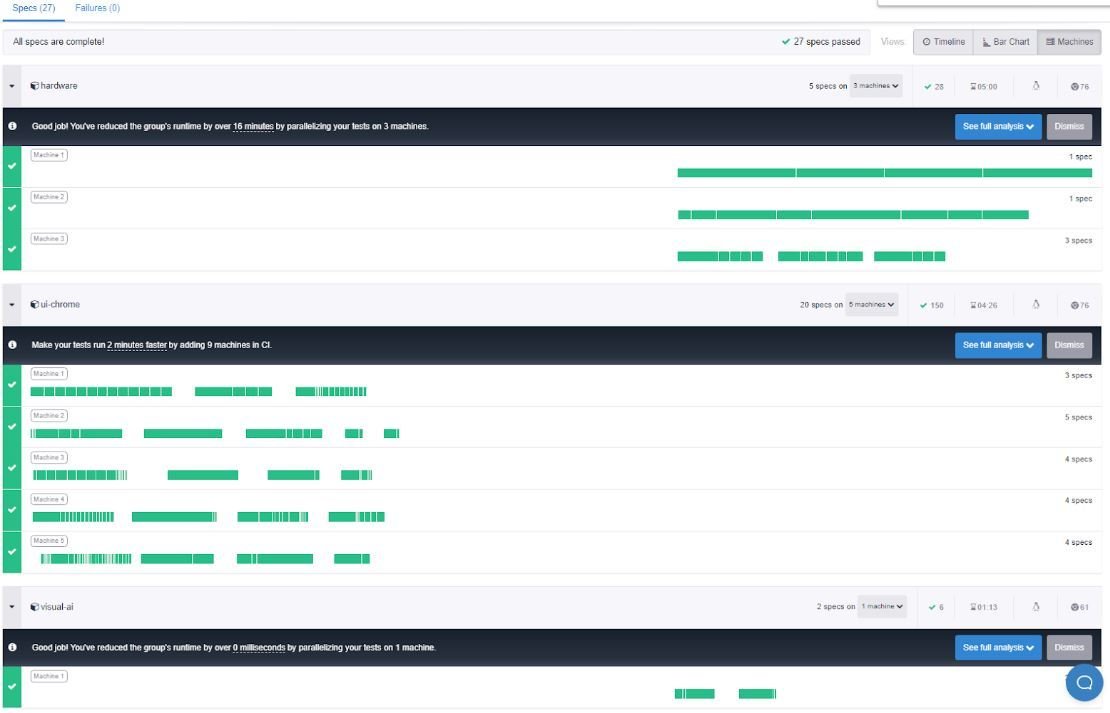

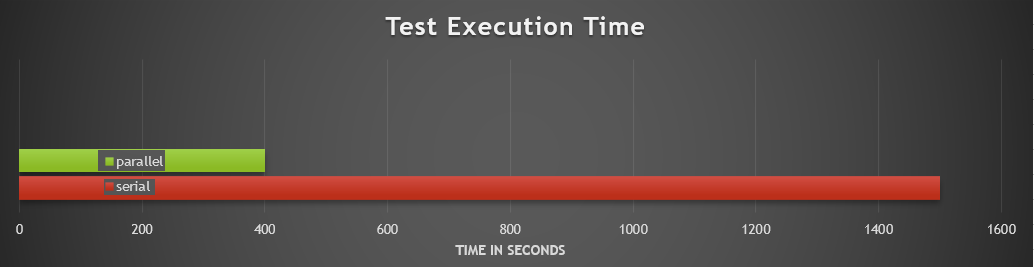

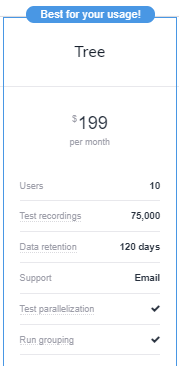

Dashboard cost savings


| Metric | Value |
|---|---|
| serial execution | 1500 seconds |
| parallel execution | 400 seconds |
| % gain (serial ÷ parallel) | 375% |
| time saved per execution | 1100 seconds |
| # of executions per quarter (Q) | 1000 pipelines |
| time saved per Q | 306 person-hours |
| total number of tests | 194 |
| test recordings per Q | ~194,000 |
| Dashboard cost per Q | $597.00 |
| Cost savings | > 290 person-hours |
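The table's figures follow from two formulas: speedup = serial time ÷ parallel time, and time saved per quarter = (serial − parallel) × executions. A quick check in JavaScript with the table's numbers; `speedupPercent` and `hoursSaved` are illustrative names.

```javascript
// Worked arithmetic behind the "Dashboard cost savings" table.
const SECONDS_PER_HOUR = 3600;

// Serial time as a percentage of parallel time (the table's "% gain").
function speedupPercent(serialSeconds, parallelSeconds) {
  return (serialSeconds / parallelSeconds) * 100;
}

// Hours saved over a number of pipeline executions.
function hoursSaved(serialSeconds, parallelSeconds, executions) {
  return ((serialSeconds - parallelSeconds) * executions) / SECONDS_PER_HOUR;
}

console.log(speedupPercent(1500, 400)); // 375
console.log(hoursSaved(1500, 400, 1000).toFixed(1)); // 305.6, shown as 306 in the table
console.log(194 * 1000); // 194000 test recordings per quarter
```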

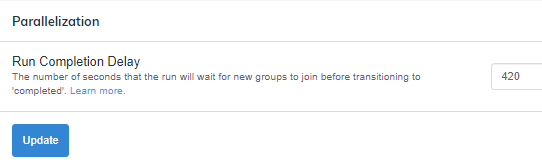

Dashboard cost savings: efficiency in CI

Very costly to manually optimize & maintain this continuously:

- (automatic) allocate specs to N machines
- (automatic) run specs from slowest to fastest
- (manual) split long specs into shorter ones
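Allocating specs to machines slowest-first is a scheduling problem. A minimal sketch of one common heuristic, greedy longest-processing-time-first (each spec, slowest first, goes to the machine that is currently least loaded). The Cypress Dashboard's own balancing logic is not described in this deck and may differ; `allocateSpecs` and the spec durations are made up for illustration.

```javascript
// Hypothetical illustration of "allocate specs to N machines, slowest first"
// using the greedy longest-processing-time-first heuristic.
function allocateSpecs(specDurations, machineCount) {
  const machines = Array.from({ length: machineCount }, () => ({ specs: [], total: 0 }));
  // run specs from slowest to fastest
  const slowestFirst = Object.entries(specDurations).sort((a, b) => b[1] - a[1]);
  for (const [spec, seconds] of slowestFirst) {
    // give the next spec to the machine that currently finishes earliest
    const target = machines.reduce((min, m) => (m.total < min.total ? m : min));
    target.specs.push(spec);
    target.total += seconds;
  }
  return machines;
}

// Example durations in seconds (made up for illustration).
const durations = { 'a.spec.js': 300, 'b.spec.js': 200, 'c.spec.js': 150, 'd.spec.js': 100, 'e.spec.js': 50 };
const plan = allocateSpecs(durations, 2);
console.log(plan.map((m) => m.total)); // [ 400, 400 ]
```

No allocation can finish faster than the single slowest spec, which is why the manual step of splitting long specs still matters.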


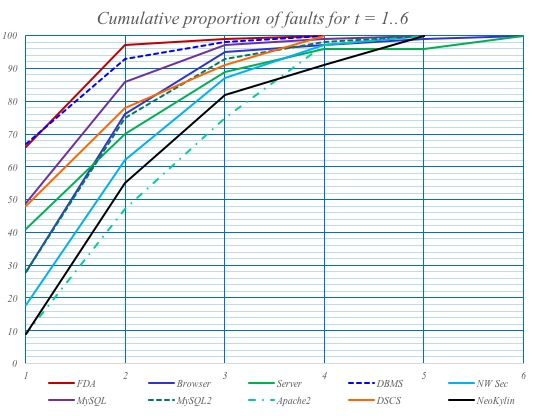

CI cost savings: Combinatorial Testing

"Proven method for more effective sw testing at a lower cost"

"Most sw bugs and failures are caused by one or two parameters"

"Testing parameter combinations can provide more efficient fault detection than conventional methods"

CI cost savings: Combinatorial Testing

check out slides 16-50

vary just browser for example

vary browser + test suite

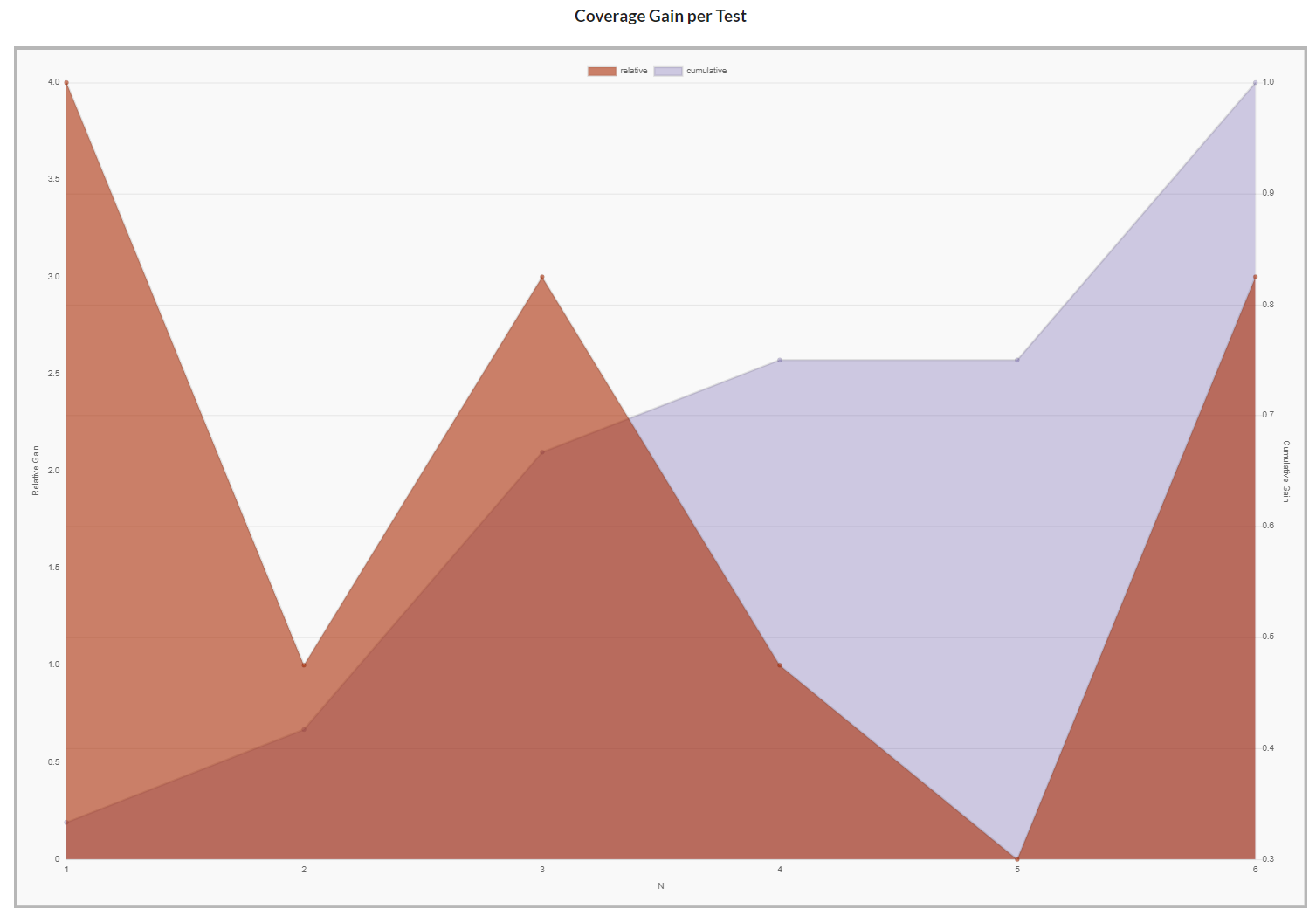

CI cost savings: Combinatorial configs with Cypress

Model CI

Parameters:

deployment_UI : { branch, development, staging }

deployment_API: { development, staging }

spec_suite: { ui_services_stubbed, ui_services, ui_services_hardware }

browser: { chrome, electron }

Constraints:

// on staging, run all tests

# spec_suite=ui_services_hardware <=> deployment_API=staging #

// match dev vs dev, staging vs staging, and when on staging use Chrome

# deployment_UI=development => deployment_API=development #

# deployment_UI=staging => deployment_API=staging #

# deployment_UI=staging && deployment_API=staging => browser=chrome #

// when on branch, stub the services

# deployment_UI=branch => spec_suite=ui_services_stubbed #

// do not stub the services when on UI development

# deployment_UI=development => spec_suite!=ui_services_stubbed #

| deployment_UI | deployment_API | spec_suite | browser |

|---|---|---|---|

| branch | dev | ui_services_stubbed | chrome |

| branch | dev | ui_services_stubbed | electron |

| dev | dev | ui_services | chrome |

| dev | dev | ui_services | electron |

| staging | staging | ui_services_hardware | chrome |

36 exhaustive configs

5 combinatorial configs

--record --parallel (375% gain for us)

--config-file branch.json/dev.json/stag.json

server-test remote-dev / remote-int

--spec 'ui-tests/*' or use config file

--browser chrome / electron / firefox

(extra: --group group1 / group2 / group3)

3 × 2 × 3 × 2 = 36
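The constraints in the model can be checked mechanically. A minimal sketch, assuming each constraint is written as a predicate over one config (`cartesian`, `implies` and `iff` are our own helper names): it enumerates all 36 combinations and keeps those that satisfy every constraint. In this model the constraints alone already narrow the space to the 5 configs in the table.

```javascript
// Cartesian product of parameter values: one object per full combination.
function cartesian(params) {
  return Object.entries(params).reduce(
    (combos, [name, values]) =>
      combos.flatMap((combo) => values.map((value) => ({ ...combo, [name]: value }))),
    [{}]
  );
}

const implies = (a, b) => !a || b; // "a => b"
const iff = (a, b) => a === b; // "a <=> b"

const params = {
  deployment_UI: ['branch', 'development', 'staging'],
  deployment_API: ['development', 'staging'],
  spec_suite: ['ui_services_stubbed', 'ui_services', 'ui_services_hardware'],
  browser: ['chrome', 'electron'],
};

const constraints = [
  // on staging, run all tests
  (c) => iff(c.spec_suite === 'ui_services_hardware', c.deployment_API === 'staging'),
  // match dev vs dev, staging vs staging, and when on staging use Chrome
  (c) => implies(c.deployment_UI === 'development', c.deployment_API === 'development'),
  (c) => implies(c.deployment_UI === 'staging', c.deployment_API === 'staging'),
  (c) => implies(c.deployment_UI === 'staging' && c.deployment_API === 'staging', c.browser === 'chrome'),
  // when on branch, stub the services
  (c) => implies(c.deployment_UI === 'branch', c.spec_suite === 'ui_services_stubbed'),
  // do not stub the services when on UI development
  (c) => implies(c.deployment_UI === 'development', c.spec_suite !== 'ui_services_stubbed'),
];

const exhaustive = cartesian(params);
const valid = exhaustive.filter((config) => constraints.every((holds) => holds(config)));
console.log(exhaustive.length, valid.length); // 36 5
```

Adding `spot_check` and `firefox` to the parameter lists plus `iff(c.browser === 'firefox', c.spec_suite === 'spot_check')` to the constraints gives 72 combinations and 6 valid ones, matching the Firefox model.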

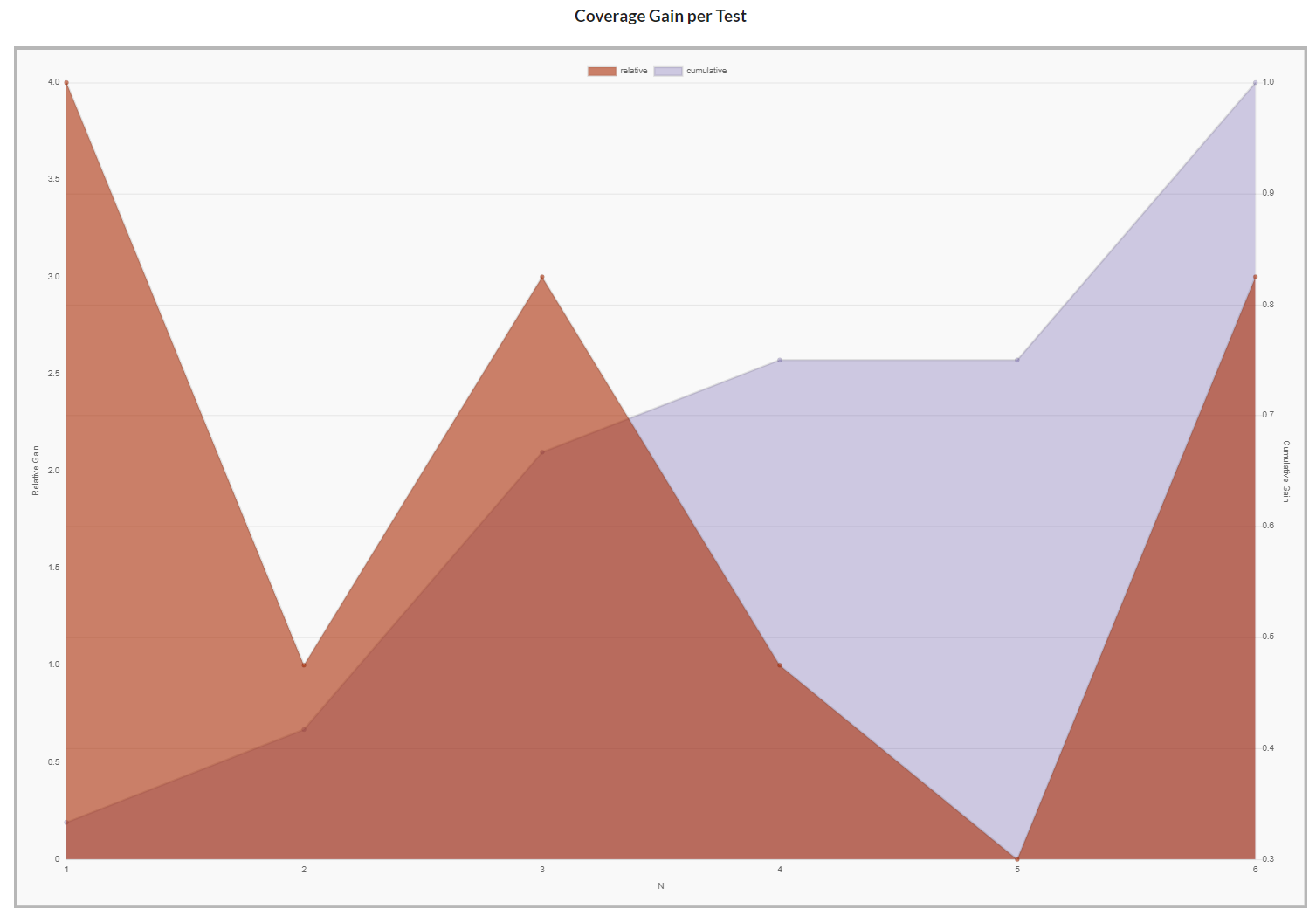

CI cost savings: adding Firefox and spot-checks

Model CI

Parameters:

deployment_UI : { branch, development, staging }

deployment_API: { development, staging }

spec_suite: { ui_services_stubbed, ui_services, ui_services_hardware, spot_check}

browser: { chrome, electron, firefox }

Constraints:

// one extra constraint for firefox spot checks

# browser=firefox <=> spec_suite=spot_check #

// on staging, run all tests

# spec_suite=ui_services_hardware <=> deployment_API=staging #

// match dev vs dev, staging vs staging, and when on staging use Chrome

# deployment_UI=development => deployment_API=development #

# deployment_UI=staging => deployment_API=staging #

# deployment_UI=staging && deployment_API=staging => browser=chrome #

// when on branch, stub the services

# deployment_UI=branch => spec_suite=ui_services_stubbed #

// do not stub the services when on UI development

# deployment_UI=development => spec_suite!=ui_services_stubbed #

| deployment_UI | deployment_API | spec_suite | browser |

|---|---|---|---|

| branch | dev | ui_services_stubbed | chrome |

| branch | dev | ui_services_stubbed | electron |

| dev | dev | spot_check | firefox |

| dev | dev | ui_services | chrome |

| dev | dev | ui_services | electron |

| staging | staging | ui_services_hardware | chrome |

72 exhaustive configs

6 combinatorial configs

Combinatorial Coverage: CAmetrics

72 -> 6 -> 5 test runs
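CAmetrics measures how much t-way combinatorial coverage a set of test configurations achieves. A much smaller sketch in the same spirit (not CAmetrics' algorithm): collect the 2-way parameter-value pairs that appear in the 6 valid configs, then count how many of them the 5 configs actually run still cover. Rows are copied from the tables, with the spot-check suite written as `spot_check` as in the parameter model; `pairsOf` and `pairwiseCoverage` are hypothetical names.

```javascript
// All parameter-value pairs within one config, e.g. "browser=chrome|spec_suite=ui_services".
function pairsOf(config) {
  const keys = Object.keys(config).sort();
  const pairs = [];
  for (let i = 0; i < keys.length; i++) {
    for (let j = i + 1; j < keys.length; j++) {
      pairs.push(`${keys[i]}=${config[keys[i]]}|${keys[j]}=${config[keys[j]]}`);
    }
  }
  return pairs;
}

// How many of the pairs present in `reference` are still present in `selected`.
function pairwiseCoverage(reference, selected) {
  const target = new Set(reference.flatMap(pairsOf));
  const covered = new Set(selected.flatMap(pairsOf).filter((pair) => target.has(pair)));
  return { covered: covered.size, total: target.size };
}

const row = (deployment_UI, deployment_API, spec_suite, browser) =>
  ({ deployment_UI, deployment_API, spec_suite, browser });

// The 6 configs that satisfy the constraints.
const sixConfigs = [
  row('branch', 'dev', 'ui_services_stubbed', 'chrome'),
  row('branch', 'dev', 'ui_services_stubbed', 'electron'),
  row('dev', 'dev', 'spot_check', 'firefox'),
  row('dev', 'dev', 'ui_services', 'chrome'),
  row('dev', 'dev', 'ui_services', 'electron'),
  row('staging', 'staging', 'ui_services_hardware', 'chrome'),
];

// The 5 configs actually run: dev / dev / ui_services / electron is dropped.
const fiveConfigs = sixConfigs.filter(
  (c) => !(c.deployment_UI === 'dev' && c.browser === 'electron')
);

console.log(pairwiseCoverage(sixConfigs, fiveConfigs)); // { covered: 25, total: 27 }
```

The two uncovered pairs are `deployment_UI=dev` with `browser=electron` and `spec_suite=ui_services` with `browser=electron`; that is the risk accepted by dropping the sixth run.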

CI cost savings: Combinatorial configs with Cypress

All 6 configs that satisfy the constraints:

| deployment_UI | deployment_API | spec_suite | browser |

|---|---|---|---|

| branch | dev | ui_services_stubbed | chrome |

| branch | dev | ui_services_stubbed | electron |

| dev | dev | spot_check | firefox |

| dev | dev | ui_services | chrome |

| dev | dev | ui_services | electron |

| staging | staging | ui_services_hardware | chrome |

The 5 configs actually run (dev / dev / ui_services / electron dropped):

| deployment_UI | deployment_API | spec_suite | browser |

|---|---|---|---|

| branch | dev | ui_services_stubbed | chrome |

| branch | dev | ui_services_stubbed | electron |

| dev | dev | spot_check | firefox |

| dev | dev | ui_services | chrome |

| staging | staging | ui_services_hardware | chrome |

5 configs can cover browsers, test layers, deployments...

Significant CI cost reduction (from 72 to 5 runs), with acceptable risk



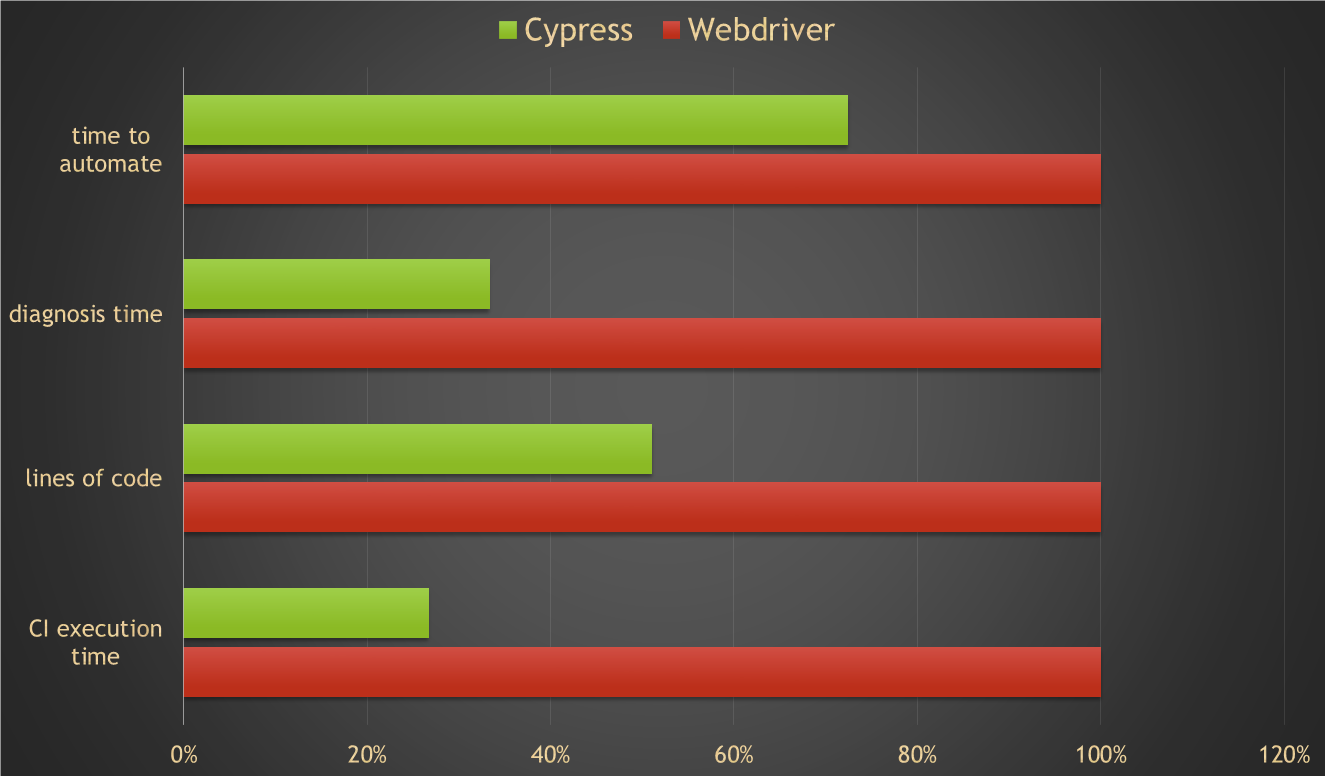

Key Benefits & Metrics

Lines of code in a year

Product v0 (Webdriver)

| Source code | Webdriver | test code / month |
|---|---|---|
| 10987 | 2324 | 194 |

Product v1 (Cypress)

| Source code | Cypress-legacy func. | Cypress - new func. | test code / month |
|---|---|---|---|
| 19956 | 1185 | 2026 | 268 |

38% higher productivity: 268 vs. 194 lines of test code per month

49% less code: 1185 lines of Cypress vs. 2324 lines of Webdriver for the legacy functionality

Key Benefits & Metrics

Defect detection & diagnosis

Tests do not fail if the app is working (no false negatives)

When tests fail, they drive us directly to the problem

Time-travel debug, source code debugging, CI video & screenshots

"Diagnosis effort reduced to 1/3, hands down."

Murat Ozcan

Key Benefits & Metrics

CI execution speed

| Webdriver | Cypress |

|---|---|

| 874 seconds | 334 seconds |

legacy ui-e2e (80% hardware)

261% gain

| Cypress serial | Cypress parallel |

|---|---|

| 1500 seconds | 400 seconds |

375% gain

Recall Dashboard value

Key Benefits & Metrics

What have we learned at Siemens

End-to-end tests can be painless with high value

Secret to useful Cypress testing is test isolation and an enriched test portfolio

Time for Q&A sli.do event #cysiemens


How Siemens SW Hub increased their test productivity by 38% using Cypress

By Murat Ozcan


Link to webcast: https://www.youtube.com/watch?v=aMPkaLOpyns

We cover how the Siemens SW Hub Chicago cloud team uses Cypress to run hundreds of tests across multiple platforms to ensure all systems are working correctly for their Smart Infrastructure cloud product, Building Operator. We will explore real-world advanced UI and API tests Siemens uses to ensure quality, and show how they are using community-created plugins for the Cypress Test Runner that extend testing capabilities for visual testing, test retries, and more. Finally, we’ll demonstrate how to run combinations of tests across multiple testing environments to guarantee every code iteration and deployed system passes the tests. We’ll conclude the webcast by showing how the Siemens SW Hub Cloud team:

- Reduced test code by 49%
- Increased productivity by 38%
- Sped up test execution by 375%
