CI/CD & Testing

In a real project

by Nadav Sinai,

nadav@misterbit.com

github.com/nadavsinai

June 2015

nadav@misterbit.co.il


Nadav Sinai

- Fullstack JS & PHP developer

- Deep in Angular.js since 2013

- Coded some big projects for Kaltura, Startapp, Reconcept

- Loves music & plays guitar

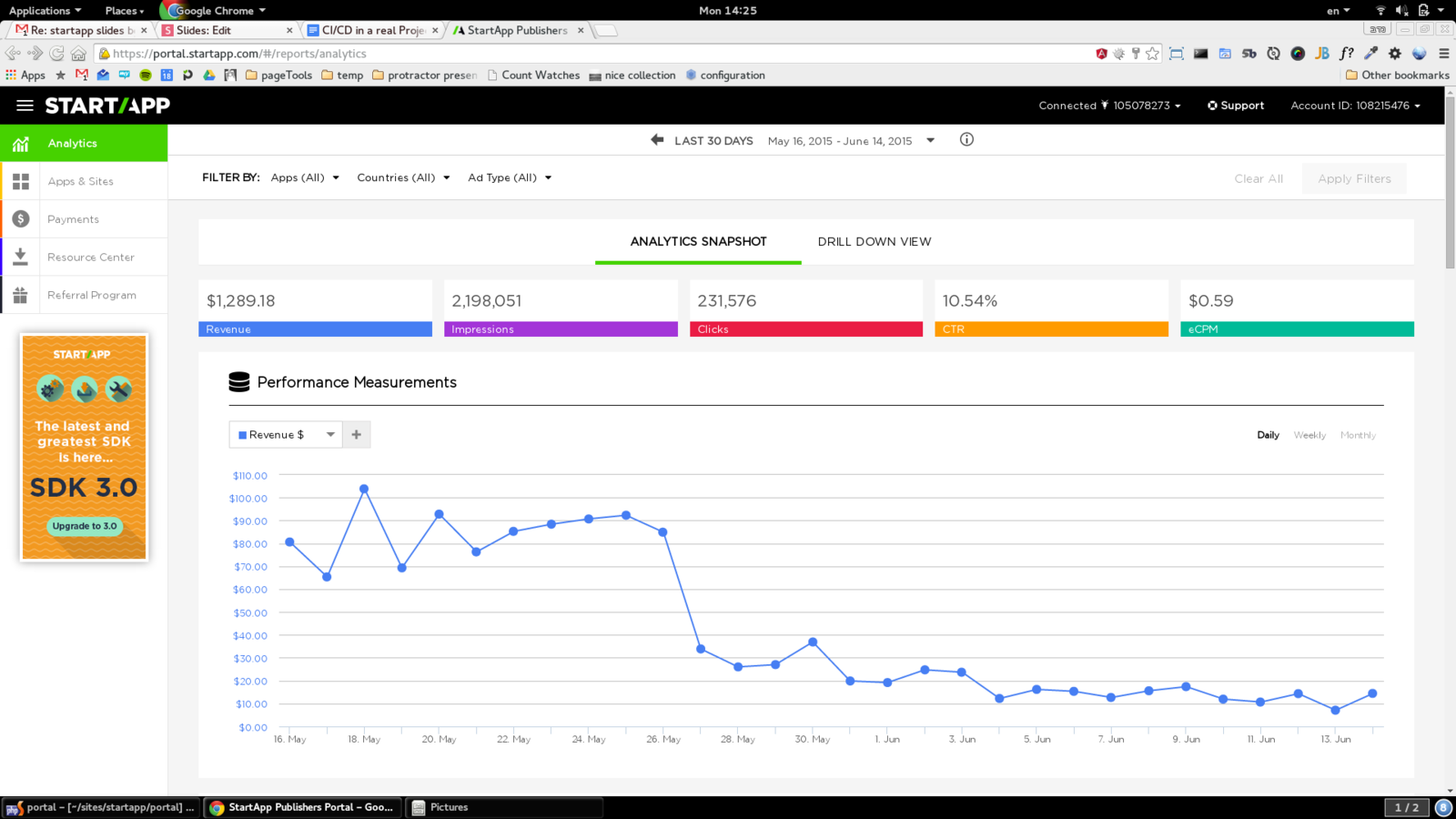

In StartApp

- Fullstack JS developer

- Tech lead for Client Side/AngularJS

- E2E Project lead

- CI/CD Project lead

CI - short for See Eye??

CD - compact disc???

Together

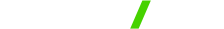

- Founded Dec 2010, ~100 employees globally across 6 offices

- Helping mobile app developers monetize their apps

- Provides an SDK for adding advertising to mobile apps

- Over 175,000 applications registered today

- Over 350M monthly active distinct users

StartApp Portal Stack

- Written in less than a year, 1-2 weekly builds

- Fullstack JS based on MEAN.js framework

- Runs on 3 load balanced node.js servers & 3 MongoDBs in a ReplicaSet, CDNified assets

- Currently serves the developers, will soon be expanded to also serve the advertisers.

How to keep a solid product while developing rapidly?

QA

Develop

QA

Production


CI

Develop

QA

Production

Code flow

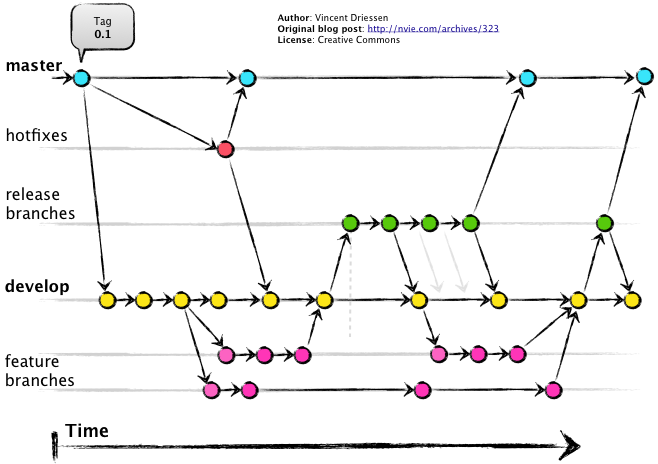

Git - central code repository

- Branch for each feature

- Release branches are made before releases

- Merge to develop/master only through a PR (except hotfixes)

- Merge with develop every day

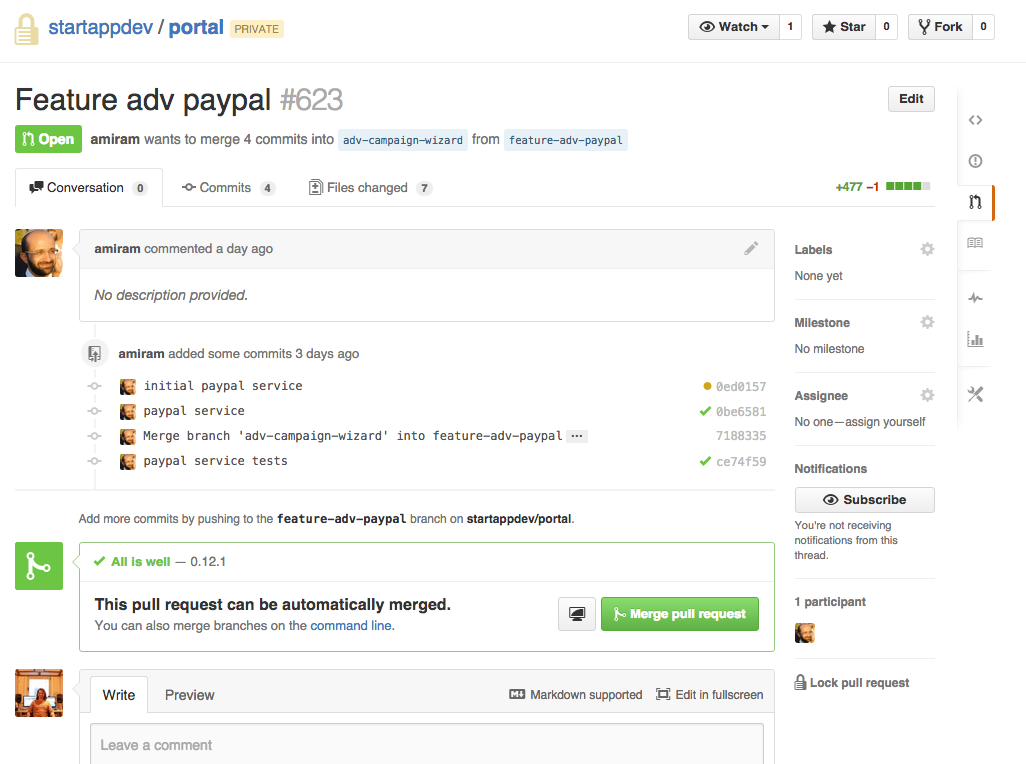

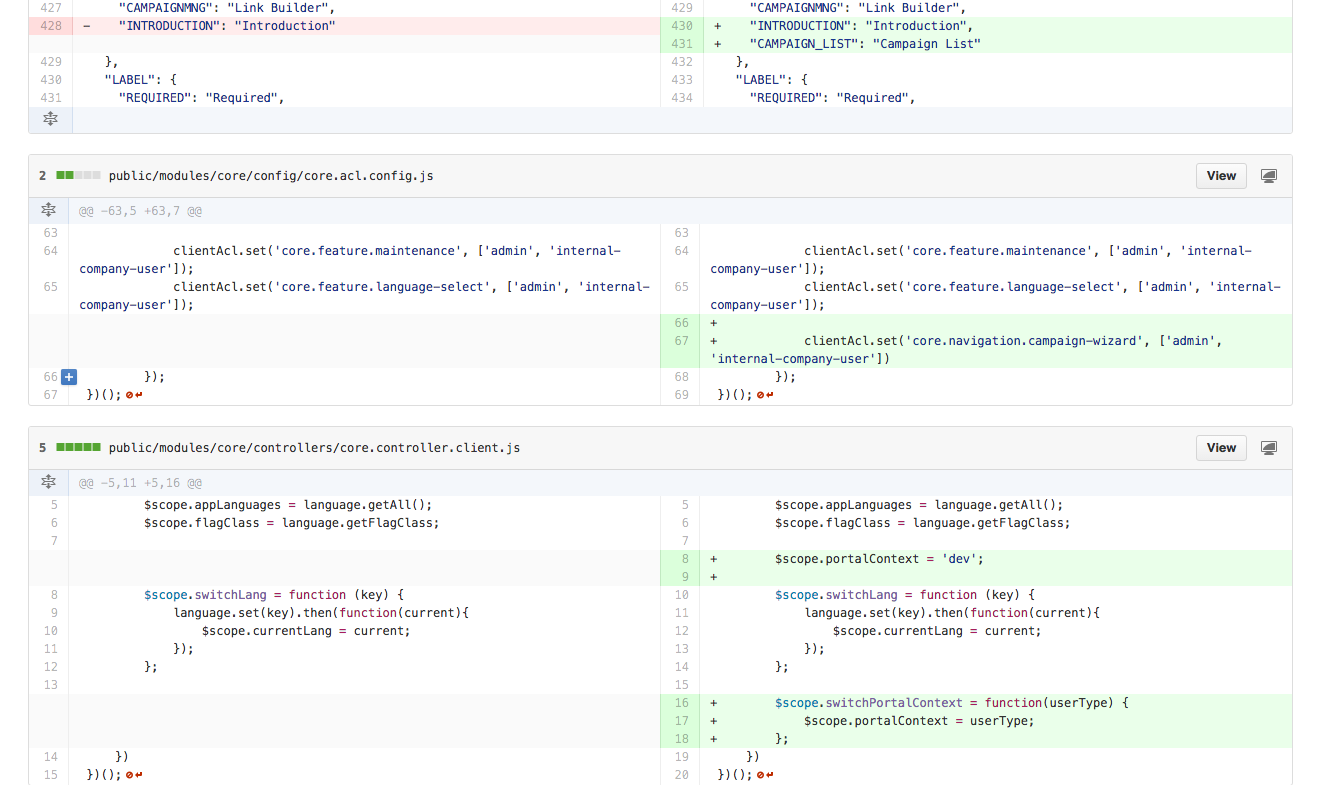

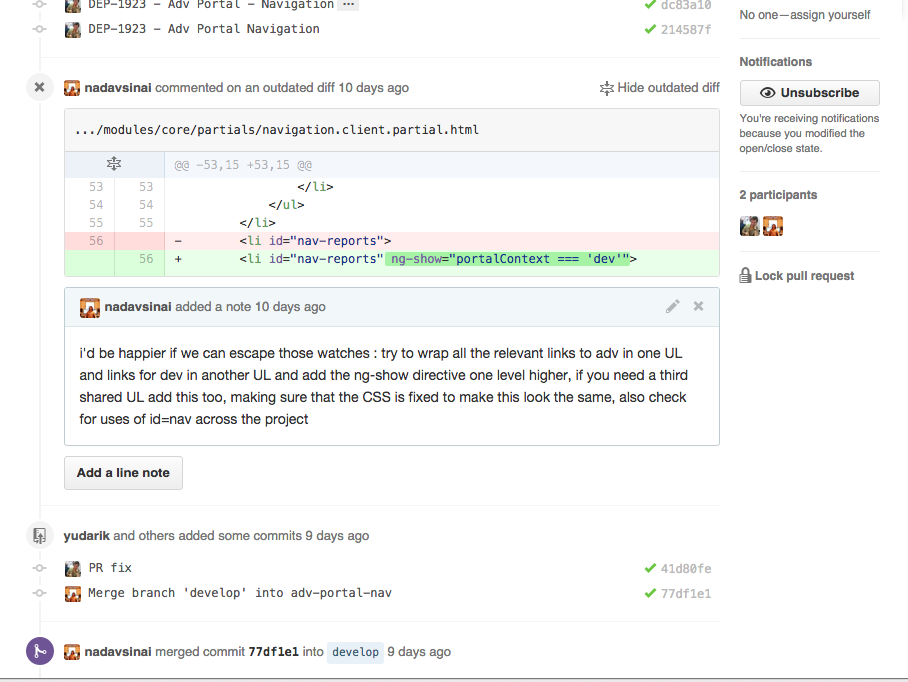

PR - Pull Request


CI - Continuous Integration

"The practice of frequently integrating one's new or changed code with the existing code repository." (wikipedia)

But integrating changes does not, by itself, tell you that things are still working well...

How can we know changes haven't broken anything?

Tests

Code Changed

Tests Run

Developer notified

CI - Continuous Integration

- The practice of frequently integrating changed branches with the mainline

- An automation setup that tests code integrity in response to repository change events

- Helps discover bugs more quickly and brings part of the QA phase into the development process.

- Allows rapid deployments while keeping stability.

- It can be extended in many ways - automatic deployment, docs generation, test coverage reports, performance testing, etc.
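The cycle described here (code changed, tests run, developer notified) can be sketched as a small handler for repository change events. This is only an illustration under stated assumptions: `handlePush`, `runTests`, `notify` and the shape of the event object are hypothetical names, not the API of any particular CI service.

```javascript
// Hypothetical sketch of a CI reaction to a repository change event.
// `runTests` and `notify` are injected so the flow can be tried without a real CI server.
function handlePush(event, runTests, notify) {
  // Run the test suite against the commit that triggered the event.
  var result = runTests(event.commit);
  var outcome = result.failed === 0 ? 'success' : 'failure';

  // Tell the author of the commit what happened.
  notify(event.author, {
    commit: event.commit,
    branch: event.branch,
    status: outcome,
    summary: result.passed + ' passed, ' + result.failed + ' failed'
  });
  return outcome;
}

// Usage with stand-in functions instead of a real test runner and mailer.
var sent = [];
var outcome = handlePush(
  { commit: 'abc123', branch: 'feature/x', author: 'dev@example.com' },
  function () { return { passed: 10, failed: 1 }; },
  function (to, report) { sent.push({ to: to, report: report }); }
);
console.log(outcome, '-', sent[0].report.summary);
```

Keeping the test runner and the notifier as parameters mirrors the way a CI service separates "what to run" from "whom to tell".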

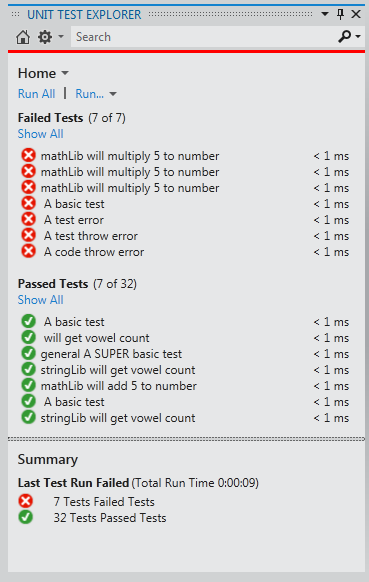

Types of tests

Unit Tests

- Currently there are about 1000 unit tests across server and client

- Coverage is the share of the code that is exercised by tests

- We aim to keep coverage at 80%.

- The 80/20 rule: 20 percent of the time gets 80 percent of the work done.
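As a rough illustration of what a coverage figure means, line coverage is the ratio of lines executed by the tests to the total number of lines. The function names below are made up for this sketch, and the line counts are invented; in practice these numbers come from a coverage tool's report.

```javascript
// Line coverage as a percentage: covered lines / total lines.
function coveragePercent(coveredLines, totalLines) {
  if (totalLines === 0) {
    return 100; // nothing to cover
  }
  // Multiply before dividing to avoid floating point noise on round numbers.
  return (coveredLines * 100) / totalLines;
}

// True when the measured coverage reaches the target (80 in this talk).
function meetsThreshold(coveredLines, totalLines, thresholdPercent) {
  return coveragePercent(coveredLines, totalLines) >= thresholdPercent;
}

console.log(coveragePercent(820, 1000));
console.log(meetsThreshold(820, 1000, 80));
console.log(meetsThreshold(790, 1000, 80));
```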

Unit Tests

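var _ = require('lodash'); // provides _.findKey

// Express-style middleware factory: reports an error for any non-200 status.
module.exports = function (logMessages) {
  return function (req, res, next) {
    if (res.statusCode && res.statusCode !== 200) {
      // Find the message code whose httpStatus matches the response status.
      var matchingMessageCode = _.findKey(logMessages, function (message) {
        return message.httpStatus === res.statusCode;
      });
      if (matchingMessageCode) {
        res.reportError(matchingMessageCode);
      } else {
        res.reportError('SA101'); // used when no message matches the status
      }
    } else {
      next();
    }
  };
};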

Unit Tests

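// Fixtures shared by the tests below.
var req, res, reportErrorSpy, errorHandlerMiddleware;

beforeEach(function (done) {
  reportErrorSpy = sinon.spy();
  req = {}; // the middleware never reads req, so an empty object is enough
  res = {
    statusCode: 200,
    reportError: reportErrorSpy
  };
  errorHandlerMiddleware = errorHandler(logMessagesMock);
  done();
});

it('Should continue on 200 status code', function (done) {
  var next = sinon.spy();
  errorHandlerMiddleware(req, res, next);
  next.called.should.be.true;
  done();
});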

Unit Tests

self.shortenTitle = function (title) {
  if (title.length > maxTitleLength) {
    // trim the string to the maximum length
    var trimmedString = title.substr(0, maxTitleLength);
    // re-trim if we are in the middle of a word
    .........
};

describe('checks for shortenTitle function', function () {
  it('when title is shorter than maxLength', function () {
    expect(ctrl.shortenTitle('short')).to.equal('short');
  });

  it('when title is longer than maxLength', function () {
    expect(ctrl.shortenTitle('longlonglong')).to.equal('longl...');
  });

  it('when title is longer and has space to naturally trim', function () {
    expect(ctrl.shortenTitle('long long long')).to.equal('long...');
  });
});

Unit Tests

Points learnt from writing unit tests:

- Load only the containing module - this proves the unit's independence and prevents unknown coupling.

- A unit test should prove the functionality of a single black box; checking the internal implementation is irrelevant.

- Budget the time you spend writing tests - use the 80/20 rule

Development Integration tasks

- default - runs compile + server + lint + watch

- before-commit - runs lint + client/server unit tests

- before-push - runs E2E tests locally

- test-client-tdd - runs client unit tests in watch mode

- test-server-tdd - runs server unit tests in watch mode
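Tasks like these are typically defined as Grunt alias tasks, where one name expands to a list of other tasks. The fragment below is a sketch, not the project's actual Gruntfile: `grunt.registerTask(name, taskList)` is Grunt's alias-task call, while the sub-task names (`compile`, `test-client`, `test-e2e` and so on) are assumed for illustration.

```javascript
// Hypothetical Gruntfile fragment: each developer task is an alias for a list of sub-tasks.
function registerDevTasks(grunt) {
  grunt.registerTask('default', ['compile', 'server', 'lint', 'watch']);
  grunt.registerTask('before-commit', ['lint', 'test-client', 'test-server']);
  grunt.registerTask('before-push', ['test-e2e']);
}

// In a real Gruntfile this function would be exported: module.exports = registerDevTasks;
// Here a stand-in grunt object records the aliases so the sketch runs without Grunt installed.
var registered = {};
registerDevTasks({
  registerTask: function (name, taskList) {
    registered[name] = taskList;
  }
});
console.log(Object.keys(registered).join(', '));
```

A developer would then run, for example, `grunt before-commit` before committing.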

E2E Tests

- Protractor

- Local and via BrowserStack

- Grunt tasks automate the E2E setup

- A large internal framework has been developed to allow:

  - Loading of known mock datasets

  - Working with users (captcha, verification, roles, etc.)

  - Creation of test data objects + expected view formatting

  - Quick navigation in the app instead of whole page reloads.

  - Easier test development (getting charts data, etc.)

E2E Tests

Points learnt from E2E tests development

- E2E is costly but important

- Protractor is tough to debug and dependent on the environment. Everything runs better on Linux.

- Although writing good tests requires the skills of a fullstack developer, good Page Objects (selectors) are the most important aspect of keeping the tests easy to maintain.

- Tests are slow to adapt to mobile/tablet versions, especially if you don't follow strict naming rules.

- The most important thing is to automate the "sanity" flow.
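A Page Object keeps all selectors for one screen in a single place, so a markup change means editing one file instead of every test. The sketch below assumes a hypothetical login screen and `data-e2e` attribute naming. `element`, `by.css`, `sendKeys` and `click` are Protractor's own calls; they are passed in as parameters here only so the object can be tried without a browser.

```javascript
// Hypothetical page object for a login screen.
// In a Protractor spec, `element` and `by` would be the globals Protractor provides.
function LoginPage(element, by) {
  this.email = element(by.css('[data-e2e="login-email"]'));
  this.password = element(by.css('[data-e2e="login-password"]'));
  this.submit = element(by.css('[data-e2e="login-submit"]'));
}

// Tests call this one method instead of repeating selectors.
LoginPage.prototype.login = function (email, password) {
  this.email.sendKeys(email);
  this.password.sendKeys(password);
  return this.submit.click();
};

// Stand-ins for `element` and `by` that record what a test would do.
var actions = [];
var fakeBy = { css: function (selector) { return selector; } };
function fakeElement(selector) {
  return {
    sendKeys: function (text) { actions.push(['type', selector, text]); },
    click: function () { actions.push(['click', selector]); }
  };
}

new LoginPage(fakeElement, fakeBy).login('dev@example.com', 'secret');
console.log(actions);
```

In a real spec the call would read `new LoginPage(element, by).login(...)`, and a strict naming rule for the selectors is what keeps such objects reusable across desktop and mobile layouts.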

CI Service Provider Choice

- Simplicity of setup/use

- Speed

- Cost

- Docker support (Shippable/CircleCI/Jenkins)

CI tasks

- Preparation

- Tests

- Combine test + coverage reports

- master branch adds:

  - Building the minified client

  - Test client in production mode

  - Make artifacts and upload to S3

script:
  - grunt test
  - if [ $BRANCH == "master" ]; then grunt build; fi
  - if [ $BRANCH == "master" ]; then grunt test-client-production; fi
  - if [ $BRANCH == "master" ]; then grunt compress:shippable_artifacts; fi

# deal with tests and coverage reports
after_script:
  - mkdir -p ./shippable/testresults
  - find . -maxdepth 1 -name "test-result*.xml" -exec cp {} ./shippable/testresults \;
  - cd ./shippable/testresults
  - python ../../app/cliscripts/merge-test-reports.py $(ls) > results.xml

# notify github of success and if in master upload artifacts
after_success:
  - if [ $BRANCH == "master" ]; then sudo pip install awscli; fi
  - export APP_VERSION=$(node app/cliscripts/version.js)
  - export TARGET_URL=s3://s3.startapp.com/Portal/artifacts/shippable/$APP_VERSION.tar.gz
  - if [ $BRANCH == "master" ]; then aws s3 cp ./portal.tar.gz $TARGET_URL --acl private; fi
  - eval curl "$GITHUB_HEADER" --data '\{\"state\":\"success\",\"context\":\"ci/shippable\",\"description\":\"$APP_VERSION\"}' -X POST https://api.github.com/repos/startappdev/portal/statuses/$COMMIT

CI Choices

- CI needs to be FAST to give quick feedback upon commits

  - E2E tests are automated only in releases (or locally)

- CI path needs to be RELIABLE

  - Double CI servers used.

- Artifact storage needs to be centralized

  - S3 used

- Delivery paths offered by cloud providers were too basic for us, so the CD Orchestrator was made

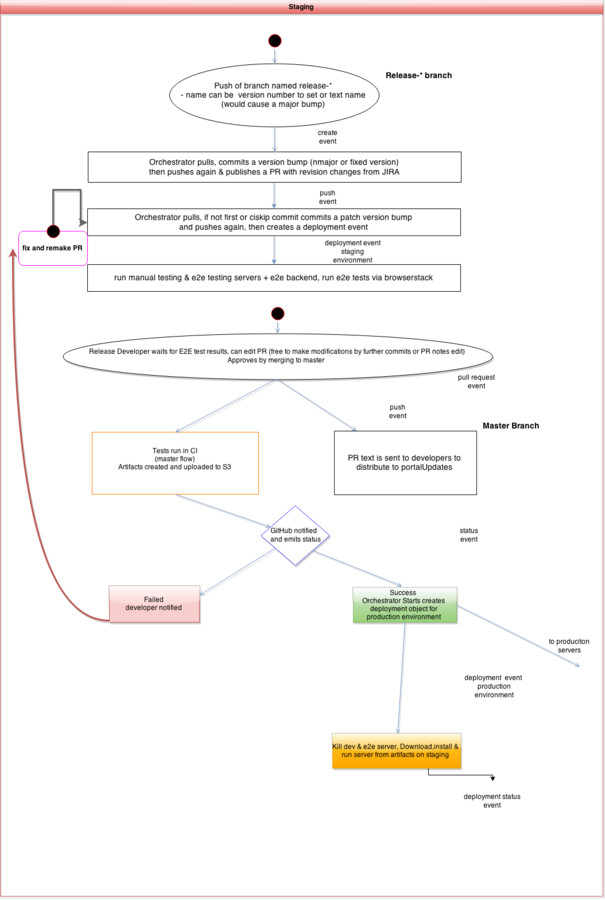

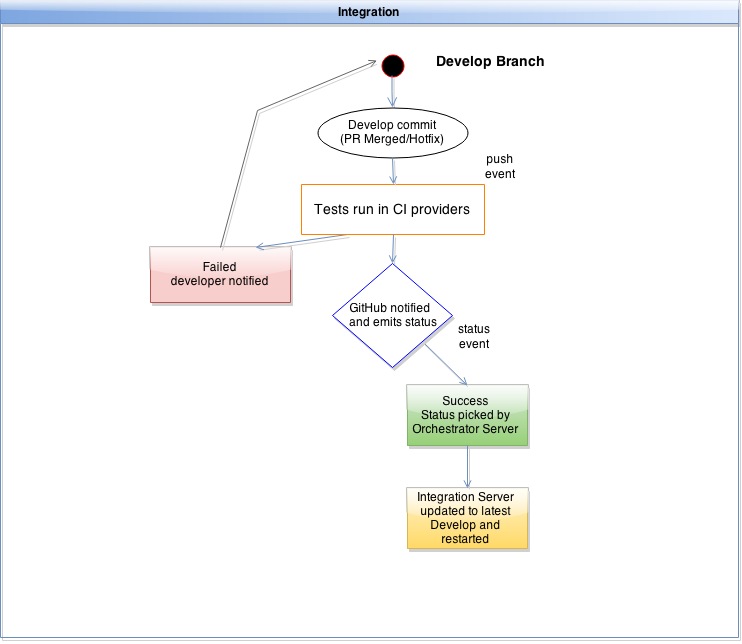

CD Orchestrator

- A separate project to aid deployment and other tasks

- Not necessarily Node-related

- Runs arbitrary tasks upon receipt of git hooks

- Highly configurable - queues, debouncing, conditions, error handling.

- In our setup it uses gulp to run tasks.

- Written in ES6 using Babel.
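The combination of conditions, debouncing and error handling can be sketched in a few lines. This is not the CD Orchestrator's code (which the slides describe as ES6 with gulp); it is a minimal ES5 illustration, and `createOrchestrator`, the rule shape, the event fields and the injectable `timers` object are all assumptions made for the example.

```javascript
// Hypothetical sketch: run matching tasks for a git hook, debounced per branch.
function createOrchestrator(options) {
  var timers = options.timers || {
    set: function (fn, ms) { return setTimeout(fn, ms); },
    clear: function (id) { clearTimeout(id); }
  };
  var pending = {}; // branch name -> handle of the scheduled run

  return function onHook(event) {
    // Conditions: keep only the rules that apply to this event.
    var matching = options.rules.filter(function (rule) {
      return rule.condition(event);
    });
    if (matching.length === 0) {
      return false;
    }
    // Debouncing: a newer event on the same branch replaces the scheduled run.
    if (pending[event.branch] !== undefined) {
      timers.clear(pending[event.branch]);
    }
    pending[event.branch] = timers.set(function () {
      delete pending[event.branch];
      matching.forEach(function (rule) {
        // Error handling: one failing task does not stop the others.
        try { rule.task(event); } catch (err) { options.onError(err, event); }
      });
    }, options.waitMs);
    return true;
  };
}

// Usage with fake timers so the example runs instantly.
var scheduled = [];
var fakeTimers = {
  set: function (fn) { scheduled.push(fn); return scheduled.length - 1; },
  clear: function (id) { scheduled[id] = null; }
};
var deployed = [];
var onHook = createOrchestrator({
  waitMs: 5000,
  timers: fakeTimers,
  rules: [{
    condition: function (e) { return e.branch === 'master'; },
    task: function (e) { deployed.push(e.commit); }
  }],
  onError: function (err) { console.error(err); }
});

onHook({ branch: 'master', commit: 'a1' });
onHook({ branch: 'master', commit: 'b2' });    // replaces the run scheduled for a1
onHook({ branch: 'feature/x', commit: 'c3' }); // no rule matches, ignored
scheduled.forEach(function (fn) { if (fn) { fn(); } });
console.log(deployed);
```

Debouncing matters here because several pushes or merges often arrive within seconds of each other, and only the last one needs to be deployed.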

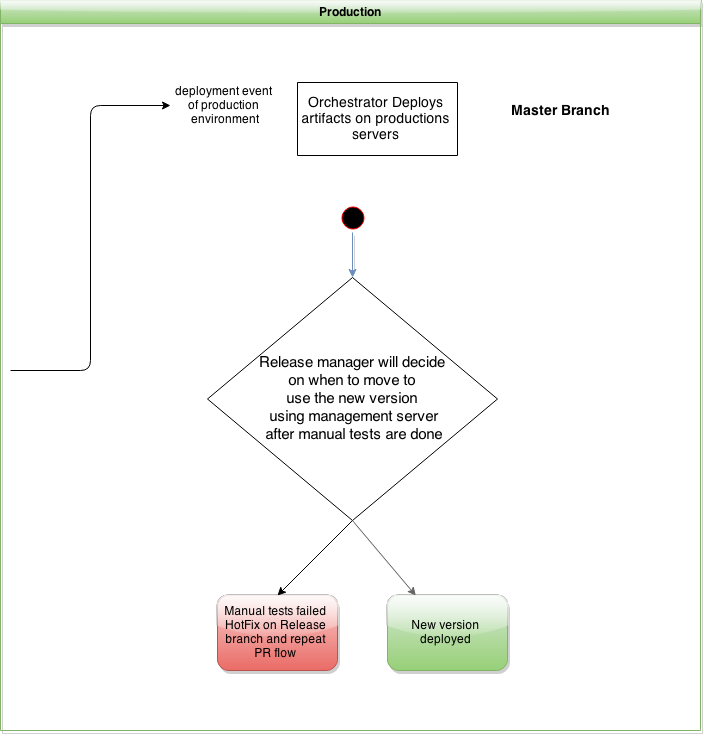

CD

Develop

Integration

Staging

Production

Thank you


Slides for the talk in FullStack JS IL
