Software Quality and Testing for Geophysical Models

Nic Hannah

ARC CoE Climate System Science

Breakaway Labs

Motivations for Quality

- Geophysical computational models and the science surrounding them are hugely important to society.

- The importance of climate science is ever increasing, and within this the role of software is growing. Climate science is now a computational science.

- As the importance of a system grows, the cost of failure increases and the need to measure and improve quality grows.

- Other scientists, policy makers, the public etc. are relying on us to engineer demonstrably high quality, credible models.

What is Software Quality?

It depends! Quality is probably determined by the user rather than the developer. I imagine high quality software to have few serious bugs and to be reliable, easy to maintain, efficient, and easy to test and understand.

Apart from performance, measuring quality is very difficult. Perhaps we can start by comparing models or versions against each other.

Where do we Stand?

- First problem: I don't think we know. We don't have processes in place to measure how good our software is.

- We do know our model code can be difficult to use and understand, badly documented and even buggy. *

- Engineering budgets are often secondary, e.g. we put fewer resources into testing and documentation than equivalent sized products in industry do.

* I think GFDL does particularly well in this respect, however there's more to do within the community. GFDL is in a good position to lead the way here.

Getting Practical

- Let's leave the difficult question of how to measure quality aside for now. We'll forge ahead by exploring some specific ways to find or avoid bugs/problems.

- We can learn from Software Engineering and Computer Science, but there are also unique challenges when working with numerical codes, as well as unique opportunities.

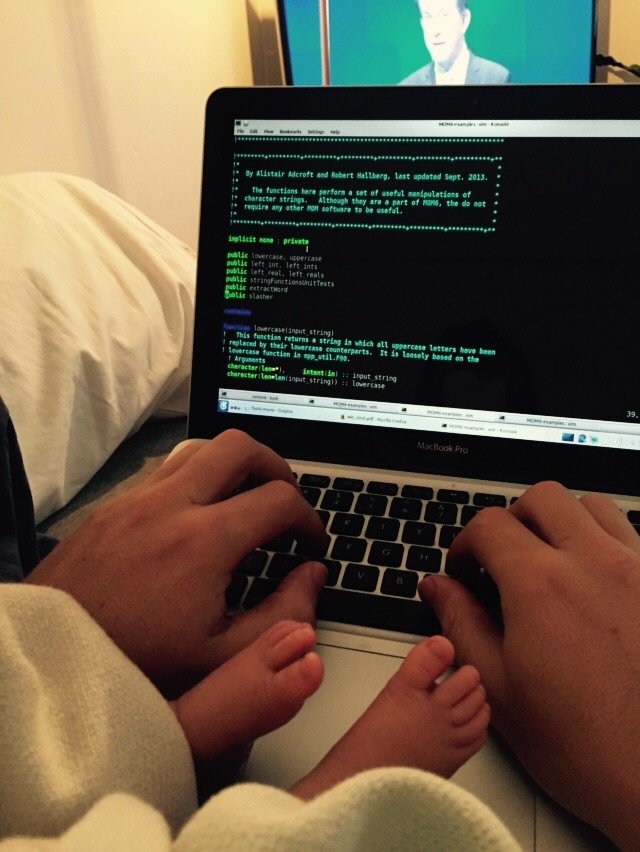

- The next few slides are a summary of useful tools and techniques I've gathered while working on a mobile phone operating system, a bionic hearing implant, the ACCESS-CM coupled model at CSIRO and also MOM6 here at GFDL.

Static Analysis

- Static analyzers check code without running it to find patterns which are considered dangerous, suspicious or non-portable.

- Can also do data flow analysis to check things like passing/returning bad values.

- The idea is to catch low-hanging fruit. Some developers always use them - overhead similar to compiler.

- Examples: Python has PEP8 (more about style) and Pylint; C/C++ has (f)(s)lint; Fortran has Forcheck and Cleanscape (?).
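To make the idea concrete, here is a minimal sketch of a static check written with Python's standard `ast` module. It flags `==` and `!=` comparisons against floating point literals without running the code, which is the same kind of pattern as the Forcheck floating point comparison message. The function name `find_float_equality` and the sample source are made up for illustration; real analyzers do far more than this.

```python
import ast


def find_float_equality(source):
    """Return sorted line numbers where == or != is applied to a float literal."""
    lines = set()
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Compare):
            continue
        operands = [node.left] + node.comparators
        # A chained comparison a == b == c has two operators and three operands.
        for op, left, right in zip(node.ops, operands, operands[1:]):
            is_equality = isinstance(op, (ast.Eq, ast.NotEq))
            has_float = any(
                isinstance(side, ast.Constant) and isinstance(side.value, float)
                for side in (left, right)
            )
            if is_equality and has_float:
                lines.add(node.lineno)
    return sorted(lines)


# Hypothetical code to analyse. It is only parsed, never executed.
sample = """\
h0 = 1.0
h1 = -1.0
if h0 + h1 == 0.0:
    print('degenerate cell')
if h0 < 2.0:
    print('thin cell')
"""

print(find_float_equality(sample))  # prints [3]
```

Only the equality test on line 3 is reported; the `<` comparison on line 5 is considered safe by this particular rule.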

Forchk examples

$ forchk -f90 src/MOM6/src/*/*.F90

333 if (h0+h1==0. .or. h1+h2==0. .or. h2+h3==0.) then

(file: src/MOM6/src/ALE/regrid_edge_values.F90, line: 333)

h0+h1==0.

**[342 I] eq.or ineq. comparison of floating point data with zero constant

37 logical :: boundary_extrapolation = .true.

(file: src/MOM6/src/ALE/MOM_remapping.F90, line: 37)

= .true.

**[274 E] initialization of component or field not allowed

(Initialization of components is supported from Fortran 95 on)

(file: src/MOM6/src/parameterizations/vertical/MOM_vert_friction.F90, line: 1128)

VISC

**[557 I] dummy argument not used

(file: src/MOM6/src/ice_shelf/MOM_ice_shelf.F90, line: 526)

**[ 53 W] tab(s) used

- In Fortran, the compiler warnings probably do a better job at static analysis than most stand-alone tools.

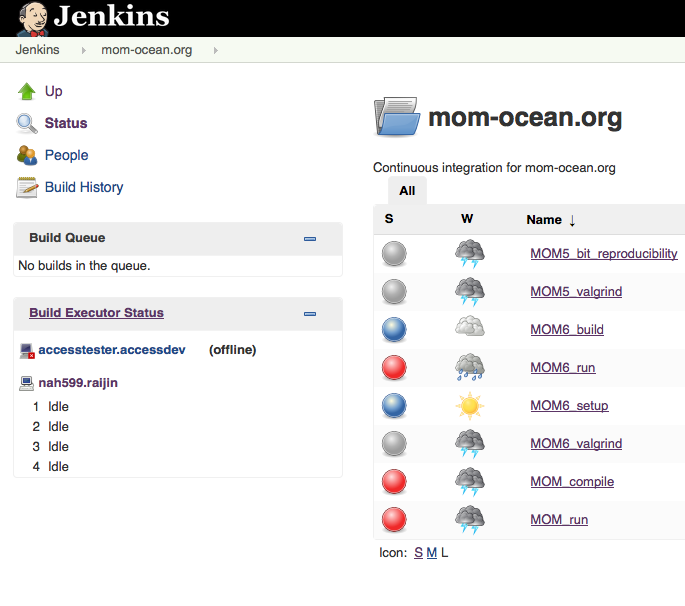

Memory Debugging using Valgrind

- Valgrind is a virtual machine that intercepts memory access instructions and does book-keeping.

- It is able to track every bit of program memory, finding any accesses to uninitialised or unallocated memory.

- It is not dependent on the programming language.

- It can be difficult to use because it is very slow and can produce many false positives.

- We've run Valgrind on several MOM6 test cases with good effect.
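The book-keeping idea can be illustrated with a toy model. This is a heavily simplified sketch and not how Valgrind is implemented: the class `ShadowMemory` and its methods are hypothetical, addresses are plain integers, and errors are reported on every bad load rather than only when a bad value influences program behaviour.

```python
class ShadowMemory:
    """Toy book-keeping of which addresses are allocated and initialised."""

    def __init__(self):
        self.allocated = set()
        self.initialised = set()
        self.errors = []

    def malloc(self, start, size):
        # Newly allocated memory is addressable but holds no defined value yet.
        self.allocated.update(range(start, start + size))

    def free(self, start, size):
        for addr in range(start, start + size):
            self.allocated.discard(addr)
            self.initialised.discard(addr)

    def store(self, addr):
        if addr not in self.allocated:
            self.errors.append(('invalid write', addr))
        else:
            self.initialised.add(addr)

    def load(self, addr):
        if addr not in self.allocated:
            self.errors.append(('invalid read', addr))
        elif addr not in self.initialised:
            self.errors.append(('uninitialised read', addr))


mem = ShadowMemory()
mem.malloc(100, 4)   # allocate addresses 100..103
mem.store(100)       # initialise address 100 only
mem.load(100)        # fine
mem.load(101)        # allocated but never written
mem.load(200)        # never allocated
print(mem.errors)
```

The two bad loads are recorded as an uninitialised read at 101 and an invalid read at 200, while the load from 100 passes silently.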

An example command line:

$ mpirun -wdir /short/v45/nah599/access/work/cm_360x300-valgrind/atmosphere -np 32 \
-x LD_PRELOAD=/home/599/nah599/usr/local/lib/valgrind/libmpiwrap-amd64-linux.so \
/home/599/nah599/usr/local/bin/valgrind --main-stacksize=2000000000 \
--max-stackframe=2000000000 --error-limit=no --gen-suppressions=all \
--suppressions=/home/599/nah599/more_home/access-tests/valgrind_suppressions.txt \
/short/v45/nah599/access/bin/um7.3.dbg.exe

Valgrind Example

Code in question:

IF (GID == GCG__ALLGROUP) THEN
  IGID = GC__MY_MPI_COMM_WORLD
ELSE
  IGID = GID
ENDIF
ISTAT = GCG__MPI_RANK(SEND, IGID)
IF (ISTAT == -1) RETURN
RANK = ISTAT
CALL MPL_BCAST(SARR, 8_GC_REAL_KIND*LEN, MPL_BYTE, RANK, &
               IGID, ISTAT)

Stack trace to bad variable access:

==16086== Conditional jump or move depends on uninitialised value(s)
==16086== at 0x1A3ED83: gcg_rbcast_ (gcg_rbcast.f90:150)
==16086== by 0x10DEEBA: interpolation_multi_ (interpolation_multi.f90:1490)
==16086== by 0xB79F66: sl_thermo_ (sl_thermo.f90:1497)
==16086== by 0x88F11E: ni_sl_thermo_ (ni_sl_thermo.f90:768)
==16086== by 0x503DC7: atm_step_ (atm_step.f90:10389)
==16086== by 0x4757CD: u_model_ (in /short/v45/nah599/access/bin/um_hg3_dbg.exe)
==16086== by 0x4420FA: um_shell_ (um_shell.f90:3939)
==16086== by 0x43BA26: MAIN__ (flumeMain.f90:36)
==16086== by 0x43B935: main (in /short/v45/nah599/access/bin/um_hg3_dbg.exe)

Testing

- Nomenclature: unit, system and regression tests.

- Testing has benefits other than code correctness:

- it's a form of documentation

- testability encourages modularity

- In some industries lines of testing code can be many multiples of lines of product code, e.g. medical devices.

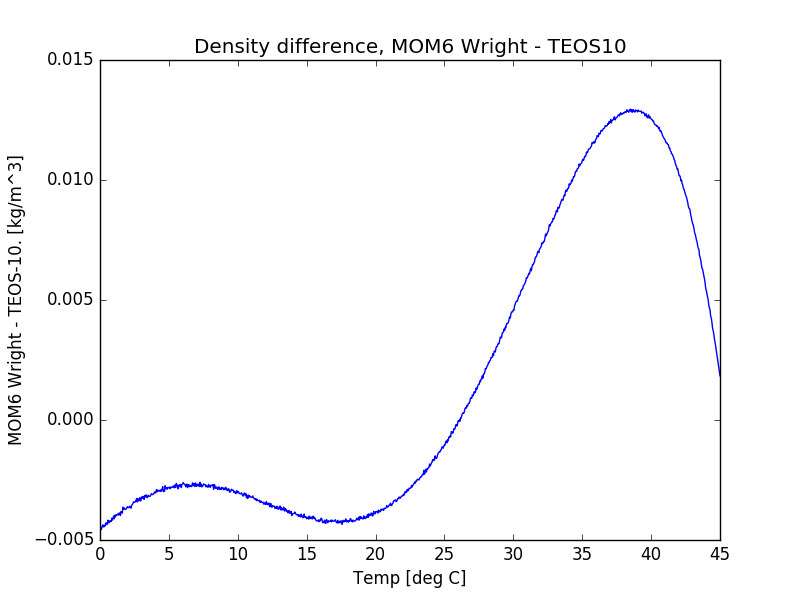

- MOM6 has a good collection of system tests. What about unit tests?

Unit test example: since MOM_EOS_Wright.F90 is very modular it's easy to call from Python and compare to the TEOS-10 package.
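As a sketch of what such a unit test can look like, the example below tests a small equation of state function in isolation. Everything here is an assumption made for illustration: `density_linear` is a hypothetical linear equation of state with made-up coefficients, not the Wright equation of state from MOM6 and not TEOS-10, and the reference value is computed by hand rather than taken from a reference package.

```python
def density_linear(temp, salt, rho0=1027.0, t0=10.0, s0=35.0,
                   alpha=2.0e-4, beta=8.0e-4):
    """Hypothetical linear equation of state (illustrative coefficients only)."""
    return rho0 * (1.0 - alpha * (temp - t0) + beta * (salt - s0))


def test_reference_point():
    # At the reference temperature and salinity the density is exactly rho0.
    assert density_linear(10.0, 35.0) == 1027.0


def test_warmer_is_lighter():
    assert density_linear(20.0, 35.0) < density_linear(10.0, 35.0)


def test_saltier_is_denser():
    assert density_linear(10.0, 36.0) > density_linear(10.0, 35.0)


def test_against_hand_computed_value():
    # 1027 * (1 - 2e-4 * 10) = 1027 * 0.998 = 1024.946
    assert abs(density_linear(20.0, 35.0) - 1024.946) < 1e-9
```

Each test exercises one property of the function, so a failure points directly at what broke. The functions can be called directly or collected by a test runner.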

Dimensional Analysis

- Programming languages have type systems to give meaning to collections of bits.

- If something in a program has meaning it should have that meaning explicitly ascribed using the language - the compiler can use this to improve correctness. The SI units are an untapped resource in this respect!

- Bob Hallberg and I tested a simple but powerful SI unit/type checking technique within MOM6. We ensured there are no errors involving the H (thickness) unit.

- Basic units are represented by a random power-of-two number. Variables are assigned a unit by multiplication and 'expressed' as a unit using division.

- To check for unit correctness the program is run multiple times. If the output varies from run to run then there is a unit mistake.
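The run-it-several-times check can also be automated. The following is a minimal sketch of that idea and not the MOM6 implementation: `unit_consistent`, `good_speed` and `bad_speed` are hypothetical names, and the exponents are drawn without repetition so that a unit mistake is certain to change the output between trials.

```python
import random


def unit_consistent(calculation, trials=5, seed=0):
    """Run a calculation under several random unit scalings.

    Returns True if every trial gives the same result, i.e. no unit
    mistake was detected.
    """
    rng = random.Random(seed)
    # Distinct exponents per trial, so a leftover unit always shows up.
    m_exps = rng.sample(range(1, 101), trials)
    s_exps = rng.sample(range(1, 101), trials)
    results = set()
    for m_exp, s_exp in zip(m_exps, s_exps):
        units = {'m': 2.0 ** m_exp, 's': 2.0 ** s_exp}
        results.add(calculation(units))
    return len(results) == 1


def good_speed(u):
    distance = 10.0 * u['m']          # assign units by multiplication
    time = 4.0 * u['s']
    speed = distance / time
    return speed / (u['m'] / u['s'])  # express as m/s by division


def bad_speed(u):
    distance = 10.0 * u['m']
    time = 4.0 * u['s']
    speed = distance / time
    return speed / u['m']             # mistake: a speed expressed as metres


print(unit_consistent(good_speed))  # prints True
print(unit_consistent(bad_speed))   # prints False
```

Because the scalings are powers of two, multiplying and dividing by them is exact in floating point, so a correct calculation gives bit-identical results in every trial.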

Unit Checking Example

>>> import random
>>> def check_units():
...     # Each base unit is a random power of two
...     s = 2.0**random.randint(1, 100)
...     m = 2.0**random.randint(1, 100)
...     cm = m / 100.0
...     nm = m*1e-9
...     mL = cm**3
...     # Assign variable x a value of 5 mL
...     x = 5*mL
...     print('x expressed as cm cubed: {}'.format(x / cm**3))
...     print('x expressed as nm cubed: {}'.format(x / nm**3))
...     # Deliberate unit mistake: a volume expressed as seconds
...     print('x expressed as seconds: {}'.format(x / s))
...
>>> check_units()
x expressed as cm cubed: 5.0
x expressed as nm cubed: 5e+21
x expressed as seconds: 5.02168138831e+53
>>> check_units()
x expressed as cm cubed: 5.0
x expressed as nm cubed: 5e+21
x expressed as seconds: 90071992547.4

Unique opportunities for testing

- Bitwise reproducibility across restarts, domain decompositions etc.

- Fine scale comparisons to the real world, e.g. range checking physical variables. Also very useful for sanity checking when setting up new configurations or forcings.

- Scientific analysis is a kind of manual testing. Can we convince model users to package their analysis with the model so that it can be easily re-run? (Once again, MOM6 developers are already trying to do this.) Can we then go one step further and make it automatic?

- Other ideas?
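The first two ideas can be sketched with a toy model. This is an illustration under stated assumptions, not MOM6 code: `step` is a hypothetical model that relaxes each value towards 10.0, the restart "file" is just a byte string, and the bounds used for range checking are invented.

```python
import hashlib
import struct


def step(state):
    """One step of a toy model: relax every value towards 10.0."""
    return [x + 0.1 * (10.0 - x) for x in state]


def run(state, nsteps):
    for _ in range(nsteps):
        state = step(state)
    return state


def save_restart(state):
    """Write the state as raw 64-bit floats so no precision is lost."""
    return struct.pack('<{}d'.format(len(state)), *state)


def load_restart(raw):
    return list(struct.unpack('<{}d'.format(len(raw) // 8), raw))


def checksum(state):
    """Hash the raw bytes so that any bit-level difference is detected."""
    return hashlib.sha256(save_restart(state)).hexdigest()


def in_range(state, lower, upper):
    """Range check: every value must lie within physically plausible bounds."""
    return all(lower <= x <= upper for x in state)


initial = [0.0, 5.0, 20.0]

# One 10 step run versus a 4 step run, a restart, then 6 more steps.
straight = run(initial, 10)
restarted = run(load_restart(save_restart(run(initial, 4))), 6)

print(checksum(straight) == checksum(restarted))  # prints True
print(in_range(straight, 0.0, 20.0))              # prints True
```

Comparing checksums rather than printed values means the test fails on a difference in even the last bit, which is the property that makes restart and decomposition tests so sensitive.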

Conclusion

- There's a lot we can do to improve the quality of model software. This is good for everyone: the scientists, developers and the public.

- We need to look beyond performance. Instead of asking whether code runs quickly we should ask whether scientists run quickly when using the code.

- The down-side of quality is additional engineering cost. I would argue that given the importance of these models this can now be justified.

- The first step is to start measuring quality, e.g. with MOM6's public issue tracking. In some industries the 'cost' of software failure is obvious; in ours it is harder to pin down. Are there other ways to do this?

- Next, start implementing some of the easy ways to improve quality. Generally speaking, the sooner a bug is found the better. Static analysers, tools like Valgrind, and unit testing are popular because they find bugs before the software is widely used.

Questions?
