Crawlie

Lessons learned about GenStage and Flow

Hi, my name is...

Jacek Królikowski - @nietaki

GenStage & Flow

Value proposition

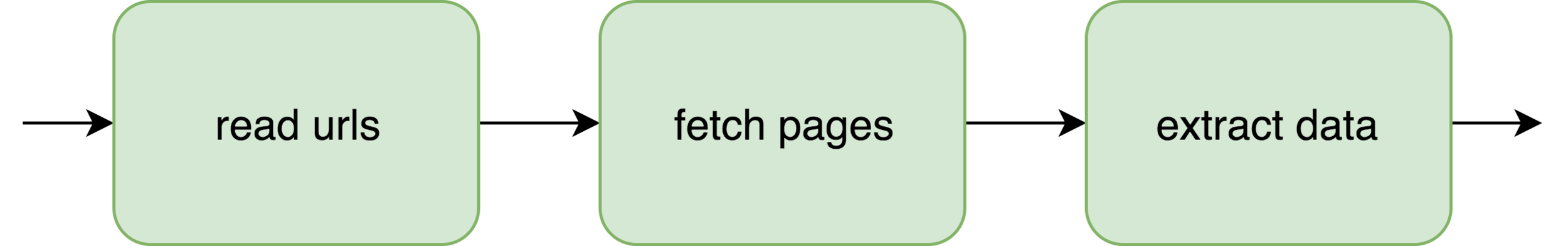

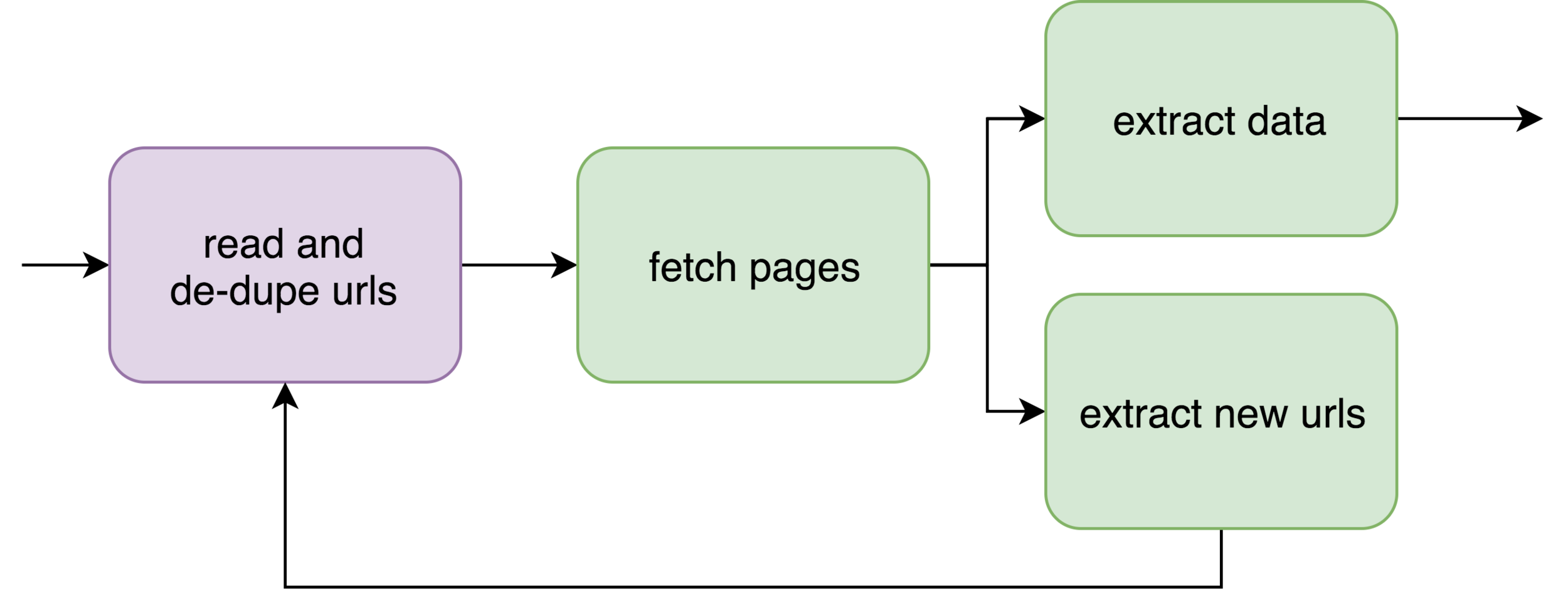

Workflow orchestrator for parallel data processing

"Small" data processing

Demand-driven, with back-pressure: only as much data is in flight (and in memory) as is needed right now

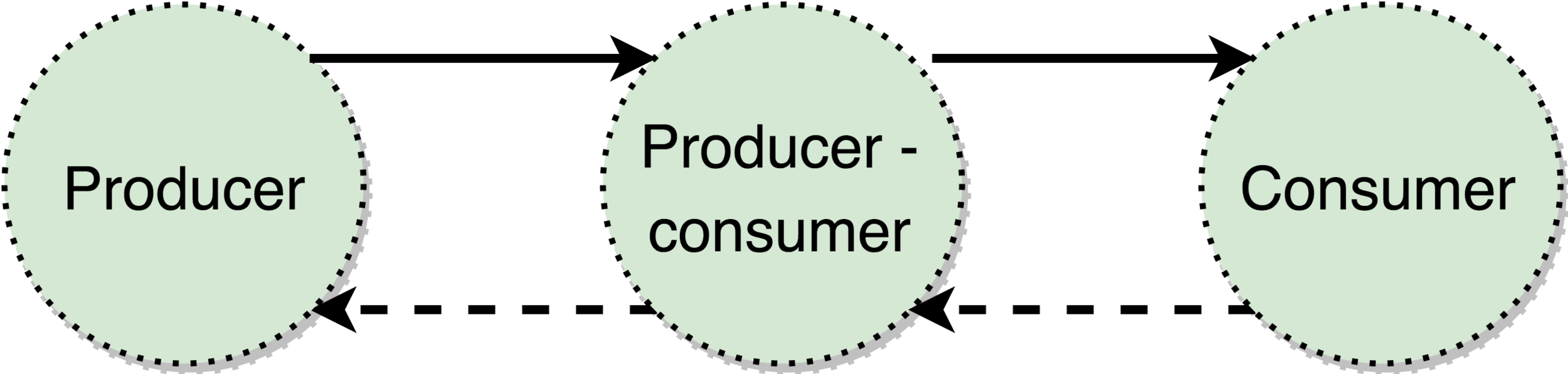
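To make "demand-driven" concrete, here is a minimal sketch using plain processes and messages. It is not GenStage's actual protocol: the `DemandDemo` module and the `{:ask, ...}` / `{:events, ...}` message shapes are made up for illustration. The point is only that the producer never sends anything the consumer has not asked for.

```elixir
defmodule DemandDemo do
  # Producer loop: holds the remaining items and hands them out only on request.
  def producer(items) do
    receive do
      {:ask, demand, consumer} ->
        {batch, rest} = Enum.split(items, demand)
        send(consumer, {:events, batch})
        producer(rest)
    end
  end

  # Consumer: asks for at most `demand` items at a time until the producer runs dry.
  def consume(producer, demand, batches \\ []) do
    send(producer, {:ask, demand, self()})

    receive do
      {:events, []} -> Enum.reverse(batches)
      {:events, batch} -> consume(producer, demand, [batch | batches])
    end
  end
end

producer = spawn(fn -> DemandDemo.producer(Enum.to_list(1..10)) end)
batches = DemandDemo.consume(producer, 4)

# charlists: :as_lists keeps [9, 10] from being printed as a charlist
IO.inspect(batches, charlists: :as_lists)
# => [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10]]
```

At most four items are in flight at any moment, no matter how large the producer's backlog is.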

GenStage - event processing behaviour

GenStage is a specification for exchanging events between producers and consumers.

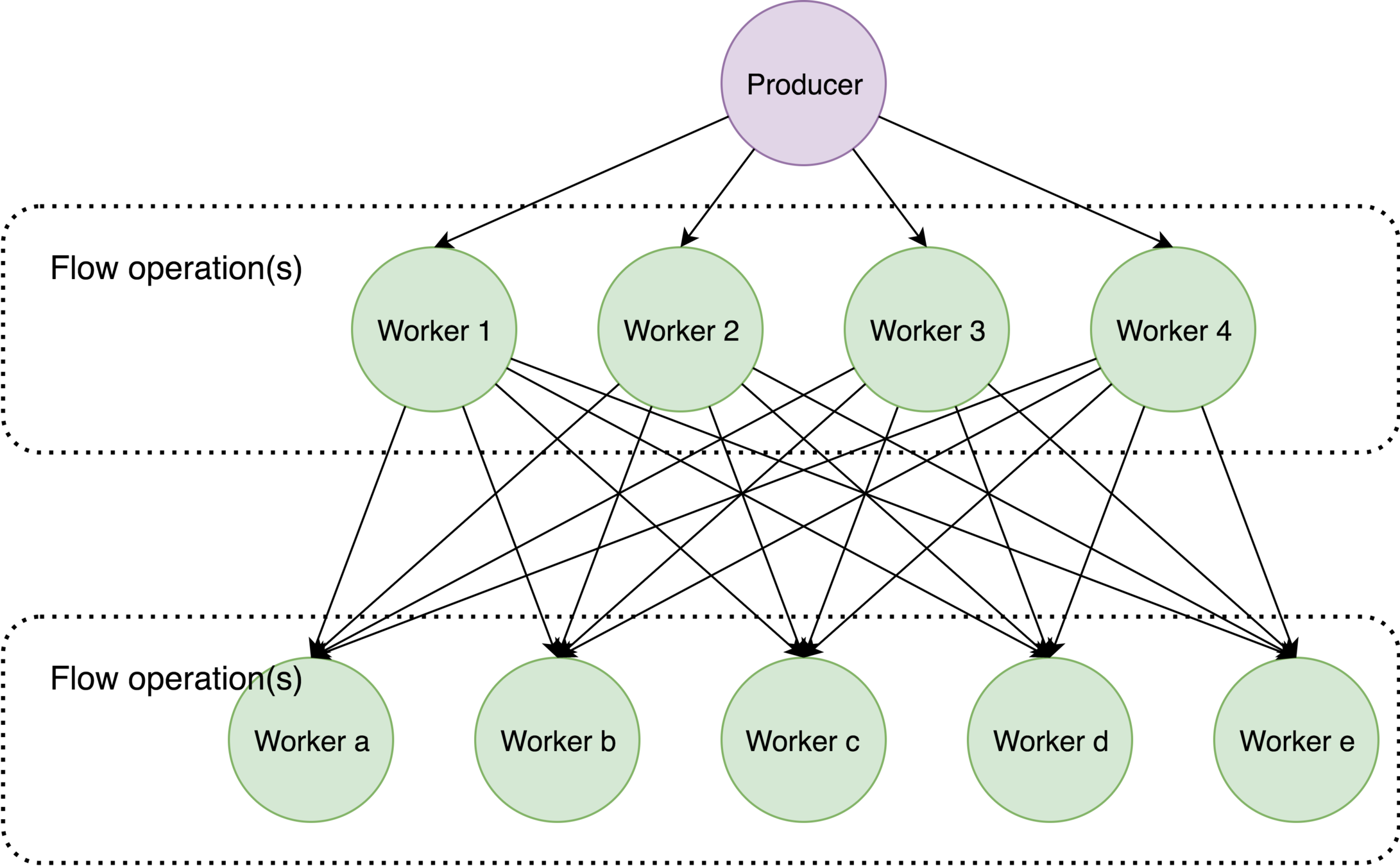

Flow - abstraction built on GenStage

Enum is eager, Stream is lazy, Flow is concurrent
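The eager/lazy half of that slogan can be checked with the standard library alone. The sketch below uses a hypothetical `LazinessDemo` module that records every call in the process mailbox, so we can count how much work has actually happened. Flow is not used here; it behaves like Stream in that building the pipeline does nothing until it is run.

```elixir
defmodule LazinessDemo do
  # Doubles x and records the call in the caller's mailbox so it can be counted.
  def noisy_double(x) do
    send(self(), {:doubled, x})
    x * 2
  end

  # Counts (and removes) the {:doubled, _} messages currently in the mailbox.
  def drain(count \\ 0) do
    receive do
      {:doubled, _} -> drain(count + 1)
    after
      0 -> count
    end
  end
end

# Enum is eager: all three elements are processed right away.
eager = Enum.map(1..3, &LazinessDemo.noisy_double/1)
eager_calls = LazinessDemo.drain()

# Stream is lazy: building the pipeline does no work at all...
lazy = Stream.map(1..3, &LazinessDemo.noisy_double/1)
calls_before_run = LazinessDemo.drain()

# ...until something consumes it, and then only as much as is needed.
first_two = Enum.take(lazy, 2)
calls_after_run = LazinessDemo.drain()

IO.inspect({eager, eager_calls})
# => {[2, 4, 6], 3}
IO.inspect({calls_before_run, first_two, calls_after_run})
# => {0, [2, 4], 2}
```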

    Flow.from_enumerable(...)
    |> Flow.map(...)
    |> Flow.flat_map(...)
    |> Flow.partition(...)
    |> Flow.map(...)
    |> Flow.reduce(...)

Diagram stolen from José: http://www.lambdadays.org/lambdadays2017/jose-valim

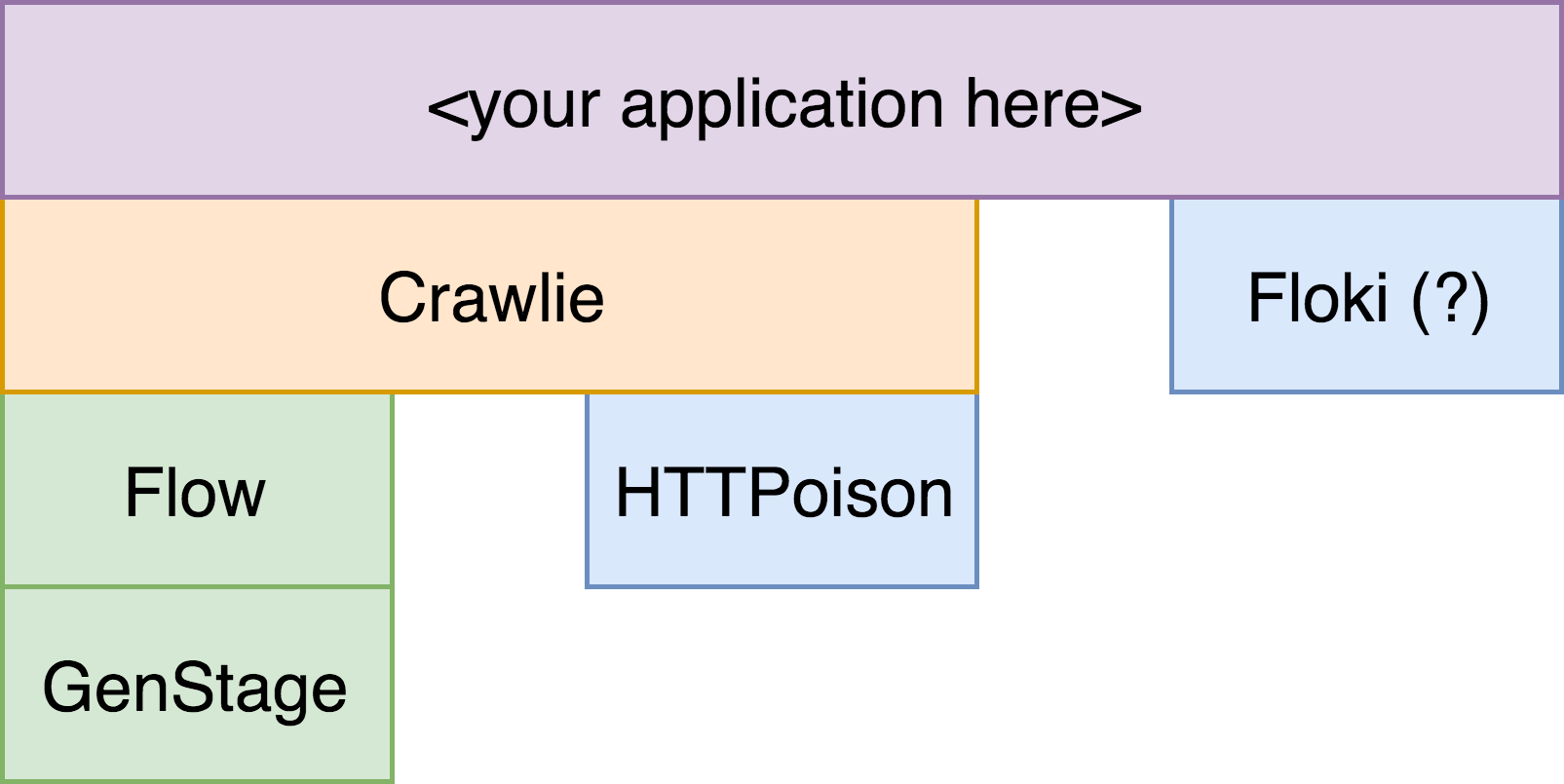

Crawlie

A simple Elixir library for creating decently-performing crawlers with minimum effort.

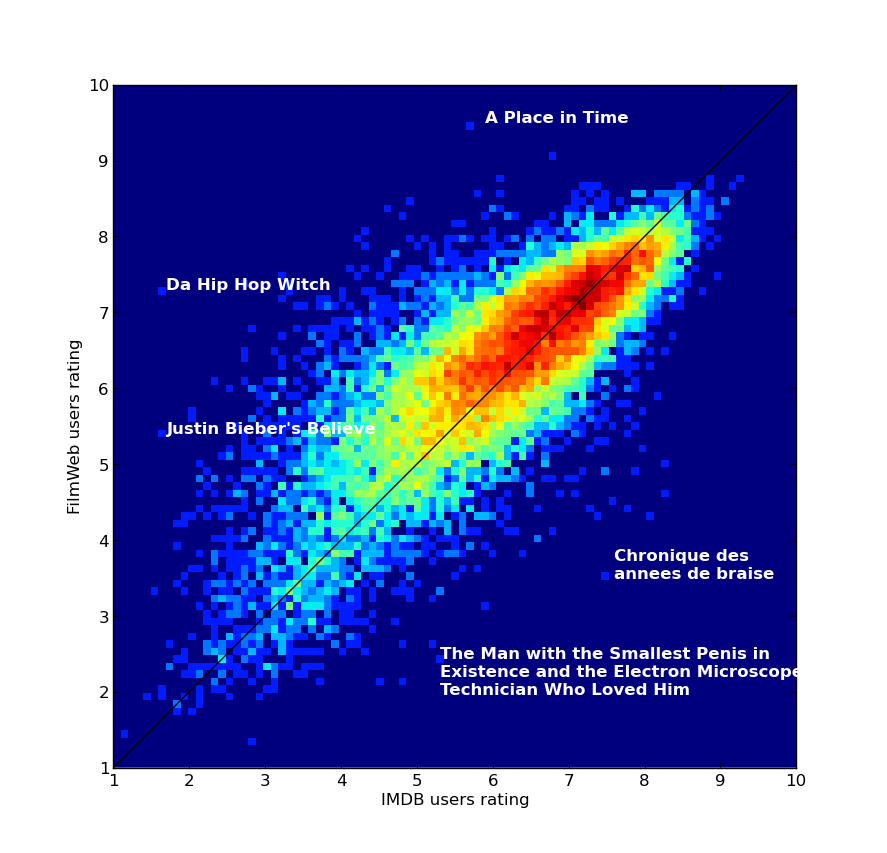

Filmweb scraper

    def scrape() = {
      val writer = CSVWriter.open(getCurrentFilename(), append = true)
      var curId = 0
      (1 to lastPageNo).foreach { pageNo =>
        println()
        println(pageNo)
        println()
        val url = constructUrl(pageNo)
        val films = scrapeUrl(url).map { f =>
          val ret = f.copy(idOption = Some(curId))
          curId += 1 // dirty, I know
          ret
        }
        films.foreach(println(_))
        writer.writeAll(films.map(_.row))
      }
    }

How would you use it?

Lessons learned

Flow is simple (to use)

    File.stream!("input.txt")
    |> Flow.from_enumerable()
    |> Flow.flat_map(&String.split/1)
    |> Flow.partition()
    |> Flow.reduce(fn -> %{} end,
                   fn word, map ->
                     Map.update(map, word, 1, &(&1 + 1))
                   end)
    |> Flow.each(&IO.inspect/1)

    File.stream!("input.txt")
    #
    |> Stream.flat_map(&String.split/1)
    #
    |> Enum.reduce(%{}, fn word, map ->
                     Map.update(map, word, 1, &(&1 + 1))
                   end)
    |> Enum.each(&IO.inspect/1)

    {"are", 2}
    {"blue", 1}
    {"red", 1}
    {"roses", 1}
    {"violets", 1}

    File.stream!("input.txt")
    |> Flow.from_enumerable()
    |> Flow.flat_map(&String.split/1)
    |> Flow.partition()
    |> Flow.reduce(fn -> %{} end,
                   fn word, map ->
                     Map.update(map, word, 1, &(&1 + 1))
                   end)
    |> Flow.each(&IO.inspect/1)
    |> Enum.to_list() # or Flow.run()

    {"are", 2}
    {"blue", 1}
    {"red", 1}
    {"roses", 1}
    {"violets", 1}

Brain teaser

    Stream.cycle([:a, :b, :c])
    |> Stream.take(3_000_000)
    |> Flow.from_enumerable(max_demand: 256)
    |> Flow.reduce(fn -> %{} end, fn atom, map ->
      Map.update(map, atom, 1, &(&1 + 1))
    end)
    |> Enum.into(%{})
    |> IO.inspect()

    %{a: 124768, b: 124783, c: 124785}

    Stream.cycle([:a, :b, :c])
    |> Stream.take(3_000_000)
    |> Flow.from_enumerable(max_demand: 256)
    |> Flow.reduce(fn -> %{} end, fn atom, map ->
      Map.update(map, atom, 1, &(&1 + 1))
    end)
    |> Enum.to_list()
    |> Enum.reduce(%{}, fn {atom, count}, map ->
      Map.update(map, atom, count, &(&1 + count))
    end)
    |> IO.inspect

    %{a: 1000000, b: 1000000, c: 1000000}

    Stream.cycle([:a, :b, :c])
    |> Stream.take(3_000_000)
    |> Flow.from_enumerable(max_demand: 256)
    |> Flow.partition()
    |> Flow.reduce(fn -> %{} end, fn atom, map ->
      Map.update(map, atom, 1, &(&1 + 1))
    end)
    |> Enum.into(%{})
    |> IO.inspect

    %{a: 1000000, b: 1000000, c: 1000000}
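Why does the first version of the teaser report roughly 125,000 per atom? Without `Flow.partition/2`, every reducing stage builds its own map, so the results contain one `{:a, n}` tuple per stage, and `Enum.into(%{})` keeps only the last tuple for each key. The sketch below simulates this with plain `Task`s instead of Flow. The `TeaserSketch` module, the stage count of 4, the round-robin dealing of batches and the use of `:erlang.phash2/2` are all assumptions made for illustration, not Flow's actual dispatching.

```elixir
defmodule TeaserSketch do
  # Counts occurrences within one stage's share of the events.
  def count(events) do
    Enum.reduce(events, %{}, fn atom, map -> Map.update(map, atom, 1, &(&1 + 1)) end)
  end

  # Reduces every share in its own task and concatenates the resulting
  # {atom, count} tuples, one set of tuples per simulated stage.
  def run(shares) do
    shares
    |> Enum.map(fn share -> Task.async(fn -> count(share) end) end)
    |> Enum.flat_map(fn task -> task |> Task.await() |> Map.to_list() end)
  end
end

stages = 4
events = Stream.cycle([:a, :b, :c]) |> Enum.take(30_000)

# No partitioning: batches of 256 are dealt out in turn, so every stage sees every atom.
dealt =
  events
  |> Enum.chunk_every(256)
  |> Enum.with_index()
  |> Enum.group_by(fn {_batch, i} -> rem(i, stages) end, fn {batch, _i} -> batch end)
  |> Map.values()
  |> Enum.map(&Enum.concat/1)

tuples = TeaserSketch.run(dealt)

# Enum.into/2 keeps only the last tuple per key, so the counts come out too low.
overwritten = Enum.into(tuples, %{})

# Summing the per-stage counts recovers the real totals.
merged =
  Enum.reduce(tuples, %{}, fn {atom, count}, map ->
    Map.update(map, atom, count, &(&1 + count))
  end)

# Partitioning by key hash: each atom lands on exactly one stage, so keys never clash.
routed =
  events
  |> Enum.group_by(&:erlang.phash2(&1, stages))
  |> Map.values()
  |> TeaserSketch.run()
  |> Enum.into(%{})

IO.inspect(overwritten, label: "overwritten")
IO.inspect(merged, label: "merged")
IO.inspect(routed, label: "routed")
```

`merged` and `routed` both come out as 10,000 per atom, while `overwritten` only holds a single stage's share for each key. This mirrors the three variants of the teaser: overwrite, merge by hand, or partition first.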

GenStage vs Flow

GenStage

- low level

- potentially stateful

- fits in within a supervision tree

Flow

- high level

- stateless*

- fails all at once*

* mostly stateless, depends what you mean by stateless

* as far as I know

GenStage → Flow

GenStage → Flow

+ Statistics

GenStage → Flow

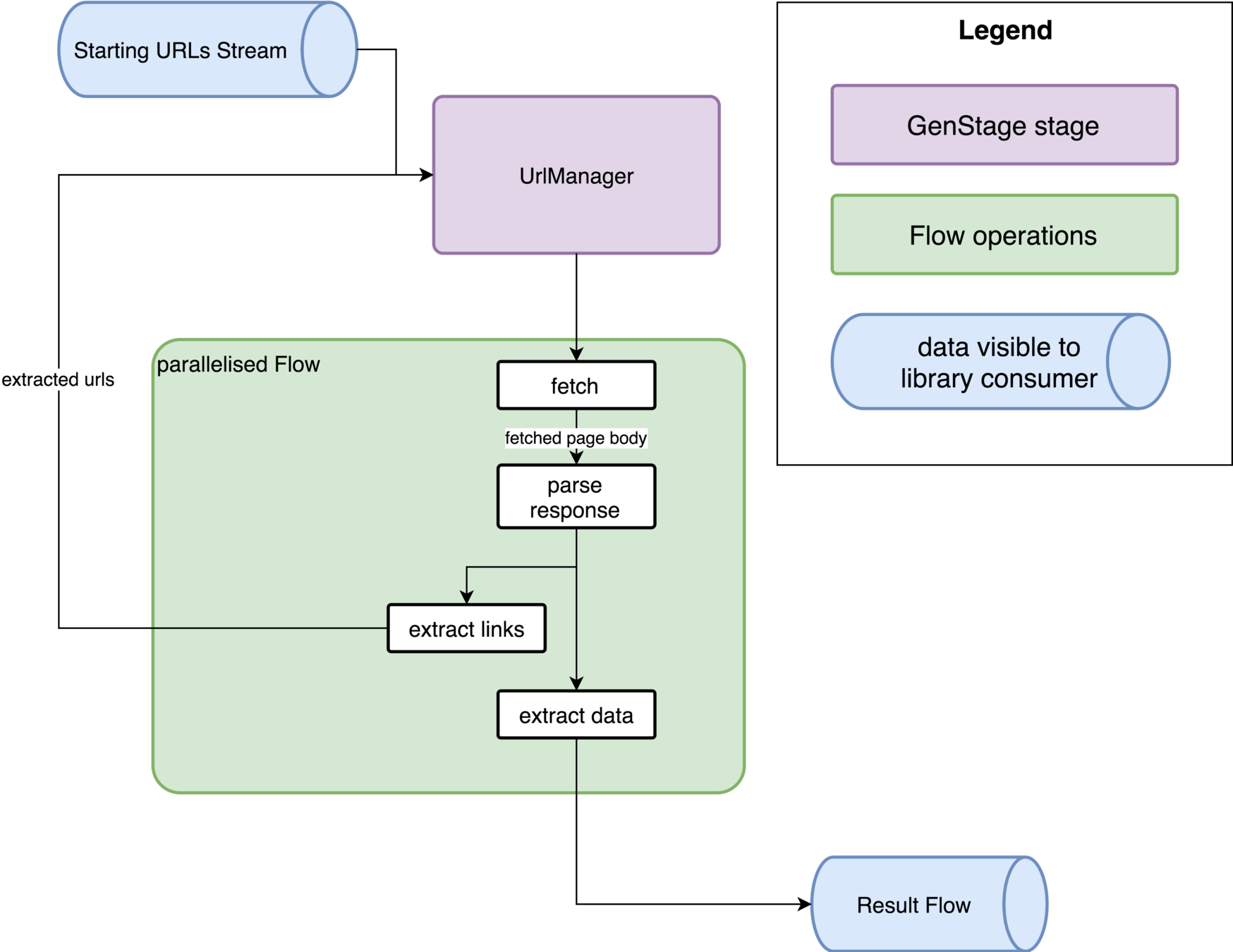

    url_manager_stage
    |> Flow.from_stage(options)
    |> Flow.partition(Keyword.get(options, :fetch_phase))
    |> Flow.flat_map(&fetch_operation(&1, options, url_stage))
    |> Flow.partition(Keyword.get(options, :process_phase))
    |> Flow.flat_map(&parse_operation(&1, options, parser_logic, url_stage))
    |> Flow.each(&extract_uris_operation(&1, options, parser_logic, url_stage))
    |> Flow.flat_map(&extract_data_operation(&1, options, parser_logic))

    @doc """
    Parses the retrieved page to user-defined data.
    """
    @callback parse(Response.t, options :: Keyword.t)
      :: {:ok, parsed} | {:error, term} | :skip | {:skip, reason :: atom}

    @doc """
    Extracts the uri's to be crawled subsequently.
    """
    @callback extract_uris(Response.t, parsed, options :: Keyword.t)
      :: [URI.t | String.t]

    @doc """
    Extracts the final data from the parsed page.
    """
    @callback extract_data(Response.t, parsed, options :: Keyword.t)
      :: [result]

Terminating Flow...

...from a GenStage producer.

    GenStage.async_notify(self(), {:producer, :done})

As of 0.12.0, just stop the producer stage:

    {:stop, :normal, state}

Flow → Stage

    f = Flow.from_enumerable(1..10)
    {:ok, consumer} = GenStage.start_link(NosyStage, self())
    {:ok, _coordinator_process} = Flow.into_stages(f, [{consumer, [max_demand: 8]}])

    receive do
      :nosy_stage_done -> IO.puts "RESULT: nosy stage done"
    after
      1000 -> IO.puts "RESULT: nosy stage never finished"
    end

    defmodule NosyStage do
      use GenStage

      def handle_info({from, {:producer, :done}}, creator_pid) do
        IO.puts "Nosy got {:producer, :done} info from #{inspect(from)}"
        send creator_pid, :nosy_stage_done
        {:stop, :normal, creator_pid}
      end

      # (...) other callbacks
    end

    defmodule NosyStage do
      use GenStage

      def init(creator_pid) do
        {:consumer, creator_pid}
      end

      def handle_events(events, from, creator_pid) do
        IO.puts "Nosy handles incoming events: #{inspect(events)} from #{inspect(from)}"
        {:noreply, [], creator_pid}
      end

      def handle_subscribe(:producer, _options, from, creator_pid) do
        IO.puts "Nosy subscribed to #{inspect(from)}"
        # {:manual, creator_pid}
        {:automatic, creator_pid}
      end

      def handle_info({from, {:producer, :done}}, creator_pid) do
        IO.puts "Nosy got {:producer, :done} info from #{inspect(from)}"
        send creator_pid, :nosy_stage_done
        {:stop, :normal, creator_pid}
      end
    end

    Nosy subscribed to {#PID<0.128.0>, #Reference<0.0.7.161>}
    Nosy handles incoming events: [1, 2, 3, 4] from {#PID<0.128.0>, #Reference<0.0.7.161>}
    Nosy handles incoming events: [5, 6, 7, 8] from {#PID<0.128.0>, #Reference<0.0.7.161>}
    Nosy handles incoming events: '\t\n' from {#PID<0.128.0>, #Reference<0.0.7.161>}
    Nosy got {:producer, :done} info from {#PID<0.128.0>, #Reference<0.0.7.161>}
    RESULT: nosy stage done

Handling Demand

Main GenStage callbacks:

- init/1

- handle_events/3

- handle_demand/2

    def handle_demand(demand, %State{pending_demand: pending_demand} = state) do
      %State{state | pending_demand: pending_demand + demand}
      |> do_handle_demand()
    end

    defp do_handle_demand(state) do
      demand = state.pending_demand
      {state, pages} = State.take_pages(state, demand)
      remaining_demand = demand - Enum.count(pages)
      state = %State{state | pending_demand: remaining_demand}
      {:noreply, pages, state}
    end
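The pattern above matters because a producer should not emit more events than were asked for, and when it cannot serve the demand right away it has to remember it for later. Here is a self-contained sketch of that bookkeeping as plain functions, without GenStage. The `DemandSketch` module, its nested `State` struct with a `pages` backlog, and the `add_pages/2` helper are hypothetical stand-ins, not Crawlie's actual code.

```elixir
defmodule DemandSketch do
  # Hypothetical stand-in for the State struct above: `pages` is the backlog.
  defmodule State do
    defstruct pending_demand: 0, pages: []

    # Takes up to `count` pages from the backlog.
    def take_pages(%__MODULE__{pages: pages} = state, count) do
      {taken, rest} = Enum.split(pages, count)
      {%{state | pages: rest}, taken}
    end
  end

  # New demand is added to whatever could not be served earlier.
  def handle_demand(demand, %State{pending_demand: pending} = state) do
    do_handle_demand(%{state | pending_demand: pending + demand})
  end

  # Hypothetical helper: new pages arrive, so try to serve the stored demand.
  def add_pages(new_pages, %State{pages: pages} = state) do
    do_handle_demand(%{state | pages: pages ++ new_pages})
  end

  # Emits as many pages as the pending demand allows and stores the shortfall.
  defp do_handle_demand(state) do
    {state, pages} = State.take_pages(state, state.pending_demand)
    remaining = state.pending_demand - length(pages)
    {:noreply, pages, %{state | pending_demand: remaining}}
  end
end

alias DemandSketch.State

# Demand arrives before any pages exist: nothing is emitted, the demand is remembered.
{:noreply, [], state} = DemandSketch.handle_demand(3, %State{})

# Pages show up later: the stored demand is served and the surplus stays in the backlog.
{:noreply, served, state} = DemandSketch.add_pages(["/a", "/b", "/c", "/d"], state)

IO.inspect({served, state.pending_demand, state.pages})
# => {["/a", "/b", "/c"], 0, ["/d"]}
```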

Optimizing Flows

- You don't really have to

- Use Flow.partition/2 to give different sections of the flow different settings

- :stages ~ flow parallelism

- :min_demand, :max_demand

- which parts are IO bound, which are CPU bound?

- Think about how much data is being sent between stages

- Application-specific (depth-first url traversal)

There's more!

- Flow.Window (windows and triggers)

- late events

- GenStage.*Dispatcher

- GenStage processes in supervision trees

- ...

Links

- José's intro to GenStage and Flow: https://youtu.be/XPlXNUXmcgE

- crawlie repo: https://github.com/nietaki/crawlie

- using crawlie: https://github.com/nietaki/crawlie_example

Live demo

(time permitting)
