To Err is Human

Introduction to modern safety thinking

Avishai Ish-Shalom (@nukemberg)

Software Fairy @Wix

GitLab Failure

GitLab Failure TLDR

31/01/2017, around tea time

- gitlab.com goes down for 18 hours

- Irrecoverable data loss: 5k projects, 5k comments, 700 users

- Wrong DB server erased

- All backups failed

Long Version (1/2)

- Increased load on DB

- WAL replication failed

- Attempts to re-mirror slave failed

- Primary DB reconfigured, still no joy

- pg_basebackup assumed to be the culprit

- Engineer tries to remove DB directory on slave, removed on primary instead

Long Version (2/2)

- Attempts to restore from pg_dump backups fail - S3 bucket was empty

- Backups silently failed for months (email issue)

- Azure disk snapshots not enabled

- Latest LVM snapshot 6 hours old (by chance), but on staging

- Staging environment very slow, snapshot copy takes 18 hours to complete

- Restore from backup was never tested
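
The silent backup failure above suggests checking the backup artifacts themselves instead of relying on failure emails. A minimal sketch, assuming backups can be listed as name, size and creation time; `BackupObject`, `check_backups` and the 24-hour threshold are hypothetical names and values for illustration, not GitLab's tooling:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class BackupObject:
    """One backup artifact as listed from storage (hypothetical record type)."""
    name: str
    size_bytes: int
    created_at: datetime


def check_backups(objects, now, max_age=timedelta(hours=24)):
    """Return a list of problems; an empty list means the backups look healthy."""
    # An empty listing is itself a failure, not "nothing to report".
    if not objects:
        return ["no backups found"]
    newest = max(objects, key=lambda o: o.created_at)
    problems = []
    if newest.size_bytes == 0:
        problems.append(f"newest backup {newest.name} is empty")
    age = now - newest.created_at
    if age > max_age:
        problems.append(f"newest backup {newest.name} is {age} old")
    return problems
```

A check like this only shows that recent, non-empty files exist. It does not replace a periodic test restore.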

What Happened?

Preventing Human Error

- Post mortem investigation of accidents

- Revoke privileges

- Write strict procedures for everything

- Punish people who make mistakes

- Reviews, approvals, committees

- Specialization

From

Safety I

to

Safety II

Safety I in a Nutshell

- Focus on preventing bad things

- Accidents are caused by failures and malfunctions. The purpose of an investigation is to identify the causes

- Humans are a substantial cause of failure

- Tight control of "Risky" operations

- Special "safety" teams

When something happens or a risk is observed, act!

Humans are the Problem

- Eliminate human intervention where possible

- Following procedures prevents problems

- Divide and control

The Falling Domino Model

- Linear cause and effect

- Single cause

- Time ordered

- Cut the chain - prevent the failure

The Different Causes Hypothesis

Failures have different (special) causes than success

Does Safety I work?

- Culture of fear

- Gaming stats

- Complex failures cannot be prevented

- Hurts your main business

The Usual Suspects

- Bias towards the first information received - "anchoring" effect

- Confirmation bias

- Hindsight bias

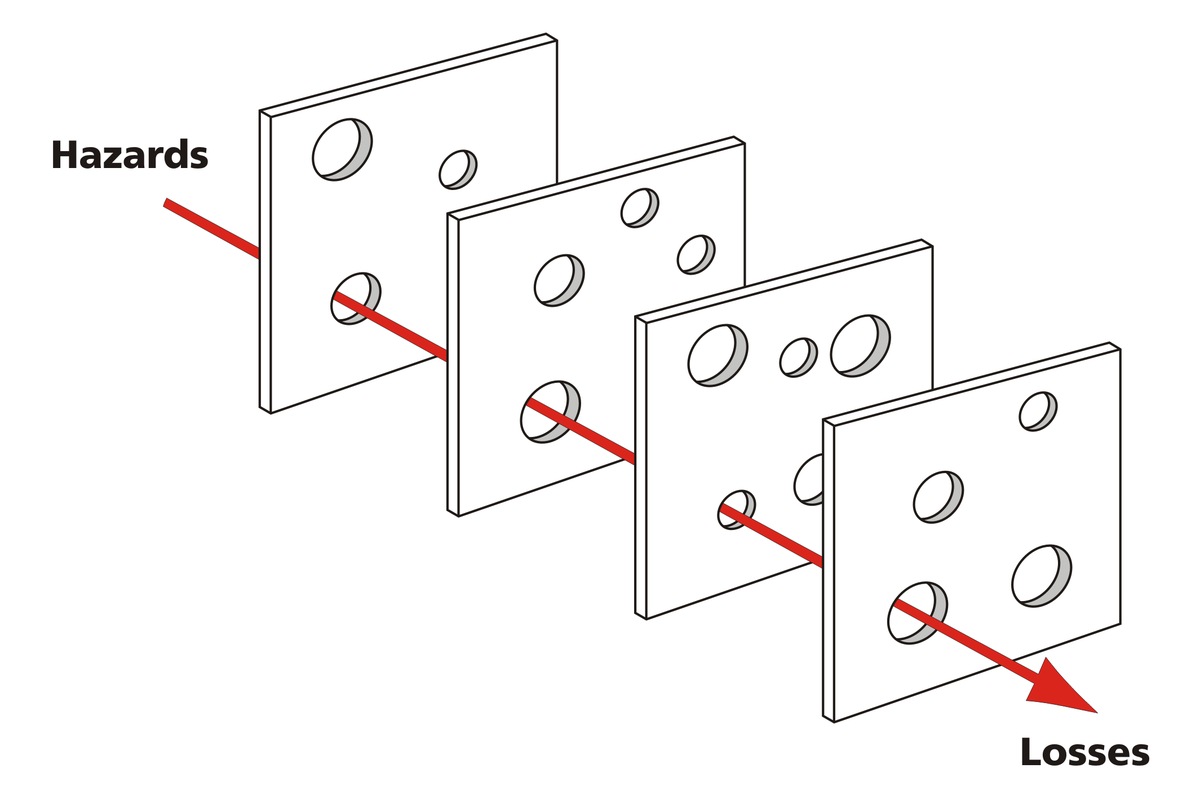

Swiss Cheese Model

- Combination of causes

- Multiple layers

- Non-linear

- Explains complex failures

"Human Error"

Nobody comes to work to die.

Human Error

or

Inhuman Systems?

Human Variability

- Performance varies naturally

- Humans excel at dealing with novel situations

- Humans suck at repetitive/dull cognitive tasks

- Fatigue

- Biases

Goal Conflicts

No system exists just to be safe.

How Do Things Go Right?

Work as Imagined

vs

Work as Done

- Adjustments to a changing world

- People bypass regulation to get work done

- Regulations out of touch with reality

- Production pressures

Safety II

Safety == maximum success

Safety II in a Nutshell

- Focus on making things go right

- Humans are a source of resilience

- Complex world, multiple causes

- Can't separate "failure" from "success"

Safety is created by the people who do the work!

Let's Take a Look at our Industry

The NOC

(what's wrong with this picture?)

NOC

- Humans bad at monitoring screens

- Distracting environment

- Bad ergonomics

- Noise, stress

- Separate staff from original engineers

- Limited access

Safety I incarnate

Dashboards

Better Dashboards

We Can Do Better!

- Etsy

- Netflix

Continuous Deployment!

Nagios Herald

Operator Context

Moving to Safety II

Human Oriented Systems

- Better C&C UX

- Operator context

- Simplified systems

- Human friendly automation
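
One possible form of operator context for destructive commands is to make the operator name the host they believe they are on. A minimal sketch, assuming a hypothetical helper `confirm_destructive` and hypothetical host names; it illustrates the idea and is not a description of any specific tool:

```python
import socket


def confirm_destructive(action, typed_hostname, actual_hostname=None):
    """Allow a destructive action only if the operator typed this host's name.

    Returns (allowed, message). The message always names the actual host so
    the operator sees where the command would run.
    """
    if actual_hostname is None:
        actual_hostname = socket.gethostname()
    if typed_hostname.strip().lower() == actual_hostname.lower():
        return True, f"{action}: confirmed on {actual_hostname}"
    return False, (
        f"{action}: refused, you typed {typed_hostname!r} "
        f"but this host is {actual_hostname!r}"
    )


# Usage with hypothetical host names: the operator believes they are on db2
# (the replica) while the shell is actually on db1 (the primary).
allowed, message = confirm_destructive(
    "remove data directory", "db2", actual_hostname="db1"
)
print(allowed, message)
```

The refusal message puts the mismatch in front of the operator at the moment of action, so the check supports the person doing the work instead of removing their access.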

Study "Work as Done"

- Empower people

- Blameless post mortems

- Forget "root cause"

- Study "normal" work

- Shorter, better feedback loops

- Consolidate emergency and normal procedures

Learning more

- Safety Differently, Dekker (2017)

- Ironies of Automation, Bainbridge (1983)

- How complex systems fail, Cook (2002)

- Field Guide to Understanding Human Error, Dekker (2002)

- Normal Accidents: Living with High-Risk Technologies, Perrow (1984)

- Safety I and Safety II, Hollnagel (2014)

Questions?
