O. Moussa

Background Information

Course:

Learning Outcome:

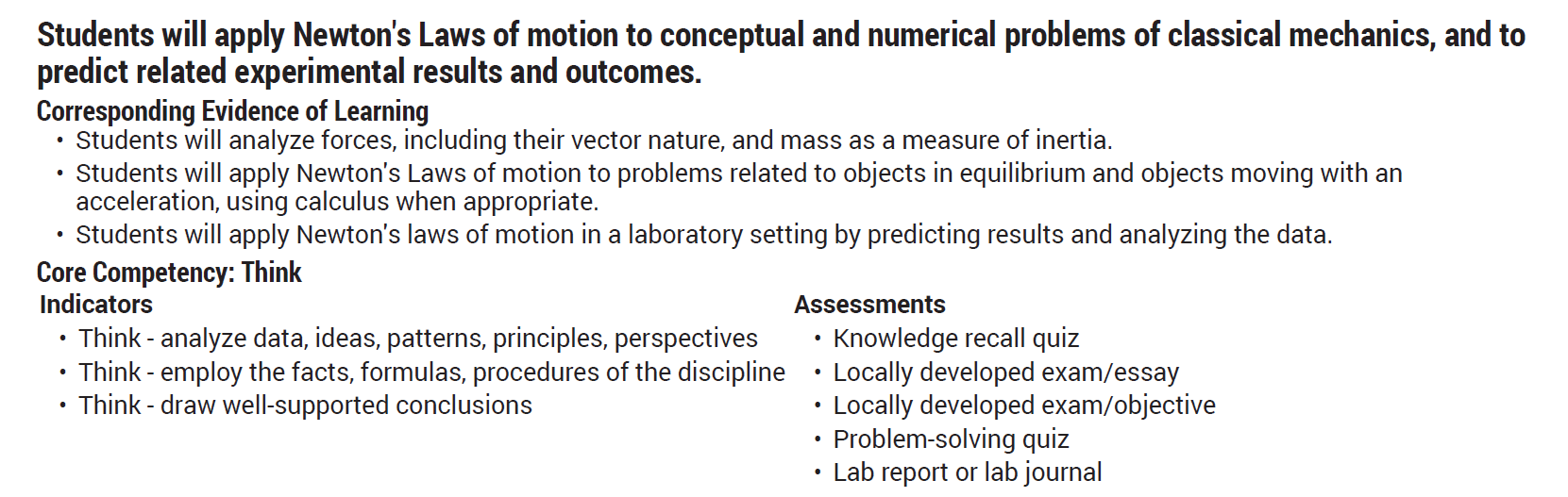

Modality:

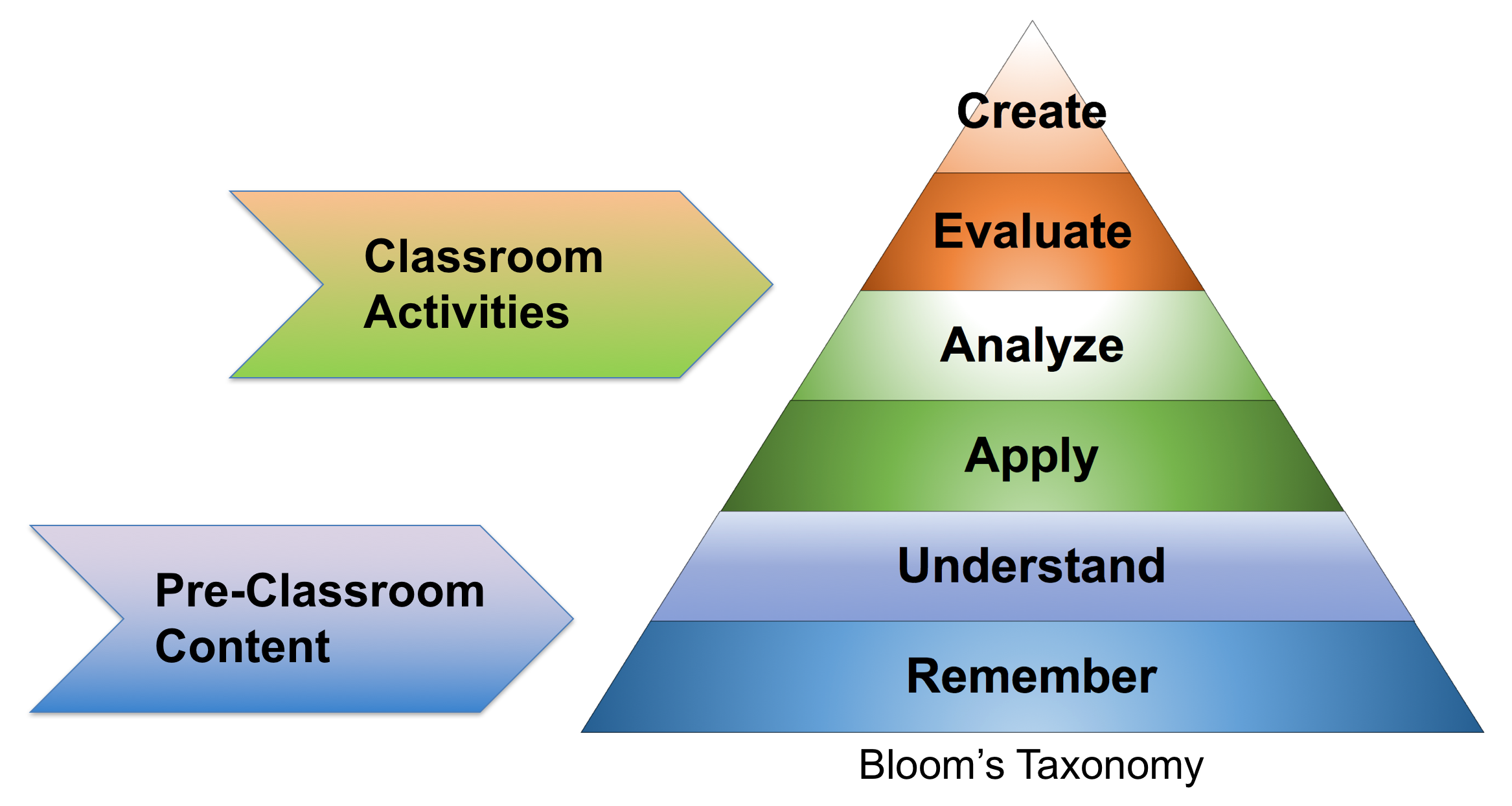

Mixed-mode (flipped model)

Create a lesson

Organization / Interactivity / Embedded Formative Assessment

What is entropy?

- disorder?

- uncertainty?

- surprise?

- information?

@trang1618

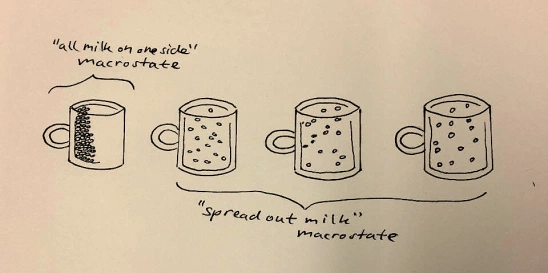

A microstate specifies the position and velocity of all the atoms in the coffee.

A macrostate specifies temperature, pressure on the side of the cup, total energy, volume, etc.
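The following minimal Python sketch makes the microstate/macrostate distinction concrete. It uses a toy model that is an assumption and does not come from the slides: N = 4 two-state "atoms", each either on the left (L) or right (R) of the cup. A microstate is the full L/R sequence, and the macrostate keeps only how many atoms are on the left. The helper name `macrostate` is hypothetical.

```python
from itertools import product
from math import comb

# Toy model (assumption for illustration): N two-state "atoms", each either
# left (L) or right (R). A microstate is the full sequence of positions;
# the macrostate records only how many atoms are on the left.
N = 4

def macrostate(microstate):
    """Coarse-grain a microstate (a tuple of 'L'/'R') to the number of 'L' atoms."""
    return microstate.count("L")

# Enumerate every microstate and count how many share each macrostate.
counts = {}
for micro in product("LR", repeat=N):
    k = macrostate(micro)
    counts[k] = counts.get(k, 0) + 1

for k in sorted(counts):
    # The brute-force count matches the binomial coefficient C(N, k).
    assert counts[k] == comb(N, k)
    print(f"{k} on the left: {counts[k]} microstates")
```

Running it lists 1, 4, 6, 4, 1 microstates for 0 through 4 atoms on the left. The evenly mixed macrostate is realized by the most microstates, and this imbalance grows rapidly with N.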

What is entropy?

- thermodynamics/statistical mechanics

\[S = - k_B\sum_i p_i\log p_i = k_B\log \Omega\]

where \(k_B\) is the Boltzmann constant and \(\Omega\) is the number of configurations (microstates). The second equality assumes each microstate is equally probable, \(p_i = 1/\Omega\).
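As a quick numerical check of the second equality, this Python sketch evaluates both sides for a uniform distribution. It assumes the natural logarithm, the usual convention when entropy is quoted in J/K, and uses the exact SI value of \(k_B\). The choice \(\Omega = 6\) and the function names are arbitrary illustrations.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def gibbs_entropy(probs, k_b=K_B):
    """S = -k_B * sum_i p_i ln p_i; zero-probability microstates contribute nothing."""
    return -k_b * sum(p * math.log(p) for p in probs if p > 0)

def boltzmann_entropy(omega, k_b=K_B):
    """S = k_B ln(Omega), valid when all Omega microstates are equally probable."""
    return k_b * math.log(omega)

omega = 6                      # arbitrary number of microstates
uniform = [1 / omega] * omega  # p_i = 1 / Omega for every microstate
print(gibbs_entropy(uniform), boltzmann_entropy(omega))
```

The two printed values agree to floating-point precision, since \(-\sum_i \frac{1}{\Omega}\log\frac{1}{\Omega} = \log\Omega\).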

\[S = - k_B\sum_i p_i\log p_i\]

This is \(k_B\) times the expected value of \(-\log p_i\), where \(p_i\) is the probability that microstate \(i\) is occupied.

[J/K]
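Reading the formula as an expectation, this Python sketch weights each microstate's \(-\ln p_i\) by its probability \(p_i\). The skewed distribution over four microstates is a hypothetical example, not data from the slides. It illustrates a general fact (Gibbs' inequality): over a fixed set of \(\Omega\) microstates, \(S \le k_B \ln \Omega\), with equality only for the uniform distribution.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def entropy_as_expectation(probs, k_b=K_B):
    """k_B times the expected value of -ln p_i, each microstate weighted by p_i."""
    return k_b * sum(p * -math.log(p) for p in probs if p > 0)

# Hypothetical occupation probabilities for 4 microstates (illustration only).
skewed = [0.7, 0.1, 0.1, 0.1]
uniform = [0.25] * 4

s_skewed = entropy_as_expectation(skewed)
s_uniform = entropy_as_expectation(uniform)  # equals k_B ln 4
print(f"skewed:  {s_skewed:.3e} J/K")
print(f"uniform: {s_uniform:.3e} J/K")
```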

The second law of thermodynamics states that the total entropy of an isolated system can never decrease over time, and is constant if and only if all processes are reversible.

"Entropy always increases. Why is that? Because it's overwhelmingly more likely that it will." -- Brian Cox
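The "overwhelmingly more likely" argument can be simulated with the Ehrenfest urn model, a standard toy model swapped in here for illustration. N particles start in the left half of a box. At each step one particle, chosen uniformly at random, hops to the other half. Taking the macrostate to be the number of particles on the left gives \(S/k_B = \ln \binom{N}{n_\text{left}}\). The function name, N = 100, the step count, and the seed are assumptions.

```python
import math
import random

def ehrenfest(n_particles=100, steps=1000, seed=0):
    """Ehrenfest urn model. All particles start on the left; each step a
    uniformly chosen particle switches sides. Returns S / k_B = ln C(N, n_left)
    before the first step and after every step."""
    rng = random.Random(seed)
    n_left = n_particles  # the single, most ordered microstate: S = 0
    history = [math.log(math.comb(n_particles, n_left))]
    for _ in range(steps):
        # The chosen particle is on the left with probability n_left / N.
        if rng.random() < n_left / n_particles:
            n_left -= 1
        else:
            n_left += 1
        history.append(math.log(math.comb(n_particles, n_left)))
    return history

s = ehrenfest()
s_max = math.log(math.comb(100, 50))
print(f"start: {s[0]:.2f}, after 1000 steps: {s[-1]:.2f}, max: {s_max:.2f}")
```

Individual steps can lower the entropy. The rise toward the maximum is statistical: mixed macrostates have vastly more microstates, so the system almost always drifts toward them.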

What is entropy?

- information theory: expected value of self-information

Shannon's entropy, where \(p(x_i)\) is the probability of the possible outcome \(x_i\):

\[H(X) = -\sum_{i = 1}^{n} p(x_i)\log_2 p(x_i)\]

[bits]
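The following Python sketch computes \(H(X)\) as the expected value of the self-information \(I(x) = -\log_2 p(x)\), matching the definition above. The example distributions (fair coin, fair die, a coin biased 0.9/0.1, a certain outcome) are illustrative choices.

```python
import math

def self_information(p):
    """Surprise of an outcome with probability p, in bits: -log2 p."""
    return -math.log2(p)

def shannon_entropy(probs):
    """H(X) = sum_i p(x_i) * I(x_i): expected self-information in bits.
    Outcomes with p = 0 are skipped (0 log 0 is taken as 0)."""
    return sum(p * self_information(p) for p in probs if p > 0)

print(shannon_entropy([0.5, 0.5]))  # fair coin
print(shannon_entropy([1/6] * 6))   # fair die
print(shannon_entropy([0.9, 0.1]))  # biased coin: less surprising on average
print(shannon_entropy([1.0]))       # certain outcome: no surprise at all
```

A fair coin gives exactly 1 bit, a fair die gives \(\log_2 6 \approx 2.585\) bits, and the biased coin gives about 0.469 bits.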

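Source coding theorem: no lossless code can describe outcomes of \(X\) with fewer than \(H(X)\) bits per outcome on average, and encoding long blocks of outcomes can get arbitrarily close to this bound.

In other words, the Shannon entropy of a model is roughly the number of bits of information gained, on average, by doing an experiment on a system the model describes.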
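To see the bound in action, this Python sketch builds a Huffman code, one standard optimal symbol-by-symbol prefix code chosen here for illustration. It then compares the average codeword length \(L\) with \(H(X)\). For a single-symbol Huffman code, \(H(X) \le L < H(X) + 1\). The function names and example distributions are assumptions.

```python
import heapq
import math

def huffman_lengths(probs):
    """Codeword length of each symbol in a binary Huffman code."""
    # Heap entries: (probability, unique tie-breaker, symbols in this subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        # Merging two subtrees adds one bit to every codeword inside them.
        for s in syms1 + syms2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths

def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

probs = [0.5, 0.25, 0.125, 0.125]
lengths = huffman_lengths(probs)
avg_len = sum(p * n for p, n in zip(probs, lengths))
print(lengths, avg_len, entropy_bits(probs))
```

For this distribution every probability is a power of 1/2, so the code meets the entropy exactly (1.75 bits). A skewed source such as [0.9, 0.1] still needs 1 bit per symbol against an entropy of about 0.469 bits. Closing that gap requires coding blocks of symbols together.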

Destination 2023

By omoussa
