LXD

Showing, through an example, an alternative containment solution compatible with Docker

what's LXD?

Canonical's approach to container management

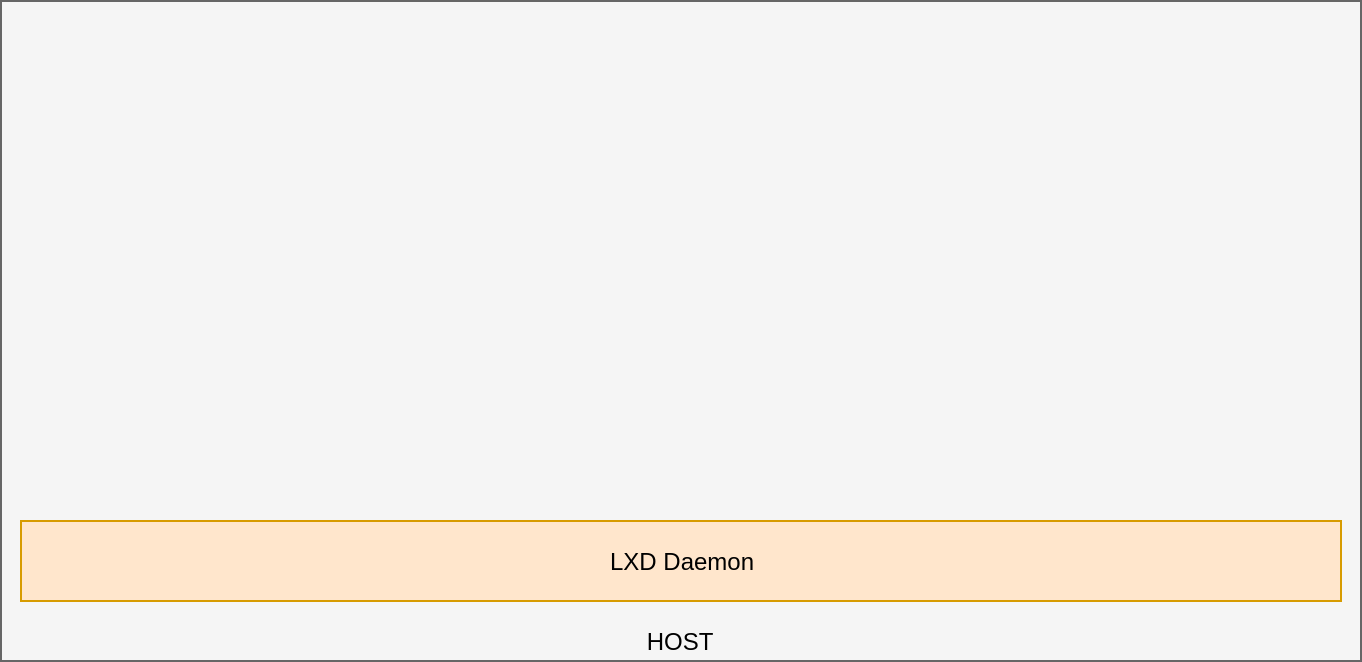

Hosts Linux machine containers

Hypervisor based on LXC

Infrastructure-as-a-Service

Compatible with Docker

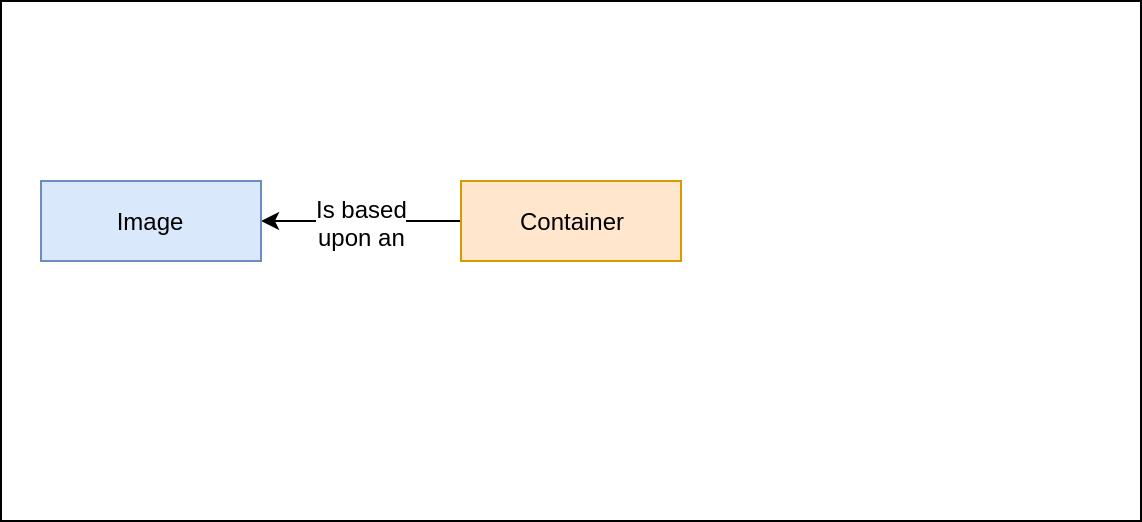

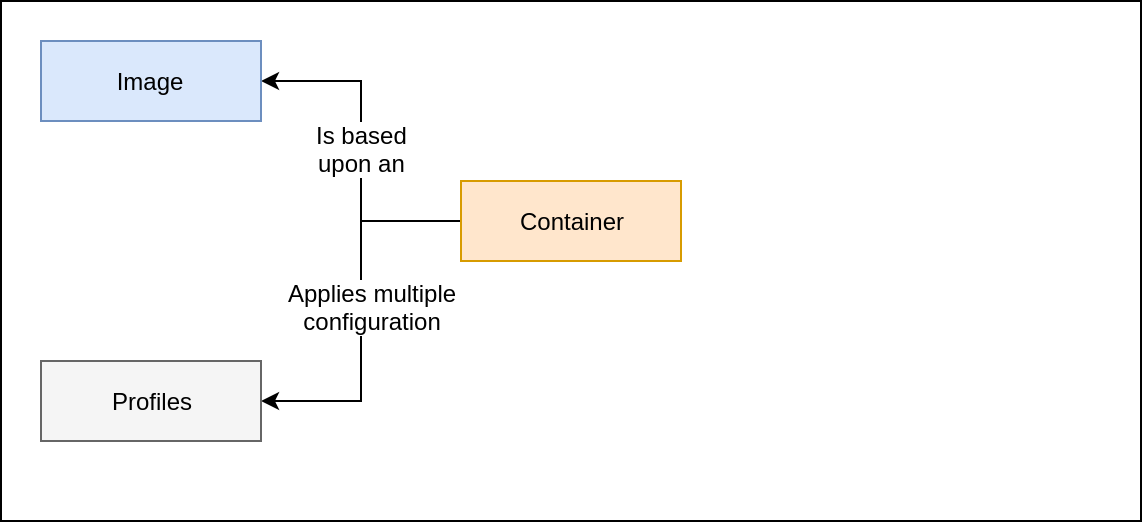

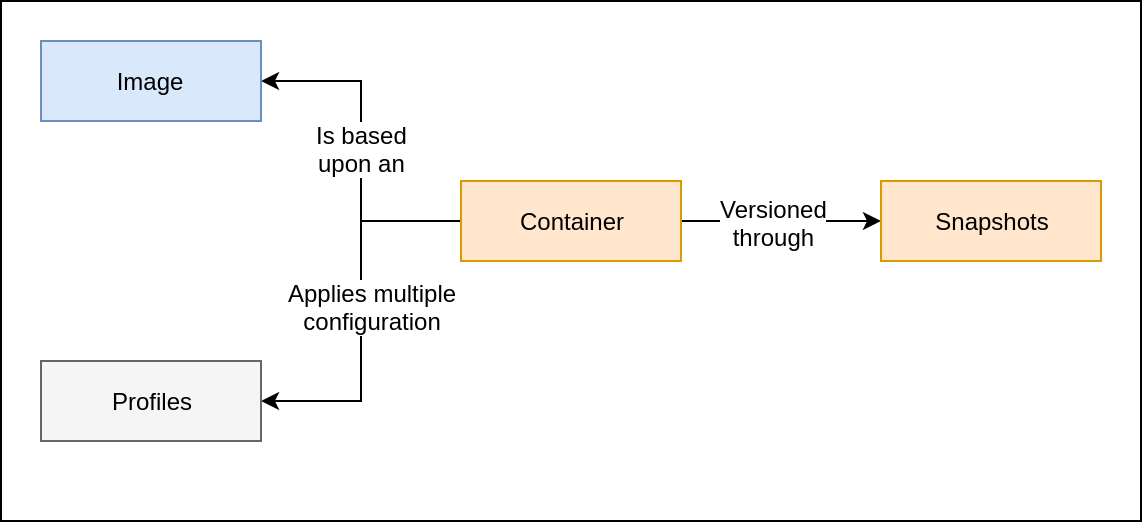

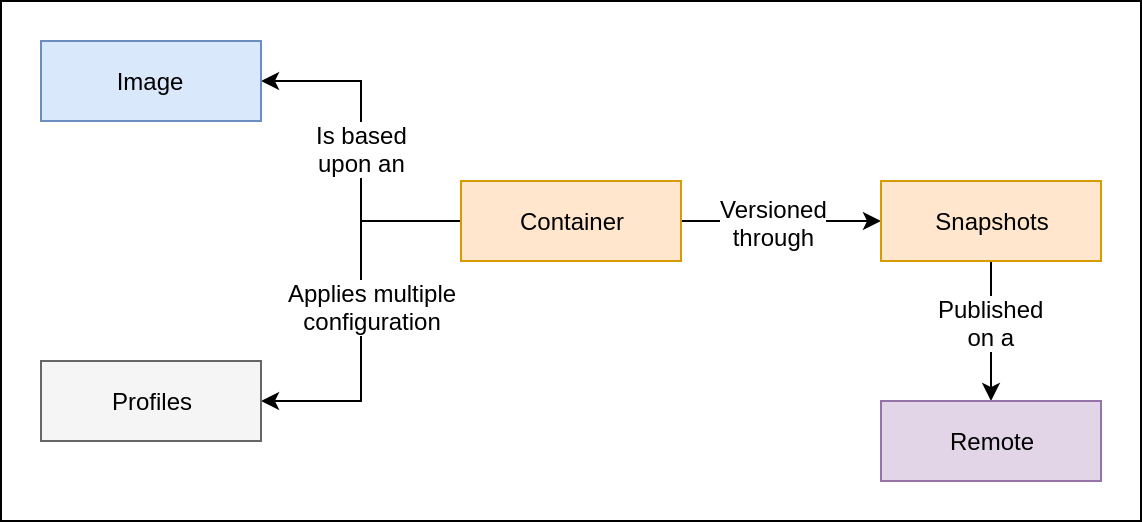

What's it made of?

installing LXD

sudo add-apt-repository ppa:ubuntu-lxc/lxd-stable
sudo apt update
sudo apt install lxd zfsutils-linux

DIY hadoop cluster

What do we need?

Let's play with LXD, the hard (fun) way.


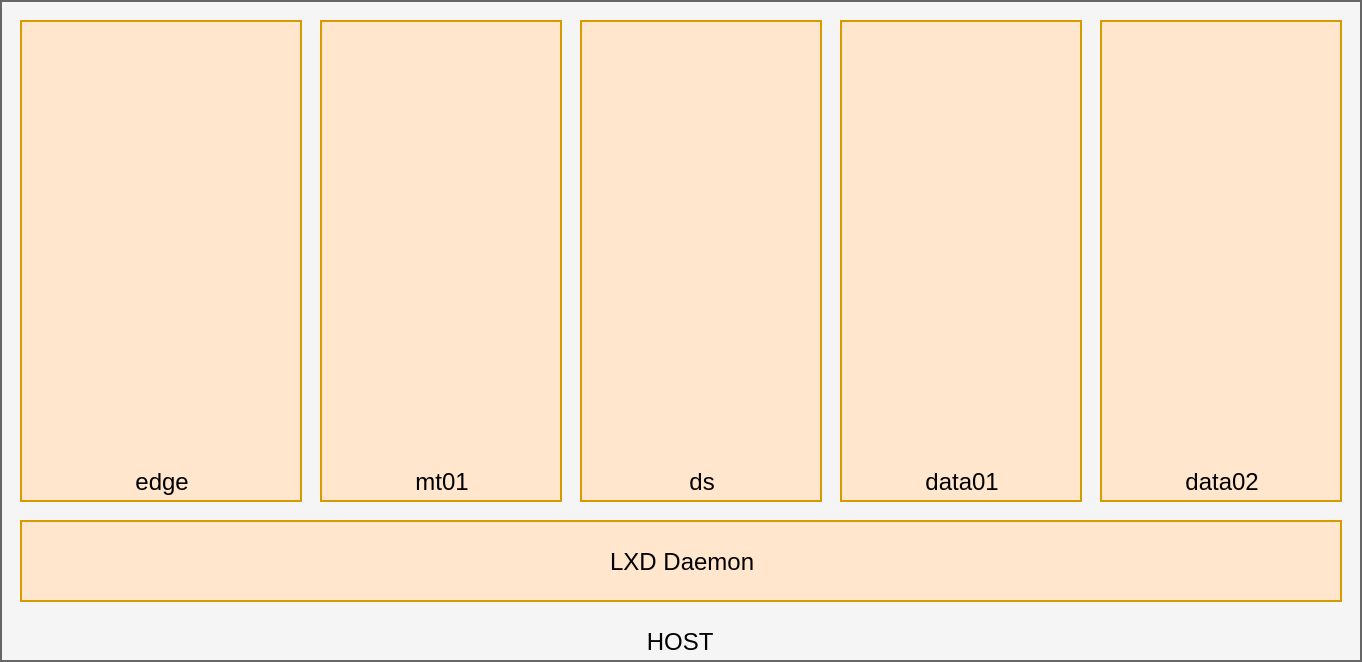

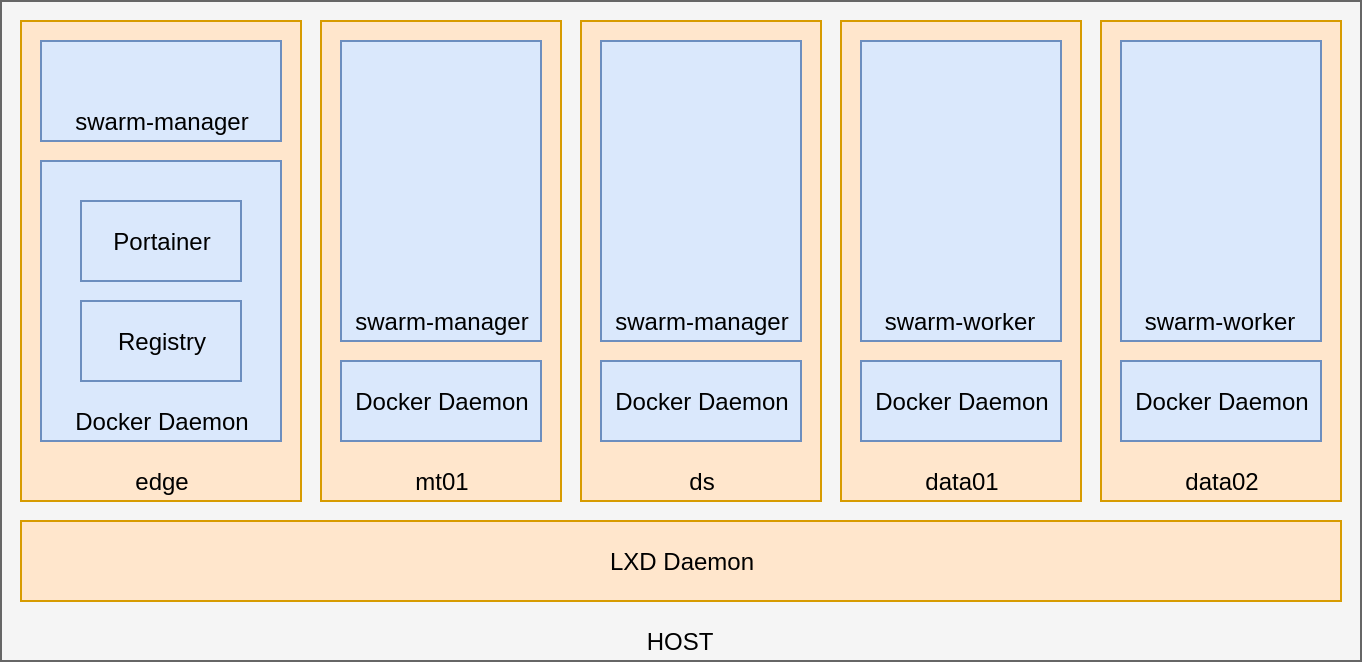

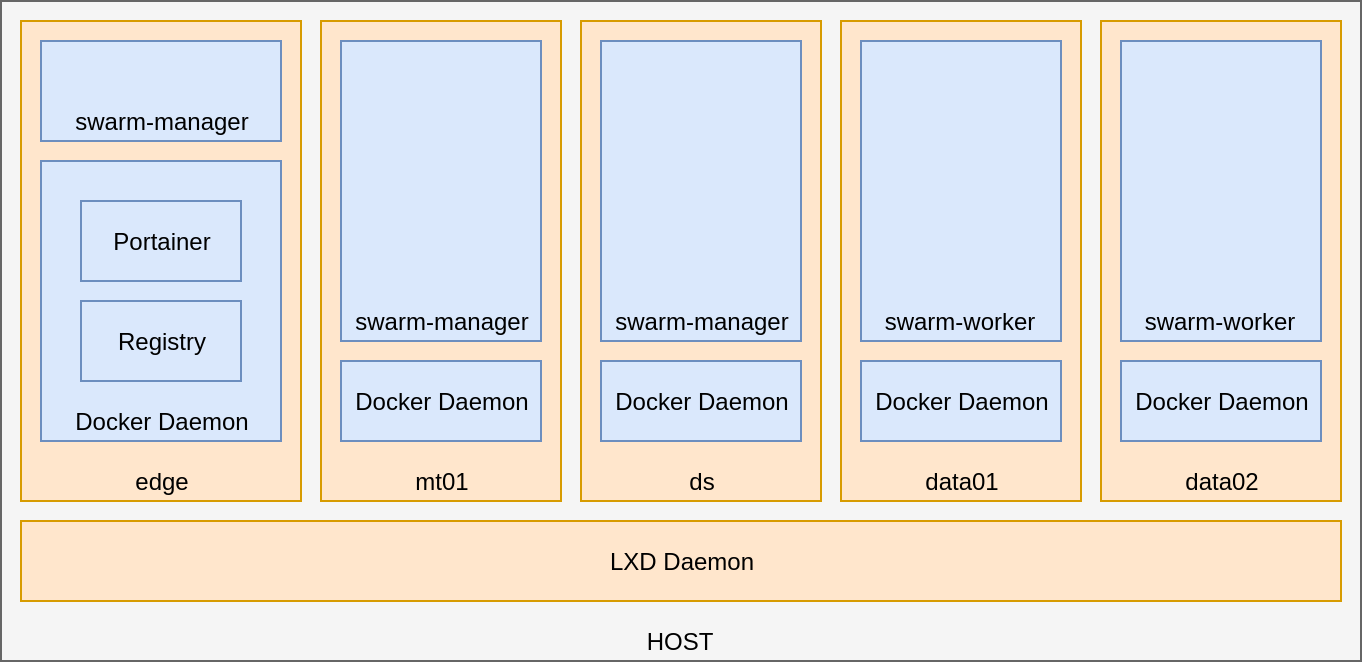

A Linux-based Host with LXD...


with several Machine Containers,


each of them running Docker, joined into a Swarm cluster...


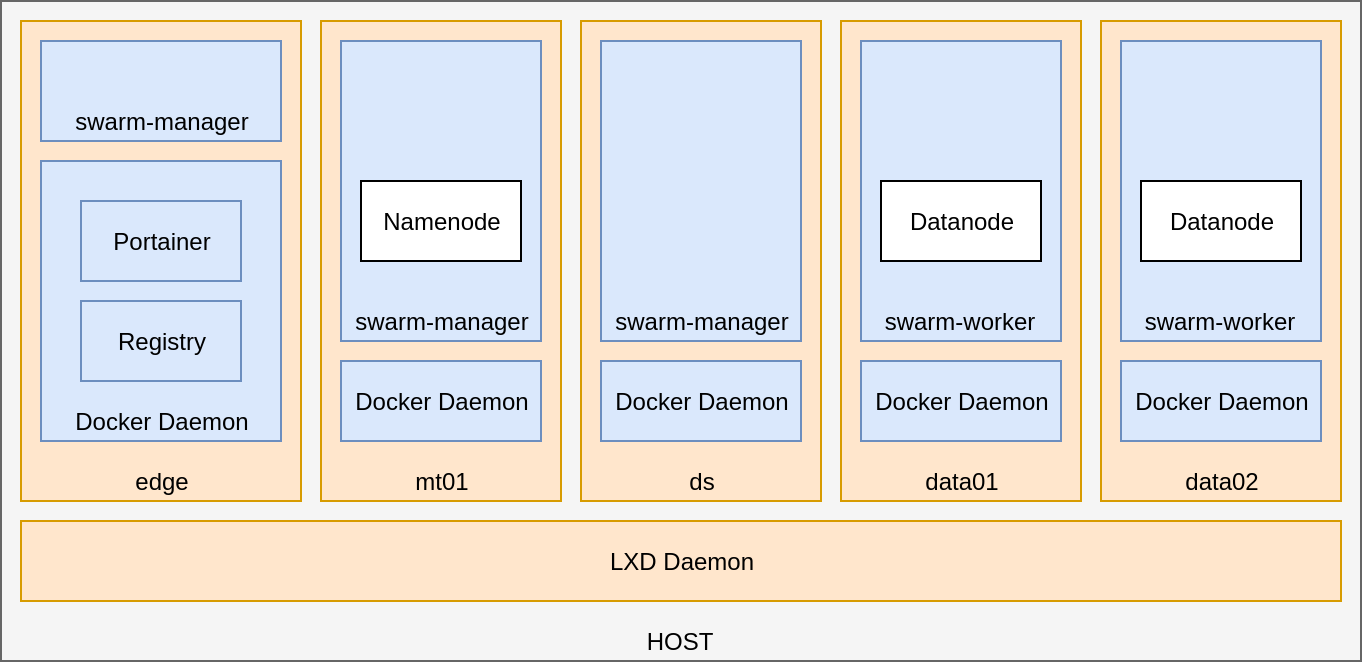

with an HDFS to store the data...


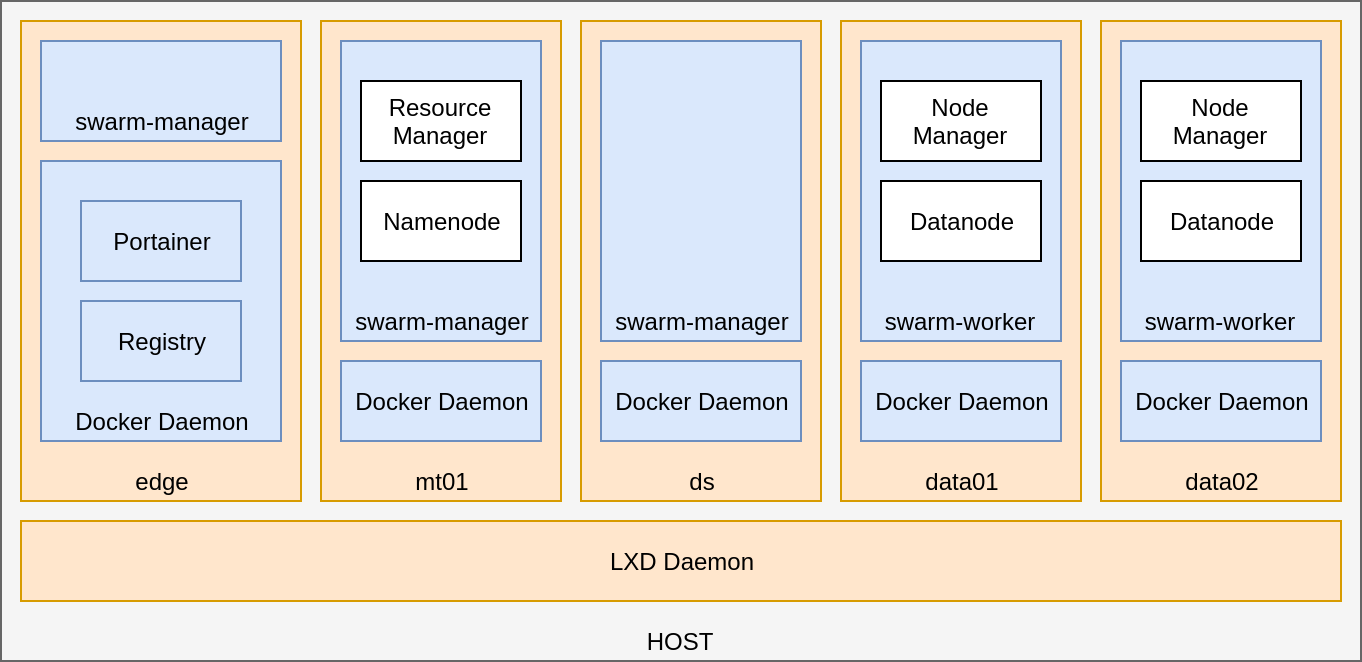

and YARN to distribute the computation...


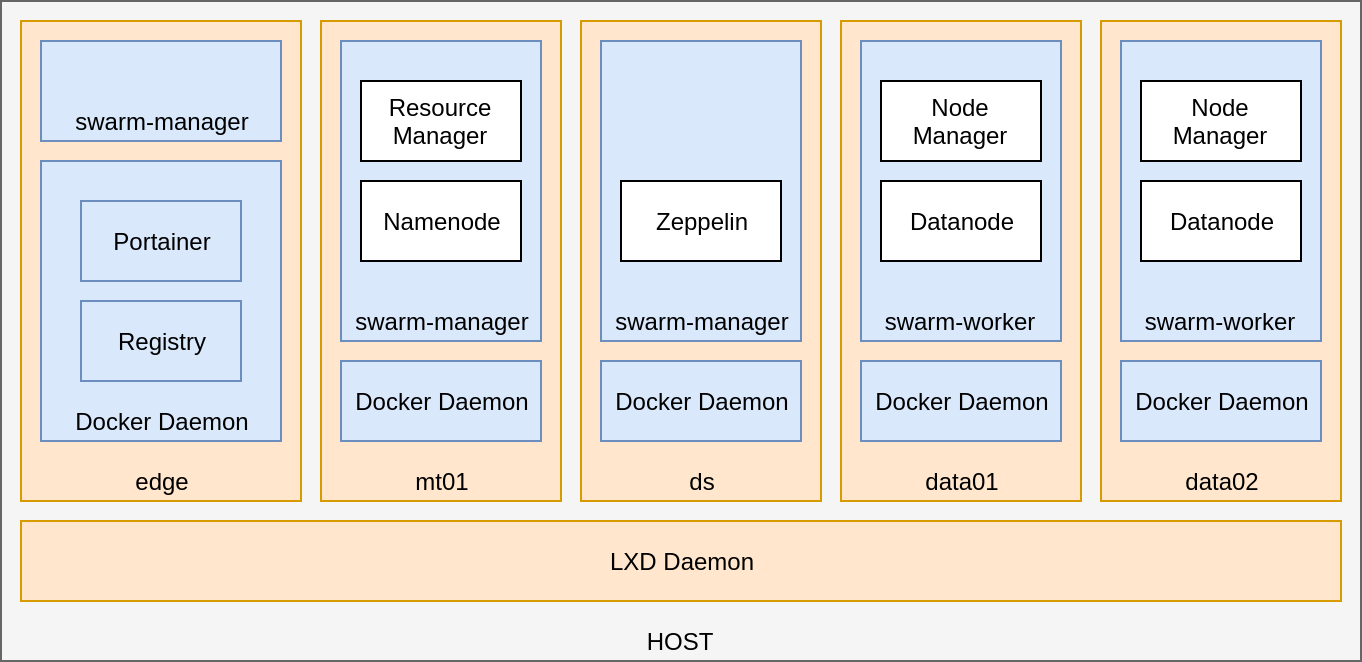

and, lastly, Apache Zeppelin to see the shiny stuff.

lxd profiles

$ lxc profile list
+-------------------+---------+
| default           | 0       |
+-------------------+---------+
| docker            | 0       |
+-------------------+---------+
$ lxc profile show default
config: {}
description: Default LXD profile
devices:
  eth0:
    nictype: bridged
    parent: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default

Profiles let you compose configurations and apply them to containers.

The "default" profile sets:

- The eth0 NIC, attached to the lxdbr0 bridge network.

- The root disk, stored in the default (ZFS) storage pool.

Both devices are created during LXD setup (lxd init).
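Listing the default profile's devices is a quick way to confirm that setup really created both of them. The sketch below is a hypothetical helper: `check_default_profile` is our own name, not an LXD command. It assumes `lxc profile device list` prints one device name per line.

```shell
#!/bin/bash
# Hypothetical helper: succeeds only if the profile (default: "default")
# defines both the eth0 NIC and the root disk created during LXD setup.
check_default_profile() {
  local profile devices dev
  profile="${1:-default}"
  # Assign separately from "local" so a failing lxc call is not masked
  devices="$(lxc profile device list "$profile")" || return 1
  for dev in eth0 root; do
    # -x: the device name must match a whole line
    if ! printf '%s\n' "$devices" | grep -qx "$dev"; then
      echo "profile '$profile' is missing device '$dev'; run 'lxd init' first" >&2
      return 1
    fi
  done
}
```

For example, `check_default_profile || exit 1` can guard the top of the scripts that follow.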

docker-profile

#!/bin/bash
# Kernel modules Docker needs inside the container (comma-separated list)
KERNEL_MODULES="overlay,nf_nat,ip_tables"
KERNEL_MODULES="$KERNEL_MODULES,ip6_tables,netlink_diag,br_netfilter"
KERNEL_MODULES="$KERNEL_MODULES,xt_conntrack,nf_conntrack,ip_vs,vxlan"
# Recreate the profile from scratch (ignore the error if it does not exist yet)
lxc profile delete docker_privileged 2>/dev/null || true
lxc profile copy docker docker_privileged
lxc profile set docker_privileged security.nesting true
lxc profile set docker_privileged security.privileged true
lxc profile set docker_privileged linux.kernel_modules "$KERNEL_MODULES"
lxc profile set docker_privileged raw.lxc lxc.aa_profile=unconfined

security.nesting allows running nested (Docker) containers inside the machine container. The kernel modules provide the overlay storage and the networking capabilities (iptables, IPVS, VXLAN) that Docker and Swarm rely on.
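Once the profile is built, its keys can be read back with `lxc profile get` to make sure they were applied. The following is a minimal sketch of such a check. The helper name `check_docker_profile` is hypothetical, and the module list simply mirrors the one above.

```shell
#!/bin/bash
# Hypothetical helper: reads the profile back and checks that nesting is
# enabled and that every module Docker needs appears in linux.kernel_modules.
check_docker_profile() {
  local profile nesting modules mod
  profile="${1:-docker_privileged}"
  nesting="$(lxc profile get "$profile" security.nesting)" || return 1
  if [ "$nesting" != "true" ]; then
    echo "security.nesting is not enabled on $profile" >&2
    return 1
  fi
  modules="$(lxc profile get "$profile" linux.kernel_modules)" || return 1
  for mod in overlay nf_nat ip_tables ip6_tables netlink_diag br_netfilter \
             xt_conntrack nf_conntrack ip_vs vxlan; do
    # Surround with commas so "ip_tables" does not match "ip6_tables"
    case ",$modules," in
      *",$mod,"*) ;;
      *) echo "missing kernel module: $mod" >&2; return 1 ;;
    esac
  done
}
```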

the image

Let's create the base image from Ubuntu Xenial (16.04):

#!/bin/bash
lxc init images:ubuntu/xenial hadoop-base-swarm -p default -p docker_privileged
lxc start hadoop-base-swarm
sleep 15

The lxc start command returns immediately, so we have to wait a little.
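A fixed sleep either wastes time or is too short on a slow host. As an alternative, the sketch below polls the container until DNS resolution works inside it. `wait_for_network` is a hypothetical name. Resolving `archive.ubuntu.com` is only an assumed proxy for "the network is up", chosen because the next step downloads packages.

```shell
#!/bin/bash
# Hypothetical helper: poll once per second until the container can resolve
# an external name, giving up after TIMEOUT seconds (default 60).
# archive.ubuntu.com is an assumption; any name reachable from the container works.
wait_for_network() {
  local name timeout elapsed
  name="$1"
  timeout="${2:-60}"
  elapsed=0
  until lxc exec "$name" -- getent hosts archive.ubuntu.com >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for $name" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
}
```

In the script above, `wait_for_network hadoop-base-swarm` would take the place of `sleep 15`.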

the image

Next, upload and execute a script to install Docker:

#!/bin/bash
lxc file push docker-install.sh hadoop-base-swarm/tmp/docker-install.sh
lxc exec hadoop-base-swarm -- bash /tmp/docker-install.sh
sleep 15

Notice that everything is issued from the host machine.
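The push-then-exec pattern can be wrapped in a small function so that the script's exit status reaches the host. The sketch below uses the hypothetical name `run_in_container`. Like the commands above, it assumes the script can run under bash inside the container.

```shell
#!/bin/bash
# Hypothetical wrapper: push a local script into the container's /tmp and run
# it there; the function returns the script's exit status.
run_in_container() {
  local name script target
  name="$1"
  script="$2"
  target="/tmp/$(basename "$script")"
  lxc file push "$script" "$name$target" || return 1
  lxc exec "$name" -- bash "$target"
}
```

For example, `run_in_container hadoop-base-swarm ./docker-install.sh || exit 1` stops the build as soon as the installation fails.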

docker-install.sh

A simple shell script uploaded during image creation:

#!/bin/sh
# Stop at the first failing step
set -e

# Install Docker CE
apt update -qq
apt install -y apt-transport-https ca-certificates curl software-properties-common build-essential
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -
add-apt-repository \
   "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
   $(lsb_release -cs) \
   edge"
apt update -qq
apt install -y docker-ce

# Install Docker-Compose
curl -L https://github.com/docker/compose/releases/download/1.16.1/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose

# https://github.com/lxc/lxd/issues/2977#issuecomment-331322777
touch /.dockerenv

# Install network tools (-y, since nobody is there to answer the prompt)
apt install -y telnet

Notice the .dockerenv file created at the image root. It is required to work around an issue with Docker Swarm.
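A few commands issued from the host can confirm the result before the image is published. This sketch assumes the container is still running. `check_docker_install` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical check run from the host after docker-install.sh.
check_docker_install() {
  local name
  name="$1"
  # docker version also contacts the daemon, so it fails if dockerd is down
  lxc exec "$name" -- docker version >/dev/null || return 1
  lxc exec "$name" -- docker-compose version >/dev/null || return 1
  # The Swarm workaround file must exist at the image root
  lxc exec "$name" -- test -f /.dockerenv
}
```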

the image

Finally, publish the image to the local repository:

#!/bin/bash
lxc stop hadoop-base-swarm
lxc publish hadoop-base-swarm --alias hadoop-base-swarm
lxc delete hadoop-base-swarm

Now we have a base Ubuntu image with Docker installed, ready to be used by other containers.
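Building the image is slow, so a rebuild can be skipped when the alias already exists. This is a minimal sketch, assuming `lxc image info <alias>` fails for unknown aliases. `image_exists` and `ensure_image` are hypothetical names, and the build command is whatever script wraps the steps above.

```shell
#!/bin/bash
# Hypothetical helper: true if the alias is already in the local image store.
image_exists() {
  lxc image info "$1" >/dev/null 2>&1
}

# Runs the given build command only when the alias is missing.
ensure_image() {
  local alias
  alias="$1"
  shift
  if image_exists "$alias"; then
    echo "image $alias already published, skipping build"
  else
    "$@"
  fi
}
```

For example, `ensure_image hadoop-base-swarm ./build-base-image.sh` runs the build only once, where `build-base-image.sh` is a placeholder for your own script.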

machine containers

Now that we have a base image, we need to set some basic constraints:

RESOURCE-BASED

- CPU
- Memory
- Disk

NETWORKING

- Topology

setting resources

Resource-based constraints are easy to state:

#!/bin/bash
lxc init hadoop-base-swarm edge -p docker_privileged
lxc config set edge limits.cpu 1
lxc config set edge limits.memory 1024MB
lxc config device add edge root disk pool=lxd path=/ size=8GB
lxc start edge
sleep 15

This creates a container limited to a single CPU and 1024MB of RAM, with an 8GB disk.
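The same settings repeat for every node, so they can be wrapped in a function. This is a hypothetical sketch: `create_node` does not appear in the original code. The pool name `lxd` and the `docker_privileged` profile are taken from the commands above.

```shell
#!/bin/bash
# Hypothetical helper: create and start one machine container from the base
# image with its own CPU, memory and disk limits.
create_node() {
  local name cpus memory disk
  name="$1"; cpus="$2"; memory="$3"; disk="$4"
  lxc init hadoop-base-swarm "$name" -p docker_privileged || return 1
  lxc config set "$name" limits.cpu "$cpus"
  lxc config set "$name" limits.memory "$memory"
  lxc config device add "$name" root disk pool=lxd path=/ size="$disk"
  lxc start "$name"
}
```

`create_node edge 1 1024MB 8GB` reproduces the commands above. Other nodes such as mt01 or data01 get their own limits the same way. As before, lxc start returns before the container is fully up.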

mapping devices

Now we need to make the Docker images and the certificates available inside the container:

#!/bin/bash
lxc config device add edge dockertmp disk source="$(realpath ./../docker)" path=/docker
lxc config device add edge registrytmp disk source="$(realpath registry)" path=/registry

Instead of pushing files, here we're mapping folders from the host file system into the container's file system.

LXD treats these mappings as devices of the container, just like its NIC or its root disk.
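By default, GNU `realpath` does not complain when only the last path component is missing, so a typo in the host folder name may go unnoticed at this step. The sketch below checks the host folder first. `map_dir` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: map a host directory into a container as a disk
# device, failing early if the host directory does not exist.
map_dir() {
  local name device src dest
  name="$1"; device="$2"; src="$3"; dest="$4"
  if [ ! -d "$src" ]; then
    echo "host directory not found: $src" >&2
    return 1
  fi
  lxc config device add "$name" "$device" disk source="$(realpath "$src")" path="$dest"
}
```

For example: `map_dir edge registrytmp registry /registry`.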

networking

What about machine connectivity?

$ lxc profile show default
config: {}
description: Default LXD profile
devices:
  eth0:
    nictype: bridged
    parent: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default

The default profile adds the eth0 device: a NIC attached to lxdbr0, a bridge that LXD creates on the host. Each container gets its own eth0 plugged into that bridge.

LXD also runs a DNS server (dnsmasq) on lxdbr0, so machines can reach each other by name.
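Name resolution between machine containers can be checked from the host. This sketch assumes `getent` is available in the Ubuntu image (it ships with libc-bin). `check_peers_resolve` is a hypothetical name, and the node names are the ones used later in this deck.

```shell
#!/bin/bash
# Hypothetical check: from inside one machine container, resolve each peer
# name through the DNS server LXD runs on lxdbr0.
check_peers_resolve() {
  local from peer
  from="$1"
  shift
  for peer in "$@"; do
    if ! lxc exec "$from" -- getent hosts "$peer" >/dev/null; then
      echo "$from cannot resolve $peer" >&2
      return 1
    fi
  done
}
```

For example, `check_peers_resolve mt01 edge data01` should succeed before mt01 tries to join the swarm at edge.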

macvlan

#!/bin/bash
lxc profile copy default default_macvlan
lxc profile device set default_macvlan eth0 nictype macvlan
lxc profile device set default_macvlan eth0 parent eth0

This profile is copied from default and modifies eth0. Instead of attaching the container's eth0 to lxdbr0, it attaches it directly to the host's physical interface (eth0).
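An existing container can be moved onto the new profile with `lxc profile assign`, which replaces its whole profile list. This is a hypothetical sketch (`use_macvlan`). The restart is a precaution so that eth0 is recreated. Keep in mind a known macvlan limitation: the host usually cannot reach the container through that same physical interface.

```shell
#!/bin/bash
# Hypothetical helper: replace the container's profiles with the macvlan
# variant plus docker_privileged, then restart it so eth0 is recreated.
use_macvlan() {
  local name
  name="$1"
  lxc profile assign "$name" default_macvlan,docker_privileged || return 1
  lxc restart "$name"
}
```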

docker registry

To set up the docker registry, we need to add some certificates:

#!/bin/bash
lxc exec edge -- docker run -v /registry:/certs -e SSL_SUBJECT=edge paulczar/omgwtfssl
lxc exec edge -- cp /registry/cert.pem /etc/ssl/certs/
lxc exec edge -- mkdir -p /etc/docker/certs.d/edge:443/
lxc exec edge -- cp /registry/ca.pem /etc/docker/certs.d/edge:443/ca.crt
lxc exec edge -- cp /registry/key.pem /etc/docker/certs.d/edge:443/client.key
lxc exec edge -- cp /registry/cert.pem /etc/docker/certs.d/edge:443/client.cert

This gives us a secured Docker registry to pull images from.
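The steps above only make edge trust its own registry. Every other node that pulls from `edge:443` needs the CA as well. This sketch assumes the host's `registry/` folder is the one mapped into edge at `/registry`, so `ca.pem` already exists there. `trust_registry` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: install the registry CA on another node so its Docker
# daemon trusts https://edge:443 (the certs.d/<host>:<port>/ca.crt layout).
trust_registry() {
  local node registry
  node="$1"
  registry="${2:-edge:443}"
  lxc file push registry/ca.pem "$node/tmp/registry-ca.crt" || return 1
  # install -D creates the certs.d/<host>:<port> directory if needed
  lxc exec "$node" -- install -D -m 0644 /tmp/registry-ca.crt "/etc/docker/certs.d/$registry/ca.crt"
}
```

For example, run `trust_registry mt01` and `trust_registry data01` before deploying services on those nodes.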

run the registry

With everything set, we can start the docker registry:

#!/bin/bash
# Create a registry container
lxc exec edge -- docker run -d -p 443:5000 --restart=always \
  --name registry --env-file /registry/env -v /registry:/registry \
  registry:2
# Build and publish the docker images in the registry
lxc exec edge -- make -C /docker

docker swarm

With everything set, we can start the Docker swarm:

#!/bin/bash
lxc exec edge -- docker swarm init

Seems simple enough.
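Running `docker swarm init` on a node that is already part of a swarm fails. A script meant to be re-run may therefore want to check the swarm state first. This sketch assumes the installed Docker supports `docker info --format '{{.Swarm.LocalNodeState}}'`. `init_swarm_once` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: initialise the swarm on edge only if it is not active yet.
init_swarm_once() {
  local state
  state="$(lxc exec edge -- docker info --format '{{.Swarm.LocalNodeState}}')" || return 1
  if [ "$state" = "active" ]; then
    echo "edge already manages a swarm"
  else
    lxc exec edge -- docker swarm init
  fi
}
```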

swarming in

What about other managers and workers?

#!/bin/bash
JOIN_TOKEN=$(lxc exec edge -- docker swarm join-token manager --quiet)
lxc exec mt01 -- docker swarm join --token "${JOIN_TOKEN}" edge

Just fetch the join token from any of the managers and use it to bring the other nodes to the party.
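Workers join the same way, only with the worker token. The sketch below generalises the two commands above to any number of nodes. `join_swarm` is hypothetical, and any node names beyond mt01 and data01 are placeholders.

```shell
#!/bin/bash
# Hypothetical helper: join the given nodes to edge's swarm with the token
# for ROLE ("manager" or "worker").
join_swarm() {
  local role token node
  role="$1"
  shift
  token="$(lxc exec edge -- docker swarm join-token "$role" --quiet)" || return 1
  for node in "$@"; do
    lxc exec "$node" -- docker swarm join --token "$token" edge || return 1
  done
}
```

For example: `join_swarm manager mt01` followed by `join_swarm worker data01 data02`.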

nodes have roles

Apart from joining the swarm, every node except edge gets a role, set as a node label:

#!/bin/bash
lxc exec edge -- docker node update --label-add role-namenode=true mt01
lxc exec edge -- docker node update --label-add role-spark-master=true mt01

#!/bin/bash
lxc exec edge -- docker node update --label-add role-datanode=true data01
lxc exec edge -- docker node update --label-add role-spark-worker=true data01

hadoop services

We're here now:

And we're ready to throw some stuff into the cluster.

the datanode

#!/bin/bash -x
lxc exec edge-local-grid -- docker service create \
  --name "datanode-data04-local-grid" \
  --hostname "{{.Node.Hostname}}" \
  --restart-condition none \
  --network vxlan-local-grid \
  --publish mode=host,target=50010,published=50010 \
  --publish mode=host,target=50020,published=50020 \
  --publish mode=host,target=50075,published=50075 \
  --constraint "node.labels.role-datanode==true" \
  --replicas 4 \
  --env-file /docker/hadoop.env \
  edge-local-grid:443/local.grid/hdfs-datanode:2.8.1

Let's take a closer look.

docker service

#!/bin/bash -x
lxc exec edge -- docker service create \
  --name "datanode-data02-local-grid" \
  --hostname "{{.Node.Hostname}}" \
  --restart-condition none \
  --network vxlan-local-grid \
  --publish mode=host,target=50075,published=50075 \
  --constraint "node.labels.role-datanode==true" \
  --replicas 2 \
  --env-file /docker/hadoop.env \
  edge:443/local.cluster/hdfs-datanode:2.8.1

Why use docker service?

It lets me control the hostname of each service instance¹.

It also lets me control how ports are exposed, bypassing the Swarm's routing mesh (mode=host in --publish).

¹ Hostname templating is available since Docker 17.10 (edge).
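To see where the tasks actually landed, and in which state, `docker service ps` can be run on the manager. This is a hypothetical sketch (`show_placement`). It uses the `.Name`, `.Node` and `.CurrentState` placeholders of `docker service ps --format`.

```shell
#!/bin/bash
# Hypothetical helper: list the running tasks of a service and their nodes.
show_placement() {
  lxc exec edge -- docker service ps \
    --filter desired-state=running \
    --format '{{.Name}} {{.Node}} {{.CurrentState}}' \
    "$1"
}
```

For example, `show_placement datanode-data02-local-grid` should list only nodes labelled role-datanode.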

service constraints

Through constraints, I can specify where the services should be deployed.

That's why, previously, there was a step setting labels on each of the cluster nodes: it keeps Swarm from spreading the services across arbitrary nodes.
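Before relying on constraints, it can help to check which labels each node carries. This sketch dumps them with `docker node inspect`. `show_node_labels` is hypothetical, and the command runs through `sh -c` inside edge so that `$(docker node ls -q)` expands there, not on the host.

```shell
#!/bin/bash
# Hypothetical helper: print each swarm node's hostname and its labels as JSON.
show_node_labels() {
  # Single quotes keep $(...) literal on the host; sh inside edge expands it
  lxc exec edge -- sh -c \
    'docker node inspect --format "{{ .Description.Hostname }} {{ json .Spec.Labels }}" $(docker node ls -q)'
}
```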

"service discovery"

Creating an additional overlay network (vxlan-local-grid) gives the services shared network visibility.

And why attach all services to the same network? Services attached to the same user-defined network can find each other by name through Docker's embedded DNS, and that is the service discovery the cluster relies on.
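The vxlan-local-grid network used by the services has to exist before they are created. This sketch creates it on edge if it is missing. `create_overlay` is a hypothetical name. `--attachable`, which also lets standalone containers join the network, is an optional choice that the original code does not show.

```shell
#!/bin/bash
# Hypothetical helper: create the overlay network on the swarm manager unless
# it already exists.
create_overlay() {
  local net
  net="${1:-vxlan-local-grid}"
  if lxc exec edge -- docker network inspect "$net" >/dev/null 2>&1; then
    return 0
  fi
  lxc exec edge -- docker network create --driver overlay --attachable "$net"
}
```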

some conclusions

LXD offers a compact solution for virtualizing whole machines with containers:

It allows fine-grained control of resources such as CPU, memory and disk.

It lets you define complex virtual network layouts, although this requires some knowledge of how networking works.

It supports different file-system backends for the containers' persistence layer (ZFS, Btrfs, etc.).

and docker-compose?

Why all the hassle? Why not just use docker-compose?

It has its use cases, but it does things the way it sees fit.

A Hadoop cluster, however, relies on data locality, which means each service has to run in a specific place.

That's all for today

Many thanks for coming by

Code available at:

Sources

LXD as an alternative containment solution compatible with Docker

By Oriol López Sánchez
