Kubernetes Introduction

PanChuan 2018.11.08

Outline

- Kubernetes Overview

- Kubernetes Objects

- Kubernetes Work Mechanism

Kubernetes Overview

- Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications

- It groups the containers that make up an application into logical units for easy management and discovery

Kubernetes Overview

Kubernetes is Greek for pilot or helmsman (the person holding the ship's steering wheel)

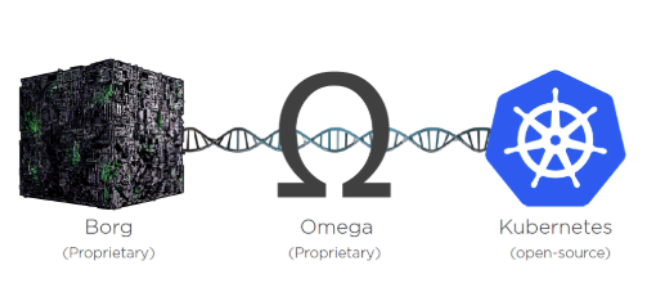

Kubernetes History

(Timeline figure: 2003, 2013, 2014)

Kubernetes History

- 2014: Key players (Microsoft, Red Hat, IBM, Docker) joined the K8s community

- 2015: v1.0 release; Kubernetes joins the newly formed CNCF

- 2016: The year Kubernetes goes mainstream

- 2017: Enterprise adoption and support

https://blog.risingstack.com/the-history-of-kubernetes/

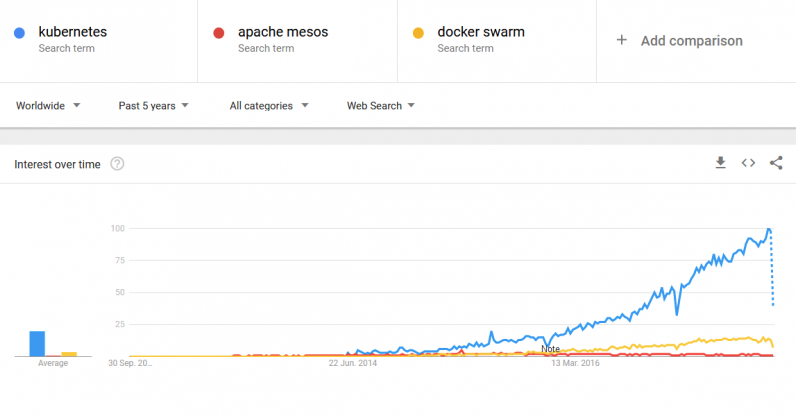

K8S Trends

Background: Why Kubernetes Is Needed

- Apps are moving from monoliths to microservices

- Configuration, deployment, and management become too complex to handle by hand

- We need automation

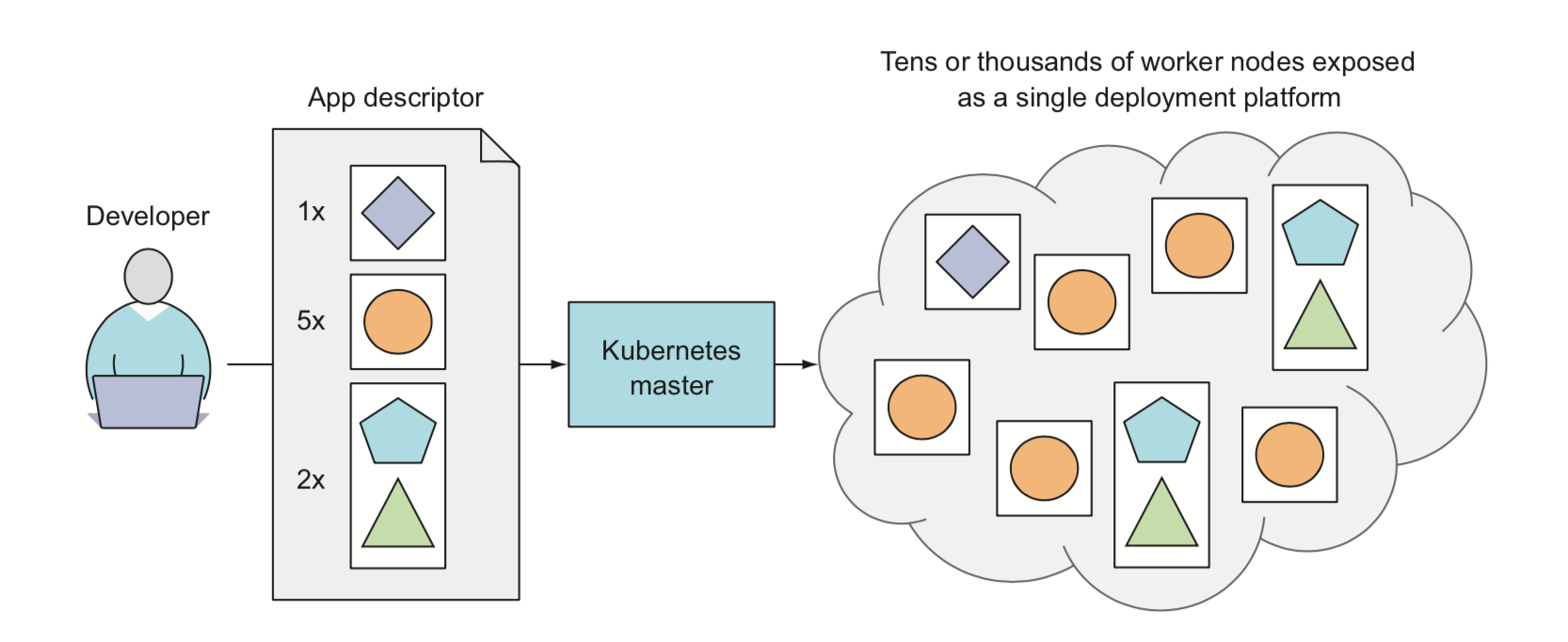

Kubernetes high-level

- K8s exposes the whole data center (DC) as a single deployment platform

- It can be thought of as an operating system for the cluster

Kubernetes Features

- Service discovery and load balancing

- Automatic binpacking

- Self-healing

- Automated rollouts and rollbacks

- Storage orchestration

- Horizontal scaling

- Batch execution

- Secret and configuration management

Kubernetes Benefits

- Simpler application deployment.

- Better hardware utilization.

- Better sleeping (self-healing, auto-scaling).

- DevOps to NoOps

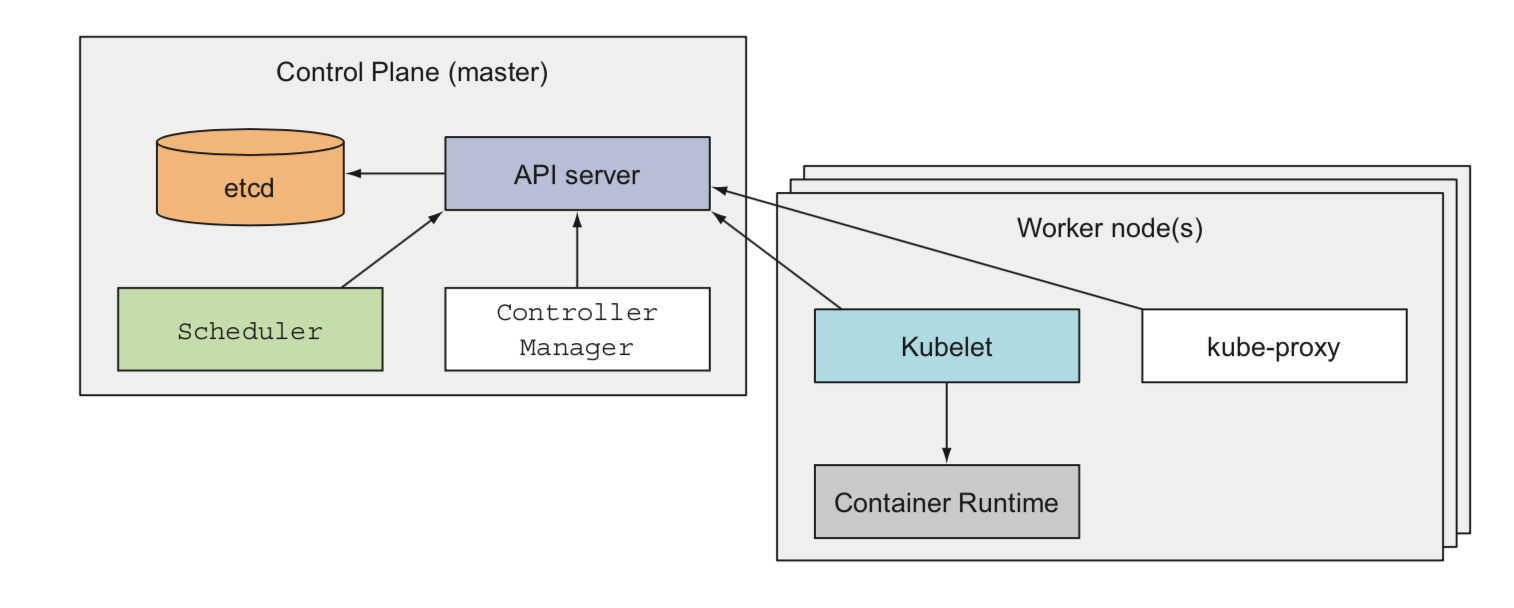

Kubernetes Architecture

Container runtime: Docker, rkt, or others

Kubernetes Objects

- K8s objects are persistent entities in the K8s system; together they represent the state of the cluster

- You post your desired state to the Kubernetes API

Describe Objects

- Provide the object spec in a JSON or YAML file, for example:

```yaml
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

Pods

- Pods are the central and most important concept in Kubernetes

- A pod encapsulates one or more containers and is the smallest unit that is run and scheduled

Why We Need Pods

- Containers are designed to run only a single process per container.

- Multiple containers are better than one container running multiple processes.

- Pods let you take advantage of all the features containers provide, while giving the processes the illusion of running together

Pod Features

- Containers in a pod are only partially isolated: they share some Linux namespaces

- They share the same network interface and hostname

- Pods communicate over a flat network (no NAT between pods)

Create a Pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
    name: nginx
spec:
  containers:
  - image: docker-registry.itv.qiyi.domain/nginx:latest
    name: nginx
    ports:
    - containerPort: 80
      protocol: TCP
```

```
kubectl create -f nginx.yaml
```

Don't Abuse Pods

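- Pods are relatively lightweight, so run many small pods rather than packing unrelated containers into one

- A pod is also the basic unit of scaling: all of its containers are scaled together

- Typical multi-container pods: a main container plus sidecar containers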
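A sidecar pattern can be sketched as below, assuming a hypothetical pod name (web-with-sidecar) and a busybox helper: the main nginx container serves files, while the sidecar regenerates them every 10 seconds. The two containers cooperate through a shared emptyDir volume and are scheduled, started, and scaled together as one pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar          # hypothetical name
spec:
  volumes:
  - name: html
    emptyDir: {}                  # scratch volume shared by both containers
  containers:
  - name: web                     # main container: serves the files
    image: nginx:1.7.9
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
      readOnly: true
  - name: content-refresher       # sidecar: rewrites index.html every 10 seconds
    image: busybox
    command: ["sh", "-c", "while true; do date > /html/index.html; sleep 10; done"]
    volumeMounts:
    - name: html
      mountPath: /html
```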

Pod Labels

- A label is an arbitrary key-value pair attached to a resource

- Use a label selector to select specific resources
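As an illustration, this is roughly how a label selector looks inside a Deployment or ReplicaSet spec, assuming pods labeled with app and env keys (the staging value is hypothetical). matchLabels does exact matching, matchExpressions allows set-based operators, and all requirements must hold at once. Service selectors only support the plain key-value (equality) form.

```yaml
# Fragment of a Deployment/ReplicaSet spec (label values are illustrative)
selector:
  matchLabels:
    app: nginx                # label "app" must equal "nginx"
  matchExpressions:
  - key: env
    operator: In              # other operators: NotIn, Exists, DoesNotExist
    values: ["test", "staging"]
```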

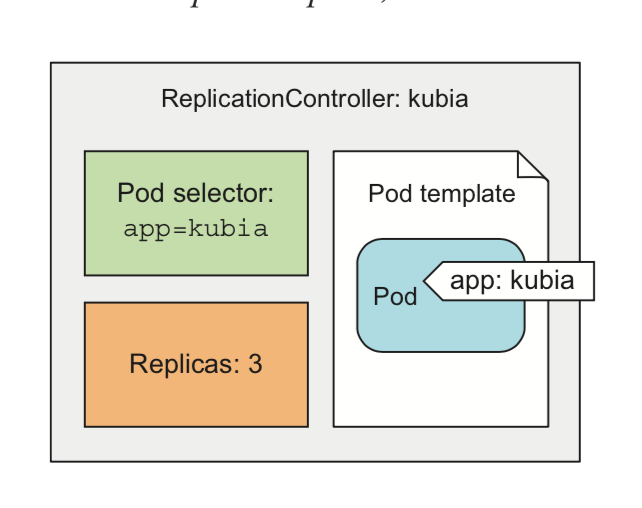

ReplicaSets

- Don't create pods directly; create ReplicaSets or Deployments instead

- A ReplicaSet (RS) automatically keeps its pods running, replacing any that disappear

- Three essential parts of an RS: a label selector, a replica count, and a pod template
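A minimal ReplicaSet showing the three essential parts, assuming a hypothetical name (nginx-rs) and the nginx image used elsewhere in this deck. The pod template's labels must match the selector, otherwise the API server rejects the object.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-rs          # hypothetical name
spec:
  replicas: 3             # 1. replica count
  selector:               # 2. label selector
    matchLabels:
      app: nginx
  template:               # 3. pod template (labels must match the selector)
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
```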

Container Probe

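- Liveness probe and readiness probe

  - Liveness probe fails: the kubelet restarts the container

  - Readiness probe fails: the pod is removed from the Service's endpoints until it passes again

- Three mechanisms to probe a container:

  - HTTP GET probe (checks the HTTP status code)

  - TCP socket probe (checks whether a connection can be opened)

  - Exec probe (runs a command and checks its exit status)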
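A sketch of both probe types on one container, assuming a hypothetical pod name and nginx on port 80; the timing values are illustrative, not recommendations. An HTTP GET probe succeeds on a status code from 200 to 399, and a TCP socket probe succeeds if the connection opens.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-probes          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
    livenessProbe:            # HTTP GET probe; on failure the container is restarted
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 15
      periodSeconds: 10
    readinessProbe:           # TCP socket probe; on failure the pod stops receiving Service traffic
      tcpSocket:
        port: 80
      periodSeconds: 5
    # An exec probe would instead run a command inside the container, e.g.:
    #   exec:
    #     command: ["cat", "/tmp/healthy"]
```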

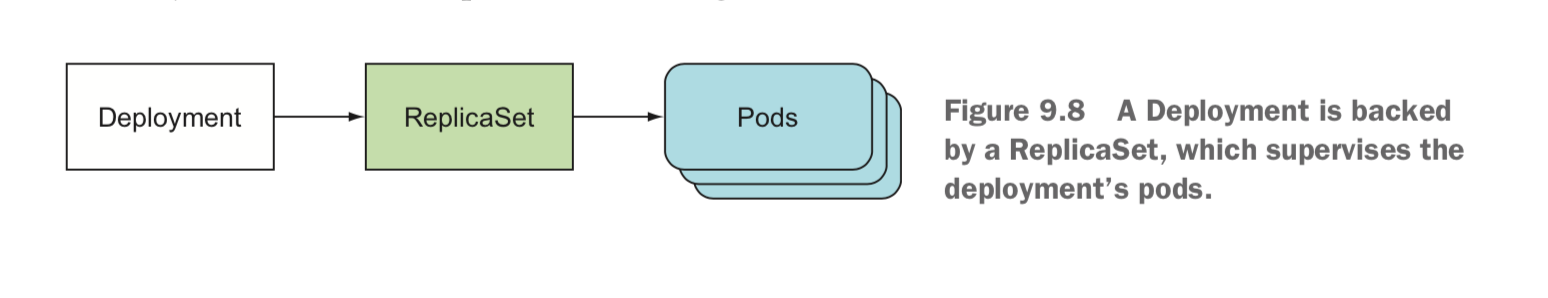

Deployments

- A higher-level resource meant for deploying applications and updating them declaratively
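With a Deployment, an update is declarative: you change the pod template (for example, the image tag) and re-apply it, and the Deployment replaces old pods with new ones according to its update strategy. A sketch of the strategy part of the spec, with illustrative values:

```yaml
# Fragment of a Deployment spec (values are illustrative)
spec:
  strategy:
    type: RollingUpdate       # the default; the alternative is Recreate
    rollingUpdate:
      maxSurge: 1             # at most 1 pod above the desired replica count during the update
      maxUnavailable: 0       # never go below the desired replica count
```

Setting maxUnavailable to 0 trades update speed for keeping full capacity during the rollout.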

Deployments

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-demo

spec:

replicas: 3

template:

metadata:

name: nginx-demo

labels:

app: nginx

env: test

spec:

containers:

- image: docker-registry.itv.qiyi.domain/nginx:latest

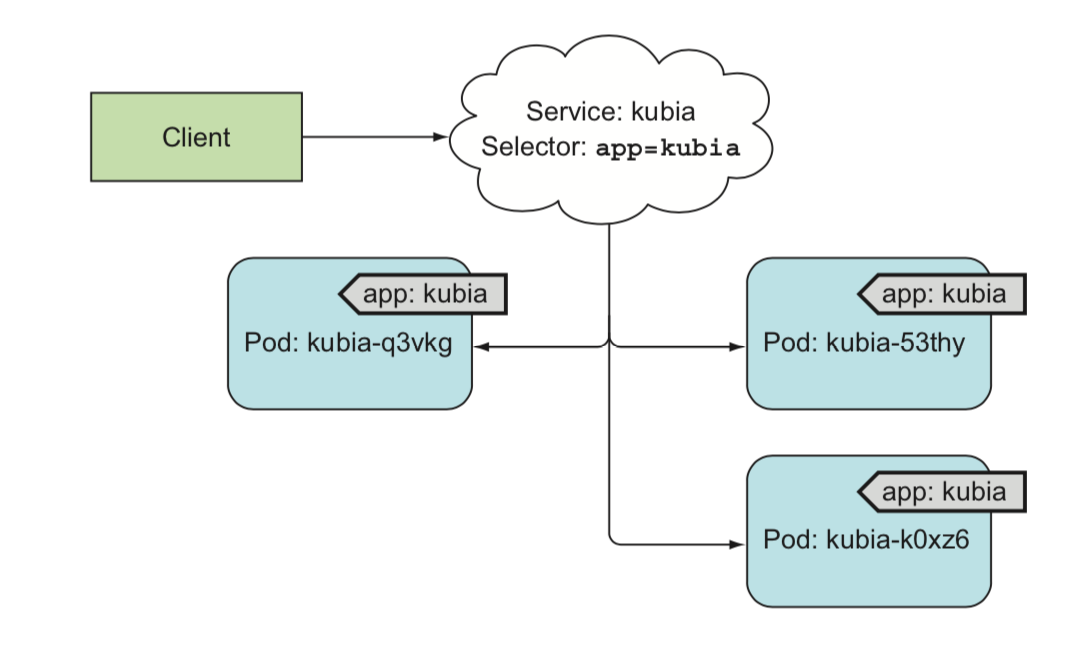

name: nginx-demoService

- Pods are ephemeral, so we need a way to provide a stable service

- A service uses a label selector to pick the pods it fronts

- Each service has an IP:port that never changes while the service exists

Service

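```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  ports:
  - port: 80          # port the service exposes on its cluster IP
    targetPort: 80    # container port traffic is forwarded to (nginx listens on 80)
  selector:
    app: nginx
```

```
kubectl get svc
```

- The cluster IP is virtual and only accessible inside the cluster; you can't ping it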

Service Discovery

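- Through environment variables: they are set only when a pod starts, so the service must be created before the client pods (startup order matters)

- Through DNS, e.g. epginfo.product.svc.cluster.local (service-name.namespace.svc.cluster.local)

Exposing Services to External Clients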

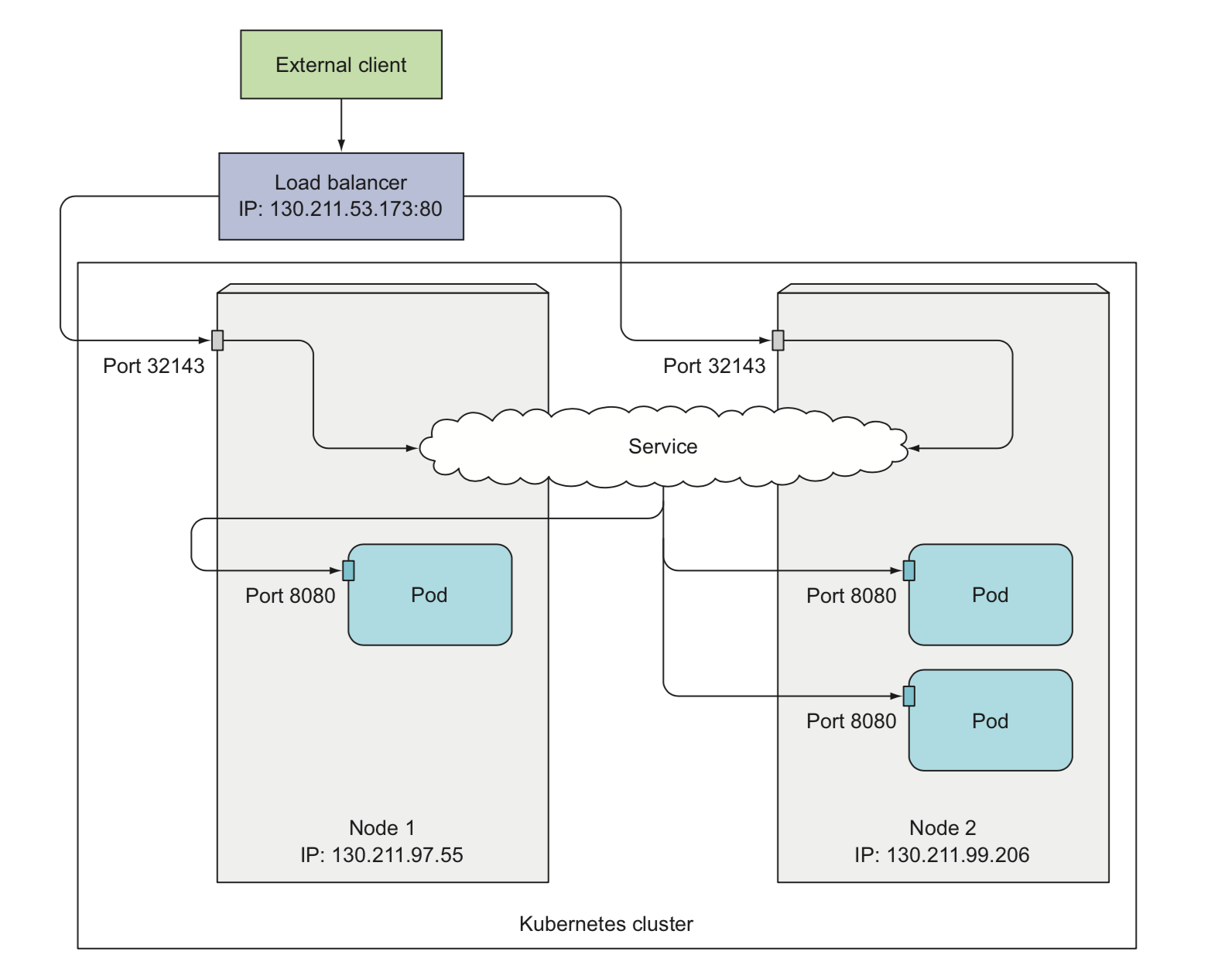

- NodePort: each cluster node opens a port on itself and redirects traffic received on that port to the underlying service

- LoadBalancer: an extension of NodePort that also provisions an external load balancer from the cloud provider

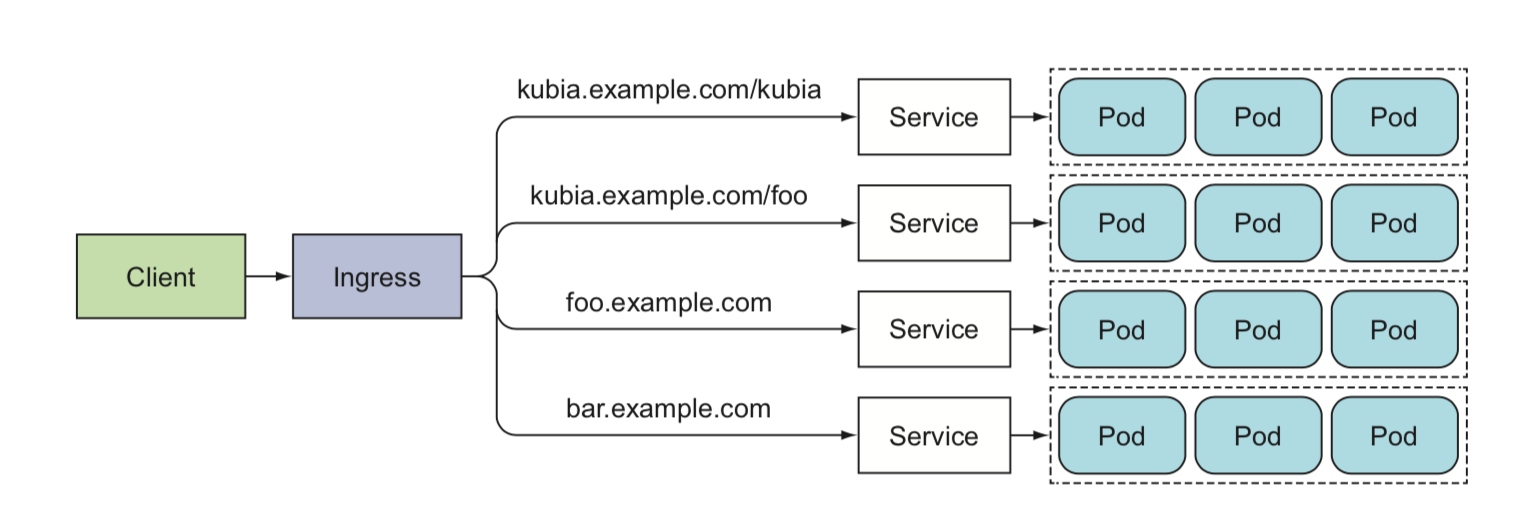

- Ingress: operates at the HTTP level and routes different paths to different services

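Service: NodePort

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kubia-nodeport
spec:
  type: NodePort
  ports:
  - port: 80          # port on the service's cluster IP
    targetPort: 8080  # port the backing pods listen on
    nodePort: 30123   # port opened on every cluster node (default range 30000-32767)
  selector:
    app: kubia
```

Service: LoadBalancer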
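A LoadBalancer service is declared like the NodePort service, only with a different type. This sketch assumes a hypothetical name (kubia-loadbalancer) and a cluster running on a cloud provider that can provision load balancers; without one, the external IP stays pending.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kubia-loadbalancer    # hypothetical name
spec:
  type: LoadBalancer          # cloud provider creates an external load balancer
  ports:
  - port: 80                  # port exposed by the load balancer and cluster IP
    targetPort: 8080          # port the kubia pods listen on
  selector:
    app: kubia
```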

Service: Ingress

Ingress controllers: NGINX, Traefik, Kong, HAProxy
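A sketch of path-based routing with one Ingress, reusing the nginx and kubia-nodeport services from the earlier manifests. It assumes the networking.k8s.io/v1 API (Kubernetes 1.19+; 2018-era clusters used extensions/v1beta1 with a different backend syntax), an installed NGINX ingress controller, and a hypothetical host name.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress              # hypothetical name
spec:
  ingressClassName: nginx         # assumes an NGINX ingress controller is installed
  rules:
  - host: demo.example.com        # hypothetical host
    http:
      paths:
      - path: /nginx              # requests under /nginx go to the nginx service
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80
      - path: /kubia              # requests under /kubia go to the kubia service
        pathType: Prefix
        backend:
          service:
            name: kubia-nodeport
            port:
              number: 80
```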

DaemonSet

- Runs exactly one instance of a pod on every worker node

- Examples: log collectors, kube-proxy, consul-agent
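A minimal log-collector DaemonSet, assuming a hypothetical name and using fluent/fluentd only as an illustrative image (a real collector would also need its own configuration). Note there is no replicas field: the DaemonSet controller creates one pod per node.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-collector             # hypothetical name
spec:
  selector:
    matchLabels:
      app: log-collector
  template:
    metadata:
      labels:
        app: log-collector
    spec:
      containers:
      - name: collector
        image: fluent/fluentd     # illustrative log-collector image
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log          # each pod reads its own node's log directory
```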

Job

- A Job runs pods that perform a single completable task; once the task finishes successfully, the pod is not restarted
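Beyond a single run, a Job spec can request several successful completions and limit how many pods run in parallel. A sketch of those fields, with illustrative values:

```yaml
# Fragment of a Job spec (values are illustrative)
spec:
  completions: 5      # the Job is complete after 5 pods finish successfully
  parallelism: 2      # run at most 2 of those pods at the same time
  backoffLimit: 4     # mark the Job failed after 4 retries (the default is 6)
```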

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-job
spec:
  template:
    metadata:
      labels:
        app: batch-job
    spec:
      restartPolicy: OnFailure   # Jobs must use OnFailure or Never, not the default Always
      containers:
      - name: main
        image: luksa/batch-job
```

CronJob

- Schedules Jobs to run periodically or at some time in the future
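Besides the cron expression, a CronJob spec can control late and overlapping runs. A sketch with illustrative values:

```yaml
# Fragment of a CronJob spec (values are illustrative)
spec:
  schedule: "*/15 * * * *"        # every 15 minutes, same as "0,15,30,45 * * * *"
  startingDeadlineSeconds: 15     # a run that can't start within 15s of its slot counts as missed
  concurrencyPolicy: Forbid       # skip a run while the previous Job is still running
```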

```yaml
apiVersion: batch/v1beta1  # use batch/v1 on Kubernetes 1.21+ (v1beta1 was removed in 1.25)
kind: CronJob
metadata:
  name: batch-job-every-fifteen-minutes
spec:
  schedule: "0,15,30,45 * * * *"  # Linux cron syntax: minute hour day-of-month month day-of-week
  jobTemplate:
    spec:
      template:
        metadata:
          labels:
            app: periodic-batch-job
        spec:
          restartPolicy: OnFailure
          containers:
          - name: main
            image: luksa/batch-job
```

Namespace

- Kubernetes groups objects into namespaces

- Examples: prod, staging, dev

- Some resources are cluster-level and don't belong to any namespace, e.g. Node, PersistentVolume, etc.
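A sketch of creating a namespace and placing a pod in it, assuming a hypothetical staging namespace. Object names only need to be unique within a namespace, so another pod named nginx could exist in prod or dev.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: staging           # hypothetical namespace
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: staging      # the pod lives in the staging namespace
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
```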

Other Resources

- ConfigMap

- Secrets

- Persistent Volumes

...
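A sketch of passing a ConfigMap and a Secret to a container as environment variables. All names and values here are hypothetical. Secret data is only base64-encoded, not encrypted, so stringData below is plain text in the manifest.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config            # hypothetical name
data:
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret            # hypothetical name
type: Opaque
stringData:                   # written as plain text; stored base64-encoded under .data
  DB_PASSWORD: "change-me"
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo           # hypothetical name
spec:
  containers:
  - name: app
    image: nginx:1.7.9
    envFrom:
    - configMapRef:
        name: app-config      # every key in the ConfigMap becomes an env variable
    env:
    - name: DB_PASSWORD
      valueFrom:
        secretKeyRef:         # a single key taken from the Secret
          name: app-secret
          key: DB_PASSWORD
```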

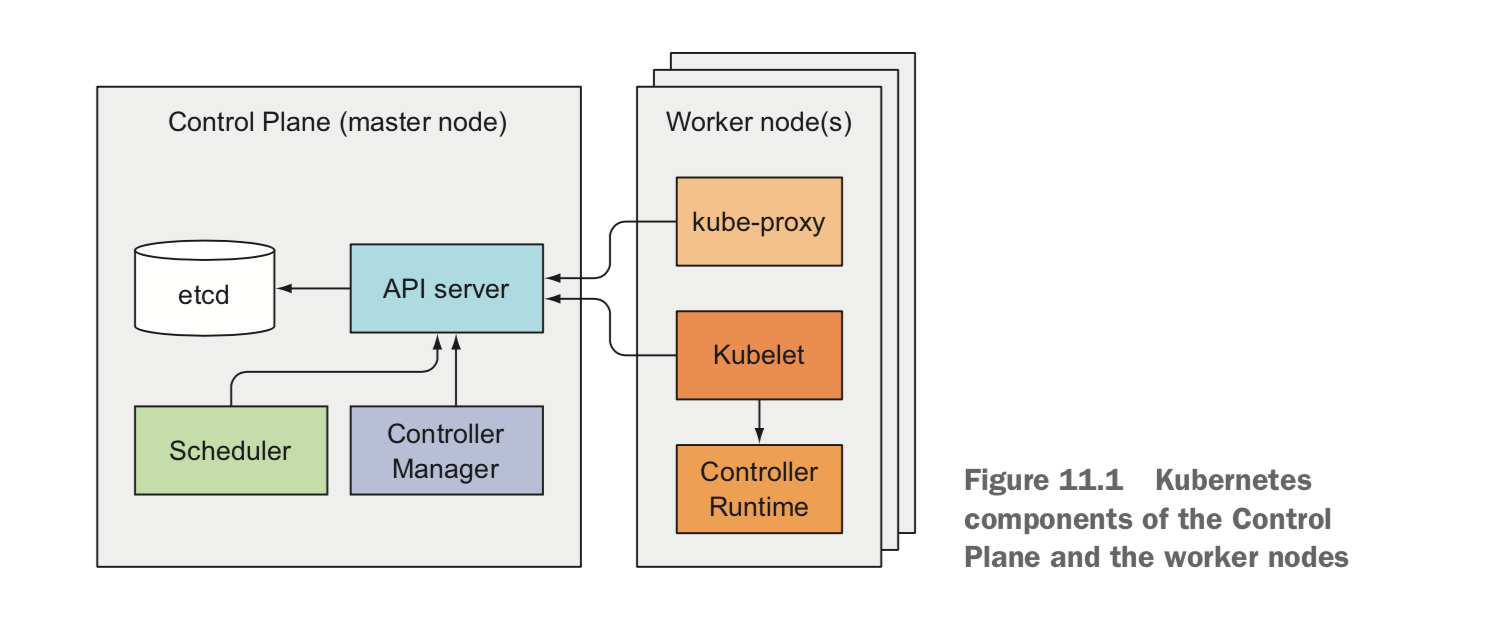

K8S Mechanism

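- Master node components: etcd, API server, scheduler, controller manager

- Worker node components: kubelet, kube-proxy, container runtime

- Add-on components:

  - DNS server

  - Dashboard

  - CNI network plugin

  - Heapster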

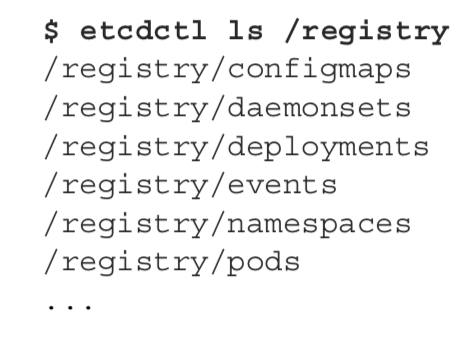

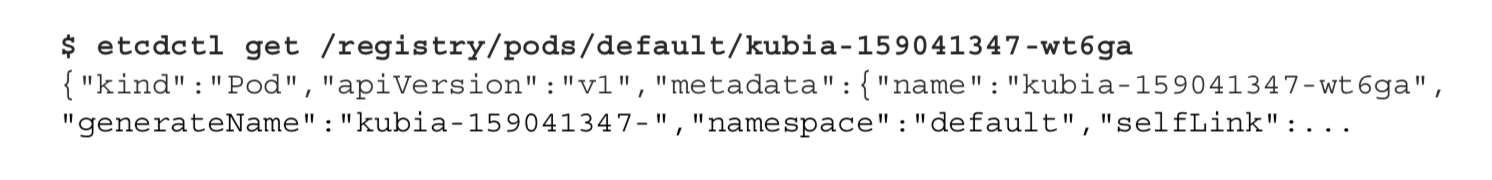

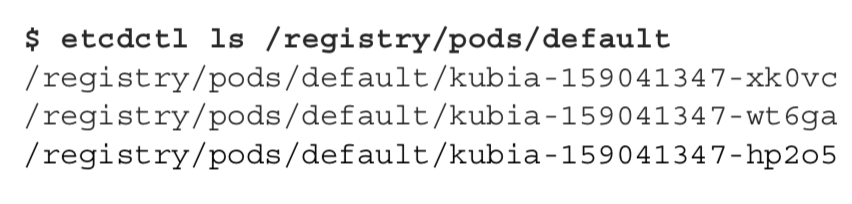

Etcd

- Kubernetes stores all cluster state and metadata in etcd

- The API server is the only component that talks to etcd directly

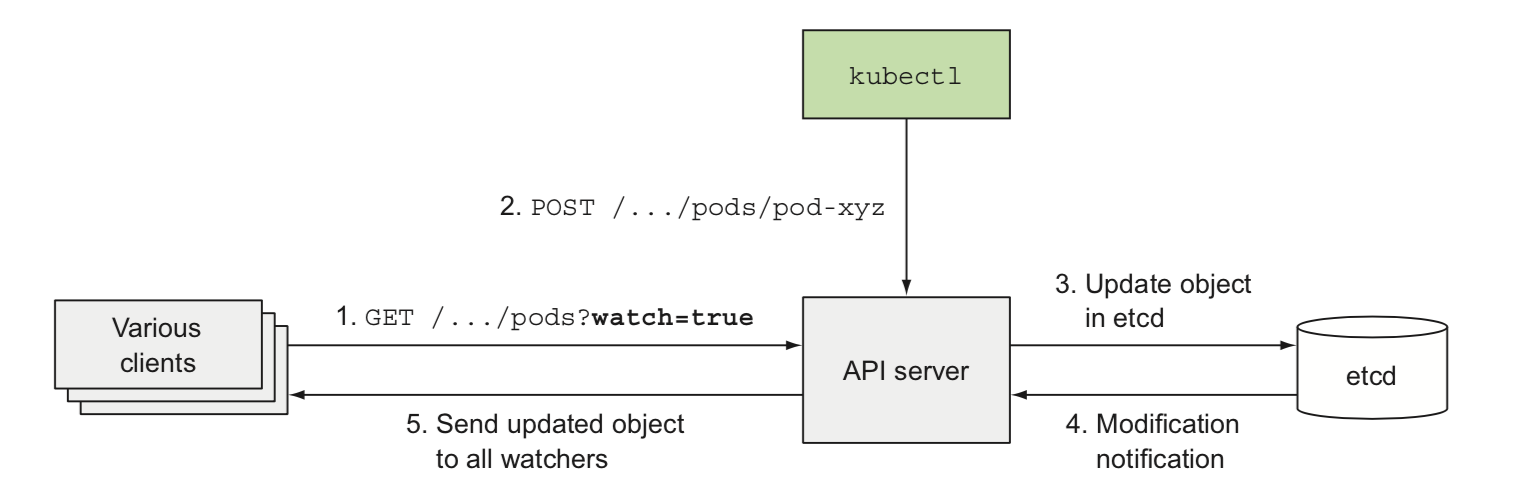

API Server

- The API server is the central component used by all other components

- API server clients can ask to be notified when a resource is created, modified, or deleted (a watch over a streaming HTTP connection)

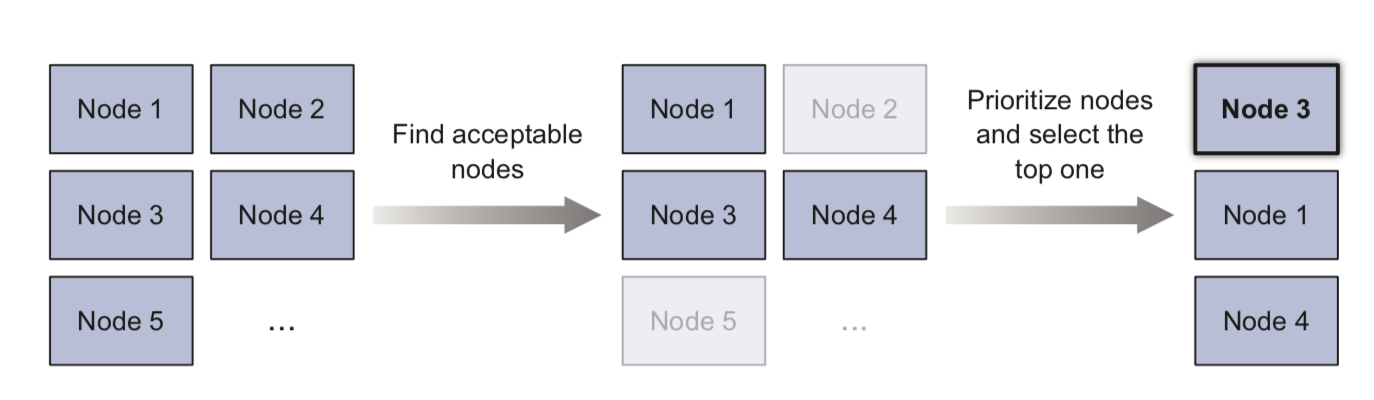

Scheduler

- The scheduler watches the API server for newly created pods that have not yet been assigned to a node

- The scheduler only updates the pod definition through the API server; it doesn't talk to the kubelet on the worker node

- The scheduling algorithm is configurable, and custom schedulers are supported
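A pod opts into a custom scheduler through the schedulerName field. In this sketch, my-scheduler is a hypothetical scheduler that would have to be deployed separately; if no scheduler with that name is running, the pod stays Pending.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: custom-scheduled          # hypothetical name
spec:
  schedulerName: my-scheduler     # hypothetical; omit to use "default-scheduler"
  containers:
  - name: nginx
    image: nginx:1.7.9
```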

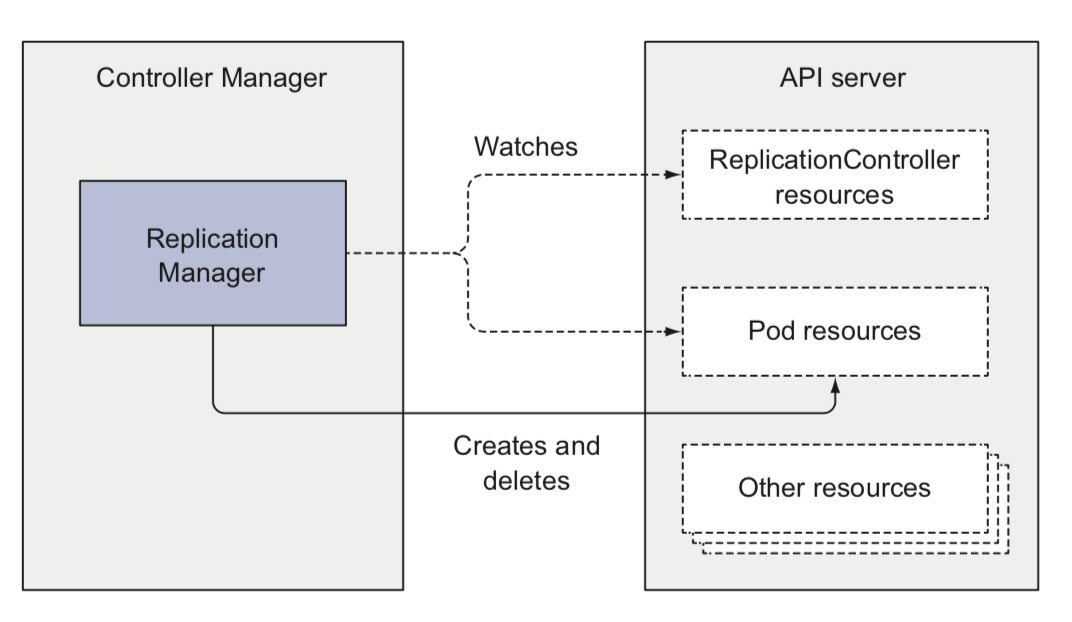

Controller manager

- Almost every resource type has a corresponding controller

- Controllers make sure the actual state of the system converges toward the desired state

  For example, any relevant change triggers the ReplicaSet controller to recheck the desired vs. actual replica count and act accordingly

Kubelet

- Kubelet is the component responsible for everything running on a worker node

- The kubelet creates containers (through the container runtime), monitors running containers, and reports their status, events, and resource consumption to the API server

How the Components Cooperate

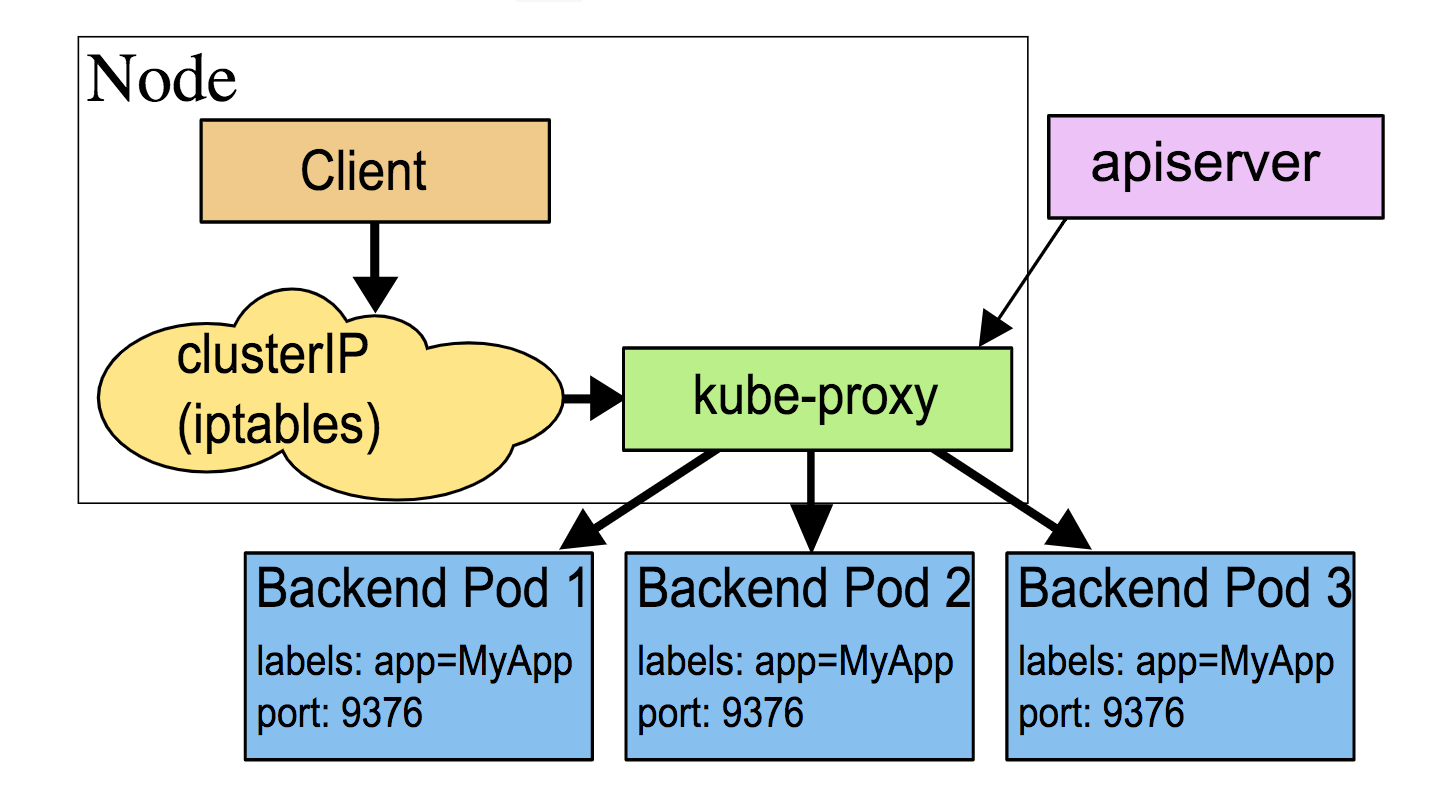

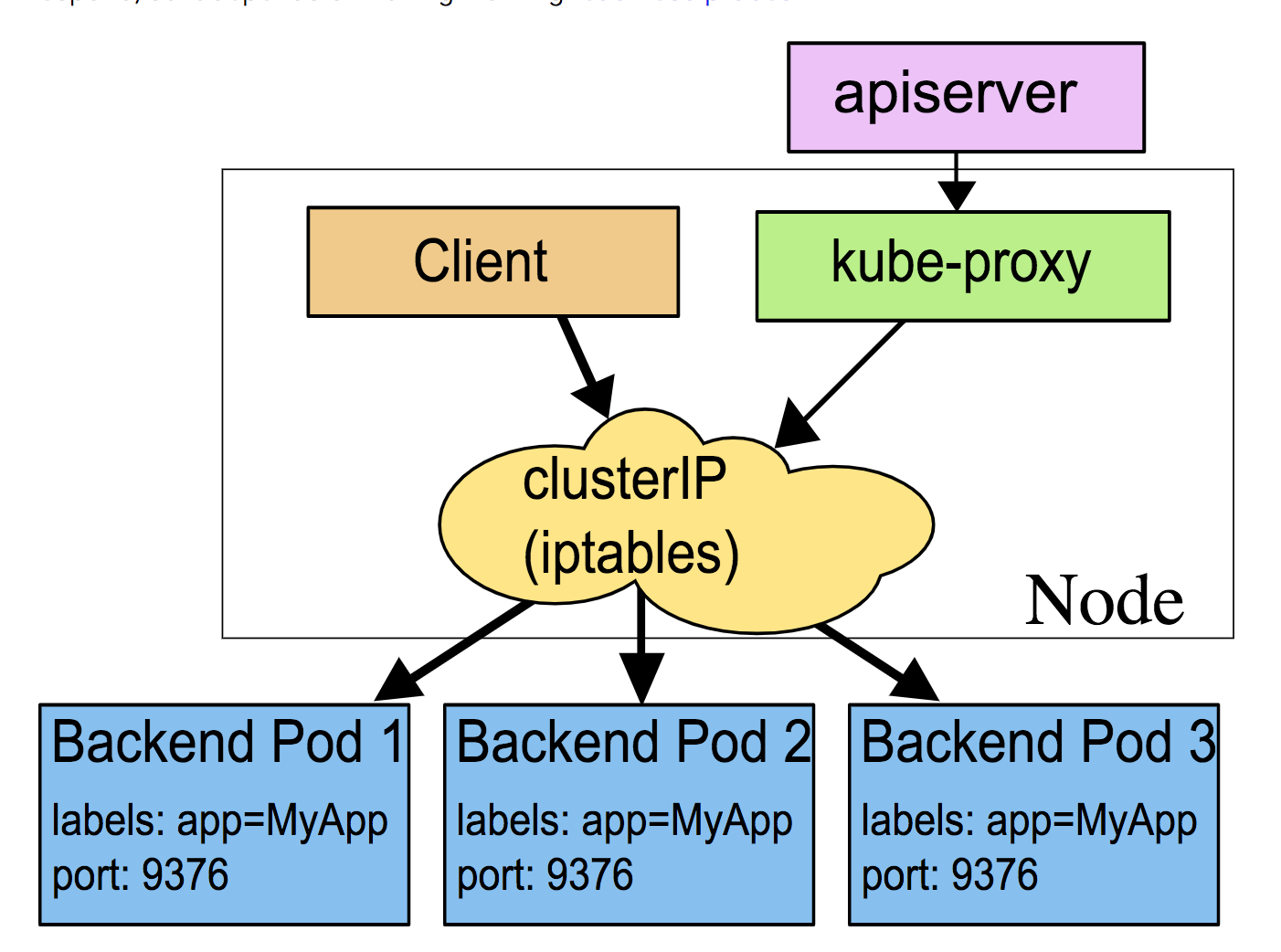

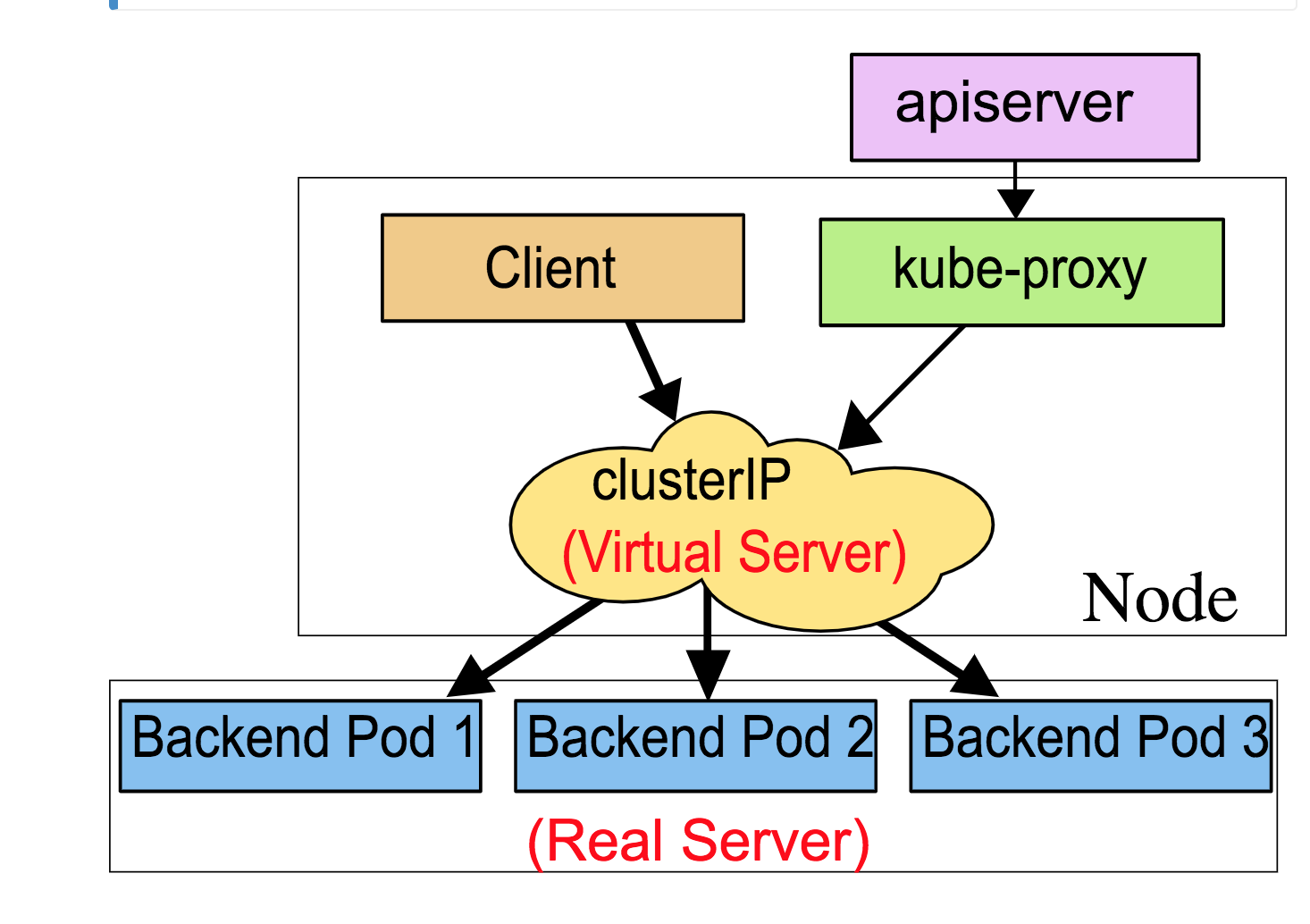

Kube-Proxy

- Everything related to services is handled by the kube-proxy process running on each node

- When a new service is created (kube-proxy learns of it by watching the API server), kube-proxy sets up iptables rules that redirect traffic for the service's virtual IP:port to its pods

Proxy-Mode: userspace

Proxy-Mode: iptables

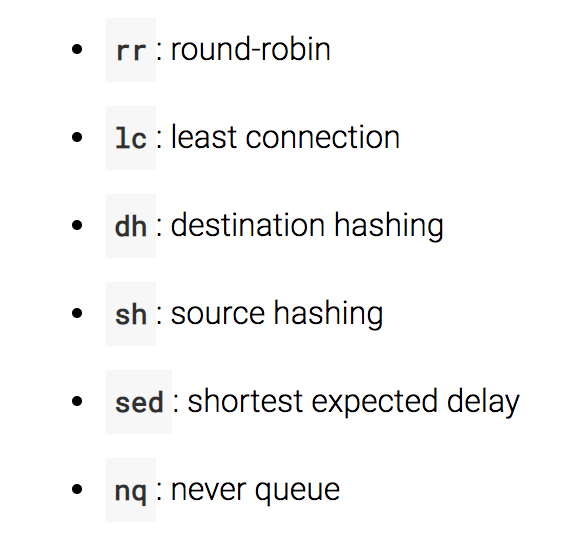

Proxy-Mode: ipvs (v1.9 beta)

- Based on the netfilter hook function of the IPVS kernel module, like iptables mode, but uses hash tables for lookups
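The proxy mode is chosen in kube-proxy's configuration. This sketch assumes kube-proxy is started with a config file (its --config flag) and that the IPVS kernel modules are available on the node; the round-robin scheduler is an illustrative choice.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"          # alternatives: "iptables", and "userspace" in older releases
ipvs:
  scheduler: "rr"     # IPVS load-balancing algorithm: round robin
```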

K8S Control Plane HA

- Run multiple master nodes for HA

- Multiple API server instances can run at the same time

- Controller manager and scheduler use leader election: one instance is active and the others stand by
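Leader election is switched on with a command-line flag of each component. A sketch of the relevant part of a kube-scheduler static pod manifest, assuming a kubeadm-style setup where control plane components run as static pods; other flags are omitted.

```yaml
# Fragment of a kube-scheduler static pod manifest (kubeadm-style setup assumed)
spec:
  containers:
  - name: kube-scheduler
    command:
    - kube-scheduler
    - --leader-elect=true     # only the elected leader schedules; other replicas stand by
```

The controller manager accepts the same --leader-elect flag.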

Reference

- https://kubernetes.io

- Kubernetes in Action (Marko Lukša, Manning)

The End

Q&A
