ExecutionContext Demystified

Petra Bierleutgeb

@pbvie

Future[ExecutionContextDemystified]

(a.k.a. agenda)

Motivation

Why do we need an ExecutionContext every time we use Future?

Agenda

- concurrency, parallelism, asynchrony

- concurrency on the JVM

- concurrency in Scala

- way of a Future - from Future.apply to Runnable.run

- Scala's global execution context

- alternatives and optimizations

Life is Async

...and we don't like to wait

Async in real life

- asynchrony is actually much more natural than synchrony

- we're experts in context switching

- do we ever really wait?

Concurrency != Parallelism != Asynchrony

Concurrency

Concurrency

- opposite of working sequentially (i.e. start and finish A, then start and finish B, ...)

- concurrency is possible even if there's only one CPU

- why is that useful:

"The term Concurrency refers to techniques that make programs more usable. If you can load multiple documents simultaneously in the tabs of your browser and you can still open menus and perform other actions, this is concurrency."

https://wiki.haskell.org/Parallelism_vs._Concurrency

Parallelism

Parallelism

- imagine we have three tasks: Task A, Task B and Task C

- we work on A, B and C at exactly the same time (by using multiple CPUs)

- common pattern: split a task into subtasks that can be processed in parallel

"The term Parallelism refers to techniques to make programs faster by performing several computations at the same time. This requires hardware with multiple processing units."

https://wiki.haskell.org/Parallelism_vs._Concurrency

Asynchrony

Asynchrony

- opposite of working synchronously

- synchronous: actively waiting for an operation to complete before doing something else

- often implemented via callbacks

"An asynchronous operation is an operation which continues in the background after being initiated, without forcing the caller to wait for it to finish before running other code."

https://blog.slaks.net/2014-12-23/parallelism-async-threading-explained/

Concurrency on the JVM

A whirlwind tour of threads and friends

Level 1: Using Thread directly

    class MyThread extends Thread {
      override def run(): Unit = {
        // do async work
      }
    }

    val t = new MyThread()
    t.start()

- tightly couples functional and execution logic

- low-level and rather complex

- creation of Threads is expensive

- does not degrade gracefully

Level 2: Decouple

- Runnable and Callable[T]

- Executor and ExecutorService

- java.util.concurrent.Future[T]

Runnable and Callable[T]

- Java provides two interfaces to describe async pieces of work

- Runnable: no result

- Callable[T]: result of type T

- specify what to run not how

- interfaces with one single no-arg method

Executor and ExecutorService

- Decouple execution logic from implementation

- Responsible for

- accepting task(s) to be executed

- deciding how (potentially) async code should be run

- deciding if and when new threads are created

java.util.concurrent.Future[T]

- result of an asynchronous operation

- not comparable to scala.concurrent.Future

- can be polled to see if computation is complete
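
A minimal sketch of how the Level 2 pieces fit together. The pool size of two threads and the name `Level2Sketch` are illustrative assumptions, not taken from the talk:

```scala
import java.util.concurrent.{Callable, ExecutorService, Executors, TimeUnit}

object Level2Sketch {
  def compute(): Int = {
    // The ExecutorService decides how and on which thread the tasks run.
    val pool: ExecutorService = Executors.newFixedThreadPool(2)
    try {
      // Runnable: describes a piece of work without a result.
      pool.execute(new Runnable {
        override def run(): Unit =
          println(s"Runnable on ${Thread.currentThread().getName}")
      })
      // Callable[T]: describes a piece of work that produces a T.
      val result: java.util.concurrent.Future[Int] =
        pool.submit(new Callable[Int] { override def call(): Int = 2 + 3 })
      // A Java Future can only be polled (isDone) or blocked on (get).
      result.get(1, TimeUnit.SECONDS)
    } finally pool.shutdown()
  }
}
```

Note that the caller has to block in `get` to obtain the value; there is no way to attach a callback at this level.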

Level 3: Callbacks and Composability

- since Java 1.8: java.util.concurrent.CompletableFuture[T]

- comparable to Scala Futures

- describe what should be done with a result once it becomes available (without actively waiting for it)
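
As a rough illustration of the callback style, the sketch below chains a transformation onto a `CompletableFuture` instead of waiting for it first. The object name and the concrete computation are made up for the example; `join()` is only called at the very end to get a value out:

```scala
import java.util.concurrent.CompletableFuture
import java.util.function.{Function => JFunction, Supplier}

object Level3Sketch {
  def compute(): Int = {
    val cf: CompletableFuture[Int] =
      CompletableFuture
        // start an asynchronous computation
        .supplyAsync[Int](new Supplier[Int] { override def get(): Int = 2 + 3 })
        // describe what to do with the result once it is available
        .thenApply[Int](new JFunction[Int, Int] {
          override def apply(r: Int): Int = r * 2
        })
    cf.join() // block only at the edge of the example
  }
}
```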

Concurrency in Scala

A Scala developer's concurrency toolbox

Scala Standard Lib

- Future[T]

- Promise[T]

- Awaitable

- ExecutionContext

Future[T]

"...a future is a placeholder object for a value that may become available at some point in time, asynchronously. It is not the asynchronous computation itself."

Future[T]

- read-only container for a value of type T that may become available at some point in time

- in case of failure the Future will contain the Throwable

- offers methods for transformation

- Futures are eager

- Future.apply takes by-name parameter

    // scala.concurrent.Future
    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T]

Side note: by-name params

- `body` will not be evaluated immediately

- why is that important?

- whatever we pass to Future.apply is meant to be scheduled for execution by our EC

- we don't want to evaluate the expression right away (on the current thread!)
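
A small sketch of the difference between by-value and by-name parameters. All names here (`ByNameSketch`, `expensive`, `byValue`, `byName`, `byNameTwice`) are hypothetical helpers for illustration:

```scala
object ByNameSketch {
  private var evaluations = 0

  // Counts how often the expression is actually evaluated.
  def expensive(): Int = { evaluations += 1; 42 }
  def evaluationCount: Int = evaluations

  // By-value: the argument is evaluated before the method is entered.
  def byValue(x: Int): Unit = ()

  // By-name: the argument is only evaluated where x is referenced (here: never).
  def byName(x: => Int): Unit = ()

  // By-name: the argument is re-evaluated on every reference.
  def byNameTwice(x: => Int): Int = x + x
}
```

`Future.apply` relies on the by-name behaviour: the expression is handed over unevaluated, so the ExecutionContext can decide where it runs.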

    // scala.concurrent.Future
    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T]

Side note: semantics

- read as

    // scala.concurrent.Future
    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T]

...It is not the asynchronous computation itself.

"Create a placeholder object that may contain the result of the given expression once it is completed...or an error"

Creating a Future[T]

- by transforming another Future

- map, flatMap, transform, transformWith,...

- using factory methods on scala.concurrent.Future

- unit, never, successful, failed

- using Future.apply

- using Promise
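
The first three ways of creating a Future can be sketched as follows (Promise is covered separately). The object and method names are illustrative, and the global ExecutionContext is assumed for `map` and `Future.apply`:

```scala
import scala.concurrent.Future
import scala.concurrent.ExecutionContext.Implicits.global

object CreatingFuturesSketch {
  // Factory methods: already completed, no ExecutionContext involved.
  def done: Future[Int]   = Future.successful(1)
  def failed: Future[Int] = Future.failed(new IllegalStateException("boom"))
  def unit: Future[Unit]  = Future.unit

  // A Future that never completes.
  def never: Future[Int] = Future.never

  // Transforming another Future: the function is scheduled on the implicit EC.
  def mapped: Future[Int] = done.map(_ + 1)

  // Future.apply: the expression is scheduled on the implicit EC.
  def applied: Future[Int] = Future(2 + 3)
}
```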

Promise[T]

- writable, single-assignment container, which can complete a Future[T]

- 3 concrete implementations

- KeptPromise.Successful (already completed)

- KeptPromise.Failed (already completed)

- DefaultPromise

- DefaultPromise extends AtomicReference to store the value
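
To make "single-assignment" concrete, here is a small sketch (the object name is made up) that tries to complete the same Promise twice using `trySuccess`, which reports whether the write took effect:

```scala
import scala.concurrent.Promise
import scala.util.Try

object SingleAssignmentSketch {
  def demo(): (Boolean, Boolean, Option[Try[Int]]) = {
    val p = Promise[Int]()
    val first  = p.trySuccess(1) // completes the promise
    val second = p.trySuccess(2) // ignored: the promise is already completed
    (first, second, p.future.value)
  }
}
```

Using `success` instead of `trySuccess` for the second write would throw an `IllegalStateException`.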

Promise[T]

- completing a Future[T]

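    import scala.concurrent.{Future, Promise}
    import scala.concurrent.ExecutionContext.Implicits.global

    val f: Future[Int] = promiseSample()

    def promiseSample(): Future[Int] = {
      val p = Promise[Int]()  // writable side
      someLongRunningOp(p)    // completes p asynchronously
      p.future                // read-only side, returned immediately
    }

    def someLongRunningOp(p: Promise[Int]): Future[Unit] = Future {
      Thread.sleep(5000) // do something slow
      p.success(5)
    }

Creating a Future[T] - Revisited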

- by transforming another Future

- map, flatMap, transform, transformWith,...

- using factory methods on scala.concurrent.Future

- unit, never, successful, failed

- using Future.apply

- using Promise

(the first three are themselves implemented using Promise)

Awaitable

- actively wait/block until a Future is completed

- Await.ready and Await.result

- accept timeouts

- implemented on concrete Promise implementations

- KeptPromise can return result immediately

- DefaultPromise tries to wait for the result
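
A short sketch of `Await.result` and `Await.ready`, assuming the global ExecutionContext. The object name, sleep time and timeouts are arbitrary choices for the example:

```scala
import java.util.concurrent.TimeoutException
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.duration._
import scala.concurrent.ExecutionContext.Implicits.global

object AwaitSketch {
  // Await.result blocks the calling thread and returns the value (or throws).
  def result(): Int = {
    val f = Future { Thread.sleep(50); 2 + 3 }
    Await.result(f, 2.seconds)
  }

  // Await.ready blocks until completion and returns the (now completed) Future.
  def ready(): Boolean = {
    val f = Future { Thread.sleep(50); 2 + 3 }
    Await.ready(f, 2.seconds).isCompleted
  }

  // A promise that is never completed: waiting on it runs into the timeout.
  def timesOut(): Boolean =
    try { Await.result(Promise[Int]().future, 10.millis); false }
    catch { case _: TimeoutException => true }
}
```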

ExecutionContext

What is it and what do we need it for?

ExecutionContext: What is it

- extends functionality of Executor with error reporting

- like a Java Executor it's used to execute Runnables

    // java.util.concurrent.Executor
    public interface Executor {
      void execute(Runnable command);
    }

    // scala.concurrent.ExecutionContext
    trait ExecutionContext {
      def execute(runnable: Runnable): Unit
      def reportFailure(cause: Throwable): Unit
    }

Side Note: Laws of JVM Concurrency

1. it all comes down to Runnable.run eventually

=> we can't break that law

- Future is just a high-level concept that hides that complexity from us

- when we create/use Futures, the work is translated to executor.execute(runnable) calls for us (the executor is our execution context)

ExecutionContext

- reminder: Future contains a result of a (potentially async) operation

- ExecutionContext decides how that operation will be executed

- ECs usually use an underlying thread pool

- the operation doesn't have to be asynchronous

- specialized ECs might execute operations synchronously on the calling thread for optimization
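
A minimal sketch of such a synchronous ExecutionContext. The name `sameThread` is hypothetical, and this naive version is only meant to show the mechanics (it is not stack-safe for long callback chains):

```scala
import scala.concurrent.{ExecutionContext, Future}

object SameThreadSketch {
  // Hypothetical EC that runs every Runnable directly on the calling thread.
  val sameThread: ExecutionContext = new ExecutionContext {
    override def execute(runnable: Runnable): Unit = runnable.run()
    override def reportFailure(cause: Throwable): Unit = cause.printStackTrace()
  }

  def ranOnCallingThread(): Boolean = {
    val caller = Thread.currentThread()
    // The body is executed inside execute(), i.e. before Future.apply returns.
    val f = Future(Thread.currentThread())(sameThread)
    f.value.exists(_.toOption.contains(caller))
  }
}
```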

Way of a Future

From Future.apply to running on an EC

Way of a Future

    Future {
      2 + 3
    }

Future[Int]

    Cannot find an implicit ExecutionContext. You might pass
    an (implicit ec: ExecutionContext) parameter to your method
    or import scala.concurrent.ExecutionContext.Implicits.global.

Way of a Future

    import scala.concurrent.ExecutionContext.Implicits.global

    Future {
      2 + 3
    }

    Future.apply(2 + 3)

    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T] =
      unit.map(_ => body)

Way of a Future

    Future.apply(2 + 3)

    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T] =
      unit.map(_ => body)

    val unit: Future[Unit] = successful(())

    def successful[T](result: T): Future[T] = Promise.successful(result).future

    // scala.concurrent.Promise
    def successful[T](result: T): Promise[T] = fromTry(Success(result))

    def fromTry[T](result: Try[T]): Promise[T] =
      impl.Promise.KeptPromise[T](result)

KeptPromise.Successful(Success(()))

Way of a Future

    Future.apply(2 + 3)

    def apply[T](body: =>T)(implicit executor: ExecutionContext): Future[T] =
      unit.map(_ => body)

    def map[S](f: T => S)(implicit executor: ExecutionContext): Future[S] =
      transform(_ map f)

    // scala.concurrent.impl.Promise
    override def transform[S](f: Try[T] => Try[S])
                             (implicit executor: ExecutionContext): Future[S] = {
      val p = new DefaultPromise[S]()
      onComplete { result =>
        p.complete(try f(result) catch { case NonFatal(t) => Failure(t) })
      }
      p.future
    }

Way of a Future

    val p = new DefaultPromise[S]()
    onComplete { result =>
      p.complete(try f(result) catch { case NonFatal(t) => Failure(t) })
    }
    p.future

    // scala.concurrent.impl.Promise.KeptPromise.Kept
    override def onComplete[U](func: Try[T] => U)
                              (implicit executor: ExecutionContext): Unit =
      (new CallbackRunnable(executor.prepare(), func)).executeWithValue(result)

- p.future is returned to the caller immediately

- `this` is our KeptPromise with result Success(())

Side Note: Laws of JVM Concurrency

2. Runnable.run takes no params and returns nothing

=> how do we get data in and out?

- input and output (or callback) need to be stored on the Runnable itself

Way of a Future

    // scala.concurrent.impl.CallbackRunnable
    def executeWithValue(v: Try[T]): Unit = {
      require(value eq null) // can't complete it twice
      value = v
      try executor.execute(this) catch { case NonFatal(t) => executor reportFailure t }
    }

    // scala.concurrent.impl.CallbackRunnable
    override def run() = {
      require(value ne null) // must set value to non-null before running!
      try onComplete(value) catch { case NonFatal(e) => executor reportFailure e }
    }

- executor: this is our EC

- onComplete: result => p.complete(try f(result) catch { case NonFatal(t) => Failure(t) })

- f: (t: Try[Unit]) => t.map(_ => 2 + 3)

- value: Success(())

Summing it up

- expression passed to Future.apply gets scheduled on an EC

- Future.apply is implemented via Future.unit.map

- implemented via Promise.transform

- create new promise and return p.future immediately

- when the current Promise completes, execute given expression on given EC

- create CallbackRunnable

- callback will complete Promise p with result

Reminder

    Future(1) // don't do this

Don't use Future.apply to wrap already available values

It will trigger the whole chain of transform, create a Runnable and execute the expression on the given EC

Use Future.successful instead

// way better!

Future.successful(1)

...it will create a KeptPromise without all the overhead
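
One way to observe the difference is to wrap an ExecutionContext and count the Runnables it receives. `CountingEC` is a hypothetical helper written for this sketch, not part of the standard library:

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object CountingSketch {
  // Hypothetical wrapper: counts how many Runnables are handed to the EC.
  final class CountingEC(underlying: ExecutionContext) extends ExecutionContext {
    val submitted = new AtomicInteger(0)
    override def execute(runnable: Runnable): Unit = {
      submitted.incrementAndGet()
      underlying.execute(runnable)
    }
    override def reportFailure(cause: Throwable): Unit =
      underlying.reportFailure(cause)
  }

  // Future.successful does not even take an ExecutionContext.
  def submissionsForSuccessful(): Int = {
    val ec = new CountingEC(ExecutionContext.global)
    Await.result(Future.successful(1), 1.second)
    ec.submitted.get()
  }

  // Future.apply schedules the expression on the given EC.
  def submissionsForApply(): Int = {
    val ec = new CountingEC(ExecutionContext.global)
    Await.result(Future(1)(ec), 1.second)
    ec.submitted.get()
  }
}
```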

Bonus Content: One more example

    val f: Future[Int] =
      Future(2 + 3).map(r => r + 1).map(r => r * 5).map(r => r - 1)

Working with not yet available data

- Future(2 + 3) has type Future[Int]

- ...but it's actually a DefaultPromise

    val f: Future[Int] =
      Future(2 + 3).map(r => r + 1).map(r => r * 5).map(r => r - 1)

    // implementation of Promise.future
    def future: this.type = this

The Promise and the returned Future are actually the same instance

DefaultPromise.onComplete

    final def onComplete[U](func: Try[T] => U)(implicit executor: ExecutionContext): Unit =
      dispatchOrAddCallback(new CallbackRunnable[T](executor.prepare(), func))

    @tailrec
    private def dispatchOrAddCallback(runnable: CallbackRunnable[T]): Unit = {
      get() match {
        case r: Try[_] => ...              // promise is already completed => execute callback
        case dp: DefaultPromise[_] => ...  // result is another DP (i.e. when using flatMap)
        case listeners: List[_] => ...     // promise is not completed => add callback to list
      }
    }

- executor: our ExecutionContext

- func: will be called when this Promise is completed

    final def tryComplete(value: Try[T]): Boolean = {
      val resolved = resolveTry(value)
      tryCompleteAndGetListeners(resolved) match {
        ...
        case rs => rs.foreach(r => r.executeWithValue(resolved)); true
      }
    }

execute stored callbacks with result of this Promise
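
The "not completed yet" case can be observed from the outside with a small sketch. The object name is made up, and the global ExecutionContext is assumed:

```scala
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.duration._
import scala.concurrent.ExecutionContext.Implicits.global

object PendingCallbackSketch {
  def demo(): (Boolean, Int) = {
    val p = Promise[Int]()
    // p is not completed yet, so the callback behind map is only stored.
    val doubled: Future[Int] = p.future.map(_ * 2)
    val completedBefore = doubled.isCompleted
    // Completing p hands the stored callback to the ExecutionContext.
    p.success(21)
    (completedBefore, Await.result(doubled, 1.second))
  }
}
```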

ExecutionContext.global

a.k.a. Scala's default ExecutionContext

ExecutionContext.global

- good general-purpose ExecutionContext

- => when in doubt, use the global ExecutionContext

- backed by a work-stealing ForkJoinPool

- java.util.concurrent.ForkJoinPool (since Scala 2.12)

- tries to keep the CPU busy, support for blocking (more on that later)

divide and conquer!

Configuration Options

- how many threads will the following code start?

    def doSomethingAsync()(implicit ec: ExecutionContext): Future[Int] = ...

    implicit val ec: ExecutionContext = ExecutionContext.global

    Future.traverse(1 to 25)(i => doSomethingAsync())

=> depends on configuration

Configuration Options

- scala.concurrent.context.minThreads

- default: 1

- scala.concurrent.context.numThreads

- default: number of CPUs

- scala.concurrent.context.maxThreads

- default: number of CPUs

- scala.concurrent.context.maxExtraThreads

- default: 256

Configuration Options

- configured via system properties

- can be passed via command-line

- -Dscala.concurrent.context.maxThreads=3

- or via sbt:

    fork in run := true
    javaOptions += "-Dscala.concurrent.context.maxThreads=3"

Configuration Options

- what to keep in mind

- application might behave differently depending on the machine it's run on

- Runtime.getRuntime.availableProcessors doesn't work well in Docker containers (prior to Java 10)

- when using multiple ECs: adapt number of CPUs accordingly
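
To check what a given machine or container will actually use, one could print the relevant values at startup. This is only a sketch: the object and method names are invented, and it merely reads the system properties listed above without reproducing how the global ExecutionContext interprets them:

```scala
object GlobalEcConfigSketch {
  // What the JVM reports as available CPUs (may be misleading in containers).
  def cpus: Int = Runtime.getRuntime.availableProcessors

  // Reads one of the scala.concurrent.context.* system properties, if set.
  def configured(name: String): Option[String] =
    sys.props.get(s"scala.concurrent.context.$name")

  def describe(): String =
    Seq("minThreads", "numThreads", "maxThreads", "maxExtraThreads")
      .map(n => s"$n=${configured(n).getOrElse("<default>")}")
      .mkString(s"cpus=$cpus, ", ", ", "")
}
```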

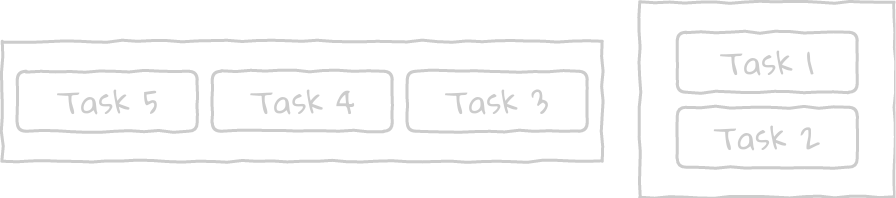

Why blocking is bad

- blocked threads can't be used to perform other tasks even though they are not doing actual work

- other tasks have to wait because no free threads are available

- risk of pool-induced deadlocks

[Diagram labels: thread pool with maxThreads = 2; one thread "waiting for Task 4", one thread "waiting for Task 3"; Queue]

Meet BlockContext

- notifies EC that the current thread is about to block

- ExecutionContext will spin up another thread to compensate for blocked thread

- starts up to scala.concurrent.context.maxExtraThreads additional threads

- before Scala 2.12 that number was unbounded

BlockContext Example

    def slowOp(a: Int, b: Int)(implicit ec: ExecutionContext): Future[Int] = Future {
      logger.debug("slowOp")
      Thread.sleep(80)
      a + b
    }

    def combined(a: Int, b: Int)(implicit ec: ExecutionContext): Future[Int] = {
      val seqF = for (i <- 1 to 100) yield slowOp(a + i, b + i + 1)
      Future.sequence(seqF).map(_.sum)
    }

0.950 ops/s

BlockContext Example

[DEBUG] Thread[20,scala-execution-context-global-20,5] - slowOp {}

[DEBUG] Thread[18,scala-execution-context-global-18,5] - slowOp {}

[DEBUG] Thread[15,scala-execution-context-global-15,5] - slowOp {}

[DEBUG] Thread[17,scala-execution-context-global-17,5] - slowOp {}

[DEBUG] Thread[22,scala-execution-context-global-22,5] - slowOp {}

[DEBUG] Thread[16,scala-execution-context-global-16,5] - slowOp {}

[DEBUG] Thread[19,scala-execution-context-global-19,5] - slowOp {}

[DEBUG] Thread[21,scala-execution-context-global-21,5] - slowOp {}

[DEBUG] Thread[18,scala-execution-context-global-18,5] - slowOp {}

[DEBUG] Thread[15,scala-execution-context-global-15,5] - slowOp {}

[DEBUG] Thread[20,scala-execution-context-global-20,5] - slowOp {}

[DEBUG] Thread[17,scala-execution-context-global-17,5] - slowOp {}

[DEBUG] Thread[16,scala-execution-context-global-16,5] - slowOp {}

BlockContext Example

    def slowOpBlocking(a: Int, b: Int)(implicit ec: ExecutionContext): Future[Int] = Future {
      logger.debug("slowOpBlocking")
      blocking {
        Thread.sleep(80)
      }
      a + b
    }

    def combined(a: Int, b: Int)(implicit ec: ExecutionContext): Future[Int] = {
      val seqF = for (i <- 1 to 100) yield slowOpBlocking(a + i, b + i + 1)
      Future.sequence(seqF).map(_.sum)
    }

6.861 ops/s

BlockContext Example

...

[DEBUG] Thread[3177,scala-execution-context-global-3177,5] - slowOpBlocking {}

[DEBUG] Thread[3178,scala-execution-context-global-3178,5] - slowOpBlocking {}

[DEBUG] Thread[3179,scala-execution-context-global-3179,5] - slowOpBlocking {}

[DEBUG] Thread[3180,scala-execution-context-global-3180,5] - slowOpBlocking {}

[DEBUG] Thread[3181,scala-execution-context-global-3181,5] - slowOpBlocking {}

[DEBUG] Thread[3182,scala-execution-context-global-3182,5] - slowOpBlocking {}

[DEBUG] Thread[3183,scala-execution-context-global-3183,5] - slowOpBlocking {}

[DEBUG] Thread[3184,scala-execution-context-global-3184,5] - slowOpBlocking {}

...

Alternatives and Optimizations

Alternatives

- ExecutionContext.fromExecutor lets you create an ExecutionContext from any Java Executor(Service)

- Libraries like Monix or Scalaz provide alternative Future/Task implementations and custom ECs

- Tasks are lazy (unlike Futures)

- finer-grained control over async boundaries (i.e. when to switch to a new thread)
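
A minimal sketch of wrapping a Java executor, here using the `fromExecutorService` variant so the pool can be shut down again. The fixed pool of two threads and the object name are arbitrary choices for the example:

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, ExecutionContextExecutorService, Future}
import scala.concurrent.duration._

object FromExecutorSketch {
  def compute(): Int = {
    // Wrap a plain Java thread pool so it can be used as an ExecutionContext.
    val ec: ExecutionContextExecutorService =
      ExecutionContext.fromExecutorService(Executors.newFixedThreadPool(2))
    try Await.result(Future(2 + 3)(ec), 2.seconds)
    finally ec.shutdown() // we created the pool, so we have to shut it down
  }
}
```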

Optimizations

- don't mix (too much)

- have dedicated ECs for IO (blocking) and computations

- context switching is expensive

- small and fast (non-blocking) tasks can benefit from batching

- ...or synchronous execution
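
One possible shape for dedicated ECs is sketched below. The pool types, sizes and the `cpu`/`io` thread-name prefixes are assumptions chosen for illustration; suitable values depend on the application:

```scala
import java.util.concurrent.{Executors, ThreadFactory}
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object DedicatedEcSketch {
  // Hypothetical helper: names threads so logs show which pool ran a task.
  private def named(prefix: String): ThreadFactory = new ThreadFactory {
    private val counter = new AtomicInteger(0)
    override def newThread(r: Runnable): Thread = {
      val t = new Thread(r, s"$prefix-${counter.incrementAndGet()}")
      t.setDaemon(true)
      t
    }
  }

  def run(): (String, String) = {
    // Bounded pool for computations, sized to the number of CPUs.
    val cpuEc = ExecutionContext.fromExecutorService(
      Executors.newFixedThreadPool(Runtime.getRuntime.availableProcessors, named("cpu")))
    // Separate pool for blocking IO, so blocked threads don't starve computations.
    val ioEc = ExecutionContext.fromExecutorService(
      Executors.newCachedThreadPool(named("io")))
    try {
      val io  = Future { Thread.sleep(20); Thread.currentThread().getName }(ioEc)
      val cpu = Future { Thread.currentThread().getName }(cpuEc)
      (Await.result(io, 2.seconds), Await.result(cpu, 2.seconds))
    } finally { cpuEc.shutdown(); ioEc.shutdown() }
  }
}
```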

Summing it up

Summing it up

- An ExecutionContext

- is responsible for deciding how Futures are mapped to Threads

- When using Futures

- a CallbackRunnable will be scheduled on the provided ExecutionContext

- the provided (async) operation will be executed eagerly

- Scala's global execution context

- is backed by a work-stealing ForkJoinPool

- provides support for blocking {...}

The End

Thank you!

Questions?

Execution Context Demystified - ScalaDays Berlin 2018

By Petra Bierleutgeb
