MACHINE LEARNING

Lecture 6

Neural Networks

Data Processing

Model

Optimization

Training

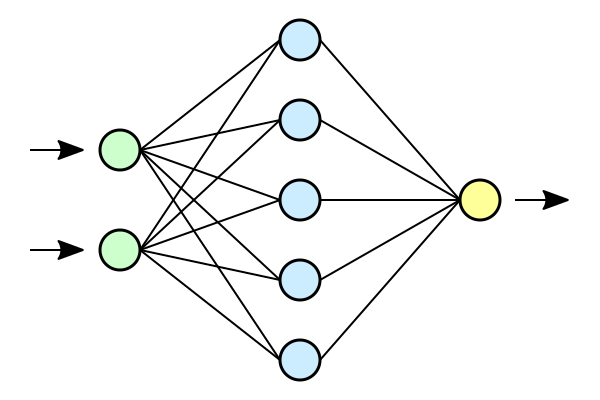

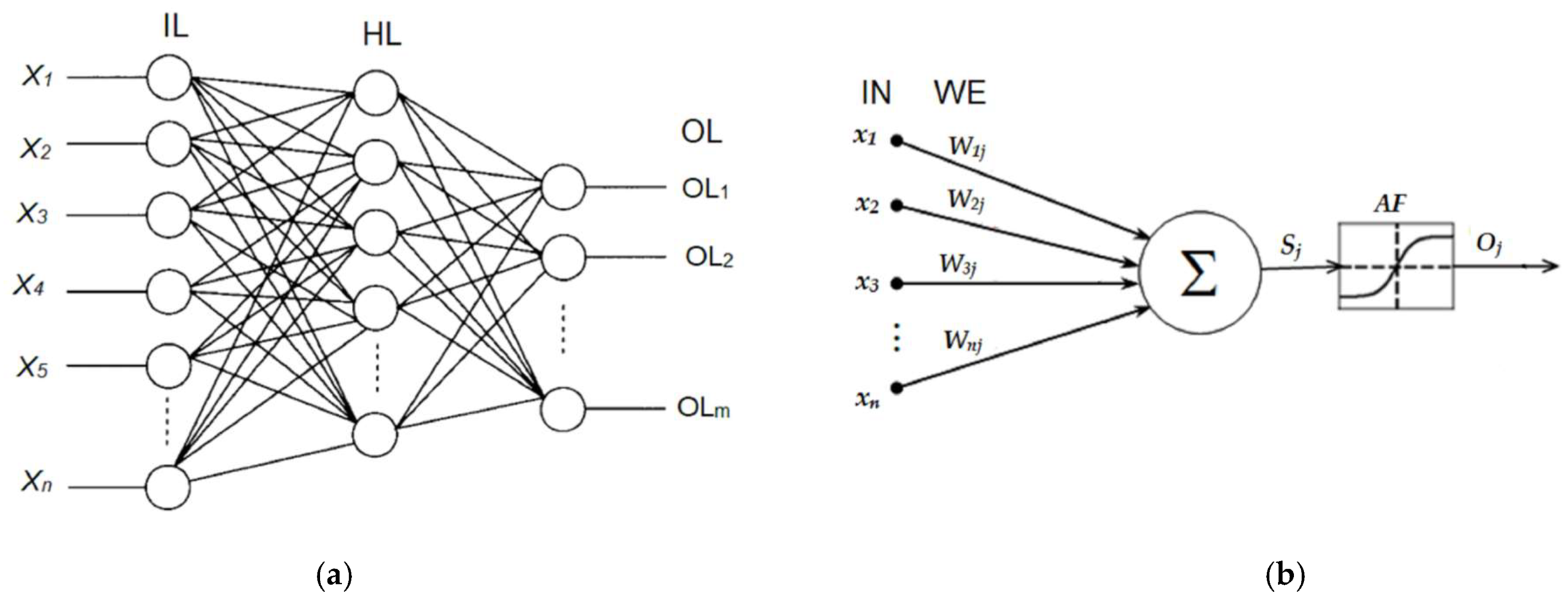

Neural Network

Forward Propagation

(Figure: network diagram labeled h1, h2, w2, b1 and out)

out = h_{1} \times w_{1} + h_{2} \times w_{2}+b_{1}
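A minimal Python sketch of this forward pass. The function name `forward` and the numbers are illustrative assumptions, not values from the lecture:

```python
def forward(h1: float, h2: float, w1: float, w2: float, b1: float) -> float:
    """Weighted sum of the two inputs plus the bias: out = h1*w1 + h2*w2 + b1."""
    return h1 * w1 + h2 * w2 + b1

# Made-up inputs and weights, only to show the arithmetic.
out = forward(h1=1.0, h2=2.0, w1=0.5, w2=-0.25, b1=0.1)
print(out)  # 0.5 - 0.5 + 0.1 = 0.1
```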

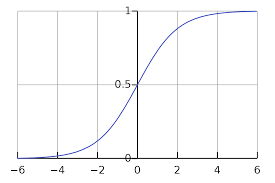

Sigmoid activation applied to out:

\sigma(out) = \frac{1}{1+e^{-out}}
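A minimal NumPy sketch of the sigmoid (the name `sigmoid` is our own choice). It squashes any real `out` into the open interval (0, 1):

```python
import numpy as np

def sigmoid(x):
    """Sigmoid activation 1 / (1 + e^(-x)); works on scalars and NumPy arrays."""
    return 1.0 / (1.0 + np.exp(-x))

print(sigmoid(0.0))                          # 0.5
print(sigmoid(np.array([-2.0, 0.0, 2.0])))   # every value lies strictly between 0 and 1
```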

Backpropagation

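Use the values computed in forward propagation,

then apply the chain rule (yep, this again)

to get each weight's partial derivative and update the weight.

Uh, here comes some nasty math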

\frac{\partial Loss}{\partial w} =\frac{\partial Loss}{\partial h}\frac{\partial h}{\partial w}

Here I am tormenting you again, sowwy

Loss = \frac{1}{2} (out - y)^{2}

Today's loss function ouo
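As a sketch (the helper names are hypothetical), here is the loss and its derivative with respect to `out`. The factor 1/2 is there so that the derivative comes out as plain `out - y`:

```python
def half_squared_error(out: float, y: float) -> float:
    """Loss = 1/2 * (out - y)^2."""
    return 0.5 * (out - y) ** 2

def half_squared_error_grad(out: float, y: float) -> float:
    """dLoss/dout = out - y (the 2 from the square cancels the 1/2)."""
    return out - y

print(half_squared_error(3.0, 1.0))       # 0.5 * 2^2 = 2.0
print(half_squared_error_grad(3.0, 1.0))  # 2.0
```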

(Figure: network diagram labeled in1, w1, h1, w2 and out)

\frac{\partial Loss}{\partial w_{1}} = in_{1}(out - y)w_{2}

\frac{\partial Loss}{\partial w_{2}} = (out - y)h_{1}
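Spelled out factor by factor, assuming the diagram is the linear chain h_{1} = in_{1} w_{1}, out = h_{1} w_{2} (no activation, no bias), the chain rule gives:

```latex
\begin{aligned}
\frac{\partial Loss}{\partial w_{2}} &= \frac{\partial Loss}{\partial out}\,\frac{\partial out}{\partial w_{2}} = (out - y)\,h_{1} \\
\frac{\partial Loss}{\partial w_{1}} &= \frac{\partial Loss}{\partial out}\,\frac{\partial out}{\partial h_{1}}\,\frac{\partial h_{1}}{\partial w_{1}} = (out - y)\,w_{2}\,in_{1}
\end{aligned}
```

The factor (out - y) is shared by both weights, which is why backpropagation computes it once and reuses it.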

Uh, if you use an activation function, you need one more application of the chain rule
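A minimal sketch of that extra link, assuming a sigmoid on the hidden node (h1 = sigmoid(in1 * w1), out = h1 * w2). The sigmoid's derivative is h1 * (1 - h1), and a central finite difference is used to check the analytic gradient. All names and values are illustrative:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(in1, w1, w2, y):
    """Assumed network: h1 = sigmoid(in1 * w1), out = h1 * w2, Loss = 1/2 (out - y)^2."""
    h1 = sigmoid(in1 * w1)
    out = h1 * w2
    return 0.5 * (out - y) ** 2

def grad_w1(in1, w1, w2, y):
    """(out - y) * w2 * sigmoid'(z) * in1, where sigmoid'(z) = h1 * (1 - h1)."""
    h1 = sigmoid(in1 * w1)
    out = h1 * w2
    return (out - y) * w2 * h1 * (1.0 - h1) * in1

# Compare against a central finite difference (illustrative values only).
in1, w1, w2, y = 0.8, 0.3, -1.2, 0.5
eps = 1e-6
numeric = (loss(in1, w1 + eps, w2, y) - loss(in1, w1 - eps, w2, y)) / (2 * eps)
analytic = grad_w1(in1, w1, w2, y)
print(analytic, numeric)  # the two numbers should agree closely
```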

What about the bias?

\frac{\partial Loss}{\partial b} = \frac{\partial Loss}{\partial h}\frac{\partial h}{\partial b} = (out-y) w_{2}
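Putting the three gradients together with one update step. This sketch assumes the bias sits on the hidden node (h = in1*w1 + b, out = h*w2, no activation), which matches (out-y) w_{2} above; the learning rate `lr`, the helper names and all values are made up for illustration:

```python
# Assumed network (linear, no activation): h = in1*w1 + b, out = h*w2,
# Loss = 1/2 (out - y)^2.

def forward(in1, w1, w2, b):
    h = in1 * w1 + b
    out = h * w2
    return h, out

def loss(in1, y, w1, w2, b):
    _, out = forward(in1, w1, w2, b)
    return 0.5 * (out - y) ** 2

def gradients(in1, y, w1, w2, b):
    """Backpropagation: reuse h and out from the forward pass."""
    h, out = forward(in1, w1, w2, b)
    d_out = out - y            # dLoss/dout
    d_h = d_out * w2           # dLoss/dh
    return {
        "w2": d_out * h,       # (out - y) * h
        "w1": d_h * in1,       # (out - y) * w2 * in1
        "b": d_h * 1.0,        # (out - y) * w2, since dh/db = 1
    }

in1, y = 1.5, 2.0
params = {"w1": 0.4, "w2": 0.7, "b": 0.1}
lr = 0.1

before = loss(in1, y, **params)
grads = gradients(in1, y, **params)
params = {k: v - lr * grads[k] for k, v in params.items()}  # gradient descent step
after = loss(in1, y, **params)
print(before, after)  # the loss should drop after one step
```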

Training Set and Test Set

Training set (80%): 1+1=2, the answer is given

Test set (20%): 1+2=?, the model has to answer on its own
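A minimal NumPy sketch of an 80/20 split on toy addition data. `train_test_split` here is a hypothetical helper written for illustration; in practice scikit-learn's `sklearn.model_selection.train_test_split` does the same job:

```python
import numpy as np

def train_test_split(X, y, test_ratio=0.2, seed=0):
    """Shuffle the samples, then hold out test_ratio of them as the test set."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(len(X) * test_ratio)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Toy "addition" data: each row is (a, b), the label is a + b.
X = np.array([[a, b] for a in range(5) for b in range(5)])  # 25 samples
y = X.sum(axis=1)
X_train, X_test, y_train, y_test = train_test_split(X, y)
print(len(X_train), len(X_test))  # 20 5
```

Shuffling before splitting matters: without it, the test set would be just the last rows, which may not look like the rest of the data.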

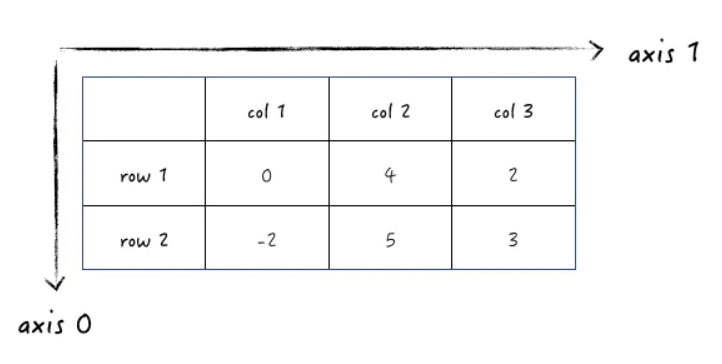

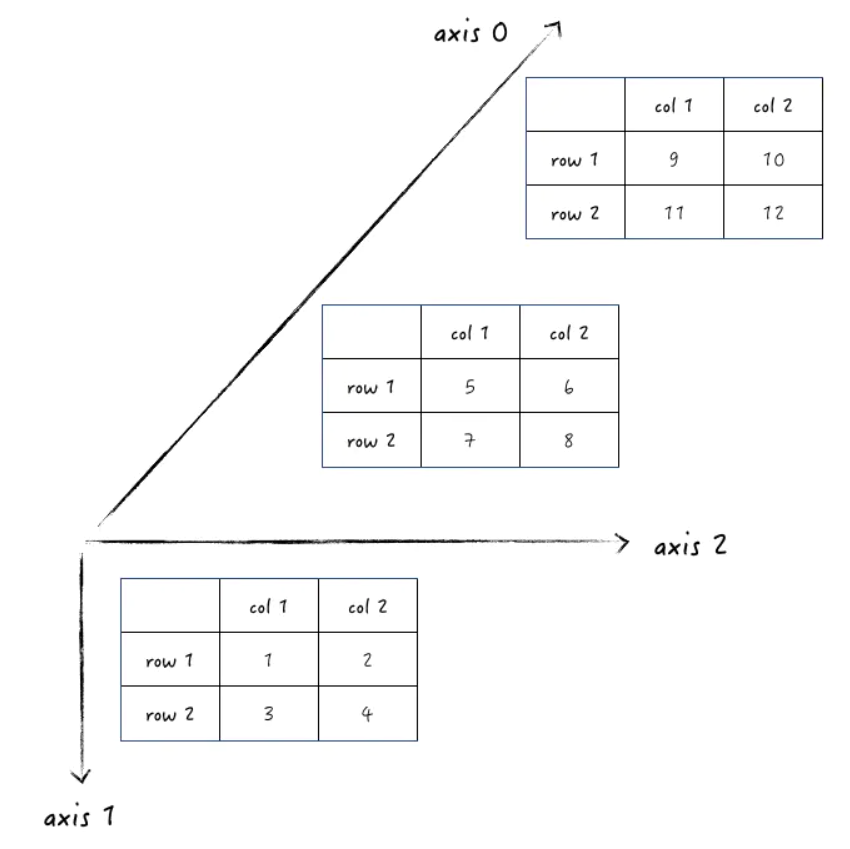

NumPy Axis

2-D

[[0, 4, 2], [-2, 5, 3]]
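The `axis` argument picks the dimension that gets collapsed. With the 2-D array above (shape (2, 3)):

```python
import numpy as np

a = np.array([[0, 4, 2],
              [-2, 5, 3]])   # shape (2, 3)
print(a.shape)        # (2, 3)
print(a.sum(axis=0))  # collapse the rows, one result per column -> [-2  9  5]
print(a.sum(axis=1))  # collapse the columns, one result per row -> [6 6]
print(a.max(axis=0))  # column-wise maximum -> [0 5 3]
```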

3-D

[ [ [1, 2],[3, 4] ], [ [5, 6], [7, 8] ], [ [9, 10], [11, 12] ] ]
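The same idea for the 3-D array above (shape (3, 2, 2)); `.tolist()` is used only to print plain nested lists:

```python
import numpy as np

b = np.array([[[1, 2], [3, 4]],
              [[5, 6], [7, 8]],
              [[9, 10], [11, 12]]])  # shape (3, 2, 2)
print(b.shape)                 # (3, 2, 2)
print(b.sum(axis=0).tolist())  # adds the three 2x2 blocks -> [[15, 18], [21, 24]]
print(b.sum(axis=1).tolist())  # adds the rows inside each block -> [[4, 6], [12, 14], [20, 22]]
print(b.sum(axis=2).tolist())  # adds the numbers within each row -> [[3, 7], [11, 15], [19, 23]]
```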

Min-Max Normalization

X_{norm} = \frac{X - X_{min}}{X_{max}-X_{min}} \in [0,1]

Mean normalization (centered on the mean \mu):

X_{norm} = \frac{X - \mu}{X_{max}-X_{min}} \in [-1,1]
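A minimal NumPy sketch of both formulas applied per feature (column), which is why the statistics use `axis=0`. The function names and toy data are illustrative. A constant column would make max - min zero, which this sketch does not guard against:

```python
import numpy as np

def min_max_normalize(X):
    """Scale each column to [0, 1]: (X - min) / (max - min)."""
    X = np.asarray(X, dtype=float)
    x_min, x_max = X.min(axis=0), X.max(axis=0)
    return (X - x_min) / (x_max - x_min)

def mean_normalize(X):
    """Center each column on its mean, then divide by its range; results lie in [-1, 1]."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / (X.max(axis=0) - X.min(axis=0))

data = np.array([[1.0, 200.0],
                 [2.0, 400.0],
                 [3.0, 600.0]])
print(min_max_normalize(data))  # each column becomes 0, 0.5, 1
print(mean_normalize(data))     # each column becomes -0.5, 0, 0.5
```

Scaling every feature to a similar range keeps one large-valued feature (e.g. a price in the hundreds) from dominating the gradient updates.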

Hands-on Practice

Remember when Lei Meng (雷夢) wanted to buy a house? (?

The Boston housing price problem (?
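A compact end-to-end sketch on a synthetic stand-in table (made-up features and prices, not the real Boston housing data). It strings together the four steps: data processing (80/20 split, min-max scaling), model (one sigmoid hidden layer), optimization (backpropagation with the half squared error) and training (gradient descent). The hidden size, learning rate and epoch count are arbitrary assumptions:

```python
import numpy as np

# Synthetic stand-in data: 3 made-up features, price is a noisy linear function of them.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 3))
y = (X @ np.array([3.0, -2.0, 1.5]) + 5.0 + rng.normal(0, 1.0, size=200)).reshape(-1, 1)

# Data processing: 80/20 split, then min-max scaling with training-set statistics only.
idx = rng.permutation(len(X))
train, test = idx[:160], idx[160:]
x_min, x_max = X[train].min(axis=0), X[train].max(axis=0)
y_min, y_max = y[train].min(), y[train].max()
Xn = (X - x_min) / (x_max - x_min)
yn = (y - y_min) / (y_max - y_min)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Model: 3 inputs -> 8 sigmoid hidden units -> 1 linear output.
W1 = rng.normal(0, 0.5, size=(3, 8))
b1 = np.zeros(8)
W2 = rng.normal(0, 0.5, size=(8, 1))
b2 = np.zeros(1)

def forward(X):
    h = sigmoid(X @ W1 + b1)
    return h, h @ W2 + b2

def mse_half(out, y):
    """Loss = 1/2 * mean((out - y)^2)."""
    return 0.5 * np.mean((out - y) ** 2)

lr = 0.3
initial_loss = mse_half(forward(Xn[train])[1], yn[train])
for epoch in range(2000):
    h, out = forward(Xn[train])                # forward propagation
    d_out = (out - yn[train]) / len(train)     # dLoss/dout, averaged over the batch
    dW2 = h.T @ d_out
    db2 = d_out.sum(axis=0)
    d_h = (d_out @ W2.T) * h * (1 - h)         # chain rule through the sigmoid
    dW1 = Xn[train].T @ d_h
    db1 = d_h.sum(axis=0)
    W1 -= lr * dW1                             # gradient descent updates
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

train_loss = mse_half(forward(Xn[train])[1], yn[train])
test_loss = mse_half(forward(Xn[test])[1], yn[test])
print(initial_loss, train_loss, test_loss)
```

The scaling statistics come from the training rows only, so nothing about the test set leaks into training.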

ML L6

By richardliang

