Good Bot, Bad Bot

Richard Lindsey @Velveeta

What's the Goal?

Not just test coverage, but test coverage that provides the most value with the least amount of effort.

LLMs: The Good

- They're really really fast.
- IDE agents can take entire project context into account.
- NLP is getting ridiculously good.
- They can be taught!

LLMs: The Bad

-

They're really really dumb.

-

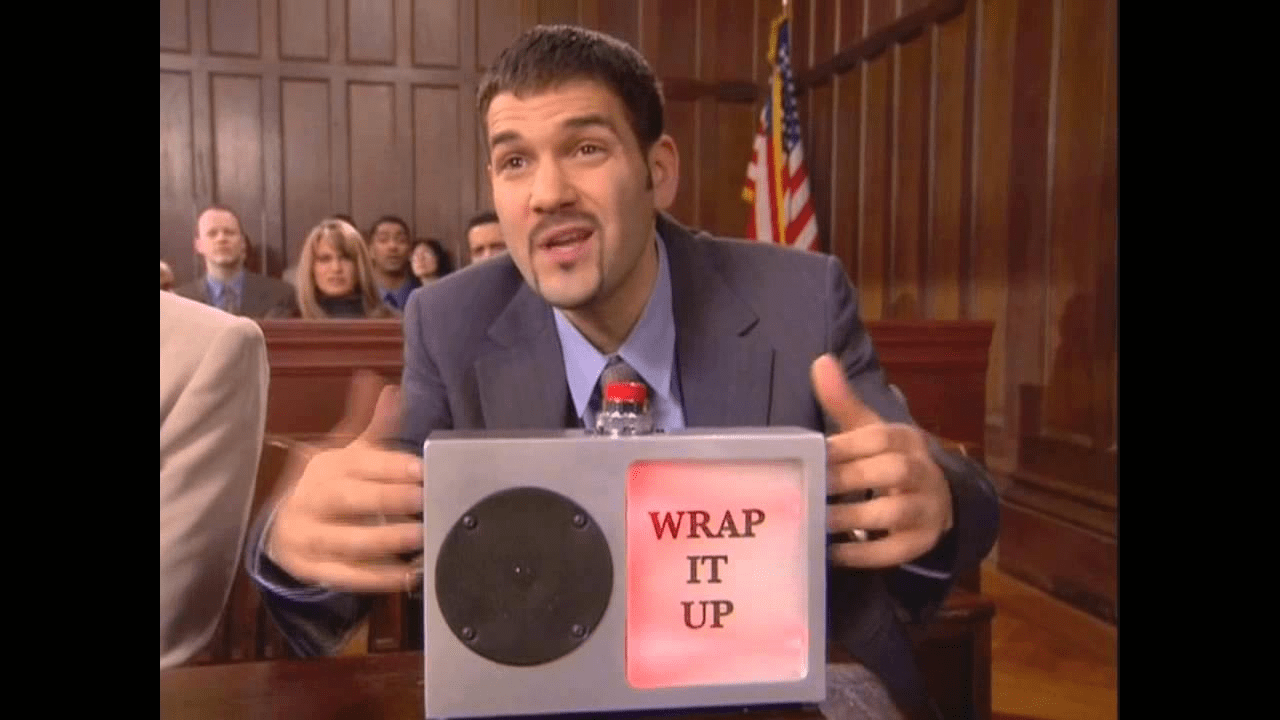

They can be overly thorough.

-

They do exactly what you tell them.

-

They can run you around in circles if you let them.

Initial Results

interface CheckInOutInformationProps {
  checkIn: ReactNode;
  checkOut: ReactNode;
}

it('accepts checkIn as a ReactNode', () => {});
it('accepts checkIn as a string', () => {});
it('accepts checkIn as a number', () => {});
it('accepts checkIn as a boolean', () => {});
it('accepts checkIn as null', () => {});
it('accepts checkIn as undefined', () => {});
it('accepts checkOut as a ReactNode', () => {});
it('accepts checkOut as a string', () => {});
it('accepts checkOut as a number', () => {});
it('accepts checkOut as a boolean', () => {});
it('accepts checkOut as null', () => {});
it('accepts checkOut as undefined', () => {});

Double Feedback Loop

- Iterate on its instruction file.
- Run the test output.
- Find the best and worst examples.
- Refine the instruction set with those examples.
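One pass of this loop can be sketched in a few lines of TypeScript. This is only an illustration under assumptions: the `ReviewedTest` and `InstructionSet` shapes, the `refine` function, and the sample test titles and lessons are all hypothetical and do not come from the talk or from any real tool.

```typescript
// Hypothetical shapes for illustration only.
type Verdict = "good" | "bad";

interface ReviewedTest {
  title: string;    // name of the generated test
  verdict: Verdict; // the human reviewer's judgement
  lesson: string;   // the general rule this example is meant to teach
}

interface InstructionSet {
  rules: string[];
}

// One pass of the loop: fold reviewed examples back into the instruction set.
function refine(instructions: InstructionSet, reviewed: ReviewedTest[]): InstructionSet {
  const additions = reviewed.map((t) =>
    t.verdict === "good"
      ? `DO: ${t.lesson} (e.g. "${t.title}")`
      : `DON'T: ${t.lesson} (e.g. "${t.title}")`
  );
  // Skip rules that are already present so repeating a pass adds nothing new.
  const fresh = additions.filter((rule) => instructions.rules.indexOf(rule) === -1);
  return { rules: [...instructions.rules, ...fresh] };
}

// Assumed sample data: one bad and one good example from a review session.
const reviewedSamples: ReviewedTest[] = [
  {
    title: "accepts checkIn as a boolean",
    verdict: "bad",
    lesson: "do not write one test per member of a union type",
  },
  {
    title: "renders the check-in and check-out values",
    verdict: "good",
    lesson: "assert on what the user sees",
  },
];

const before: InstructionSet = { rules: ["Test behavior, not implementation details."] };
const after = refine(before, reviewedSamples);
```

Each lesson is phrased as a general rule with the concrete test attached as an example, so the agent has something to generalize from rather than a one-off correction.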

Become a Mentor

- You want a Junior Engineer, not a yes-man. AI agents are optimized to be agreeable by default.
- Guardrails are how you teach an agreeable system to become a critical one.

Refine With Examples

#### Compound component pattern

- This repo often uses compound components where subcomponents are attached as properties:
  - RootComponent.ComponentA = ChildComponentA
- When testing these, describe blocks must use the attached path:
  - describe("RootComponent", () => {
      describe("RootComponent.ComponentA", () => { ... })
      describe("RootComponent.ComponentB", () => { ... })
    })
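To make the naming rule concrete, here is a small dependency-free sketch of a compound component and the describe names the rule asks for. Plain functions stand in for real components, and `describePaths` is a hypothetical helper invented for this example, not something from the repo described in the talk.

```typescript
// Hypothetical stand-ins for real components; plain functions keep the sketch dependency-free.
function ChildComponentA(): string {
  return "A";
}
function ChildComponentB(): string {
  return "B";
}
function Root(): string {
  return "root";
}

// Attach subcomponents as properties, mirroring `RootComponent.ComponentA = ChildComponentA`.
const RootComponent = Object.assign(Root, {
  ComponentA: ChildComponentA,
  ComponentB: ChildComponentB,
});

// List the describe-block names the rule asks for:
// one per attached subcomponent, written as the attached path.
function describePaths(rootName: string, root: object): string[] {
  const record = root as Record<string, unknown>;
  return Object.keys(record)
    .filter((key) => typeof record[key] === "function")
    .map((key) => `${rootName}.${key}`);
}

const paths = describePaths("RootComponent", RootComponent);
// paths is ["RootComponent.ComponentA", "RootComponent.ComponentB"]
```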

**Examples of FORBIDDEN patterns:**

```ts
// ❌ BAD: Structural CSS selectors - NEVER USE THESE
container.querySelectorAll("div > p")
container.querySelectorAll(".card .content > .item")
container.querySelector("div.foo span.bar")
```
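A rule like this can also be backed by an automated check. The sketch below is a rough heuristic, not a real lint rule: `findBrittleSelectors` and `isStructural` are hypothetical names, the `data-testid` selector in the sample is an assumed example, and the regular expressions will misfire on attribute selectors that contain spaces or `~`.

```typescript
// Hypothetical guardrail: flag querySelector/querySelectorAll calls whose selector
// depends on markup structure (descendant, child, or sibling combinators).
const SELECTOR_CALL = /querySelector(?:All)?\(\s*(["'`])(.*?)\1\s*\)/g;

function isStructural(selector: string): boolean {
  // Whitespace between simple selectors is a descendant combinator;
  // ">", "+" and "~" are the explicit combinators.
  return /[>+~]|\S\s+\S/.test(selector.trim());
}

function findBrittleSelectors(source: string): string[] {
  const found: string[] = [];
  let match: RegExpExecArray | null;
  SELECTOR_CALL.lastIndex = 0; // reset the shared global regex before each scan
  while ((match = SELECTOR_CALL.exec(source)) !== null) {
    if (isStructural(match[2])) {
      found.push(match[2]);
    }
  }
  return found;
}

// Usage: the three forbidden selectors are flagged, the single-attribute one is not.
const sampleSource = [
  'container.querySelectorAll("div > p")',
  'container.querySelectorAll(".card .content > .item")',
  'container.querySelector("div.foo span.bar")',
  'container.querySelector("[data-testid=\'amenities\']")',
].join("\n");

const brittle = findBrittleSelectors(sampleSource);
```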

#### Working with existing tests

- **ALWAYS check for existing test files** before generating new tests
- If a test file already exists:
  - Read and understand the existing test structure and patterns
  - Identify which functionality is already covered
  - Add ONLY the missing tests for new functionality
  - Maintain consistency with existing test style and naming
  - Do NOT duplicate existing tests

Be Conversational, It Works

> One other tweak to make, specifically to your instruction set, is that they're starting to get phrased very specifically to this particular component. E.g. you just added instructions that said "If both `checkIn` and `checkOut` accept `ReactNode`, test BOTH props with string and JSX values". This could, however, be any number of props that render ReactNode, so let's not lock down this list to specific examples, but rather, make it generic for any given prop. I don't want to risk you interpreting this in the future as these specific prop names needing these specific types of tests.

> We've introduced another brittle piece of testing infrastructure here. You're doing a querySelectorAll with a path of "div > p", and this is an internal detail that could potentially change and break this test. We don't care about the internal markup structure, but we do care that our amenities are listed as expected. Please update your libs/stays/CLAUDE.md and .claude/commands/test.md files to include verbiage that will prevent you from creating these kinds of brittle selector strings in the future, and determine the strongest and least likely to break method of asserting that these assertions can be resolved.

Summary

Invest in the instruction set, not the prompt. This should be considered a team-level resource to be updated with both good and bad examples as additional application complexity yields shortcomings in test output.

Summary

Optimize with specific good and bad examples from actual test output, but whenever possible, abstract them into broader examples from which the agent can generalize to problems it hasn't yet seen.

Summary

Don't try to automate test authoring. Instead, build an extensive set of rules that teach your agent how to exercise good test judgement. This is how you prevent a swath of useless tests and start getting much higher value from the ones it writes.
