Fast, Simple Concurrency with Scala Native

Richard Whaling

@RichardWhaling

Scala Days 2019

This talk is about:

- bare-metal concurrency on scala native

- native as a platform

- scala as a platform

- sustainable libraries and communities

and it's about native-loop, a new concurrency library for Scala Native 0.4

- an extensible event loop and IO system

- backed by the libuv event loop

- works with other C libraries (libcurl, etc)

http://github.com/scala-native/scala-native-loop

Talk Outline

- Background:

- Concurrency in Scala

- Scala Native

- Why LibUV?

- API overview

- Implementation deep dive:

- libuv-based ExecutionContext

- The future

About me:

- @RichardWhaling

- Lead Data Engineer at M1Finance

- Author of:

Background: Concurrency

- Programs that do one thing at a time are called synchronous

- Programs that do more than one thing at a time are called asynchronous or concurrent

- Synchronous programs are easier to write correctly but become a bottleneck for certain workloads

- Concurrent programs can perform better but can be fiendishly complex and buggy

- Java and C++ basically got concurrency wrong

- JavaScript was the first mainstream language to move in the right direction

Concurrency in Scala

def delay(duration:Duration)(implicit ec:ExecutionContext):Future[Unit]

delay(3 seconds).flatMap { _ =>

println("beep")

delay(2 seconds)

}.flatMap { _ =>

println("boop")

delay(1 second)

}.onComplete { _ =>

println("done")

}

- Asynchronous results are passed in Futures

- No mutable state

- ExecutionContext abstracts over backends

- map, flatMap, etc.
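For comparison, here is one way delay could be backed on the JVM. This sketch swaps in a java.util.concurrent ScheduledExecutorService as the timer and completes a Promise when it fires; JvmDelay and beepBoop are hypothetical names, not part of native-loop, and the short delays only keep the example quick.

```scala
import java.util.concurrent.{Executors, ScheduledExecutorService, ThreadFactory, TimeUnit}
import scala.collection.mutable.ListBuffer
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.concurrent.duration._

object JvmDelay {
  // One daemon thread plays the role of the timer; it only completes promises.
  private val scheduler: ScheduledExecutorService =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
      def newThread(r: Runnable): Thread = {
        val t = new Thread(r)
        t.setDaemon(true)
        t
      }
    })

  // Same signature as the slide; ec is unused because the scheduler completes the promise.
  def delay(duration: Duration)(implicit ec: ExecutionContext): Future[Unit] = {
    val promise = Promise[Unit]()
    scheduler.schedule(new Runnable {
      def run(): Unit = promise.success(())
    }, duration.toMillis, TimeUnit.MILLISECONDS)
    promise.future
  }

  // The beep/boop chain from the slide, recording output instead of printing it.
  def beepBoop()(implicit ec: ExecutionContext): Future[List[String]] = {
    val log = ListBuffer[String]()
    delay(30.millis).flatMap { _ =>
      log += "beep"
      delay(20.millis)
    }.flatMap { _ =>
      log += "boop"
      delay(10.millis)
    }.map { _ =>
      log += "done"
      log.toList
    }
  }
}
```

The scheduler thread only completes the Promise; the flatMap callbacks still run on whatever ExecutionContext the caller supplies, which is the backend detail ExecutionContext is meant to hide.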

Common Concurrency Issues

- Blocking code starves the ExecutionContext

- Race conditions with mutable state

So we introduce a higher-level model: Actors, Streaming, etc...

Yet problems persist.

Hot Take: the JVM's threading and memory model is hostile to closure-heavy patterns.

Not At All Hot Take: a huge proportion of JVM libraries will happily block a thread

Design Questions

In Scala Native we are not constrained by the JVM,

nor can we piggyback on its successes;

whatever we build, we build from scratch.

Can Scala Native provide a model of IO and concurrency that is true to Scala?

Can we provide a model of IO and concurrency that is a better fit than the JVM?

Let's talk about Scala Native

Scala Native is:

- Scala!

- a scalac plugin

- targets LLVM rather than JVM bytecode

- no JVM, good coverage of JDK classes

- produces compact, optimized native binaries

- most ordinary Scala code "just works"

- full control over memory allocation

- struct and array layout

- C FFI

- An embedded scala DSL with the capabilities of C

But that's not all!

Caveat

- this low-level functionality is powerful but dangerous

- you don't need unsafe functionality to use scala native

- ideally: idiomatic Scala API on top of low-level code

- Scala Native is (for now) single-threaded

- No JDK - essential classes re-implemented in Scala

- C FFI fills in the gaps

But what is "idiomatic Scala"?

How can we provide the essential capabilities for the ecosystem?

Introducing libuv

- Cross-platform C event loop

- Used by node.js, julia, neovim, many others

- Comprehensive non-blocking IO capabilities

- Supports Linux, Windows, macOS, BSD

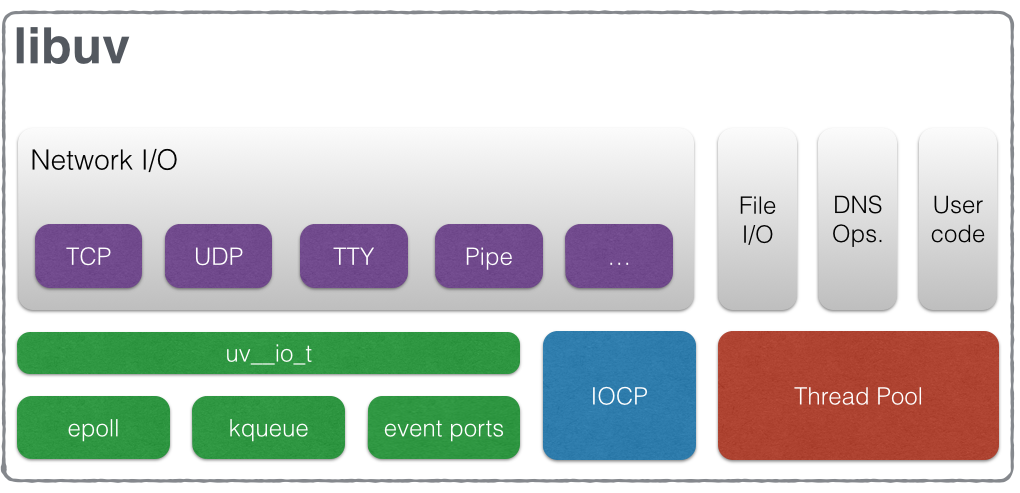

LibUV's IO system

libuv abstracts over different operating systems

and different kinds of IO

Consistent model of callbacks attached to handles

libuv and Scala Native

- Scala Native code runs on a single thread

- use system primitives for high-performance IO:

- epoll

- kqueue

- IOCP

- consistent callback API

- battle-hardened, production-grade performance

- extensible with other C libraries (curl, postgres, redis)

Can we design our API to avoid the "callback hell"

or "pyramid of doom" anti-pattern?
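One common answer, and the one the Timer later in this talk relies on, is to complete a Promise from inside the callback and hand back its Future. In this sketch, lookup is a hypothetical callback-style function that calls back synchronously so the result is deterministic; the nested version shows the pyramid, the Future version flattens it.

```scala
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.util.{Failure, Success, Try}

object CallbackToFuture {
  // Hypothetical callback-style API. It calls back synchronously to keep the sketch deterministic.
  def lookup(key: String)(cb: Try[Int] => Unit): Unit =
    if (key.nonEmpty) cb(Success(key.length))
    else cb(Failure(new IllegalArgumentException("empty key")))

  // Callback style: each dependent step nests one level deeper and repeats the error handling.
  def sumNested(a: String, b: String)(done: Try[Int] => Unit): Unit =
    lookup(a) {
      case Failure(e) => done(Failure(e))
      case Success(x) =>
        lookup(b) {
          case Failure(e) => done(Failure(e))
          case Success(y) => done(Success(x + y))
        }
    }

  // Adapter: the callback completes a Promise, and callers only ever see its Future.
  def lookupF(key: String): Future[Int] = {
    val promise = Promise[Int]()
    lookup(key)(result => promise.complete(result))
    promise.future
  }

  // Future style: the same two steps as a flat for-comprehension; a failure short-circuits.
  def sumFlat(a: String, b: String)(implicit ec: ExecutionContext): Future[Int] =
    for {
      x <- lookupF(a)
      y <- lookupF(b)
    } yield x + y
}
```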

A Tour of Native-Loop

High Level Design Goals:

- ExecutionContext and Future support

- Comprehensive IO

- HTTP/HTTPS client/server

- Minimalism

- Sustainability

Meta-goal: an unopinionated base for other idioms: streams, actor model, IO monad, etc

EventLoop

trait EventLoopLike extends ExecutionContextExecutor {

def addExtension(e:LoopExtension):Unit

def run(mode:Int = UV_RUN_DEFAULT):Unit

}

- Fully functional ExecutionContext

- LoopExtension mechanism for adding additional modules and C libraries

- Exposes low-level loop for libuv calls

- EventLoop.run() to invoke by hand

- SN issue #1626: override runtime.loop()

Timer

object Timer {

def delay(duration:Duration)(implicit ec:ExecutionContext):Future[Unit]

}

object Main {

implicit val ec:ExecutionContext = EventLoop

def main(args:Array[String]):Unit = {

println("hello!")

Timer.delay(3 seconds).onComplete { _ =>

println("goodbye!")

}

EventLoop.run()

}

}

- Completes with () after the duration elapses

- Can also do repeating schedules

- Chainable with map/flatMap/etc

Streams (take 1)

trait Pipe[I,O] {}

def main(args:Array[String]):Unit = {

val p = FilePipe(c"./data.txt")

.map { d =>

println(s"consumed $d")

d

}.addDestination(Tokenizer("\n"))

.addDestination(Tokenizer(" "))

.map { d => d + "\n" }

.addDestination(FileOutputPipe(c"./output.txt", false))

EventLoop.run()

}

- First attempt at IO relied on Pipe[I,O] as a primitive

- Reactive streams don't model raw sockets or files well

- Redesign goal: provide a base for high-level IO

- Reactive Streams, Monix, Cats-effect, ZIO, etc.

Streams, take 2

trait Stream {

def open(fd:Int): Stream

def stream(fd:Int)(f:String => Unit):Future[Unit]

def write(s:String):Future[Unit]

def pause():Unit

def close():Future[Unit]

}

def main(args:Array[String]):Unit = {

val stdout = open(STDOUT)

Pipe(STDIN).stream { line =>

println(s"read line $line")

val split = line.trim().split(" ")

for (word <- split) {

stdout.write(word + "\n")

}

}

EventLoop.run()

}

- Exposes all LibUV capabilities, including pausing

- No type parameters, strings only

- Open question: Future vs Callbacks?

Curl

Curl.get(c"https://www.example.com").map { response =>

Response(200,"OK",Map(),response.body)

}

Curl gives us a highly capable service client, basically for free

- Massively scalable

- Fully integrated with LibUV polls/timers

- HTTPS support

- FTP, SCP, many other protocols

- Goal - STTP and requests-scala support

- Hard problem - is there a "primitive" low-level API we could provide?

Imperative Server API

trait Server {

def serve(port:Int)(handler:(Request,Connection) => Unit):Unit

}

def main(args:Array[String]):Unit = {

Server.serve(9999) { request, connection =>

val response = makeResponse(request)

connection.sendResponse(response)

}

EventLoop.run()

}

- an imperative-style callback lets us hand a handler both the request and the capability to respond to it

- this lets us abstract over sync/async/streaming

- is there a way to avoid defining a Request and Response type?

- It would be great if Scala had something like Rack/WSGI. Lightweight Servlets?

- Sca-lets?
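A minimal sketch of the imperative handler shape described above, with hypothetical Request, Response and Connection types (native-loop's own types may differ). The server only hands the handler a Connection; whether the handler answers before returning or later from a Future is the handler's own business.

```scala
import scala.concurrent.{ExecutionContext, Future}

object ImperativeHandlers {
  // Hypothetical minimal types, for illustration only.
  final case class Request(method: String, path: String)
  final case class Response(code: Int, body: String)

  // The capability to respond, passed to the handler alongside the request.
  trait Connection {
    def sendResponse(response: Response): Unit
  }

  type Handler = (Request, Connection) => Unit

  // A synchronous handler responds before it returns.
  val syncHandler: Handler = (req, conn) =>
    conn.sendResponse(Response(200, s"sync ${req.path}"))

  // An asynchronous handler returns immediately and responds when its Future completes.
  def asyncHandler(implicit ec: ExecutionContext): Handler = (req, conn) => {
    Future(s"async ${req.path}").foreach { body =>
      conn.sendResponse(Response(200, body))
    }
  }
}
```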

Server DSL(s)

def main(args:Array[String]):Unit = {

Service()

.getAsync("/async") { r => Future {

s"got (async routed) request $r"

}.map { message => OK(

Map("asyncMessage" -> message)

)

}

}

.get("/") { r => OK {

Map("default_message" -> s"got (default routed) request $r")

}

}

.run(9999)

}

- We're shipping a high-level service API as well

- Async support, JSON support

- Router is rudimentary

- Next steps: port Cask, play.api.routing.sird, others?

All Of the Above

def main(args:Array[String]):Unit = {

Service()

.getAsync("/async") { r => Future {

s"got (async routed) request $r"

}.map { message => OK(

Map("asyncMessage" -> message)

)

}

}

.getAsync("/fetch/example") { r =>

Curl.get(c"https://www.example.com").map { response =>

Response(200,"OK",Map(),response.body)

}

}

.get("/") { r => OK {

Map("default_message" -> s"got (default routed) request $r")

}

}

.run(9999)

}

Because LibUV coordinates everything, all of these capabilities can be mixed and matched

Performance

- Raw HTTP request throughput metrics aren't representative

- Aiming for high hundreds/low thousands of requests/second on benchmarks with good QoS

- Real impact is service density:

- < 1 CPU/instance

- 100-200 MB RAM

- < 10 MB binary/container

- Versus realistic JVM Scala usage:

- 2-4x+ CPU

- 5x+ RAM / instance

- 10x+ larger footprint

- Real scenario: 8 clustered services, 3 - 5 instances/service

So how do we implement it?

Systems Programming 101

To implement what we've seen, we will need two techniques from systems programming:

- pointers

- unsafe casts

A pointer is a representation of the location of a piece of data. A Ptr[T] stores the location of a T as an 8-byte unsigned integer (on 64-bit platforms).

An unsafe cast lets us treat a Ptr[T] as any other type, typically another Ptr[X] or just a Long.

Data structures designed for generic programming in C often have a "void pointer" field for custom data structures.

In Scala Native we represent these as Ptr[Byte]

Example: Memory Allocation

val raw_data:Ptr[Byte] = malloc(sizeof[Long])

val long_ptr:Ptr[Long] = raw_data.asInstanceOf[Ptr[Long]]

// the ! operator updates a pointer's contents on the left-hand side

!long_ptr = 0

// on the right-hand side, it dereferences the pointer to read its value

printf(c"pointer at %p has value %ld\n", long_ptr, !long_ptr)

!long_ptr = 1

printf(c"pointer at %p has value %ld\n", long_ptr, !long_ptr)

// reading memory after free() is undefined behavior: it may print garbage or segfault

free(raw_data)

printf(c"pointer at %p has value %ld\n", long_ptr, !long_ptr)

not shown: arrays and pointer arithmetic

Example: C FFI

@extern

object Quicksort {

type Comparator = CFuncPtr2[Ptr[Byte],Ptr[Byte],Int]

def qsort(array:Ptr[Byte],num:CSize,size:CSize,cmp:Comparator):Unit = extern

}

- Scala Native code can call C functions

- C functions can call SN code too!

- But C functions aren't like Scala functions: they are static, so they cannot close over local state

- This constrains some design patterns

Example: C FFI

@extern

object Quicksort {

type Comparator = CFuncPtr2[Ptr[Byte],Ptr[Byte],Int]

def qsort(array:Ptr[Byte],num:CSize,size:CSize,cmp:Comparator):Unit = extern

}

object App {

import Quicksort._

type MyStruct = CStruct3[CString,CString,Int]

val myStructComp = new Comparator {

def apply(left:Ptr[Byte],right:Ptr[Byte]):Int = {

val l = left.asInstanceOf[Ptr[MyStruct]]

val r = right.asInstanceOf[Ptr[MyStruct]]

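// compare the structs' Int fields; subtracting the pointers would only compare addresses

java.lang.Integer.compare(l._3, r._3)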

}

}

def main(args:Array[String]):Unit = {

// ...

val data:Ptr[MyStruct] = ???

val data_size:CSize = ???

qsort(data.asInstanceOf[Ptr[Byte]],data_size,sizeof[MyStruct],myStructComp)

// ...

}

}

Greenspun's tenth rule

- Void pointers, function arguments, and casts are C's affordances for generic programming

- This technique feels closer to a dynamic language than a type-safe one, but it suffices

- LibUV's callbacks will let us insert enough Scala Native code into the event loop to provide a full concurrent environment (without leaking unsafe details)

- So how does it work?

"Any sufficiently complicated C or Fortran program contains an ad hoc, informally specified, bug ridden, and slow implementation of half of Common Lisp"

ExecutionContext from Scratch

- Scala Native includes an EC already

- The catch - it runs after main() returns

object ExecutionContext {

def global: ExecutionContextExecutor = QueueExecutionContext

private object QueueExecutionContext extends ExecutionContextExecutor {

def execute(runnable: Runnable): Unit = queue += runnable

def reportFailure(t: Throwable): Unit = t.printStackTrace()

}

private val queue: ListBuffer[Runnable] = new ListBuffer

private[runtime] def loop(): Unit = { // this runs after main() returns

while (queue.nonEmpty) {

val runnable = queue.remove(0)

try {

runnable.run()

} catch {

case t: Throwable =>

QueueExecutionContext.reportFailure(t)

}

}

}

}
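The deferral is easy to observe with a JVM-runnable sketch of the same queue-based design: execute() only enqueues, so a Future chain stays pending until something drains the queue. QueueEC, drain() and QueueECDemo are hypothetical stand-ins for the runtime's private queue and loop().

```scala
import scala.collection.mutable.ListBuffer
import scala.concurrent.{ExecutionContext, ExecutionContextExecutor, Future}
import scala.util.Try

object QueueEC extends ExecutionContextExecutor {
  private val queue = ListBuffer[Runnable]()

  // Enqueue only: nothing runs at submission time.
  def execute(runnable: Runnable): Unit = queue += runnable
  def reportFailure(t: Throwable): Unit = t.printStackTrace()

  // Stand-in for the runtime's loop(): keep going until the queue is empty,
  // including tasks enqueued by earlier tasks (e.g. the next map in a chain).
  def drain(): Unit =
    while (queue.nonEmpty) {
      val runnable = queue.remove(0)
      try runnable.run()
      catch { case t: Throwable => reportFailure(t) }
    }
}

object QueueECDemo {
  // Returns (was the chain complete before draining?, its value after draining).
  def run(): (Boolean, Option[Try[Int]]) = {
    implicit val ec: ExecutionContext = QueueEC
    val f = Future(20).map(_ + 1).map(_ * 2)
    val before = f.isCompleted
    QueueEC.drain()
    (before, f.value)
  }
}
```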

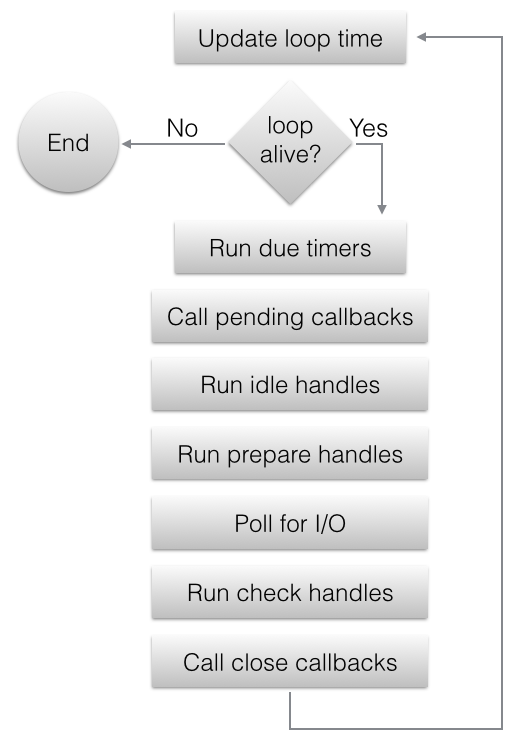

LibUV's event loop

We just need to adapt a queue-based EC to libuv's lifecycle of callbacks

- We queue up work

- A prepare handle runs immediately before each IO poll

- It runs tasks until the queue is exhausted

- When there are no more tasks and no more IO, we are done!

- The catch - how do we track IO that isn't a Future?

EventLoop and LoopExtensions

trait EventLoopLike extends ExecutionContextExecutor {

def addExtension(e:LoopExtension):Unit

def run(mode:Int = UV_RUN_DEFAULT):Unit

}

trait LoopExtension {

def activeRequests():Int

}

The LoopExtension trait lets us coordinate Future execution with other IO tasks on the same loop, and modularize our code.
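To see how activeRequests() decides when the loop may stop, here is a single-threaded simulation of the lifecycle above: drain queued tasks (the prepare phase), let extensions fire ready callbacks (standing in for libuv's IO poll), and exit once no tasks are queued and no extension is busy. SimLoop, SimLoopExtension, its poll() method and TickTimer are all hypothetical; in native-loop, libuv does the polling and calls back into Scala.

```scala
import scala.collection.mutable
import scala.concurrent.{ExecutionContextExecutor, Future, Promise}

// activeRequests() as in the slide, plus a simulation-only poll().
trait SimLoopExtension {
  def activeRequests(): Int
  def poll(): Unit
}

object SimLoop extends ExecutionContextExecutor {
  private val taskQueue = mutable.Queue[Runnable]()
  private val extensions = mutable.ListBuffer[SimLoopExtension]()

  def execute(runnable: Runnable): Unit = taskQueue.enqueue(runnable)
  def reportFailure(t: Throwable): Unit = t.printStackTrace()
  def addExtension(e: SimLoopExtension): Unit = extensions += e

  private def extensionsWorking: Boolean = extensions.exists(_.activeRequests() > 0)

  def run(): Unit =
    while (taskQueue.nonEmpty || extensionsWorking) {
      while (taskQueue.nonEmpty) { // "prepare" phase: drain Future tasks
        val runnable = taskQueue.dequeue()
        try runnable.run()
        catch { case t: Throwable => reportFailure(t) }
      }
      extensions.foreach(_.poll()) // stand-in for libuv's IO poll
    }
}

// Hypothetical timer measured in poll phases ("ticks") rather than milliseconds.
final class TickTimer(ticks: Int) extends SimLoopExtension {
  private var remaining = ticks
  private val promise = Promise[Unit]()
  def future: Future[Unit] = promise.future
  def activeRequests(): Int = if (promise.isCompleted) 0 else 1
  def poll(): Unit =
    if (!promise.isCompleted) {
      remaining -= 1
      if (remaining <= 0) promise.success(())
    }
}
```

Completing the timer's Promise enqueues its callbacks through execute(), so the loop keeps going for one more prepare phase before it sees an empty queue and no active requests.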

Our EventLoop

object EventLoop extends EventLoopLike {

val loop = uv_default_loop()

private val taskQueue = ListBuffer[Runnable]()

def execute(runnable: Runnable): Unit = taskQueue += runnable

def reportFailure(t: Throwable): Unit = {

println(s"Future failed with Throwable $t:")

t.printStackTrace()

}

// ...

execute() is invoked as soon as a Future is ready to start running, but it only enqueues the Runnable: we defer running it until a libuv callback fires

Our EventLoop callback

// ...

private def dispatchStep(handle:PrepareHandle) = {

while (taskQueue.nonEmpty) {

val runnable = taskQueue.remove(0)

try {

runnable.run()

} catch {

case t: Throwable => reportFailure(t)

}

}

if (taskQueue.isEmpty && !extensionsWorking) {

println("stopping dispatcher")

LibUV.uv_prepare_stop(handle)

}

}

private val dispatcher_cb = CFunctionPtr.fromFunction1(dispatchStep)

private def initDispatcher(loop:LibUV.Loop):PrepareHandle = {

val handle = stdlib.malloc(uv_handle_size(UV_PREPARE_T))

check(uv_prepare_init(loop, handle), "uv_prepare_init")

check(uv_prepare_start(handle, dispatcher_cb), "uv_prepare_start")

return handle

}

private val dispatcher = initDispatcher(loop)

// ...

LoopExtensions

private val extensions = ListBuffer[LoopExtension]()

private def extensionsWorking():Boolean = {

extensions.exists( _.activeRequests > 0)

}

def addExtension(e:LoopExtension):Unit = {

extensions.append(e)

}

All the actual IO work will be implemented as LoopExtensions

We'll implement the simplest one, a delay.

Timer

object Timer extends LoopExtension {

EventLoop.addExtension(this)

var serial = 0L

var timers = mutable.HashMap[Long,Promise[Unit]]() // the secret sauce

override def activeRequests():Int =

timers.size

def delay(dur:Duration):Future[Unit] = ???

val timerCB:TimerCB = ???

}

@extern

object TimerImpl {

type TimerHandle = Ptr[Long] // why Long and not Byte?

type TimerCB = CFuncPtr1[TimerHandle,Unit]

def uv_timer_init(loop:Loop, handle:TimerHandle):Int = extern

def uv_timer_start(handle:TimerHandle, cb:TimerCB,

timeout:Long, repeat:Long):Int = extern

}

How is it safe to treat TimerHandle as Ptr[Long]?

Working with Opaque Structs

Sometimes it's necessary to work with a data structure without knowing its internal layout.

Ptr[CStruct3[Ptr[Byte],Float,CString]]

Ptr[CStruct1[Ptr[Byte]]

Ptr[CStruct1[Long]]

Ptr[Long] (no safety guarantees)

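- Allocation and initialization are hard; we'll need help from libuv (e.g. uv_handle_size() tells us how many bytes to malloc)

- "type puns" let us reinterpret data as an unrelated type, as each step of the progression above does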

Timer

def delay(dur:Duration):Future[Unit] = {

val millis = dur.toMillis

val promise = Promise[Unit]()

serial += 1

val timer_id = serial

timers(timer_id) = promise

val timer_handle = stdlib.malloc(uv_handle_size(UV_TIMER_T))

uv_timer_init(EventLoop.loop,timer_handle)

!timer_handle = timer_id

uv_timer_start(timer_handle, timerCB, millis, 0)

promise.future

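}

We can store an 8-byte serial number in the TimerHandle, and retrieve it in our callback. This is safe because the first field of every libuv handle is a void* data pointer reserved for user data (8 bytes on 64-bit platforms), which libuv itself never uses.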

Timer

val timerCB = new TimerCB {

def apply(timer_handle:TimerHandle):Unit = {

println("callback fired!")

val timer_id = !timer_handle

val timer_promise = timers(timer_id)

timers.remove(timer_id)

println(s"completing promise ${timer_id}")

timer_promise.success(())

}

}

We can dereference the TimerHandle safely - the compiler thinks it's a Ptr[Long] so it only reads the first 8 bytes.

Then we use the serial number for a map lookup to retrieve our state.
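The same id-plus-map pattern can be exercised without libuv. In this sketch, FakeHandle stands in for the opaque timer handle (its data field playing the role of the first 8 bytes), and timers are fired by hand rather than by a clock; SerialTimers, FakeHandle and armed are hypothetical names. The callback captures no per-timer state: everything it needs comes from the id in the handle.

```scala
import scala.collection.mutable
import scala.concurrent.{Future, Promise}

object SerialTimers {
  // Stand-in for an opaque C handle; data plays the role of its first 8 bytes.
  final class FakeHandle { var data: Long = 0L }

  private var serial = 0L
  private val timers = mutable.HashMap[Long, Promise[Unit]]() // id -> pending promise
  val armed = mutable.ListBuffer[FakeHandle]()                 // handles "started" on the fake C side

  def activeRequests(): Int = timers.size

  // Time is left out of this sketch: armed handles are fired by hand.
  def delay(): Future[Unit] = {
    val promise = Promise[Unit]()
    serial += 1
    timers(serial) = promise
    val handle = new FakeHandle
    handle.data = serial // like !timer_handle = timer_id
    armed += handle      // like uv_timer_start(timer_handle, timerCB, millis, 0)
    promise.future
  }

  // The id stored in the handle is the only link back to the Promise.
  val timerCB: FakeHandle => Unit = { handle =>
    timers.remove(handle.data).foreach(_.success(()))
  }
}
```

Because the lookup goes through the id, timers can fire in any order and each one still completes its own Promise, and activeRequests() drops to zero once every timer has fired.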

Timer

object Main {

implicit val ec:ExecutionContext = EventLoop

def main(args:Array[String]):Unit = {

Timer.delay(3 seconds).flatMap { _ =>

println("beep")

Timer.delay(2 seconds)

}.flatMap { _ =>

println("boop")

Timer.delay(1 second)

}.onComplete { _ =>

println("done")

}

EventLoop.run()

}

}

It just works!

What's Next?

- Improve support in SN for user-supplied event loop

- STTP has great (blocking) curl/native support

- Plan to spin out server API

- Get feedback on streaming IO API

Building a Community

- All of this needs contributors to be sustainable

- There are a LOT of low-hanging fruit out there

- Weekend-scale side projects can have a big impact!

- Huge shout out to Scala Native's contributors

- Get involved!

Thanks!

@RichardWhaling
