Concurrency in Ruby

Concurrency and Parallelism

- Concurrency:

- A condition that exists when at least two threads are making progress. A more generalized form of parallelism that can include time-slicing as a form of virtual parallelism.

- Parallelism:

- A condition that arises when at least two threads are executing simultaneously.

"Concurrency is about dealing with lots of things at once. Parallelism is about doing lots of things at once." - Rob Pike

Sources:

https://vimeo.com/49718712

http://www.jstorimer.com/blogs/workingwithcode/8085491-nobody-understands-the-gil

http://tenderlovemaking.com/2012/06/18/removing-config-threadsafe.html

Concurrency and Parallelism

Concurrency approaches

- Threads (Java). Threads share memory

- New process per request (PHP). No deadlocks, thread-safe

- Actor Model (Erlang, Scala). Messaging between actors

- Message brokers (RabbitMQ, Kafka). RabbitMQ implements AMQP, the Advanced Message Queuing Protocol

- Hybrid solutions (e.g. Shoryuken, a thread-based Amazon SQS message processor)

Ruby challenges

GIL & GC

Global Interpreter Lock

The GIL (in CRuby and CPython) is a locking mechanism meant to protect data integrity. It forces only one thread to run at a time, even on a multicore processor.
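The effect is easiest to see with CPU-bound work. The following is a minimal sketch that times the same computation run serially and then in four threads. The workload `busy_sum` and the sizes are arbitrary choices made for illustration. On MRI the two timings should come out roughly equal, because only the thread holding the GIL executes Ruby code. On an implementation without a GIL, such as JRuby, the threaded run can use several cores. Exact numbers depend on the machine.

```ruby
require "benchmark"

# CPU-bound work with no I/O: on MRI, threads running this only take turns
# holding the GIL (time-slicing) instead of running in parallel.
def busy_sum(n)
  (1..n).reduce(0) { |acc, i| acc + (i % 7) }
end

N = 1_000_000

serial_results = nil
serial = Benchmark.realtime do
  serial_results = Array.new(4) { busy_sum(N) }
end

threaded_results = nil
threaded = Benchmark.realtime do
  # Thread#value joins the thread and returns its block's result.
  threaded_results = Array.new(4) { Thread.new { busy_sum(N) } }.map(&:value)
end

puts format("serial:   %.2fs", serial)
puts format("threaded: %.2fs", threaded)
```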

Garbage Collection

In computer science, garbage collection (GC) is a form of automatic memory management. The garbage collector, or just collector, attempts to reclaim garbage, or memory occupied by objects that are no longer in use by the program. - Wikipedia

Resources other than memory, such as network sockets, database handles, user interaction windows, and file and device descriptors, are not typically handled by garbage collection.

A well-known issue in MRI

- About 12% of CPU time per process is spent in garbage collection on MRI, compared with under 2% on JRuby

- Frequent GC pauses on MRI; requests have to wait for them

- Huge waste of CPU
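MRI ships a built-in `GC::Profiler` that can show how much of a workload's time goes to garbage collection. The following is a minimal sketch that assumes MRI. The allocation loop is a hypothetical stand-in for request work, and the percentage it prints will vary by Ruby version and machine.

```ruby
require "benchmark"

GC::Profiler.enable
GC::Profiler.clear
runs_before = GC.count

elapsed = Benchmark.realtime do
  # Hypothetical stand-in for request work: many short-lived objects.
  500_000.times { |i| { id: i, name: "job-#{i}" } }
end

gc_runs = GC.count - runs_before
gc_time = GC::Profiler.total_time # seconds spent in GC since #clear
GC::Profiler.disable

puts format("elapsed %.3fs, %d GC runs, %.3fs in GC (%.1f%% of elapsed)",
            elapsed, gc_runs, gc_time, 100.0 * gc_time / elapsed)
```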

Source: https://www.youtube.com/watch?v=etCJKDCbCj4

Why GIL?

- Some C extensions aren't thread-safe

- Deadlocks

- Data integrity
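The GIL protects the interpreter's own internals, but it is an implementation detail of MRI. The following is a minimal sketch that uses core Ruby's `Mutex` to make updates to a shared array safe explicitly. With this approach, the result no longer depends on which Ruby implementation runs the code.

```ruby
# Explicit synchronization: the Mutex makes each push atomic on any Ruby
# implementation instead of relying on MRI's GIL as a side effect.
array = []
lock = Mutex.new

5.times.map do
  Thread.new do
    1000.times do
      lock.synchronize { array << nil }
    end
  end
end.each(&:join)

puts array.size # => 5000
```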

Example

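```ruby
# pushing_nil.rb: five threads each push 1000 nils onto one shared,
# unsynchronized array, so the expected size is 5000.
array = []

5.times.map do
  Thread.new do
    1000.times do
      array << nil
    end
  end
end.each(&:join)

puts array.size
```

```
$ ruby pushing_nil.rb
5000

$ jruby pushing_nil.rb
4446

$ rbx pushing_nil.rb
3088
```

Implementation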

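```c
VALUE
rb_ary_push(VALUE ary, VALUE item)
{
    /* Read the current length... */
    long idx = RARRAY_LEN(ary);
    /* ...grow the buffer if needed... */
    ary_ensure_room_for_push(ary, 1);
    /* ...store the item at that index... */
    RARRAY_ASET(ary, idx, item);
    /* ...and only then publish the new length. Without a global lock, two
       threads can read the same idx and one item overwrites the other. */
    ARY_SET_LEN(ary, idx + 1);
    return ary;
}
```

Is it so bad?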

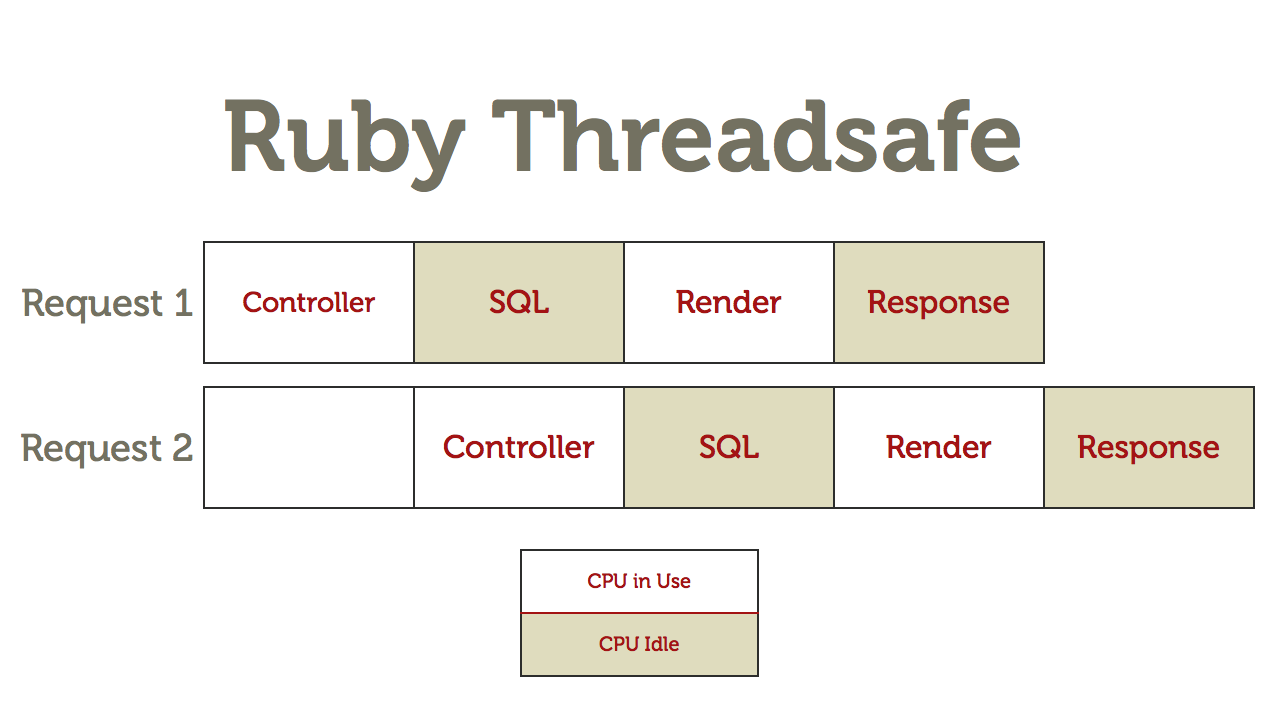

In web applications, each thread spends quite a lot of time in I/O, which won’t block the thread scheduler. So if you receive two quasi-concurrent requests, you might not even be affected by the GIL.

Yehuda Katz
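The following is a minimal sketch of this effect. It uses `sleep` as a stand-in for a blocking I/O call such as a DB query or an HTTP request. Like blocking I/O, `sleep` lets MRI run other threads in the meantime. The `fake_request` method is a hypothetical helper. Five threaded "requests" should finish in roughly the time of one (about 0.2s). The serial version should take about five times as long.

```ruby
require "benchmark"

# Hypothetical request handler: sleep stands in for a blocking DB or HTTP
# call. While a thread sleeps (or waits on I/O), MRI lets other threads run.
def fake_request
  sleep 0.2
end

serial = Benchmark.realtime { 5.times { fake_request } }

threaded = Benchmark.realtime do
  5.times.map { Thread.new { fake_request } }.each(&:join)
end

puts format("serial:   %.2fs", serial)
puts format("threaded: %.2fs", threaded)
```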

Multiple Process/Forking

- Passenger

- Unicorn

- Resque

- delayed_job

It allows forking as many processes as needed and controlling them in case of hangs or memory leaks.
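The following is a minimal pre-forking sketch that uses only core Ruby. It assumes a Unix system, because `Process.fork` is not available on Windows or JRuby. Each child is a separate OS process with its own GIL, so CPU-bound work can run in parallel even on MRI. The results travel back to the parent through pipes. The job `cpu_work` and the worker count are hypothetical.

```ruby
# Pre-forking sketch (Unix only). Each child has its own interpreter and GIL.
WORKERS = 4

# Hypothetical CPU-bound job: sum of squares 1..n.
def cpu_work(n)
  (1..n).reduce(0) { |sum, i| sum + i * i }
end

readers = Array.new(WORKERS) do |i|
  reader, writer = IO.pipe
  Process.fork do
    reader.close
    writer.write(cpu_work(200_000 + i).to_s) # send the result to the parent
    writer.close
    exit!(0) # skip at_exit handlers inherited from the parent
  end
  writer.close # the parent only reads
  reader
end

results = readers.map { |r| r.read.to_i.tap { r.close } }

# Reap every child. A supervisor such as Unicorn's master process would
# also restart workers that died or hung.
statuses = Process.waitall.map { |_pid, status| status }

puts "results: #{results.inspect}"
puts "all workers exited cleanly: #{statuses.all?(&:success?)}"
```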

Actors/Fibers

- Actor implementations such as Celluloid (used by early versions of Sidekiq)

- Fibers
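The following is a minimal actor sketch in core Ruby. It is not Celluloid's API. The actor's state is touched only by its own thread, and other threads interact with it solely by sending messages to its mailbox, which is a thread-safe `Queue`. `CounterActor` is a hypothetical example. In contrast with the unsynchronized `pushing_nil.rb` example, no updates are lost because only one thread ever mutates `@count`.

```ruby
# Minimal actor: private state, a mailbox, and one thread processing messages.
class CounterActor
  def initialize
    @mailbox = Queue.new
    @count = 0
    @thread = Thread.new { run }
  end

  # Asynchronous message: enqueue and return immediately.
  def increment
    @mailbox << [:increment]
  end

  # Synchronous request: wait for the actor to answer on a reply queue.
  def value
    reply = Queue.new
    @mailbox << [:value, reply]
    reply.pop
  end

  def stop
    @mailbox << [:stop]
    @thread.join
  end

  private

  # The actor's loop: handle one message at a time, in arrival order.
  def run
    loop do
      message, reply = @mailbox.pop
      case message
      when :increment then @count += 1
      when :value then reply << @count
      when :stop then break
      end
    end
  end
end

counter = CounterActor.new
5.times.map { Thread.new { 1000.times { counter.increment } } }.each(&:join)
total = counter.value
counter.stop
puts total # => 5000
```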

Non-blocking I/O

Reactor pattern

- Goliath

- Thin

- Twisted

- EventMachine

- Node.js (http://howtonode.org/control-flow-part-ii/file-write.js)

Reactor Pattern

If a DB query (I/O) would block other requests, wrap it in a fiber, trigger an async call, and pause the fiber so another request can be processed. Once the DB query returns, it wakes up the fiber it was triggered from, which then sends the response back to the client.
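The following toy sketch shows the fiber flow described above using only core Ruby. It does not use EventMachine's API. The "DB query" is simulated: it simply completes after a given number of event-loop ticks. `ToyReactor`, its methods, and the SQL strings are hypothetical.

```ruby
require "fiber" # Fiber.current needs this on older Rubies

# Toy reactor. Simulated queries complete after `ticks` loop iterations.
class ToyReactor
  attr_reader :log

  def initialize
    @pending = [] # entries: [ticks_left, fiber, result]
    @log = []
  end

  # Called inside a request fiber: register the query, then pause the fiber.
  # Fiber.yield returns whatever the reactor later passes to #resume.
  def async_query(sql, ticks)
    @pending << [ticks, Fiber.current, "rows for #{sql}"]
    Fiber.yield
  end

  # Each request gets its own fiber; it runs until it waits on the query.
  def handle(name, sql, ticks)
    Fiber.new do
      @log << "#{name}: query sent"
      rows = async_query(sql, ticks)
      @log << "#{name}: responded with #{rows}"
    end.resume
  end

  # Event loop: on each tick, wake the fibers whose query has "come back".
  def run
    until @pending.empty?
      @pending.each { |entry| entry[0] -= 1 }
      ready, @pending = @pending.partition { |ticks, _fiber, _result| ticks <= 0 }
      ready.each { |_ticks, fiber, result| fiber.resume(result) }
    end
  end
end

reactor = ToyReactor.new
reactor.handle("req1", "SELECT slow", 3)
reactor.handle("req2", "SELECT fast", 1)
reactor.run
puts reactor.log
```

Both requests send their queries before either one finishes. `req2` has the faster query, so it responds first even though `req1` started earlier. A single thread serves both requests.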

Pros/Cons delayed_job

Pros/Cons delayed_job

PROS:

- Easy to setup

CONS:

- Uses the database to store jobs, which limits scalability

- Uses a process-based approach

- No comprehensive monitoring solution, no alerting

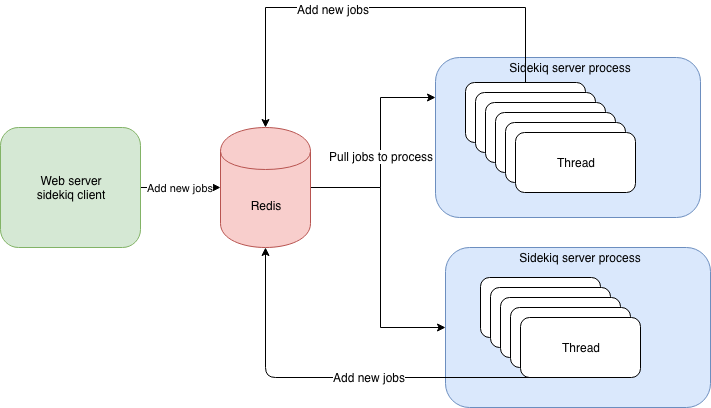

Cons/Pros sidekiq

Cons/Pros sidekiq

Cons/Pros sidekiq

CONS:

- Extra dependency on Redis

PROS:

- Scalable, widely used solution

- Actively supported

- Thread-based, which saves resources

- Out-of-the-box monitoring and web UI

- Rich in features (automatic retries of failed jobs, expiring and unique jobs)

- Scheduled jobs execution

- Advanced queueing management

- Clear Ruby API

- Middleware API
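The following pure-Ruby sketch illustrates the middleware idea. It is an imitation and is not Sidekiq's own `Middleware::Chain`. It only mirrors the shape of a Sidekiq server middleware: a `call(worker, job, queue)` method that `yield`s to run the rest of the chain and then the job. `LoggingMiddleware`, `ErrorNotifierMiddleware`, `invoke_chain`, and `ReportWorker` are all hypothetical names.

```ruby
class LoggingMiddleware
  def initialize(log)
    @log = log
  end

  def call(_worker, job, queue)
    @log << "start #{job["class"]} on #{queue}"
    yield # run the next middleware, and eventually the job
    @log << "done #{job["class"]}"
  end
end

class ErrorNotifierMiddleware
  def initialize(log)
    @log = log
  end

  def call(_worker, job, _queue)
    yield
  rescue StandardError => e
    @log << "error in #{job["class"]}: #{e.message}"
    raise # re-raise so retry handling still sees the failure
  end
end

# Runs each middleware around the next one, with the job itself innermost.
def invoke_chain(middlewares, worker, job, queue, &perform)
  step = lambda do |index|
    if index == middlewares.size
      perform.call
    else
      middlewares[index].call(worker, job, queue) { step.call(index + 1) }
    end
  end
  step.call(0)
end

class ReportWorker # hypothetical job class
  def perform(name)
    "processed #{name}"
  end
end

log = []
chain = [LoggingMiddleware.new(log), ErrorNotifierMiddleware.new(log)]
job = { "class" => "ReportWorker", "args" => ["report-1"] }
worker = ReportWorker.new

result = nil
invoke_chain(chain, worker, job, "default") { result = worker.perform(*job["args"]) }

begin
  invoke_chain(chain, worker, job, "default") { raise ArgumentError, "boom" }
rescue ArgumentError
  # The failure passed through both middlewares and reached the caller.
end

puts result
puts log
```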

Sidekiq Enterprise pros

PROS:

- Job encryption

- Retains historical metrics

- Supports long-running jobs via a rolling-restart strategy

- Rate limiting for calls to third parties

- Multi-process
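Rate limiting means that all worker threads share a single budget for calls to a third-party API. The following is a minimal fixed-window sketch of that idea. It is a generic illustration and not Sidekiq Enterprise's limiter API. `WindowLimiter` and its parameters are hypothetical. The clock is injectable, so the window can be exercised without sleeping.

```ruby
# Fixed-window limiter: at most `limit` calls per `window` seconds,
# shared safely between threads with a Mutex.
class WindowLimiter
  def initialize(limit, window, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @limit = limit
    @window = window
    @clock = clock
    @mutex = Mutex.new
    @window_start = @clock.call
    @count = 0
  end

  # Returns true if the caller may hit the third-party API now.
  def allow?
    @mutex.synchronize do
      now = @clock.call
      if now - @window_start >= @window
        @window_start = now # start a new window
        @count = 0
      end
      next false if @count >= @limit
      @count += 1
      true
    end
  end
end

fake_now = 0.0
limiter = WindowLimiter.new(3, 1.0, clock: -> { fake_now })
first_window = Array.new(5) { limiter.allow? }
fake_now = 1.0
second_window = limiter.allow?

shared = WindowLimiter.new(3, 60.0)
granted = Array.new(10) { Thread.new { shared.allow? } }.map(&:value).count(true)

p first_window  # => [true, true, true, false, false]
p second_window # => true
p granted       # => 3
```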

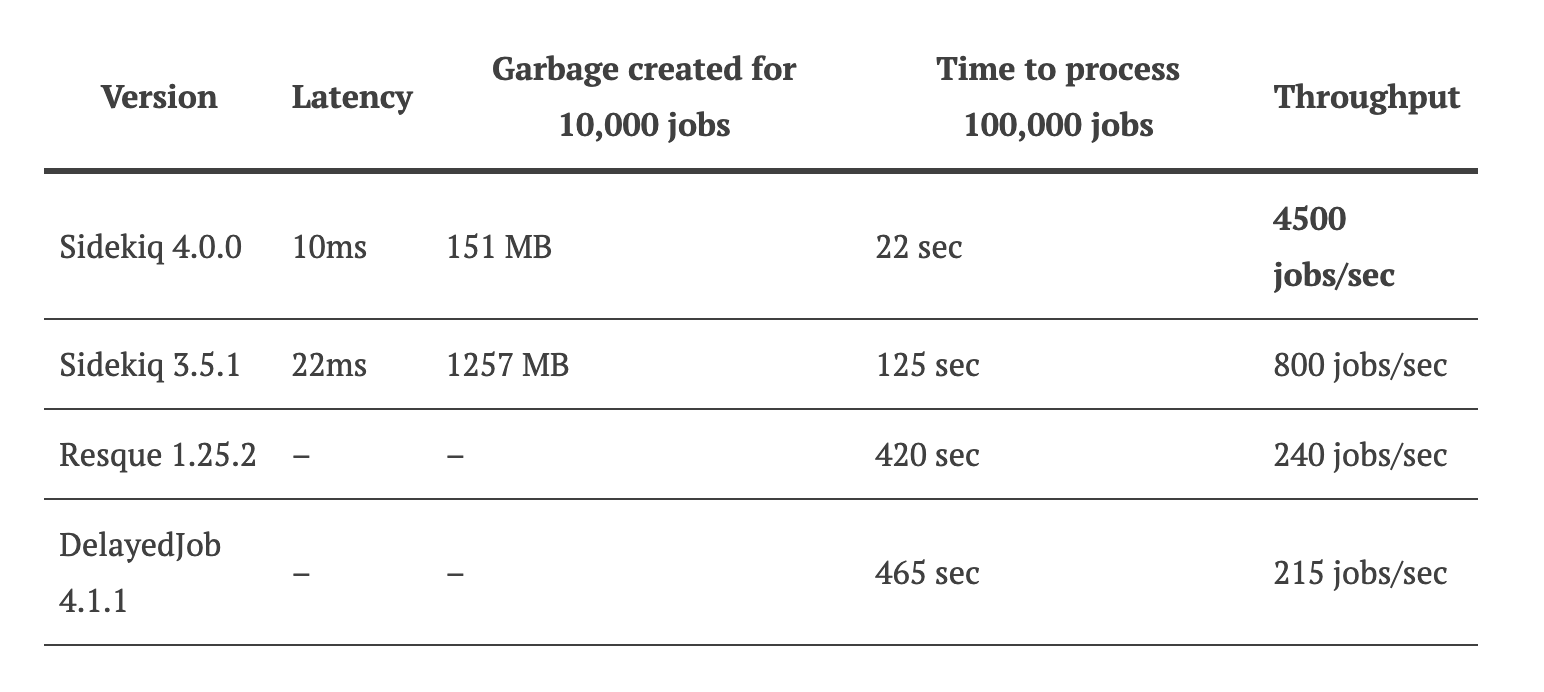

Sidekiq

🔥🔥🔥 PERFORMANCE 🔥🔥🔥

Sidekiq

Distribute load among workers

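Two processes with different queue sets. In each command, -c sets the number of threads per process. Each -q name,weight adds a queue, and a queue with a higher weight is checked more often.

```
heavy: sidekiq -C config/sidekiq.yml -c 3 -q document_generation,8 -q bulk_queue,4 -q slow_queue,2
lite: sidekiq -C config/sidekiq.yml -c 3 -q reports,8 -q fast_queue,4 -q slow_queue,2
```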

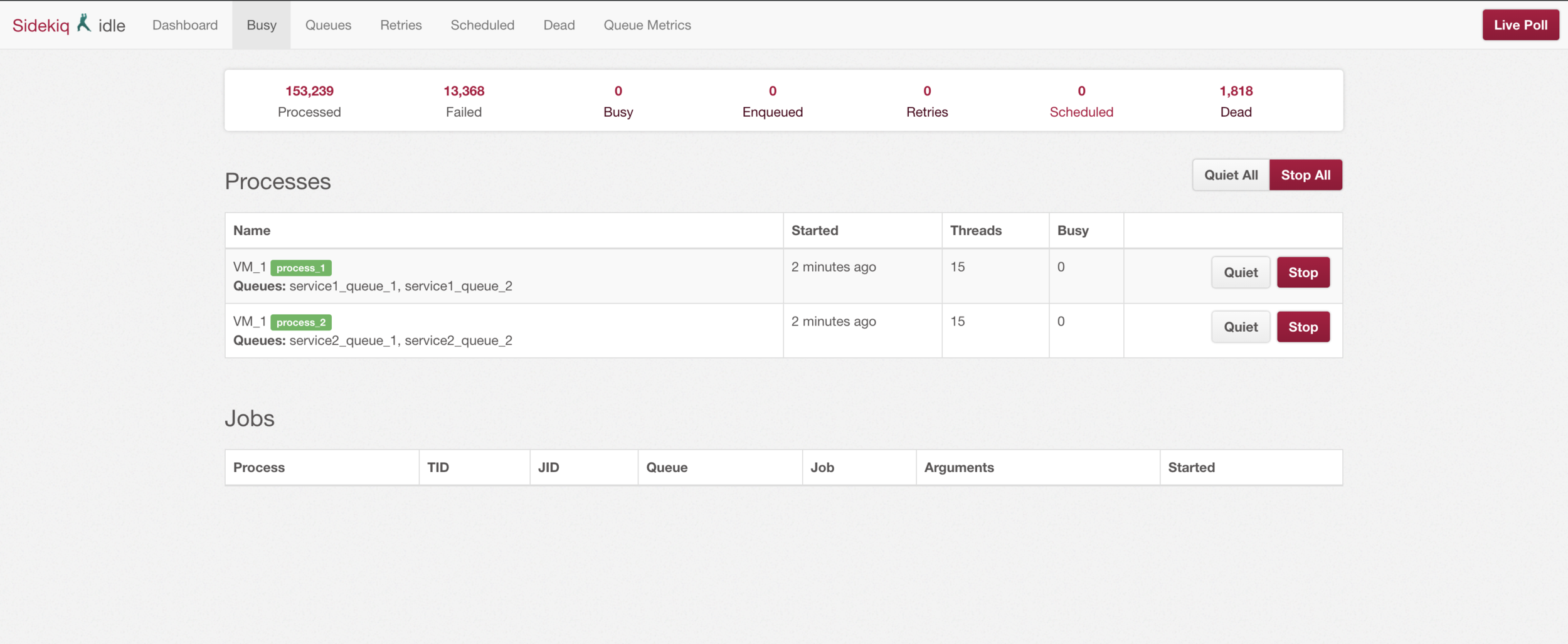
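The following is a small simulation of how queue weights play out. It is modeled on our understanding of Sidekiq's basic fetch strategy. In that strategy, each queue name is repeated `weight` times, the list is shuffled, and duplicates are dropped. The resulting order is the order in which queues are checked for the next job. The simulation uses the weights from the "heavy" process. `queue_order` is a hypothetical helper. Each queue should be checked first in close to 8/14, 4/14, and 2/14 of fetches, respectively.

```ruby
WEIGHTS = { "document_generation" => 8, "bulk_queue" => 4, "slow_queue" => 2 }.freeze

# Weighted random order: repeat, shuffle, keep first occurrence of each queue.
def queue_order(weights, rng)
  weights.flat_map { |name, weight| [name] * weight }.shuffle(random: rng).uniq
end

rng = Random.new(42) # fixed seed so runs are repeatable
trials = 100_000
first_counts = Hash.new(0)
trials.times { first_counts[queue_order(WEIGHTS, rng).first] += 1 }

WEIGHTS.each_key do |name|
  share = 100.0 * first_counts[name] / trials
  puts format("%-20s checked first in %.1f%% of fetches", name, share)
end
```

Because the order is random rather than strict, the `slow_queue` queue still gets served even while `document_generation` is busy.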

Sidekiq

Distribute load among workers

Sidekiq

Sufficient out-of-the-box monitoring UI

Sidekiq

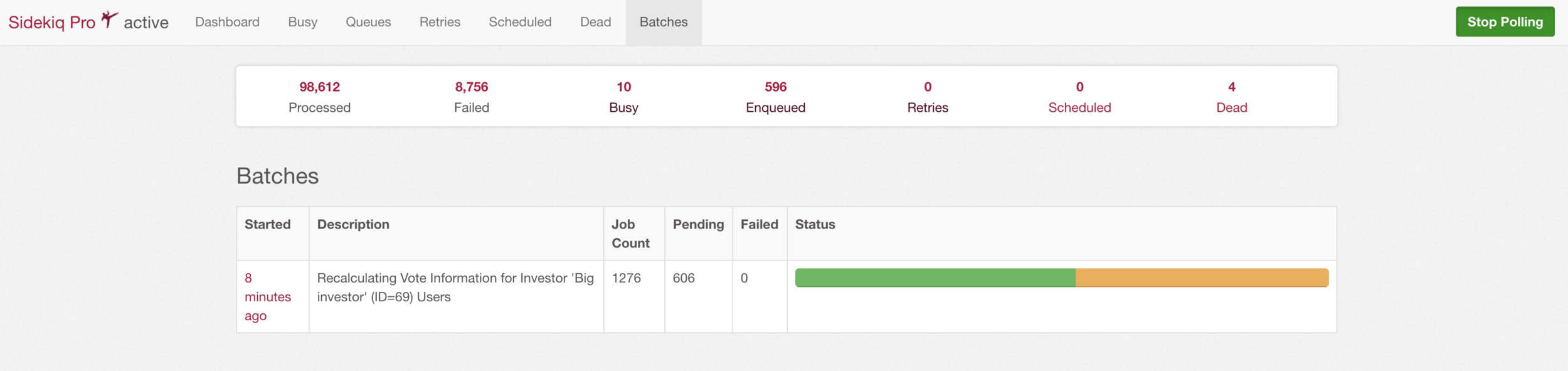

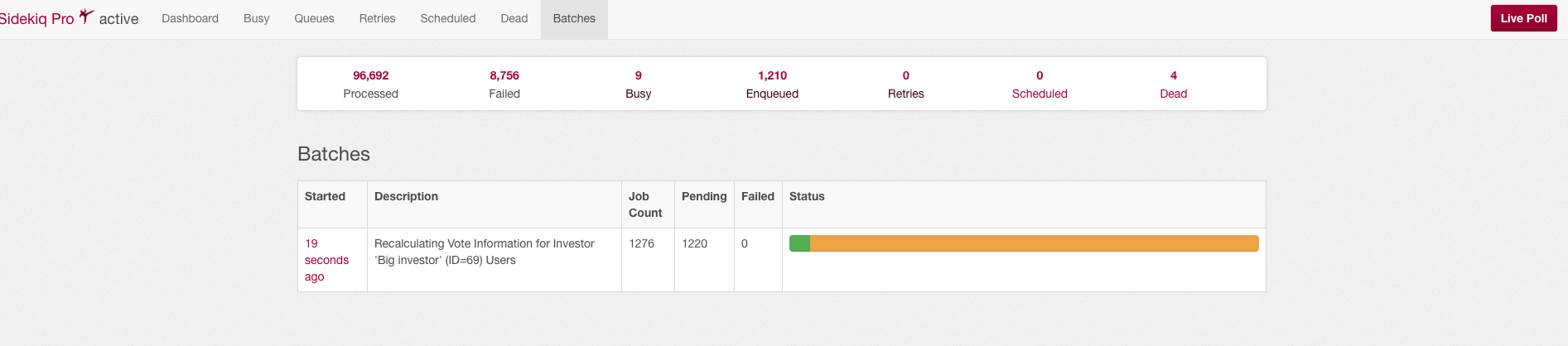

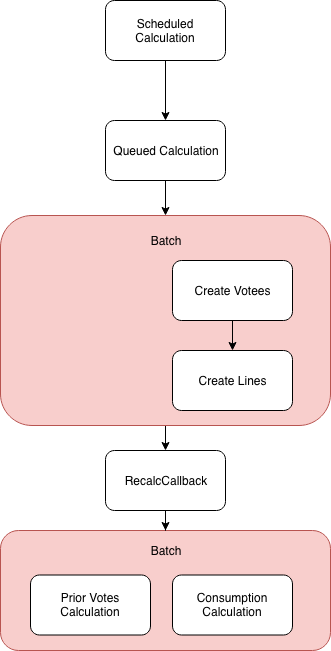

Processing in batches

Sidekiq

Processing in batches

Sidekiq

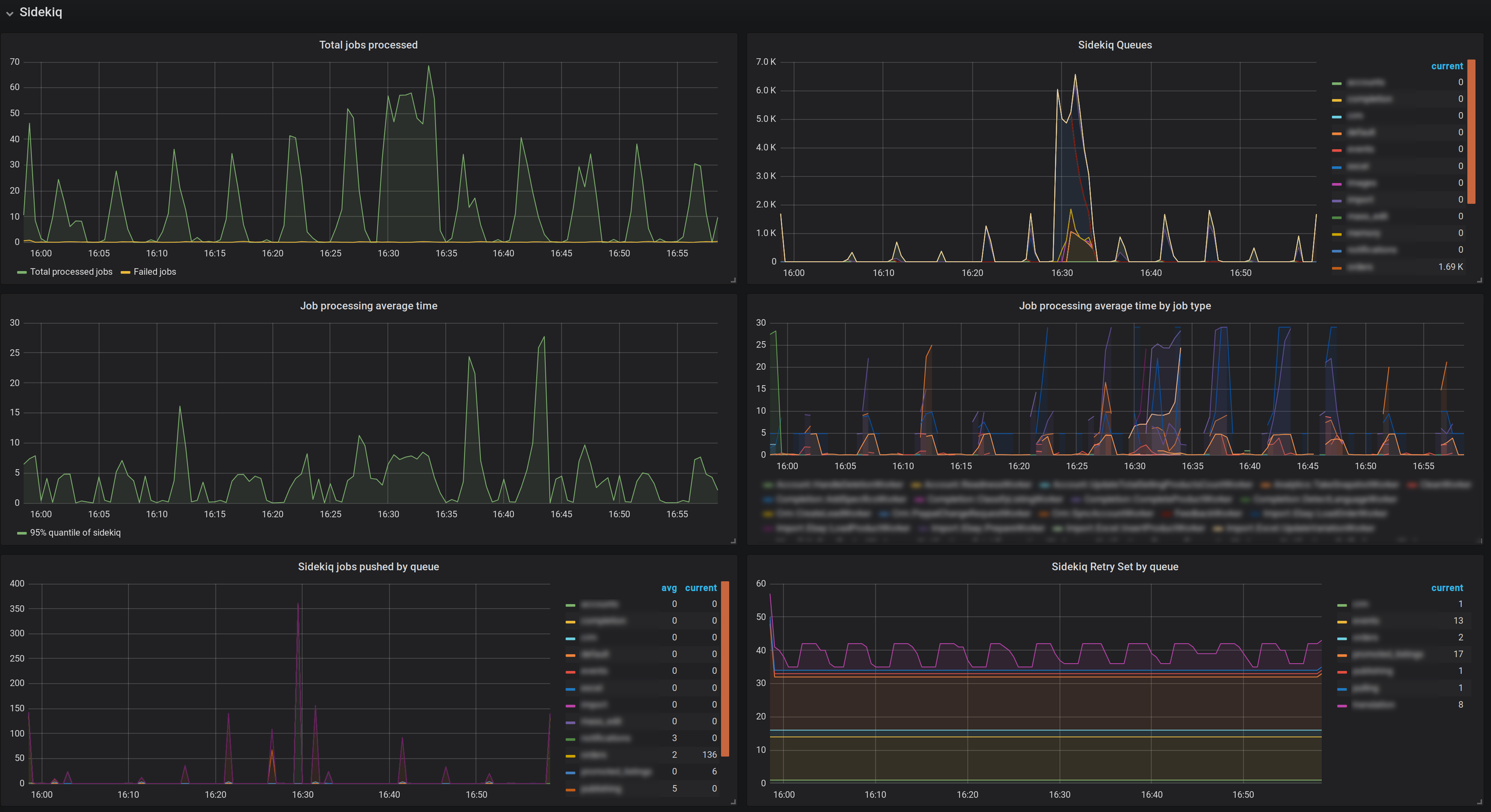
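The following is a minimal sketch of batch processing in core Ruby. It is not Sidekiq Pro's Batch API. A large workload is split into fixed-size batches, and a small pool of threads consumes those batches from a shared queue. A completion callback fires once every batch has finished. `process_in_batches` and its keyword arguments are hypothetical. Summing numbers stands in for the real per-batch work.

```ruby
# Split items into batches, process them with a thread pool, then call
# on_complete with all per-batch results (in completion order).
def process_in_batches(items, batch_size:, threads:, on_complete:, &work)
  batches = Queue.new
  items.each_slice(batch_size) { |batch| batches << batch }
  batches.close # pop returns nil once the closed queue is drained

  results = Queue.new
  workers = Array.new(threads) do
    Thread.new do
      while (batch = batches.pop)
        results << work.call(batch)
      end
    end
  end
  workers.each(&:join)

  collected = []
  collected << results.pop until results.empty?
  on_complete.call(collected)
end

summary = nil
process_in_batches((1..1_000).to_a, batch_size: 100, threads: 4,
                   on_complete: ->(sums) { summary = sums.sum }) do |batch|
  batch.sum # stand-in for the real per-batch work
end
puts summary # => 500500
```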

Prometheus/Grafana monitoring & alerting
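Prometheus scrapes metrics in a plain-text exposition format, which Grafana can then chart and alert on. The following is a minimal sketch that renders gauges in that format. The metric name `sidekiq_queue_size` and the queue sizes are hypothetical. A real app would normally use a client library, such as the prometheus-client gem, rather than formatting the text by hand.

```ruby
# Label values must escape backslash, double quote and newline.
def escape_label(value)
  value.to_s.gsub(/[\\"\n]/) { |c| c == "\n" ? "\\n" : "\\#{c}" }
end

# HELP and TYPE lines, then one "name{labels} value" line per sample.
def render_gauge(name, help, samples)
  lines = ["# HELP #{name} #{help}", "# TYPE #{name} gauge"]
  samples.each do |labels, value|
    label_str = labels.map { |k, v| %(#{k}="#{escape_label(v)}") }.join(",")
    lines << "#{name}{#{label_str}} #{value}"
  end
  lines.join("\n") + "\n"
end

# Hypothetical queue sizes, for illustration only.
queue_sizes = { "document_generation" => 12, "reports" => 0 }
samples = queue_sizes.map { |queue, size| [{ queue: queue }, size] }
output = render_gauge("sidekiq_queue_size", "Jobs waiting in each Sidekiq queue", samples)
puts output
```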

Credits:

1. https://www.youtube.com/watch?v=eHPp-tpCAZ0

2. http://en.wikipedia.org/wiki/Global_Interpreter_Lock

3. http://tonyarcieri.com/2012-the-year-rubyists-learned-to-stop-worrying-and-love-the-threads

4. https://practicingruby.com/articles/gentle-intro-to-actor-based-concurrency

5. https://github.com/celluloid/celluloid/wiki/Gotchas

6. http://merbist.com/2011/02/22/concurrency-in-ruby-explained/

7. https://en.wikipedia.org/wiki/Actor_model

8. http://www.jstorimer.com/products/working-with-ruby-threads

Concurrency in Ruby

By Roman Bambycha

Concurrency in Ruby

- 444